International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 09 Issue: 08 | Aug 2022 www.irjet.net p-ISSN: 2395-0072

***

Abstract - Human activity recognition is currently a hot research topic for a variety of reasons. Its basic purpose is to recognise a person's activities using several kinds of data sources, including orientation detectors, motion sensors, position sensors, and time information. Pervasive computing, artificial intelligence, human-computer interfaces, health care, health outcomes, rehabilitation engineering, occupational science, and the social sciences are some of the domains where human activity recognition is used. Human behaviour contains a lot of context information and helps systems achieve context awareness. In the rehabilitation field, it aids the functional diagnosis of patients and the proper assessment of health outcomes. Recognition of human activity is a key performance metric for participation, quality of life, and lifestyle.

Keywords - Internet of things, MPU6050 Sensor, ATMega328, ADXL334 Accelerometer

The most critical and necessary feedback required to build smart Internet of Things (IoT) applications is the result of recognising human activities and their bodily interaction with the surrounding environment. In the Human Activity Recognition (HAR) field, it is critical to combine the sensing and inference components in order to obtain accurate input about human actions and experiences. Many researchers are now drawn to this topic. This interest arises from a desire to acquire context-aware data, which is then utilised to give tailored support to users across a range of applications, including security, medical, military, and lifestyle applications. Properly distinguishing everyday behaviours like walking, standing, and running benefits both the user and the caregiver. Everyday monitored assessments of a person's actions, for example, may be incredibly valuable in stopping him/her from engaging in specific behaviours that may cause incidents or be hazardous to his/her health given his/her illness or disease history. Furthermore, such everyday insights may help a user's health by offering comments, ideas, and warnings based on an analysis of the efficiency of their everyday actions, thus assisting the user in enhancing his/her living conditions.

Yang [65] experimented with apps that employed movement detection from a device's sensor to track physical activity. Yang samples the gyroscope at 36,000 Hz with a Nokia N95 for movements such as resting, moving, running, jogging, riding, and bicycling. The data were then stored in a database and annotated before being analysed. Yang examined the estimation accuracy of C5.0 trees, Naive Bayes, k-Nearest Neighbor, and Support Vector Machine (SVM) using the WEKA training toolkit. According to the study, vertical and horizontal features had a bigger impact on recognition rates than magnitude features alone. This feature set and the proposed method obtained 90.6 percent accuracy using 10-fold cross-validation. Bieber et al. [66] used Sony Registered agent on file devices, including w715 to recognise each other

The aim of the project is to make a device that can be used independently for human activity recognition, using IoT and sensors that provide acceleration and gyroscopic position. The final result will be predicted using a MATLAB-based program.

We will design a freestanding gadget based on the two aspects below.

• The smartphone-based HAR system relies heavily on the sensors, characteristics, and classification algorithm chosen to meet the device's constraints and adapt to changing conditions.

• The criteria for choosing the methods to use are determined by the application's specific requirements, which can include response time, recognition accuracy, and energy usage.

Experts have been studying human activity recognition for years and have proposed numerous solutions. Vision sensors, inertial sensors, or a mix of the two are commonly used in existing approaches. Machine learning and threshold-based algorithms are frequently used. Machine learning algorithms are more accurate and dependable, whereas threshold-based methods are faster and easier to use. Body posture has been captured and identified using one or more cameras [8]. The most popular solutions [10] use multiple accelerometers and gyroscopes attached to various body locations. There have also been approaches that integrate vision and inertial sensors [14]. Data processing is an important component of all of these methods, and the quality of the input features has a significant impact on performance. A previous study [15] centred on obtaining the most valuable features from a time series data source. Both the temporal and frequency domains of the signal are frequently examined. The active learning method has been used to handle a number of machine learning problems where labelling samples takes a long time and a lot of effort. Speech recognition, information extraction, and handwritten character recognition are some of its uses [18]. However, this method has never been used to solve the problem of human activity recognition.

Human activity recognition has been the subject of various scientific projects, and the area has been studied for at least three decades. Several terms linked to human activities are used in the literature. In this section, we go over some of the key terms used in activity recognition research. We also discuss how human behaviours are classified and how they can be recognised using state-of-the-art approaches, and we briefly go over the taxonomy of HAR research methodologies.

• Action: An action is a gesture or movement made by a person.

• Activity: The Oxford dictionary defines activity as "whatever a person or group does or has done".

• Physical Activity: The World Health Organization (WHO) recommends that people engage in physical activity

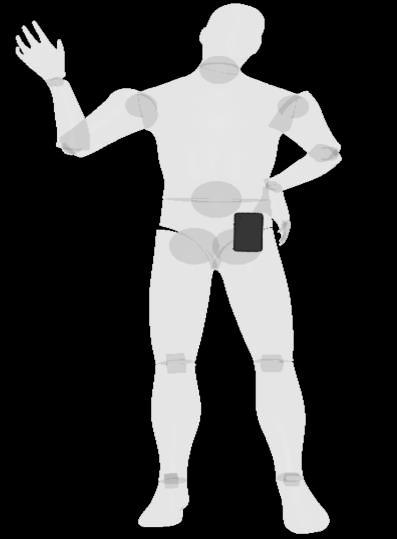

Walking, standing, sitting, bending, and sleeping are all examples of common actions that could be investigated. Sensors attached to various regions of the body were used to collect data (the chest, left hip, left wrist, left thigh, left foot and lower back). Data from a sensor worn around the waist can be used to track a variety of activities, including sitting, standing, walking, sleeping in various positions, and jogging. The hand, shoulder, waist, lower back, thigh, and trunk have all been used as accelerometer placement locations to distinguish between sleeping, sitting, walking, standing, and bending.

One of the most crucial steps in data collection and analysis is data pre-processing. It covers analysis of the data, restoration of missing and outlier values, and feature extraction. Windowing techniques, which divide sensor signals into small time segments, are frequently used to extract features from raw data. Segmentation and classification techniques are then applied to each window. Windowing approaches include sliding windows; windows in which pre-processing is required to identify specific events, which are then used to determine further segmentation of the data; and activity windows, in which data segmentation is based on a change of activity.
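As an illustration of the sliding-window idea, the following is a minimal Python sketch rather than the code used in this project. The function names, the window size and step, and the choice of a per-axis mean as the feature are all assumptions made for the example.

```python
from typing import List, Sequence, Tuple

Sample = Tuple[float, float, float]  # one (x, y, z) accelerometer reading


def sliding_windows(samples: Sequence[Sample], size: int, step: int) -> List[List[Sample]]:
    """Split a stream of samples into fixed-size, possibly overlapping windows.

    Trailing samples that do not fill a complete window are dropped.
    """
    if size <= 0 or step <= 0:
        raise ValueError("size and step must be positive")
    return [list(samples[i:i + size]) for i in range(0, len(samples) - size + 1, step)]


def window_means(window: Sequence[Sample]) -> Tuple[float, float, float]:
    """Per-axis mean of one window, used here as a simple example feature."""
    n = len(window)
    return tuple(sum(s[axis] for s in window) / n for axis in range(3))


# Hypothetical readings: x ramps up while y and z stay constant.
samples = [(float(i), 0.0, 1.0) for i in range(10)]
windows = sliding_windows(samples, size=4, step=2)  # 50% overlap
features = [window_means(w) for w in windows]
```

A step smaller than the window size gives overlapping windows, so an activity change that falls near a window boundary still appears whole in a neighbouring window.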

There are 14 digital input/output pins and 6 analogue input pins on this board. It has a 5 volt operating voltage and a 7 to 12 volt input voltage range. You'll need the Arduino IDE to programme it. In order to programme the Arduino Uno using the USB-to-serial converter, it must be connected to the computer via USB. It features a serial port that allows it to communicate with a computer [16].

The ADXL334 Accelerometer is simple to use with this breakout board. It is a low-power, three-axis capacitive MEMS accelerometer with 12-bit resolution. With two interrupt pins to pick from, this accelerometer is packed with embedded functions and user-programmable options. The embedded interrupt functions save energy by eliminating the need for the host CPU to poll data on a regular basis.

Figure 2: Human body with joints and position of mobile phone

Compared to other devices that require an external chip programmer, uploading programs to the on-chip non-volatile storage is much easier. This simplifies the use of an Arduino by allowing a regular computer to be used as the programmer. Optiboot is currently the default boot loader on the Arduino UNO. Machine learning improves the results, that is, the prediction of activities.

Microcontrollers are small, low-cost computers built to do specialised jobs in embedded systems, such as displaying microwave information, receiving remote signals, and so on. A generic microcontroller is made up of the CPU, memory (RAM, ROM, EPROM), serial ports, peripherals (timers, counters), and other components.

This project made use of the ESP8266 Wi-Fi module. It is a low-cost microchip that includes a TCP/IP stack. Thanks to this microchip, a microcontroller can connect to a wireless network via Hayes-style commands or TCP/IP connections. The ESP8266 is a Wi-Fi-capable single-chip device with 1 MB of built-in flash. Espressif Systems created this module, which is a 32-bit microprocessor based on a RISC processor. There are 16 GPIO pins on this module. It has an ADC with a resolution of 10 bits. Later, Espressif Systems published a software development kit (SDK) that allows users to program directly on the device, eliminating the need for a separate microcontroller. Node MCU, Arduino, and Microchip are just a few of the SDKs available.

The ADXL334 Accelerometer includes user-selectable full scales of 2g, 4g, and 8g, and both high-pass-filtered and unfiltered data are easily accessible. The ADXL334 Accelerometer can be configured to generate wake-up interrupt signals from a variety of customisable integrated algorithms, allowing it to detect events while remaining power-efficient when not in use.

The Internet of Things (IoT) is a technology that allows objects to communicate with one another over the internet. Connected devices communicate with one another or with people, and finally upload the acquired data to the cloud, where it can be analysed to reveal useful information. As a result, regular monitoring, automation, predictive maintenance, and commercial tracking are just a few of the uses for smart linked devices. The smart devices are linked to the cloud, or to information aggregators.

ThingSpeak is a MathWorks-hosted web service that makes it simple to gather, analyse, and act on sensor data, as well as build Internet of Things applications. The ThingSpeak Support Toolbox uses MATLAB to read data from ThingSpeak and write data to the ThingSpeak platform. It also has functionality for visualising and accessing data saved on ThingSpeak.com, and can return the data, timestamps, and channel information for a selected public channel. The sensor values will be obtained from the ThingSpeak channel data using thingSpeakRead:

[data, timestamps, chInfo] = thingSpeakRead(chId, Name, Value)
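The project reads the channel from MATLAB. Purely as an illustration, the same channel data can also be fetched over ThingSpeak's REST interface, and the Python sketch below builds the feed URL and parses a `feeds.json` response. The helper names are hypothetical, and the mapping of `field1`, `field2` and `field3` to the x, y and z axes is an assumption about how the channel is configured.

```python
import json
from typing import Dict, List, Optional
from urllib.parse import urlencode


def feed_url(channel_id: int, results: int = 50, api_key: Optional[str] = None) -> str:
    """Build the REST URL for the most recent `results` entries of a channel."""
    params = {"results": results}
    if api_key:  # a read key is only needed for private channels
        params["api_key"] = api_key
    return f"https://api.thingspeak.com/channels/{channel_id}/feeds.json?{urlencode(params)}"


def parse_feed(payload: str) -> List[Dict[str, object]]:
    """Turn a feeds.json response into a list of timestamped x, y, z readings."""
    readings = []
    for entry in json.loads(payload).get("feeds", []):
        try:
            # Assumption: field1..field3 carry the x, y and z axis values.
            x, y, z = (float(entry[f"field{i}"]) for i in (1, 2, 3))
        except (KeyError, TypeError, ValueError):
            continue  # skip entries with missing or non-numeric fields
        readings.append({"time": entry.get("created_at"), "x": x, "y": y, "z": z})
    return readings


# Made-up payload in the shape returned by the feeds endpoint.
sample_payload = json.dumps({
    "channel": {"id": 12345, "name": "demo"},
    "feeds": [
        {"created_at": "2022-08-01T10:00:00Z", "entry_id": 1,
         "field1": "0.12", "field2": "-0.98", "field3": "0.05"},
        {"created_at": "2022-08-01T10:00:15Z", "entry_id": 2,
         "field1": None, "field2": "0.10", "field3": "0.99"},
    ],
})
readings = parse_feed(sample_payload)
```

The sample payload is parsed offline, so the sketch can be tried without a channel or network access; the second entry is skipped because one of its fields is empty.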

1. The user performs an activity (walking, standing, sitting, bending, sleeping).

2. Using a hardware application to collect data.

Description: We will create a hardware application that will be able to record data for various human activities and then use that data for recognition and analysis.

We will select a random sample of collected data by browsing the Excel sheets, and the selected data will then be identified as walking, sitting, or jogging activity.

A Gaussian filter is used for filtering. Filtering reduces the amount of noise in the data.
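A minimal Python sketch of Gaussian smoothing on a single accelerometer axis is given below, together with a root-mean-square (RMS) helper as one example of a summary value computed from the filtered signal. The kernel width (`sigma`), the kernel radius, and the edge handling are assumptions chosen for illustration, not the settings used in the project's MATLAB program.

```python
import math
from typing import List, Sequence


def gaussian_kernel(sigma: float, radius: int) -> List[float]:
    """Discrete Gaussian weights on [-radius, radius], normalised to sum to 1."""
    weights = [math.exp(-(k * k) / (2.0 * sigma * sigma)) for k in range(-radius, radius + 1)]
    total = sum(weights)
    return [w / total for w in weights]


def gaussian_smooth(signal: Sequence[float], sigma: float = 1.0, radius: int = 3) -> List[float]:
    """Smooth a 1-D signal by convolving it with a Gaussian kernel.

    Edges are handled by repeating the first and last samples.
    """
    kernel = gaussian_kernel(sigma, radius)
    last = len(signal) - 1
    smoothed = []
    for i in range(len(signal)):
        acc = 0.0
        for offset, weight in zip(range(-radius, radius + 1), kernel):
            j = min(max(i + offset, 0), last)  # clamp the index at the edges
            acc += weight * signal[j]
        smoothed.append(acc)
    return smoothed


def rms(signal: Sequence[float]) -> float:
    """Root mean square: square root of the mean of the squared values."""
    if not signal:
        raise ValueError("signal must not be empty")
    return math.sqrt(sum(v * v for v in signal) / len(signal))


# A single noise spike is spread out and reduced in height by the filter.
spike = [0.0, 0.0, 0.0, 10.0, 0.0, 0.0, 0.0]
smoothed_spike = gaussian_smooth(spike)
```

A larger `sigma` removes more noise but also blurs short movements, so the value would need to be matched to the sampling rate of the sensor.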

Visualization and modification of signals

RMS stands for Root Mean Square and is primarily defined as the square root of the mean of the squared values.

Detected and Analyzed Activities

Here all five activities are detected according to three classifiers.

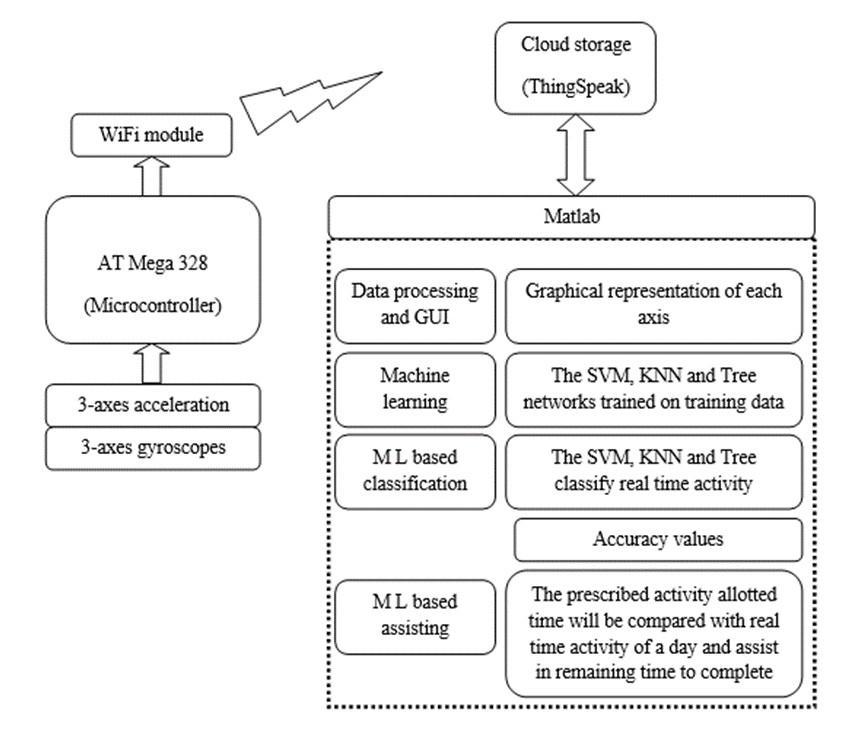

In the following discussion, we will concentrate on supervised learning because it is by far the most popular type of machine learning in materials science. Figure 1 depicts the workflow utilised in supervised learning. First, a subset of the relevant population with known values of the desired property is chosen, or data is generated if necessary. The majority of the work consists of creating, locating, and cleaning data to ensure consistency, accuracy, and other factors. Second, you must decide how to consistently represent the system's properties, i.e. the model's input. This involves transforming raw data into specified features that will be used as algorithm inputs. The next step is to choose a machine learning algorithm that will fit the desired target quantity. Following this, the model is trained by maximising its performance, which is often measured using a cost function. Adjusting hyperparameters that alter the model's training process, structure, and properties is frequently required. The data is divided into several groups: a validation dataset, independent from the test and training sets, should be used to optimise the hyperparameters.
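The role of the separate validation set can be sketched in a few lines of Python. The example below is illustrative only: it uses a plain k-nearest-neighbour classifier with k as the hyperparameter, and the 60/20/20 split, the candidate values of k, and the synthetic "standing" and "sleeping" readings are all assumptions rather than project data.

```python
import math
import random
from collections import Counter
from typing import List, Sequence, Tuple

Example = Tuple[Tuple[float, float, float], str]  # ((x, y, z), activity label)


def split(data: Sequence[Example], seed: int = 0) -> Tuple[List[Example], List[Example], List[Example]]:
    """Shuffle and split into roughly 60% training, 20% validation, 20% test."""
    items = list(data)
    random.Random(seed).shuffle(items)
    a, b = int(0.6 * len(items)), int(0.8 * len(items))
    return items[:a], items[a:b], items[b:]


def knn_predict(train: Sequence[Example], point: Sequence[float], k: int) -> str:
    """Majority label among the k training examples nearest to `point`."""
    nearest = sorted(train, key=lambda ex: math.dist(ex[0], point))[:k]
    return Counter(label for _, label in nearest).most_common(1)[0][0]


def accuracy(train: Sequence[Example], examples: Sequence[Example], k: int) -> float:
    """Fraction of `examples` whose label is predicted correctly."""
    hits = sum(knn_predict(train, x, k) == y for x, y in examples)
    return hits / len(examples)


def tune_k(train: Sequence[Example], validation: Sequence[Example], candidates=(1, 3, 5)) -> int:
    """Pick the hyperparameter k that scores best on the validation set."""
    return max(candidates, key=lambda k: accuracy(train, validation, k))


# Synthetic data: gravity mostly on z when standing, mostly on x when lying down.
rng = random.Random(1)
data = [((rng.gauss(0, 0.1), rng.gauss(0, 0.1), rng.gauss(1, 0.1)), "standing") for _ in range(30)]
data += [((rng.gauss(1, 0.1), rng.gauss(0, 0.1), rng.gauss(0, 0.1)), "sleeping") for _ in range(30)]

train, validation, test = split(data)
best_k = tune_k(train, validation)
test_accuracy = accuracy(train, test, best_k)
```

The test set is touched only once, after k has been fixed on the validation set, so the reported accuracy is not biased by the tuning step.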

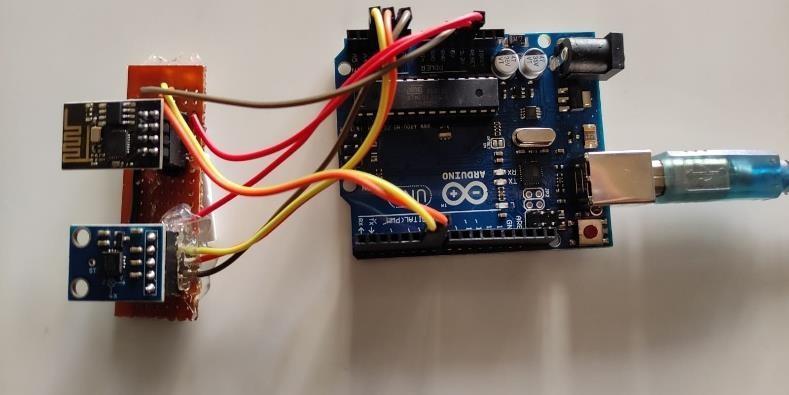

Above is the wiring diagram of our hardware device for connecting the ESP8266 to ThingSpeak. The Arduino UNO board is only used to pass data between the computer and the ESP8266; it is not used to control the ESP8266 and acts as a USB-to-UART converter.

The front-end sensing component of the ADXL345 senses acceleration, and the electric signal sensing component converts it to an analogue electric signal. The analogue signal is then converted to digital by the AD adapter built inside the module. This device will provide an input to

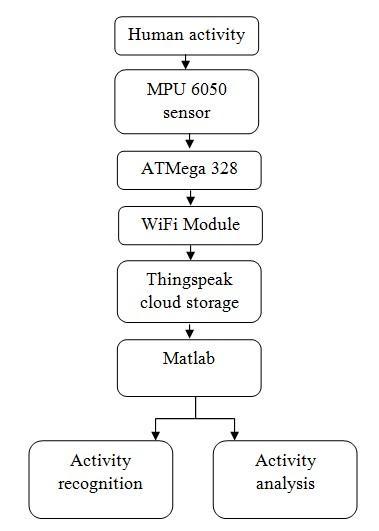

Figure6:basicFlowchartforhumanactivityrecognition

Figure6:basicFlowchartforhumanactivityrecognition

International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 09 Issue: 08 | Aug 2022 www.irjet.net p-ISSN: 2395-0072

MATLAB via thingspeak based upon the position of this device in X, Y and Z axis. When you rotate the ADXL345 module,threevalueswillchange.

For Wi-Fi access to the system, we issued commands in the Arduino IDE software; we also issued commands in MATLAB to access the continuous data in the ThingSpeak channel that we created.

Using machine learning, we trained the system to recognise a specific activity by providing an Excel sheet of data with x, y, z value sets that differ between activities. We also created three Graphical User Interfaces (GUIs). In the first GUI, the movement of the device can be seen graphically along the X, Y and Z axes separately. In the second GUI, a comparative classification of activities can be seen, given by three algorithms, namely SVM, KNN and decision tree. The efficiency of each algorithm can also be checked in MATLAB. The last GUI is the front-end display that will be accessed by the user.

The hardware implementation of human activity recognition reduces excess data processing and continuous monitoring on a mobile phone, which in turn increases the efficiency of the system. Comparing the SVM, KNN and decision tree algorithms, we achieved the highest accuracy, 99.00 percent, with SVM, which suggests that SVM is the better choice for human activity prediction. The results also show that present-day activity can be monitored easily, and that this can be used to predict or assist in activities prescribed by a physiotherapist or doctor, which our project GUI makes possible. A person's activity can also be compared using the graphical display and through the listing we provide of the last 50 samples, or today's values of human activity can be selected. A single hardware unit is enough to continuously monitor human activity and assist in its detection. The IoT feature of this project makes activity monitoring accessible from anywhere and continuously: hospitals can take this data into their central monitoring stations, doctors can monitor using a mobile phone, and caretakers can also check the activity performed by a person.

We extend our sincere thanks to Prof. Dr. Milind Nemad. We would also like to thank our project guide, Assistant Prof. Pankaj Deshmukh.

[1] G. Haché, "We are working on a wearable mobility monitoring system," University of Ottawa, 2010.

[2] H. Echenberg, The Effects of Community Planning on Canada's Aging Population and Public Policy. Parliamentary Library, 2012.

[3] "… accelerometry: a validation study in ambulatory monitoring," Comput. Hum. Behav., vol. 15, no. 5, pp. 571–583.

[4] Van Kasteren, T. L. M., Englebienne, and Kröse, B. J. A., "An activity monitoring system for senior care using different models," Pers. Ubiquitous Comput., vol. 14, no. 6, pp. 489–498, September 2010.

[5] J. Hernández, R. Cabido, A. S. Montemayor, and J. J. Pantrigo, "Human activity recognition based on kinematic features," Expert Syst., vol. 1, no. 1, pp. n/a–n/a, 2013.

[6] S. L. Lau and K. David, "Movement recognition in cellphones using the accelerometer," Future Network and Mobile Summit, 2010, pp. 1–9.

Figure 6: Second Graphical User Interface

Figure 7: Visual Display for user

IX. RESULT