International Research Journal of Engineering and Technology (IRJET) e-ISSN:2395-0056

Volume: 09 Issue: 08 | Aug 2022 www.irjet.net p-ISSN:2395-0072


Abstract – As technology progresses, applications that combine pattern recognition and image processing are increasingly used to determine age and gender. The variety of applications, such as user identification, targeted advertising, video monitoring, and human-robot interaction, has led to an expansion in face recognition research. Age is a major factor in today's world, for example when one goes for a job interview or a health checkup. Information about an individual's age is used by many organisations in the public, private, and advertising sectors to track down criminals, employ qualified personnel, and choose target markets for advertising campaigns. Every year, there are more than 1.5 million car accidents worldwide. The motivation of this research is to create an application for age and gender categorization that is adequate for real-life predictions. The objective of the project is to construct a convolutional neural network (CNN) that assesses the age and gender of a car driver. It also involves developing an algorithm for detecting and extracting the facial region from the input image. A large amount of deliberation and testing was required, because the greatest difficulty was implementing the system on a Raspberry Pi setup without compromising the accuracy of age and gender detection. The major restriction was the limited memory available on the Raspberry Pi 3B+. Hence, to reduce the workload, the project pretrained the CNN model with images and preprocessed the input data, which made feature extraction using HOG easier for the Raspberry Pi. After evaluating ResNet, YOLO v3, and Caffe implementations, as well as simulations of various techniques, the Caffe model was implemented using OpenCV and Python.
A large-scale face dataset with a wide age range was assembled from different sources to make up a diverse dataset for pretraining our CNN for facial recognition. Around 22,000 images were taken from UTK and nearly 38,000 from IMDB, giving a large aggregated dataset. After implementation, the CNN model designed for the project was able to perform facial and gender recognition with around 98.1 percent accuracy.

Keywords – CNN, Age Estimation, Neural Network, Caffe, OpenCV, TensorFlow.

Face recognition research has expanded due to the wide range of applications, including user identification, targeted ads, video surveillance, and human-robot interaction. As technology advances, applications that integrate the sophisticated disciplines of pattern recognition and image processing are utilised to determine age and gender. When you attend an interview or a health check-up in today's environment, age plays a significant role. Many government, corporate, and advertising sector businesses utilise age information to identify criminals, hire qualified staff, and target audiences for product promotion. However, determining a person's age is not straightforward, and there are constraints that prevent us from seeing the approximate age within a set of photographs. Finding a suitable dataset for training the model is important. Because real-time data is massive, the computation and time required to construct the model are significant. It has been a laborious effort, but after implementing numerous machine learning techniques the accuracy has increased considerably. By mapping the face according to the detected age, age estimation plays a significant part in applications such as biometric evaluation, virtual cosmetics, and virtual try-on apps for jewellery and eyewear. LensKart is one such application that allows people to try on lenses. Age estimation is a subfield of facial recognition and face tracking that, when integrated, may help estimate an individual's health. Many health care applications employ this method to monitor users' everyday activities in order to keep track of their health. Facial detection algorithms of this kind are used in China to identify service drivers and jaywalkers. The project incorporates a range of deep learning algorithms to predict age and gender. A CNN (convolutional neural network) is a popular technique for assessing age and gender. In this implementation, the project uses OpenCV and a CNN to predict a person's age and gender.

In paper [3], the authors created extraction functions for a face candidate region from colour images and for the parts of the face, and combined them with the gender and age estimation algorithm they had already created, so that the technique could be applied to face photos collected in real time. The testing results revealed gender and age hit ratios of 93.1 percent and 58.4 percent, respectively. Paper [4] outlines the entry of the Center for Data Science at the University of Washington to the PAN 2014 author profiling challenge. The authors investigate the predictive validity of many sets of variables taken from diverse genres of online social media in terms of age and gender. In [5], a novel method is provided to verify that the user's gender and age range are appropriately reflected in his or her photo. It adds a double-check validation layer based on deep learning that combines the user photo, gender, and date-of-birth form inputs, and recognises gender and calculates age from a single person's photo using a Convolutional Neural Network (CNN or ConvNet). Paper [6] uses a deep CNN to enhance age and gender prediction, and shows a considerable improvement in many tasks such as facial recognition. A basic convolutional network design is developed to improve on existing solutions in this sector. The deep CNN is trained to the point where the accuracy of age and gender prediction is 79 percent. Paper [7] presents a deep learning framework based on an ensemble of attentional and residual convolutional networks for accurately predicting the gender and age group of face pictures. Using the attention mechanism allows the model to concentrate on the most relevant and informative portions of the face, allowing it to make more accurate predictions. Paper [8] attempts to categorise human age and gender at a coarser level using feed-forward propagation neural networks. The final categorization is done at a finer level using 3-sigma control limits. The suggested method effectively distinguishes three age groups: children, middle-aged adults, and elderly individuals. Similarly, the suggested technique distinguishes two gender categories, male and female.
Paper [9] proposes a novel framework for age estimation using multiple linear regressions on the discriminative ageing manifold of face pictures. Face photos with ageing characteristics may exhibit various sequential patterns with low-dimensional distributions due to the temporal nature of age progression. Article [10] outlines and explains in detail the complete process of designing an Android mobile application for gender, age, and face recognition. Facial authentication and recognition are difficult challenges. To be trustworthy, facial recognition systems must operate with tremendous precision and accuracy. Images shot with diverse facial expressions or lighting conditions allow the system to be more precise and accurate than if only one photograph of each participant is saved in the database. Paper [11] offers a mechanism for automated age and gender categorization based on feature extraction from face photos. The primary concern of this technique is the variance in biometric characteristics between males and females. The approach employs two types of features, primary and secondary, and consists of three major stages: preprocessing, feature extraction, and classification. Paper [12] attempts to increase performance by employing a deep convolutional neural network (CNN). The proposed network architecture uses the Adience benchmark for gender and age estimation, and its performance is significantly improved by using real-world photos of the face. That work provides an approach for detecting a face in real time and estimating its age and gender. It initially determines whether or not a face is present in the acquired image.
If a face is present, it is identified, and the face region is returned marked with a coloured square, along with the person's age and gender. Paper [13] harnesses the strengths of convolutional neural networks in image applications by utilising a deep learning approach to extract face features and a factor analysis model to extract robust features. For learning the age estimation function, age-based and rank-based sequential age estimation learning approaches are studied, followed by the proposal of a divide-and-rule face age estimator. Study [14] provides a hybrid structure that combines two classifiers, a Convolutional Neural Network (CNN) and an Extreme Learning Machine (ELM), to cope with age and gender detection. The hybrid design takes advantage of their strengths: the CNN extracts features from input pictures while the ELM classifies intermediate outputs. The paper not only provides a full description of the structure, including the design of parameters and layers, an analysis of the hybrid architecture, and the derivation of back-propagation in this system throughout iterations, but also takes many precautions to reduce the danger of overfitting. Two prominent datasets, MORPH-II and the Adience benchmark, are then utilised to validate the hybrid structure. The work presented in article [15] is a full framework for video-based age and gender categorization that operates on embedded devices in real time and under unconstrained conditions. To lower memory needs by up to 99.5 percent, the research offers a segmental dimensionality reduction approach based on Enhanced Discriminant Analysis (EDA). Research [16] proposes a statistical pattern recognition strategy to solve this challenge.
In the suggested method, a Convolutional Neural Network (ConvNet/CNN), a deep learning technique, is employed as a feature extractor. The CNN receives input pictures and assigns importance (learnable weights and biases) to various aspects or objects in the image, allowing it to distinguish between them. A ConvNet needs far less preprocessing than other classification techniques.

A CNN (ConvNet) is a class of deep neural networks used primarily for processing visual data in deep learning. When thinking of neural networks, researchers typically think of matrix multiplication in most circumstances [17]; CNNs instead employ convolution. The organisation of the visual cortex and the neural network of the human brain serve as the major inspiration for the ConvNet design. Individual neurons only react to stimuli in a small area of the visual field called the receptive field. These fields are spread throughout the entire visual area. A CNN may detect spatial and temporal relationships in a picture by applying the right filters. The design is more appropriate for image datasets because of the reduction in the number of parameters involved and the reusability of weights. This implies that the network can be trained to better understand the complexity of the image. The purpose of the convolution operation is to extract features, such as edges, from the input images. There is no requirement that a neural network use only one convolutional layer. Typically the first convolutional layer captures basic traits like colours, edges, and gradient direction. With added layers, the architecture also adapts to high-level characteristics, giving a network that comprehends the dataset's photos as a whole in a manner comparable to that of a human. There are two possible results for the operation: one in which the convolved feature has smaller dimensions than the input, and one in which its dimensions are the same or larger. The former is achieved by applying Valid Padding and the latter by applying Same Padding.
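The effect of the two padding modes on the size of the convolved feature can be illustrated with a short calculation. The sketch below uses the commonly quoted size formulas for "valid" (no padding) and "same" (pad so that a stride of 1 preserves the size); the function name and parameters are illustrative and do not come from the paper.

```python
import math


def conv_output_size(n, k, stride=1, padding="valid"):
    """Output length along one axis for an input of length n and a kernel of length k."""
    if padding == "valid":
        # No padding: the kernel must fit entirely inside the input.
        return (n - k) // stride + 1
    if padding == "same":
        # Input is padded so that, with stride 1, the output keeps the input size.
        return math.ceil(n / stride)
    raise ValueError("padding must be 'valid' or 'same'")


valid_size = conv_output_size(5, 3)                  # 5x5 input, 3x3 kernel
same_size = conv_output_size(5, 3, padding="same")
```

With a 5x5 input and a 3x3 kernel, valid padding shrinks each side to 3, while same padding keeps it at 5.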

The connectivity between the layers of a convolutional neural network, a particular kind of feed-forward artificial neural network [18], is motivated by the visual cortex. Convolutional neural networks (CNNs), a subset of deep neural networks, are used to analyse visual imagery. They have uses in image and video detection, image categorization, natural language processing, and so on. Convolution is the first layer to extract features from an input image. Convolution learns visual features from small squares of input data, preserving the relationship between pixels. This mathematical operation takes two inputs, an image matrix and a filter or kernel. To create output feature maps, each input image is processed through a number of convolution layers with filters (kernels).

After the computer has read the image as a collection of pixels, convolution layers take small patches of the image. These patches are called features or filters. By matching these rough features at roughly the same positions in two images, the convolution layer detects similarities far better than whole-image matching. New input images are compared to these filters; if a match is found, the image is categorised accordingly. To do so, align the feature and the image patch, multiply each image pixel by the matching feature pixel, add up the products, and divide by the total number of pixels in the feature.
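The matching procedure described above (multiply pixel by pixel, add up, divide by the number of feature pixels) can be sketched in a few lines of plain Python. The +1/-1 pixel coding and the tiny 3x3 image are assumptions chosen only to keep the arithmetic easy to follow; they are not taken from the paper.

```python
def match_score(patch, feature):
    """Mean of the element-wise products between an image patch and a feature."""
    rows, cols = len(feature), len(feature[0])
    total = sum(patch[r][c] * feature[r][c]
                for r in range(rows) for c in range(cols))
    return total / (rows * cols)


def convolve(image, feature):
    """Slide the feature over every position where it fits and record its score."""
    f_rows, f_cols = len(feature), len(feature[0])
    out = []
    for top in range(len(image) - f_rows + 1):
        row = []
        for left in range(len(image[0]) - f_cols + 1):
            patch = [r[left:left + f_cols] for r in image[top:top + f_rows]]
            row.append(match_score(patch, feature))
        out.append(row)
    return out


# Pixels are coded as +1 (bright) and -1 (dark), an assumption for illustration.
feature = [[1, -1],
           [-1, 1]]
image = [[1, -1, 1],
         [-1, 1, -1],
         [1, -1, 1]]
scores = convolve(image, feature)
```

A score of 1.0 marks a perfect match between the patch and the feature, and -1.0 marks a perfect mismatch.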

The rectified linear unit, also known as the ReLU layer, removes all negative values from the filtered images and replaces them with zero. This is done to prevent the values from summing to zero. This transform function only activates a node if the input value is greater than a specific threshold; if the input is lower than zero, the output is zero, so all negative values are eliminated from the matrix.
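As a minimal sketch of this rectification step, the function below replaces every negative entry of a filtered matrix with zero and leaves the other entries unchanged. The sample values are made up for illustration.

```python
def relu(feature_map):
    """Replace negative entries with zero; keep non-negative entries as they are."""
    return [[max(0, value) for value in row] for row in feature_map]


filtered = [[0.77, -0.11],
            [-0.55, 1.0]]
rectified = relu(filtered)
```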

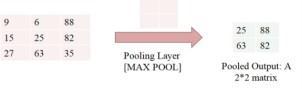

The size of the image is decreased in this layer. First choose a window size, then specify the required stride, and finally move the window across the filtered images, taking the highest value from each window. This pools the values and reduces the size of the matrix and hence the image. The fully connected layer receives the reduced matrix as its input.
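The window-and-stride procedure can be sketched as follows. The default 2x2 window with stride 2 is an assumption for the example; the paper does not fix these values at this point.

```python
def max_pool(feature_map, window=2, stride=2):
    """Keep the largest value inside each window as it slides over the map."""
    rows, cols = len(feature_map), len(feature_map[0])
    pooled = []
    for top in range(0, rows - window + 1, stride):
        pooled_row = []
        for left in range(0, cols - window + 1, stride):
            pooled_row.append(max(
                feature_map[r][c]
                for r in range(top, top + window)
                for c in range(left, left + window)
            ))
        pooled.append(pooled_row)
    return pooled


feature_map = [[1, 3, 2, 0],
               [4, 2, 1, 5],
               [0, 1, 7, 2],
               [3, 2, 1, 6]]
pooled = max_pool(feature_map)
```

Here the 4x4 map shrinks to 2x2. With a 2x2 window and a stride of 1, a 3x3 map would likewise shrink to 2x2.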

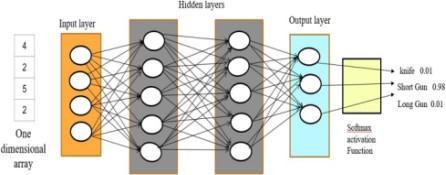

After the convolution layer, ReLU layer, and pooling layer have been applied, the layers must be stacked so that the output of one becomes the input of the next, ahead of the fully connected layer that performs the categorization of the input image. If a 2x2 matrix does not result, these layers must be repeated. Finally, the fully connected layer is used, which is where the classification itself takes place.

A machine learning model developed with Caffe is stored in a CAFFEMODEL file. It contains a model for image segmentation or classification that was trained using the Caffe framework.


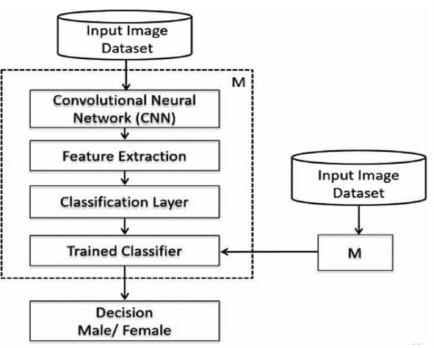

In this section we study the objective of our proposed method. The main objective was to test our CNN model for Raspberry Pi implementation. Convolutional neural networks (CNNs, or ConvNets) are a family of deep neural networks that are frequently used to analyse visual imagery in deep learning. Because of their shared-weight design and translation invariance properties, they are often referred to as shift-invariant or space-invariant artificial neural networks. No separate feature extraction is needed when a CNN is employed for classification, since the CNN also performs feature extraction. If a face is present, the image is sent directly into the CNN's classifier to determine its class. By taking into account all the characteristics in the output layer, a result with some predictive value is produced. These predictive values are determined through the SoftMax activation function.
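To make the role of SoftMax concrete, the sketch below turns a list of raw output-layer scores into values that are positive and sum to one, so they can be read as class probabilities. The input scores are invented for the example, and subtracting the maximum is a standard numerical-stability step rather than something the paper describes.

```python
import math


def softmax(scores):
    """Convert raw scores into probabilities that sum to 1."""
    shift = max(scores)  # subtracting the maximum avoids overflow in exp()
    exps = [math.exp(s - shift) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


probs = softmax([2.0, 0.5])  # hypothetical scores for two classes
```

The class with the larger raw score receives the larger probability, and equal scores receive equal probabilities.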

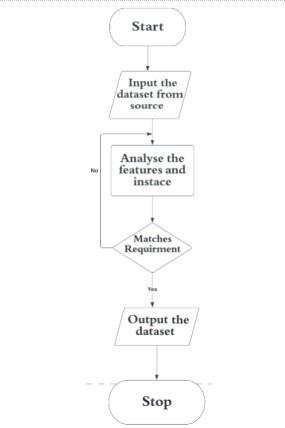

Prior to the preprocessing stage, we perform data acquisition. The flowchart for data collection is shown in Figure 2. Data is gathered from a source and examined thoroughly. Only if an image meets our criteria and is unique do we use it for training and testing.

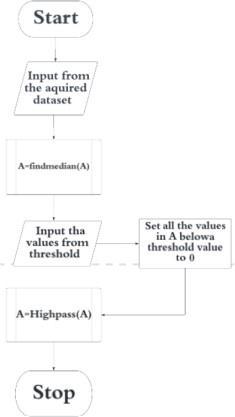

The flowchart for preprocessing the photos obtained from the previous phase is shown in Figure 3. To simplify processing, the picture is first converted from RGB to greyscale. Next, noise is removed using an averaging filter, the background is removed using basic global thresholding, and the image is sharpened by amplifying the finer features using a high-pass filter.


Fig. 2. Data Acquisition

Fig. 3. Preprocessing flowchart

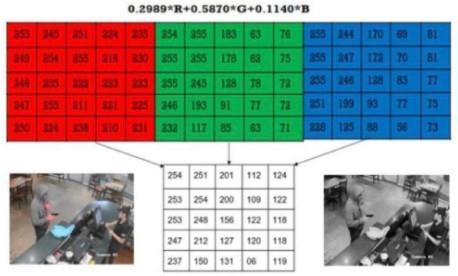

The first stage of preprocessing converts the image from RGB to greyscale. Greyscale photographs carry less information than RGB ones. They are, nonetheless, ubiquitous in image processing, since a greyscale image takes less storage space and is faster to process, particularly when dealing with sophisticated computations.
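One common way to perform this conversion is a weighted sum of the three colour channels. The sketch below assumes the widely used ITU-R BT.601 luma weights (0.299, 0.587, 0.114); the paper does not state which weights it uses, so treat them as an illustrative choice.

```python
def rgb_to_gray(image):
    """Map each (R, G, B) pixel to a single intensity using BT.601 luma weights."""
    return [[round(0.299 * r + 0.587 * g + 0.114 * b) for (r, g, b) in row]
            for row in image]


rgb = [[(255, 255, 255), (0, 0, 0)],
       [(255, 0, 0), (0, 0, 255)]]
gray = rgb_to_gray(rgb)
```

Each pixel now needs one value instead of three, which is the storage and speed saving mentioned above.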


Fig. 4. Conversion from RGB to Greyscale

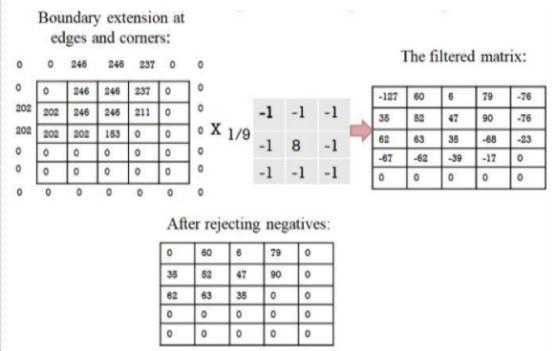

A noise reduction algorithm is used to remove or reduce noise in a picture. Noise reduction algorithms smooth the whole image while leaving areas close to contrast boundaries, which reduces or eliminates the visibility of noise. Noise reduction is the second stage of picture preprocessing. The greyscale image obtained in the preceding phase is used as input. The technique used here for noise removal is median filtering.

Fig. 5. Noise Removal using Median Filtering

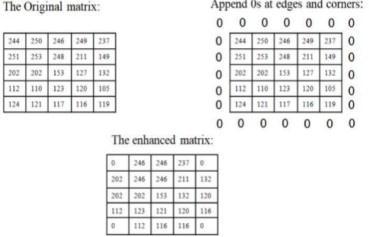

The median filter is a nonlinear digital filtering method frequently used to eliminate noise from a picture or signal. Here, the matrix representing the greyscale image is padded with 0s at the edges and corners. For each 3x3 neighbourhood, arrange the nine elements in ascending order, choose the median (middle) element, and write that value to the corresponding pixel position.
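The steps above can be sketched in plain Python as follows: pad the image with zeros, sort the nine values of each 3x3 neighbourhood, and keep the middle one. The 3x3 test image with a single bright noise pixel is an invented example.

```python
def median_filter(image):
    """3x3 median filter with zero padding at the borders."""
    rows, cols = len(image), len(image[0])
    # Surround the image with a one-pixel border of zeros.
    padded = [[0] * (cols + 2)]
    padded += [[0] + list(row) + [0] for row in image]
    padded.append([0] * (cols + 2))

    out = []
    for r in range(rows):
        out_row = []
        for c in range(cols):
            window = sorted(padded[r + dr][c + dc]
                            for dr in range(3) for dc in range(3))
            out_row.append(window[4])  # middle of the nine sorted values
        out.append(out_row)
    return out


noisy = [[10, 10, 10],
         [10, 255, 10],
         [10, 10, 10]]
cleaned = median_filter(noisy)
```

The isolated value 255 in the centre is replaced by 10. Because of the zero padding, the four corner pixels become 0 in this small example, which is a known side effect of that border choice.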

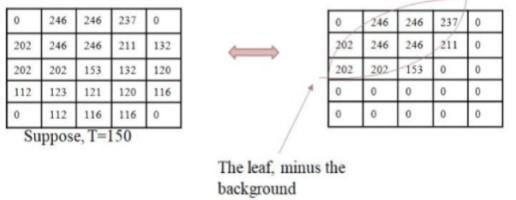

Thresholding is a form of image segmentation in which a picture's pixel values are modified to facilitate analysis. Keep A(i, j) if it is greater than or equal to the threshold T. If not, substitute 0 for the value.

Fig. 6. Image sharpening using High-pass filtering


In this case, the value of T may be changed in the front end to accommodate the requirements of different pictures. Here, trial and error is used to find the most appropriate threshold value. Figure 6 displays thresholding using basic global thresholding.
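The thresholding rule, keeping a pixel when it is at least T and otherwise setting it to 0, is short enough to express directly. The threshold of 128 and the sample pixel values below are arbitrary choices for illustration, since the paper selects T by trial and error.

```python
def global_threshold(image, t):
    """Keep pixels that are >= t; replace all others with 0."""
    return [[value if value >= t else 0 for value in row] for row in image]


thresholded = global_threshold([[12, 200],
                                [128, 90]], t=128)
```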

Image sharpening is any enhancement method that emphasises a picture's edges and fine details. Increasing the sharpening strength results in a sharper image.

A picture can be made to look crisper by using a high-pass filter. These filters draw attention to the image's fine details. Here, the thresholding output is provided as input. Before the filter is applied, the border pixels are padded with the closest pixel values.

The value for a given pixel location is obtained by multiplying the elements of the 3x3 input matrix by the corresponding elements of the filter matrix, for example A(1,1)*B(1,1). All the elements of the 3x3 input matrix are multiplied in this way, and the sum of the products is divided by 9 to give the result. The value at every pixel position is determined in the same manner. Since there is no such thing as negative light intensity, negative values are set to zero.
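A minimal sketch of this filtering step is given below. The paper does not list the entries of its filter matrix, so the kernel used here (8 in the centre, -1 elsewhere) is an assumed, commonly used high-pass kernel. Border pixels are handled by repeating the closest value, the products are summed and divided by 9, and negative results are set to zero, as described above.

```python
# Assumed high-pass kernel; the paper does not specify its filter matrix.
HIGH_PASS = [[-1, -1, -1],
             [-1, 8, -1],
             [-1, -1, -1]]


def high_pass(image, kernel=HIGH_PASS):
    """Apply a 3x3 filter with replicated borders, divide by 9, clip negatives."""
    rows, cols = len(image), len(image[0])

    def pixel(r, c):
        # Replicate the closest border value for positions outside the image.
        r = min(max(r, 0), rows - 1)
        c = min(max(c, 0), cols - 1)
        return image[r][c]

    out = []
    for r in range(rows):
        out_row = []
        for c in range(cols):
            total = sum(pixel(r + dr - 1, c + dc - 1) * kernel[dr][dc]
                        for dr in range(3) for dc in range(3))
            out_row.append(max(0, total / 9))
        out.append(out_row)
    return out


spot = [[0, 0, 0],
        [0, 9, 0],
        [0, 0, 0]]
sharpened = high_pass(spot)
```

With this kernel a flat region maps to zero, while an isolated bright detail is kept, which is the behaviour expected of a high-pass filter.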

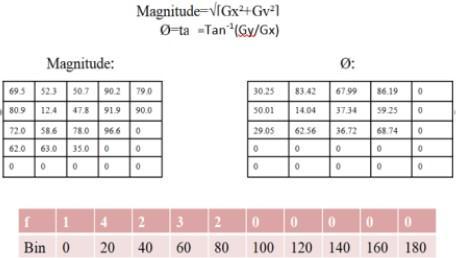

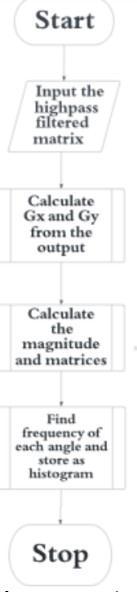

Feature extraction is a dimensionality reduction technique that divides a large amount of raw data into smaller, easier-to-process groups. To extract the features from the preprocessed picture received as input, a technique known as Histogram of Oriented Gradients (HOG) is employed. It entails several steps, such as determining Gx and Gy, the gradients around each pixel along the x and y axes. The magnitude and orientation of the gradient at each pixel are then calculated using the applicable formulas. The angles and their frequencies are then used to build a histogram, which is the module's output.
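The gradient step can be illustrated as follows. The sketch assumes simple central differences for Gx and Gy and the usual formulas, magnitude = sqrt(Gx^2 + Gy^2) and angle = atan2(Gy, Gx) folded into the range 0 to 180 degrees; the paper refers to "applicable formulas" without writing them out, so these are assumptions.

```python
import math


def gradient_at(image, r, c):
    """Return (magnitude, angle in degrees) of the gradient at an interior pixel."""
    gx = image[r][c + 1] - image[r][c - 1]  # horizontal difference
    gy = image[r + 1][c] - image[r - 1][c]  # vertical difference
    magnitude = math.hypot(gx, gy)
    angle = math.degrees(math.atan2(gy, gx)) % 180  # unsigned orientation
    return magnitude, angle


patch = [[0, 0, 0],
         [0, 0, 3],
         [0, 4, 0]]
magnitude, angle = gradient_at(patch, 1, 1)
```

For this patch Gx is 3 and Gy is 4, giving a magnitude of 5 and an orientation of roughly 53 degrees.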

Convolutional neural networks are a family of deep neural networks that are frequently used to analyse visual imagery in deep learning. Because of their shared-weight design and translation invariance properties, they are often referred to as shift-invariant or space-invariant artificial neural networks. No separate feature extraction is needed when a CNN is employed for classification, since the CNN also performs feature extraction. If a face is present, the preprocessed image is sent directly into the CNN's classifier to determine its class. By taking into account all the characteristics in the output layer, a result with some predictive value is produced. These values are determined through the SoftMax activation function.


Figure 9 depicts the feature extraction process using HOG. Magnitude represents illumination, and degree represents the angle of orientation. Following the calculation of the orientation angles, the frequencies of the angles in specific intervals are recorded and provided as input to the classifier. Zeroes are not taken into account while calculating frequency. For instance, the frequency is written as 2 when there are two occurrences in the range 40 to 59.
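The frequency count can be sketched as a simple histogram. The 20-degree bin width is inferred from the "40 to 59" interval mentioned above, and the 0 to 180 degree range and the sample angles are assumptions made for the example.

```python
def orientation_histogram(angles, bin_width=20, max_angle=180):
    """Count how many non-zero angles fall into each interval of bin_width degrees."""
    counts = [0] * (max_angle // bin_width)
    for angle in angles:
        if angle == 0:
            continue  # zeroes are not counted
        index = min(int(angle // bin_width), len(counts) - 1)
        counts[index] += 1
    return counts


angles = [0, 45, 52, 10, 0, 170]
hist = orientation_histogram(angles)
```

Index 2 of the result covers the interval 40 to 59, which receives a count of 2 here because 45 and 52 both fall inside it.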

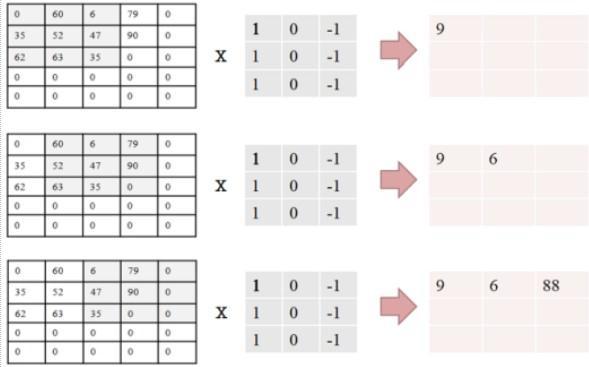

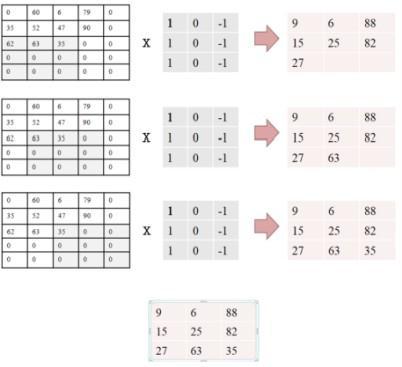

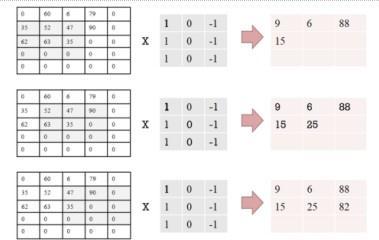

The first stage of the CNN is the convolutional layer, which takes a 3x3 region of the matrix derived from the high-pass filter as input. This 3x3 matrix is multiplied element-wise by the filter matrix at the corresponding position, and the sum is written to that position. This is seen in the image below. This output is sent to the pooling layer, which further reduces the matrix. The convolutional layer is shown in Figures 10, 11 and 12.


Fig. 10. Convolutional Neural Network Input matrix

Before pooling, convolution is followed by rectification of negative values to 0s. This is not observable in this case since all values are positive. Before pooling, numerous repetitions of both are required.

Fig. 11. Convolutional Neural Network Input matrix

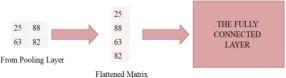

In the pooling layer, a 3x3 matrix is reduced to a 2x2 matrix by picking the maximum of each 2x2 window at a specific position. The pooling layer is shown in Figure 13.

Fig. 12. Convolutional Neural Network output matrix


The pooling layer's output is flattened, and this flattened matrix is passed into the fully connected layer. The fully connected layer contains several layers, including the input layer, hidden layer, and output layer. The result is then passed into the classifier, which in this case uses the SoftMax activation function to determine whether or not a face is present in the image. The fully connected layer and output layer are depicted in Figures 14 and 15.

The picture is preprocessed by converting the RGB image to greyscale, feature extraction is performed by the neural network's first layer, which is the convolution layer, and detection is performed by the fully connected layers of the convolutional neural network.

Neural network (NN) technology is one of the most widely utilised methodologies in current Artificial Intelligence (AI). It has been used to solve problems such as forecasting, adaptive control, recognition, categorization, and many more. An artificial neural network (NN) is a simplified representation of a biological brain. It is made up of components known as neurons. An artificial neuron is a mathematical representation of a biological neuron. Because an artificial neural network is based on the real brain, it shares conceptual features such as the ability to learn. Convolutional Neural Networks (CNN) and Deep Learning (DL) are newer developments in the field of NN computing. A CNN is a neural network with a distinctive structure that was created to mimic the human visual system (HVS). Thus, CNNs are best suited for handling computer vision problems such as object identification and the categorization of image and video data. They have also been employed for speech recognition and text translation with success. The growing popularity of deep learning technology has spurred the creation of several new CNN programming frameworks.


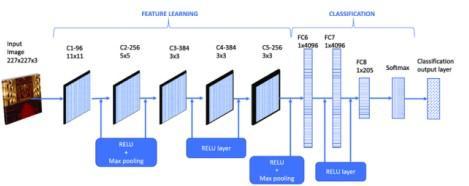

Caffe, TensorFlow, Theano, Torch, and Keras are the most popular frameworks. Convolutional neural networks differ from other neural networks in that they function better with picture, voice, or audio signal inputs. They have three different sorts of layers:

1. Convolutional layer

2. Pooling layer

3. Fully-connected (FC) layer

A convolutional network's initial layer is the convolutional layer. While convolutional layers can be followed by other convolutional layers or pooling layers, the last layer is the fully-connected layer. The CNN becomes more complex with each layer, recognising larger portions of the picture. Earlier layers concentrate on fundamental aspects like colours and edges. As the visual data travels through the CNN layers, it begins to distinguish bigger components or features of the item, eventually identifying the target object.

Fig. 16. CNN architecture diagram

O. Training a CNN using CAFFE in 4 steps :

1. Data preparation: In this step, the images are cleaned and stored in a format that can be used by Caffe. A Python script handles both image preprocessing and storage.

2. Model definition: In this step, a CNN architecture is chosen and its parameters are defined in a configuration file with the extension .prototxt.

3. Solver definition: The solver is responsible for model optimization. The solver parameters are defined in a configuration file with the extension .prototxt.

4. Model training: The model is trained by executing one caffe command from the terminal. After training, the trained model is saved in a file with the extension .caffemodel.

Although the gender prediction network worked well, the age prediction network did not meet our expectations. Searching the paper for an explanation, we found the confusion matrix for the age prediction model.

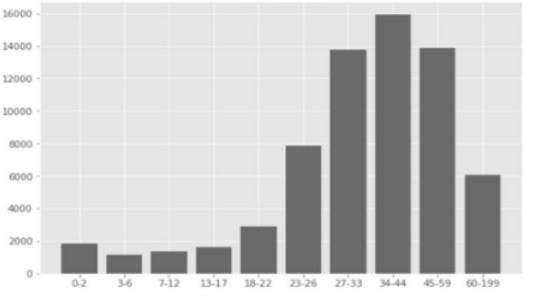

The graph in Figure 17 allows the following observations to be made: the prediction accuracy for the age ranges 0-2, 4-6, 8-13, and 25-32 is quite high. The results are strongly skewed toward the 25-32 age group.

Fig. 17. Dataset results bin-wise

This indicates that it is quite easy for the network to mix up ages between 15 and 43. Therefore, there is a good possibility that the predicted age will be between 25 and 32, even if the real age is between 15 and 20 or between 38 and 43. The results section further supports this. In addition, it was found that padding around the detected face increased the model's accuracy. This could be because standard face photos were used as training input instead of the closely cropped faces obtained after face detection. This project also examined the use of face alignment prior to prediction and found that while it improved some cases, it worsened others. If you work with non-frontal faces most of the time, using alignment could be a smart option.
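The padding idea can be sketched as a small helper that enlarges the detected face box before cropping while keeping it inside the image. The function name, the (x1, y1, x2, y2) box layout, and the 20-pixel default are hypothetical; the paper does not report the padding value it used.

```python
def pad_box(box, image_width, image_height, padding=20):
    """Grow a face box by `padding` pixels on every side, clamped to the image."""
    x1, y1, x2, y2 = box
    return (
        max(0, x1 - padding),
        max(0, y1 - padding),
        min(image_width - 1, x2 + padding),
        min(image_height - 1, y2 + padding),
    )


# Hypothetical detection result on a 120x400 image.
padded_box = pad_box((10, 50, 110, 150), image_width=120, image_height=400)
```

The clamping matters for faces near the image border, where an unclamped box would index outside the frame.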

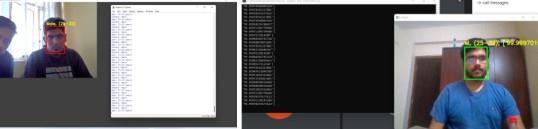

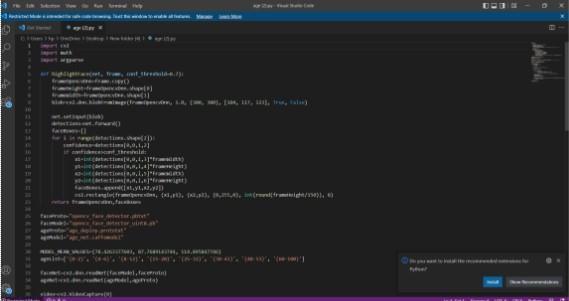

Fig. 18. Code snapshot for age and gender detection

Fig. 19. Result snapshots using Raspberry Pi setup

The future scope of the project is:

1. In order to make the project more accessible, it might be turned into a web application or a mobile application.

2. In the future, it may be improved so that it can determine the age and gender of a group of people in the image.


The project titled Vehicle Driver Age Estimation Using Neural Network aims to predict the age and gender of people accurately. The first objective of the project was to detect and extract the facial region from the input image fed by the camera. The second objective was to select the frontal face image from the extracted facial region using head pose estimation. The third objective was to pretrain the convolutional neural network model and preprocess the images from the input data. The final objective was to develop a convolutional neural network using the Caffe framework to assess the driver's age with the best accuracy. To provide a varied dataset for pretraining the CNN for facial recognition, a sizable face dataset with a wide age range was compiled from several sources. Approximately 22,000 photos were acquired from UTK, while roughly 38,000 were taken from IMDB, creating a sizable aggregated dataset. After deployment, the CNN model created for the project was able to recognise faces and gender with an accuracy of about 98.1 percent. OpenCV's age and gender detection will be very helpful for estimating a driver's age and gender. When coupled, CNN and OpenCV can produce excellent results. The project's combined UTK and IMDB dataset produces results with improved precision. The Caffe model files are used as pre-trained models. This project demonstrated how face detection using OpenCV may be done without the use of any extra expensive procedures.

We would like to thank our project mentor, Prof. Rajithkumar B K, for guiding us in successfully completing this project. She helped us with her valuable suggestions on techniques and methods to be used in completing the project. We would also like to thank our college and the Head of Department, Dr. H V Ravish Aradhya, for allowing us to choose this topic.

1. Verma, U. Marhatta, S. Sharma, and V. Kumar, “Age prediction using image dataset using machine learning,” International Journal of Innovative Technology and Exploring Engineering, vol. 8, pp. 107–113, 2020.

2. R. Iga, K. Izumi, H. Hayashi, G. Fukano, and T. Ohtani, A gender and age estimation system from face images, IEEE, 2003.

3. J. Marquardt, G. Farnadi, G. Vasudevan, et al., Age and gender identification in social media, 2014.

4. A. M. Abu Nada, E. Alajrami, A. A. Al-Saqqa, and S. S. Abu-Naser, “Age and gender prediction and validation through single user images using cnn,” 2020.

5. I. Rafique, A. Hamid, S. Naseer, M. Asad, M. Awais, and T. Yasir, Age and gender prediction using deep convolutional neural networks, IEEE, 2019.

6. M. A. Alvarez-Carmona, L. Pellegrin, M. Montes-y-Gómez, et al., A visual approach for age and gender identification on twitter, 2018.

7. A. Abdolrashidi, M. Minaei, E. Azimi, and S. Minaee, Age and gender prediction from face images using attentional convolutional network, 2020.

8. M. Dileep and A. Danti, Human age and gender prediction based on neural networks and three sigma control limits, 2018.

9. Razalli and M. H. Alkawaz, Real-time face tracking application with embedded facial age range estimation algorithm, IEEE, 2019.

10. A. Salihbašić and T. Orehovački, Development of android application for gender, age and face recognition using opencv, IEEE, 2019.

11. T. R. Kalansuriya and A. T. Dharmaratne, Neural network based age and gender classification for facial images, 2014.

12. Biradar, B. Torgal, N. Hosamani, R. Bidarakundi, and S. Mudhol, Age and gender detection system using raspberry pi, 2019.


13. Liao, Y. Yan, W. Dai, and P. Fan, Age estimation of face images based on cnn and divide-and-rule strategy, 2018.

14. M. Duan, K. Li, C. Yang, and K. Li, A hybrid deep learning cnn–elm for age and gender classification, 2018.

15. Azarmehr, R. Laganiere, W.-S. Lee, C. Xu, and D. Laroche, Real-time embedded age and gender classification in unconstrained video, 2015.

16. Verma, U. Marhatta, S. Sharma, and V. Kumar, Age prediction using image dataset using machine learning, 2020.

17. M. Duan, K. Li, C. Yang, and K. Li, “A hybrid deep learning cnn–elm for age and gender classification,” Neurocomputing, vol. 275, pp. 448–461, 2018.

18. Levi and T. Hassner, “Age and gender classification using convolutional neural networks,” in Proceedings of the IEEE conference on computer vision and pattern recognition workshops, 2015, pp. 34–42.