International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 09 Issue: 08 | Aug 2022 www.irjet.net p-ISSN: 2395-0072


¹Researcher, Bengaluru, Karnataka, India

Abstract - Most of the information a driver receives is conveyed through the eyes, which are a vital part of the body. When a driver is tired, cues such as blinking, yawning rate and head tilt differ from those in a normal state. In this work we propose a Driver-Drowsiness Detection System that uses video to monitor a driver's fatigue state, including yawning, eye-closure duration and head tilt, without requiring the driver to wear sensors on the body. To overcome the limitations of earlier methods, we use a face-tracking algorithm that improves tracking reliability. To locate the facial regions, we use a technique based on 68 facial key points, and we then assess the driver's state from these regions of the head. By combining eye, mouth and head cues, the Driver-Drowsiness Detection System can raise a fatigue alarm to alert the driver.

Key Words: Image Processing, Drowsiness, Eye movements, Facial movements

Increased demand for modern mobility has driven faster growth in the number of vehicles in recent years, and the automobile is now a necessary form of transport for most people. In 2017, 97 million vehicles were sold worldwide, up 0.3 percent from 2016. In 2018, the total number of automobiles in use worldwide was estimated to exceed 1 billion. Although the vehicle has changed people's lifestyles and made daily tasks more convenient, it is also linked to several negative consequences, such as road accidents. According to the National Highway Traffic Safety Administration, there were 7,277,000 traffic accidents in 2016, with 37,461 people killed and 3,144,000 people injured. Fatigued driving was responsible for roughly 20% to 30% of the traffic accidents in this study. Fatigued driving is therefore a major and hidden risk in traffic collisions, and fatigue-driving detection technology has become a prominent research area in recent years.

Detection procedures fall into two types: subjective and objective. In subjective detection, the driver takes part in the evaluation, which relies on the driver's own impressions gathered through procedures such as self-questioning, self-evaluation and filling out questionnaires. These data are then used to estimate how many vehicles are being driven by tired drivers, helping drivers to plan their schedules better.

The objective detection approach, on the other hand, does not require feedback from drivers, because it analyses the driver's physiological condition and driving-behaviour parameters in real time; the information gathered is used to determine the driver's level of fatigue. Objective detection is further divided into two categories: contact and non-contact. Non-contact detection is less expensive and more convenient than contact detection because it needs neither sensors attached to the driver's body nor a sophisticated camera, allowing the device to be used in more cars.

The non-contact approach has been widely used for fatigue-driving detection because it is easy to install and inexpensive. Attention Technologies and Smart Eye, for example, use the motion of the driver's eyes and the position of the driver's head to estimate the fatigue level. Alerting a concerned person is another technique that makes such a system more reliable in the real world.

In the existing approach, changes in the eye-steering correlation can indicate distraction. Autocorrelation and cross-correlation of the horizontal eye position and the steering-wheel angle show that eye movements associated with road-scanning behaviour have a low eye-steering correlation. The eye-steering correlation is measured on a straight road; because the route is straight, the steering motion and the eye glances show a low association. The goal of this approach is to identify driver distraction from visual behaviour or driving performance, so the correlation is used to describe the relationship between visual behaviour and vehicle control. The approach assesses the eye-steering correlation on a straight road, assuming that it differs qualitatively and quantitatively from that on a curving road and that it is sensitive to distraction. On curving roads, a high eye-steering correlation has been observed in the relationship between visual behaviour and vehicle control, revealing a basic perception-control mechanism that plays a major role in driving.
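The cross-correlation analysis described above can be illustrated with a plain-Python sketch of normalized cross-correlation evaluated over a range of lags. It assumes that the horizontal eye position and the steering-wheel angle have already been sampled at a common rate into two lists; the function names and the lag range are hypothetical and are not taken from the system being described.

```python
from math import sqrt
from typing import Sequence, Tuple


def normalized_cross_correlation(x: Sequence[float], y: Sequence[float], lag: int) -> float:
    """Pearson correlation between x[t] and y[t + lag] over the overlapping samples."""
    if lag >= 0:
        a, b = x[: len(x) - lag], y[lag:]
    else:
        a, b = x[-lag:], y[: len(y) + lag]
    n = min(len(a), len(b))
    a, b = a[:n], b[:n]
    if n < 2:
        raise ValueError("not enough overlapping samples")
    mean_a = sum(a) / n
    mean_b = sum(b) / n
    cov = sum((p - mean_a) * (q - mean_b) for p, q in zip(a, b))
    var_a = sum((p - mean_a) ** 2 for p in a)
    var_b = sum((q - mean_b) ** 2 for q in b)
    if var_a == 0 or var_b == 0:
        return 0.0  # a constant signal carries no correlation information
    return cov / sqrt(var_a * var_b)


def peak_eye_steering_correlation(eye_x: Sequence[float], steering: Sequence[float],
                                  max_lag: int) -> Tuple[int, float]:
    """Return (lag, r) with the largest |r| for lags in [-max_lag, max_lag]."""
    best = (0, 0.0)
    for lag in range(-max_lag, max_lag + 1):
        r = normalized_cross_correlation(eye_x, steering, lag)
        if abs(r) > abs(best[1]):
            best = (lag, r)
    return best
```

A high peak |r| means steering closely follows where the eyes point (as on curves); a low peak is what the approach above associates with road scanning or distraction.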

In computer vision, object tracking is a critical problem. Human-computer interaction, behaviour recognition, robotics and surveillance are just a few of the disciplines where it can be used. Given the initial state of the target in a previous frame, object tracking predicts the target's position in each frame of the image sequence. According to Lucas, the pixel relationship between adjacent frames of a video sequence and the displacement of those pixels can be used to track a moving target. This technique, however, can only recognise a medium-sized target that moves between two frames. Building on recent advances in correlation filters for computer vision, Bolme presented the Minimum Output Sum of Squared Error (MOSSE) filter, which produces robust correlation filters for following an object. Although MOSSE is computationally very efficient, its precision is limited, and it can only use the grey-level information of a single channel [1].
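For reference, the closed-form MOSSE filter can be sketched in NumPy as below. This is a minimal single-channel version, matching the grey-level limitation noted above: it trains on fixed-size greyscale patches against a Gaussian target response, assuming the target sits at `center` in every training patch. Bolme's preprocessing (log transform, normalization, cosine window) and the online running-average update are deliberately omitted, and the parameter values are illustrative.

```python
import numpy as np


def gaussian_response(shape, center, sigma=2.0):
    """Desired correlation output G: a 2-D Gaussian peaked at the target centre."""
    rows, cols = np.indices(shape)
    cy, cx = center
    return np.exp(-((rows - cy) ** 2 + (cols - cx) ** 2) / (2.0 * sigma ** 2))


def train_mosse(patches, center, sigma=2.0, lam=1e-3):
    """Closed-form MOSSE filter in the Fourier domain (conjugate form H*).

    H* = sum_i G * conj(F_i) / (sum_i F_i * conj(F_i) + lam)
    """
    G = np.fft.fft2(gaussian_response(patches[0].shape, center, sigma))
    numerator = np.zeros_like(G)
    denominator = np.zeros_like(G)
    for patch in patches:
        F = np.fft.fft2(patch)
        numerator += G * np.conj(F)
        denominator += F * np.conj(F)
    return numerator / (denominator + lam)  # lam regularizes near-zero frequencies


def locate(filter_conj, patch):
    """Correlate a patch with the filter; return the (row, col) of the response peak."""
    response = np.real(np.fft.ifft2(np.fft.fft2(patch) * filter_conj))
    return np.unravel_index(np.argmax(response), response.shape)
```

Because the correlation is computed with the FFT, a circular shift of the patch shifts the response peak by the same amount, which is what lets the filter follow a moving target at low cost.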


The driver's eyes are tracked using a real-time eye-tracking system. When a motorist is tired or distracted, his or her reaction time is slower across different driving circumstances, so the chance of an accident increases. There are three ways to identify tiredness in a driver. The first relies on physiological changes in the body, such as pulse rate, brain signals and cardiac activity, which a wearable wristband system can detect. The proposed eye-tracking system implements the second method, using behavioural metrics such as sudden nodding, eye movements, yawning and blinking.

The developed system aims to achieve five primary goals:

a. Affordable: The technology must be affordable, as cost is one of the most important considerations during the design phase.

b. Portable: The system should be portable and easy to install in different vehicle models.

c. Secure: The system's security is ensured by placing each component in the proper location.

d. Quick: Because an accident occurs in a matter of seconds, the reaction and processing time in an emergency is among the most important variables.

e. Accurate: Because the system must be precise, the most precise algorithms have been chosen.

The system addresses one of the major causes of road accidents in the real world and is one step towards safeguarding precious lives by preventing such accidents. The proposed system is based on dlib facial-landmark detection and solvePnP (Perspective-n-Point) head-pose estimation.

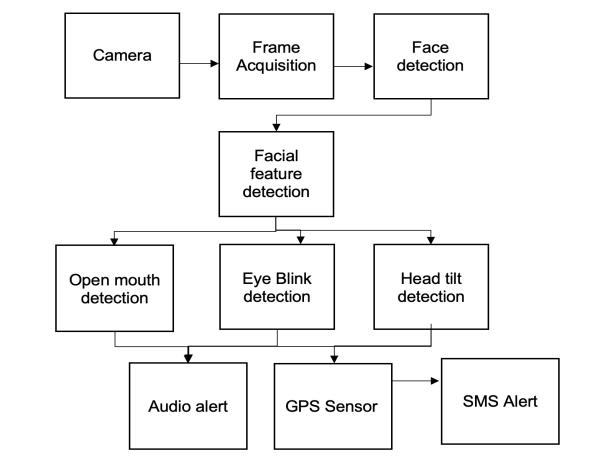

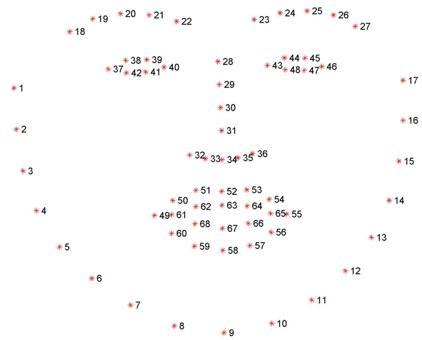

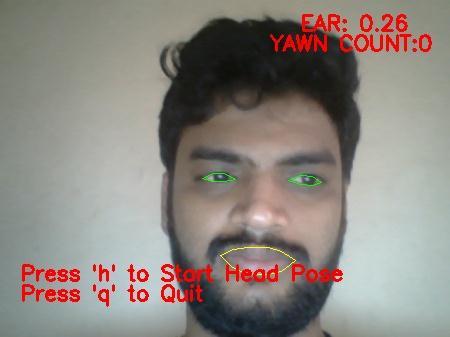

The camera is initialized first, and frames are acquired in the next step. Once a frame is acquired, the face is detected in it. Next, the facial features are detected and the positions of the eyes, mouth and head are identified.

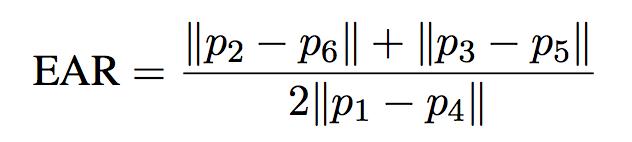

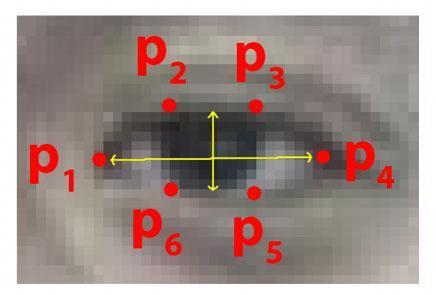

The following formula is used to calculate the Eye Aspect Ratio (EAR):

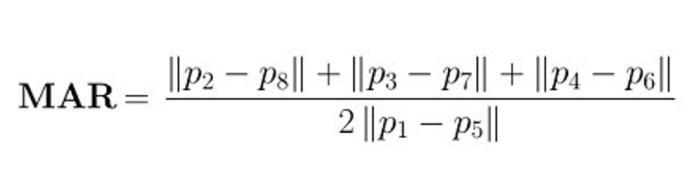
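The widely used EAR definition, due to Soukupová and Čech, is $\mathrm{EAR} = \frac{\lVert p_2 - p_6\rVert + \lVert p_3 - p_5\rVert}{2\,\lVert p_1 - p_4\rVert}$, where $p_1 \dots p_6$ are the six eye landmarks, $p_1$ and $p_4$ being the eye corners. We assume the formula in the image above is this one; the following is a minimal Python sketch with an illustrative function name.

```python
from math import dist
from typing import Sequence, Tuple

Point = Tuple[float, float]


def eye_aspect_ratio(eye: Sequence[Point]) -> float:
    """EAR from six (x, y) eye landmarks ordered p1..p6 as in the 68-point scheme.

    p1 and p4 are the horizontal eye corners; (p2, p6) and (p3, p5) are the
    vertical pairs on the upper and lower eyelids.
    """
    if len(eye) != 6:
        raise ValueError("expected exactly six eye landmarks")
    p1, p2, p3, p4, p5, p6 = eye
    vertical = dist(p2, p6) + dist(p3, p5)
    horizontal = dist(p1, p4)
    return vertical / (2.0 * horizontal)
```

The ratio stays roughly constant while the eye is open and falls towards zero as the lids close, so a threshold on EAR, held over time, separates open from closed eyes.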

The following formula is used to calculate the Mouth Aspect Ratio (MAR):
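MAR definitions vary between implementations. One common form, assumed here because it gives a value near zero for a closed mouth (as in the graph discussed later), uses the eight inner-lip landmarks with 0-based indices 60 to 67: $\mathrm{MAR} = \frac{\lVert p_{61} - p_{67}\rVert + \lVert p_{62} - p_{66}\rVert + \lVert p_{63} - p_{65}\rVert}{3\,\lVert p_{60} - p_{64}\rVert}$. The sketch below implements that assumed definition; it may differ from the authors' exact formula.

```python
from math import dist
from typing import Sequence, Tuple

Point = Tuple[float, float]


def mouth_aspect_ratio(inner_mouth: Sequence[Point]) -> float:
    """MAR from the 8 inner-lip landmarks (68-point indices 60..67, in that order).

    q[0] and q[4] are the inner mouth corners; (q[1], q[7]), (q[2], q[6]) and
    (q[3], q[5]) are the vertical upper/lower inner-lip pairs.
    """
    if len(inner_mouth) != 8:
        raise ValueError("expected exactly eight inner-lip landmarks")
    q = inner_mouth
    vertical = dist(q[1], q[7]) + dist(q[2], q[6]) + dist(q[3], q[5])
    horizontal = dist(q[0], q[4])
    return vertical / (3.0 * horizontal)
```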

We estimate the head pose using Perspective-n-Point (PnP), which matches detected 2D facial landmarks to the following 3D facial reference points:

a. Tip of the nose: (0.0, 0.0, 0.0)

b. Chin: (0.0, -330.0, -65.0)

c. Left corner of the left eye: (-225.0, 170.0, -135.0)

d. Right corner of the right eye: (225.0, 170.0, -135.0)

e. Left corner of the mouth: (-150.0, -150.0, -125.0)

f. Right corner of the mouth: (150.0, -150.0, -125.0)
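The PnP step can be sketched with OpenCV's `cv2.solvePnP` and the six model points listed above. Several assumptions are made: the 2D points come from dlib's 68-point landmarks at the 0-based indices 30, 8, 36, 45, 48 and 54 (nose tip, chin, image-left eye corner, image-right eye corner, left and right mouth corners); the focal length is approximated by the image width with the principal point at the image centre; and lens distortion is ignored. The helper names are hypothetical.

```python
import numpy as np

# 3-D reference points from the list above (generic face model, arbitrary units).
MODEL_POINTS = np.array([
    (0.0, 0.0, 0.0),           # tip of the nose
    (0.0, -330.0, -65.0),      # chin
    (-225.0, 170.0, -135.0),   # left corner of the left eye
    (225.0, 170.0, -135.0),    # right corner of the right eye
    (-150.0, -150.0, -125.0),  # left corner of the mouth
    (150.0, -150.0, -125.0),   # right corner of the mouth
], dtype=np.float64)

# Assumed 0-based dlib landmark indices, in the same order as MODEL_POINTS.
LANDMARK_IDS = (30, 8, 36, 45, 48, 54)


def approx_camera_matrix(width, height):
    """Pinhole intrinsics assuming focal length ~ image width, centred principal point."""
    return np.array([[width, 0.0, width / 2.0],
                     [0.0, width, height / 2.0],
                     [0.0, 0.0, 1.0]], dtype=np.float64)


def image_points_from_landmarks(landmarks):
    """Pick the six 2-D landmarks that correspond to MODEL_POINTS."""
    return np.array([landmarks[i] for i in LANDMARK_IDS], dtype=np.float64)


def estimate_head_pose(landmarks, width, height):
    """Return (rotation_vector, translation_vector) from cv2.solvePnP, or None on failure."""
    import cv2  # imported here so the helpers above can be used without OpenCV

    ok, rvec, tvec = cv2.solvePnP(
        MODEL_POINTS,
        image_points_from_landmarks(landmarks),
        approx_camera_matrix(width, height),
        np.zeros((4, 1)),  # assume no lens distortion
        flags=cv2.SOLVEPNP_ITERATIVE,
    )
    return (rvec, tvec) if ok else None
```

The rotation vector returned here is in axis-angle form; it still has to be converted to angles before deciding whether the head is straight.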

After calculating EAR, MAR & PnP, system alerts through speaker if it detects eyes closed for more than 5 secs and yawningsomuch.Systemalsoalertsdriverwhenhishead

International Research Journal

Engineering

Technology (IRJET) e-ISSN: 2395-0056

Volume: 09 Issue: 08 | Aug 2022 www.irjet.net p-ISSN: 2395-0072

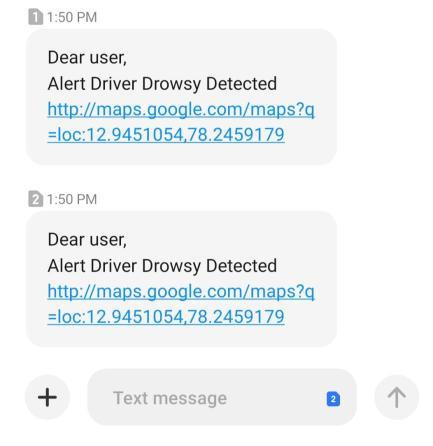

isnotstraight.Asadditional security measuresystemalso sends SMS alert to concern person including GPS location fetchedviaGPSsensor.
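The alert rules described above can be sketched as a small per-frame state machine. Only the 5-second eyes-closed limit comes from the text; the EAR, MAR and head-angle thresholds, the yawn count and window, and all names are assumptions. Sending the SMS and reading the GPS sensor depend on the hardware, so the sketch only composes the message text.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class DrowsinessMonitor:
    """Per-frame alert logic. Every threshold except the 5 s limit is an assumed value."""

    ear_threshold: float = 0.2        # EAR below this counts as "eyes closed" (assumed)
    eyes_closed_limit_s: float = 5.0  # from the text: alert after eyes closed > 5 s
    mar_threshold: float = 0.6        # MAR above this counts as "mouth wide open" (assumed)
    yawn_limit: int = 3               # yawns inside the window that trigger an alert (assumed)
    yawn_window_s: float = 60.0       # sliding window for counting yawns (assumed)
    max_tilt_deg: float = 20.0        # |pitch| or |yaw| above this = head not straight (assumed)
    _closed_since: Optional[float] = field(default=None, init=False)
    _mouth_open: bool = field(default=False, init=False)
    _yawn_times: List[float] = field(default_factory=list, init=False)

    def update(self, t: float, ear: float, mar: float,
               pitch_deg: float, yaw_deg: float) -> List[str]:
        """Feed one frame observed at time t (seconds); return the alerts it raises."""
        alerts: List[str] = []

        # Eyes: measure how long EAR has stayed below the threshold.
        if ear < self.ear_threshold:
            if self._closed_since is None:
                self._closed_since = t
            if t - self._closed_since > self.eyes_closed_limit_s:
                alerts.append("eyes_closed")
        else:
            self._closed_since = None

        # Mouth: count one yawn per closed-to-wide-open transition.
        is_open = mar > self.mar_threshold
        if is_open and not self._mouth_open:
            self._yawn_times.append(t)
        self._mouth_open = is_open
        self._yawn_times = [y for y in self._yawn_times if t - y <= self.yawn_window_s]
        if len(self._yawn_times) >= self.yawn_limit:
            alerts.append("frequent_yawning")

        # Head: flag a pose too far from looking straight ahead.
        if abs(pitch_deg) > self.max_tilt_deg or abs(yaw_deg) > self.max_tilt_deg:
            alerts.append("head_not_straight")
        return alerts


def build_sms(alerts: List[str], lat: float, lon: float) -> str:
    """Compose the SMS text for the concerned person (sending it is hardware-specific)."""
    reasons = ", ".join(a.replace("_", " ") for a in alerts)
    return f"Driver drowsiness alert ({reasons}). Location: {lat:.6f}, {lon:.6f}"
```

Keeping the timers inside one object means each frame needs only the current EAR, MAR and head angles, which fits the frame-by-frame pipeline described earlier.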

Facial landmarks are used to identify and represent important facial features, such as:

Eye

Eyebrows

Nose

Mouth

Jawline
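In dlib's 68-point model, each of these features occupies a fixed range of landmark indices. The mapping below uses the standard 0-based iBUG 300-W numbering, with left and right named from the subject's own point of view; the dictionary and helper names are illustrative.

```python
from typing import List, Sequence, Tuple

Point = Tuple[int, int]

# 0-based index ranges of the 68-point iBUG 300-W layout used by dlib's predictor.
FACIAL_LANDMARK_RANGES = {
    "jaw": range(0, 17),
    "right_eyebrow": range(17, 22),
    "left_eyebrow": range(22, 27),
    "nose": range(27, 36),
    "right_eye": range(36, 42),
    "left_eye": range(42, 48),
    "mouth": range(48, 68),  # outer lip 48-59, inner lip 60-67
}


def region_points(landmarks: Sequence[Point], region: str) -> List[Point]:
    """Return the (x, y) points of one named facial region from a 68-point list."""
    if len(landmarks) != 68:
        raise ValueError("expected 68 landmarks")
    return [landmarks[i] for i in FACIAL_LANDMARK_RANGES[region]]
```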

Face alignment, head pose estimation, face swapping, blink detection and many other tasks have all been accomplished effectively using facial landmarks.

Facial landmark detection is a subset of the shape prediction problem. Given an input image (and typically an ROI that specifies the object of interest), a shape predictor attempts to localize key points of interest along a shape. In the domain of facial landmarks, our goal is to use shape prediction methods to detect important facial structures on the face.

Detecting facial landmarks is therefore a two-step process:

Step #1: Localize the face in the image.

Step #2: Detect the key facial structures on the face ROI.
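The two steps map onto dlib's face detector and shape predictor. The sketch below takes both as arguments, so it assumes nothing about how they were constructed; the function names are illustrative.

```python
from typing import Any, Callable, List, Tuple

Point = Tuple[int, int]


def shape_to_points(shape: Any, n_points: int = 68) -> List[Point]:
    """Convert a dlib full_object_detection into a list of (x, y) tuples."""
    return [(shape.part(i).x, shape.part(i).y) for i in range(n_points)]


def detect_landmarks(gray: Any,
                     detector: Callable[[Any, int], Any],
                     predictor: Callable[[Any, Any], Any],
                     upsample: int = 0) -> List[List[Point]]:
    """Two-step landmark detection on a greyscale frame.

    Step #1: the face detector returns one bounding box per face.
    Step #2: the shape predictor localizes the 68 landmarks inside each box.
    """
    faces = detector(gray, upsample)
    return [shape_to_points(predictor(gray, box)) for box in faces]
```

With dlib installed, `dlib.get_frontal_face_detector()` and `dlib.shape_predictor("shape_predictor_68_face_landmarks.dat")` can be passed in directly, with `gray` being a greyscale frame. Injecting them keeps the function testable without the model file.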

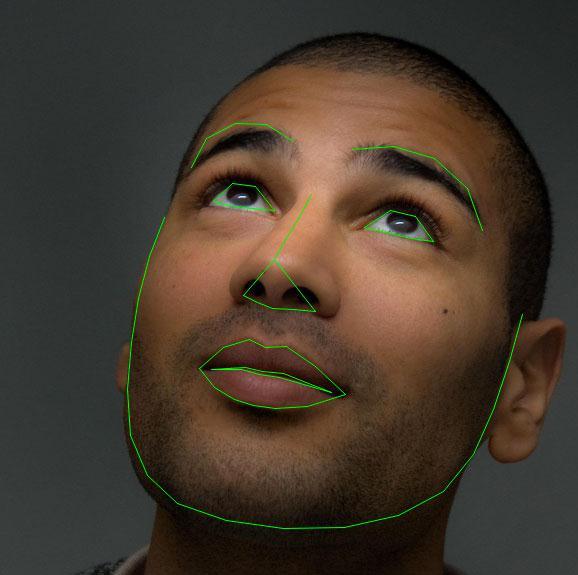

Fig – 3: In an image, facial landmarks are used to name and identify essential facial features.

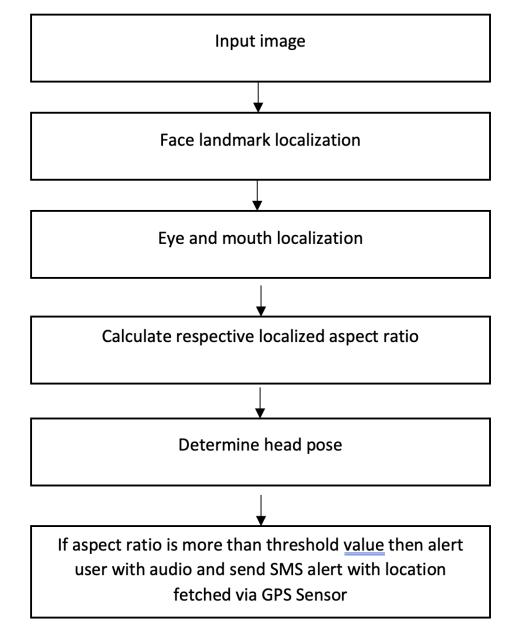

Fig – 4: The 68 facial landmark coordinates from the iBUG 300-W dataset, visualized.

These annotations are from the 68-point iBUG 300-W dataset, which was used to train the dlib facial landmark predictor. Each eye is represented by six (x, y)-coordinates, starting at the left corner of the eye (as if looking at the person) and going clockwise around the rest of the region:

Fig – 5: The six facial landmarks associated with the eye

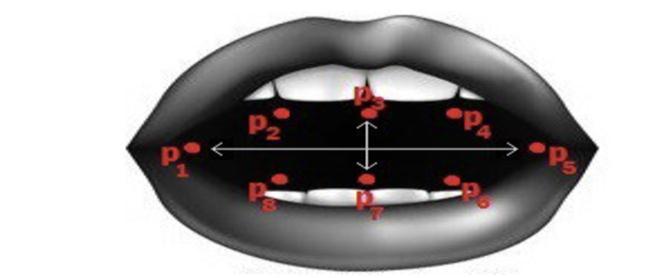

ii. MOUTH ASPECT RATIO

Fig – 6: Facial landmarks associated with the mouth


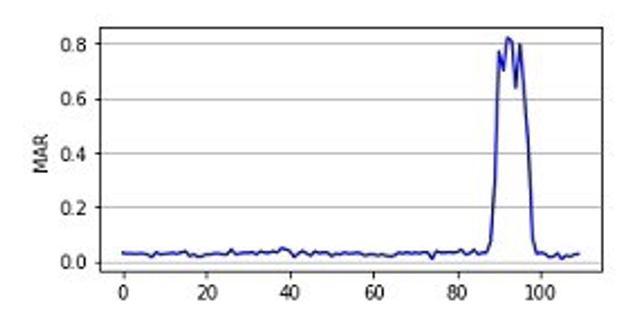

Fig – 7: Graphical

The graph clearly shows that whenever the mouth is closed, the mouth aspect ratio is nearly zero, as in the first 80 frames. The mouth aspect ratio is somewhat higher when the mouth is partly open. From frame 80 onward, however, the mouth aspect ratio is much higher, indicating that the mouth is fully open, most likely for a yawn.
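A yawn in a MAR trace like this appears as a sustained run of high values. The sketch below flags runs in which MAR stays above a threshold for a minimum number of consecutive frames, on the assumption that a yawn keeps the mouth wide open longer than brief openings such as speech. Both the 0.6 threshold and the 15-frame minimum are assumed values that would need tuning to the camera and frame rate.

```python
from typing import List, Sequence, Tuple


def find_yawns(mar_series: Sequence[float], open_threshold: float = 0.6,
               min_frames: int = 15) -> List[Tuple[int, int]]:
    """Return (start, end) frame ranges (end exclusive) in which MAR stays above
    open_threshold for at least min_frames consecutive frames."""
    yawns: List[Tuple[int, int]] = []
    start = None
    for i, mar in enumerate(mar_series):
        if mar > open_threshold:
            if start is None:
                start = i  # a wide-open run begins
        elif start is not None:
            if i - start >= min_frames:
                yawns.append((start, i))
            start = None
    # Close a run that lasts until the final frame.
    if start is not None and len(mar_series) - start >= min_frames:
        yawns.append((start, len(mar_series)))
    return yawns
```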

In computer vision, the pose of an object refers to its orientation and position relative to a camera. You can change the pose by moving the object relative to the camera, or the camera relative to the object.

A 3D rigid object has only two kinds of motion with respect to a camera:

a. Translation: Translation is the process of moving the camera from its current 3D location $(X, Y, Z)$ to a new 3D location $(X', Y', Z')$. Translation has three degrees of freedom: you can move in the X, Y or Z direction. Translation is represented by a vector $\mathbf{t}$, which is equal to:

$$\mathbf{t} = \begin{pmatrix} X' - X \\ Y' - Y \\ Z' - Z \end{pmatrix}$$

b. Rotation: The camera can also be rotated around the X, Y and Z axes, so a rotation also has three degrees of freedom. Rotation can be represented in several ways: as Euler angles (roll, pitch and yaw), as a 3 × 3 rotation matrix, or as a direction of rotation (i.e. an axis) and an angle.
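`cv2.solvePnP` reports rotation in the axis-angle form mentioned above. To judge whether the head is straight, it is convenient to convert that vector to a rotation matrix (which OpenCV's `cv2.Rodrigues` does) and then to Euler angles. The NumPy sketch below re-implements both steps so it runs without OpenCV; the decomposition order $R = R_z(\text{roll})\,R_y(\text{yaw})\,R_x(\text{pitch})$ in camera coordinates is an assumption, and a different order yields different angles.

```python
import numpy as np


def rodrigues(rvec):
    """Axis-angle vector -> 3x3 rotation matrix (same convention as cv2.Rodrigues)."""
    rvec = np.asarray(rvec, dtype=np.float64).reshape(3)
    theta = np.linalg.norm(rvec)
    if theta < 1e-12:
        return np.eye(3)
    kx, ky, kz = rvec / theta
    K = np.array([[0.0, -kz, ky],
                  [kz, 0.0, -kx],
                  [-ky, kx, 0.0]])  # cross-product matrix of the unit axis
    return np.eye(3) + np.sin(theta) * K + (1.0 - np.cos(theta)) * (K @ K)


def euler_angles_deg(R):
    """Decompose R = Rz(roll) @ Ry(yaw) @ Rx(pitch) into (pitch, yaw, roll) in degrees."""
    sy = np.hypot(R[0, 0], R[1, 0])  # |cos(yaw)|
    if sy > 1e-6:
        pitch = np.arctan2(R[2, 1], R[2, 2])
        yaw = np.arctan2(-R[2, 0], sy)
        roll = np.arctan2(R[1, 0], R[0, 0])
    else:  # gimbal lock: yaw near +/-90 degrees, roll folded into pitch
        pitch = np.arctan2(-R[1, 2], R[1, 1])
        yaw = np.arctan2(-R[2, 0], sy)
        roll = 0.0
    return tuple(float(np.degrees(a)) for a in (pitch, yaw, roll))
```

Pitch (nodding) and yaw (turning) from this decomposition are the natural inputs for a "head not straight" check.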

Fig – 9: SMS Alert to Concerned Person

A framework that tracks and monitors the driver's eye aspect ratio, mouth aspect ratio and head movements has been designed to detect fatigue. The framework combines template-based matching and feature-based matching so that the eye tracking does not drift too far. While tracking, the framework determines whether the driver's eyes are open or closed and whether the driver is looking ahead. A warning is given as a bell sound or alarm message when the eyes are closed for an extended period. GPS provides and updates the live location; closed eyes, continued yawning or a tilted head trigger the system to send an SMS alert to the provided number, helping to ensure the safety of the driver, pedestrians and other drivers.

[1] International Organization of Motor Vehicle Manufacturers, “Provisional registrations or sales of new vehicles,” http://www.oica.net/wp-content/uploads/, 2018.

[2] Wards Intelligence, “World vehicles in operation by country, 2013-2017,” http://subscribers.wardsintelligence.com/databrowse-world, 2018.

[3] National Highway Traffic Safety Administration, “Traffic safety facts 2016,” https://crashstats.nhtsa.dot.gov, 2018.

[4] Attention Technologies, “S.a.m.g-3-steering attention monitor,” www.zzzzalert.com, 1999.

[5] Smart Eye, “Smarteye,” Available online at: https://smarteye.se/, 2018.


[6] K. Zhang, Z. Zhang, Z. Li, et al., “Joint face detection and alignment using multitask cascaded convolutional networks,” IEEE Signal Processing Letters, 2016.

[7] M. Danelljan, G. Häger, F. S. Khan, and M. Felsberg, “Discriminative scale space tracking,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 39, no. 8, pp. 1561–1575, Aug. 2017.

[8] C. Ma, J. Huang, X. Yang, and M. Yang, “Robust visual tracking via hierarchical convolutional features,” IEEE Transactions on Pattern Analysis and Machine Intelligence, p. 1, 2018.

[9] J. Valmadre, L. Bertinetto, J. Henriques, A. Vedaldi, and P. H. S. Torr, “End-to-end representation learning for correlation filter-based tracking,” in Proc. IEEE Conf. Computer Vision and Pattern Recognition (CVPR), July 2017, pp. 5000–5008.

[10] Y. Wu, T. Hassner, K. Kim, G. Medioni, and P. Natarajan, “Facial landmark detection with tweaked convolutional neural networks,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 40, no. 12, pp. 3067–3074, Dec. 2018.