International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 09 Issue: 08 | Aug 2022 www.irjet.net p-ISSN: 2395-0072

Emotion Based Music Player

Abstract Music plays an important role in human life. Listening to music has been shown to help reduce stress, and it is associated with the release of dopamine, a neurotransmitter linked to a good, relaxed mood. A music player is an application that plays digital audio files and lets users organise their multimedia collection. Artificial intelligence (AI) is a technology that enables a machine to simulate human behaviour; its goal is to make computers smart enough to solve complex problems. Machine learning is a subset of AI that allows a machine to learn automatically from past data without being explicitly programmed. Selecting a song is tedious when a playlist holds thousands of songs, so users face the problem of browsing manually for songs that suit their current mood. The emotion based music player addresses this problem by using AI and machine learning to recognise emotions from facial expressions. Demand for human emotion detection is growing, modern AI systems can gauge reactions from a face, and machine learning algorithms are well suited to detecting and predicting emotions. A camera captures an image of the user, and software analyses it and classifies it as happy, sad, neutral or angry by comparing the extracted features with the training dataset. After the face has been detected and the emotion mapped, the matching playlist is displayed and songs are played to lift the user's mood. The player also recommends related songs that comfort or resonate with the user's state of mind.

I. INTRODUCTION

When using conventional music players, the user had to browse the playlist manually and choose songs that would lift his or her spirits and emotional state. The work required a lot of labour, and coming up with a suitable list of tunes was frequently difficult. The development of Music Information Retrieval (MIR) and Audio Emotion Recognition (AER) provided traditional systems with a function that automatically parsed the playlist into various emotional classes. The goal of AER is to classify audio signals into distinct emotional categories using certain audio properties, and an important aspect of MIR is the extraction of distinct audio features from an audio stream. By eliminating the need for manual playlist segmentation and song annotation based on user emotion, AER and MIR improved the functionality of conventional music players.

However, these systems lacked the mechanisms needed to let a user's emotions control a music player. Information retrieval methods are inefficient because current algorithms produce unpredictable results and frequently increase the system's overall memory overhead, and they cannot quickly extract useful information from an auditory source. Current audio emotion recognition algorithms use mood models that are only loosely connected to a user's perception. The state of the art lacks designs that can create a personalised playlist by deducing human emotions from a facial image without additional resources: current designs rely either on extra hardware or on the human voice.

This project proposes an approach designed to reduce these drawbacks. Its main goal is to create a precise algorithm that produces a playlist from the user's songs in accordance with the user's emotional state. The algorithm is less computationally intensive, uses less storage and avoids the cost of additional hardware. It classifies face images into one of four categories: sad, angry, neutral or happy.

II. PROBLEM STATEMENT

One's life is significantly impacted by music. It serves as a significant form of entertainment and is frequently used therapeutically. Advances in technology and ongoing breakthroughs in multimedia have led to sophisticated music players with features such as volume modulation and genre classification. Although these capabilities meet most needs, a user occasionally wants to browse the playlist according to his or her mood and emotions. This requires a lot of labour, and finding the right list of songs is frequently difficult. An emotion-based music player therefore uses facial feature tracking and facial scanning to identify the user's mood and create a customised playlist, making the process simple for the user. It will give music lovers and connoisseurs a better experience.

III. MOTIVATION

The primary goal of an emotion-based music player is to provide user-preferred music through emotion identification, automatically playing songs based on the user's feelings. In the current system, the user must choose tunes manually, because songs generated at random may not suit the user's mood. The user must first sort the songs into several emotion groups and then manually choose a particular emotion. An emotion-based music player avoids these complications: music is played from predetermined folders in accordance with the detected emotion.

IV. OBJECTIVES

The primary objective of an emotion based music player is to improve the existing music player. In the proposed approach, songs are played automatically based on the emotions of the user.

• Characteristics of facial expressions are captured using an inbuilt camera.

• The captured image is converted to a grayscale image.

• Facial features are extracted.

• The emotion in the user's image is mapped.

• Songs are generated based on user preference.
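The grayscale step in the list above can be illustrated with the ITU-R BT.601 luma weights, the same weights OpenCV applies when converting colour images to grayscale. The sketch below is a minimal, dependency-free illustration that assumes the captured frame is stored as rows of (R, G, B) tuples with values from 0 to 255; the function name `to_grayscale` is hypothetical, and a real implementation would more likely call OpenCV's `cv2.cvtColor`.

```python
def to_grayscale(rgb_image):
    """Convert rows of (R, G, B) tuples (0-255) into rows of gray levels (0-255).

    Hypothetical helper; uses the ITU-R BT.601 luma weights
    Y = 0.299 R + 0.587 G + 0.114 B.
    """
    gray = []
    for row in rgb_image:
        gray_row = []
        for r, g, b in row:
            y = 0.299 * r + 0.587 * g + 0.114 * b
            gray_row.append(int(round(y)))  # nearest integer gray level
        gray.append(gray_row)
    return gray


if __name__ == "__main__":
    # A made-up 1x3 image: pure red, pure green and pure white pixels.
    image = [[(255, 0, 0), (0, 255, 0), (255, 255, 255)]]
    print(to_grayscale(image))  # [[76, 150, 255]]
```

Green contributes most to perceived brightness, which is why a pure green pixel maps to a much lighter gray than a pure red one.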

V. RELATED WORKS

Deebika, Indira, Jesline [1] have proposed a machine learning based music player that detects emotions. The purpose of capturing the image is to detect the mood of a person; the face is the part of the body that plays the most crucial role in revealing someone's mood. A webcam or mobile camera is employed to capture the input. After capture, the emotion is detected through the extraction of features such as the mouth, eyebrows and eyes, and the detected emotion is used to retrieve a matching list of songs: the emotion is mapped to a list of songs that suits the mood, and a song is played. The PyCharm tool is used for the analysis and for the overall process, including feature extraction and segmentation. For emotion detection, the human face must be recognised first, so the face is given as the input image. The image then undergoes enhancement, in which tone mapping is applied to maximise the image contrast. The combination of two features, the eyes and the lips, is adequate to convey emotions accurately.
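The cited work does not say which tone-mapping operator it uses. As one simple stand-in for contrast enhancement, a linear min-max stretch maps the darkest pixel to 0 and the brightest to 255. The sketch assumes an 8-bit grayscale image stored as a list of rows; `stretch_contrast` is a hypothetical name, and histogram equalisation (for example OpenCV's `cv2.equalizeHist`) is a common alternative.

```python
def stretch_contrast(gray, out_min=0, out_max=255):
    """Linearly rescale gray levels so that they span [out_min, out_max].

    Hypothetical stand-in for the unspecified tone-mapping step.
    """
    flat = [p for row in gray for p in row]
    lo, hi = min(flat), max(flat)
    if hi == lo:
        # A flat image has no contrast to stretch; return an unchanged copy.
        return [row[:] for row in gray]
    scale = (out_max - out_min) / (hi - lo)
    return [[int(round(out_min + (p - lo) * scale)) for p in row] for row in gray]


if __name__ == "__main__":
    # Made-up low-contrast patch whose values only span 100..150.
    low_contrast = [[100, 110], [120, 150]]
    print(stretch_contrast(low_contrast))  # [[0, 51], [102, 255]]
```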

Charvi, Kshijith, Rajesh, Rahman [5] have proposed emotion detection and characterization using facial features. Face detection is done by the Viola-Jones algorithm using a Haar-based feature filter, whose objective is to find the face in an input image. In each sub-window, Haar features are calculated and the difference is compared with a learnt threshold that separates objects from non-objects. The Fisherface classifier uses Principal Component Analysis (PCA) and Linear Discriminant Analysis (LDA), both of which contribute to its accuracy. PCA reduces dimensionality so that the variables in the dataset are reduced to a minimum; the new set of variables, called principal components, makes classification much simpler in terms of space complexity. The dataset used is Cohn-Kanade, consisting of 500 images of expressions such as happy, sad, neutral and angry. The method gives a precision of 0.74 and needs only 2 features to detect the emotion of a complete face, which considerably decreases the amount of data that must be stored for testing and future applications.

Karthik Subramanian Nathan, Manasi Arun, Megala S Kannan [2] have proposed an emotion based music player for Android. The implementation is divided into two phases: face detection and feature extraction. The user takes a picture of himself or herself or uploads one from the gallery, and the face rectangle is computed using a Face API. The Viola-Jones algorithm uses Haar filters on the integral image to obtain the coordinate points of the facial features in the input. These coordinates are used to classify the emotion depicted in the image with a Support Vector Machine classifier: each emotion tag is assigned a score, and the tag with the maximum score is returned as the emotion of the user. To implement this module, the Microsoft Cognitive Services Emotion API was used to analyse the face rectangle and return the corresponding emotion. For the songs, the audio signal is first divided into short-term windows (frames), and 8 features are calculated for each frame. Emotion is mapped according to the valence-arousal coordinate system. The dataset consists of 50 voice samples in WAV format whose valence and arousal values are known, so each MP3 song must be converted to WAV format. The average time needed to determine a song's valence and arousal is reported as 54 seconds. Once the music analysis has produced each song's title, arousal, valence and mood, the playlist is created. Because both song emotion and face emotion are computed, the results are significantly superior to those of music players that track only facial expressions and keep a fixed song dataset.
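The music-analysis stage described above splits each song into short-term frames and maps it into the valence-arousal plane. The summary does not list the 8 per-frame features, so the sketch below uses two common ones, short-time energy and zero-crossing rate, purely as examples. The quadrant-to-label assignment (high valence and high arousal as Happy, low valence and high arousal as Angry, low valence and low arousal as Sad, high valence and low arousal as Neutral) is likewise an assumption chosen to match the four classes used in this work, and all function names are hypothetical.

```python
def frame_signal(samples, frame_len, hop):
    """Split a 1-D signal into short-term frames of frame_len samples, hop apart."""
    return [samples[i:i + frame_len]
            for i in range(0, len(samples) - frame_len + 1, hop)]


def short_time_energy(frame):
    """Mean squared amplitude of one frame (example feature)."""
    return sum(x * x for x in frame) / len(frame)


def zero_crossing_rate(frame):
    """Fraction of adjacent sample pairs whose signs differ (example feature).

    Frames must contain at least two samples.
    """
    crossings = sum(1 for a, b in zip(frame, frame[1:]) if (a >= 0) != (b >= 0))
    return crossings / (len(frame) - 1)


def quadrant_emotion(valence, arousal):
    """Map a point in valence-arousal space to one of four labels.

    The quadrant-to-label assignment is an illustrative assumption.
    """
    if arousal >= 0:
        return "Happy" if valence >= 0 else "Angry"
    return "Neutral" if valence >= 0 else "Sad"


if __name__ == "__main__":
    # Made-up signal: a rapidly alternating frame followed by a constant one.
    signal = [0.5, -0.5, 0.5, -0.5, 0.1, 0.1, 0.1, 0.1]
    for frame in frame_signal(signal, frame_len=4, hop=4):
        print(short_time_energy(frame), zero_crossing_rate(frame))
    print(quadrant_emotion(0.6, -0.3))  # Neutral
```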

VI. METHODOLOGY

Fig. 1. Emotion based music player methodology

A. Face Detection

The goal of face detection is to locate human faces in pictures. Face detection begins by looking for the human eyes (the simplest traits to find), followed by the nose and mouth. The Haar Cascade algorithm, a comprehensive face detection technique that produces precise results, is used for this step. It is a machine learning object detector: the classifier must be trained on many images, both positive images of faces and negative images of non-faces, as shown in Fig. 1.
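Viola-Jones style detectors evaluate rectangular Haar-like features in constant time by first building an integral image (summed-area table). The sketch below shows that mechanism with a single two-rectangle feature that compares a darker upper band, such as the eyes, with a brighter lower band, such as the cheeks. The function names and the toy 4x2 patch are illustrative assumptions; OpenCV's trained cascades combine many such features at many positions and scales.

```python
def integral_image(gray):
    """Return an (h+1) x (w+1) summed-area table with a zero top row and left column."""
    h, w = len(gray), len(gray[0])
    ii = [[0] * (w + 1) for _ in range(h + 1)]
    for y in range(h):
        row_sum = 0
        for x in range(w):
            row_sum += gray[y][x]
            ii[y + 1][x + 1] = ii[y][x + 1] + row_sum
    return ii


def rect_sum(ii, x, y, w, h):
    """Sum of the w x h rectangle with top-left pixel (x, y), using four lookups."""
    return ii[y + h][x + w] - ii[y][x + w] - ii[y + h][x] + ii[y][x]


def two_rect_vertical_feature(ii, x, y, w, h):
    """Two-rectangle Haar-like feature: top half minus bottom half.

    A dark band (eyes) above a bright band (cheeks) gives a strongly negative value.
    """
    half = h // 2
    top = rect_sum(ii, x, y, w, half)
    bottom = rect_sum(ii, x, y + half, w, half)
    return top - bottom


if __name__ == "__main__":
    # Made-up patch: two dark rows (10) above two bright rows (200).
    patch = [[10, 10], [10, 10], [200, 200], [200, 200]]
    ii = integral_image(patch)
    print(two_rect_vertical_feature(ii, 0, 0, 2, 4))  # -760
```

Once the table is built, every rectangle sum costs four lookups regardless of its size, which is what lets the detector scan many windows quickly.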

B. Feature Extraction

Facial feature extraction is crucial for methods such as face tracking, facial emotion detection and face recognition. It is the technique of extracting facial features such as the eyes, nose and mouth from a human face image, and for face identification it involves segmentation, image rendering and scaling. Principal Component Analysis (PCA) and the Fisherface algorithm, which is based on Fisher's Linear Discriminant Analysis (LDA), are the two methods used to process the data. As shown in Fig. 1, after the PCA and LDA analysis, emotion detection and music recommendation, a message is shown to the user. PCA analyses the data to find patterns and reduces the computational complexity of the dataset with the least loss of information, which allows facial features to be extracted more accurately for face identification. LDA finds a linear projection of high-dimensional data into a lower-dimensional subspace.
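PCA finds the directions along which the face data vary most. The dependency-free sketch below computes only the first principal component, the leading eigenvector of the sample covariance matrix, by power iteration, and then projects the centred data onto it. It assumes small feature vectors stored as lists; a real system would keep many components of flattened face images and use a linear algebra library such as NumPy. All function names are hypothetical.

```python
import math


def mean_vector(rows):
    """Column-wise mean of a list of equal-length feature vectors."""
    n = len(rows)
    return [sum(col) / n for col in zip(*rows)]


def covariance_matrix(rows):
    """Sample covariance matrix (divides by n - 1) of the feature vectors."""
    mu = mean_vector(rows)
    centred = [[x - m for x, m in zip(r, mu)] for r in rows]
    d, n = len(mu), len(rows)
    return [[sum(c[i] * c[j] for c in centred) / (n - 1) for j in range(d)]
            for i in range(d)]


def first_principal_component(rows, iterations=200):
    """Leading eigenvector of the covariance matrix via power iteration.

    Assumes the data are not constant and that the all-ones start vector
    is not orthogonal to the leading eigenvector.
    """
    cov = covariance_matrix(rows)
    d = len(cov)
    v = [1.0] * d
    for _ in range(iterations):
        w = [sum(cov[i][j] * v[j] for j in range(d)) for i in range(d)]
        norm = math.sqrt(sum(x * x for x in w))
        v = [x / norm for x in w]
    return v


def project(rows, component):
    """One-dimensional PCA scores: centred rows projected onto `component`."""
    mu = mean_vector(rows)
    return [sum((x - m) * c for x, m, c in zip(r, mu, component)) for r in rows]


if __name__ == "__main__":
    # Made-up 2-D features lying on the line y = 2x.
    data = [[1, 2], [2, 4], [3, 6], [4, 8]]
    pc = first_principal_component(data)
    print(pc)                 # approximately [0.447, 0.894], i.e. (1, 2) / sqrt(5)
    print(project(data, pc))  # scores sum to zero because the data are centred
```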

C. User Emotion Recognition

Facial emotion recognition is a technology used to analyse sentiments from different sources. Fisherface is an algorithm based on the concepts of Linear Discriminant Analysis and Principal Component Analysis. It takes labelled training photos, reduces the dimensionality of the data, and stores the statistical values computed for each of the specified categories.
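Where PCA keeps the directions of largest overall variance, the LDA step in Fisherface looks for a direction that pushes class means apart while keeping each class compact. For two classes this direction has the closed form w = S_W^-1 (m_a - m_b), where S_W is the summed within-class scatter matrix and m_a, m_b are the class means. The sketch below computes it for 2-D feature vectors (for example, two PCA scores per face); restricting it to two classes and two dimensions, and the helper names, are simplifying assumptions, since Fisherface itself handles all emotion classes at once.

```python
def class_mean(points):
    """Mean of a list of 2-D points."""
    n = len(points)
    return (sum(p[0] for p in points) / n, sum(p[1] for p in points) / n)


def scatter(points, mean):
    """2x2 scatter matrix: sum of (x - m)(x - m)^T over the class."""
    sxx = sum((p[0] - mean[0]) ** 2 for p in points)
    syy = sum((p[1] - mean[1]) ** 2 for p in points)
    sxy = sum((p[0] - mean[0]) * (p[1] - mean[1]) for p in points)
    return [[sxx, sxy], [sxy, syy]]


def fisher_direction(class_a, class_b):
    """Fisher's discriminant direction w = S_W^-1 (m_a - m_b) for 2-D data."""
    ma, mb = class_mean(class_a), class_mean(class_b)
    sa, sb = scatter(class_a, ma), scatter(class_b, mb)
    sw = [[sa[i][j] + sb[i][j] for j in range(2)] for i in range(2)]
    det = sw[0][0] * sw[1][1] - sw[0][1] * sw[1][0]
    if det == 0:
        raise ValueError("within-class scatter matrix is singular")
    # Inverse of [[a, b], [c, d]] is [[d, -b], [-c, a]] / det.
    inv = [[sw[1][1] / det, -sw[0][1] / det],
           [-sw[1][0] / det, sw[0][0] / det]]
    diff = (ma[0] - mb[0], ma[1] - mb[1])
    return (inv[0][0] * diff[0] + inv[0][1] * diff[1],
            inv[1][0] * diff[0] + inv[1][1] * diff[1])


if __name__ == "__main__":
    # Made-up training features for two expression classes.
    happy = [(0, 0), (1, 2), (0, 2), (1, 0)]
    sad = [(4, 0), (5, 2), (4, 2), (5, 0)]
    w = fisher_direction(happy, sad)
    print(w)  # (-2.0, 0.0): only the first feature separates the classes
    print([w[0] * x + w[1] * y for x, y in happy + sad])
```

Projecting onto w collapses each face to one number, and the two classes land in non-overlapping ranges, which is the property the stored per-class statistics rely on.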

D. Emotion Mapping

Expressions can be classified into basic emotions such as anger, disgust, fear, joy, sadness and surprise. The expression given by the user is compared with the expressions in the dataset, and the mapped expression is displayed as the result.
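The comparison with the dataset can be sketched as a nearest-class-mean classifier that uses Euclidean distance, in the spirit of the minimum-distance matching mentioned for Fisherface in Section VII. The 2-D vectors below are made-up stand-ins for projected face features, not data from this work, and the function names are hypothetical.

```python
import math


def train_class_means(samples):
    """Map each emotion label to the mean of its training feature vectors."""
    means = {}
    for label, vectors in samples.items():
        n = len(vectors)
        means[label] = [sum(col) / n for col in zip(*vectors)]
    return means


def classify(feature, means):
    """Return the label whose stored mean is closest in Euclidean distance."""
    return min(means, key=lambda label: math.dist(feature, means[label]))


if __name__ == "__main__":
    # Made-up projected features for the four classes used in this work.
    training = {
        "Happy": [[2.0, 2.1], [1.8, 2.3]],
        "Sad": [[-2.0, -1.9], [-2.2, -2.1]],
        "Angry": [[-2.0, 2.0], [-1.8, 2.2]],
        "Neutral": [[0.1, -0.1], [-0.1, 0.1]],
    }
    means = train_class_means(training)
    print(classify([1.5, 1.9], means))  # Happy
```

`math.dist` requires Python 3.8 or later.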

E. Music Recommendation

The last step, the music recommendation system, provides suggestions that fit the user's preferences. Analysing the facial expression reveals the current emotional state of the user, and the playlist related to that emotion is recommended.
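A minimal sketch of the recommendation step, assuming each emotion label maps to a predetermined folder of songs: the folder names, the shuffle, and the fallback to the Neutral playlist for unknown labels are all illustrative assumptions rather than details given in this paper.

```python
import random

# Hypothetical folder layout; the four labels match the classes used in this work.
PLAYLISTS = {
    "Happy": ["happy/song1.mp3", "happy/song2.mp3"],
    "Sad": ["sad/song1.mp3"],
    "Neutral": ["neutral/song1.mp3", "neutral/song2.mp3"],
    "Angry": ["angry/song1.mp3"],
}


def recommend(emotion, playlists=PLAYLISTS, rng=random):
    """Return (shuffled playlist, first song) for a detected emotion label.

    Unknown labels fall back to the Neutral playlist (an assumption).
    """
    songs = list(playlists.get(emotion, playlists["Neutral"]))  # copy, never mutate
    rng.shuffle(songs)  # vary the starting track between detections
    return songs, songs[0]


if __name__ == "__main__":
    playlist, first = recommend("Happy", rng=random.Random(42))
    print(first, playlist)
```

Passing a seeded `random.Random` makes the order reproducible, which is convenient for testing the player.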

VII. ALGORITHMS

A. Haar cascade

Face detection is a popular topic with many practical applications. Modern smartphones and laptops have built-in face detection software that can verify the user's identity. Numerous apps can capture, detect and process faces in real time while also estimating the user's age and gender and applying filters. Face detection is not restricted to mobile applications: it has numerous uses in surveillance, security and biometrics. Its success story has its roots in the Object Detection Framework for real-time face detection in video footage put forth by Viola and Jones in 2001.

This section examines some of the ideas introduced by the Viola-Jones face detection technique, also known as Haar Cascades. The work was completed long before the deep learning era began, yet even compared with the powerful models that can be built with current deep learning techniques, it remains an excellent piece of work. The algorithm is still widely used, fully trained models are available on GitHub, and it is fast and fairly accurate.

Before automatic detectors of this kind, face recognition relied on manual measurement. The locations of many facial features, such as the centres of the pupils, the inner and outer corners of the eyes and the widow's peak in the hairline, had to be determined by hand, and twenty distances, including the mouth and eye widths, were calculated from the coordinates. In this way a person could process around 40 images an hour and build a database of the calculated distances. A computer would then automatically compare the distances for each image, compute how far apart they were, and return the closest records as potential matches.

The Haar Cascade is an object detection algorithm used to find faces in still photos or video. It uses the edge and line detection features that Viola and Jones proposed in their 2001 paper "Rapid Object Detection using a Boosted Cascade of Simple Features". To train it, the algorithm is given a large number of positive images containing faces and a large number of negative images without faces. The models produced by this training are available in the OpenCV GitHub repository as XML files that can be read with OpenCV functions; they include models for detecting faces, eyes, upper and lower bodies, licence plates and more.
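The "cascade" in the name refers to a chain of boosted stages that a detection window must pass in turn. The sketch below shows only that evaluation logic with early rejection; the feature names, thresholds and weights are invented for illustration and are not taken from OpenCV's trained XML models. In practice the stages come from a trained file loaded with OpenCV's `cv2.CascadeClassifier`.

```python
def run_cascade(window_features, stages):
    """Evaluate an attentional cascade on one detection window.

    window_features: dict mapping a feature name to its value for this window.
    stages: list of (weak_classifiers, stage_threshold). Each weak classifier
        is (feature_name, threshold, polarity, alpha) and votes alpha when
        polarity * value < polarity * threshold.
    Returns (is_face, number_of_stages_passed).
    """
    for index, (weak_classifiers, stage_threshold) in enumerate(stages):
        score = 0.0
        for name, threshold, polarity, alpha in weak_classifiers:
            if polarity * window_features[name] < polarity * threshold:
                score += alpha
        if score < stage_threshold:
            # Early rejection: most non-face windows stop in the first stages,
            # which is what makes the cascade fast.
            return False, index
    return True, len(stages)


if __name__ == "__main__":
    # Invented two-stage cascade; names, thresholds and weights are made up.
    stages = [
        ([("eye_band", -200, 1, 1.0)], 0.5),
        ([("nose_bridge", 50, -1, 0.7), ("eye_band", -400, 1, 0.6)], 0.6),
    ]
    face_like = {"eye_band": -500, "nose_bridge": 120}
    background = {"eye_band": 30, "nose_bridge": 0}
    print(run_cascade(face_like, stages))   # (True, 2)
    print(run_cascade(background, stages))  # (False, 0)
```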

B. Fisher Face

Fisherface is one of the most widely used face recognition algorithms. It aims to maximise the separation between classes during training, which makes it preferable to methods such as Eigenface. In one application, a face recognition program was developed with the Fisherface approach using a GUI and a database of Papuan facial images. Fisher's Linear Discriminant (FLD), or Linear Discriminant Analysis (LDA), is used to obtain the characteristic features of an image in order to recognise it, after Principal Component Analysis (PCA) has reduced the dimension of the face space. Fisherface is used in the image recognition process, and the minimum Euclidean distance is used for face image matching or identification. When the test image is identical to the training image, the program's success rate is 100%; for 73 facial test images with various expressions and positions, 70 faces are correctly recognised and 3 incorrectly, a success rate of about 95.9% (70/73).
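The Fisherface criterion summarised above can be written out in its standard form. For c classes X_1, ..., X_c with N_i training images each, class means μ_i and overall mean μ, PCA first projects the images with W_pca so that the within-class scatter is not singular, and FLD then chooses W_fld to maximise the ratio of between-class to within-class scatter. A test image x is identified with the training image x_j that is closest after projection, which is the minimum Euclidean distance rule described above. The notation follows the usual textbook presentation rather than anything specified in this paper.

```latex
\begin{aligned}
S_B &= \sum_{i=1}^{c} N_i\,(\mu_i - \mu)(\mu_i - \mu)^{T}, \qquad
S_W = \sum_{i=1}^{c} \sum_{x_k \in X_i} (x_k - \mu_i)(x_k - \mu_i)^{T},\\
W_{\mathrm{fld}} &= \arg\max_{W}
  \frac{\left| W^{T} W_{\mathrm{pca}}^{T} S_B\, W_{\mathrm{pca}} W \right|}
       {\left| W^{T} W_{\mathrm{pca}}^{T} S_W\, W_{\mathrm{pca}} W \right|},
\qquad W_{\mathrm{opt}}^{T} = W_{\mathrm{fld}}^{T} W_{\mathrm{pca}}^{T},\\
\hat{\jmath} &= \arg\min_{j} \left\lVert W_{\mathrm{opt}}^{T} x - W_{\mathrm{opt}}^{T} x_j \right\rVert_2 .
\end{aligned}
```

Because S_B has rank at most c - 1, W_fld has at most c - 1 useful columns, which is three for the four emotion classes used in this work.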

VIII. RESULTS

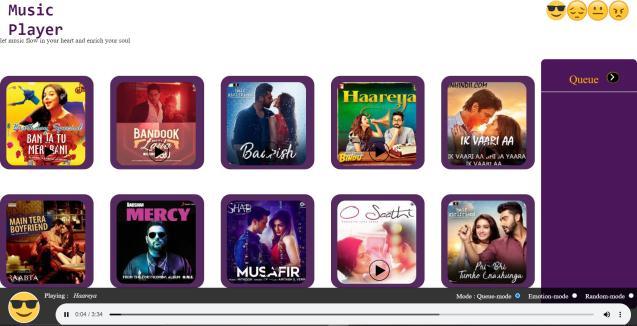

A. The Interface

The interface of the emotion based music player consists of a logo and the tagline “Let music flow and enrich your soul”. All songs are arranged according to the designed emotions, i.e. Happy, Sad, Neutral and Angry. Three modes are provided: Queue Mode, Emotion Mode and Random Mode. In Fig. 2 the emotion detected for the user is Happy, and a song is played accordingly.
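The paper does not describe how the three modes are implemented. As a hedged sketch, assuming Queue Mode plays songs in the order the user added them, Emotion Mode draws from the detected emotion's playlist, and Random Mode draws from the whole library, the behaviour could be modelled as below; the class name, method names and mode strings are hypothetical.

```python
import random
from collections import deque


class EmotionPlayer:
    """Toy model of the Queue, Emotion and Random playback modes.

    `library` maps emotion labels to song lists; all names are illustrative.
    """

    def __init__(self, library, rng=None):
        self.library = library
        self.queue = deque()
        self.rng = rng if rng is not None else random.Random()

    def enqueue(self, song):
        self.queue.append(song)

    def next_song(self, mode, emotion=None):
        if mode == "queue":
            # Queue Mode (assumed): first in, first out.
            return self.queue.popleft() if self.queue else None
        if mode == "emotion":
            # Emotion Mode (assumed): pick from the detected emotion's playlist.
            songs = self.library.get(emotion, [])
            return self.rng.choice(songs) if songs else None
        if mode == "random":
            # Random Mode (assumed): pick from the whole library.
            all_songs = [s for songs in self.library.values() for s in songs]
            return self.rng.choice(all_songs) if all_songs else None
        raise ValueError(f"unknown mode: {mode}")


if __name__ == "__main__":
    player = EmotionPlayer({"Happy": ["happy/a.mp3"], "Sad": ["sad/b.mp3"]})
    player.enqueue("sad/b.mp3")
    print(player.next_song("queue"))             # sad/b.mp3
    print(player.next_song("emotion", "Happy"))  # happy/a.mp3
    print(player.next_song("random"))
```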


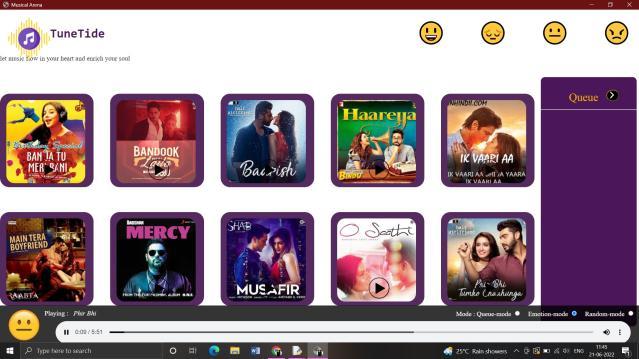

B. The Emoji

Fig. 3. Emotion based music player playing a neutral song

The interface also has four emojis lined up. When the user places the cursor on an emoji, a song matching that emoji's emotion (Happy, Sad, Neutral or Angry) is played, as shown in Fig. 3.

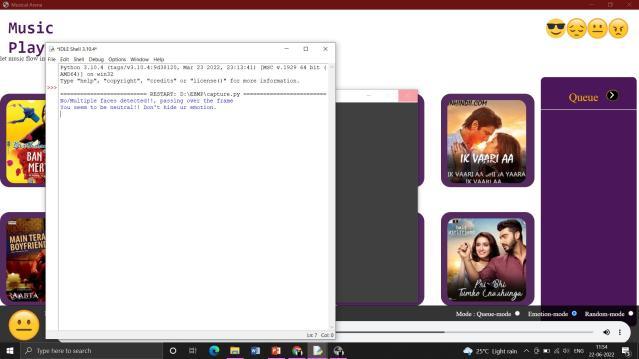

C. Emotion Detection

The interface captures the user's face, maps the emotion and detects the user's mood as neutral, as shown in Fig. 4.

D. Message

After the user's mood has been detected and the song is played, a message matching the detected emotion is displayed in the background, as shown in Fig. 5.

Fig. 5. A message displayed to the user after emotion detection and song recommendation

CONCLUSION

The emotion based music player (EBMP) has been developed. It provides a custom playlist according to the user's emotion through real-time analysis of the facial emotion expressed. EBMP gives far better results than music players that only analyse facial emotions and maintain a fixed song dataset, since both facial emotion and song emotion are computed. The player also has an additional feature not found in existing systems: selecting an emoji plays a matching song. Four moods are deployed, Happy, Sad, Neutral and Angry, and when the user clicks on an emoji, the corresponding song is played.

FUTURE ENHANCEMENTS

• Cloud storage: songs can be exported to a cloud database so that users can download any song they want. Because mobile applications have a finite amount of memory, storing a large music collection inside the app is impractical when cloud storage can meet additional memory needs. This also broadens the range of songs available and should therefore improve the results.

• Identification of complex and mixed emotions: currently only four prominent emotions are examined, but a facial expression may convey other emotions. Mixed emotions can be added to the classes to improve efficiency and prevent incorrect classification.

• Lyrics analysis: the song lyrics can be analysed to estimate the emotion of each song, which can then be combined with the user's detected emotion.

• Voice input: voice can be included as an input for faster emotion recognition.

Fig. 4. Emotion detection as neutral


REFERENCES

[1] Deebika, Indra, Jesline, “A machine learning based music player by detecting emotion,” in 5th International Conference on Science Technology Engineering and Mathematics (STEM), 2019.

[2] Karthik Subramanian Nathan, Manasi Arun and Megala S Kannan, “An emotion based music player for Android,” in IEEE International Symposium on Signal Processing and Information Technology, 2017.

[3] Ramya Ramanathan, Radha Kumaran, Ram Rohan R, Rajath Guptha, “An intelligent music player based on emotion recognition,” in 2nd IEEE International Conference on Computational Systems and Information Technology for Sustainable Solutions, 2017.

[4] S. Satoh, F. Nack, “Emotion based music visualization using photos,” Springer-Verlag, Berlin, Heidelberg, 2008.

[5] Charvi, Kshijith, Rajesh, Rahman, “Emotion detection and characterization using facial features,” IEEE Access, 2018.