International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 09 Issue: 07 | July 2022 www.irjet.net p-ISSN: 2395-0072


¹Student, Dept. of Information Science & Engineering, RV College of Engineering, Bangalore, India

²Assistant Professor, Dept. of Information Science & Engineering, RV College of Engineering, Bangalore, India

Abstract - This paper proposes a motor imagery-based brain-computer interface (MI-BCI) that enables physically challenged individuals to use a computer running the Windows operating system. Using the MI-BCI, the user can perform basic operations on Windows such as moving the mouse pointer and executing mouse clicks. The MI-BCI uses electroencephalogram (EEG) waves, captured from the user in real time, as input to a convolutional neural network (CNN), which classifies the input into one of seven events: mouse movement in the left, right, up or down direction, a left or right mouse click, and finally the idle state, in which no action is taken. The CNN has five layers, including a max pooling layer and a fully connected (FC) layer. The hardware used to capture EEG data is the 8-channel Enobio EEG device with dry electrodes. The training data consists of beta waves (a type of EEG wave) recorded while the subject imagined moving the right arm in one of four directions (right, left, up and down) and also executed left and right eye winks, which are mapped to left and right mouse clicks in Windows. EEG waves are also captured while the subject is in an idle state (that is, when the subject does not want to move the mouse). The mouse in the Windows operating system is controlled using Python libraries. The proposed MI-BCI system is very responsive and achieved an average accuracy of 92.85% and a highest accuracy of 97.14%.

Key Words: Electroencephalogram, Motor Imagery, Brain-Computer Interface, Convolutional Neural Network, Beta waves

A Brain-Computer Interface (BCI) is a system that maps some form of brain signal to commands on a computer system. The main goal of a BCI is to replace or restore useful function to people disabled by neuromuscular disorders such as amyotrophic lateral sclerosis, cerebral palsy, stroke, or spinal cord injury. The BCI described in this paper was developed to assist physically challenged individuals in performing basic tasks on a personal computer. It enables the user to control the mouse on the Windows operating system through motor imagery. Motor imagery (MI) is a mental process in which an individual imagines or simulates a physical action. MI activates brain areas similar to those of the corresponding executed action and retains the same temporal characteristics [12]. A motor imagery-based brain-computer interface (MI-BCI) enables the brain to interact with a computer by recording and processing electroencephalograph (EEG) signals produced by imagining the movement of a particular limb [1]. EEG waves can be used as input to control a BCI system even when the user has severe physical and neurological impairments [2]. These waves were chosen as the input to the BCI system because they are easy to capture and the hardware involved is quick to set up. The amplitude of EEG waves is measured in microvolts (µV). Different types of EEG waves occur in different frequency bands. Gamma waves have a frequency between 25 and 140 hertz, beta waves between 14 and 30 hertz, alpha waves between 7 and 13 hertz, theta waves between 4 and 7 hertz, and delta waves up to 4 hertz. The beta band is associated with motor tasks, including motor imagery. An event-related synchronization (ERS) during motor planning and an event-related desynchronization (ERD) after task execution are reported in the beta band [3]. Hence beta waves are used as the input to the BCI. To collect the data, the Enobio 8 EEG device was used. It contains 8 channels, which can be placed on the scalp using dry or wet electrodes. The EEG waves were recorded with dry electrodes, which are placed directly on the scalp and held in position by a cap. Dry electrodes can capture EEG waves simply by coming into contact with the scalp. The electrodes are arranged on the subject's scalp so that they cover the frontal lobe, which is involved in voluntary, controlled behavior such as motor functions [4]. The beta waves were collected while the subject performed motor imagery tasks, such as imagining movement of the right hand in the right, left, up and down directions. These raw beta waves were extracted from the Enobio software using its Lab Streaming Layer (LSL) feature. The Lab Streaming Layer is open-source middleware that allows real-time streaming and receiving of data between applications over a Transmission Control Protocol (TCP) network. The collected data is analyzed in MATLAB to find patterns among the brainwaves recorded during different tasks.
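The paper does not say how the beta band is isolated from the raw Enobio stream. The sketch below shows one possible approach. It assumes the 500 Hz sampling rate used during data acquisition and the 14 to 30 Hz beta band quoted above, and applies a zero-phase FFT mask that keeps only beta-band content. It is an illustration, not the authors' pipeline. The function name `beta_band` is hypothetical, and in practice a Butterworth band-pass filter (for example from SciPy) is the more common choice.

```python
import numpy as np

FS = 500.0                # sampling rate in Hz (as used during acquisition)
BETA_BAND = (14.0, 30.0)  # beta band limits in Hz, as stated in the text


def beta_band(signal: np.ndarray, fs: float = FS, band=BETA_BAND) -> np.ndarray:
    """Keep only the frequency components of `signal` that lie inside `band`.

    Operates along the last axis, so a (channels, samples) array is filtered
    channel by channel. This is an ideal (brick-wall) FFT mask, chosen here
    only for simplicity; it introduces no phase shift.
    """
    n = signal.shape[-1]
    spectrum = np.fft.rfft(signal, axis=-1)
    freqs = np.fft.rfftfreq(n, d=1.0 / fs)
    mask = (freqs >= band[0]) & (freqs <= band[1])
    return np.fft.irfft(spectrum * mask, n=n, axis=-1)


if __name__ == "__main__":
    t = np.arange(1000) / FS                        # one 2-second recording
    raw = np.sin(2 * np.pi * 20 * t) + 0.5 * np.sin(2 * np.pi * 5 * t)
    beta = beta_band(raw)                           # keeps the 20 Hz component only
    print(np.max(np.abs(beta - np.sin(2 * np.pi * 20 * t))))
```

The 5 Hz (theta) component in the example is removed and the 20 Hz (beta) component passes unchanged.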
A Convolutional Neural Network (CNN) was chosen as the classifier for the BCI input because it can automatically extract features, and its fast inference makes it well suited for real-time predictions. Reference [5] uses a five-layer CNN with a max pooling layer and a fully connected (FC) layer to accurately classify EEG waves of motor imagination tasks. When compared with Recurrent Neural Networks (RNN) such as Long Short-Term Memory (LSTM) and a hybrid of LSTM and CNN, the CNN model's accuracy was found to be similar, but its latency was much lower. Hence a CNN is chosen as the classifier in this project. The data is then preprocessed and used to train the CNN. Python libraries are used to control the mouse in the Windows operating system. The user controls mouse movement by imagining movement of the right hand, and left and right mouse clicks are triggered by left and right eye winks.

Cuntai Guan, Neethu Robinson, Vikram Shenoy Handiru and V. A. Prasad [6] discuss the design of a BCI-based upper limb rehabilitation system for stroke patients. The authors propose a regularized Wavelet Common Spatial Pattern (W-CSP) method for classifying movement directions, together with mutual information-based feature selection. They achieved an average single-trial accuracy of 80.24% ± 9.41 in discriminating the four different directions. In their work, they also investigate methods to classify movement speeds and to reconstruct the movement trajectory from EEG.

Jeong-Hun Kim, Felix Bießmann and Seong-Whan Lee [7] investigate two methods for decoding hand movement velocities from complex trajectories: Multiple Linear Regression (MLR) and Kernel Ridge Regression (KRR). The results show that both MLR and KRR can reliably decode 3D hand velocity from EEG, but KRR outperformed MLR in the majority of subjects. KRR can predict hand velocities with high accuracy using less training data than MLR. However, KRR also has a high computational cost.

D. AlQattan and F. Sepulveda [8] classify EEG waves into one of six American Sign Language (ASL) signs using Linear Discriminant Analysis (LDA) and nonlinear Support Vector Machines (SVM). Both algorithms achieved an average accuracy of 75% when the entropy feature type was examined.

Rami Alazrai, Hisham Alwanni and Mohammad I. Daoud [9] present a new EEG-based BCI system for decoding the movements of each finger within the same hand, so that subjects who use assistive devices can better perform various dexterous tasks. The BCI uses a quadratic time-frequency distribution (QTFD), namely the Choi-Williams distribution (CWD), for feature extraction. The extracted features are passed to a two-layer classification framework. The first layer has one SVM classifier, and the second layer has five SVM classifiers, one for each finger. The first-layer classifier identifies which of the five fingers is moving, and the corresponding second-layer classifier identifies the type of movement for that finger. The average F1-scores obtained for identifying the moving finger are at least 79%, with the lowest F1-score of 79.0% computed for the ring finger.

Jzau-Sheng Lin and Ray Shihb [10] investigate the performance of two deep learning models, Long Short-Term Memory (LSTM) and gated recurrent neural networks (GRNN), for classifying EEG signals of motor imagery (MI-EEG). The authors use the deep learning models to control a wheelchair. Their experimental findings show that the GRNN consistently performs better than the LSTM when controlling an electric wheelchair.

Martin Spüler [11] presents a BCI that can interact with Windows applications by controlling the mouse and keyboard through code-modulated visual evoked potentials (c-VEPs). The user is presented with black-and-white visual stimuli that allow the user to move the mouse, click the mouse and type characters at a speed of more than 20 characters per minute. The drawback of the system is that, because the BCI runs in synchronous mode, a target is selected every 1.9 s. This means that even when the user does not want to perform any action, an action is still selected and performed. The author proposed adding an asynchronous mode and a control state to improve the usability of this BCI.

This section provides details on the methodology used to build and test the motor imagery-based BCI. The methodology has four stages: data acquisition, data analysis and pre-processing, training and testing the CNN model, and finally using Python to connect Enobio, the CNN model and Windows.

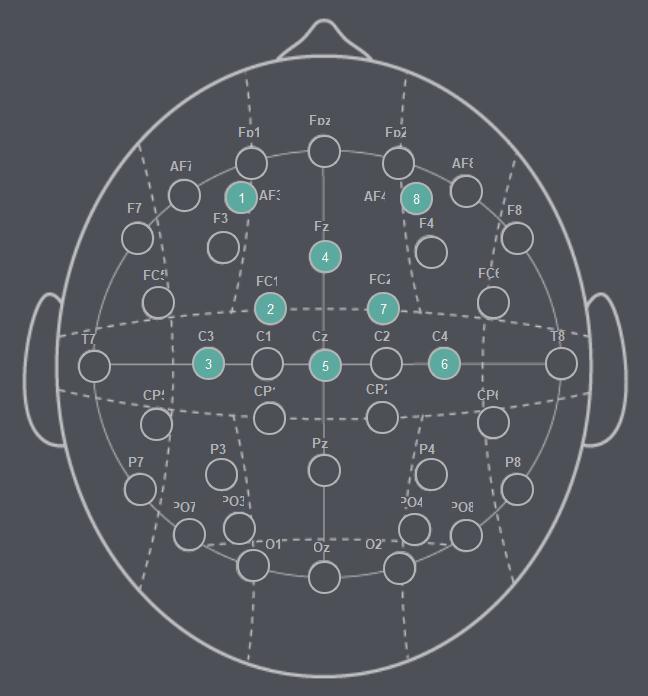

The Enobio 8-channel EEG device is used for EEG signal acquisition. The 8 electrodes, numbered 1 to 8, are placed at the international 10-20 system positions "AF3", "FC1", "C3", "Fz", "Cz", "C4", "FC2" and "AF4" respectively, so that they can capture beta waves from the frontal lobe. Figure 1 shows the positions of the eight electrodes on the scalp. The data is collected while the subject performs motor imagery tasks, imagining center-out movements of the right arm in four directions, as well as individually winking the left and right eye. The idle state of the user is also captured so that the CNN model can differentiate between idle and non-idle states. This ensures that the model does not execute an action in Windows when the user is idle (for example, when the user is reading, or watching a video or image). The Lab Streaming Layer (LSL) feature of the Enobio software is used to stream the raw EEG data to a Python program, which writes it to a comma-separated values (CSV) file. This is done for each of the six user actions as well as a resting state, generating 7 CSV files. The sampling rate is 500 samples per second, and each EEG recording lasts 2 seconds. Each CSV file contains 100 such recordings, and each recording contains 1000 rows (500 samples/s × 2 s) and 8 columns corresponding to the 8 channels.
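The acquisition step above (LSL stream to one CSV file per action) can be sketched as follows. `resolve_byprop`, `StreamInlet` and `pull_sample` are real pylsl calls. The following are assumptions for illustration and do not come from the paper:

- that the Enobio stream is advertised with type "EEG";
- the `ch1..ch8` header row;
- the layout of stacking the 100 recordings as consecutive 1000-row blocks;
- the function names.

A real recorder would also cue the subject and pause between recordings, which is omitted here.

```python
import csv
from itertools import islice
from typing import Iterable, Iterator, Sequence, TextIO

SAMPLING_RATE = 500       # samples per second (from the paper)
RECORDING_SECONDS = 2     # length of one recording (from the paper)
N_CHANNELS = 8            # Enobio 8 channels
ROWS_PER_RECORDING = SAMPLING_RATE * RECORDING_SECONDS  # 1000 rows


def lsl_samples(stream_type: str = "EEG") -> Iterator[Sequence[float]]:
    """Yield raw samples from the first LSL stream of the given type (needs pylsl)."""
    # Imported lazily so the CSV writer below can be used without pylsl installed.
    from pylsl import StreamInlet, resolve_byprop
    streams = resolve_byprop("type", stream_type, timeout=10.0)
    if not streams:
        raise RuntimeError(f"no LSL stream of type {stream_type!r} found")
    inlet = StreamInlet(streams[0])
    while True:
        sample, _timestamp = inlet.pull_sample()
        yield sample


def write_recordings(samples: Iterable[Sequence[float]], out: TextIO,
                     n_recordings: int = 100) -> int:
    """Write n_recordings blocks of ROWS_PER_RECORDING samples as CSV rows.

    Returns the number of sample rows written (header excluded).
    """
    writer = csv.writer(out)
    writer.writerow([f"ch{i}" for i in range(1, N_CHANNELS + 1)])  # hypothetical header
    it = iter(samples)
    written = 0
    for _ in range(n_recordings):
        block = list(islice(it, ROWS_PER_RECORDING))
        if len(block) < ROWS_PER_RECORDING:
            raise ValueError("stream ended before the recording was complete")
        for sample in block:
            if len(sample) != N_CHANNELS:
                raise ValueError(f"expected {N_CHANNELS} channels, got {len(sample)}")
            writer.writerow(sample)
        written += len(block)
    return written
```

On a live system one would call, for example, `with open("right.csv", "w", newline="") as f: write_recordings(lsl_samples(), f)` once per action, giving the 7 files described above.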

Volume: 09 Issue: 07 | July 2022 www.irjet.net p ISSN: 2395 0072 © 2022, IRJET | Impact Factor value: 7.529 | ISO 9001:2008 Certified Journal |


The EEG dataset is collected from a single subject so as to build a customized BCI.

MouseControlModulewasfoundtobe0.275,0.319,0.102 seconds.Theaveragelatencyofthefullyintegratedsystem wasfoundtobe3.835seconds.Theseresultsaretabulated intable1.

Fig 1:Positionsoftheeightelectrodes

The data is analysed in MATLAB to study the different patterns of each task. The significant difference in the patterns implies that the beta waves corresponding to an action can be classified. In the pre processing stage, the datasetisscaledusingthestandardscalerwhichisavailable inPython’sSklearnlibrary.Thestandardscorezofasample xiscomputedas

z=(x u)/s

whereuandsarethemeanandstandarddeviationofthe training set. The training labels are converted from string formattomachinereadableformatusingthelabelbinarizer functionfromtheSklearnlibrary.

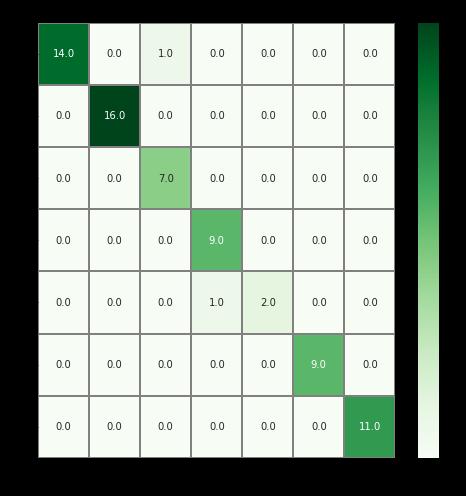

Thedatasetisshuffledandsplitintotrainingandtestingsets with a split ratio of 90/10 to allow maximum number of recordsfortraining.Themodelistrainedforoneepochwith abatchsizeofone.Themodelachievesanaveragetraining accuracyof79.09%withastandarddeviationof1.56andan average testing accuracy of 92.85% with a standard deviation of 4.12. The highest accuracy achieved by the modelis97.14%.ThefunctionalcomponentsoftheBCIsuch astheLSLclient,CNNmodelandtheMouseControlModule aretestedindividuallyusingtheUnittesttestingframework in Python. The evaluation metric used to evaluate the performanceofthemodelistheconfusionmatrixshownin figure2.TheaveragelatenciesoftheLSLclient,CNNmodel,
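The standardization, label binarization and 90/10 split described above can be sketched in NumPy. The paper uses scikit-learn's StandardScaler and LabelBinarizer. The functions below are hypothetical stand-ins that mirror their documented behaviour: population standard deviation, a zero scale replaced by 1, and classes sorted before one-hot encoding. Two points are assumptions, since the paper does not say which axis is scaled or how the shuffle is seeded:

- each 1000 × 8 recording is treated as one sample;
- u and s are computed per channel over the training set only.

```python
import numpy as np


def split_90_10(X, y, seed=0):
    """Shuffle recordings and split them 90/10 into training and testing sets."""
    rng = np.random.default_rng(seed)
    order = rng.permutation(len(X))
    n_test = len(X) // 10
    test, train = order[:n_test], order[n_test:]
    return X[train], X[test], y[train], y[test]


def fit_standardizer(X_train):
    """Per-channel mean u and standard deviation s (ddof=0) over the training set."""
    u = X_train.mean(axis=(0, 1))
    s = X_train.std(axis=(0, 1))
    s[s == 0] = 1.0  # a flat channel is left unscaled instead of dividing by zero
    return u, s


def standardize(X, u, s):
    """z = (x - u) / s, always using the training-set statistics."""
    return (X - u) / s


def binarize_labels(labels, classes=None):
    """One-hot encode string labels; classes are sorted, as LabelBinarizer does."""
    classes = sorted(set(labels)) if classes is None else list(classes)
    index = {c: i for i, c in enumerate(classes)}
    onehot = np.zeros((len(labels), len(classes)))
    onehot[np.arange(len(labels)), [index[c] for c in labels]] = 1.0
    return onehot, classes
```

With 7 CSV files of 100 recordings each, `split_90_10` yields 630 training and 70 testing recordings. The test set is scaled with `standardize(X_test, u, s)` using the statistics fitted on the training set.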

Fig 2: Confusion matrix for the CNN model

Figure 2 shows the confusion matrix used to evaluate the model's accuracy. It was computed for the model with an accuracy of 97.14%. The columns represent the predicted values and the rows represent the actual values. A few records are misclassified, but the overall accuracy is high.
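Overall accuracy can be read off a confusion matrix as the sum of its diagonal (correct predictions) divided by the total number of test records. Suppose the test set holds 10% of the 700 recordings (7 CSV files × 100 recordings), that is, 70 records. Then the reported highest accuracy of 97.14% is consistent with 68 of 70 records being classified correctly. The matrix below is synthetic and only illustrates the computation; it is not the matrix in Figure 2.

```python
import numpy as np


def accuracy(cm):
    """Fraction of records on the diagonal (rows = actual, columns = predicted)."""
    cm = np.asarray(cm)
    return np.trace(cm) / cm.sum()


def per_class_recall(cm):
    """For each actual class (row), the fraction of its records predicted correctly."""
    cm = np.asarray(cm, dtype=float)
    return np.diag(cm) / cm.sum(axis=1)


# Synthetic 7-class matrix: 10 test records per class, two misclassifications.
cm = np.diag([10] * 7)
cm[0, 0], cm[0, 1] = 9, 1   # one record of class 0 predicted as class 1
cm[3, 3], cm[3, 6] = 9, 1   # one record of class 3 predicted as class 6
print(f"{accuracy(cm):.2%}")  # 97.14%
```

Per-class recall is a useful complement, because it shows which actions (for example, which directions) are most often confused.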

Table 1: Performance analysis of BCI components.

| Module | Average latency (s) | Number of function calls |
|---|---|---|
| LSL client | 0.275 | 181,372 |
| CNN model | 0.319 | 1,285,882 |
| Mouse Control Module (MCM) | 0.102 | 44,751 |
| BCI (all components integrated) | 3.835 | 1,562,503 |
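The paper does not state how the latencies and function-call counts in Table 1 were measured. A "number of function calls" column resembles the total reported by Python's built-in cProfile profiler. One plausible way to take such measurements is sketched below: `time.perf_counter` for average wall-clock latency, and `pstats.Stats(...).total_calls` for the call count. The helper names and the `median_of_window` placeholder are hypothetical stand-ins for a BCI component.

```python
import cProfile
import pstats
import time
from typing import Any, Callable


def average_latency(fn: Callable[..., Any], *args: Any, repeats: int = 10) -> float:
    """Average wall-clock time in seconds of fn(*args) over `repeats` runs."""
    start = time.perf_counter()
    for _ in range(repeats):
        fn(*args)
    return (time.perf_counter() - start) / repeats


def count_calls(fn: Callable[..., Any], *args: Any) -> int:
    """Total number of function calls cProfile records for one call of fn(*args)."""
    profiler = cProfile.Profile()
    profiler.runcall(fn, *args)
    return pstats.Stats(profiler).total_calls


def median_of_window(window):  # hypothetical stand-in for one BCI component
    ordered = sorted(window)
    return ordered[len(ordered) // 2]
```

Measuring each tier separately and then the whole loop end to end gives a table with the same structure as Table 1.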

Python contains libraries for data manipulation, pre processing, model building and training, which makes it suitablefortheBCI.TheEEGdatastreamiscapturedfrom theEnobiosoftwareusingaLSLclientsetupinpythonusing

International Research Journal of Engineering and Technology (IRJET) e ISSN: 2395 0056

Volume: 09 Issue: 07 | July 2022 www.irjet.net p ISSN: 2395 0072

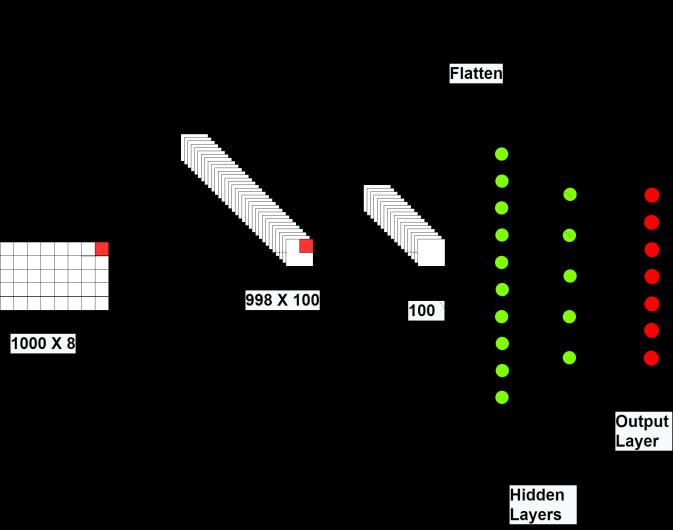

the Pylsl. This LSL client provides the EEG sample. This sample is input into the CNN model to classify the user action. The CNN model contains a one dimensional convolutionlayerthatconsistsof100filterswithakernel size of 3 which is used to extract features from the input sample. The next layer is a one dimensional max pooling layerofsize2whichisusedtoreducethedimensionalityof thepreviouslayer.Theflattenedoutputofthemax pooling layeristhensenttothehiddenlayerwhichconsistsof20 neurons.Thefinallayeristheoutputlayerwhichcontains7 neurons. The outputof the CNN model isone of7classes, whichare“Right”,”Left”,”Up”,”Down”,”Right Wink”,”Left Wink”and“Nothing”.Iftheoutputbelongstoanyoneofthe 4directionalclasses(Right,Left,UpandDown),themouse pointerismovedinthatdirectionwithcommandsexecuted inPython.Iftheoutputisclassifiedaseither“Left Wink”or “Right Wink”,thecommandtoclickthemouseisexecutedin Python.Iftheoutputisclassifiedas“Nothing”,noactionis taken.ThecontrolofthemouseinWindowsisachievedby usingthe“pyautogui”and“mouse”librariesinPython.
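The mapping from CNN output class to mouse action described above can be sketched as a small dispatch function. pyautogui does provide `moveRel(xOffset, yOffset)` and `click(button=...)`. The pixel step size, the function names and the injected `gui` parameter are assumptions for illustration. Passing the backend in as a parameter also lets the mapping be unit-tested without moving the real pointer.

```python
from typing import Optional, Tuple

STEP_PX = 50  # hypothetical distance (pixels) moved per directional command

# Screen y grows downwards, so "Up" is a negative y offset.
MOVES = {"Right": (STEP_PX, 0), "Left": (-STEP_PX, 0),
         "Up": (0, -STEP_PX), "Down": (0, STEP_PX)}
CLICKS = {"Left Wink": "left", "Right Wink": "right"}


def command_for(label: str) -> Tuple[str, Optional[object]]:
    """Translate a CNN class label into an abstract mouse command."""
    if label in MOVES:
        return "move", MOVES[label]
    if label in CLICKS:
        return "click", CLICKS[label]
    if label == "Nothing":
        return "idle", None
    raise ValueError(f"unknown class label: {label!r}")


def execute(label: str, gui) -> None:
    """Run the command on a pyautogui-like backend exposing moveRel and click."""
    kind, arg = command_for(label)
    if kind == "move":
        dx, dy = arg
        gui.moveRel(dx, dy)
    elif kind == "click":
        gui.click(button=arg)
    # "idle": deliberately do nothing, so no action is taken while the user rests
```

On a real system the backend would be the library module itself: `import pyautogui`, then `execute(label, pyautogui)`.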

This section describes the architecture of the implemented BCI and gives a detailed explanation of its components. It also provides details on the architecture of the CNN model that was built for the BCI.

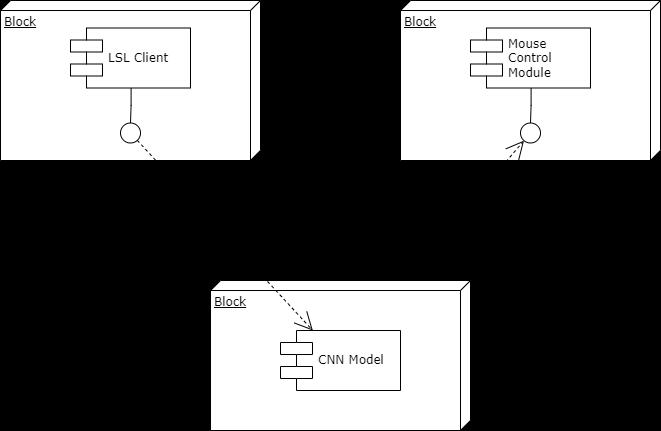

The project has a three-tier architecture consisting of an LSL client, a CNN model and a Mouse Control Module. The LSL client is responsible for capturing a portion of the live data stream from the Enobio software. The CNN model classifies the EEG input as one of the seven output classes. The Mouse Control Module receives the classification from the CNN model and matches it to the appropriate output command, which is executed in Windows. Data flows in only one direction, as shown in Figure 3.
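The one-directional flow through the three tiers can be sketched as a loop. It buffers samples from the LSL client into fixed-length windows, classifies each window, and hands the label to the Mouse Control Module. The window length of 1000 samples (2 s at 500 Hz) is borrowed from the recording setup; it is an assumption about how the live system segments the stream. `classify` and `dispatch` are hypothetical callables standing in for the CNN model and the Mouse Control Module.

```python
from itertools import islice
from typing import Callable, Iterable, Iterator, List, Optional, Sequence

WINDOW = 1000  # samples per window: 2 s at 500 Hz (assumed to match the training data)


def windows(samples: Iterable[Sequence[float]],
            size: int = WINDOW) -> Iterator[List[Sequence[float]]]:
    """Tier 1 (LSL client): group a live sample stream into non-overlapping windows."""
    it = iter(samples)
    while True:
        block = list(islice(it, size))
        if len(block) < size:  # stream ended; drop the incomplete tail
            return
        yield block


def run_pipeline(samples: Iterable[Sequence[float]],
                 classify: Callable[[list], str],
                 dispatch: Callable[[str], None],
                 max_windows: Optional[int] = None) -> int:
    """Pass each window through the CNN tier, then the mouse-control tier.

    Returns the number of windows processed.
    """
    n = 0
    for block in windows(samples):
        label = classify(block)  # Tier 2: CNN model
        dispatch(label)          # Tier 3: Mouse Control Module
        n += 1
        if max_windows is not None and n >= max_windows:
            break
    return n
```

Because each tier only consumes the previous tier's output, the pieces can be tested in isolation. This matches the individual unit testing of the LSL client, CNN model and Mouse Control Module described earlier.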

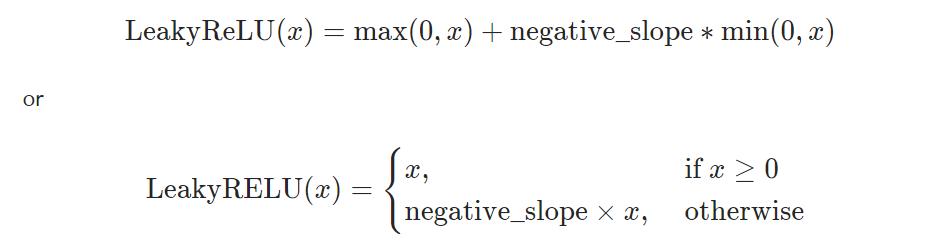

The CNN model contains five layers, as shown in Figure 4. The first layer is a one-dimensional convolution layer containing 100 filters with a kernel size of 3. The second layer is a one-dimensional max pooling layer of size 500. The third and fourth layers are fully connected hidden layers containing 500 and 100 neurons respectively, with leaky ReLU activation. ReLU stands for rectified linear unit.

Leaky ReLU was chosen over ReLU as the activation function for the hidden-layer neurons because it prevents neurons from dying (permanently outputting zero) on negative inputs. The leaky ReLU function for a given input x is defined as

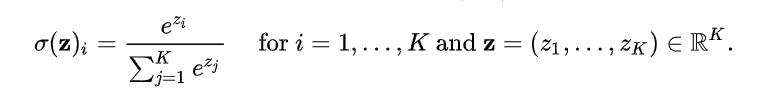

where negative_slope controls the angle of the negative slope; its default value is 1e-2. The fifth layer is the output layer, containing 7 neurons with "softmax" activation. The softmax function takes an input vector z of K real numbers and normalizes it into a probability distribution.

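It applies the standard exponential function to every element of the input vector and divides each exponential by the sum of all the exponentials to normalize them, so the elements of the output vector sum to 1. The softmax function for an input vector z of K real numbers is defined as

$$ \sigma(\mathbf{z})_i = \frac{e^{z_i}}{\sum_{j=1}^{K} e^{z_j}}, \qquad i = 1, \dots, K $$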
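Both activation functions can be sketched in a few lines of NumPy. Leaky ReLU uses the default negative slope of 0.01 quoted above. The softmax subtracts the maximum before exponentiating: the common factor e^(-max) cancels in the ratio, so the result is unchanged, but overflow is avoided for large inputs. The function names are illustrative, not the authors' code.

```python
import numpy as np


def leaky_relu(x, negative_slope=1e-2):
    """Element-wise: x for x >= 0, negative_slope * x otherwise."""
    x = np.asarray(x, dtype=float)
    return np.where(x >= 0, x, negative_slope * x)


def softmax(z):
    """exp(z_i) / sum_j exp(z_j) along the last axis, computed stably."""
    z = np.asarray(z, dtype=float)
    e = np.exp(z - z.max(axis=-1, keepdims=True))
    return e / e.sum(axis=-1, keepdims=True)
```

For example, `leaky_relu([-2.0, 0.0, 3.0])` gives `[-0.02, 0.0, 3.0]`, and `softmax([1000.0, 1000.0])` gives `[0.5, 0.5]` without overflowing.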

Fig 3: Three-tier system architecture of the MI-BCI

The softmax function is commonly used in the output layer of a multiclass neural network. Multiclass classification is the problem of classifying instances into one of more than two classes. The first two layers of the CNN model are used for feature extraction and the next three layers are used for classification. Before the data is sent to the hidden layers, it is flattened to make it one-dimensional. To regularize the model and avoid overfitting, random nodes are dropped during training, using a dropout factor of 0.5.
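To make the five-layer description concrete, the sketch below walks one recording through the layers and reports each output shape and trainable-parameter count. It assumes the following, none of which the paper states:

- an input of 1000 samples × 8 channels (one 2-second recording);
- "valid" convolution with stride 1;
- a pooling stride equal to the pool size (the defaults in common frameworks such as Keras).

Flatten is listed but not counted as one of the five layers. Dropout is omitted because it has no parameters and does not change shapes. Under these assumptions, a pool size of 500 reduces the 998 convolution outputs to a single time step.

```python
from typing import List, Tuple


def cnn_summary(n_samples=1000, n_channels=8, filters=100, kernel=3,
                pool=500, hidden=(500, 100), n_classes=7) -> List[Tuple[str, tuple, int]]:
    """Return (layer name, output shape, trainable parameters) for each layer."""
    rows = []
    steps = n_samples - kernel + 1                  # 'valid' 1-D convolution, stride 1
    rows.append(("conv1d", (steps, filters), (kernel * n_channels + 1) * filters))
    steps = (steps - pool) // pool + 1              # max pooling, stride = pool size
    rows.append(("max_pool1d", (steps, filters), 0))
    width = steps * filters                         # flatten before the dense layers
    rows.append(("flatten", (width,), 0))
    for i, units in enumerate(hidden, start=1):
        rows.append((f"dense_{i} (leaky ReLU)", (units,), (width + 1) * units))
        width = units
    rows.append(("output (softmax)", (n_classes,), (width + 1) * n_classes))
    return rows


if __name__ == "__main__":
    layers = cnn_summary()
    for name, shape, params in layers:
        print(f"{name:24s} {str(shape):12s} {params:>8d}")
    print("total parameters:", sum(p for _, _, p in layers))  # 103807
```

The same function can evaluate other settings. For example, `cnn_summary(pool=2, hidden=(20,))` describes a pooling size of 2 followed by a single 20-neuron hidden layer.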

The user is able to use the MI-BCI system to control the mouse on Windows. The actions that can be performed include mouse movement as well as clicking. However, after an action is executed, the user must take a moment to relax and allow their brain waves to return to their normal state before taking the next action. Because of this drawback, the user cannot control the computer continuously. On the other hand, because of the idle class, the MI-BCI does not select an action when the user does not want to execute one, which is an improvement over [11]. Another drawback is that the user cannot control the keyboard with this BCI. To address this problem, the next step will be to add a c-VEP method for keyboard control, such as the one described in [11].

This paper has presented a motor imagery-based BCI system that uses a convolutional neural network to classify an individual's EEG waves into computer commands. The drawbacks of the current system are outlined, and next steps for improving it are discussed. The five-layer CNN model achieved an average accuracy of 92.85% with a standard deviation of 4.12, and a highest accuracy of 97.14%. The BCI is very responsive, with an average latency of 3.835 seconds for signal acquisition, classification and execution of the appropriate command, which is very good for a user interface system.

I would like to thank my guide, Sushmitha N, Assistant Professor, Department of Information Science & Engineering, RV College of Engineering, for introducing me to the subject of electroencephalography and brain-computer interfaces, and for her continual support, guidance and patience in completing this project.

I would also like to express my sincere gratitude to Dr. B. M. Sagar, Head of Department, and Dr. G. S. Mamatha, Professor and Associate Dean, Department of Information Science & Engineering, RV College of Engineering, for providing the necessary resources, such as the Enobio device, to complete this project.

[1] A. Nouri, Z. Ghanbari, M. R. Aslani and M. H. Moradi, "A new approach to feature extraction in MI-based BCI systems," in Artificial Intelligence-Based Brain-Computer Interface, Jan. 2022, pp. 75-98, doi: 10.1016/B978-0-323-91197-9.00002-3.

[2] I. Lazarou, S. Nikolopoulos, P. C. Petrantonakis, I. Kompatsiaris and M. Tsolaki, "EEG-Based Brain-Computer Interfaces for Communication and Rehabilitation of People with Motor Impairment: A Novel Approach of the 21st Century," Frontiers in Human Neuroscience, vol. 12, 2018, doi: 10.3389/fnhum.2018.00014.

[3] J. T. Panachakel, K. Sharma, A. S. Anusha and A. G. Ramakrishnan, "Can we identify the category of imagined phoneme from EEG?," 2021 43rd Annual International Conference of the IEEE Engineering in Medicine & Biology Society (EMBC), Oct. 2021, doi: 10.1109/EMBC46164.2021.9630604.

[4] R. B. Firat, "Opening the 'Black Box': Functions of the Frontal Lobes and Their Implications for Sociology," Frontiers in Sociology, vol. 4, Feb. 2019, p. 3, doi: 10.3389/fsoc.2019.00003.

[5] X. Lun, Z. Yu, T. Chen, F. Wang and Y. Hou, "A Simplified CNN Classification Method for MI-EEG via the Electrode Pairs Signals," Frontiers in Human Neuroscience, vol. 14, Sept. 2020, p. 338, doi: 10.3389/fnhum.2020.00338.

[6] C. Guan, N. Robinson, V. S. Handiru and V. A. Prasad, "Detecting and tracking multiple directional movements in EEG-based BCI," 2017 5th International Winter Conference on Brain-Computer Interface (BCI), 2017, pp. 44-45, doi: 10.1109/IWW-BCI.2017.7858154.

[7] J.-H. Kim, F. Bießmann and S.-W. Lee, "Reconstruction of hand movements from EEG signals based on non-linear regression," 2014 International Winter Workshop on Brain-Computer Interface (BCI), Feb. 2014, pp. 1-3, doi: 10.1109/iww-BCI.2014.6782572.

[8] D. AlQattan and F. Sepulveda, "Towards sign language recognition using EEG-based motor imagery brain computer interface," 2017 5th International Winter Conference on Brain-Computer Interface (BCI), Jan. 2017, pp. 5-8, doi: 10.1109/IWW-BCI.2017.7858143.

[9] R. Alazrai, H. Alwanni and M. I. Daoud, "EEG-based BCI system for decoding finger movements within the same hand," Neuroscience Letters, vol. 698, Apr. 2019, pp. 113-120, doi: 10.1016/j.neulet.2018.12.045.


[10] J.-S. Lin and R. Shihb, "A Motor-Imagery BCI System Based on Deep Learning Networks and Its Applications," in Evolving BCI Therapy: Engaging Brain State Dynamics, Oct. 2018, doi: 10.5772/intechopen.75009.

[11] M. Spüler, "A Brain-Computer Interface (BCI) system to use arbitrary Windows applications by directly controlling mouse and keyboard," Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Aug. 2015, doi: 10.1109/EMBC.2015.7318554.

[12] R. Scherer and C. Vidaurre, "Motor imagery based brain-computer interfaces," ch. 8 in Smart Wheelchairs and Brain-Computer Interfaces, 2018, pp. 171-195, doi: 10.1016/B978-0-12-812892-3.00008-X.
