International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 09 Issue: 07 | July 2022 www.irjet.net p-ISSN: 2395-0072


1 Assistant Professor, Department of Electronics and Communication Engineering, JSS Science and Technology University, Mysuru, India
2,3,4,5 Students, Department of Electronics and Communication Engineering, JSS Science and Technology University, Mysuru, India

Abstract - Movement is the act of moving or changing one's position. The loss of the ability to move some or all of the body is termed paralysis. It can have many different causes, some of which are serious, and it may be temporary or permanent depending on the cause. Amyotrophic Lateral Sclerosis (ALS) is a progressive nervous system disease that affects nerve cells in the brain and spinal cord, causing loss of muscle control. Motor neuron loss continues until the ability to eat, speak, move, and finally to breathe is lost. The term "lateral" identifies the region of the spinal cord where the nerve cells that control the muscles are located. The main objective of the proposed work is to provide motion control for paralysed people and make them less dependent on others for movement. Even in severe cases of ALS, the patient can still move their eyes. Hence, using hand gestures for partially paralysed people or eye movement for fully paralysed people, the motion of the wheelchair can be controlled by the patients independently.

Key Words: movement, Amyotrophic Lateral Sclerosis (ALS), motion control, hand gesture, eye movement

Every year, thousands of people become partially or fully handicapped due to accidents, which makes them dependent on wheelchairs. Some people suffer from diseases like ALS, where the patient may lose complete control over their movements and their ability to communicate, and can communicate with their eyes only. However, conventional wheelchairs are manually driven, making these people dependent on others.

There is no known cure for ALS to date. Spine stimulation, human computer interfaces and exoskeletons are a few technologies that were developed as alternatives. Unfortunately, these technologies require the implantation of electronic devices, which is very difficult and expensive. Therefore, these technologies were not as efficient as expected.

Even in severe cases of ALS, the patient can still move their eyes. This ability is utilized here. In the proposed project, the wheelchair can be controlled by eye movement or hand gesture and can be moved in the left, right, backward or forward direction. Hence the patient can move without depending on others.

Almost everyone faces hardships and difficulties at one time or another. But for people with disabilities, barriers can be more frequent and have greater impact. The World Health Organization (WHO) describes barriers as being more than just physical obstacles.

Often there are multiple barriers that can make it extremely difficult or even impossible for people with disabilities to function. Transportation barriers are due to a lack of adequate transportation, which interferes with a person's ability to be independent and to function in society. An example of a transportation barrier is the lack of access to accessible or convenient transportation for people who are unable to drive because of vision or cognitive impairments.

Paralysis can result from brain or spinal cord injury or from diseases such as multiple sclerosis or ALS. In a partially paralyzed condition, the patient is able to move the palm region of the hand. In severe paralysis, however, the patient's communication abilities are extremely restricted. Even in extreme cases, the patient can control the muscles around the eyes, producing eye movements or eye blinks. This motivated the present project, which helps patients control the wheelchair by eye movements and hand gestures to move in different directions, making them less dependent on others.

Wheelchairs are used by people who cannot walk due to physiological or physical illness, injury or any disability. Recent developments promise a wide scope for developing smart wheelchairs. The existing systems emphasize the need for a system which is useful for paralyzed patients. The main aim of this work is to provide a system which is useful for patients suffering from diseases like ALS, in such a way that they can control the movement themselves. Therefore, a control system is necessary for patients suffering from diseases like ALS which is convenient to use, efficient, and affordable for the patients.

1. Integration of the accelerometer sensor with the Blynk app and adding the authentication key to the Raspberry Pi.

2. Linking the Blynk app to the Raspberry Pi and detection of the hand gesture from the Blynk app.

3. Rotation of the motors to move in the left, right, forward or backward direction, or to stop, based on the hand gesture input.

4. Detection of the eye from the Pi Camera and detection of the movement of the eye.

5. Rotation of the motors to move in the left, right, forward or backward direction, or to stop, based on the eye movement input.

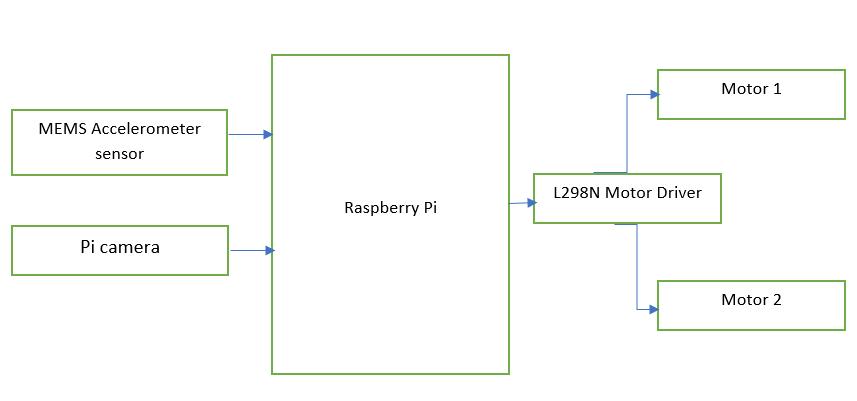

The block diagram of the proposed project is shown in Fig 1. The proposed work uses a Raspberry Pi 4 with 4 GB RAM, which is the heart of the system. The Pi Camera attached to it captures the face region of the person. Various operations are performed on this image to obtain the output signal. The Raspberry Pi then analyses the output signal based on the position of the eyes and sends the control signal to the motor driver circuit. The accelerometer sensor in the mobile phone detects the movement of the hand and calculates the corresponding tilt angle, which is provided to the Raspberry Pi; the Raspberry Pi then sends the control signal to the motor driver. In both cases, the motor driver circuit rotates the wheels in a clockwise or counterclockwise direction, or stops them. Two separate motors are used, one mounted on each wheel.

Fig 1: Block diagram

Raspberry Pi Board:

The Raspberry Pi Board, considered the heart of the system, is a single board computer that runs the Linux operating system. The Raspberry Pi controls the motor driver circuit through its GPIO pins.

The Pi Camera module is a lightweight portable camera that supports the Raspberry Pi. The Raspberry Pi Board has a Camera Serial Interface (CSI) to which the Pi Camera module can be directly attached using a 15-pin ribbon cable. In this proposed work, the Pi Camera is used to capture the movement of the eye.

DC Motor:

Two 12 V DC motors are used to control the wheelchair movements in the forward, backward, left and right directions. DC motors take electrical power through direct current and convert this energy into mechanical rotation.

An electric battery is a source of electric power consisting of one or more electrochemical cells with external connections for powering electrical devices. When a battery is supplying power, its positive terminal is the cathode and its negative terminal is the anode. The terminal marked negative is the source of electrons that will flow through an external electric circuit to the positive terminal.

The L298N is a dual H-Bridge motor driver which allows speed and direction control of two DC motors at the same time. The module can drive DC motors that have voltages between 5 and 35 V, with a peak current of up to 2 A.

OpenCV:

OpenCV is a library of programming functions mainly aimed at real-time computer vision. In this work, OpenCV is used to process the images captured by the camera.

Dlib:

Dlib is a modern C++ toolkit containing machine learning algorithms and tools for creating complex software in C++ to solve real-world problems. This library is used to detect the facial landmarks in order to detect the eyes.


Blynk:

With Blynk, smartphone applications can easily interact with microcontrollers or even full computers such as the Raspberry Pi. The main focus of the Blynk platform is to make it easy to develop mobile phone applications. In this work, the Blynk app is used to access the accelerometer sensor of the mobile phone to detect the hand gesture.

Python:

Python is a programming language that aims to let both new and experienced programmers convert ideas into code easily. It is well supported in the area of machine learning, which is why many developers use Python for Computer Vision (CV). CV allows computers to identify objects in digital images or videos. Implementing CV in Python allows developers to automate tasks that involve visual processing. While other programming languages support CV, Python is among the most widely used for this purpose.

VNC Viewer:

Virtual Network Computing (VNC) is platform independent. There are clients and servers for many GUI-based operating systems and for Java. Multiple clients may connect to a VNC server at the same time. Popular uses for this technology include remote technical support and accessing files on one's work computer from one's home computer, or vice versa. Raspberry Pi comes with VNC Server and VNC Viewer installed.

This section describes the hardware implementation of the proposed work.

Hand gesture detection: A Micro-Electro-Mechanical Systems (MEMS) accelerometer consists of a micro-machined structure built on top of a silicon wafer. This structure is suspended by polysilicon springs, which allow it to deflect when acceleration is applied along a particular axis. The deflection changes the capacitance between the fixed plates and the plates attached to the suspended structure. This change in capacitance is proportional to the acceleration along that axis. The sensor processes this change in capacitance and converts it into an analog output voltage. The accelerometer module is a three-axis analog accelerometer IC, which outputs the X, Y and Z accelerations as analog voltages. By measuring the acceleration due to gravity, an accelerometer can determine the angle at which it is tilted with respect to the earth. By sensing dynamic acceleration, the accelerometer can determine how fast and in what direction the device is moving.
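The tilt calculation described above can be illustrated with a minimal Python sketch. It assumes a static reading (gravity only) and a particular axis convention; the function name `tilt_angles` and the choice of which axis corresponds to pitch or roll are illustrative assumptions, not taken from the implementation described here.

```python
import math

def tilt_angles(ax, ay, az):
    """Return (pitch, roll) in degrees from one static 3-axis reading.

    Assumes the only acceleration present is gravity, so the direction
    of the (ax, ay, az) vector gives the orientation of the device.
    The axis-to-angle assignment is an assumed convention.
    """
    pitch = math.degrees(math.atan2(ax, math.sqrt(ay * ay + az * az)))
    roll = math.degrees(math.atan2(ay, math.sqrt(ax * ax + az * az)))
    return pitch, roll

# Device lying flat: gravity falls entirely on the Z axis.
flat = tilt_angles(0.0, 0.0, 9.81)
# Device tipped fully about one axis: gravity falls entirely on the X axis.
tipped = tilt_angles(9.81, 0.0, 0.0)
```

Because only the ratio of the axis values matters, the same function works whether the readings are in m/s², in units of g, or as raw voltages with the zero-g offset removed.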

The accelerometer sensor of the mobile phone is accessed using the Blynk mobile application to sense the movement of the hand. This mobile application acts as the client. The authentication key of the Blynk app is provided to the Raspberry Pi. The Raspberry Pi reads the input from the mobile phone accelerometer. Based on the control signals from the Raspberry Pi, the motors rotate the wheels through the motor driver. The motor driver is connected to the Raspberry Pi using GPIO pins.
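One way to turn tilt readings into movement commands is sketched below. This is a hedged illustration only: the function name `gesture_command`, the 15 degree dead zone and the sign conventions are assumptions, and the way the values arrive from the Blynk app is deliberately not shown.

```python
def gesture_command(pitch_deg, roll_deg, dead_zone_deg=15.0):
    """Map hand tilt to a wheelchair command.

    dead_zone_deg is a hypothetical threshold: tilts smaller than this
    on both axes are treated as "hand held level" and stop the chair.
    Positive pitch is assumed to mean forward, positive roll to mean right.
    """
    if abs(pitch_deg) < dead_zone_deg and abs(roll_deg) < dead_zone_deg:
        return "stop"
    # The axis with the larger tilt decides the direction.
    if abs(pitch_deg) >= abs(roll_deg):
        return "forward" if pitch_deg > 0 else "backward"
    return "right" if roll_deg > 0 else "left"
```

A dead zone of this kind keeps small, unintentional hand movements from moving the wheelchair; the appropriate width would need to be tuned for each user.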

Interfacing Pi Camera with Raspberry Pi: The Pi Camera module is a tiny board that can be interfaced with the Raspberry Pi for capturing pictures and streaming video. The Pi Camera module is attached to the Raspberry Pi through the CSI interface. The CSI bus can handle exceptionally high data rates and is used only to transport pixel data. The camera communicates with the Raspberry Pi processor through the CSI bus, a high-bandwidth link that relays pixel data from the camera to the processor. This bus runs along the ribbon cable that connects the camera board to the Raspberry Pi. The Pi Camera board connects directly to the Raspberry Pi CSI connector. It allows users to capture images with a 5 MP resolution and shoot 1080p HD video at 30 frames per second. After selecting the proper cable, the Pi Camera is connected to the Raspberry Pi board. After booting the Raspberry Pi, open VNC Viewer and click on Raspberry Pi Configuration.

Remotely accessing Raspberry Pi desktop on VNC Viewer: VNC is a tool for accessing the Raspberry Pi graphical desktop remotely. Setting up VNC is easy, but it usually only gives access from another computer that is on the same network as the Raspberry Pi. PiTunnel is a service for remotely accessing the Raspberry Pi and the projects that are built on it. A device monitor and remote terminal are included, and users can also create their own custom tunnels to access services running on the Raspberry Pi.

The first step is to enable VNC Server on the device. The easiest way to do this is as follows:

Open a terminal on the Raspberry Pi or use the PiTunnel remote terminal.

Enter the command sudo raspi-config.

Use the arrow keys to select 'Interfacing Options' and press Enter. Then select the 'VNC' option and press Enter.

Driving the motor: The motor driver is used to control the rotation of the motors through the Raspberry Pi. OpenCV is installed on the Raspberry Pi and the Pi Camera is interfaced with it. The Pi Camera captures real-time images of the person's eye, which are sent to a continuously running OpenCV script. After the image is processed, the command is given to the motor driver to move in the left, right or forward direction. Similarly, the data obtained from the mobile accelerometer is given to the driver to control the movement.
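The translation from a movement command to motor driver inputs can be sketched as a pair of lookup tables. This is a minimal illustration under stated assumptions: the name `l298n_inputs`, the command strings, and the choice of which logic level pair produces clockwise rotation are hypothetical and depend on how the motors are wired. The wheel directions for forward, left and right follow the convention used in this paper (clockwise drives a wheel forward).

```python
# Rotation of (left wheel, right wheel) for each command.
# "cw" = clockwise (wheel drives forward), "ccw" = anticlockwise.
WHEEL_DIRECTIONS = {
    "forward":  ("cw", "cw"),
    "backward": ("ccw", "ccw"),
    "left":     ("ccw", "cw"),
    "right":    ("cw", "ccw"),
    "stop":     ("off", "off"),
}

# Logic levels on one H-bridge input pair for each rotation.
# Which pair gives clockwise rotation depends on wiring (assumption).
INPUT_LEVELS = {"cw": (1, 0), "ccw": (0, 1), "off": (0, 0)}

def l298n_inputs(command):
    """Return the four input levels (IN1, IN2, IN3, IN4) for a command."""
    left, right = WHEEL_DIRECTIONS[command]
    return INPUT_LEVELS[left] + INPUT_LEVELS[right]
```

On the Raspberry Pi, each of the four returned levels would be written to the GPIO pin wired to the corresponding driver input; the pin numbers are installation specific and are not shown here.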

This section describes the software implementation of the proposed work.

Eye movement detection: The proposed system consists of two main modules, namely Eye Blink Detection and Eye Motion Detection. The algorithm first detects the face using the facial landmark detector in Dlib, then detects the eye region and draws a bounding box around the localized eye coordinates. The eye region is then scaled and converted to grayscale. The system monitors the movement of the eye towards the left or right, or detects blinks. The contour lines are then detected, with a cross mark placed on the iris of the eye. The output of these algorithms can be used as the input parameters for the required computer interface. In order to detect the motion of the eye, face landmark detection is needed to extract the eye region.

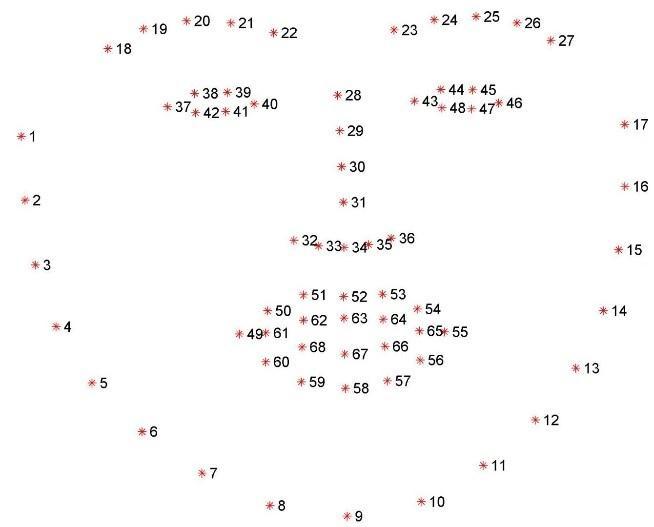

a. Face detection: The facial key point detector takes a rectangle object of the Dlib module as input, which is simply the coordinates of the face. To find faces, Dlib's built-in frontal face detector is used. Initially, "dlib.get_frontal_face_detector()" is used to detect the four corner coordinates of the face. It is a pre-trained model which makes use of Histogram of Oriented Gradients (HOG) and Linear SVM techniques. HOG is used primarily as a feature descriptor for object detection; it provides information about the orientation and direction of the pixel intensity gradients. The Linear SVM is used to classify image regions as face or non-face. Dlib combines both of these methods for face detection. Fig 2 is obtained by joining the coordinates returned by the function.

b. Eye detection: The next task is to detect the eyes. For this, the trained model file "shape_predictor_68_face_landmarks.dat" is passed to the dlib.shape_predictor() function, which returns 68 points on the face. The region of interest is the points from 36 to 47, as these points are around the eyes. In this way, the eyes are isolated from the face.
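The isolation of the eye points from the 68 landmarks can be sketched in plain Python. The sketch assumes the landmarks have already been converted to a list of 68 (x, y) tuples; the helper names `eye_points` and `bounding_box` are illustrative, and the left/right naming follows the convention used in this paper.

```python
LEFT_EYE = range(36, 42)    # landmark indices 36..41
RIGHT_EYE = range(42, 48)   # landmark indices 42..47

def eye_points(landmarks, indices):
    """Select the (x, y) points of one eye from the 68 face landmarks."""
    return [landmarks[i] for i in indices]

def bounding_box(points):
    """Return (x_min, y_min, x_max, y_max) enclosing the given points."""
    xs = [x for x, _ in points]
    ys = [y for _, y in points]
    return min(xs), min(ys), max(xs), max(ys)

# Synthetic landmarks used only to exercise the helpers.
demo_landmarks = [(i, 2 * i) for i in range(68)]
demo_box = bounding_box(eye_points(demo_landmarks, LEFT_EYE))
```

The bounding box gives the top-left and bottom-right corners that can be used to crop the eye region from the frame before further processing.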

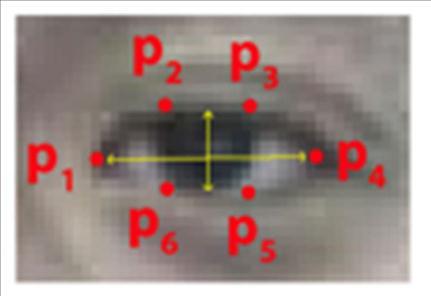

c. Eye blink detection: Blinks can be detected with the help of the Eye Aspect Ratio (EAR).

In this algorithm, a detected blink is classified as either a voluntary eye blink or an involuntary eye blink. The duration of a voluntary eye blink is set to be larger than 250 ms. The EAR is the parameter used to detect these blinks. The EAR is approximately constant when the eyes are open, but drops quickly when the eyes are closed. The program can determine that a person's eyes are closed when the aspect ratio of the eye falls below a certain threshold.
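A minimal sketch of the EAR computation and the blink classification follows. It uses the widely used six-landmark definition of EAR, the sum of the two vertical eyelid distances divided by twice the horizontal eye width; whether the implementation here uses exactly this form is an assumption. The function names and the closed-eye threshold of 0.2 are hypothetical, while the 250 ms limit comes from the text above.

```python
import math

def eye_aspect_ratio(eye):
    """eye: six (x, y) points ordered p1..p6 around one eye
    (outer corner, two upper-lid points, inner corner, two lower-lid points)."""
    p1, p2, p3, p4, p5, p6 = eye
    vertical = math.dist(p2, p6) + math.dist(p3, p5)
    horizontal = math.dist(p1, p4)
    return vertical / (2.0 * horizontal)

def is_closed(ear, threshold=0.2):
    """threshold is an assumed value; it must be tuned per user and camera."""
    return ear < threshold

def classify_blink(closed_duration_ms, voluntary_min_ms=250):
    """Blinks longer than 250 ms are treated as voluntary."""
    return "voluntary" if closed_duration_ms > voluntary_min_ms else "involuntary"

# Synthetic eye shapes used only to exercise the functions.
open_eye = [(0, 0), (1, 1), (2, 1), (3, 0), (2, -1), (1, -1)]
shut_eye = [(0, 0), (1, 0), (2, 0), (3, 0), (2, 0), (1, 0)]
```

Only voluntary blinks would be passed on as start or stop commands, so that normal involuntary blinking does not affect the wheelchair.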


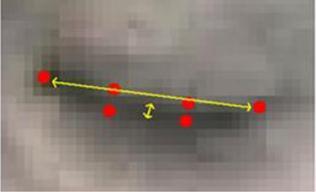

d. Eye motion detection: The region of the left eye corresponds to the landmarks with indices 36, 37, 38, 39, 40 and 41. The region of the right eye corresponds to the landmarks with indices 42, 43, 44, 45, 46 and 47. The procedure is described for the left eye; the same procedure is followed for the right eye. Having obtained the coordinates of the left eye, a mask is created that accurately extracts the inside of the eye and excludes everything else. A rectangular region is then cut from the image using the extreme points of the eye (top left and bottom right). To measure how much of the sclera is visible, the eye region is converted to gray levels, a threshold is applied, and the white pixels are counted. The white pixels in the left and right halves of the eye are counted separately, and their ratio gives the gaze ratio, which indicates where a particular eye is looking. If the sclera is more visible on the left, the eye is looking to the right. Usually both eyes look in the same direction, so the gaze of both eyes is determined in order to recognize the gaze direction reliably. When the user looks to the right, the pupil and iris move to the right side of the eye, and the rest of the eye is completely white. The side containing the iris and pupil is not completely white, so it is easy to determine where the eye is looking.

If the user looks to the other side, the opposite is true. When the user looks straight ahead, the white portions on the left and right are balanced. The eye area is extracted and thresholded to separate the iris and pupil from the white of the eye. In this way, the eye movements are tracked. Based on this, the system provides instructions for wheelchair movement. The system works according to the position of the pupil and moves the wheelchair left, right or forward. When the pupil moves to the left, the motors turn the wheelchair to the left, and when the pupil moves to the right, the motors turn it to the right. If the pupil is in the middle, the motors move the wheelchair forward. If a problem is detected, the system stops. Eye blink logic is used to start and stop the wheelchair. The Pi Camera is installed in front of the user. The distance between the eyes and the camera is fixed and must be in the range of 15 to 20 cm.
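The gaze ratio and its mapping to a command can be sketched as follows. The sketch assumes the eye region has already been thresholded into a 2D list of 0/1 values, with 1 marking a white (sclera) pixel; the function names, the +1 smoothing term and the 0.7 and 1.5 decision limits are illustrative assumptions that would need tuning on real images.

```python
def gaze_ratio(binary_eye):
    """Ratio of white pixels in the left half to white pixels in the right half."""
    width = len(binary_eye[0])
    half = width // 2
    left_white = sum(sum(row[:half]) for row in binary_eye)
    right_white = sum(sum(row[half:]) for row in binary_eye)
    # Adding 1 to both counts avoids division by zero when one half
    # contains no white pixels (an assumed smoothing choice).
    return (left_white + 1) / (right_white + 1)

def gaze_command(ratio, low=0.7, high=1.5):
    """More sclera on the left means the eye looks right, and vice versa."""
    if ratio >= high:
        return "right"
    if ratio <= low:
        return "left"
    return "forward"

# Synthetic thresholded eye regions used only to exercise the functions.
looking_right = [[1, 1, 1, 0, 0, 0]] * 3
looking_left = [[0, 0, 0, 1, 1, 1]] * 3
looking_centre = [[1, 0, 0, 0, 0, 1]] * 3
```

Computing the ratio for both eyes and combining the two values is one way to make the decision less sensitive to noise in a single eye region.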

The proposed idea was implemented successfully and the system fulfilled the requirements. In the hand gesture detection part, the accelerometer sensor of the mobile phone detected the tilt angle of the hand movement through the Blynk app and sent the resulting axis values to the Raspberry Pi. The Raspberry Pi then sent a control signal to the motor driver to rotate the wheels according to the direction of the hand gesture. The bot moves towards the left if the hand is tilted towards the left. Similarly, if the hand is tilted to the right, the bot moves towards the right. If the hand is tilted forward or backward, the bot moves in the respective direction.

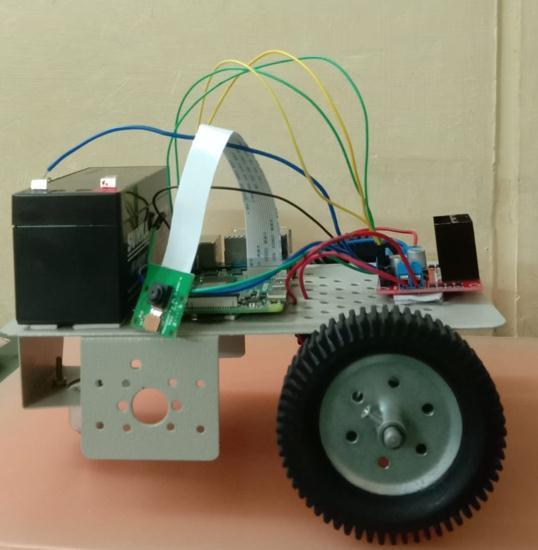

The side view of the hardware implementation of the proposed work is shown in Fig 5.

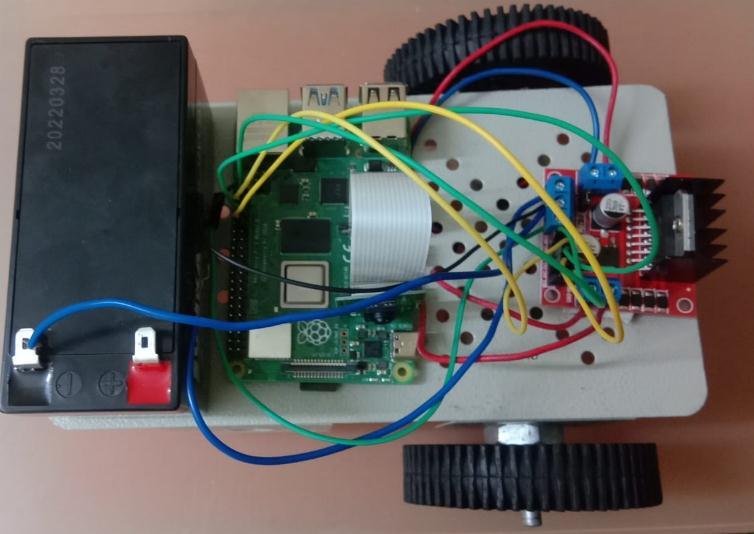

The top view of the hardware implementation of the proposed work is shown in Fig 6.

Fig 6: Top view of the hardware design


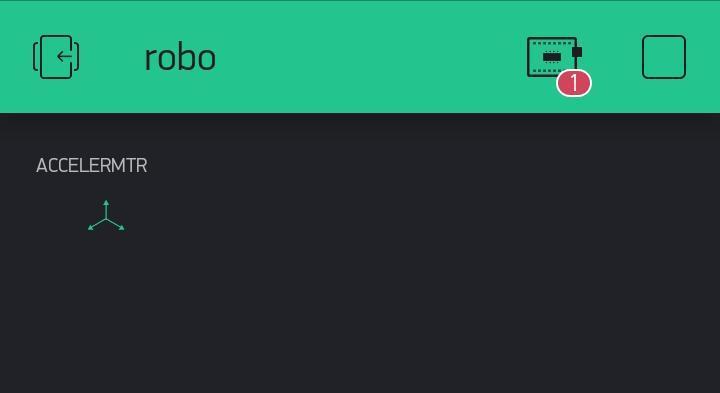

The accelerometer is added to the Blynk app. The created project is shown in Fig 7.

The detection from the Pi Camera of the person looking towards the left is shown in Fig 10. This message is passed to the motor driver, which rotates the left wheel in the anticlockwise direction and the right wheel in the clockwise direction to move towards the left.

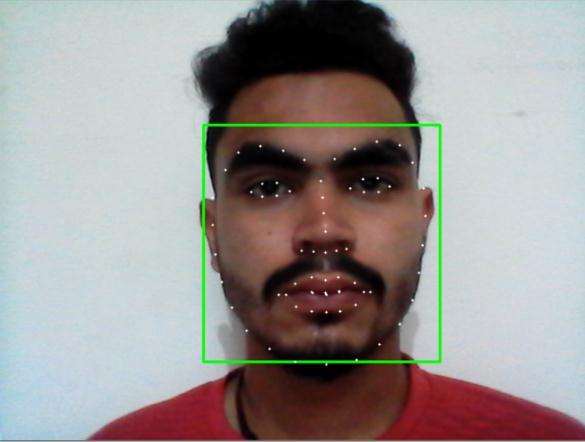

The images below show the detection of eyeball movement, which is used to control the rotation of the motors.

The facial landmarks shown in Fig 8 are used to detect the face region and later to extract the eye region.

The detection from the Pi Camera of the person looking towards the right is shown in Fig 11. This message is passed to the motor driver, which rotates the right wheel in the anticlockwise direction and the left wheel in the clockwise direction to move towards the right.

The detection from the Pi Camera of the person looking straight ahead is shown in Fig 9. This control signal is provided to the motor driver to rotate the wheels in the forward direction, i.e., in the clockwise direction.


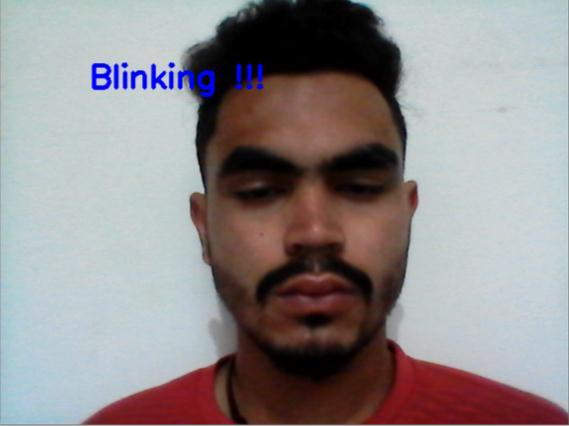

The detection from the Pi Camera of the person blinking his eye is shown in Fig 12. This message is passed to the motor driver to start or stop the wheel rotation.


Fig 12: Person blinked his eye

The prototype of the wheelchair was designed and implemented. The bot moved successfully in the desired direction based on the hand gesture detected by the accelerometer sensor of the mobile phone through the Blynk app. Based on the movement of the hand, control signals were passed through the motor driver to rotate the wheels in either the clockwise or the anticlockwise direction, and so to move in the forward, backward, left or right direction, or to stop.

The eye movement was successfully detected by the Pi Camera, and the respective control signals were provided by the Raspberry Pi to move the bot in the respective directions. Therefore, all the objectives of the proposed project were fulfilled and implemented successfully.


© 2022, IRJET | Impact Factor value: 7.529 | ISO 9001:2008 Certified Journal