International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 09 Issue: 07 | July 2022 www.irjet.net p-ISSN: 2395-0072

Department of Electronics and Communication Engineering, Velammal Engineering College, Chennai 600066, Tamil Nadu, India.

Abstract: A large number of blind and visually impaired people around the world regularly need assistance, and many find it difficult to leave their homes without help. The Artificial Intelligence Based Smart Navigation System is a device intended to guide visually impaired people by recognizing objects and describing them to the user as an audio signal. This reduces human effort and gives the user a better understanding of the surroundings. It also gives blind people the chance to move from one place to another without needing help from others. The device can likewise be used in old age homes, where elderly residents have difficulty with everyday activities because of diminished vision. In this work, a system is implemented that navigates the user along his or her way. The object in front of the visually impaired person is recognized using image recognition with artificial intelligence, and the object type is conveyed through earphones or headphones. This helps the user walk without difficulty.

Key Words: Artificial Intelligence, Visually Impaired, Blind, Obstacle Detection, Recognition, Accuracy.

According to the World Health Organization (WHO), over 1.3 billion people across the globe live with some form of vision impairment, of whom about 36 million are blind. India, the second most populous country in the world, accounts for about 30% of the total blind population. Although many missions are under way to treat these people, it has been hard to meet every requirement. It is truly challenging for blind people to move about freely in the outside world. People with visual impairment constantly need help with their daily routines. This can range from depending on others for basic needs to using various Electronic Travel Aid (ETA) assistive devices whenever needed. They also use white canes and guide dogs. However, the restriction on guide dogs in certain places and the short range of white canes are among their disadvantages. Most ETAs have the disadvantages of being unreasonably expensive and inefficient. Keeping in mind the drawbacks of the conventional techniques for helping visually impaired and blind people, we chose to design and build our system with a novel approach.

This era of advanced technologies such as Artificial Intelligence and Machine Learning, combined with appropriate hardware, has made the lives of visually impaired people simpler and safer. In recent years, Artificial Intelligence has been widely used in areas ranging from scientific research and automated vehicles to national defence and space exploration. It is the need and obligation of researchers to create equipment that lets blind people walk around any area with the guidance of a smart navigation system built on emerging technologies such as Artificial Intelligence and Machine Learning. AI has gained great importance because of the huge amount of available data and the ease of computation. Using AI, it is feasible to make these people's lives a lot easier. The objective is to give them an "optional sight" until adequate resources are available to treat them. People with untreatable visual impairment can also use the system to make their everyday tasks less difficult.

In this paper, we propose a novel smart navigation system for blind and visually impaired individuals. Some of the unique features of the system include:

1) A real-time, camera-based system that is simple in design and reduces cost by minimizing the number of sensors.

2) A compact, low-power design with an integrated voice alert system for outdoor and indoor navigation.

3) Processing of a complex algorithm on a low-end configuration.

V. Kunta et al. [1] proposed a system that makes use of the Internet of Things (IoT) to create a bridge between the environment and the blind. Many sensors are used to detect obstacles, damp floors, and staircases, among many other things. The presented prototype is a basic and inexpensive smart blind stick fitted with a variety of IoT modules and sensors.

A. A. Diaz Toro et al. [2] presented a methodology for building a vision-based wearable system that assists visually impaired people with navigation in indoor environments that are new to them. The proposed system helps the person in "purposeful navigation". The system detects obstacles, walkable spaces and other objects such as computers, doors, staircases and chairs.

M. M. Soto-Cordova et al. [3] presented the design and implementation of an aid for the blind. It is implemented using an Arduino, ultrasonic sensors and warning devices for obstacle detection and notification based on the distance of the obstacle.

N. Loganathan et al. [4] presented a solution that implements an ultrasonic sensor in the cane. The device can detect and perceive obstacles at a range of 4 meters. An IR device detects nearby obstacles that approach the blind person. With the help of a buzzer, the radio frequency transmitter and receiver are used to find the precise location of the stick.

J. Ai et al. [5] proposed a device that first acquires image information with a camera and then converts the image to text with image captioning technology. Finally, the text sequence is fed back to the user as a voice signal.

D. Bal et al. [6] introduced a wearable system for blind people that can be used for indoor navigation. It is a lightweight, portable and user-friendly solution that can also be mounted on a jacket. The system uses a Raspberry Pi, vibration motors, a camera module, three ultrasonic sensors, an emergency button, a gyroscope and the Android application 'Blynk'.

M. A. Khan et al. [7] designed an obstacle avoidance architecture with a camera and sensors, as well as advanced image processing algorithms to detect objects. The distance between the obstacle and the user is determined using an ultrasonic sensor and a camera. The system contains an image-to-text converter and audio feedback, as well as integrated reading assistance.

S. Dev et al. [8] demonstrated a smart device built on a Raspberry Pi that can perform tasks such as object detection and navigation and gives audio feedback to notify the user.

D. P. Khairnar et al. [9] proposed a system that combines smart gloves with a smartphone application. The smart glove detects and avoids obstacles and allows visually impaired persons to recognize their surroundings. Various things in the neighbourhood are detected using smartphone-based obstacle and object detection.

Devi et al. [10] proposed a system that performs existing object observation and discovers the actions. The nearby location can be captured and given as a voice command. The proposed system effectively performs live observation of visually impaired and blind people and allows them to move anywhere without any help.

S. Barathi Kanna et al. [11] proposed a solution that allows visually impaired and blind people to "communicate" with their surroundings in the Internet of Things (IoT) world. The prototype comprises an ESP8266, a power source for the development board and coin motors, as well as a smartphone app, making it more accessible to visually impaired people.

K. Patil et al. [12] developed a concept for a wearable device with a virtual assistant that would allow visually impaired and blind persons to accomplish simple tasks without assistance. The system is designed to provide voice-over assistance to visually impaired people who need help with their day-to-day tasks.

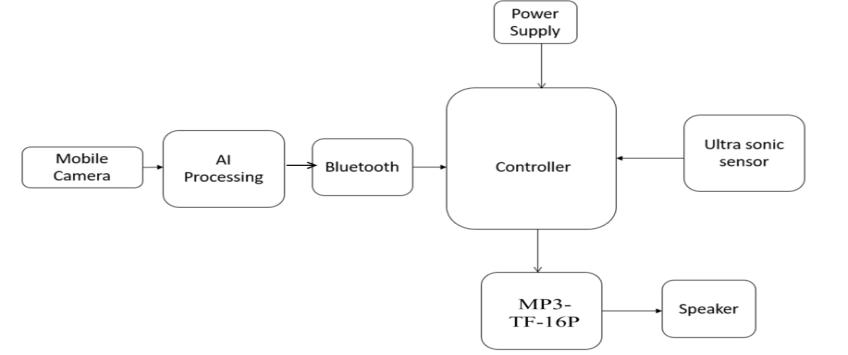

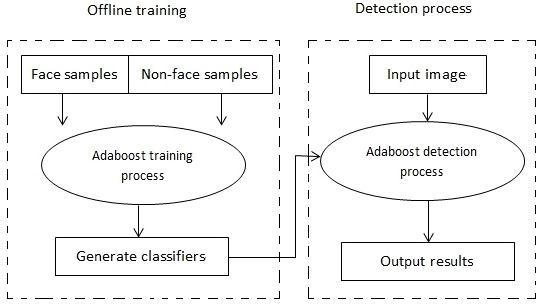

The proposed system consists of a camera, AI processing, a controller and a voice alert. The ultimate goal of this smart navigation system is to detect the obstacles in front of the visually impaired person and to inform them about the object. AI processing is used to train the controller on object images. If any object or obstacle approaches the blind person, the controller alerts the person with a voice message. This makes the blind person more cautious and thereby lowers the possibility of accidents. An automatic switch enabled with a voice assistant is also added to guide them in their private space. A camera is used to capture the indoor and outdoor images that help the smart navigation system detect the obstacle.

The figures show the block diagram and the flowchart of the proposed system.

Fig 1: Proposed block diagram

thesametimestayingawayfromobstacles.Letusconsidera situationinwhichachairispresentinfrontofthevisually impairedperson.Thepersonwantstositandtakeareston thechair.Inthiscase,thechairisnotjustanobstaclebuta usefulobject.WhileusingtheObstacledetectionsystem,the usermaynotknowwhatkindofobstacleispresentinfront ofthembuthastoconfirmitontheirown.Butontheother hand,iftheuserusestheObjectrecognitionsystem,ithelps todeterminetheobjecttobeachairandtheusercanclaima benefit.Itisimportanttoconstructanassistiveframework to detect and determine the objects around a visually impaireduser.

This is an Android application that uses OpenCV libraries for computer vision detection.The ARD object tracker application can detect and track various types of objects from your mobile phone’s camera such as lines, colour blobs, circles, rectangles and also people. Detected object types and screen positions can then be sent to a BluetoothreceiverdevicesuchasHC 05.

If using an appropriate micro controller e.g. Arduino or Raspberry Pi users can analyze the detected objects for furtherprojects.

KeyApplicationFeatures: 1.ColourBlobDetectandTrack

CircleDetectandTrack

LineDetect

PeopleDetectandTrack

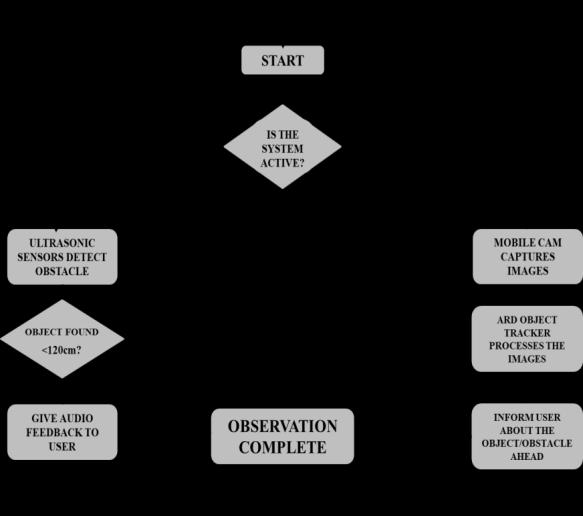

Theflowchartoftheproposedsystemisshownin figure3.Thehardwarepartconsistsofanultrasonicsensor, camera,voicealert,andAIprocessing basedcontrollerunit. These trained data have described the use of ultrasonic sensors integrating it with a controller for detecting the obstacles. The ultrasonic starts sending the signals with minimumdelayandafterthat,thesignalreturnsasanecho tothesensorreceiver,andthecontrollerestimatesthetime ittakestogetthesignalbackfromthesensor.Usingthetime taken, the distance of the object is calculated and is converted into speech and the feedback is given through speakers or headphones. The blind person will then be stoppedfromcollidingwithanyobject.

Thisassistiveframeworkisbuiltbasedontheidea oftheElectronicTravelAid(ETA),whichplanstohelpthe visuallyimpairedandtheblindpeopleinstrollingwhileat

Rectangledetection. 6. Send detected object parameters wirelessly over Bluetooth.
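The echo-timing step described for the ultrasonic sensor can be illustrated with a short Python sketch. It is not the controller firmware used in the paper: the speed of sound (343 m/s, roughly dry air at 20 °C), the 100 cm alert threshold, the message wording and the function names are all assumptions made for illustration.

```python
SPEED_OF_SOUND_M_PER_S = 343.0  # assumed value: dry air at about 20 degrees C


def echo_time_to_distance_cm(echo_time_s):
    """Convert a round-trip echo time in seconds to a one-way distance in cm."""
    if echo_time_s < 0:
        raise ValueError("echo time must be non-negative")
    # The pulse travels to the obstacle and back, so only half the path counts.
    return SPEED_OF_SOUND_M_PER_S * echo_time_s / 2.0 * 100.0


def describe_obstacle(echo_time_s, alert_threshold_cm=100.0):
    """Return the text to be spoken, or None if the obstacle is far away.

    alert_threshold_cm is a hypothetical setting, not a value from the paper.
    """
    distance_cm = echo_time_to_distance_cm(echo_time_s)
    if distance_cm <= alert_threshold_cm:
        return f"Obstacle ahead at {distance_cm:.0f} centimetres"
    return None
```

In a complete system the returned string would be handed to the voice alert stage; here it is only returned so that the calculation can be checked in isolation.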

To connect an appropriate Bluetooth receiver, click the "Connect Bluetooth" button. If there is no button displayed, you will need to double or single click the preview screen to bring up the options. The list shown provides the existing paired devices for your phone. If there are no items on the list, you will need to first pair your device within the Android Bluetooth settings options. Clicking on an existing paired device will attempt a connection to that device. If successful, you will be taken back to the camera preview screen. Any errors will be displayed if not successful.

Configuration Settings:

There are several configuration settings that control how the OpenCV computer vision libraries detect objects. These can be set by first clicking the "Settings" button. If there is no button showing on the preview camera screen, users will need to double or single click the preview screen to access the settings page.

Video Scale:

This controls the image size that will be used for all image processing operations. A scale factor of 1 will use the phone's default image size, capped to a maximum of 1280x720. If your phone's CPU is not fast, it is recommended to set this value to 2. Higher scale factors will result in faster image processing operations but can potentially result in instability or no object detections.

Each tracked object is given a unique id. Tracked objects can, however, be missed on certain image frame updates and consequently reappear on subsequent frames. To avoid issuing new ids in that case, each tracked object is given a life persistence value. Increasing this configuration parameter keeps objects tracked for longer in the memory buffers.
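The life persistence idea can be sketched as follows. This is an illustrative model only, not the application's actual tracking code: the nearest-centroid matching rule, the `max_distance` radius and the class names `Track` and `PersistentTracker` are assumptions.

```python
from dataclasses import dataclass
from itertools import count
from math import hypot


@dataclass
class Track:
    track_id: int
    x: float
    y: float
    life: int  # frames the track may remain unseen before it is dropped


class PersistentTracker:
    def __init__(self, persistence=5, max_distance=50.0):
        self.persistence = persistence    # hypothetical "life persistence" setting
        self.max_distance = max_distance  # assumed matching radius in pixels
        self.tracks = {}
        self._next_id = count(1)

    def update(self, detections):
        """Match (x, y) detections to tracks and return their ids in order."""
        unmatched = set(self.tracks)
        assigned = []
        for x, y in detections:
            def distance(tid):
                return hypot(self.tracks[tid].x - x, self.tracks[tid].y - y)

            nearest = min(unmatched, key=distance, default=None)
            if nearest is not None and distance(nearest) <= self.max_distance:
                # Seen again: refresh the position and restore the full life.
                track = self.tracks[nearest]
                track.x, track.y, track.life = x, y, self.persistence
                unmatched.discard(nearest)
            else:
                track = Track(next(self._next_id), x, y, self.persistence)
                self.tracks[track.track_id] = track
            assigned.append(track.track_id)
        # Tracks missed in this frame lose one unit of life and expire at zero.
        for tid in unmatched:
            self.tracks[tid].life -= 1
            if self.tracks[tid].life <= 0:
                del self.tracks[tid]
        return assigned
```

With `persistence=2`, an object that disappears for one frame keeps its id when it reappears, whereas an object missing for two consecutive frames is dropped and receives a new id on return.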

BT Serial Tx Buffer Size:

This configuration parameter controls how many detected object items are pushed onto the internal communication buffer for Bluetooth communication. The internal communication buffer is sampled 100 times a second (every 10 ms). This should be sufficient to clear the buffer.

Bluetooth Data Transmit Formats:

All data communication is sent as ASCII text in the following format:

"Object Type":"ID":"XPos","YPos","Width","Height"
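On the receiving side, a message in the ASCII format above could be decoded along the following lines. This is a sketch under assumptions: the source does not say whether the double quotes are literal characters or only mark field names, so the parser accepts both; integer coordinates, the example type name `CIRCLE`, and the names `DetectedObject` and `parse_detection` are hypothetical.

```python
from typing import NamedTuple


class DetectedObject(NamedTuple):
    object_type: str
    object_id: int
    x: int
    y: int
    width: int
    height: int


def parse_detection(message):
    """Parse one 'Type:ID:X,Y,W,H' message into a DetectedObject.

    Raises ValueError if the message does not have the expected fields.
    """
    # Drop surrounding whitespace and any literal double quotes.
    cleaned = message.strip().replace('"', "")
    object_type, object_id, geometry = cleaned.split(":")
    x, y, width, height = (int(value) for value in geometry.split(","))
    return DetectedObject(object_type, int(object_id), x, y, width, height)
```

For example, `parse_detection("CIRCLE:3:120,85,40,40")` yields a record whose `object_type` is `"CIRCLE"` and whose `width` is 40; a malformed message raises `ValueError`, which the caller can use to discard corrupted serial data.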

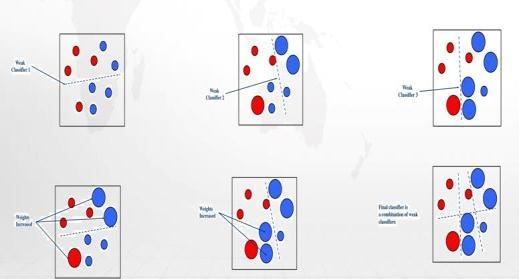

AdaBoost is an iterative algorithm that adds a new weak classifier in each round until a desired low error rate is reached. A weight is assigned to each training sample, indicating the probability of it being selected into the training set of a classifier. If a sample point is correctly classified, its weight is reduced when building the next training set. On the other hand, if a sample point is misclassified, its weight is increased. In this implementation, the samples of each class start with the same initial weight; sample points are chosen based on these weights for the k-th iteration, and the classifier is then trained. Based on this classifier, the weight of each incorrectly classified sample is increased, while the weight of each correctly classified sample is reduced. The modified sample weights are then used to train the next classifier. This is how the entire training procedure is carried out.

Description of the AdaBoost algorithm:

(i) Sample: Given the following training sample set:

$$(x_1, y_1), (x_2, y_2), \ldots, (x_n, y_n), \quad x_i \in X,\; y_i \in Y = \{-1, +1\} \tag{1}$$

(ii) Initialize the sample weights:

For positive samples, use the formula

$$W_1(i) = \frac{1}{2m} \tag{2}$$

where $m$ represents the total number of positive samples.

For negative samples, use the formula

$$W_1(i) = \frac{1}{2l} \tag{3}$$

where $l$ represents the total number of negative samples.

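(iii) Weak classifiers are trained by iterating over $t = 1, \ldots, T$, where $T$ represents the number of training rounds.

(iv) The weight of the weak classifier selected in round $t$ is, in the standard AdaBoost form,

$$\alpha_t = \frac{1}{2} \ln\frac{1 - \varepsilon_t}{\varepsilon_t} \tag{4}$$

where $\alpha_t$ represents the weight of the weak classifier and $\varepsilon_t$ represents the classifier's lowest error rate at the current level.

(v) If the sample is correctly identified, revise the weights with

$$W_{t+1}(i) = W_t(i)\,\exp(-\alpha_t) \tag{5}$$

If the sample is incorrectly classified,

$$W_{t+1}(i) = W_t(i)\,\exp(\alpha_t) \tag{6}$$

(vi) Final strong classifier = sum of the weak classifiers, each multiplied by its individual weight.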
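Steps (i) to (vi) can be sketched in Python. This is a minimal illustration, not the MATLAB implementation evaluated in the paper: it assumes one-dimensional inputs, threshold stumps as weak classifiers, and the standard AdaBoost classifier weight, half the natural logarithm of (1 - error) / error; the names `train_adaboost` and `predict` are hypothetical.

```python
import math


def train_adaboost(xs, ys, rounds):
    """Train AdaBoost with threshold stumps; labels are -1 or +1 (both present)."""
    m = sum(1 for y in ys if y == +1)  # number of positive samples
    l = len(ys) - m                    # number of negative samples
    # Step (ii): positives start at 1/(2m), negatives at 1/(2l).
    weights = [1 / (2 * m) if y == +1 else 1 / (2 * l) for y in ys]
    thresholds = sorted(set(xs))
    ensemble = []  # (alpha, threshold, polarity) for each round
    for _ in range(rounds):
        total = sum(weights)
        weights = [w / total for w in weights]  # keep weights a distribution
        # Step (iii): choose the stump with the lowest weighted error.
        best = None
        for thr in thresholds:
            for polarity in (+1, -1):
                preds = [polarity if x >= thr else -polarity for x in xs]
                err = sum(w for w, p, y in zip(weights, preds, ys) if p != y)
                if best is None or err < best[0]:
                    best = (err, thr, polarity, preds)
        err, thr, polarity, preds = best
        err = min(max(err, 1e-10), 1 - 1e-10)  # guard against log(0)
        # Step (iv): classifier weight from its error (standard form, assumed).
        alpha = 0.5 * math.log((1 - err) / err)
        ensemble.append((alpha, thr, polarity))
        # Step (v): shrink weights of correct samples, grow those of mistakes.
        weights = [w * math.exp(-alpha if p == y else alpha)
                   for w, p, y in zip(weights, preds, ys)]
    return ensemble


def predict(ensemble, x):
    """Step (vi): sign of the alpha-weighted vote of all stumps."""
    score = sum(a * (pol if x >= thr else -pol) for a, thr, pol in ensemble)
    return 1 if score >= 0 else -1
```

On the toy set `xs = [1, 2, 3, 4, 5, 6]` with labels `[+1, +1, -1, -1, +1, +1]`, no single threshold separates the classes, but three boosting rounds classify every sample correctly, which illustrates how reweighting lets weak classifiers combine into a stronger one.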

The AdaBoost algorithm offers a framework for multiple approaches to generating sub-classifiers, and it is a simple classifier with excellent accuracy.

The estimated findings are simple to understand, and constructing a weak classifier is simple.

There is no need for feature selection.

It is comparatively resistant to overfitting.

In the traditional AdaBoost approach, the upper bound of the least error rate on the training set is used as the criterion for setting parameters. However, this does not guarantee that the error rate will be reduced. In the improved AdaBoost approach, therefore, the weak classifier is no longer selected solely by the lowest error rate in each training round. To tackle this problem, a weighting parameter of the weak classifier can be added to boost the performance of the improved AdaBoost algorithm. Furthermore, if the training set contains difficult samples and noise, the weak classifier's ability to generalize will deteriorate as the number of iterations grows. This condition is termed "degeneration". The weight expansion must be limited in this circumstance.

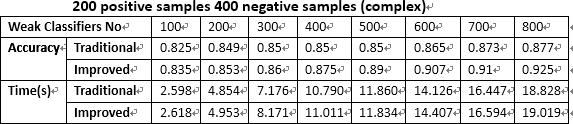

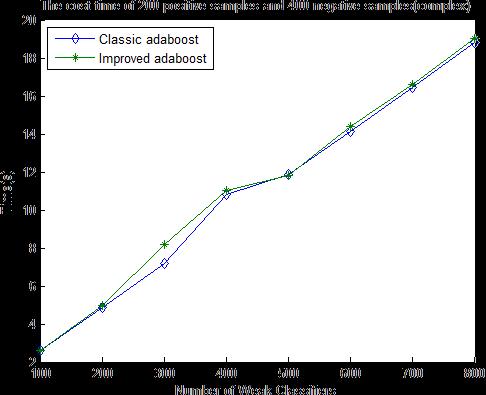

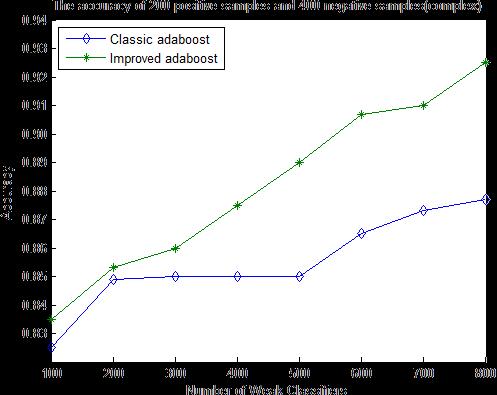

MATLAB was used to carry out the simulation. The first test was carried out using a training set of 600 samples, comprising 400 negative and 200 positive samples.

In Chart 1, the accuracy of the traditional AdaBoost algorithm is represented by the blue line and that of the improved AdaBoost algorithm by the green line. For 100 weak classifiers, the accuracy of the traditional AdaBoost algorithm is 82.50%, whereas that of the improved AdaBoost algorithm is 83.50%. The cost time is 2.5980 seconds for the traditional AdaBoost algorithm and 2.6180 seconds for the improved AdaBoost algorithm, so the cost time of the two algorithms is similar.

Table 1: Accuracy and Time of 600 training sets

It is also seen from the graph that the accuracy of the improved AdaBoost algorithm increases swiftly, whereas the accuracy of the traditional AdaBoost algorithm remains unchanged between 200 and 500 weak classifiers. The greatest difference in accuracy is seen at 500 weak classifiers, where the accuracy of the traditional AdaBoost algorithm is 4% lower than that of the improved AdaBoost algorithm. It can be observed that both algorithms have almost the same cost time for a given number of weak classifiers. In the traditional AdaBoost algorithm, about 700 weak classifiers have to be trained and the cost time is 16.4470 seconds; in the improved AdaBoost algorithm, only 400 weak classifiers need to be trained and the cost time is 8.1710 seconds, which saves about 8 seconds.
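As a quick check of the reported saving, using only the two cost times quoted above:

$$\Delta t = 16.4470\,\text{s} - 8.1710\,\text{s} = 8.2760\,\text{s}, \qquad \frac{\Delta t}{16.4470\,\text{s}} \approx 0.503$$

That is, the improved algorithm needs roughly half the training time of the traditional one in this comparison.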

Fig 5: AdaBoost training and detection process

The table below shows the performance comparison of different AI algorithms used for different objects. The classifier based on AdaBoost produces more accurate results than the other classifiers.

Table-2: Performance comparison of different AI algorithms

| S.no | Algorithm | Recognition accuracy (%) |
|---|---|---|
| 1 | Random forest | 89 |

The recognition accuracy comparison is shown as a bar graph in the following figure.

Chart 3: Recognition accuracy comparison

Arduino Uno controller

It is a microcontroller board based on the ATmega328 that has 14 digital input/output pins and 6 analog inputs (20 I/O pins in total), an ICSP header, a USB port, a power jack, a reset button and a 16 MHz resonator.

Fig-6: Arduino Uno Controller

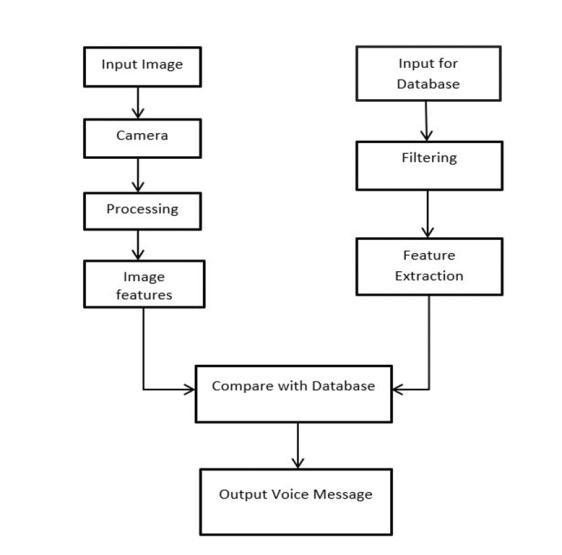

A mobile camera or a webcam is used to record images of the obstacles that appear in the path of the blind person. For image detection, a clustering technique is used, with the image of the obstacle as input. To identify the input, it is compared with the images stored in the database. If the input image matches an entry in the database, the output is given in the form of an audio signal.

© 2022, IRJET | Impact Factor value: 7.529 | ISO 9001:2008 Certified Journal

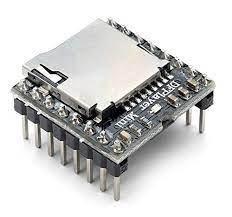

The DFPlayer Mini is an easy-to-use, stable, reliable and compact MP3 module that can be connected directly to a speaker. It integrates hardware decoding of MP3, WAV, and WMA. TF cards with FAT16 and FAT32 file systems are also supported by this module.

Fig-10

The speaker module that is used has good performance and is generally used for all types of audio projects. 8 Ohm speakers consume less power compared to 4 Ohm speakers. They also emit less heat from the voice coil, deliver high quality sound and can handle about 200 watts of power.

Fig 11: Speaker

The HC-05 Bluetooth module is used to connect the Arduino to other devices. It provides a transparent wireless serial connection. It operates on the 2.45 GHz frequency band and requires a power source of 4-6 V. The data transfer rate varies from 1 Mbps to 10 Mbps within a 10 meter range.

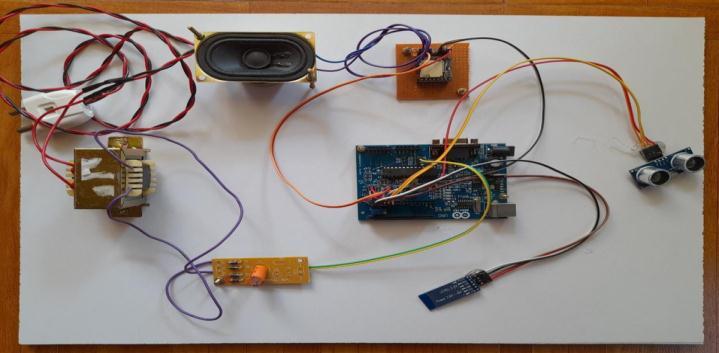

The proposed system is implemented using an Arduino controller. The circuit connection and overall implementation of the proposed model are depicted in the figure below.

The electrical power of the smart navigation system is supplied by a battery. A 9 volt battery is used to power the equipment connected to the system.

Fig 12: Overall implementation

With this smart navigation system, blind people may move indoors or outdoors without any help, as it provides various functions including image processing, obstacle detection, and text to speech. Furthermore, our system does not need any input from the user, as it consists of ultrasonic sensors and camera modules that sense obstacles around the user and provide feedback through an audio device connected to the module. The system operates continually so that the visually impaired user can get updates on obstacles at any point along the way. Consequently, with the aid of our project, blind people could perform their daily tasks comfortably and without trouble.

[1] V. Kunta, C. Tuniki and U. Sairam, "Multi-Functional Blind Stick for Visually Impaired People," 2020 5th International Conference on Communication and Electronics Systems (ICCES), 2020, pp. 895-899.

[2] A. A. Diaz Toro, S. E. Campaña Bastidas and E. F. Caicedo Bravo, "Methodology to Build a Wearable System for Assisting Blind People in Purposeful Navigation," 2020 3rd International Conference on Information and Computer Technologies (ICICT), 2020, pp. 205-212.

[3] M. M. Soto-Cordova, F. Criollo-Sánchez, C. Mosquera-Sánchez and A. Mujaico-Mariano, "Prototype of an Audible Tool for Blind Based on Microcontroller," 2020 IEEE Colombian Conference on Communications and Computing (COLCOM), 2020, pp. 1-4.

[4] N. Loganathan, K. Lakshmi, N. Chandrasekaran, S. R. Cibisakaravarthi, R. H. Priyanga and K. H. Varthini, "Smart Stick for Blind People," 2020 6th International Conference on Advanced Computing and Communication Systems (ICACCS), 2020, pp. 65-67.

[5] J. Ai et al., "Wearable Visually Assistive Device for Blind People to Appreciate Real-world Scene and Screen Image," 2020 IEEE International Conference on Visual Communications and Image Processing (VCIP), 2020, pp. 258-258.

[6] D. Bal, M. M. Islam Tusher, M. Rahman and M. S. Rahman Saymon, "NAVIX: A Wearable Navigation System for Visually Impaired Persons," 2020 2nd International Conference on Sustainable Technologies for Industry 4.0 (STI), 2020, pp. 1-4.

[7] M. A. Khan, P. Paul, M. Rashid, M. Hossain and M. A. R. Ahad, "An AI-Based Visual Aid With Integrated Reading Assistant for the Completely Blind," in IEEE Transactions on Human-Machine Systems, vol. 50, no. 6, pp. 507-517, Dec. 2020.

[8] S. Dev, S. Jaiswal, Y. Kokamkar, K. B. Deshpande and K. Upadhyaya, "Voice Based Smart Assistive Device for the Visually Challenged," 2020 International Conference on Convergence to Digital World - Quo Vadis (ICCDW), 2020, pp. 1-5.

[9] D. P. Khairnar, R. B. Karad, A. Kapse, G. Kale and P. Jadhav, "PARTHA: A Visually Impaired Assistance System," 2020 3rd International Conference on Communication System, Computing and IT Applications (CSCITA), 2020, pp. 32-37.

[10] Devi, M. J. Therese and R. S. Ganesh, "Smart Navigation Guidance System for Visually Challenged People," 2020 International Conference on Smart Electronics and Communication (ICOSEC), 2020, pp. 615-619.

[11] S. Barathi Kanna, T. R. Ganesh Kumar, C. Niranjan, S. Prashanth, J. Rolant Gini and M. E. Harikumar, "Low-Cost Smart Navigation System for the Blind," 2021 7th International Conference on Advanced Computing and Communication Systems (ICACCS), 2021, pp. 466-471.

[12] K. Patil, A. Kharat, P. Chaudhary, S. Bidgar and R. Gavhane, "Guidance System for Visually Impaired People," 2021 International Conference on Artificial Intelligence and Smart Systems (ICAIS), 2021, pp. 988-993.

[13] S. Khan, S. Nazir and H. U. Khan, "Analysis of Navigation Assistants for Blind and Visually Impaired People: A Systematic Review," in IEEE Access, vol. 9, pp. 26712-26734, 2021.

[14] S. Malkan, S. Dsouza, S. Dasmohapatra, M. Jain and A. Joshi, "Navigation using Object Detection and Depth Sensing for Blind People," 2021 IEEE 4th International Conference on Computing, Power and Communication Technologies (GUCON), 2021, pp. 1-7.

[15] N. Nagenthiran, P. Priyanha, S. Nirosha, J. Vivek, H. De Silva and D. Sriyaratna, "Machine Learning-Based Smart Shopping for Visually Impaired," 2021 3rd International Conference on Advancements in Computing (ICAC), 2021, pp. 473-478.

[16] N. Bhandary, K. Siddique, A. Adnani, S. Sengupta and S. Patil, "AI-Based Navigator for hassle-free navigation for visually impaired," 2021 International Conference on Computer Communication and Informatics (ICCCI), 2021, pp. 1-6.

[17] D.M.L.V.Dissanayake,R.G.M.D.R.P.Rajapaksha, U. P. Prabhashawara, S. A. D. S. P. Solanga and J. A. D. C. AnuradhaJayakody,"Guide Me:Voiceauthenticatedindoor userguidancesystem,"2021IEEE12thAnnualUbiquitous Computing,Electronics&MobileCommunicationConference (UEMCON),2021,pp.0509 0514.