International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 09 Issue: 07 | July 2022 www.irjet.net p-ISSN: 2395-0072


BHARATH BHARADWAJ B S1, SHASHANK B N2, GAYATHRI S3, N S DHANUSH4, DARSHAN H5

1 Professor, Dept. of Computer Science & Engineering, Maharaja Institute of Technology Thandavapura, Karnataka, India

2-5 Dept. of Computer Science & Engineering, Maharaja Institute of Technology Thandavapura, Karnataka, India

Abstract - Driver drowsiness detection using a webcam is introduced to reduce the number of accidents involving cars, lorries, and trucks. The system recognizes the symptoms of tiredness and warns drivers when they become drowsy. Machine-vision-based, non-intrusive concepts have been used to construct the drowsy driver detection system. To identify driver weariness, the system uses a small camera that is pointed straight at the driver's face and watches the driver's eyes; a warning signal is sent to the driver when weariness is identified. The system locates the edges of the face using the binary version of the image, which narrows the area in which the eyes and mouth are searched for. After the face is located, the eyes and mouth are found by computing the horizontal averages in that area, bearing in mind that the intensity around the eyes changes significantly; these substantial intensity changes pinpoint the eyes. Once the eyes have been identified, their state is determined by measuring the distances between the intensity changes in the eye region. A considerable distance corresponds to eye closure. If the eyes are closed for a frame, a warning signal is issued. Similarly, once the mouth is located, measuring the distances between the intensity changes in the lip area determines whether the mouth is wide open. A small distance corresponds to mouth closure. If the mouth is open for a frame, the system concludes that the driver is yawning, inhaling deeply due to tiredness, and issues a warning signal.

Key Words: Drowsiness, eye aspect ratio, eye blink detection, mouth aspect ratio, mouth yawning detection.

Driver drowsiness is one of the critical causes of most road accidents. Drowsiness threatens road safety and causes severe injuries, sometimes resulting in fatalities and economic losses. Drowsiness implies feeling lethargic, lacking concentration, and having tired eyes while driving. Most accidents in India happen due to the driver's lack of concentration. Due to sleepiness, the driver's performance gradually declines. We created a system that can recognize the driver's tiredness and inform the driver immediately to prevent this. The system uses a camera to collect images as a video stream, detects the face, and locates the eyes. The PERCLOS algorithm is then used to evaluate the eyes for signs of tiredness. Finally, the driver is informed of drowsiness by an alarm system based on the outcome. Different approaches have different advantages and drawbacks.

Current drowsiness detection systems that monitor the driver's condition include electroencephalography (EEG) and electrocardiography (ECG), which measure brain frequency and heart rhythm, respectively. However, these systems are uncomfortable to wear while driving and are unsuitable for driving conditions. A camera mounted in front of the driver is a better option for a sleepiness detection system; however, the physical indicators of drowsiness must be identified before a reliable and effective drowsiness detection algorithm can be developed. The issues that arise when identifying the eye and mouth regions are lighting intensity and whether the driver tilts their face left or right. This project aims to analyze previous research and methods in order to propose a method for detecting sleepiness using video or a webcam. It then develops a system that analyzes the recorded video images and examines each video frame.

The project focuses on the following objectives:

To suggest ways to detect fatigue and drowsiness while driving. To study the eyes and mouth in the video images of participants in the driving simulation experiment conducted by MIROS, which can be used as indicators of fatigue and drowsiness. To investigate the physical changes associated with fatigue and drowsiness. To develop a system that uses eye closure and yawning to detect fatigue and drowsiness.

The basic concept behind a drowsiness detection system is to become familiar with the signs of drowsiness. Drowsiness is determined from these parameters: eye blinks, the area of the pupils detected at the eyes, and yawning. The work then proceeds through data collection and measurement, integration of the chosen methods, coding development and testing, and complete testing and improvement.

© 2022, IRJET | Impact Factor value: 7.529 | ISO 9001:2008 Certified Journal | Page 1819


The Driver Safety ecosystem has to be given to the customers of the automobile industry. With the advent of intelligent automobiles, there is a crucial need for smart utilities that assist the user. Manual seat belts and airbags are more of a precaution than a prevention. Therefore, we try to implement a system that prevents accidents. Previous systems have used only the Eye Aspect Ratio or EEG.

Theproposedsystemmainlyaimsatimplementingabetter safetysystemforcustomers.Thissystemmainlyconsistsof

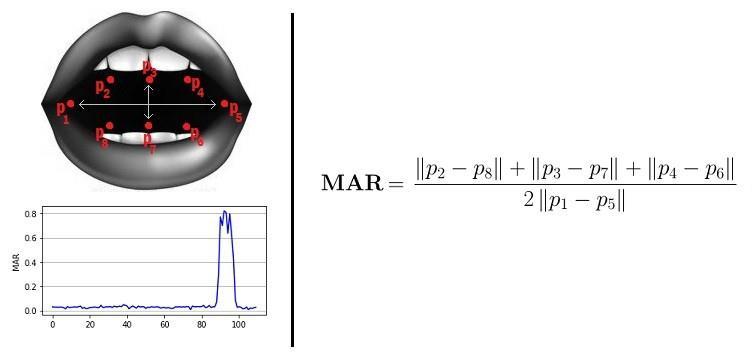

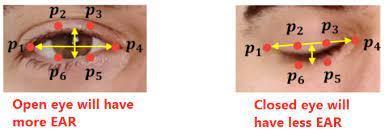

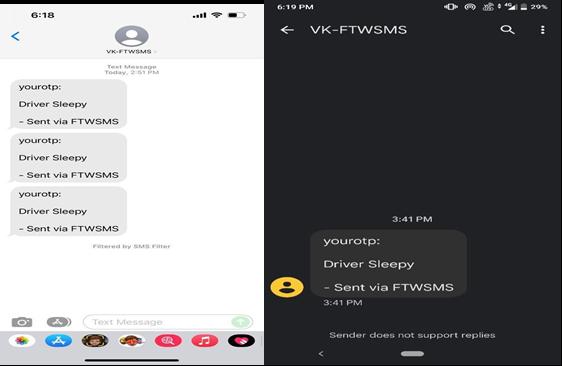

3 components: Face Detection, Feature Extraction, and Classification.Thesystemprovidesabettersolutionforroad safetyforthedrivers.ThissystemusesEyeAspectRatioand MouthAspectRatiotodetectdriverdrowsiness.Thealert message(SMS)senttothegivenemergencyphonenumberis alsoimplementedinthissystem.
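The text does not give formulas for the two ratios, so the following is only a minimal sketch under stated assumptions. The EAR uses the widely used six-point definition (two vertical eyelid distances over twice the horizontal eye width). The MAR shown here, mouth opening height over mouth width, is one plausible definition and is an assumption, as are the function and parameter names.

```python
from math import dist  # Euclidean distance between two points (Python 3.8+)


def eye_aspect_ratio(eye):
    """EAR for six (x, y) eye points ordered p1..p6, with corners at p1 and p4.

    Assumed definition: (|p2 - p6| + |p3 - p5|) / (2 * |p1 - p4|).
    """
    vertical_a = dist(eye[1], eye[5])
    vertical_b = dist(eye[2], eye[4])
    horizontal = dist(eye[0], eye[3])
    return (vertical_a + vertical_b) / (2.0 * horizontal)


def mouth_aspect_ratio(top, bottom, left, right):
    """MAR as mouth opening height over mouth width (assumed definition)."""
    return dist(top, bottom) / dist(left, right)


# Illustrative coordinates only, not measured data.
open_eye = [(0, 0), (1, 1), (2, 1), (3, 0), (2, -1), (1, -1)]
closed_eye = [(0, 0), (1, 0), (2, 0), (3, 0), (2, 0), (1, 0)]
print(eye_aspect_ratio(open_eye) > eye_aspect_ratio(closed_eye))  # True
```

With these definitions, the EAR shrinks toward zero as the eyelids close, while the MAR grows as the mouth opens, which is why the two ratios can be thresholded in opposite directions.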

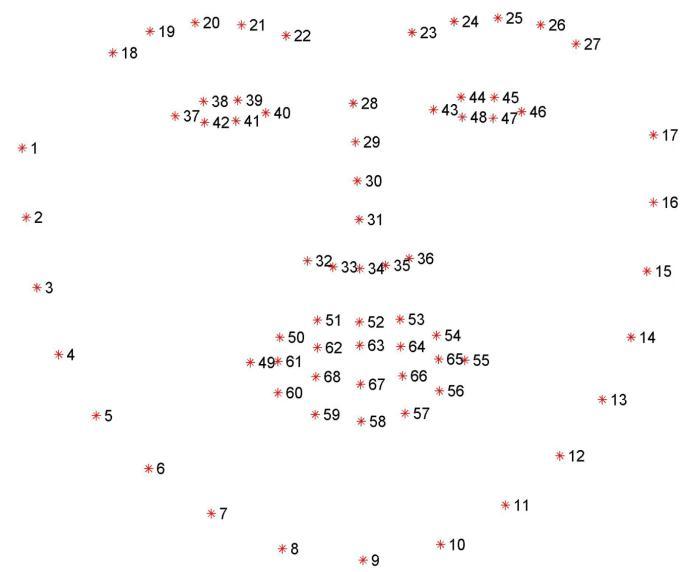

Recognizing faces in images or videos is useful, but it is insufficient for building robust applications. More details about the person's face are needed, such as its location, whether the mouth is open or closed, whether the eyes are open or closed, and whether they are looking up. This section briefly introduces Dlib, a library that can provide 68 points (landmarks) of the face. It is a landmark facial detector with pre-trained models. Dlib is used to estimate the 68 (x, y) coordinates that map the facial points on a person's face, as seen in the graphic below. Dlib is a cutting-edge C++ toolkit with machine learning techniques and tools for developing sophisticated software to address real-world problems. It is used in industry and academia across various fields, including robotics, embedded technology, mobile technology, and massively parallel computing systems. Dlib can be used free of charge in any application, thanks to its open-source licensing.
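To make the 68-point layout concrete, the sketch below groups the landmark indices by facial region. It assumes the common 0-based indexing of the 68-point model; the dictionary and helper names are hypothetical and are not part of Dlib itself.

```python
# 0-based index ranges of the 68-point facial landmark layout (assumed convention).
FACIAL_LANDMARK_GROUPS = {
    "jaw": range(0, 17),
    "right_eyebrow": range(17, 22),
    "left_eyebrow": range(22, 27),
    "nose": range(27, 36),
    "right_eye": range(36, 42),
    "left_eye": range(42, 48),
    "mouth": range(48, 68),
}


def select_landmarks(points, group):
    """Return the (x, y) points of one facial region from a 68-point list."""
    if len(points) != 68:
        raise ValueError("expected exactly 68 landmark points")
    return [points[i] for i in FACIAL_LANDMARK_GROUPS[group]]


# Dummy points standing in for real detector output.
dummy_points = [(i, i) for i in range(68)]
right_eye = select_landmarks(dummy_points, "right_eye")
print(len(right_eye))  # 6
```

In a real pipeline, the 68 points would typically come from Dlib's shape predictor applied to a detected face rectangle; the six eye points and the mouth points selected this way are what the eye and mouth aspect ratios are computed from.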

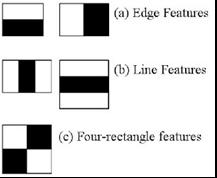

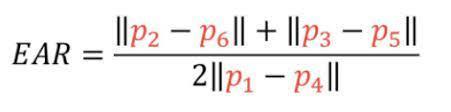

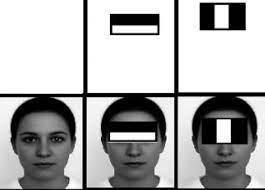

Object recognition using cascaded classifiers based on Haar features is a practical object recognition method. The algorithm follows a machine learning approach to increase its efficiency and precision. Images of different degrees are used to train the function: the cascade function is trained on many positive and negative images. Initially, both images containing faces and images with no face are used for training. Features are then extracted from them using Haar features, which are similar to convolution kernels. Each feature is a single value obtained by subtracting the sum of the pixels under the white rectangle from the sum of the pixels under the black rectangle.
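The feature value described above can be sketched as follows. The integral image (summed-area table) is the standard way to evaluate rectangle sums quickly in Viola-Jones style detectors, but it is not mentioned in the text and is added here as an assumption. The rectangle layout (white on top, black below) and all names are illustrative.

```python
def integral_image(gray):
    """Summed-area table with an extra leading row and column of zeros."""
    height, width = len(gray), len(gray[0])
    table = [[0] * (width + 1) for _ in range(height + 1)]
    for y in range(height):
        row_sum = 0
        for x in range(width):
            row_sum += gray[y][x]
            table[y + 1][x + 1] = table[y][x + 1] + row_sum
    return table


def rect_sum(table, x, y, w, h):
    """Sum of pixels in the w-by-h rectangle whose top-left corner is (x, y)."""
    return table[y + h][x + w] - table[y][x + w] - table[y + h][x] + table[y][x]


def two_rect_feature(table, x, y, w, h):
    """Two-rectangle feature: black (bottom half) sum minus white (top half) sum."""
    half = h // 2
    white = rect_sum(table, x, y, w, half)
    black = rect_sum(table, x, y + half, w, half)
    return black - white


# Toy 4x4 image: bright top half, dark bottom half.
toy = [[200] * 4, [200] * 4, [50] * 4, [50] * 4]
toy_table = integral_image(toy)
print(two_rect_feature(toy_table, 0, 0, 4, 4))  # -1200
```

Each rectangle sum costs four table lookups regardless of the rectangle size, which is what makes evaluating a very large number of candidate features practical.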

Detection of feature points: the system detects feature points on its own. For facial recognition, the RGB image is first converted into a binary image. If the average pixel value is less than 110, the pixel is replaced with a black pixel; otherwise, it is replaced with a white pixel.
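The binarization rule above can be sketched in a few lines. This assumes that the "average pixel value" is the mean of the R, G, and B components of each pixel; the function name and the 0/255 output encoding are illustrative choices.

```python
BINARY_THRESHOLD = 110  # threshold value stated in the text


def binarize(rgb_image, threshold=BINARY_THRESHOLD):
    """Map each (r, g, b) pixel to 0 (black) or 255 (white).

    Assumption: a pixel's average value is the mean of its three channels.
    """
    binary = []
    for row in rgb_image:
        binary.append(
            [0 if (r + g + b) / 3 < threshold else 255 for (r, g, b) in row]
        )
    return binary


# Toy 2x2 image with illustrative values.
sample = [
    [(10, 20, 30), (200, 200, 200)],
    [(110, 110, 110), (109, 110, 110)],
]
print(binarize(sample))  # [[0, 255], [255, 0]]
```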


Now, all possible sizes and positions of each kernel are used to calculate many features. However, most of the features calculated are not relevant. For example, the figure below shows two good features in the top row. The first feature selected appears to focus on the property that the eye region is usually darker than the nose and cheek regions. The second feature selected relies on the fact that the eyes are darker than the bridge of the nose.

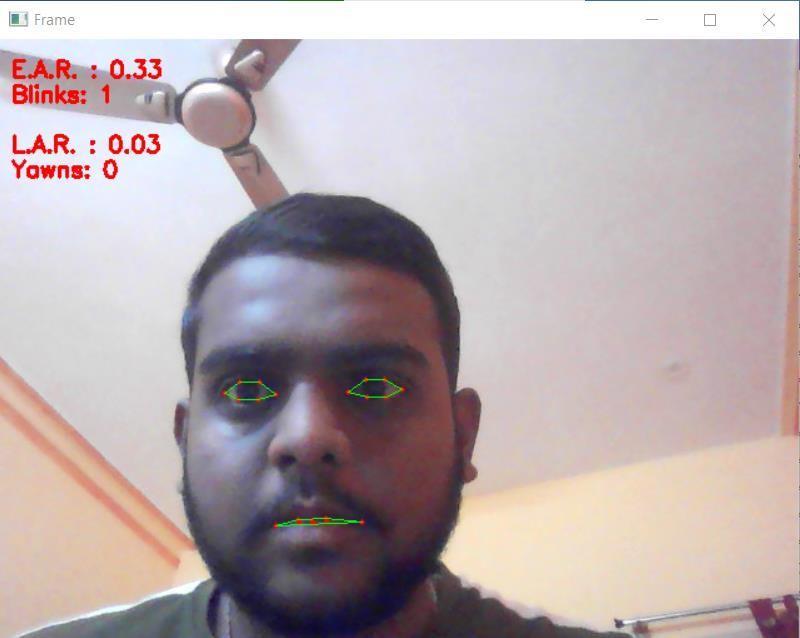

The driver drowsiness system is based on non-intrusive machine-based concepts. The system consists of a web camera placed in front of the driver. Online videos are considered for simulation purposes. First, the camera records the driver's facial expressions and eye and mouth positions. The video is then converted into frames, and each frame is processed individually. Finally, the face is detected in the frames using the Viola-Jones algorithm. The required features, such as the eyes and mouth, are then extracted from the face using a cascade classifier. The sphere indicates the region of interest on the face. The main attribute for detecting drowsiness is eye blinking: the normal rate varies from 12 to 19 blinks per minute, and a frequency below this range indicates drowsiness. Instead of counting eye blinks, an average drowsiness value is calculated. A detected eye value of zero denotes a closed eye, and non-zero values denote partially or fully open eyes.

The equation is used to calculate the average. If the value is more than the set threshold value, the system generates an alarm to alert the driver.
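The averaging equation itself is not reproduced in the text, so the sketch below is only one plausible reading: the average is taken as the fraction of frames in which the eye value is zero (closed), and the alarm fires when that fraction exceeds a threshold. Both the formula and the default threshold of 0.5 are assumptions, not values from the paper.

```python
def closed_eye_fraction(eye_states):
    """Fraction of frames with a closed eye.

    eye_states: per-frame values, 0 = closed, non-zero = partially or fully open.
    """
    if not eye_states:
        raise ValueError("at least one frame is required")
    closed = sum(1 for state in eye_states if state == 0)
    return closed / len(eye_states)


def should_alarm(eye_states, threshold=0.5):
    """True when the closed-eye fraction exceeds the (hypothetical) threshold."""
    return closed_eye_fraction(eye_states) > threshold


print(should_alarm([0, 0, 0, 1, 2]))  # True: 3 of 5 frames are closed
print(should_alarm([1, 1, 0, 3]))     # False: 1 of 4 frames is closed
```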

Fatigue is the primary reason for road accidents. To address this issue, a driver fatigue detection system based on mouth and yawning analysis has been proposed. First, the system locates and tracks the driver's mouth in the input images using a trained cascade of classifiers. Then, images of the mouth and of yawning are used to train an SVM. Finally, the SVM classifies the mouth regions to detect yawning and raise a fatigue alert. The authors collected videos and selected 20 yawning images and more than 100 normal videos as datasets. The outcomes demonstrate that the suggested system outperforms a system based on geometric features. The proposed system detects yawning, alerts the driver to fatigue earlier, and helps keep the driver safe.

The essential purpose of the proposed method is to detect a closed eye and an open mouth simultaneously and to generate an alarm on positive detection. The system first captures real-time video using a camera mounted in front of the driver. The frames of the captured video are then used to detect the face and eyes by applying the Viola-Jones method with the face and eye training sets provided in OpenCV. Next, a small rectangle is drawn around the center of the eye, and a matrix is created for the region of interest (ROI), that is, the eye, which is used in the next step. Since both eyes blink at the same time, only the right eye is examined to detect the closed-eye state. The eye is regarded as closed if it stays closed for a predetermined period. First, the eyeball color is acquired by sampling the RGB components at the center pixel of the eye. Absolute thresholding is then applied to the eye ROI based on the eyeball color, and an intensity map along the Y-axis is obtained. This map shows the distribution of pixels along the Y-axis, which gives the height of the eyeball; that height is compared with a threshold value of 4 to distinguish an open eye from a closed one. After that, if an eye blink is detected in a frame, it is recorded as 1 and stored in a buffer, and after 100 frames the eye blinking rate is calculated. To detect yawning, a contour-finding algorithm is used to measure the size of the mouth. If the height is greater than a certain threshold, the person is yawning. The effectiveness of the suggested approach was evaluated over 20 days at various times with individuals with and without mustaches and with individuals who wear glasses. The system performs best when drivers are not wearing glasses.
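The eyeball-height test and the 100-frame buffer described above could be sketched as follows. The threshold of 4 and the buffer length of 100 come from the text; everything else is an assumption, including the reading that a height below 4 means "closed", the 0/1 mask representation of the thresholded ROI, and all names.

```python
from collections import deque

HEIGHT_THRESHOLD = 4  # from the text
BUFFER_FRAMES = 100   # from the text


def eyeball_height(mask):
    """Number of rows in the thresholded eye ROI that contain eyeball pixels.

    mask: 2D list of 0/1 values, where 1 marks a pixel matching the eyeball color.
    """
    return sum(1 for row in mask if any(row))


def is_closed(mask, height_threshold=HEIGHT_THRESHOLD):
    """Assumed rule: the eye is closed when the eyeball height is below the threshold."""
    return eyeball_height(mask) < height_threshold


class BlinkRateBuffer:
    """Stores one 0/1 flag per frame and reports the rate once the buffer is full."""

    def __init__(self, size=BUFFER_FRAMES):
        self.flags = deque(maxlen=size)

    def push(self, closed):
        self.flags.append(1 if closed else 0)

    def rate(self):
        if len(self.flags) < self.flags.maxlen:
            return None  # not enough frames yet
        return sum(self.flags) / len(self.flags)


# Toy masks: an open eye spans 6 rows, a closed eye spans 2 rows.
open_mask = [[0, 1, 1, 0]] * 6 + [[0, 0, 0, 0]] * 2
closed_mask = [[0, 0, 0, 0]] * 6 + [[1, 1, 1, 1]] * 2
print(is_closed(open_mask), is_closed(closed_mask))  # False True
```

A fixed-length `deque` drops the oldest flag automatically, so the rate always reflects the most recent 100 frames once the buffer has filled.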

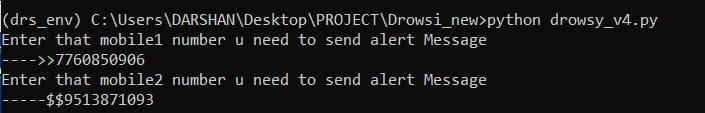

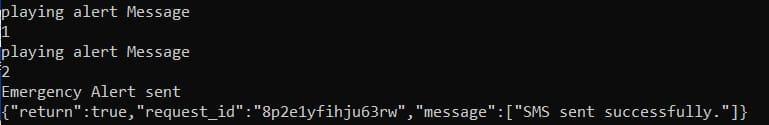

To confirm the average EAR reading while the eyes are open, 20 trials were conducted on the same person. The objective of the trials is to ascertain the lowest threshold at which the alarm begins to respond, arousing the driver and lowering the risk of accidents. Based on the EAR and MAR, a threshold is initially set to the value of 2 EAR, and the MAR counter starts counting the total number of frames whenever a person's EAR falls below the minimum threshold, which in this case is 2. In this test, the ALERT text is displayed in the resized window. Next, the threshold is set to the value of 4 EAR, and the MAR counter starts counting the total number of frames. Whenever a person's EAR and MAR fall below this threshold, the alarm buzzes and alerts the driver when the counter reaches the set number of frames. Finally, the threshold value is set to 6, and the EAR and MAR counter starts counting the total number of frames. Whenever a person's EAR and MAR fall below this threshold, the driver is considered to be drowsing frequently, and at this stage an alert message (SMS) is sent to the given emergency phone number. Since the code uses a loop in the script, the alarm does not stop until the driver is alerted and regains full attention on the road. Once the driver's eyes open, the counter resets to zero, which stops the alarm from beeping continuously. The alarm's sensitivity can be adjusted by changing the number of threshold frames; the lower the number of frames, the higher the sensitivity.
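The three-stage escalation described above can be sketched as a small state machine. The text leaves the units of the values 2, 4, and 6 unclear; this sketch treats them as counter levels, which is an assumption, and the class, action names, and per-frame `eyes_closed` input are hypothetical. Sending the SMS itself is outside the scope of the sketch.

```python
# (counter level, action) pairs; the levels 2, 4, 6 are read from the text as counter values.
ALERT_LEVELS = [(2, "ALERT_TEXT"), (4, "ALARM"), (6, "SMS")]


class DrowsinessEscalator:
    """Counts consecutive closed-eye frames and reports the highest action reached."""

    def __init__(self, levels=ALERT_LEVELS):
        self.levels = sorted(levels)
        self.counter = 0

    def update(self, eyes_closed):
        if not eyes_closed:
            self.counter = 0  # eyes opened: reset, which also stops the alarm
            return None
        self.counter += 1
        action = None
        for level, name in self.levels:
            if self.counter >= level:
                action = name
        return action


escalator = DrowsinessEscalator()
actions = [escalator.update(True) for _ in range(6)]
print(actions)
# [None, 'ALERT_TEXT', 'ALERT_TEXT', 'ALARM', 'ALARM', 'SMS']
print(escalator.update(False))  # None
```

Lowering the levels makes the system react after fewer closed-eye frames, which matches the statement that fewer threshold frames mean higher sensitivity.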

The following are screenshots of the interface and output of the proposed system.


Fig. 10: Playing the alert sound and sending the SMS successfully

Fig. 11: SMS sent to the emergency number via Fast2SMS

We have reviewed the various methods available to determine the drowsiness state of a driver. Although there is no universally accepted definition of drowsiness, the various definitions and the reasons behind them were discussed. Methods to detect drowsiness include subjective, vehicle-based, physiological, and behavioral measures. Weariness in the eyes and mouth can be localized using a non-invasive technique. The position of the eyes is determined using an image processing technique, and the monitoring system can determine whether the eyes are open or closed. A warning signal is given when the eyes have been closed or blinking. An image processing algorithm likewise obtains the location of the mouth, and the monitoring system can determine whether the mouth is open or closed, that is, whether the person is yawning. The technology can instantly identify any eye localizing mistakes that might have happened during monitoring. The system can recover and correctly localize the eyes and mouth in the event of such an error.

The following conclusions were made:

Drowsiness may be detected with excellent accuracy and reliability using image processing.

A non-intrusive method detects sleepiness without discomfort or disturbance.

Based on constant eye closures, a sleepiness detection system built on image processing assesses the driver's state of attentiveness.