International Research Journal of Engineering and Technology (IRJET) e ISSN: 2395 0056

Volume: 09 Issue: 07 | July 2022 www.irjet.net p ISSN: 2395 0072


Sharath B¹, Harshith R², Lankesh J³, Sanjay G R⁴, Chandru A S⁵

¹Sharath B: Student, Dept. of ISE, NIE IT, Mysore, Karnataka, India

²Harshith R: Student, Dept. of ISE, NIE IT, Mysore, Karnataka, India

³Lankesh J: Student, Dept. of ISE, NIE IT, Mysore, Karnataka, India

⁴Sanjay G R: Student, Dept. of ISE, NIE IT, Mysore, Karnataka, India

⁵Chandru A S (co-author): Assistant Professor, Dept. of ISE, NIE IT, Mysore, Karnataka, India

Abstract – With the advent of technology in this digital age, there is always room for improvement in the computer field. Hands-free computing is needed today to meet the needs of quadriplegics. This paper introduces a Human Computer Interaction (HCI) system that is very important for amputees and for those who have problems using their hands. The system developed is an eye-based interface that acts as a computer mouse, translating eye movements such as blinking, gazing and squinting into mouse cursor actions. The program uses a simple webcam, and its software requirements are Python (3.6), OpenCV, NumPy and a few other packages needed for face recognition. The face detector is built using the Histogram of Oriented Gradients (HOG) feature combined with a linear classifier and a sliding-window detection scheme. No hands and no external computer hardware or sensors are needed.

Key Words: Python (3.6), OpenCV, Human Computer Interaction, NumPy, face recognition, Histogram of Oriented Gradients (HOG)

People who are quadriplegic and unable to talk, for example because of cerebral palsy, traumatic brain injury or stroke, often have difficulty expressing their desires, thoughts and needs. They use their limited voluntary movements to communicate with family, friends and other care providers. Some people may shake their heads. Some may blink voluntarily. Others may move their eyes or tongue. Assistive technology devices have been developed to help them use these voluntary movements to control computers. People with disabilities can communicate through spelling or add-on devices. Some disabled people cannot move anything except their eyes; for them, eye movement and blinking are the only way to communicate with others through a computer. This study aims to develop a program that helps people with physical disabilities interact with a computer system using only their eyes. Human interaction with computers has become an integral part of our daily lives, and with the eyes as input, the user's eye movements can provide a simple, natural and high-bandwidth input source.

The computer mouse or finger movement has become the most common way to move the cursor on the screen in modern technology. The system detects the mouse or finger movement and maps it to the movement of the cursor. People who do not have working arms, such as amputees, cannot use current technology to operate a mouse. Therefore, if the movement of the eyeball can be tracked and its direction determined, that movement can be mapped onto the screen, and an amputee will be able to move the cursor at will. An "eye-tracking mouse" would be very useful for such users. Currently, eye-tracking mice are not widely available; only a few companies have developed this technology and made it available. We aim to build an eye-tracking mouse that provides most mouse functions, so that the user can move the cursor using his or her eyes. We try to estimate the user's viewing direction and move the cursor to where his or her eye is trying to focus. People who are blind cannot use a computer in the usual way, so by adding audio output they can listen to the commands and act accordingly. Mouse pointing and clicking have remained the standard interaction for a long time. However, some people find them uncomfortable, and those who are unable to move their hands need a hands-free way to control the mouse.

In general, eye movements and facial expressions are the basis of a hands-free mouse.

This can be achieved in various ways. The Camera Mouse was proposed by Margrit Betke et al. [1] for people with quadriplegia who cannot speak: the user's movements are tracked with a camera and mapped to the movement of the mouse cursor on the screen. Another approach, proposed by Robert Gabriel Lupu et al. [2], interacts with a personal computer through a head-mounted device that tracks eye movements and interprets them on screen. As a further option, Prof. Prashant Salunkhe et al. [3] introduced eye-tracking techniques using the Hough transform.

A lot of work is being done to improve the features of HCI. A paper by Muhammad Usman Ghani et al. [4] suggests that eye movements can be read as input and used to help the user access visual cues without any other hardware device such as a mouse or keyboard [5]. This can be achieved using image processing algorithms and computer vision. Another way to detect the eyes is by using Haar cascade features.

The eyes can also be located by matching them against pre-stored templates, as suggested by Vaibhav Nangare et al. [6]. For an accurate picture of the iris, an IR sensor can be used. A gyroscope can be used to track head movement, as suggested by Anamika Mali et al. [7]. The click function can be performed by gazing, i.e., staring at a point on the screen. Also, by looking at a portion of the screen (top or bottom), the scrolling function can be performed, as suggested by Zhang et al. [8].

As well as eye movements, control becomes easier when subtle movements of the face and its parts are combined. Real-time eye blink detection using facial landmarks, as proposed by Tereza Soukupová and Jan Čech [9], shows how blinking can be detected from facial landmarks. This is a valuable feature, as blinking actions are needed to produce click actions. The eyes and other parts of the face can be located using OpenCV and Python with dlib [10], and blinks can likewise be detected [11]. Akshay Chandra [13] proposes the same idea, controlling the mouse cursor with facial movements.

The procedure proposed in this paper works based on the following actions: a) blinking, b) closing both eyes, c) head movement (pitch and yaw), d) opening the mouth.

The requirements for this work were listed, reviewed and analysed. Since the core of this project is software, we discuss only the software requirements.

Software requirements

1) Python:

Python is the language we used, with Jupyter Notebook as the interface. It is a very common tool for basic mathematics and one of the easiest tools to use. Some of the libraries used are:

Numpy 1.13.3

OpenCV 3.2.0

PyAutoGUI 0.9.36

Dlib 19.4.0

Imutils 0.4.6

The procedure is as follows:

1) Since the project is based on finding facial features and moving a cursor, the webcam needs to be accessed and turned on first. Once the webcam is on, the system extracts each frame from the video. The frame rate of the video is usually 30 frames per second, so a new frame is available for processing every 1/30 second. Each frame goes through a set of processing steps before its features are detected and mapped to the cursor, and this process repeats continuously for every frame as part of a loop.
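The frame loop described in step 1 can be sketched as a generator that pulls frames until the source stops delivering them. This is a minimal sketch, not the authors' code: it assumes the capture object behaves like OpenCV's `cv2.VideoCapture`, whose `read()` returns an `(ok, frame)` pair, and the helper names `iter_frames` and `frame_period_ms` are hypothetical. At 30 frames per second, all per-frame work has to fit in roughly 33 ms to keep up with the camera.

```python
from typing import Any, Iterator, Optional


def iter_frames(source: Any, max_frames: Optional[int] = None) -> Iterator[Any]:
    """Yield frames until source.read() reports failure or max_frames is reached.

    `source` is assumed to behave like cv2.VideoCapture, whose read() returns an
    (ok, frame) pair. The per-frame work (flip, resize, grayscale, landmarks,
    cursor mapping) would run in the loop that consumes this generator.
    """
    count = 0
    while max_frames is None or count < max_frames:
        ok, frame = source.read()
        if not ok:  # camera disconnected or end of a video file
            break
        yield frame
        count += 1


def frame_period_ms(fps: float = 30.0) -> float:
    """Time budget per frame: about 33.3 ms at the 30 fps mentioned in the text."""
    return 1000.0 / fps
```

With OpenCV installed, the source would be `cap = cv2.VideoCapture(0)` (the default webcam), released with `cap.release()` once the loop ends.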

2) Once a frame has been extracted, the facial features need to be detected. Therefore, each frame passes through a set of image processing functions that prepare it properly, making it easier for the system to detect features such as the eyes, mouth and nose.

The processing techniques are:

i) Resizing:

The image is first flipped about the y-axis, i.e., mirrored horizontally. Next, the image is resized. Resizing sets the image to a new resolution as required; for this project, the new resolution is 640 × 480.
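In OpenCV the two steps are usually `cv2.flip(frame, 1)` (a flip around the y-axis, giving a mirror image) and `cv2.resize(frame, (640, 480))`, where the size is given as (width, height) and the default interpolation is bilinear. The sketch below reproduces the same two steps with NumPy only, using nearest-neighbour resizing so it runs without a camera or OpenCV; the function names are hypothetical. Mirroring presumably makes the on-screen image follow the user's movements the way a mirror does.

```python
import numpy as np


def mirror(frame: np.ndarray) -> np.ndarray:
    """Flip about the vertical (y) axis, like cv2.flip(frame, 1)."""
    return frame[:, ::-1]


def resize_nearest(frame: np.ndarray, width: int = 640, height: int = 480) -> np.ndarray:
    """Nearest-neighbour resize to width x height (cv2.resize also takes (width, height))."""
    src_h, src_w = frame.shape[:2]
    rows = np.arange(height) * src_h // height  # source row for each output row
    cols = np.arange(width) * src_w // width    # source column for each output column
    return frame[rows[:, None], cols]           # works for gray (H, W) and color (H, W, C)


def preprocess(frame: np.ndarray) -> np.ndarray:
    """Mirror first, then resize, in the order the text describes."""
    return resize_nearest(mirror(frame))
```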

ii) Grayscale conversion:

The model we use to detect the different parts of the face requires a grayscale image to give accurate results. Therefore, the image, i.e., the video frame from the webcam, must be converted from RGB to grayscale.

Once the image has been converted to grayscale, it can be used to detect faces and identify facial features.
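Although the text says RGB, OpenCV delivers webcam frames in BGR channel order, so in practice the call would be `cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY)`. OpenCV documents this conversion as Y = 0.299 R + 0.587 G + 0.114 B. The NumPy sketch below applies the same weights so the step can be seen and run without OpenCV; the function name is hypothetical, and OpenCV's own fixed-point rounding may differ very slightly.

```python
import numpy as np

# Luma weights listed in B, G, R order, because OpenCV frames store channels that way.
BGR_WEIGHTS = np.array([0.114, 0.587, 0.299])


def bgr_to_gray(frame: np.ndarray) -> np.ndarray:
    """Convert an H x W x 3 BGR uint8 frame to an H x W uint8 grayscale image."""
    gray = frame.astype(np.float64) @ BGR_WEIGHTS  # weighted sum over the channel axis
    return np.clip(np.rint(gray), 0, 255).astype(np.uint8)
```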

iii) Face and facial feature detection:

To detect the face and its features, the project uses pre-built models whose outputs Python can interpret to locate the face in the image. A function called 'detector()', provided with these models, finds the faces.
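With dlib, the HOG-based detector mentioned in the abstract is created by `dlib.get_frontal_face_detector()` and called on the grayscale frame as `detector(gray, 0)`, where the second argument is the number of times to upsample the image. It returns rectangles with methods such as `left()`, `top()`, `width()` and `height()`. The paper does not say what happens when several faces are found, so the hypothetical helper below assumes the largest one (presumably the user nearest the camera) is the one to track.

```python
from typing import Optional, Sequence


def largest_face(rects: Sequence) -> Optional[object]:
    """Return the detection with the biggest area, or None if no face was found.

    `rects` is assumed to hold dlib.rectangle-like objects exposing width() and
    height(). Tracking the largest face is an assumption made for illustration.
    """
    if len(rects) == 0:
        return None
    return max(rects, key=lambda r: r.width() * r.height())
```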

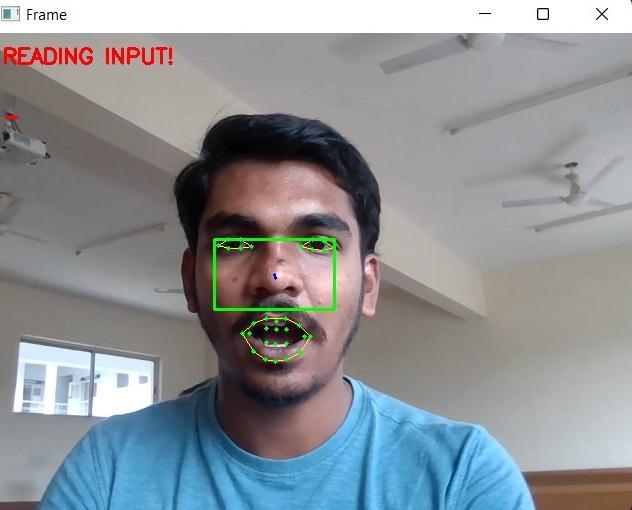

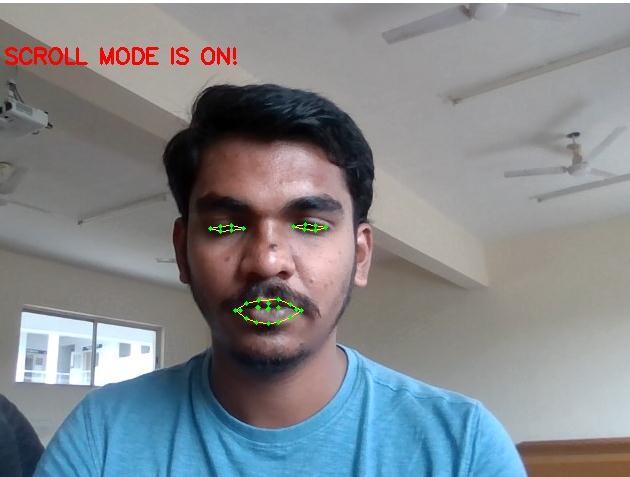

After face detection, the facial features can be detected using the 'predictor' function. This function locates 68 points on a 2D image. These points mark landmarks around the required facial regions, such as the eyes and mouth.

The values obtained are 2D coordinates: each of the 68 points is an (x, y) pair, and the points, when connected, trace the visible outline of the facial features.


They are then saved as a list of values so that they can be processed and used in the next step to connect the points and draw a border representing the required regions of the face.
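dlib's landmark predictor is typically created with `dlib.shape_predictor("shape_predictor_68_face_landmarks.dat")` and called as `predictor(gray, rect)`; the returned object exposes each landmark through `part(i)`, which has `.x` and `.y` attributes. Storing the points as described above can be sketched as follows. `imutils.face_utils.shape_to_np` performs the same conversion; the name `shape_to_array` here is hypothetical.

```python
import numpy as np


def shape_to_array(shape, num_points: int = 68) -> np.ndarray:
    """Copy landmark coordinates into a (num_points, 2) integer array of (x, y).

    `shape` is assumed to behave like dlib.full_object_detection, whose part(i)
    returns a point with .x and .y attributes.
    """
    coords = np.zeros((num_points, 2), dtype=int)
    for i in range(num_points):
        point = shape.part(i)
        coords[i] = (point.x, point.y)
    return coords
```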

From this ordered list, four subsets of points are extracted and stored separately to represent the required regions, namely the left eye, right eye, nose and mouth.
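With the landmarks held in a 68 × 2 array, the four regions are fixed index ranges of the 68-point markup used in the 300-W challenge [12], which dlib's standard 68-point model follows. The ranges below match those in `imutils.face_utils`; the dictionary and function names are hypothetical.

```python
import numpy as np

# (start inclusive, end exclusive) indices in the 68-point markup, as in imutils.
# The model labels points by image position, so which anatomical eye each name
# refers to swaps if the frame has been mirrored; treat the names as labels.
REGIONS = {
    "right_eye": (36, 42),
    "left_eye": (42, 48),
    "nose": (27, 36),
    "mouth": (48, 68),
}


def split_regions(landmarks: np.ndarray) -> dict:
    """Slice a (68, 2) landmark array into the four regions used by the system."""
    return {name: landmarks[start:end] for name, (start, end) in REGIONS.items()}
```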

Once the four subsets have been prepared, borders or "contours" are drawn around three of them, the two eyes and the mouth, by connecting their points with the 'drawContours' function.
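Outlines like these are commonly drawn from the convex hull of each region's points; in the tutorial cited as [11] this is `cv2.convexHull(points)` followed by `cv2.drawContours(frame, [hull], -1, (0, 255, 0), 1)`. Whether this project outlines the hull or the raw landmark polygon is not stated. The dependency-free sketch below (Andrew's monotone-chain algorithm, with hypothetical function names) shows what the hull step computes.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def cross(o: Point, a: Point, b: Point) -> float:
    """z-component of (a - o) x (b - o); positive for a counter-clockwise turn."""
    return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])


def convex_hull(points: Sequence[Point]) -> List[Point]:
    """Return hull vertices counter-clockwise (in a y-up frame), dropping collinear points."""
    pts = sorted(set(map(tuple, points)))
    if len(pts) <= 2:
        return pts
    lower: List[Point] = []
    for p in pts:  # build the lower hull from left to right
        while len(lower) >= 2 and cross(lower[-2], lower[-1], p) <= 0:
            lower.pop()
        lower.append(p)
    upper: List[Point] = []
    for p in reversed(pts):  # build the upper hull from right to left
        while len(upper) >= 2 and cross(upper[-2], upper[-1], p) <= 0:
            upper.pop()
        upper.append(p)
    return lower[:-1] + upper[:-1]  # last point of each half repeats the other's first
```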

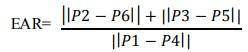

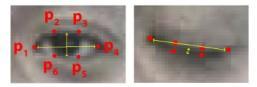

iv) Mouth and eye aspect ratios: Once the contours have been drawn, a reference measure is needed which, by comparison, tells the program about any action performed by these regions, such as blinking or yawning.

These references are measurements between 2D coordinates, and a change in them tells us that part of a facial region has moved away from its normal position, i.e., that an action has been performed.

The system is built to predict local facial features. The pre-built dlib model provides fast and accurate face detection and the 68-point 2D facial landmarks already mentioned. Here, the eye aspect ratio (EAR) and the mouth aspect ratio (MAR) are used to detect blinking and yawning respectively. These actions are translated into mouse actions.

The value of EAR decreases significantly when the eye is closed. This property can be used to trigger a click action.
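The EAR defined by Soukupová and Čech [9] uses the six landmarks p1 to p6 around each eye: EAR = (‖p2 − p6‖ + ‖p3 − p5‖) / (2‖p1 − p4‖). The two vertical distances shrink towards zero as the lid closes while the horizontal eye width stays roughly constant, which is why the ratio drops sharply on a blink. A minimal NumPy sketch (the function name is hypothetical):

```python
import numpy as np


def eye_aspect_ratio(eye: np.ndarray) -> float:
    """EAR of one eye from its six landmarks p1..p6 (rows 0..5, in dlib's order).

    p1 and p4 are the eye corners; (p2, p6) and (p3, p5) are upper/lower lid pairs.
    """
    eye = np.asarray(eye, dtype=float)
    vertical = np.linalg.norm(eye[1] - eye[5]) + np.linalg.norm(eye[2] - eye[4])
    horizontal = np.linalg.norm(eye[0] - eye[3])
    return vertical / (2.0 * horizontal)
```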

MAR rises when the mouth opens. This is used to start and stop mouse control. For example, if the ratio increases, the distances between the points representing the mouth region have changed, meaning the user has performed a deliberate action. This action should be interpreted as the user trying to perform a task with the mouse.
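The text does not give its MAR formula. The sketch below assumes a variant analogous to EAR, built from the inner-lip landmarks 60 to 67 of the 68-point markup: the mean of three vertical inner-lip gaps divided by the inner mouth width, so a closed mouth gives a value near 0 and a wide-open mouth a larger one. Treat both the formula and the name `mouth_aspect_ratio` as assumptions.

```python
import numpy as np


def mouth_aspect_ratio(mouth: np.ndarray) -> float:
    """Assumed MAR from the 20 mouth landmarks (global indices 48-67).

    Rows 12-19 of the mouth slice are the inner-lip points 60-67.
    """
    m = np.asarray(mouth, dtype=float)
    a = np.linalg.norm(m[13] - m[19])  # points 61 and 67
    b = np.linalg.norm(m[14] - m[18])  # points 62 and 66
    c = np.linalg.norm(m[15] - m[17])  # points 63 and 65
    d = np.linalg.norm(m[12] - m[16])  # inner mouth corners, points 60 and 64
    return (a + b + c) / (3.0 * d)
```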

Therefore, to enable these functions, a threshold must be defined for each aspect ratio; when the ratio crosses the specified limit, the corresponding action is performed.
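Comparing a ratio with its threshold on a single frame would react to landmark noise and to involuntary blinks. A common refinement, assumed here rather than stated in the text, is to require the ratio to stay past the threshold for several consecutive frames and to fire only once per event. The class name and the example thresholds and frame counts are hypothetical.

```python
class ConsecutiveFrameTrigger:
    """Fire once when a ratio stays past a threshold for `frames` consecutive frames.

    below=True suits EAR (closing an eye lowers it); below=False suits MAR
    (opening the mouth raises it).
    """

    def __init__(self, threshold: float, frames: int, below: bool = True):
        self.threshold = threshold
        self.frames = frames
        self.below = below
        self.count = 0
        self.fired = False

    def update(self, ratio: float) -> bool:
        """Feed one frame's ratio; return True only on the frame the action triggers."""
        active = ratio < self.threshold if self.below else ratio > self.threshold
        if not active:
            self.count = 0
            self.fired = False  # re-arm once the eye reopens or the mouth closes
            return False
        self.count += 1
        if self.count >= self.frames and not self.fired:
            self.fired = True
            return True
        return False
```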

v) Detection of actions performed by the face: After defining the thresholds, the program compares the aspect ratios of the facial features in the current frame against the thresholds defined for the different actions. This is done with 'if' statements. The actions identified by the program are:

1) Activating the mouse: The user needs to "yawn", i.e., open the mouth vertically, which increases the distance between the corresponding 2D mouth points. The algorithm detects this change by computing a ratio, and if the ratio exceeds a certain limit, the system is activated and the cursor can be moved. The user then moves the nose up, down, left or right of a reference rectangle to move the cursor in that direction. The farther the nose is from the rectangle, the faster the cursor moves.

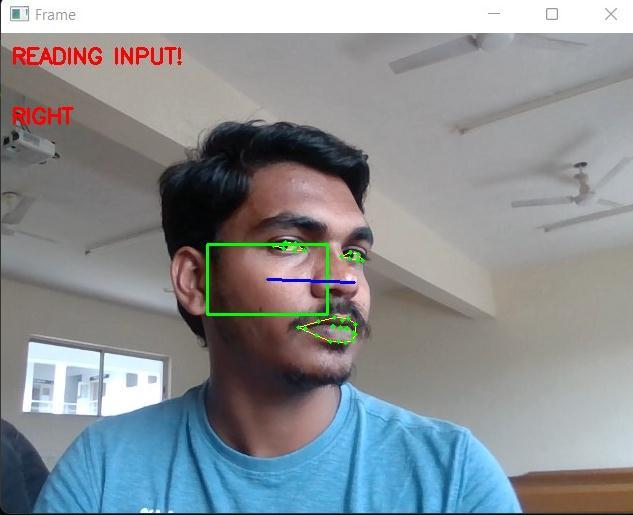

2) Left/right click: To click, the user closes one eye while keeping the other open. The system first checks whether the magnitude of the difference between the two eye aspect ratios is greater than a threshold, to make sure the user wants a left or right click and not a scroll (scrolling requires both eyes to be closed).

3) Scroll: The user can scroll up or down. To do so, the user squints so that the aspect ratio of both eyes falls below the set value. In this mode, when the user moves the nose out of the rectangle, the mouse scrolls instead of moving the cursor. The user can move the nose above the rectangle to scroll up, or below it to scroll down.
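The three actions above rest on two per-frame decisions: where the nose sits relative to the reference rectangle, and what state the eyes are in. The sketch below shows one way to make both; the function names, rectangle size and thresholds (0.04 for the EAR difference, 0.19 for squinting) are hypothetical choices for illustration, not values reported in this paper.

```python
from typing import Optional, Tuple


def nose_direction(nose: Tuple[float, float], anchor: Tuple[float, float],
                   half_w: float = 60, half_h: float = 35) -> Tuple[Optional[str], float]:
    """Locate the nose tip relative to a rectangle centred on `anchor`.

    Returns (direction, distance): direction is 'left', 'right', 'up', 'down', or
    None when the nose is inside the rectangle; distance is how far outside it is,
    in pixels, so callers can move faster the farther away the nose is.
    Image coordinates are assumed (y grows downwards).
    """
    dx = nose[0] - anchor[0]
    dy = nose[1] - anchor[1]
    over_x = abs(dx) - half_w
    over_y = abs(dy) - half_h
    if over_x <= 0 and over_y <= 0:
        return None, 0.0
    if over_x >= over_y:  # the horizontal excursion dominates
        return ("right" if dx > 0 else "left"), float(over_x)
    return ("down" if dy > 0 else "up"), float(over_y)


def eye_state(left_ear: float, right_ear: float,
              wink_diff: float = 0.04, squint_thresh: float = 0.19) -> str:
    """Classify the eyes as 'left_wink', 'right_wink', 'squint' or 'open'.

    As in the text, the EAR difference is checked first, so a one-eyed wink
    (click) is not mistaken for a two-eyed squint (scroll).
    """
    if abs(left_ear - right_ear) > wink_diff:
        # The eye with the lower EAR is the one being closed.
        return "left_wink" if left_ear < right_ear else "right_wink"
    if left_ear < squint_thresh and right_ear < squint_thresh:
        return "squint"
    return "open"
```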

vi) Multiple points: Although the current system does not track multiple points, some initial tests of such tracking were performed. In the future, multi-point tracking may be used, for example, to calculate distances between the nostrils and the pupils for eye recognition. This would result in a specialised tracking system. The strength of the current version is its generality: any feature can be selected for tracking.


vii) Voice output: In this project we have added voice output for people who cannot see the screen. The spoken messages are: "right click", "left click", "right", "left", "up", "down", "scroll mode is on", "scroll mode is off", "input mode is on" and "input mode is off".
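The voice output can be organised as a lookup from recognised events to the phrases listed above. The sketch keeps the speech engine abstract: `speak` is any function that takes a string. The event names and the `Announcer` class are hypothetical, and skipping immediate repeats, so that a held head turn is not announced on every frame, is an assumption rather than something the text specifies.

```python
from typing import Callable, Optional

# The phrases listed in the text, keyed by hypothetical event names.
MESSAGES = {
    "right_click": "right click",
    "left_click": "left click",
    "right": "right",
    "left": "left",
    "up": "up",
    "down": "down",
    "scroll_on": "scroll mode is on",
    "scroll_off": "scroll mode is off",
    "input_on": "input mode is on",
    "input_off": "input mode is off",
}


class Announcer:
    """Speak an event's phrase, skipping immediate repeats of the same event."""

    def __init__(self, speak: Callable[[str], None]):
        self.speak = speak
        self.last: Optional[str] = None

    def announce(self, event: Optional[str]) -> None:
        if event is None:  # nothing happened this frame; allow the next event to be spoken
            self.last = None
            return
        if event != self.last and event in MESSAGES:
            self.speak(MESSAGES[event])
        self.last = event
```

With the pyttsx3 text-to-speech package (one possible choice; the paper does not name its engine), `speak` could wrap `engine = pyttsx3.init()` followed by `engine.say(text)` and `engine.runAndWait()`. Because `runAndWait()` blocks, calling it directly inside the video loop would pause frame processing while the phrase is spoken.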

viii) Distance from screen to user: This distance must be set correctly so that different areas of the eye can be distinguished accurately and the centre can be tracked. If the distance is too large, the eye movement will be much smaller than the width of the screen, so it will be difficult to track the centre area accurately. If the distance is too small, the eye movement will be too large and will be distorted by the curvature of the eye.

The mouse control system works, and the user can move the cursor or click at will. The amount by which the cursor position changes on each axis can be adjusted according to the user's requirements. Mouse control is activated by opening the mouth so that the MAR crosses a certain threshold.

The cursor is moved by moving the eyes right, left, up and down as required.

Scroll mode is activated by squinting. Scrolling is done by moving the head up and down, called pitch, or sideways, called yaw. Scroll mode is stopped by squinting again. Clicking occurs with a wink of one eye: a right wink produces a right click and a left wink produces a left click.
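The mode logic described in these paragraphs can be condensed into a small state machine. This is a sketch under stated assumptions: the class, event names and parameters are hypothetical, the toggling rules are our reading of the text, and the class only returns abstract commands so that the logic can be tested without a camera or a real mouse.

```python
from typing import List, Optional, Tuple


class MouseController:
    """Turn per-frame facial events into abstract mouse commands.

    Hypothetical inputs for one frame:
      mouth_open  True on the frame a mouth-open (MAR) action is recognised
      squint      True on the frame a both-eyes-closed action is recognised
      wink        'left_wink' or 'right_wink' on the frame a wink is recognised
      direction   'left', 'right', 'up', 'down' or None (nose inside the rectangle)
      distance    how far outside the rectangle the nose is, in pixels
    """

    def __init__(self, sensitivity: Tuple[float, float] = (0.5, 0.5), scroll_step: int = 1):
        self.sensitivity = sensitivity  # per-axis cursor gain, adjustable by the user
        self.scroll_step = scroll_step
        self.input_mode = False
        self.scroll_mode = False

    def step(self, mouth_open: bool = False, squint: bool = False,
             wink: Optional[str] = None, direction: Optional[str] = None,
             distance: float = 0.0) -> List[tuple]:
        commands: List[tuple] = []
        if mouth_open:  # opening the mouth toggles input mode on and off
            self.input_mode = not self.input_mode
            commands.append(("mode", "input", self.input_mode))
        if not self.input_mode:
            return commands  # all other actions are ignored while input mode is off
        if squint:  # squinting toggles scroll mode
            self.scroll_mode = not self.scroll_mode
            commands.append(("mode", "scroll", self.scroll_mode))
        elif wink in ("left_wink", "right_wink"):
            commands.append(("click", wink.split("_")[0]))
        if direction is None:
            return commands
        if self.scroll_mode:
            # Pitch (up/down) scrolls vertically; yaw (left/right) scrolls horizontally.
            sign = {"up": 1, "down": -1, "left": -1, "right": 1}[direction]
            kind = "scroll" if direction in ("up", "down") else "hscroll"
            commands.append((kind, sign * self.scroll_step))
        else:
            # The farther the nose is from the rectangle, the bigger the cursor step.
            sx, sy = self.sensitivity
            dx = {"left": -1, "right": 1}.get(direction, 0) * round(distance * sx)
            dy = {"up": -1, "down": 1}.get(direction, 0) * round(distance * sy)
            commands.append(("move", dx, dy))
        return commands
```

With PyAutoGUI (listed in the software requirements), the commands could be executed as `pyautogui.moveRel(dx, dy)`, `pyautogui.click(button="left")` or `button="right"`, and `pyautogui.scroll(n)`, where positive values scroll up. Horizontal scrolling via `pyautogui.hscroll(n)` is not supported on every platform, and its sign convention would need checking on the target system.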


Mouse sensitivity can be adjusted according to user needs. Overall, the project works as it should. Although it is not as comfortable as a hand-controlled mouse, the system can be used easily with some practice. We carried out a test to evaluate the usability, accuracy and reliability of the eye-tracking mouse (ETM) system. Twenty participants, ranging in age from 19 to 27, took part in the study. All had normal or corrected vision, had no prior eye-tracking experience and needed only a little training to use the system.

This work can be extended to improve system speed using better-trained models. The system can also be made more powerful by changing the cursor location in proportion to the amount of the user's head movement, so that the user can determine how much the pointer position changes. We have designed the project so that blind people can use a computer by listening to audio output produced by their camera-directed actions. Future research can also make the measurements more accurate, since the range of values produced by the feature measurements is usually small. Therefore, to make the algorithm detect actions more accurately, the aspect-ratio formulas could be modified. Also, to make face detection easier, some image processing techniques can be applied before the model locates the face and facial features.

[1] Margrit Betke, James Gips, "The Camera Mouse: Visual Tracking of Body Features to Provide Computer Access for People With Severe Disabilities," in IEEE Transactions on Neural Systems and Rehabilitation Engineering, vol. 10, no. 1, March 2002.

[2] Robert Gabriel Lupu, Florina Ungureanu, Valentin Siriteanu, "Eye Tracking Mouse for Human Computer Interaction," in The 4th IEEE International Conference on E-Health and Bioengineering (EHB 2013).

[3] Prof. Prashant Salunkhe, Miss. Ashwini R. Patil, "A Device Controlled Using Eye Movement," in International Conference on Electrical, Electronics, and Optimization Techniques (ICEEOT), 2016.

[4] Muhammad Usman Ghani, Sarah Chaudhry, Maryam Sohail, Muhammad Nafees Geelani, "GazePointer: A Real Time Mouse Pointer Control Implementation Based On Eye Gaze Tracking," in INMIC, 19–20 Dec. 2013.

[5] Alex Poole and Linden J. Ball, "Eye Tracking in Human-Computer Interaction and Usability Research: Current Status and Future Prospects," in Encyclopedia of Human Computer Interaction (30 December 2005), Key: citeulike:3431568, 2006, pp. 211–219.

[6] Vaibhav Nangare, Utkarsha Samant, Sonit Rabha, "Controlling Mouse Motions Using Eye Blinks, Head Movements and Voice Recognition," in International Journal of Scientific and Research Publications, Volume 6, Issue 3, March 2016.

[7] Anamika Mali, Ritisha Chavan, Priyanka Dhanawade, "Optimal System for Manipulating Mouse Pointer through Eyes," in International Research Journal of Engineering and Technology (IRJET), March 2016.

[8] Xuebai Zhang, Xiaolong Liu, Shyan-Ming Yuan, Shu-Fan Lin, "Eye Tracking Based Control System for Natural Human-Computer Interaction," in Hindawi Computational Intelligence and Neuroscience, Volume 2017, Article ID 5739301, 9 pages.

[9] Tereza Soukupová and Jan Čech, "Real-Time Eye Blink Detection using Facial Landmarks," in 21st Computer Vision Winter Workshop, February 2016.

[10] Adrian Rosebrock, "Detect eyes, nose, lips, and jaw with dlib, OpenCV, and Python."

[11] Adrian Rosebrock, "Eye blink detection with OpenCV, Python, and dlib."

[12] C. Sagonas, G. Tzimiropoulos, S. Zafeiriou, M. Pantic, "300 Faces in-the-Wild Challenge: The first facial landmark localization Challenge," Proceedings of IEEE Int'l Conf. on Computer Vision (ICCV-W), 300 Faces in-the-Wild Challenge (300-W), Sydney, Australia, December 2013.

2022, IRJET | Impact Factor value: 7.529 | ISO 9001:2008 Certified Journal