International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056


…², Prateek C³, Keerthana P B⁴, Nishanth B⁵

¹Professor, Dept. of Computer Science Engineering, JSS Science & Technology University, Mysore, India 570006
²,³,⁴,⁵BE Student, Dept. of Computer Science Engineering, JSS Science & Technology University, Mysore, India 570006

Abstract: Rain removal is a technique that detects rain-streak pixels, removes them, and restores the background in the removed areas. The goal of this paper is to remove rain from videos with dynamic backgrounds and also to remove the hazy effect caused by rain. We propose a hybrid model that combines the ideas of the SPAC-CNN and J4R-Net methods to improve the removal of rain streaks from video. The SPAC-CNN stage has two parts, optimal temporal matching and sorted spatial-temporal matching, which produce two tensors. These tensors are prepared as input features to J4R-Net, which consists of a CNN extractor, a degradation classification network, a fusion network, a rain removal network, a reconstruction network and, finally, a JRC network. Performance is measured using PSNR, which reaches 28.01 dB after 100 training epochs. Visual inspection shows much cleaner rain removal, especially for highly dynamic scenes with dense, opaque precipitation captured from a fast-moving camera.

Key Words: Rain Removal, CNN, RNN, J4R-Net, SPAC-CNN, PSNR.

The purpose of this project is to remove rain from videos without blurring the objects. The algorithm helps design a system that removes rain from videos to facilitate video surveillance and improve various vision-based algorithms. The application should be able to capture real-time video that can then be used for tasks such as object detection, tracking, segmentation and recognition. It should be able to capture video in both steady and dynamic weather conditions. The final outcome of the application is a dynamic video with the rain streaks removed and without any reduction in video quality.

This product can be used in daily life as well as for military and investigative purposes. In daily life, a mobile user can use it to record better videos in the rain, and it can be used with drones, GoPro cameras and similar devices to shoot movies or videos for personal use. It can be used for traffic surveillance, to read vehicle license plates and people's faces in traffic while it is raining. It can also be used with home CCTV systems to record clear video even in the rain.

In video restoration by rain-streak removal, we mainly work with rainy videos that are dynamic, where both the background and the foreground objects are moving. We build a model that removes the rain streaks from such videos while preserving the details of the objects in the rainy regions.

Rain removal algorithms can be classified into single-image rain removal and video-based rain removal. Single-image rain removal can be done with various algorithms, such as CNNs, deep detail networks and joint rain detection. These methods are easy to implement with known algorithms, but single-image rain removal has fewer real-world applications. Removing rain streaks from dynamically moving video, by contrast, is the field of interest here, and it involves additional parameters, such as the motion between frames, that must be considered.

The paper “Should We Encode Rain Streaks in Video as Deterministic or Stochastic?” (2017) [4], from the School of Mathematics and Statistics, Xi’an Jiaotong University, uses the P-MoG model to encode rain streaks as stochastic knowledge, formulated as a patch-based mixture of Gaussians. The EM algorithm is then used to optimize the parameters and solve the P-MoG model. Finally, post-processing is performed to enforce continuity on the moving-object layer. However, this work considers only static backgrounds with moving objects and does not address rain removal with a moving camera.
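The core of the P-MoG idea, modeling vectorized rain patches as a mixture of Gaussians and fitting it with EM, can be sketched as follows. This is a minimal, generic EM for a zero-mean Gaussian mixture over flattened patches, written with NumPy under the assumption that patches are already extracted into an (N, d) array; it is not the full model of [4], which also includes background and moving-object layers, and the function name and parameters are hypothetical.

```python
import numpy as np

def fit_zero_mean_mog(patches, n_components=3, n_iter=50, eps=1e-6, seed=0):
    """Fit a zero-mean Gaussian mixture to vectorized patches with EM.

    patches: array of shape (N, d), one flattened patch per row.
    Returns mixing weights (K,), covariances (K, d, d) and the
    log-likelihood recorded at each E-step.
    """
    rng = np.random.default_rng(seed)
    n, d = patches.shape
    weights = np.full(n_components, 1.0 / n_components)
    # Start every component from a randomly rescaled global covariance.
    base = patches.T @ patches / n + eps * np.eye(d)
    covs = np.stack([base * s for s in rng.uniform(0.5, 1.5, n_components)])
    history = []
    for _ in range(n_iter):
        # E-step: log(pi_k) + log N(x_n | 0, Sigma_k) for every patch and component.
        log_prob = np.empty((n, n_components))
        for k in range(n_components):
            _, logdet = np.linalg.slogdet(covs[k])
            maha = np.einsum("ni,ij,nj->n", patches, np.linalg.inv(covs[k]), patches)
            log_prob[:, k] = np.log(weights[k]) - 0.5 * (d * np.log(2 * np.pi) + logdet + maha)
        log_norm = np.logaddexp.reduce(log_prob, axis=1)
        history.append(float(log_norm.sum()))
        resp = np.exp(log_prob - log_norm[:, None])  # responsibilities; rows sum to 1
        # M-step: re-estimate mixing weights and zero-mean covariances.
        nk = resp.sum(axis=0) + eps
        weights = nk / nk.sum()
        for k in range(n_components):
            covs[k] = (patches * resp[:, k:k + 1]).T @ patches / nk[k] + eps * np.eye(d)
    return weights, covs, history
```

For example, fitting two components to a mix of low-variance and high-variance patches separates them into a narrow and a wide component, and the recorded log-likelihood should rise over the iterations.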

In “A Novel Tensor-based Video Rain Streaks Removal Approach via Utilizing Discriminatively Intrinsic Priors” (2017) [5], the problem is formulated by combining several priors and regularizers, and the ADMM algorithm is then used to optimize the five auxiliary tensors. One limitation of this method is that when the rain direction is far from the y-axis, the video or image has to be rotated, and for digital data the rotation causes distortion. Another open problem is how to handle the remaining rain artifacts.
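The ADMM pattern used in [5], introducing auxiliary variables so that each sub-problem becomes easy, can be seen on a much smaller problem. The sketch below solves 1-D total-variation denoising, where an auxiliary variable z takes the place of the first differences of the signal; it is a generic ADMM example under that assumption, not the five-tensor model of [5], and the function names and parameter values are hypothetical.

```python
import numpy as np

def soft_threshold(v, t):
    """Proximal operator of t * ||v||_1 (element-wise shrinkage)."""
    return np.sign(v) * np.maximum(np.abs(v) - t, 0.0)

def tv_denoise_admm(y, lam=0.5, rho=1.0, n_iter=500):
    """Solve min_x 0.5*||x - y||^2 + lam*||D x||_1 with scaled-form ADMM.

    The auxiliary variable z stands in for D x (first differences), so each
    sub-problem is easy: a linear solve for x and a shrinkage for z.
    Returns the estimate x and the final primal residual ||D x - z||.
    """
    n = y.size
    D = np.diff(np.eye(n), axis=0)  # (n-1, n) forward-difference operator
    A = np.eye(n) + rho * D.T @ D   # x-update system matrix, fixed across iterations
    z = np.zeros(n - 1)
    u = np.zeros(n - 1)             # scaled dual variable
    for _ in range(n_iter):
        x = np.linalg.solve(A, y + rho * D.T @ (z - u))
        z = soft_threshold(D @ x + u, lam / rho)
        u = u + D @ x - z
    return x, float(np.linalg.norm(D @ x - z))
```

The same three-step loop (solve for the main variable, shrink the auxiliary variable, update the dual) carries over when there are several auxiliary tensors, one per regularizer.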

Kshitiz Garg and Shree K. Nayar proposed a method in which a photometric model is assumed [3]. The photometric model assumes that raindrops have almost the same size and velocity, and that pixels lying on the same rain streak have the same irradiance, because the brightness of a drop is only weakly affected by the background. These assumptions lead to a large number of detection errors: the algorithm fails to identify out-of-focus rain streaks and streaks on a brighter background. Thus, not all rain streaks obey the photometric constraints.

Volume: 09 Issue: 07 | July 2022 www.irjet.net p-ISSN: 2395-0072 © 2022, IRJET | Impact Factor value: 7.529 | ISO 9001:2008 Certified Journal | Page 1033
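A common first step in this photometric line of work is to flag pixels that brighten in frame n relative to both neighboring frames by roughly the same amount, since a drop covers a pixel only briefly and the background is visible in the adjacent frames. The NumPy sketch below shows that three-frame test for grayscale frames; the threshold `c`, the tolerance `tol` and the function name are hypothetical, and the subsequent photometric-constraint check is omitted.

```python
import numpy as np

def rain_candidates(prev_frame, frame, next_frame, c=3.0, tol=1.0):
    """Flag pixels whose brightness spikes in `frame` relative to both neighbors.

    A pixel is a candidate when I_n - I_(n-1) >= c, I_n - I_(n+1) >= c, and the
    two differences agree within `tol`. Returns a boolean mask.
    """
    cur = frame.astype(np.float64)
    d_prev = cur - prev_frame.astype(np.float64)
    d_next = cur - next_frame.astype(np.float64)
    return (d_prev >= c) & (d_next >= c) & (np.abs(d_prev - d_next) <= tol)
```

A pixel that stays bright in the next frame (for example, part of a moving object) fails the second condition, which is how this test separates short-lived streaks from longer-lived changes.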

J. Ramya, S. Dhanalakshmi and S. Karthick [6] proposed a new approach to video-based rain detection and removal that formulates rain removal as a video decomposition problem based on an analysis and synthesis (A&S) algorithm. Analysis and synthesis filters are often implemented with hierarchical subsampling, which results in a pyramid. Before applying a conventional image decomposition technique, the image is first decomposed into low-frequency and high-frequency parts using a bilateral filter, and the high-frequency part is then divided into rainy and non-rainy components.
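The bilateral-filter step described above, splitting a frame into a low-frequency part and a high-frequency residual, can be sketched with NumPy as below. This is a naive bilateral filter for a grayscale image in [0, 1]; the window radius, the two sigmas and the function names are hypothetical, and the later separation of the high-frequency part into rainy and non-rainy components is not shown.

```python
import numpy as np

def bilateral_filter(img, radius=2, sigma_s=2.0, sigma_r=0.1):
    """Naive bilateral filter: weights combine spatial and intensity distance."""
    img = img.astype(np.float64)
    padded = np.pad(img, radius, mode="reflect")
    h, w = img.shape
    num = np.zeros_like(img)
    den = np.zeros_like(img)
    for dy in range(-radius, radius + 1):
        for dx in range(-radius, radius + 1):
            shifted = padded[radius + dy:radius + dy + h, radius + dx:radius + dx + w]
            spatial = np.exp(-(dx * dx + dy * dy) / (2 * sigma_s ** 2))
            similarity = np.exp(-((shifted - img) ** 2) / (2 * sigma_r ** 2))
            weight = spatial * similarity
            num += weight * shifted
            den += weight  # the center pixel always contributes weight 1, so den > 0
    return num / den

def split_frequencies(img, **kwargs):
    """Return (low, high) with low = bilateral-filtered image and high = img - low."""
    low = bilateral_filter(img, **kwargs)
    return low, img - low
```

Because the bilateral filter preserves strong edges, which structures end up in the high-frequency part depends heavily on `sigma_r`; a larger value pushes more thin, bright structures such as streaks into the residual.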

Kyu-Ho Lee, Eunji Ryu and Jong-Ok Kim (Member, IEEE), School of Electrical Engineering, Korea University, Seoul 02841, South Korea [7], proposed a new deep learning method for video rain removal based on a recurrent neural network (RNN) architecture. Instead of focusing on the different shapes of rain streaks as conventional methods do, this work focuses on the changing behaviour of rain streaks. To achieve this, progressive rain-streak images were generated from real rain videos and fed to the network sequentially, in descending order of rain amount.

Multiple images with different amounts of rain streaks were used as RNN inputs for more efficient identification and subsequent removal of rain streaks. Experimental results show that this method is suitable for a wide range of rainy images.
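One plausible way to build a sequence of progressively less rainy images from a real clip, assuming a static camera, is temporal median filtering over growing windows: the wider the window, the more transient streaks are suppressed. The sketch below illustrates that idea only; the window sizes and the function name are hypothetical, it is not claimed to be the exact procedure of [7], and the recurrent network that consumes the sequence is not shown.

```python
import numpy as np

def progressive_rain_sequence(frames, center, window_sizes=(1, 3, 5)):
    """Build progressively less rainy versions of frames[center].

    frames: array (T, H, W) from a static camera. A temporal median over a
    wider window suppresses more transient rain streaks, so the outputs are
    ordered from most rainy (smallest window) to least rainy (largest window).
    """
    outputs = []
    for size in sorted(window_sizes):
        half = size // 2
        lo, hi = max(0, center - half), min(len(frames), center + half + 1)
        outputs.append(np.median(frames[lo:hi], axis=0))
    return outputs
```

Feeding these outputs to a recurrent model in list order matches the "descending rain" ordering described above. With a moving camera or large moving regions, the median would also blur the moving content, which is consistent with the limitation listed for this method in the comparison table.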

| Title of the paper | Year | Method | Limitations |
|---|---|---|---|
| Detection and Removal of Rain from Videos | 2013 | Photometric model | The algorithm could not identify defocused rain streaks or streaks on brighter backgrounds. |
| Should We Encode Rain Streaks in Video as Deterministic or Stochastic? | 2017 | P-MoG model | It does not consider the rain removal problem with a moving camera. |
| A Novel Tensor-based Video Rain Streaks Removal Approach via Utilizing Discriminatively Intrinsic Priors | 2017 | Priors and regularizers, ADMM algorithm | If the rain direction is far from the y-axis, it can be handled with video/image rotation, but for digital data the rotation causes distortion. Handling remaining rain artifacts. |
| Robust Video Content Alignment and Compensation for Rain Removal in a CNN Framework | 2018 | SPAC-CNN algorithm | Less focus on reconstruction of regions with rain streaks. |
| Erase or Fill? Deep Joint Recurrent Rain Removal and Reconstruction in Videos | 2018 | Joint Recurrent Rain Removal and Reconstruction Network | Less focus on fast-moving cameras. |
| D3R-Net: Dynamic Routing Residue Recurrent Network for Video Rain Removal | 2019 | Dynamic Routing Residue Recurrent Network (D3R-Net) | Less focus on fast-moving cameras. |
| Progressive Rain Removal via a Recurrent Convolutional Network for Real Rain Videos | 2020 | Temporal filtering, RNN | Producing suitable progressive rain images would be difficult when moving regions are wider in the image. It is not suitable for hazy effects caused by rain. |


This step involves collecting video datasets that contain both rainy and rain-free videos. After collection, the datasets have to be processed to facilitate training. This step has high priority.

Videos are collected from various sources on the Internet, including videos with rain and the corresponding videos without rain. The collected videos may differ in format, structure, size and representation, so for ease of training all the video datasets have to be brought to the same format and structure.
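Bringing every clip to the same format, as described above, typically means fixing the channel layout, resolution and value range of the decoded frames. The sketch below covers only that last step for frames that are already decoded into NumPy arrays; the 128 x 128 target size, the [0, 1] scaling, nearest-neighbor resizing and the function names are assumptions, not the settings used in this project.

```python
import numpy as np

TARGET_HW = (128, 128)  # hypothetical common frame size

def normalize_frame(frame, target_hw=TARGET_HW):
    """Convert one decoded frame to float32 RGB in [0, 1] at a fixed size.

    frame: uint8 array (H, W) grayscale or (H, W, 3) RGB.
    Uses nearest-neighbor resampling to stay dependency-free.
    """
    if frame.ndim == 2:
        frame = np.repeat(frame[:, :, None], 3, axis=2)  # grayscale -> 3 channels
    h, w = frame.shape[:2]
    th, tw = target_hw
    rows = np.arange(th) * h // th  # source row for each output row
    cols = np.arange(tw) * w // tw  # source column for each output column
    resized = frame[rows][:, cols]
    return resized.astype(np.float32) / 255.0

def normalize_video(frames, target_hw=TARGET_HW):
    """Stack normalized frames into one (T, th, tw, 3) float32 array."""
    return np.stack([normalize_frame(f, target_hw) for f in frames])
```

In practice an interpolating resize (for example, from an image library) would usually replace the nearest-neighbor indexing, but the output contract, a fixed-size float array per clip, stays the same.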

For this project, we use the LasVR dataset and the SPAC-CNN dataset together with RainSynLight25. Together they provide a training set of around 12,000 frames taken from around 900 videos and a test set of around 1,400 frames taken from around 100 videos.

We use two types of dataset to train the rain-streak removal model. The first is a synthetic dataset, where rain streaks are added to videos with a normal (rain-free) background. The second is a real dataset, consisting of real videos that contain rain streaks.
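For the synthetic dataset, each clean frame needs a rainy counterpart. A very simple way to render rain onto a clean grayscale frame is sketched below: sparse random seeds are stretched vertically into streaks and added to the frame. The streak model, the parameter values and the function name are hypothetical; published synthetic rain datasets are generally rendered with more realistic models.

```python
import numpy as np

def add_synthetic_rain(frame, density=0.01, streak_length=9, intensity=0.6, seed=None):
    """Overlay simple vertical rain streaks on a grayscale frame in [0, 1].

    Random sparse seeds are smeared along the vertical axis with a box kernel
    to mimic motion-blurred drops, then added to the frame and clipped.
    Returns (rainy_frame, rain_layer).
    """
    rng = np.random.default_rng(seed)
    seeds = (rng.random(frame.shape) < density).astype(np.float64)
    kernel = np.ones(streak_length) / streak_length
    # Convolve every column with the 1-D kernel to stretch points into streaks.
    streaks = np.apply_along_axis(lambda col: np.convolve(col, kernel, mode="same"), 0, seeds)
    rain = intensity * streaks / max(streaks.max(), 1e-8)
    return np.clip(frame + rain, 0.0, 1.0), rain
```

Returning the rain layer alongside the rainy frame keeps the ground truth available, which is what makes synthetic pairs useful for supervised training.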


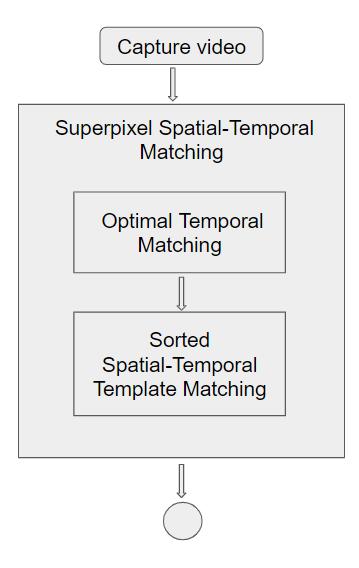

In this method, we combine the ideas of two methods. In the first, video content alignment is carried out at the superpixel (SP) level using two SP template matching operations, which produce two output tensors: the optimal temporal match tensor and the sorted spatial-temporal match tensor. An intermediate de-rained output is calculated by averaging the slices of the sorted spatial-temporal match tensor.
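The matching step can be illustrated with square patches instead of superpixels. The sketch below, a simplification of the approach in [1], matches one patch of the current frame against a small search window in each neighboring frame. It keeps the best match per frame (an analogue of the optimal temporal match tensor), keeps the best `top_k` matches over all frames sorted by error (an analogue of the sorted spatial-temporal match tensor), and averages those slices to form the intermediate de-rained patch. Square patches, a plain sum of squared differences that does not exclude rain pixels, and all names and parameters are assumptions.

```python
import numpy as np

def match_patch(frame_t, neighbors, y, x, size=8, radius=3, top_k=4):
    """Template-match one square patch of frame_t against neighboring frames.

    Returns:
      optimal:   (len(neighbors), size, size) best match from each neighbor frame
      sorted_st: (top_k, size, size) best matches over all frames and offsets,
                 ordered by increasing sum of squared differences
      derain:    mean of the sorted_st slices (intermediate de-rained patch)
    """
    template = frame_t[y:y + size, x:x + size].astype(np.float64)
    candidates = []  # (error, patch) for every frame and every valid offset
    optimal = []
    for nb in neighbors:
        best = None
        for dy in range(-radius, radius + 1):
            for dx in range(-radius, radius + 1):
                yy, xx = y + dy, x + dx
                if yy < 0 or xx < 0 or yy + size > nb.shape[0] or xx + size > nb.shape[1]:
                    continue  # skip offsets that fall outside the neighbor frame
                patch = nb[yy:yy + size, xx:xx + size].astype(np.float64)
                err = float(np.sum((patch - template) ** 2))
                candidates.append((err, patch))
                if best is None or err < best[0]:
                    best = (err, patch)
        optimal.append(best[1])
    candidates.sort(key=lambda c: c[0])
    sorted_st = np.stack([p for _, p in candidates[:top_k]])
    return np.stack(optimal), sorted_st, sorted_st.mean(axis=0)
```

Averaging several well-aligned matches works because a rain streak rarely occupies the same scene location in every neighboring frame, so the average is dominated by background content.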

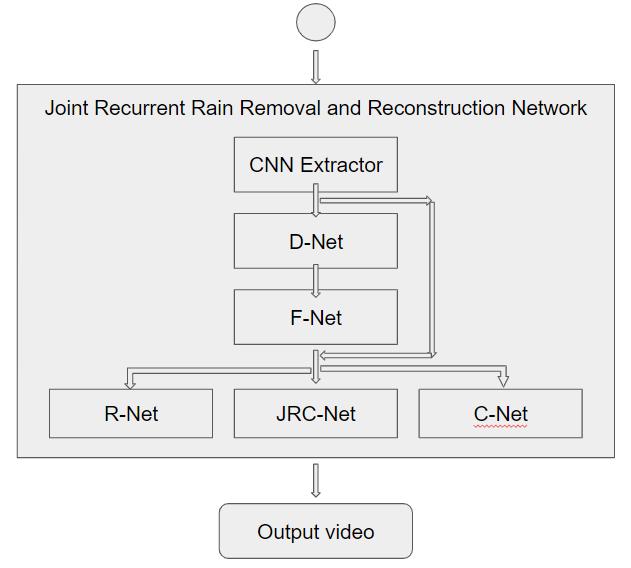

Second, these two tensors are prepared as input features to a Joint Recurrent Rain Removal and Reconstruction Network (J4R-Net). The J4R-Net architecture consists of a single-frame CNN extractor, a degradation classification network, a fusion network, a rain removal network and a reconstruction network, which are finally combined into the joint rain removal and reconstruction network. A loss function is used to minimize the misalignment blur.
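One way to picture how the degradation classification can guide the final output is a per-pixel blend: pixels classified as fully occluded by rain take the reconstruction branch, and pixels with only additive streaks take the rain removal branch. The NumPy sketch below shows such soft blending with plain arrays standing in for the network outputs; it is a hypothetical simplification, not the exact fusion used in J4R-Net [2], and the function name is illustrative.

```python
import numpy as np

def fuse_outputs(rain_removed, reconstructed, occlusion_prob):
    """Blend two branch outputs per pixel using a degradation classification map.

    rain_removed:   (H, W) or (H, W, C) output of the rain removal branch
    reconstructed:  same shape, output of the reconstruction branch
    occlusion_prob: (H, W) probability that a pixel is fully occluded by rain
    """
    p = np.clip(occlusion_prob, 0.0, 1.0)
    if rain_removed.ndim == 3:
        p = p[:, :, None]  # broadcast the mask over color channels
    return p * reconstructed + (1.0 - p) * rain_removed
```

A soft (probabilistic) mask rather than a hard 0/1 decision keeps the blend differentiable, which matters when the classifier and both branches are trained jointly.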

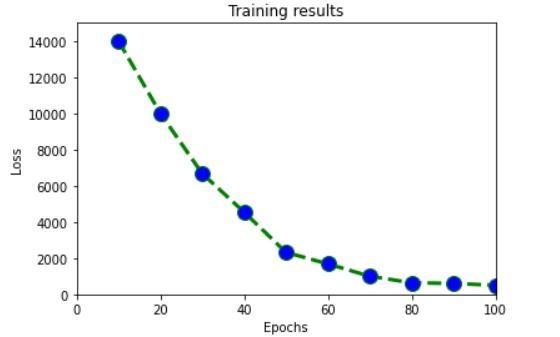

The hyperparameters used in the implementation are a learning rate of 1e-3 and 100 epochs, with mean squared error (MSE) as the loss function and Adam as the optimizer. Training used 72 processors and 2 GPUs. The network structure is a fusion of the structures described in [1] and [2].
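The optimizer and loss named above can be illustrated on a toy problem. The sketch below implements the standard Adam update (with the usual defaults beta1 = 0.9, beta2 = 0.999, eps = 1e-8) and uses it to minimize the MSE of a linear model in NumPy, with the learning rate of 1e-3 and the 100 epochs stated above. The linear model, the data and the function name are stand-ins for the actual network, which would normally be trained with a deep learning framework's built-in Adam.

```python
import numpy as np

def train_linear_adam(x, y, lr=1e-3, epochs=100, beta1=0.9, beta2=0.999, eps=1e-8):
    """Fit y ~ x @ w + b with full-batch Adam on the mean squared error.

    Returns the learned parameters and the per-epoch MSE history.
    """
    w = np.zeros(x.shape[1])
    b = 0.0
    m_w, v_w = np.zeros_like(w), np.zeros_like(w)
    m_b, v_b = 0.0, 0.0
    history = []
    for t in range(1, epochs + 1):
        err = x @ w + b - y
        history.append(float(np.mean(err ** 2)))
        grad_w = 2.0 * x.T @ err / len(y)  # gradient of the MSE w.r.t. w
        grad_b = 2.0 * err.mean()          # gradient of the MSE w.r.t. b
        # Adam: exponential moving averages of the gradient and its square.
        m_w = beta1 * m_w + (1 - beta1) * grad_w
        v_w = beta2 * v_w + (1 - beta2) * grad_w ** 2
        m_b = beta1 * m_b + (1 - beta1) * grad_b
        v_b = beta2 * v_b + (1 - beta2) * grad_b ** 2
        # Bias correction for the zero-initialized moments.
        m_w_hat, v_w_hat = m_w / (1 - beta1 ** t), v_w / (1 - beta2 ** t)
        m_b_hat, v_b_hat = m_b / (1 - beta1 ** t), v_b / (1 - beta2 ** t)
        w = w - lr * m_w_hat / (np.sqrt(v_w_hat) + eps)
        b = b - lr * m_b_hat / (np.sqrt(v_b_hat) + eps)
    return w, b, history
```

Because Adam normalizes each step by the running gradient magnitude, every parameter moves by roughly `lr` per step early on, so with 1e-3 and 100 epochs the total movement per parameter is on the order of 0.1; larger networks rely on many mini-batch steps per epoch to make progress.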


The graph above plots the L2 loss on the y-axis against the number of epochs on the x-axis. The curve decreases: there is a sharp drop at first, after which it gradually stabilizes, and the loss is around 500 at epoch 100.

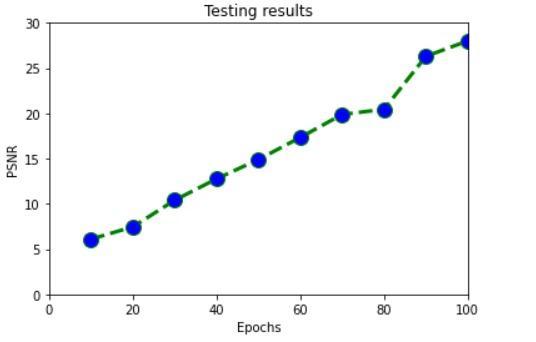

Peak signal-to-noise ratio (PSNR) is the ratio between the maximum possible power of a signal and the power of the corrupting noise that affects the fidelity of its representation. It is usually expressed in decibels (dB).
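In formula form, PSNR = 10 log10(MAX^2 / MSE), where MAX is the largest possible pixel value (255 for 8-bit images, 1.0 for images scaled to [0, 1]) and MSE is the mean squared error between the restored frame and the rain-free ground truth. A minimal NumPy implementation is shown below; the function name is illustrative, and for a video the per-frame values would typically be averaged.

```python
import numpy as np

def psnr(reference, estimate, max_value=255.0):
    """PSNR in dB: 10 * log10(MAX^2 / MSE). Returns inf for identical images."""
    ref = np.asarray(reference, dtype=np.float64)
    est = np.asarray(estimate, dtype=np.float64)
    if ref.shape != est.shape:
        raise ValueError("images must have the same shape")
    mse = np.mean((ref - est) ** 2)
    if mse == 0:
        return float("inf")
    return float(10.0 * np.log10(max_value ** 2 / mse))
```

For example, an all-zero image compared with an image of constant value 0.1 (with `max_value=1.0`) has an MSE of 0.01, giving 20 dB up to floating-point rounding. Each additional 10 dB corresponds to a tenfold reduction in MSE.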

The graph above plots PSNR on the y-axis against the number of epochs on the x-axis. The curve increases gradually and reaches 28.01 dB at epoch 100.

After a video is uploaded, the deep learning model runs on it. Once all frames have been processed, the website displays the layout shown above: the input video on the left and the output video with the rain removed on the right.

In this paper, we proposed a hybrid rain model that depicts both rain streaks and occlusions, and handles torrential rainfall with opaque streak occlusions captured from a fast-moving camera. A CNN and an RNN were built to seamlessly integrate rain degradation classification, rain removal based on spatial texture appearance, and background detail reconstruction based on temporal coherence. The CNN was designed and trained to compensate effectively for the misalignment blur caused by the water exclusion operations. The entire system demonstrates its efficiency and robustness in a series of experiments, significantly outperforming state-of-the-art methods.

The proposed network is slow for high-frame-rate (high-fps) videos. In future work, a MobileNet-based enhancement could be used to speed up the network.
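MobileNet obtains most of its speed-up from depthwise separable convolutions, which replace a standard k x k convolution with a per-channel k x k (depthwise) convolution followed by a 1 x 1 (pointwise) convolution. The multiply-accumulate arithmetic below follows the standard cost model for these layers and shows that the cost ratio is 1/N + 1/k^2 for N output channels. The layer sizes used in the check are illustrative, not taken from the proposed network, and the function name is hypothetical.

```python
def conv_cost(k, m, n, f):
    """Multiply-accumulate counts for one layer (stride 1, 'same' padding).

    k: kernel size, m: input channels, n: output channels, f: feature-map side.
    Returns (standard convolution cost, depthwise separable convolution cost).
    """
    standard = k * k * m * n * f * f
    depthwise = k * k * m * f * f  # one k x k filter per input channel
    pointwise = m * n * f * f      # 1 x 1 convolution mixing the channels
    return standard, depthwise + pointwise
```

For a 3 x 3 layer with 256 input and output channels, the separable version needs about 11.5% of the standard layer's operations (1/256 + 1/9), which is why swapping such layers into the network is a natural direction for speeding it up.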

[1] “Robust Video Content Alignment and Compensation for Rain Removal in a CNN Framework” by Jie Chen, Cheen-Hau Tan, Junhui Hou, Lap-Pui Chau and He Li, School of Electrical and Electronic Engineering, Nanyang Technological University, Singapore.

[2] “Erase or Fill? Deep Joint Recurrent Rain Removal and Reconstruction in Videos” by Jiaying Liu, Wenhan Yang, Shuai Yang and Zongming Guo, Institute of Computer Science and Technology, Peking University, Beijing, P.R. China.

[3] “Detection and Removal of Rain from Videos” by Kshitiz Garg and Shree K. Nayar, Department of Computer Science, Columbia University, New York 10027.

[4] “Should We Encode Rain Streaks in Video as Deterministic or Stochastic?” by Wei Wei, Lixuan Yi, Qi Xie, Qian Zhao, Deyu Meng and Zongben Xu, School of Mathematics and Statistics, Xi’an Jiaotong University.

[5] “A Novel Tensor-based Video Rain Streaks Removal Approach via Utilizing Discriminatively Intrinsic Priors” by Tai-Xiang Jiang, Ting-Zhu Huang, Xi-Le Zhao, Liang-Jian Deng and Yao Wang, School of Mathematical Sciences, University of Electronic Science and Technology of China.

[6] “An Efficient Rain Detection and Removal from Videos using Rain Pixel Recovery Algorithm” by J. Ramya (UG Scholar), S. Dhanalakshmi (Associate Professor) and Dr. S. Karthick (Professor and Dean), Dept. of CSE, SNS College of Technology, Coimbatore, India.

[7] “Progressive Rain Removal via a Recurrent Convolutional Network for Real Rain Videos” by Kyu-Ho Lee, Eunji Ryu and Jong-Ok Kim (Member, IEEE), School of Electrical Engineering, Korea University, Seoul 02841, South Korea, November 19, 2020.