International Research Journal of Engineering and Technology (IRJET) e ISSN: 2395 0056

SIGN LANGUAGE INTERFACE SYSTEM FOR HEARING IMPAIRED PEOPLE

*1 Professor, Department of Computer Science & Engineering, Presidency University, Bengaluru, Karnataka, India.
*2,3,4,5 Student, Department of Computer Science & Engineering, Presidency University, Bengaluru, Karnataka, India.

Abstract - Communication is a fundamental form of interaction, and communicating with people who have hearing impairments has proven to be a significant challenge. Sign language is a critical tool for bridging the communication gap between hearing and deaf people. However, communicating through sign language is difficult, and people with hearing impairments struggle to share their feelings and opinions with people who do not know the language. Addressing this issue requires a versatile and robust product. We propose a system that aims to translate hand signals into their corresponding words as accurately as possible, using data we collected together with data from other research. This can be accomplished with various machine learning algorithms and techniques for classifying data into words. For our proposed system, we use a convolutional neural network (CNN). The system captures multiple images of various hand gestures using a webcam, then predicts and displays the corresponding letters/words for the gestures it has been trained on, together with audio output. Once an image is captured, it goes through a series of computer vision steps, including grayscale conversion, masking, and dilation. The main goal of this project is to remove the communication barrier between deaf or speech-impaired people and hearing people.

Key Words: Sign Language, Convolutional Neural Network (CNN), Machine Learning, Hand Gesture, Hearing Impairments.

1. INTRODUCTION

Communication has been, and will continue to be, one of the most important necessities for basic and social living. People with hearing disabilities communicate with each other using sign language, which is difficult for people who do not sign to grasp. The main goal of this work is to overcome this barrier by using sign language recognition to establish communication between deaf and hearing people. As future scope, extending this project to form words and commonplace phrases will not only help deaf and speech-impaired people communicate with people around the world, but will also aid the development of autonomous systems that understand and assist them.

The majority of countries have developed their own standards and interpretations of the various sign gestures. For example, a letter in American Sign Language (ASL) will not represent the same thing as a letter in Indian Sign Language (ISL). This is a major drawback for many countries. While this emphasizes diversity, it also highlights the complexity of sign languages. A gesture recognition model must be well versed in all gestures in order to achieve reasonable accuracy.

2. EXISTING SYSTEM

In [1], the authors proposed a system for sign language recognition using a CNN. It involves a series of steps in which, after pre-processing the image, the trained CNN model compares it to previously captured images and displays the predicted text for the image.

In [2], the authors used a previously trained, user-defined dataset. With the help of a CNN model, they trained not just letters but also a few specific words, which is helpful in real-time use. For recognizing words, they used ConvNets, which handle 2D or 3D data as input, to attain maximum accuracy. This model is also capable of handling large amounts of data.

The system in [1] was only able to predict single letters, whereas the system proposed in [2] went a step further and was able to predict words.

To attain maximum accuracy, [3] introduced image filtering. This improves the accuracy of identifying symbols under various low-light conditions. Here, before the image undergoes saturation and greyscale processing, filtering is applied first to find the symbol shown by the hands.

Swapna and S Jaya [4] carried out fundamental research on the different approaches to sign language recognition, such as image-based sign language translators, glove-based sign language translators, and sensor gloves. After considering all of these approaches, they used 5DT (Fifth Dimension Technologies) gloves in their proposed system. With the help of KNN, decision tree, and neural network classifiers, they were able to achieve the required accuracy and recognition.

Volume: 09 Issue: 07 | July 2022 www.irjet.net p ISSN: 2395 0072 © 2022, IRJET | Impact Factor value: 7.529 | ISO 9001:2008 Certified Journal |

[5] proposed a system that uses HSV. Once the image is captured using a webcam, it is converted to HSV to detect and eliminate the background, after which segmentation is performed on the skin-tone region. OpenCV is used to remove the differences between the captured images, which are resized to the same size. The image then undergoes dilation and erosion using an elliptical kernel. A CNN is applied for training and classification after the binary pixels are extracted from the frames.
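To make the dilation and erosion step concrete, the following is a minimal pure-Python sketch of binary morphology with an elliptical kernel. It is an illustration only, not the code of [5] or of this work: `elliptical_kernel`, `dilate`, and `erode` are hypothetical helper names, the mask is a plain list of lists of 0/1 values, and pixels outside the image are simply ignored at the borders.

```python
def elliptical_kernel(size):
    """Return an odd-sized 0/1 kernel covering the inscribed ellipse (hypothetical helper)."""
    r = size // 2
    return [[1 if dx * dx + dy * dy <= r * r else 0
             for dx in range(-r, r + 1)]
            for dy in range(-r, r + 1)]


def _morph(mask, kernel, reducer):
    """Apply `reducer` (max or min) over the kernel footprint around every pixel."""
    h, w = len(mask), len(mask[0])
    r = len(kernel) // 2
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            # Collect the pixels under the kernel, skipping positions outside the image.
            covered = [mask[y + ky - r][x + kx - r]
                       for ky, row in enumerate(kernel)
                       for kx, k in enumerate(row)
                       if k and 0 <= y + ky - r < h and 0 <= x + kx - r < w]
            out[y][x] = reducer(covered)
    return out


def dilate(mask, kernel):
    """Dilation: a pixel becomes 1 if any pixel under the kernel is 1."""
    return _morph(mask, kernel, max)


def erode(mask, kernel):
    """Erosion: a pixel stays 1 only if every pixel under the kernel is 1."""
    return _morph(mask, kernel, min)


# Toy example: one foreground pixel in a 5x5 mask.
mask = [[0] * 5 for _ in range(5)]
mask[2][2] = 1
kernel = elliptical_kernel(3)
grown = dilate(mask, kernel)      # the pixel grows into a 5-pixel cross
restored = erode(grown, kernel)   # erosion shrinks the cross back to one pixel
```

Dilation fills small holes in the hand mask, while erosion removes isolated noise pixels; applying them in sequence smooths the mask without changing its overall size much.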

3. PROPOSED SYSTEM

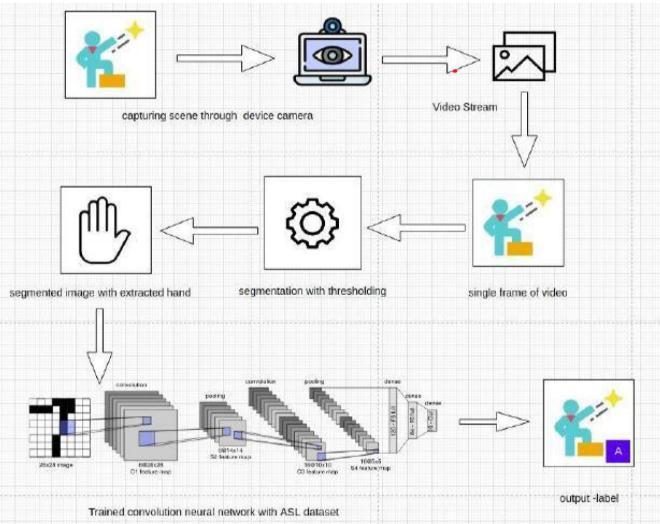

Our proposed system follows a sequence of steps in which it takes an image from a video feed and provides the output as text and audio.

In the first phase, the system captures an image from a video feed and provides it as input; subsequently, various image processing techniques are used to eliminate noise and background from the image. To avoid errors while training the CNN model, all collected pictures are resized to the same dimensions. We use RGB-to-HSV conversion to remove noise and background from the image, which also helps to improve image quality by adding brightness to the image. For hand gesture recognition, we use the CNN algorithm, which provides more accurate results than the KNN and decision tree algorithms. We also use a real-time sign language recognition system and a 2D CNN model, which enhances the model's accuracy. We have added additional images to our dataset to improve prediction.

Our method does not include any hand glove or sensor-based approaches, as they might make it difficult for people to use the device. We have also added an extra feature: the output, obtained in the form of text/letters, is converted into speech for better understanding and a better user interface.
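The resizing and RGB-to-HSV background removal described above can be sketched in a few lines. This is a minimal illustration using only the Python standard library (`colorsys`), not the OpenCV-based code used in this work; the function names and the `LOWER`/`UPPER` skin-tone bounds are hypothetical values chosen for the example and would need tuning on real data.

```python
import colorsys


def resize_nearest(img, out_h, out_w):
    """Nearest-neighbour resize so that every sample has the same dimensions."""
    in_h, in_w = len(img), len(img[0])
    return [[img[y * in_h // out_h][x * in_w // out_w] for x in range(out_w)]
            for y in range(out_h)]


def rgb_to_hsv_deg(pixel):
    """Convert an 8-bit (R, G, B) pixel to (hue in degrees, saturation 0..1, value 0..1)."""
    r, g, b = (c / 255.0 for c in pixel)
    h, s, v = colorsys.rgb_to_hsv(r, g, b)
    return h * 360.0, s, v


# Hypothetical skin-tone bounds (hue in degrees, saturation, value); assumptions only.
LOWER = (0.0, 0.15, 0.35)
UPPER = (50.0, 0.70, 1.00)


def hsv_mask(img, lower=LOWER, upper=UPPER):
    """Return a 0/1 mask that is 1 where all three HSV components lie within the bounds."""
    return [[1 if all(lo <= c <= hi
                      for c, lo, hi in zip(rgb_to_hsv_deg(p), lower, upper)) else 0
             for p in row]
            for row in img]


# Toy 2x2 image: a skin-like pixel, pure blue, black, and white.
image = [[(224, 172, 105), (0, 0, 255)],
         [(0, 0, 0), (255, 255, 255)]]
mask = hsv_mask(image)                   # only the skin-like pixel is kept
resized = resize_nearest(image, 4, 4)    # upsampled to a fixed 4x4 size
```

Note that libraries differ in how they scale HSV. OpenCV, for example, stores hue for 8-bit images in the range 0 to 179 rather than 0 to 360 degrees, so bounds must be expressed in the convention of the library in use.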

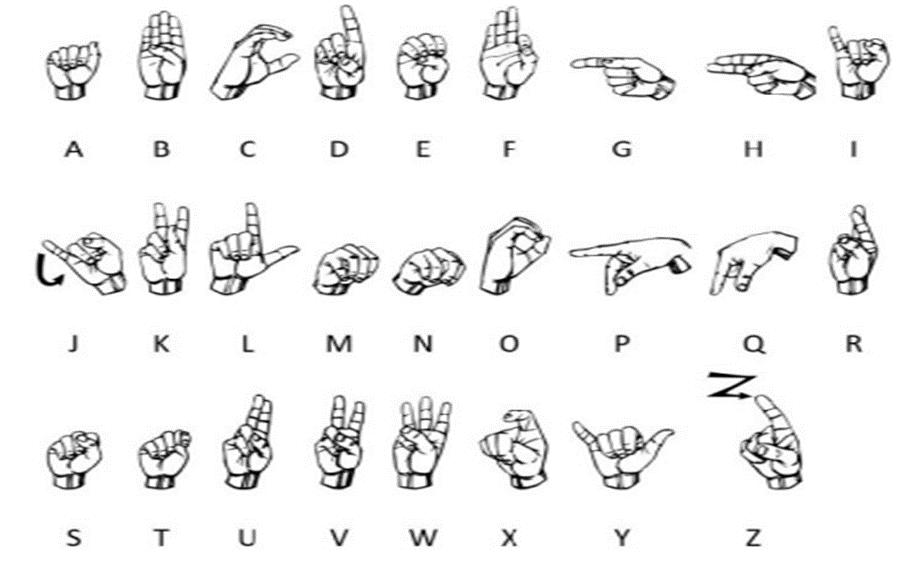

Fig 1: System Flow Chart

4. IMPLEMENTATION

The sign language recognition system that we propose detects diverse hand motions by recording video and splitting it into frames. The pixels are then segmented, and the resulting picture is submitted to the learning algorithm for assessment. As a result, our method is more resilient and precise in obtaining correct captions for letters. A convolutional neural network (CNN) is used for image classification. HSV (Hue, Saturation, Value) and greyscale conversion techniques are used for image pre-processing. Fig 2 shows the hand symbols/gestures used in our system.

Fig 2: The American Sign Language Symbols

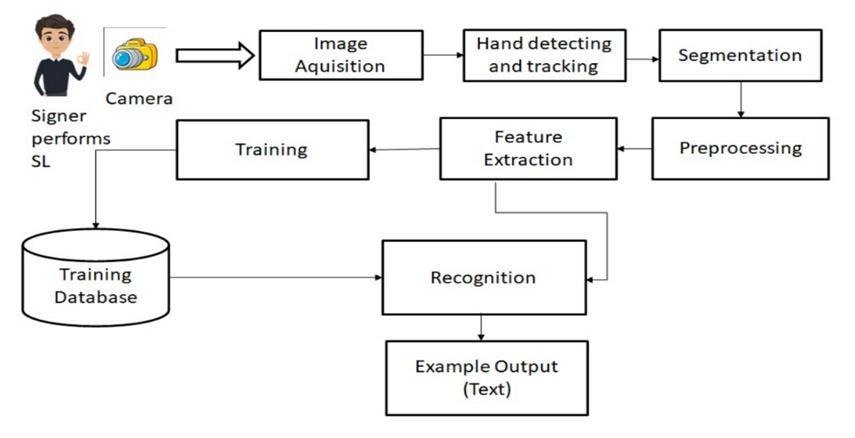

Fig 3: System Workflow

4.1. IMAGE ACQUISITION

Image acquisition is the process of getting an image from a source, usually a hardware-based source, for image processing. In our project, the hardware-based source is a web camera. Since no processing can be done without an image, obtaining it is the initial stage in the workflow sequence. The acquired image has not been modified in any way; it is simply a raw image. We use the OpenCV Python library to capture images/video from the webcam. Here we capture 1200 sample images and store them in our database.
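The collection loop can be sketched as follows. To keep the example runnable without a camera, the frame source is passed in as a callable that returns an `(ok, frame)` pair, which is the same shape of result that OpenCV's `cv2.VideoCapture.read` returns. The function name `collect_samples` and the `max_failures` guard are hypothetical additions for illustration; only the sample count of 1200 comes from the text.

```python
def collect_samples(read_frame, n_samples=1200, max_failures=50):
    """Collect up to `n_samples` raw frames from `read_frame`.

    `read_frame` is any callable returning (ok, frame). Failed reads are
    skipped, and the loop gives up after `max_failures` failed reads so that
    a disconnected camera cannot block forever.
    """
    frames = []
    failures = 0
    while len(frames) < n_samples and failures < max_failures:
        ok, frame = read_frame()
        if ok:
            frames.append(frame)   # raw, unmodified frame
        else:
            failures += 1
    return frames


# Stand-in frame source for demonstration: every third read fails.
_calls = {"n": 0}


def fake_read():
    _calls["n"] += 1
    return (_calls["n"] % 3 != 0, "frame%d" % _calls["n"])


samples = collect_samples(fake_read, n_samples=10)
```

With OpenCV installed and a webcam attached, the same function could be driven by `cap = cv2.VideoCapture(0)` and `collect_samples(cap.read)`, followed by `cap.release()`.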

Fig 4: Captured Input Image

4.2. SEGMENTATION & FEATURES EXTRACTION

Segmentation is concerned with extracting objects or signs from the background of a captured image. It includes a number of techniques, such as skin-color detection, edge detection, and background subtraction. The motion and position of the captured image must be identified and segmented in order to identify signs. Shape, contour, geometrical features (position, angle, distance, etc.), color features, histograms, and other specified properties are extracted from the input images and used afterwards for sign classification or identification. Feature extraction is a data-reduction step that divides a large collection of raw data into smaller, simpler classes, so that processing becomes more straightforward. The most crucial characteristic of these large datasets is their wide range of variables, and a substantial amount of computing power is required to handle them. Feature extraction therefore helps to select the best features from large sets of data by choosing and combining variables into features, which lowers the data size. These features are easy to work with while still describing the collected data accurately and uniquely.

Fig 5: Image in ROI

4.3. PRE PROCESSING

Each image frame is refined to remove noise using a range of filters such as erosion, dilation, and Gaussian smoothing. When a color image is converted to grayscale, its size is decreased, so converting an image to grayscale is a valuable way of decreasing the quantity of data to be processed. The next step is HSV conversion: the images are converted to grayscale and then to HSV before being fed into the skin masking tool. HSV defines an image's color space in terms of Hue, Saturation, and Value. The hue specifies the color, expressed in this model as an angle ranging from 0 to 360 degrees. Saturation determines the amount of grey in the color. Value, which works together with saturation, denotes the brightness of the color. This process removes the extra background and allows the system to focus on the hand region that needs to be used. We set the upper and lower bounds for the picture based on the HSV output.

Fig 6: RGB to HSV Image Conversion

4.4. CNN WORKFLOW

CNNs are a fundamental class of deep learning models, loosely inspired by aspects of biological neural activity. We use the American Sign Language alphabet dataset, to which the image processing techniques are applied. A convolutional neural network is trained on these pictures: the processed images are fed to the CNN model, which classifies them. Once the model is fitted, we use it to predict the text for arbitrary images stored on the computer and show the output on the screen.

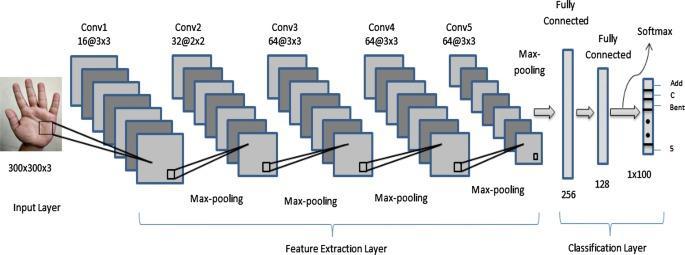

Fig 7: CNN Architecture

The CNN has different layers through which the image passes for classification. Initially, we use the CNN model along with a sequential classifier.
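As a rough illustration of the operations that the layers described in this section perform, the sketch below implements a single-channel "valid" convolution, the ReLU activation, and 2x2 max pooling in pure Python. It is a teaching sketch on a toy image, not the trained model; the function names and the vertical-edge kernel are assumptions made for the example, and, as is usual in CNN libraries, the "convolution" is computed without flipping the kernel.

```python
def conv2d_valid(image, kernel):
    """Slide `kernel` over `image` without padding; output shrinks by kernel size - 1."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [[sum(image[y + i][x + j] * kernel[i][j]
                 for i in range(kh) for j in range(kw))
             for x in range(out_w)]
            for y in range(out_h)]


def relu(fmap):
    """Replace every negative activation with zero."""
    return [[max(0, v) for v in row] for row in fmap]


def max_pool(fmap, size=2):
    """Keep the maximum of each non-overlapping size x size window."""
    out_h, out_w = len(fmap) // size, len(fmap[0]) // size
    return [[max(fmap[y * size + i][x * size + j]
                 for i in range(size) for j in range(size))
             for x in range(out_w)]
            for y in range(out_h)]


# Toy 6x6 image: dark left half, bright right half (a vertical edge).
image = [[0, 0, 0, 1, 1, 1] for _ in range(6)]
edge_kernel = [[-1, 0, 1]] * 3            # responds to dark-to-bright transitions

features = relu(conv2d_valid(image, edge_kernel))   # 4x4 feature map
pooled = max_pool(features, size=2)                 # 2x2 after downsampling
```

A real convolutional layer learns many such kernels per layer and sums over all input channels; the sketch shows only the arithmetic of one kernel on one channel.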

• Convolution Layer 1:

The first convolutional layer is a 2D convolution with 32 filters of size 3x3, applied to an input image of 64x64 pixels with 3 channels, using the 'relu' activation function. The image matrices produced by the convolution operation are large and costly to compute on, so they are reduced to smaller matrices. This complexity is reduced by downsampling.

• Pooling Layer:

The pooling layer is used to reduce the dimensions of the feature maps. Here max pooling is used, in which the maximum pixel value in each window is selected. The pool size of the 'MaxPooling' function for the 2D model is set to 2x2.

• Convolution Layer 2 & 3:

After this, a second 2D convolutional layer is added to the classifier, with 32 filters of size 3x3 and the 'relu' activation function. It is followed by a max pooling layer with a pool size of 2x2, as for the first convolutional layer. A third convolutional layer is then added with 64 filters of size 3x3 and the same pool size of 2x2 as the previous layers.

• Flattening:

The next step is flattening, for which we use 'classifier.add(Flatten())'. This step transforms the pooled feature maps into a single column that can easily be passed to the final layer, the fully connected layer. Each entry of this column corresponds to one value of the pooled feature maps and becomes an input to the fully connected layer, whose weights are learned through backpropagation.

• Fully Connected Layer:

At this stage, as the name suggests, the layers are fully connected: every neuron in one layer is connected to every neuron in the next. Here dense layers are added to the classifier, using the 'relu' and 'softmax' activation functions.

• Compiling:

After all the layers have been added, the CNN model is compiled with its error (loss) function and optimizer. We use different metrics to measure the accuracy of the model. Lastly, we save the model to our directory for testing on the captured images.
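The feature-map sizes implied by this layer stack can be worked out with simple arithmetic. The sketch below traces them under stated assumptions: the figures 32, 32, and 64 are read as filter counts, convolutions use a 3x3 kernel with stride 1 and no padding, and each 2x2 pooling layer uses a stride equal to its pool size (these are the defaults in Keras-style APIs, but the text does not state which settings were used). The helper names are hypothetical.

```python
def conv_out(size, kernel=3, stride=1, padding=0):
    """Spatial output size of a convolution along one dimension."""
    return (size + 2 * padding - kernel) // stride + 1


def pool_out(size, pool=2):
    """Spatial output size of non-overlapping pooling along one dimension."""
    return size // pool


def trace_shapes(input_size=64, channels=3, filters=(32, 32, 64)):
    """List the (name, height, width, depth) of every stage up to flattening."""
    shapes = [("input", input_size, input_size, channels)]
    size = input_size
    for i, n_filters in enumerate(filters, start=1):
        size = conv_out(size)
        shapes.append(("conv%d" % i, size, size, n_filters))
        size = pool_out(size)
        shapes.append(("pool%d" % i, size, size, n_filters))
    # Flattening turns the last pooled maps into one long vector.
    shapes.append(("flatten", size * size * filters[-1]))
    return shapes


for stage in trace_shapes():
    print(stage)
```

Under these assumptions the spatial size goes 64, 62, 31, 29, 14, 12, 6, so the flattened vector passed to the fully connected layer has 6 x 6 x 64 = 2304 entries. With "same" padding or a different stride the numbers would differ.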

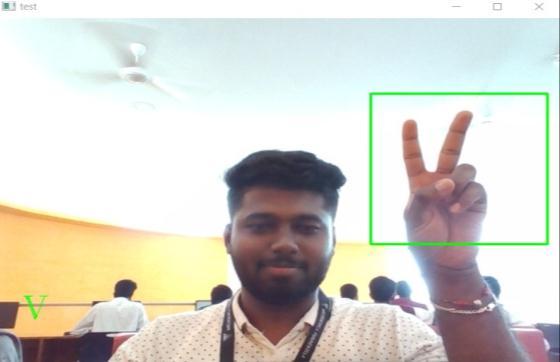

4.5. OUTPUT

After getting the output text, it is converted into speech for better understanding.
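Before any text can be displayed or spoken, the network's output vector has to be mapped to a letter. The sketch below shows one common way to do this: a softmax over the raw scores followed by picking the most probable class. It assumes one class per letter, ordered A to Z, and adds a hypothetical confidence `threshold` below which no letter is reported; neither detail is stated in the text, and the speech step itself is not shown.

```python
import math
import string

# Assumption: 26 classes, one per letter, in alphabetical order.
LABELS = list(string.ascii_uppercase)


def softmax(scores):
    """Turn raw scores into probabilities that sum to 1 (numerically stable form)."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def decode(probs, labels=LABELS, threshold=0.5):
    """Return the most probable label, or None if the model is not confident enough."""
    best = max(range(len(probs)), key=lambda i: probs[i])
    if probs[best] < threshold:
        return None
    return labels[best]


# Toy scores: class index 2 stands out clearly from the rest.
scores = [0.0] * 26
scores[2] = 5.0
letter = decode(softmax(scores))   # maps to the third label, "C"
```

The returned string is what would then be shown on screen and handed to a text-to-speech package.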

5. EVALUATION

Fig 8: Output Screen

Table 1 below shows the input images and their corresponding output images with text. We tested the model on all the alphabetical hand gestures of American Sign Language and obtained around 95% accuracy. Here we have shown the output for the letters A, B, C, G, and V. The first and second columns show the actual letter and the input image, while the third and fourth columns show the RGB-to-HSV converted image and the predicted letter. Finally, the predicted text is converted into audio/speech using a Python package.

| Alphabet | Input Image | Output Image | Output Obtained |
|---|---|---|---|
| A | | | |

Table 1: Output

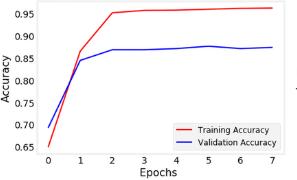

After collecting the output from the CNN model, we plot the accuracy graph and analyze it to gain a better understanding of the model's training and testing behavior.

The accuracy graph (Graph 1) below displays the accuracy at each epoch. The results were obtained with images processed using the approach described in Section 4.3. The training accuracy at the last epoch is 95%, while the testing accuracy is 85%.
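For reference, accuracy here is the fraction of samples whose predicted letter matches the true letter. The sketch below computes it, along with the gap between training and testing accuracy. The function names are hypothetical and the label lists are toy values for illustration, not results from this work.

```python
def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true labels."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("label lists must be non-empty and of equal length")
    correct = sum(t == p for t, p in zip(y_true, y_pred))
    return correct / len(y_true)


def generalization_gap(train_acc, test_acc):
    """Difference between training and testing accuracy; a large gap suggests overfitting."""
    return train_acc - test_acc


# Toy labels (illustrative only): four of five predictions are correct.
true_letters = ["A", "B", "C", "G", "V"]
predicted = ["A", "B", "C", "G", "U"]
toy_accuracy = accuracy(true_letters, predicted)
```

Applied to the figures reported above, `generalization_gap(0.95, 0.85)` gives a gap of about 10 percentage points between training and testing accuracy.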

Graph 1: CNN Accuracy graph

6. LIMITATIONS

a) While sign language is an excellent mode of communication, it remains a challenge for those who do not understand sign language to communicate with people who rely on it.

b) Sign language requires the use of hands to make gestures. This is a disadvantage for those who do not have full use of their hands or who have other disabilities.

c) Because sign languages vary from one country to another, it becomes difficult to analyze all kinds of gestures.

d) Classification methods also vary among researchers. Researchers generally tend to develop their own ideas, based on their own techniques, to achieve better results in recognizing sign language.

e) The classification ability of the model we are using is influenced by the background and environment, such as the illumination in the room and the pace of the motions, among other factors. Because of changes in viewpoint, the same gesture can appear different in 2D space.

7. FUTURE SCOPE

As described in the preceding section, our system has various problems to solve. If we can overcome these issues and construct a robust system that copes with low illumination and recognizes hand gestures by improving image quality, the system will have a better scope. Integrating it with high-end IoT devices will also make installation easier. Sign language does not consist only of letters; it also contains numerals and words, so adding this element to our system is critical.

8. CONCLUSION

The goal of this project is to bridge the gap between hearing-impaired people and hearing people. We achieved this by utilizing computer technologies such as deep learning and computer vision. Our system captures hand gesture images from a live video feed and translates them into text/letters that people who do not sign can understand. This procedure uses a variety of methodologies and technologies: after a picture is received from the camera, it is converted to greyscale, and just the hand gesture is extracted from the entire image. The image is then transformed into HSV to reduce noise and eliminate the background for better prediction. Finally, the processed image is passed through the different layers of the CNN as described in Section 4.4, and the final text is displayed along with speech.

However, modern technologies and methodologies from fields such as natural language processing and voice assistance are available to explore. Furthermore, although some researchers have imposed grammatical constraints or linguistic limits, others have looked into data-driven solutions. Both approaches have advantages, given that the linguistics of most sign languages is still under development.

REFERENCES

[1] Teena Varma, Ricketa Baptista, Daksha Chithirai Pandi, Ryland Coutinho, “Sign Language Detection using Image Processing and Deep Learning”, “International Research Journal of Engineering and Technology (IRJET)”, 2020.

[2] Aman Pathak, Avinash Kumar, Priyam Gupta, Gunjan Chugh, “Real time sign language detection”, “International Journal of Computer Science and Network Security”, 2021.

[3] A Sunitha Nandhini, Shiva Roopan D, Shiyaam S, Yogesh S, “Sign Language Recognition using Convolutional Neural Network”, “Journal of Physics”, 2021.

[4] Swapna Johnnya, S Jaya Nirmala, “Sign Language Translator Using Machine Learning”, “ResearchGate”, 2022.

[5] Mehreen Hurroo, Mohammad Elham Walizad, “Sign Language Recognition System using Convolutional Neural Network and Computer Vision”, “International Research Journal of Engineering and Technology (IRJET)”, 2020.