International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 09 Issue: 06 | Jun 2022 www.irjet.net p-ISSN: 2395-0072

BHARATH BHARADWAJ B S¹, FAKIHA AMBER², FIONA CRASTA³, MANOJ GOWDA C N⁴, VARIS ALI KHAN⁵

¹Professor, Dept. of Computer Science & Engineering, Maharaja Institute of Technology Thandavapura, Karnataka, India

²⁻⁵Dept. of Computer Science & Engineering, Maharaja Institute of Technology Thandavapura, Karnataka, India

Abstract - A user's emotion or mood can be detected from his or her facial expressions. These expressions can be derived from the live feed of the system's camera. A lot of research is being conducted in the fields of Computer Vision and Machine Learning, where machines are trained to identify various human emotions or moods. Machine Learning provides various techniques through which human emotions can be detected. Music is a great connector. Music players and other streaming apps are in high demand because they can be used anytime, anywhere, and can be combined with daily activities, traveling, sports, etc. People often use music as a means of mood regulation, specifically to change a bad mood, increase energy level, or reduce tension. Listening to the right kind of music at the right time may also improve mental health. Thus, human emotions have a strong relationship with music. In our proposed system, a mood-based music player is created which performs real-time mood detection and suggests songs according to the detected mood. The objective of this system is to analyze the user's image, predict the expression of the user, and suggest songs suitable to the detected mood.

Key Words: Emotion, CNN, Computer Vision, Features

Facial expressions give important clues about emotions. Computer systems based on affective interaction could play an important role in the next generation of computer vision systems. Facial emotion can be used in areas such as security, entertainment, and human machine interface (HMI). A human can express his or her emotion through the lips and eyes. Human emotions are broadly classified as Happy, Sad, Surprise, Fear, Anger, Disgust, and Contempt. Music plays an important role in enhancing an individual's life, as it is an important medium of entertainment for music lovers and listeners and sometimes even serves a therapeutic purpose. In today's world, with ever-increasing advancements in multimedia and technology, various music players have been developed with features such as fast forward, reverse, variable playback speed, local playback, streaming playback with multicast streams, volume modulation, and genre classification. Although these features satisfy the user's basic requirements, the user still has to browse the playlist manually and select songs based on his or her current mood and behavior.

The significance of music for an individual's emotions has been generally acknowledged. After the day's toil and hard work, both primitive and modern man have been able to relax and find ease in the melody of music. Studies have shown that rhythm itself is a great tranquilizer. However, most people face difficulty in selecting songs, especially songs that match their current emotions. Faced with long lists of unsorted music, individuals feel demotivated to look for the songs they want to listen to. Most users just pick songs at random from the song folder and play them with a music player. Most of the time, the songs played do not match the user's current emotion. It is impractical for an individual to search a long playlist for, say, all the heavy rock music, so the individual would rather choose songs randomly. This drawback can be overcome by creating a Smart Music Player Based on Emotion Detection, in which the mood of the person is detected and music is recommended accordingly. The human face is taken as the input and the emotion is identified. A song that portrays the emotion is the output.

The main objective of the work is to identify the emotion of the user and recommend songs based on the identified emotion. The human face is an important part of an individual's body, and it plays an especially important role in revealing an individual's behavior and emotional state. The webcam captures the image of the user. The system then extracts the facial features of the user from the captured image and identifies the emotion.

Facial expressions are a great indicator of a person's state of mind. Indeed, the most natural way to express emotions is through facial expressions. Humans tend to link the music they listen to with the emotion they are feeling. Song playlists, though, are at times too large to sort out automatically. This work sets out to use various techniques for an emotion recognition system and to analyze the impact of the different techniques used.

[A] Title: Facial Expression based Song Recommendation: A Survey

Authors: Armaan Khan, Ankit Kumar

Publication Journal & Year: IRJET, 2021.

Summary: This application detects a facial photo by using a device camera. This type of recommendation system will be very useful for people because it depends on the user's emotions rather than the user's past history. Recent developments in different algorithms for emotion detection promise a very wide range of possibilities. This system can reduce the manual work of creating a playlist: it creates a playlist for the user automatically, so the user can spend that time listening to music. It will also help reduce the time it takes for a user to search for a song according to his or her current mood.

[B] Title: A Machine Learning Approach for Emotion Based Music Player

Authors: Ashwini Rokade, Aman Kumar Sinha, Pranay Doijad

Publication Journal & Year: IJASRT, 2021.

Summary: the automatic facial expression based on human emotions. Here, a number of technologies developed for emotion detection and music recommendation have been studied. This survey revealed that a significant amount of effort has been made to enhance emotion detection from the human face in real life. It simplifies the process of manually selecting a song every time, depending on the type of song the user wants to listen to.

[C] Title: A Survey on Autonomous Techniques for Music Classification based on Human Emotions Recognition

Authors: Deepti Chaudhary, Niraj Pratap Singh and Sachin Singh

Publication Journal & Year: IJCDS, 2021.

Summary: A detailed discussion of the datasets used for ATMC, database analysis methods, pre-processing, audio features, classification techniques, and evaluation parameters is provided. Although emotion was considered as a parameter for music classification by MIREX in 2007, there are still many open issues to be considered. The collection of large music datasets and their proper database analysis is still unsatisfactory and needs a lot of attention, so that songs of all genres and languages can be considered by researchers working in this field.

[D] Title: EMO (Emotion Based Music Player)

Authors: Sarvesh Pal, Ankit Mishra

Publication Journal & Year: IEEE, 2021.

Summary: The system is intended to provide a cheaper, accurate emotion-based music system that needs no additional hardware to Windows operating system users. Emotion-based music systems will be of great advantage to users looking for music based on their mood and emotional behavior. The system will help reduce the time needed to search for music according to the mood of the user. By reducing unnecessary computation time, it increases the overall accuracy and efficiency of the system.

[E] Title: Emotion Based Smart Music Player

Authors: Kodamanchili Mohan, Kalleda Vinay Raj, Pendli Anirudh Reddy, Pannamaneni Saiprasad

Publication Journal & Year: IJSCSEIT, 2021.

Summary: The proposed system processes images of facial expressions, recognizes the actions related to basic emotions, and then plays music based on these emotions. In the future, the application could export songs to a dedicated cloud database, allow users to download desired songs, and recognize complex and mixed emotions. The developed application will therefore provide users with the most suitable songs based on their current emotions, reducing the workload of creating and managing playlists and bringing more fun to music listeners. It not only helps users but also allows songs to be organized systematically.

The existing music player does not have an emotion analysis engine. Classifying songs takes time because it demands manual selection: users must classify the songs into various emotions and then select a song. Randomly played songs may not match the mood of the user due to lower accuracy. Sound Tree is a music recommendation system that can be integrated into an external web application and deployed as a web service. It uses people-to-people correlation based on the user's past behavior, such as previously listened to or downloaded songs. Music.AI takes a list of moods as input for the mood of the user and suggests songs based on the selected mood.

In our proposed system, a Smart Music Player Based on Emotion Detection is created, which performs real-time emotion detection and suggests songs according to the detected emotion. This becomes an additional feature to the traditional music player apps that come pre-installed on our mobile phones. The objective of this system is to analyze the user's image, predict the expression of the user, and suggest songs suitable to the detected emotion. The advantages are that songs are recommended based on the detected emotion and that the system is automated, so the user need not select the emotion manually.

© 2022, IRJET | Impact Factor value: 7.529 | ISO 9001:2008 Certified Journal

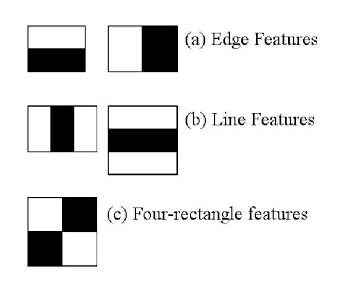

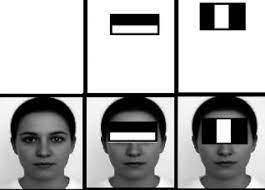

Object recognition using cascaded classifiers based on Haar features is an effective method of object detection. This algorithm follows a machine learning approach to increase its efficiency and precision. Images of different degrees are used to train the function. In this method, the cascade function is trained on a large number of positive and negative images: both face images and images with no face are used for training at the beginning. Features are then extracted from them. For this, Haar features are used. They are similar to a convolution kernel: each feature is a single value, obtained by subtracting the sum of the pixels falling under the white rectangle from the sum of the pixels falling under the black rectangle.
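To make the rectangle arithmetic concrete, the following is a minimal sketch of one two-rectangle Haar feature in plain Python. The integral image (summed-area table) used here is the usual speed-up for rectangle sums and is an assumption of this sketch; the text above does not describe it. The function names and the toy 4×4 patch are likewise illustrative only.

```python
def integral_image(img):
    """Return a summed-area table with one extra row and column of zeros."""
    h, w = len(img), len(img[0])
    ii = [[0] * (w + 1) for _ in range(h + 1)]
    for y in range(h):
        row_sum = 0
        for x in range(w):
            row_sum += img[y][x]
            ii[y + 1][x + 1] = ii[y][x + 1] + row_sum
    return ii


def rect_sum(ii, top, left, height, width):
    """Sum of the pixels inside a rectangle, using four table lookups."""
    bottom, right = top + height, left + width
    return ii[bottom][right] - ii[top][right] - ii[bottom][left] + ii[top][left]


def two_rect_feature(ii, top, left, height, width):
    """Black band stacked on a white band of equal size.

    Value = (sum under black rectangle) - (sum under white rectangle).
    """
    black = rect_sum(ii, top, left, height, width)
    white = rect_sum(ii, top + height, left, height, width)
    return black - white


# Toy grayscale patch (assumed data): dark rows on top, bright rows below,
# loosely like an eye region above a cheek region.
patch = [
    [10, 10, 10, 10],
    [10, 10, 10, 10],
    [200, 200, 200, 200],
    [200, 200, 200, 200],
]
ii = integral_image(patch)
feature_value = two_rect_feature(ii, top=0, left=0, height=2, width=4)
print(feature_value)  # -1520: the black rectangle covers the darker band
```

A strongly negative value here means the region under the black rectangle is much darker than the region under the white one, which is the kind of contrast a face detector looks for.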

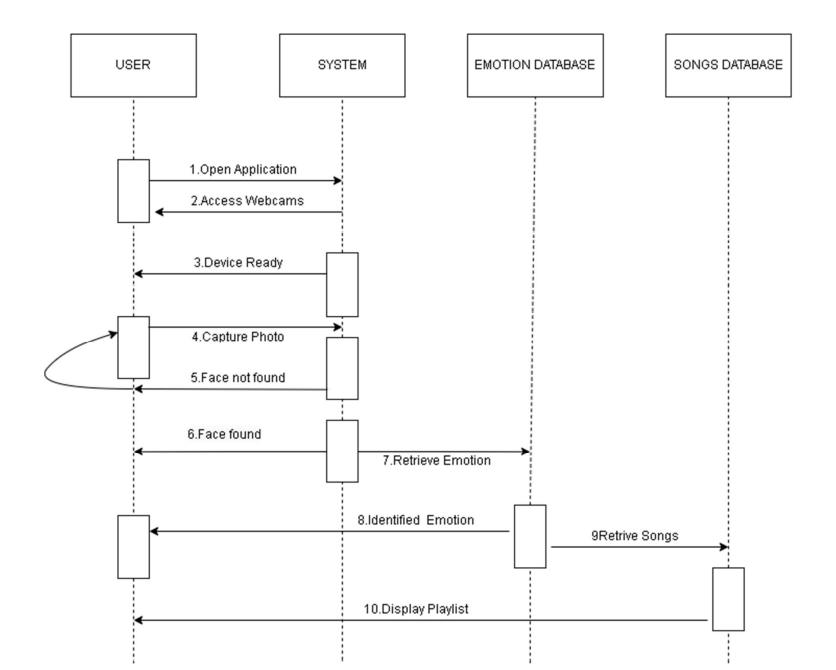

Fig. 1: Sequence Diagram

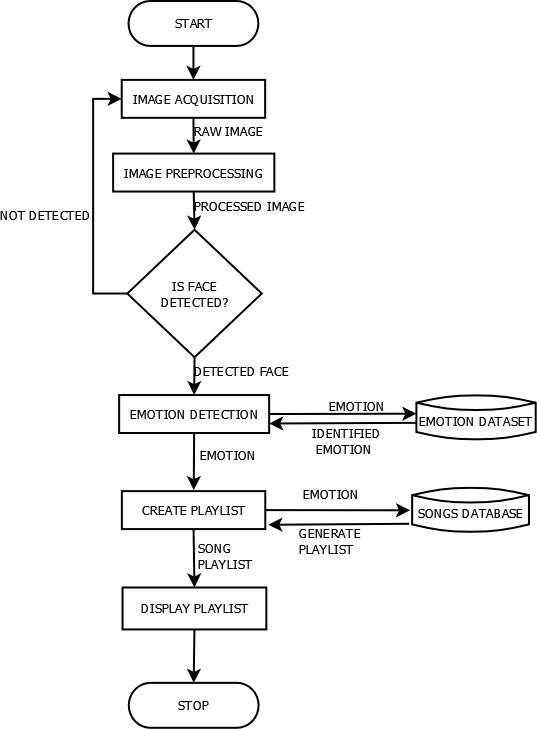

Fig. 2: Flowchart

Detection of feature points: The system detects feature points on its own. For facial recognition, the RGB image is first converted into a binary image. If the average pixel value is less than 110, a black pixel is used as the substitute pixel; otherwise, a white pixel is used.
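The thresholding rule can be sketched as follows. This is only an illustration under one assumption: "average pixel value" is read as the mean of the three RGB channels of each pixel. The function name and the sample image are hypothetical.

```python
THRESHOLD = 110  # cutoff stated in the text


def binarize(rgb_image, threshold=THRESHOLD):
    """Map each RGB pixel to 0 (black) or 255 (white) by its channel average."""
    binary = []
    for row in rgb_image:
        out_row = []
        for r, g, b in row:
            average = (r + g + b) / 3  # assumed meaning of "average pixel value"
            out_row.append(0 if average < threshold else 255)
        binary.append(out_row)
    return binary


# Tiny 2x2 image with made-up pixel values.
image = [
    [(20, 30, 40), (200, 210, 220)],
    [(110, 110, 110), (109, 110, 110)],
]
binary = binarize(image)
print(binary)  # [[0, 255], [255, 0]]
```

A pixel whose average is exactly 110 becomes white, because the rule only substitutes black when the average is strictly less than 110.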

Now, all possible sizes and positions of each kernel are used to compute a large number of features. Of all the features calculated, however, most are not relevant. The figure below shows two good features in the first row. The first feature selected seems to focus on the property that the eye region is usually darker than the nose and cheek regions. The second feature selected is based on the fact that the eyes are darker than the bridge of the nose.
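To give a sense of how many features "all possible sizes and positions" produces, the sketch below counts the placements of five basic Haar patterns. The 24×24 detection window and the five pattern shapes are assumptions taken from the commonly described Viola-Jones setup; neither is stated in the text.

```python
def count_features(base_w, base_h, window=24):
    """Count placements of a base_w x base_h pattern at every scale and position."""
    total = 0
    for w in range(base_w, window + 1, base_w):      # scaled widths
        for h in range(base_h, window + 1, base_h):  # scaled heights
            total += (window - w + 1) * (window - h + 1)
    return total


# Base sizes (width, height) in units of one rectangle; assumed pattern set.
patterns = {
    "two-rectangle, horizontal": (2, 1),
    "two-rectangle, vertical": (1, 2),
    "three-rectangle, horizontal": (3, 1),
    "three-rectangle, vertical": (1, 3),
    "four-rectangle": (2, 2),
}
counts = {name: count_features(w, h) for name, (w, h) in patterns.items()}
total = sum(counts.values())
for name, value in counts.items():
    print(name, value)
print("total", total)  # total 162336
```

Under these assumptions a single small window already yields more than 160,000 candidate features, which is why only the few informative ones are kept.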

For feature extraction, a CNN is used. For the emotion recognition module, the system has to be trained using datasets containing images of happy, anger, sad, and neutral emotions. A CNN has the special capability of automatic learning, which is used to identify features from the dataset images for model construction. In other words, a CNN can learn features by itself.

A CNN is able to develop an internal representation of a two-dimensional image. The image is represented as a three-dimensional matrix, and operations are performed on this matrix for training and testing.

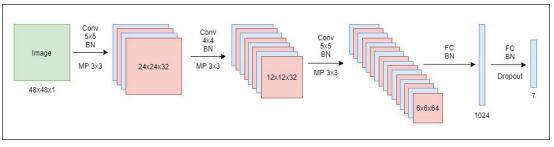

Five-Layer Model: This model, as the name suggests, consists of five layers. The first three stages each consist of a convolutional layer and a max-pooling layer, followed by a fully connected layer of 1024 neurons and an output layer of 7 neurons with a softmax activation function. The three convolutional layers use 32, 32, and 64 kernels of size 5×5, 4×4, and 5×5, respectively. Each convolutional layer is followed by a max-pooling layer with a 3×3 kernel and stride 2, and each uses ReLU as the activation function. The model architecture is shown in the figure below.
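The layer sizes quoted above can be checked with a short shape trace. This is a sketch under stated assumptions: a 48×48 single-channel input, stride-1 convolutions, and no padding, none of which is specified in the text. Only the kernel counts, kernel sizes, pooling size and stride, and the 1024 and 7 neuron layers come from the description.

```python
def conv_out(size, kernel, stride=1):
    """Output side length of an unpadded convolution or pooling window."""
    return (size - kernel) // stride + 1


# (number of kernels, kernel side) for the three convolutional stages in the text.
CONV_STAGES = [(32, 5), (32, 4), (64, 5)]
POOL_KERNEL, POOL_STRIDE = 3, 2


def trace_shapes(input_side=48, channels=1):
    """Return (layer name, output shape) pairs for the five-layer model."""
    shapes = [("input", (input_side, input_side, channels))]
    side = input_side
    for i, (filters, kernel) in enumerate(CONV_STAGES, start=1):
        side = conv_out(side, kernel)  # assumed stride 1, no padding
        shapes.append((f"conv{i}", (side, side, filters)))
        side = conv_out(side, POOL_KERNEL, POOL_STRIDE)
        shapes.append((f"pool{i}", (side, side, filters)))
        channels = filters
    shapes.append(("flatten", (side * side * channels,)))
    shapes.append(("dense", (1024,)))
    shapes.append(("softmax", (7,)))
    return shapes


for name, shape in trace_shapes():
    print(name, shape)
```

With these assumptions the feature map shrinks from 48×48 to 1×1 after the third pooling layer, so the fully connected layer would receive 64 values. A different input size or padding choice would change these numbers.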

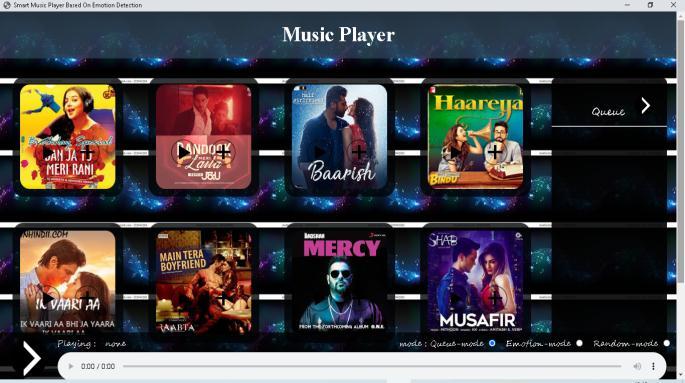

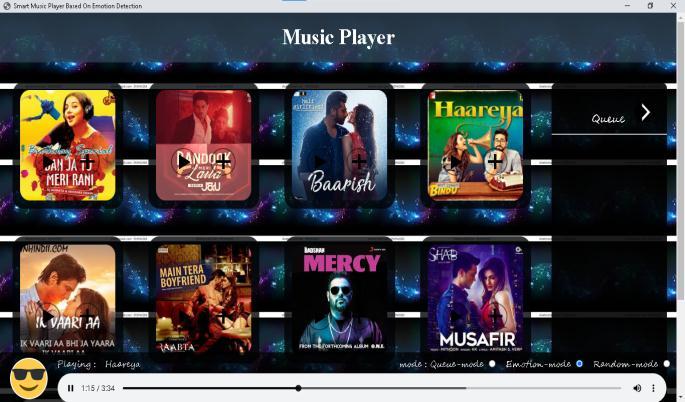

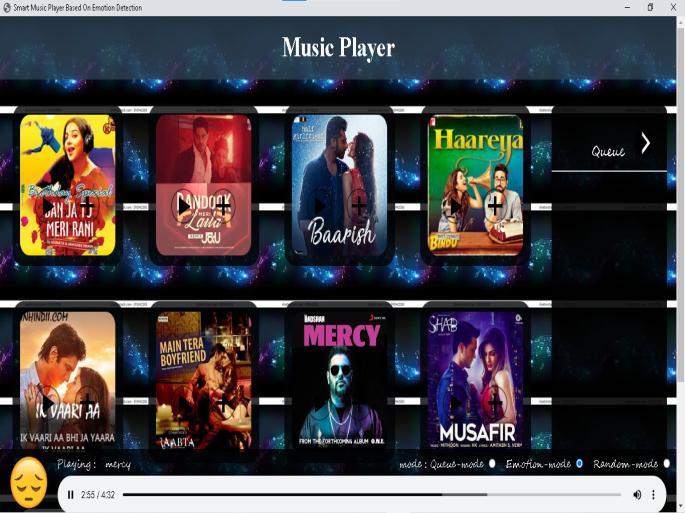

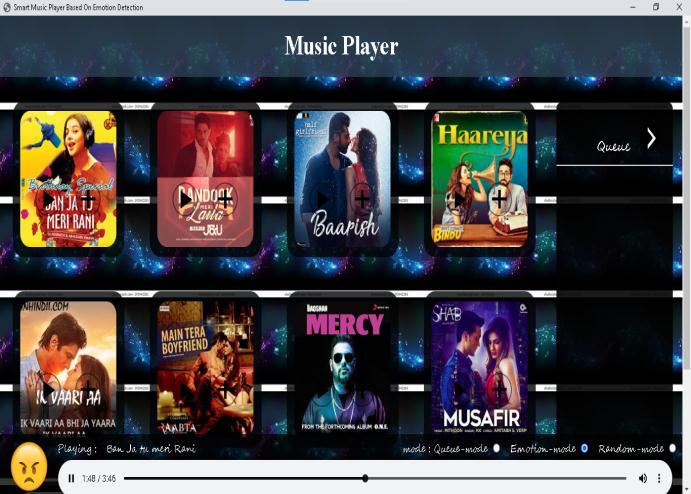

Once the emotion is identified, the playlist corresponding to that emotion is displayed on the screen. The first song in the displayed playlist is played first. Songs are selected so that they reflect the emotion of the user.
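The selection step can be sketched as a lookup from the detected emotion to a page and a playlist. The four labels are the ones used by the system; the playlist contents, the page naming scheme, and the classifier scores below are hypothetical placeholders rather than the authors' data.

```python
EMOTIONS = ["happy", "anger", "sad", "neutral"]  # labels used by the system

# Hypothetical playlists; the file names are placeholders.
PLAYLISTS = {
    "happy": ["happy_01.mp3", "happy_02.mp3"],
    "anger": ["anger_01.mp3", "anger_02.mp3"],
    "sad": ["sad_01.mp3", "sad_02.mp3"],
    "neutral": ["neutral_01.mp3", "neutral_02.mp3"],
}


def predict_label(scores):
    """Return the emotion whose classifier score is highest."""
    best_index = max(range(len(scores)), key=lambda i: scores[i])
    return EMOTIONS[best_index]


def select_playlist(emotion):
    """Return (page, playlist, first song) for a detected emotion."""
    playlist = PLAYLISTS[emotion]
    page = f"{emotion}.html"  # assumed naming: one HTML page per emotion
    return page, playlist, playlist[0]


scores = [0.70, 0.05, 0.10, 0.15]  # made-up classifier output
emotion = predict_label(scores)
page, playlist, now_playing = select_playlist(emotion)
print(emotion, page, now_playing)  # happy happy.html happy_01.mp3
```

Keeping the mapping in a dictionary means a new emotion only needs a new label and a new playlist entry.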

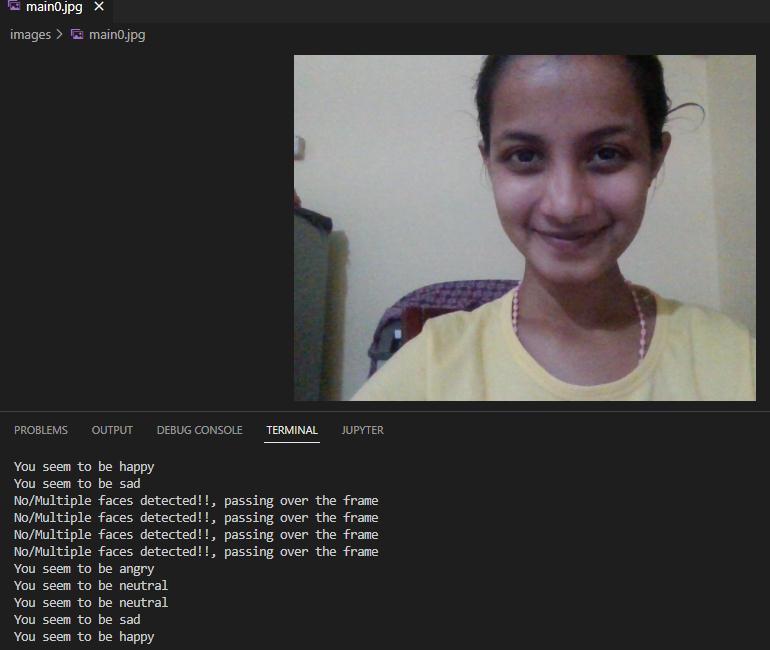

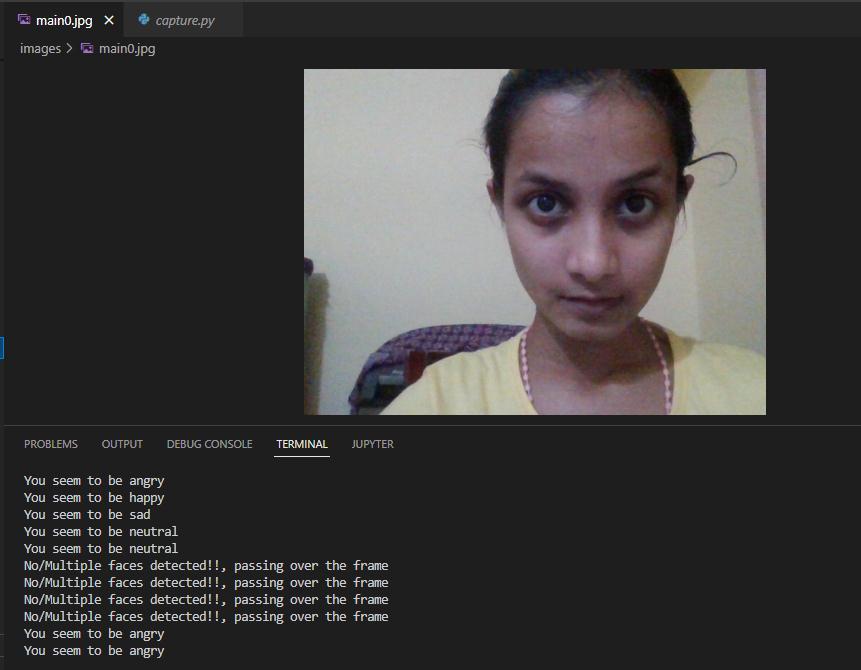

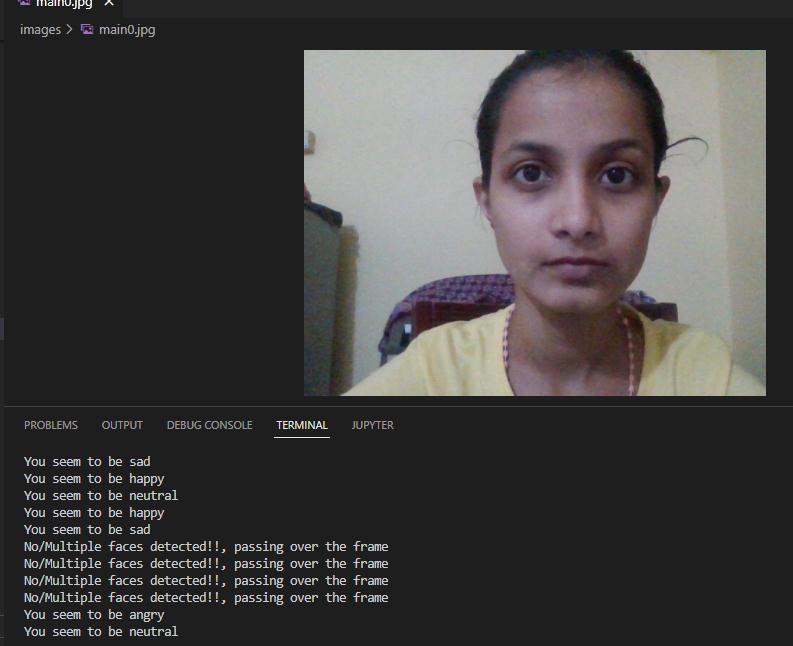

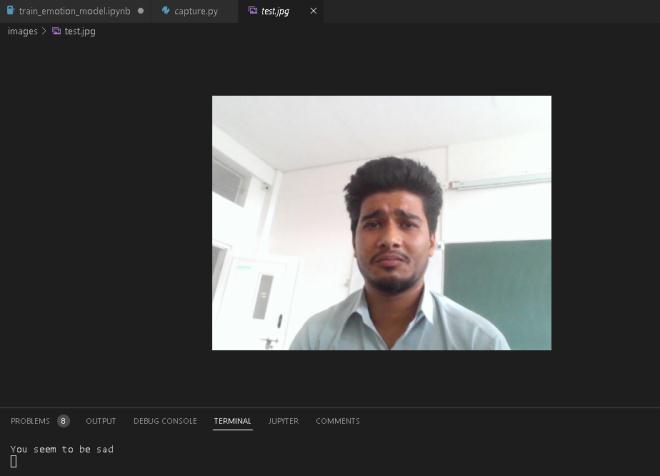

Following are the screenshots of the interface and output of the proposed system.

Fig. 6: Home Page

Accuracy can be increased by increasing the number of epochs or by increasing the number of images in the dataset. The input is given to the convolution layer of the neural network. The process that happens at the convolution layer is filtering. Filtering is the math behind matching. The first step is to line up the feature and the image patch. Then each image pixel is multiplied by the corresponding feature pixel, the products are added up, and the sum is divided by the total number of pixels in the feature.
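The filtering steps just described (line up, multiply, add, divide) can be written out directly. This is a minimal sketch; coding pixels as +1 for bright and -1 for dark is an assumption made so that a perfect match scores 1.0, and the small feature and image are made up for illustration.

```python
def match_score(patch, feature):
    """Multiply aligned pixels, add the products, divide by the pixel count."""
    total = 0
    count = 0
    for patch_row, feature_row in zip(patch, feature):
        for p, f in zip(patch_row, feature_row):
            total += p * f
            count += 1
    return total / count


def filter_image(image, feature):
    """Slide the feature over every position where it fits entirely."""
    fh, fw = len(feature), len(feature[0])
    out = []
    for y in range(len(image) - fh + 1):
        row = []
        for x in range(len(image[0]) - fw + 1):
            patch = [r[x:x + fw] for r in image[y:y + fh]]
            row.append(match_score(patch, feature))
        out.append(row)
    return out


# Assumed coding: +1 is a bright pixel, -1 is a dark pixel.
feature = [
    [1, -1],
    [-1, 1],
]
image = [
    [1, -1, -1],
    [-1, 1, -1],
    [-1, -1, 1],
]
response = filter_image(image, feature)
print(response)  # [[1.0, -0.5], [-0.5, 1.0]]
```

The two positions on the diagonal, where the image patch is identical to the feature, score 1.0; the other two positions score lower because most pixels disagree.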

The output of the neural network classifier is one of four emotion labels: happy, anger, sad, and neutral. HTML pages with a user interface for each emotion are designed for the system, so that the page for the detected emotion can be shown once the emotion of the user is identified.

Fig. 7: Song played when Happy emotion detected

Fig. 8: Happy Emotion Detected

Fig. 15: Random Mode

A music player that plays songs according to the user's emotion has been designed. The system has been divided into modules for implementation: face detection, emotion detection, and song classification. The proposed system is designed as an emotion-aware application that provides an alternative to the manual segregation of large playlists. Static face detection is implemented using the Viola-Jones algorithm and was tested using images from different facial datasets. Dynamic face detection will be implemented as future work so that users can analyze emotions in real time; such an application involves greater computational complexity and a larger dataset to reach a higher accuracy level. The CNN classifier is designed to recognize four emotion labels (happy, anger, sad, and neutral), and more emotions can be added in the future.

[1] Kodamanchili Mohan, Kalleda Vinay Raj, Pendli Anirudh Reddy, "Emotion Based Music Player", Volume 7, May-June 2021.

[2] Armaan Khan, Ankit Kumar, Abhishek Jagtap, "Facial Expression based Song Recommendation: A Survey", Vol. 10, December 2021.

[3] Ashwini Rokade, Aman Kumar Sinha, Pranay Doijad, "A Machine Learning Approach For Emotion Based Music Player", Volume 6, July 2021.

[4] Sarvesh Pal, Ankit Mishra, Hridaypratap Mourya and Supriya Dicholkar, "Emo Music (Emotion based Music player)", January 2020.

[5] Deepti Chaudhary, Niraj Pratap Singh, Sachin Singh, "A Survey on Autonomous Techniques for Music Classification based on Human Emotions Recognition", May 2020.

[6] S Metilda Florence, M Uma, "Emotional Detection and Music Recommendation System based on User Facial Expression", October 2020.

[7] https://www.analyticsvidhya.com/blog/2021/11/facial-emotion-detection-using-cnn/

[8] https://towardsdatascience.com/a-comprehensive-guide-to-convolutional-neural-networks-the-eli5-way-3bd2b1164a53

[9] Rafael C. Gonzalez, Richard E. Woods, Digital Image Processing, 3rd edition.

Bharath Bharadwaj B S, Professor, Department of Computer Science & Engineering, Maharaja Institute of Technology Thandavapura.

Fakiha Amber, Student, Department of Computer Science & Engineering, Maharaja Institute of Technology Thandavapura.

Fiona Crasta, Student, Department of Computer Science & Engineering, Maharaja Institute of Technology Thandavapura.

Manoj Gowda C N, Student, Department of Computer Science & Engineering, Maharaja Institute of Technology Thandavapura.

Varis Ali Khan, Student, Department of Computer Science & Engineering, Maharaja Institute of Technology Thandavapura.