International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 09 Issue: 06 | Jun 2022 www.irjet.net p-ISSN: 2395-0072


¹, Shubhangi Kadam², Rajesh Prasad³

¹Student, Department of Computer Science and Engineering, MIT School of Engineering, MIT Art, Design and Technology, Pune, Maharashtra, India

²Student, Department of Computer Science and Engineering, MIT School of Engineering, MIT Art, Design and Technology, Pune, Maharashtra, India

³Professor, Department of Computer Science and Engineering, MIT School of Engineering, MIT Art, Design and Technology, Pune, Maharashtra, India

Abstract - Human-computer interaction (HCI) platforms can be implemented in many ways, since webcams and other devices such as sensors are inexpensive and easily available on the market. Gesture is one of the most powerful means of communication between humans and machines, and hand gesture systems are very useful for conveying information between a human and a machine or computer. Hand gestures are a form of nonverbal communication that can be employed in several fields. Research and survey papers on hand gesture applications have adopted various alternative techniques, including those based on sensor technology and computer vision.

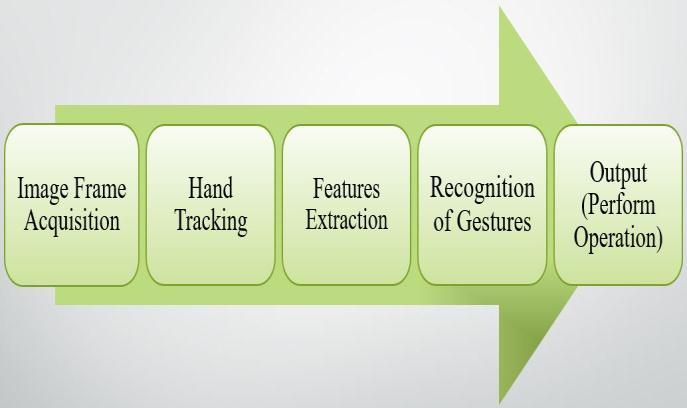

In this work, we aim to build a real-time gesture recognition system using hand gestures. In particular, we use a convolutional neural network (CNN) throughout the process. This application presents a hand-gesture-based system that controls a computer to perform different operations using a neural network. Our application is defined in five phases: image frame acquisition, hand tracking, feature extraction, gesture recognition, and classification (performing the desired operation). An image is captured from the webcam, after which hand detection, hand-shape feature extraction, and hand gesture recognition are performed.

Key Words: Deep Learning, Computer Vision, Hand Gestures, Convolutional Neural Network, Python, OpenCV.

Gesture recognition is a popular and in-demand research field in Human-Computer Interaction (HCI) technology. It has applications in virtual environment management, medical applications, sign language translation, robot control, music creation, and home automation. HCI research has recently received particular attention. Among the parts of the body, the hand is the most helpful communication tool because of its dexterity. The word gesture is used for many cases involving human motion, particularly of the hands, arms, and face, but only some of these motions are informative.

Convolutional neural networks (CNNs) are the most popular technique for image classification. An image classifier takes an input image, or an input sequence of images, and assigns it to one of the classes it was trained to recognize. Image classifiers have applications in fields such as medicine, self-driving cars, education, fraud detection, and defense. There are several techniques and algorithms for image classification, and there are also challenges such as overfitting. In this project, Controlling Computer using Hand Gestures, we aim to build a real-time application using OpenCV and Python. OpenCV is an open-source library for real-time computer vision and image processing; we use it through the OpenCV Python package.

Compared with traditional mechanical communication technologies, gesture recognition has become a very popular technology. Its market is segmented by technology, type, practice, product, use, and geography. Applications include assistive robotics, sign language detection, immersive gaming, smart TVs, virtual controllers, and virtual mice.

Most existing methods use Arduino boards and sensors; only a few use the device's webcam directly. Gestures may also be misrecognized when the background contains elements that look like human skin, and the hand must stay within a limited range. Overfitting to the dataset is the main concern.
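To illustrate why skin-like backgrounds cause misrecognitions, here is a minimal NumPy sketch of a classic skin-colour threshold in the YCrCb colour space. The Cr/Cb bounds (133-173 and 77-127) are commonly used values from the skin-detection literature, not parameters from this paper, and the function name `skin_mask` is hypothetical. Any background pixel whose colour falls inside the same bounds (a beige wall, a wooden desk) is marked as skin too, which is exactly the failure mode described above.

```python
import numpy as np

# Commonly cited Cr/Cb skin bounds (assumption, not taken from this paper).
CR_RANGE = (133, 173)
CB_RANGE = (77, 127)


def skin_mask(rgb: np.ndarray) -> np.ndarray:
    """Return a boolean mask of pixels whose chroma looks like skin.

    `rgb` is an (H, W, 3) uint8 image in RGB channel order.
    """
    img = rgb.astype(np.float64)
    r, g, b = img[..., 0], img[..., 1], img[..., 2]
    # BT.601 luma/chroma, the same coefficients OpenCV documents for
    # RGB -> YCrCb on 8-bit images (offset 128 for the chroma channels).
    y = 0.299 * r + 0.587 * g + 0.114 * b
    cr = (r - y) * 0.713 + 128.0
    cb = (b - y) * 0.564 + 128.0
    return (
        (cr >= CR_RANGE[0]) & (cr <= CR_RANGE[1])
        & (cb >= CB_RANGE[0]) & (cb <= CB_RANGE[1])
    )


# Toy 1x3 image: a skin tone, a pure blue pixel, and a beige "wall" pixel.
pixels = np.array([[[224, 172, 150], [0, 0, 255], [230, 190, 160]]], dtype=np.uint8)
print(skin_mask(pixels))
```

Both the skin pixel and the beige wall pixel are flagged, while the blue pixel is not, so colour alone cannot separate a hand from a skin-toned background.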


In the era of computer science and technology, gesture recognition is a crucial field that translates human gestures using various computer vision techniques and algorithms. Numerous human body motions can create gestures, but the most common gestures arise from the face and hands. The complete process of tracking gestures, determining their representation, and converting them into useful commands is referred to as gesture recognition. Different techniques and methods have been employed to design and develop such systems.

The approach of interacting with a computer using hand gestures was first proposed by Myron W. Krueger in 1970 [1]. Later, mouse cursor control was achieved with an external webcam (Genius FaceCam 320) and a software package that interpreted hand gestures and turned the recognized gestures into OS commands controlling the mouse actions on the computer screen [2]. Choosing hand gestures as a communication tool in HCI enables a wide range of applications that require no physical contact with the computing devices [3]. At present, most HCI depends on devices such as the keyboard or mouse; however, methods and techniques based on computer vision have grown in importance because of their ability to recognize human gestures in a simple manner [4].
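The cursor-control idea in [2] comes down to mapping a hand position detected in the camera frame to a position on the screen. Below is a minimal sketch, assuming the hand position is already available in pixel coordinates (for example, the centroid of a hand mask). The function and class names, the mirroring flag, and the exponential smoothing factor are illustrative assumptions, not details of [2]; actually moving the OS cursor would need a separate library and is left out.

```python
def camera_to_screen(x, y, frame_size, screen_size, mirror=True):
    """Map a point in camera-frame pixels to screen pixels by linear scaling."""
    fw, fh = frame_size
    sw, sh = screen_size
    if mirror:
        # Webcam images are usually mirrored relative to the user's view.
        x = (fw - 1) - x
    sx = x * (sw - 1) / (fw - 1)
    sy = y * (sh - 1) / (fh - 1)
    # Clamp so a hand at the frame edge cannot push the cursor off-screen.
    return min(max(sx, 0), sw - 1), min(max(sy, 0), sh - 1)


class CursorSmoother:
    """Exponential moving average that damps jitter in the detected hand position."""

    def __init__(self, alpha=0.3):
        self.alpha = alpha  # 0 < alpha <= 1; smaller is smoother but laggier
        self.pos = None

    def update(self, point):
        if self.pos is None:
            self.pos = point
        else:
            px, py = self.pos
            self.pos = (px + self.alpha * (point[0] - px),
                        py + self.alpha * (point[1] - py))
        return self.pos
```

For example, `camera_to_screen(320, 240, (640, 480), (1920, 1080), mirror=False)` lands near the centre of a 1920x1080 screen; feeding each mapped point through `CursorSmoother.update` trades a little lag for a steadier cursor.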

The main objective of gesture recognition is to detect and recognize a particular human body gesture and convey information to the computer. The overall objective of the system in [5] was to have human gestures recognized by a computer so that a wide variety of remote devices could be controlled using different hand poses. Hand gesture recognition based on robotic computer vision has been used to control devices such as digital TVs and PlayStation consoles. Hand gesture recognition for sign language has lately been considered an important research area. However, such systems face common problems caused by differences in skin tone, complex and cluttered environments, and the variety of static and dynamic hand gestures. Hand gesture recognition for controlling a TV was proposed in [6]. In that system, a single hand movement is used to control the TV: a hand-shaped icon that follows the user's hand movements appears on the TV screen. In [7], a real-time human-computer interface (HCI) model was developed that recognizes hand gestures with a monocular camera and extends the system to the human-robot interaction (HRI) case. That system relies on a Convolutional Neural Network classifier to extract features and recognize particular hand gestures.

The Hidden Markov Model (HMM) is considered a crucial tool for the real-time recognition of dynamic gestures.

The system in [8] employs HMMs in real time and is designed to operate in static environments. For training, the proposed methodology uses the Left-Right Banded (LRB) HMM topology with the Baum-Welch algorithm; for testing, it uses the Forward and Viterbi algorithms to evaluate the input sequences and build the most probable state sequence for pattern recognition [8].

In [9], although the developed model is easier to handle than the newest available systems or command-based systems, its drawback is that it is less powerful at spotting and recognizing gestures. The existing system therefore needs improvement, and a better network for gesture recognition must be built for both complex backgrounds and normal lighting conditions. This system is built for a total of six classes. Nevertheless, the existing model can be used to control applications such as PowerPoint presentations, Windows Picture Manager, media players, and games.

In [10], an Arduino Uno and ultrasonic sensors are used to perform operations on a laptop such as controlling a media player and increasing or decreasing the volume; the system uses an Arduino, ultrasonic sensors, and Python for the serial connection. Such a system can be used in classrooms for interactive and effective learning.
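The Forward and Viterbi algorithms that [8] uses at test time can be sketched as follows for a discrete-observation HMM with a Left-Right Banded topology, in which each state may only stay where it is or move to the next state. This is a minimal NumPy sketch under stated assumptions: the toy transition, emission, and initial probabilities are made up for illustration, the observations are assumed to be already quantized into discrete symbols (for example, codes for hand-movement direction), and Baum-Welch training is not shown.

```python
import numpy as np


def lrb_transitions(n_states, stay=0.6):
    """Left-Right Banded transition matrix: stay in state i or move to i + 1."""
    a = np.zeros((n_states, n_states))
    for i in range(n_states - 1):
        a[i, i] = stay
        a[i, i + 1] = 1.0 - stay
    a[-1, -1] = 1.0  # the final state absorbs
    return a


def forward(obs, pi, a, b):
    """Forward algorithm: probability of the observation sequence under the model."""
    alpha = pi * b[:, obs[0]]
    for o in obs[1:]:
        alpha = (alpha @ a) * b[:, o]
    return alpha.sum()


def viterbi(obs, pi, a, b):
    """Viterbi algorithm: most probable hidden-state sequence (computed in log space)."""
    with np.errstate(divide="ignore"):  # log(0) -> -inf is intended here
        log_pi, log_a, log_b = np.log(pi), np.log(a), np.log(b)
    delta = log_pi + log_b[:, obs[0]]
    backptr = []
    for o in obs[1:]:
        scores = delta[:, None] + log_a  # scores[i, j]: best path ending with i -> j
        backptr.append(scores.argmax(axis=0))
        delta = scores.max(axis=0) + log_b[:, o]
    path = [int(delta.argmax())]
    for bp in reversed(backptr):
        path.append(int(bp[path[-1]]))
    return path[::-1]


# Toy 3-state gesture model over 3 observation symbols (values are illustrative).
pi = np.array([1.0, 0.0, 0.0])  # LRB models start in the first state
a = lrb_transitions(3)
b = np.array([[0.8, 0.1, 0.1],
              [0.1, 0.8, 0.1],
              [0.1, 0.1, 0.8]])
obs = [0, 0, 1, 1, 2]
print(forward(obs, pi, a, b), viterbi(obs, pi, a, b))
```

In a recognizer, one such model would be trained per gesture and a test sequence assigned to the model with the highest forward probability. For long sequences the plain forward pass underflows, so a scaled or log-space version would be needed.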

In [11], a hand gesture recognition system based on an Arduino UNO and several ultrasonic sensors controls a device, for example playing and pausing videos in VLC and scrolling pages up and down. [12] proposes a convenient hand gesture monitoring system based on ultrasonic sensors and built with an ATmega32 Arduino microcontroller. The authors claim that no extra hardware is required to classify hand gestures and that simple, low-cost ultrasonic sensors can detect different distance ranges to identify hand gestures. In [13], a hand gesture system based on an Arduino UNO, Python programming, and a wired ultrasonic sensor is developed to control a device, with operations such as zooming in/out and image rotation. It is a successful trial of a hand-motion sensing system using sensors and Arduino kits in wireless (radio-frequency) mode. In [14], a hand gesture recognition system for Microsoft Office and media players was developed with its own dataset.
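The ultrasonic approaches in [10]-[13] rely on the sensor's echo time being proportional to the hand's distance. Below is a minimal sketch of the host-side logic, assuming the Arduino already reports the echo round-trip time in microseconds for a left and a right sensor. The distance bands, gesture labels, and two-sensor layout are hypothetical illustrations, not the thresholds used in those papers, and reading the values over the serial port is omitted.

```python
SPEED_OF_SOUND_CM_PER_US = 0.0343  # about 343 m/s in air at 20 degrees C


def echo_to_cm(echo_us: float) -> float:
    """Convert an ultrasonic echo round-trip time (microseconds) to distance (cm)."""
    # The pulse travels to the hand and back, so halve the round-trip distance.
    return echo_us * SPEED_OF_SOUND_CM_PER_US / 2


def classify_ultrasonic(left_cm: float, right_cm: float,
                        near: float = 15.0, far: float = 35.0) -> str:
    """Map two sensor distances to a coarse, hypothetical gesture label."""
    left_in = left_cm <= far
    right_in = right_cm <= far
    if left_in and right_in:
        return "both_hands"  # e.g. play/pause
    if left_in:
        return "left_near" if left_cm <= near else "left_far"  # e.g. volume
    if right_in:
        return "right_near" if right_cm <= near else "right_far"  # e.g. scroll
    return "none"
```

An echo of 1000 µs corresponds to roughly 17 cm, so with these bands a hand held about 10 cm in front of the left sensor and nothing in front of the right one yields `"left_near"`.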

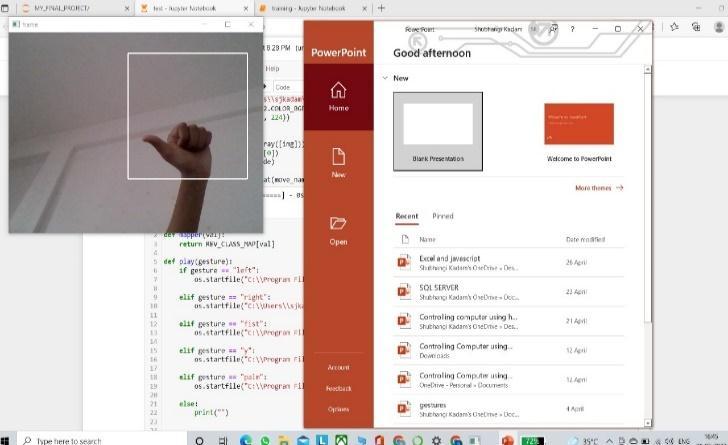

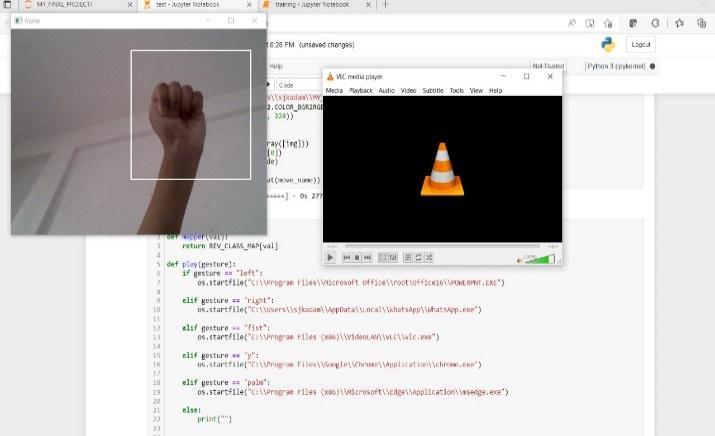

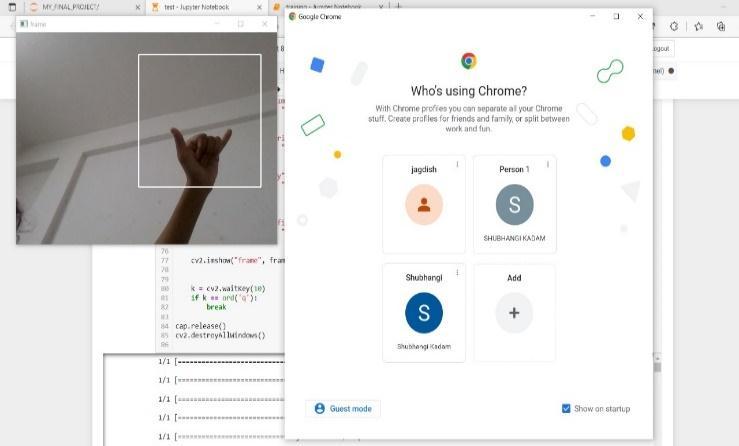

We tried to use an available dataset, but we faced an overfitting problem, so we created our own dataset for training the model. We used a total of 10 different hand gestures to perform activities such as opening WhatsApp, a PowerPoint presentation, Microsoft Word, Microsoft Edge, Google Chrome, a video player, Xbox, and Paint. We used a total of 3000 images for training, 2000 images for testing, and 500 images for validation.
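One reproducible way to produce a 3000/500/2000 train/validation/test split like the one above is a seeded shuffle of the image file list. This is a hypothetical sketch: the paper does not say how its split was made, and the function name and file-name pattern are assumptions.

```python
import random


def split_dataset(paths, n_train=3000, n_val=500, n_test=2000, seed=42):
    """Shuffle file paths reproducibly and slice them into train/val/test lists."""
    if len(paths) < n_train + n_val + n_test:
        raise ValueError("not enough images for the requested split sizes")
    shuffled = list(paths)
    random.Random(seed).shuffle(shuffled)  # local RNG, global state untouched
    train = shuffled[:n_train]
    val = shuffled[n_train:n_train + n_val]
    test = shuffled[n_train + n_val:n_train + n_val + n_test]
    return train, val, test


# Hypothetical file names: 10 gestures x 550 images each = 5500 images.
files = [f"gesture_{g}/img_{i:04d}.jpg" for g in range(10) for i in range(550)]
train, val, test = split_dataset(files)
print(len(train), len(val), len(test))  # 3000 500 2000
```

Because consecutive webcam frames of the same gesture are nearly identical, a purely random split can put near-duplicates into both the training and test sets and inflate test accuracy; splitting by recording session avoids that.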


In this module, we first take a look at the dataset, which contains 3000 training images, 2000 test images, and 500 validation images. We performed image data augmentation with Keras's ImageDataGenerator, which augments images in real time while the model is training: random transformations are applied to each image from the dataset as it is passed to the model. This not only makes the model more robust but also saves memory, because the augmented images never have to be stored.
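To show the idea behind this on-the-fly augmentation without depending on Keras, here is a minimal NumPy generator that applies a random shift and brightness change to each image every time a batch is drawn. The function name and the transformation ranges are illustrative assumptions, not the settings used in this work. Horizontal flips are deliberately left out, since mirroring a hand can turn one gesture into a different one.

```python
import numpy as np


def augment_batches(images, labels, batch_size=32, max_shift=4,
                    brightness=0.2, seed=0):
    """Yield an endless stream of randomly shifted, brightness-jittered batches.

    `images` is an (N, H, W) or (N, H, W, C) array with values in [0, 1];
    `labels` is an (N,) array. Only the current batch is transformed, so no
    augmented copies of the dataset are ever stored.
    """
    images = np.asarray(images)
    labels = np.asarray(labels)
    if batch_size > len(images):
        raise ValueError("batch_size larger than the dataset")
    rng = np.random.default_rng(seed)
    while True:
        idx = rng.choice(len(images), size=batch_size, replace=False)
        batch = images[idx].astype(np.float64)  # astype copies; originals untouched
        for k in range(batch_size):
            dy, dx = rng.integers(-max_shift, max_shift + 1, size=2)
            # Random translation; np.roll wraps pixels around the border,
            # a simplification compared with padding.
            batch[k] = np.roll(batch[k], shift=(int(dy), int(dx)), axis=(0, 1))
            # Random brightness scaling by a factor in [1 - b, 1 + b].
            batch[k] *= 1.0 + rng.uniform(-brightness, brightness)
        yield np.clip(batch, 0.0, 1.0), labels[idx]
```

A training loop would call `next()` on the generator once per step, so every epoch sees freshly transformed versions of the same images.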

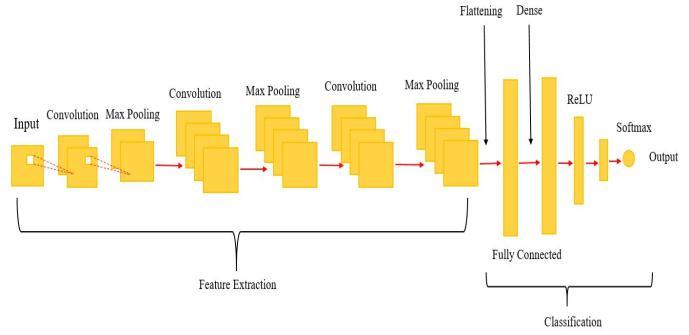

Our next task is to train the hand gesture detector model. For this, we used a Convolutional Neural Network (CNN) architecture adapted from SqueezeNet and VGGNet. The features learned by a CNN remain hidden, so the network is treated as a black box.
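A quick parameter count shows why borrowing from SqueezeNet as well as VGGNet is attractive: SqueezeNet's "fire" module first squeezes the channels with 1x1 convolutions and then expands them with a mix of 1x1 and 3x3 convolutions, whereas VGGNet stacks plain 3x3 convolutions. The channel sizes below (128 input channels, a squeeze to 16, expands of 64 + 64) are illustrative and are not the configuration of our model.

```python
def conv_params(k, c_in, c_out, bias=True):
    """Number of trainable parameters in a k x k convolution layer."""
    return (k * k * c_in + (1 if bias else 0)) * c_out


def fire_params(c_in, squeeze, expand1, expand3):
    """Parameters of a SqueezeNet fire module: squeeze 1x1, then expand 1x1 + 3x3."""
    return (conv_params(1, c_in, squeeze)
            + conv_params(1, squeeze, expand1)
            + conv_params(3, squeeze, expand3))


vgg_style = conv_params(3, 128, 128)  # one VGG-style 3x3 conv, 128 -> 128 channels
fire = fire_params(128, 16, 64, 64)   # fire module, also 128 -> 128 channels
print(vgg_style, fire, round(vgg_style / fire, 1))  # 147584 12432 11.9
```

Both blocks map 128 channels to 128 channels, but the fire module needs roughly twelve times fewer weights, which matters for a model that has to run in real time on a webcam stream.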

features to the next layer. The third step will involve flattening, flatten is the function that converts the pooled feature map to a single column that is passed to the fully connected layer. Fully Connected layers, in this part, everythingthatwecoveredthroughoutthesectionwillbe mergedtogether.Denseaddsthefullyconnectedlayertothe neuralnetwork.Inthedenselayerneuronsaresupposedto connect deeply. Rectified Linear Unit or ReLU is a linear functionthatwill outputthe input directlyifitis positive, otherwise,itwilloutputzero.Mathematically,itisdefinedas y=max(0,x).Thesoftmaxfunctiontransformsinputvalue intovaluesbetween0and1,sothattheycanbeinterpreted asprobabilities.Finaloutputofthislayerwillalwaysremain between 0 and 1. For this reason it is usual to append a softmax function as the final layer of the neural network. Softmaxisoftenusedasthefinallayerinthenetwork,fora classificationtask.

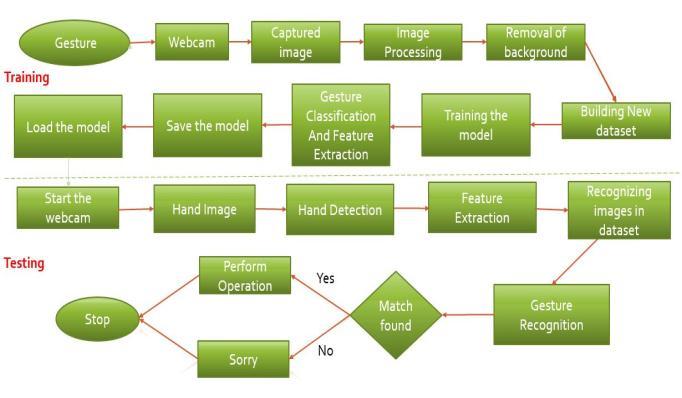

We used Adam optimizer because accuracy rate of this optimizerismuchbetterthanothers.Thewholeworkflowof ourproposedsystemisexplainedthroughfollowingimage Fig4,

In this, for creating the model, we have used VGG19 (inceptionlearning).VGGisasuccessorofAlexNet.VGG19is adeepconvolutionneuralnetworkhavingconvolutionlayer, pooling layer (max pool), fully connected layer, ReLU and softmax in its architecture. The first step is convolution operation.Inthisstep,featuredetectorsaremapped,which basicallyserveasthe neural network'sfilters.Thesecond step is pooling, pooling layers are used to minimize the dimensionsofthefeaturemaps.However,willuseaspecific typeofpooling,maxpooling.Maxpoolingselectsmaximum
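The layer operations described above (convolution, max pooling, flattening, a dense layer with ReLU, and a softmax output) can be illustrated with a tiny NumPy forward pass. This is a teaching sketch with random weights, a single 3x3 filter, and a made-up 8x8 input, not the VGG19 model we trained; as in most deep learning libraries, the "convolution" is implemented as cross-correlation.

```python
import numpy as np


def conv2d(image, kernel):
    """Valid 2D convolution (cross-correlation, as in CNN libraries), stride 1."""
    kh, kw = kernel.shape
    h, w = image.shape
    out = np.zeros((h - kh + 1, w - kw + 1))
    for i in range(out.shape[0]):
        for j in range(out.shape[1]):
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out


def max_pool(fmap, size=2):
    """Non-overlapping max pooling; rows/columns that do not fit are dropped."""
    h, w = fmap.shape[0] // size, fmap.shape[1] // size
    trimmed = fmap[:h * size, :w * size]
    return trimmed.reshape(h, size, w, size).max(axis=(1, 3))


def relu(x):
    return np.maximum(0.0, x)  # y = max(0, x), element-wise


def softmax(z):
    e = np.exp(z - z.max())  # subtracting the max avoids overflow
    return e / e.sum()


rng = np.random.default_rng(0)
image = rng.random((8, 8))            # stand-in for a grayscale hand crop
kernel = rng.standard_normal((3, 3))  # one filter (random instead of learned)

fmap = relu(conv2d(image, kernel))    # step 1: convolution + ReLU -> 6x6
pooled = max_pool(fmap)               # step 2: max pooling -> 3x3
flat = pooled.reshape(-1)             # step 3: flattening -> 9 values
w1, b1 = rng.standard_normal((9, 16)), np.zeros(16)
w2, b2 = rng.standard_normal((16, 10)), np.zeros(10)
hidden = relu(flat @ w1 + b1)         # fully connected (Dense) layer + ReLU
probs = softmax(hidden @ w2 + b2)     # 10 gesture classes -> probabilities
print(probs.round(3), probs.sum())
```

The printed probabilities are non-negative and sum to 1, which is what lets the largest one be read as the predicted gesture.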

After creating the model, we compiled and trained it. We then checked its accuracy, visualized the results by plotting graphs, and saved the model, which completed the training module. The complete task is to take an image of the hand gesture as input from the webcam and process it with the CNN, which compares it against the gesture classes learned from the dataset, in order to detect the hand movement accurately. The captured image is preprocessed, and a hand detector tries to isolate the hand region from the captured image. A CNN classifier then recognizes the gesture from the processed image after feature extraction.
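Because the model was compiled with the Adam optimizer, here is a minimal NumPy sketch of the Adam update rule (Kingma and Ba) applied to a toy one-parameter loss, f(x) = (x - 3)^2, rather than to the network's weights. The moment coefficients and epsilon are the commonly quoted defaults (beta1 = 0.9, beta2 = 0.999, eps = 1e-8); the learning rate is raised to 0.1 so the toy problem converges quickly. None of these values are taken from our training configuration.

```python
import numpy as np


def adam_minimize(grad, x0, lr=0.1, beta1=0.9, beta2=0.999, eps=1e-8, steps=1000):
    """Minimize a function, given its gradient `grad`, with the Adam update rule."""
    x = np.asarray(x0, dtype=np.float64)
    m = np.zeros_like(x)  # first-moment (mean) estimate of the gradient
    v = np.zeros_like(x)  # second-moment (uncentred variance) estimate
    for t in range(1, steps + 1):
        g = grad(x)
        m = beta1 * m + (1 - beta1) * g
        v = beta2 * v + (1 - beta2) * g ** 2
        m_hat = m / (1 - beta1 ** t)  # bias correction: m and v start at zero
        v_hat = v / (1 - beta2 ** t)
        x = x - lr * m_hat / (np.sqrt(v_hat) + eps)
    return x


# Toy loss f(x) = (x - 3)^2 with gradient 2 (x - 3); the minimum is at x = 3.
x_min = adam_minimize(lambda x: 2 * (x - 3), x0=[0.0])
print(x_min)
```

Dividing by the running root-mean-square of the gradient gives each parameter its own effective step size, which is one reason Adam often trains CNNs with little learning-rate tuning.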

With the trained model in place, the system detects the hand, extracts features, and, if a gesture is recognized, performs the corresponding operation. We also added a feature to our implementation that tells the user which action has been performed.
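The recognize-then-act loop described above can be sketched as a small, framework-agnostic class: it takes the class probabilities produced by the classifier for each frame, ignores low-confidence predictions, waits until the same gesture has been seen for several consecutive frames, looks up the assigned action, and returns a message telling the user what was done. The gesture names, the gesture-to-application table, the 0.8 threshold, the frame count, and all names are hypothetical; the real system opens applications such as those listed earlier, which this sketch replaces with an injectable `launch` callback so it stays side-effect free.

```python
from collections import deque

import numpy as np

# Hypothetical class order and gesture-to-action table (not the paper's mapping).
GESTURES = ["fist", "palm", "up", "cross", "scissor"]
ACTIONS = {
    "palm": "Paint",
    "up": "Google Chrome",
    "cross": "Microsoft Word",
    "scissor": "WhatsApp",
}


class GestureController:
    """Turn per-frame class probabilities into debounced application launches."""

    def __init__(self, launch, threshold=0.8, stable_frames=5):
        self.launch = launch        # callback that actually opens an application
        self.threshold = threshold  # minimum softmax probability to trust
        self.recent = deque(maxlen=stable_frames)
        self.last_fired = None

    def on_frame(self, probs):
        probs = np.asarray(probs)
        best = int(probs.argmax())
        label = GESTURES[best] if probs[best] >= self.threshold else None
        self.recent.append(label)
        # Require the same prediction over several consecutive frames, so one
        # misclassified frame does not launch an application.
        stable = len(self.recent) == self.recent.maxlen and len(set(self.recent)) == 1
        if not stable:
            return None
        if label is None:
            self.last_fired = None  # hand removed or uncertain: re-arm
            return None
        if label == self.last_fired:
            return None  # already handled; wait for a different gesture
        self.last_fired = label
        app = ACTIONS.get(label)
        if app is None:
            return f"Gesture '{label}' has no assigned action"
        self.launch(app)
        return f"Gesture '{label}' recognized: opening {app}"
```

Holding a gesture therefore triggers its action once, and the returned string is what the interface would show to tell the user which action was performed.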


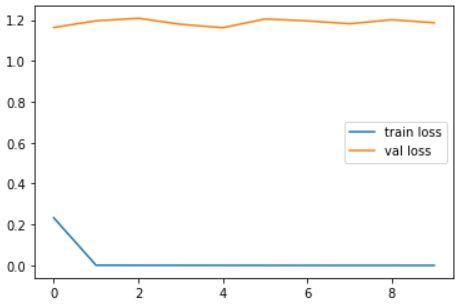

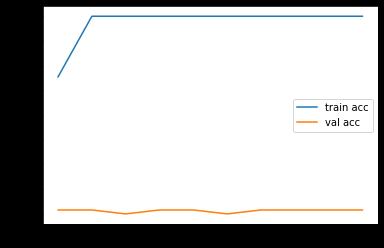

The following two plots show training/validation accuracy and training/validation loss. We obtained a validation accuracy of 80.40%.

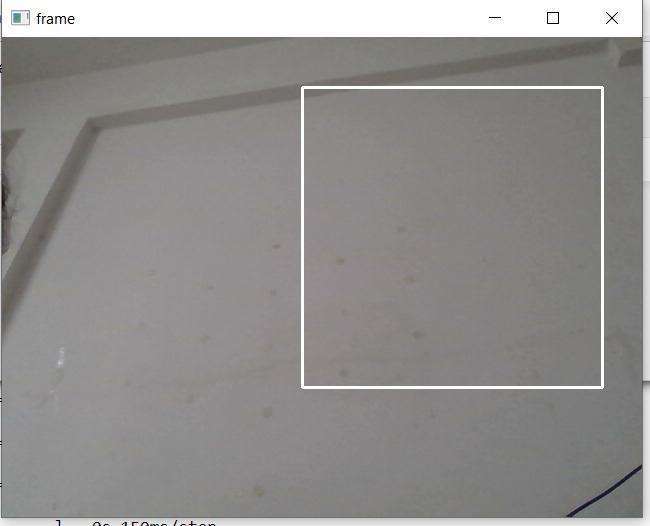

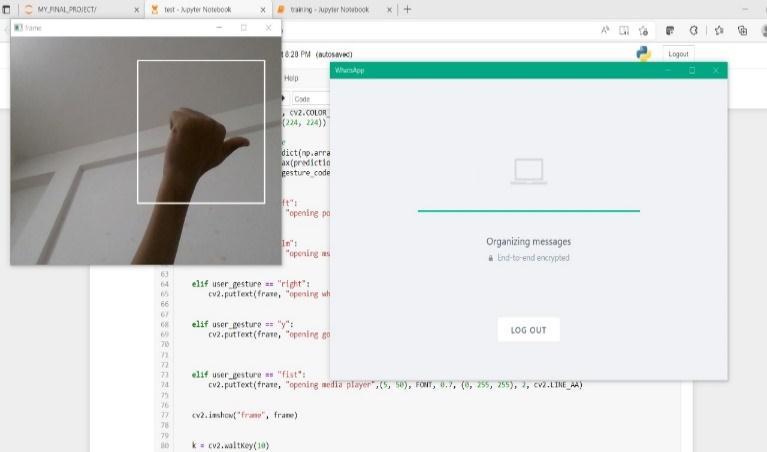

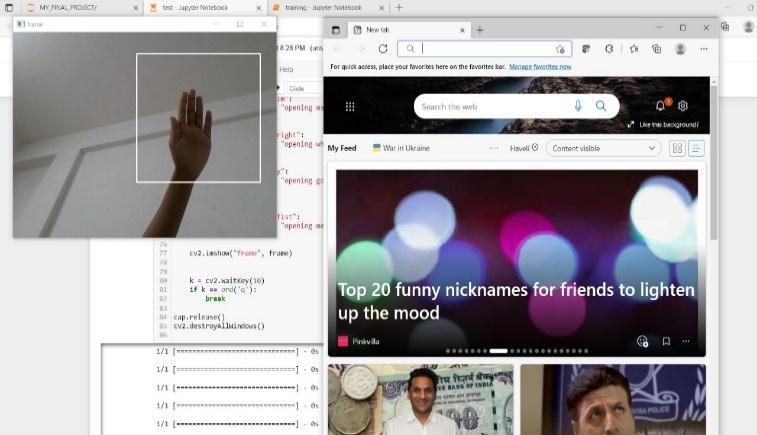
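Training curves like these are usually read by finding the epoch with the best validation score and by watching for a widening gap between training and validation accuracy, which signals overfitting. Below is a small helper for that, assuming the per-epoch values are available as lists (Keras returns them in `History.history`). The function name, the 10-point gap threshold, and the toy numbers in the example are illustrative only and are not our training results.

```python
def summarize_history(train_acc, val_acc, gap_threshold=0.10):
    """Return (best validation epoch, its accuracy, epochs with a large train/val gap).

    Epoch numbers are 1-based.
    """
    if len(train_acc) != len(val_acc) or not val_acc:
        raise ValueError("need equal-length, non-empty accuracy lists")
    best = max(range(len(val_acc)), key=lambda i: val_acc[i])
    overfit = [i + 1 for i, (tr, va) in enumerate(zip(train_acc, val_acc))
               if tr - va > gap_threshold]
    return best + 1, val_acc[best], overfit


# Toy curves (made up for illustration).
train = [0.55, 0.70, 0.82, 0.91, 0.96]
val = [0.52, 0.66, 0.74, 0.76, 0.75]
print(summarize_history(train, val))  # (4, 0.76, [4, 5])
```

In this toy run, validation accuracy peaks at epoch 4 while the train/validation gap keeps growing, the usual cue to stop training early or add more augmentation.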

Below are some demonstrations of the developed system. We assigned a total of 10 hand gestures to perform different operations.

Fig 9. Gesture detecting frame

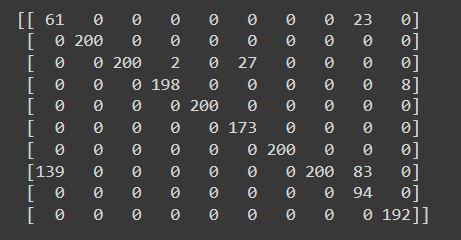

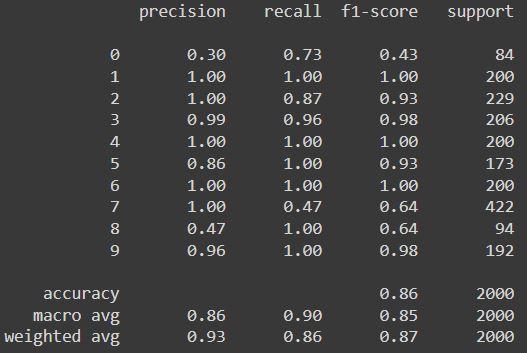

We obtained the following evaluation metrics. Our model confuses some of the gestures, such as cross, scissor, and up. We achieved 85.90% accuracy when testing the model. The classification reports we obtained follow.

Fig 10. Opening WhatsApp
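Test accuracy and a per-class classification report can both be computed from a confusion matrix. Here is a minimal NumPy sketch, similar in spirit to scikit-learn's `classification_report` but not a reproduction of it; the toy labels are invented to show one gesture ("cross") being confused with another ("scissor") and are not our test data.

```python
import numpy as np


def confusion_matrix(y_true, y_pred, n_classes):
    """cm[i, j] counts samples whose true class is i and predicted class is j."""
    cm = np.zeros((n_classes, n_classes), dtype=int)
    for t, p in zip(y_true, y_pred):
        cm[t, p] += 1
    return cm


def per_class_report(cm):
    """Precision, recall and F1 per class, plus overall accuracy."""
    tp = np.diag(cm).astype(float)
    predicted = cm.sum(axis=0)  # column sums: how often each class was predicted
    actual = cm.sum(axis=1)     # row sums: support of each class
    precision = np.divide(tp, predicted, out=np.zeros_like(tp), where=predicted > 0)
    recall = np.divide(tp, actual, out=np.zeros_like(tp), where=actual > 0)
    denom = precision + recall
    f1 = np.divide(2 * precision * recall, denom, out=np.zeros_like(tp), where=denom > 0)
    accuracy = tp.sum() / cm.sum()
    return precision, recall, f1, accuracy


names = ["cross", "scissor", "up"]
y_true = [0, 0, 0, 0, 1, 1, 1, 1, 2, 2]
y_pred = [0, 0, 1, 1, 1, 1, 1, 0, 2, 2]
cm = confusion_matrix(y_true, y_pred, len(names))
precision, recall, f1, accuracy = per_class_report(cm)
for name, p, r, f in zip(names, precision, recall, f1):
    print(f"{name:8s} precision={p:.2f} recall={r:.2f} f1={f:.2f}")
print(f"accuracy={accuracy:.2f}")
```

The off-diagonal entries of `cm` show directly which gestures are mistaken for which, something a single accuracy figure hides.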


With the growth of present technology, and because humans generally use hand movements, that is, hand gestures, in daily communication to make their intentions clearer, hand gesture identification is treated as a crucial part of Human-Computer Interaction (HCI): it gives devices the capability to detect and classify hand gestures and then perform activities accordingly. Research and analysis in the field of hand gestures has become more popular and exciting, and it allows natural and simple interaction. Standard interaction techniques rely on tools such as a mouse, keyboard/touch pad, touch screen, joystick for gaming, and consoles for system management.

In this paper, we have also given an overall review of gesture acquisition methods, the feature extraction process, the classification of hand gestures, and the challenges that researchers face in the hand gesture recognition process. In this application, we developed a deep learning model for controlling a computer using hand gestures with the help of Python and OpenCV. The model is cost-effective because it does not use any extra devices or sensors. The project can be summarized as creating a suitable dataset, training the model, and testing it in real time. The project has limited scope: we assigned a total of 10 hand gestures to perform different operations, but in the future more operations, such as volume up/down, scroll up/down, and swipe left/right, can be added, making it possible to build a device controlled completely by hand gestures. Hand gesture recognition is used in many different applications, such as robotics, sign language recognition, HCI, digit and alphanumeric recognition, home automation, medical applications, and gaming. Hand gesture recognition provides an interesting interaction field in several different computer science applications.

[1] M. Krueger, Artificial Reality II, Addison-Wesley, Reading (MA), 1991.

[2] Horatiu-Stefan Grif and Cornel Cristian Farcas, "Mouse Cursor Control System Based on Hand Gesture", 9th International Conference Interdisciplinarity in Engineering, INTER-ENG 2015, 8-9 October 2015, Tirgu Mures, Romania.

[3] H. A. Jalab, "Static hand gesture recognition for human computer interaction", Asian Network for Scientific Information Technology Journal, pp. 1-7, 2012.

[4] J. C. Manresa, R. Varona, R. Mas and F. Perales, "Hand tracking and gesture recognition for human-computer interaction", Electronic Letters on Computer Vision and Image Analysis, vol. 5, pp. 96-104, 2005.


[5] H. Hasan and S. Abdul-Kareem, "Static hand gesture recognition using neural networks", Artificial Intelligence Review, vol. 41, pp. 147-181, 2014.

[6] W. Freeman and C. Weissman, "Television control by hand gestures", Proc. of Intl. Workshop on Automatic Face and Gesture Recognition 1995, pp. 179-183, 1995.

[7] Pei Xu, "A Real-time Hand Gesture Recognition and Human-Computer Interaction System", arXiv:1704.07296v1 [cs.CV], 24 Apr 2017.

[8] J. R. Pansare, Malvika Bansal, Shivin Saxena, Devendra Desale, "Gestuelle: A System to Recognize Dynamic Hand Gestures using Hidden Markov Model to Control Windows Applications", International Journal of Computer Applications (0975-8887), Volume 62, No. 17, January 2013.

[9] Ram Pratap Sharma and Gyanendra K. Verma, "Human Computer Interaction using Hand Gesture", Eleventh International Multi-Conference on Information Processing 2015 (IMCIP-2015).

[10] Rohit Mukherjee, Pradeep Swethen, Ruman Pasha, Sachin Singh Rawat, "Hand Gestured Controlled laptop using Arduino", International Journal of Management, Technology And Engineering, October 2018.

[11] Ayushi Bhawal, Debaparna Dasgupta, Arka Ghosh, Koyena Mitra, Surajit Basak, "Arduino Based Hand Gesture Control of Computer", IJESC, Volume 10, Issue No. 6, June 2020.

[12] Udit Kumar, Sanjana Kintali, Kolla Sai Latha, Asraf Ali, N. Suresh Kumar, "Hand Gesture Controlled Laptop Using Arduino", April 2020.

[13] Sarita K., Gavale Yogesh, S. Jadhav, "Hand Gesture Detection Using Arduino and Python for Screen Control", International Journal of Engineering Applied Sciences and Technology, 2020, Vol. 5, Issue 3, ISSN No. 2455-2143, July 2020.

[14] Ram Pratap Sharma, Gyanendra Varma, "Human computer interaction using hand gesture", 2015.

© 2022, IRJET | Impact Factor value: 7.529 | ISO 9001:2008 Certified Journal