International Research Journal of Engineering and Technology (IRJET) | e-ISSN: 2395-0056 | p-ISSN: 2395-0072 | Volume: 09 Issue: 06 | June 2022 | www.irjet.net

Face Recognition Smart Attendance System: (InClass System)

Abstract:

Biometrics is the study of human features and characteristics. Although face recognition alone cannot serve as a complete security solution, it helps to some extent. Face recognition is a rising field within object detection and is used in areas such as attendance, security, and medicine. Attendance is vitally important in schools and universities. Manual attendance systems have various drawbacks, such as being less accurate and difficult to maintain. Nowadays, we see several alternatives, such as systems based on IoT and passive infrared (PIR) sensors, as well as various models. Sensor-based systems require the sensor to be kept in good condition so that it does not become damaged. With the various models, we confront problems such as selecting which features to use or, more importantly, managing variance in lighting, pose, and size. As a result, we are attempting to construct an "InClass" solution that addresses the above-mentioned issues and digitally provides a valid attendance sheet. For this, we used a CNN face detector that is both highly accurate and very robust, capable of detecting faces across varying angles, lighting conditions, and occlusion.

Keywords: Face Recognition, Face Feature, Face Selection, Feature Extraction

I. Introduction

In today's world, face recognition is changing the field of biometrics. In this technique, we use people's faces for identification. Each person has unique facial traits, so it is easy to differentiate or uniquely identify an individual. Face recognition, which has performed strongly in various disciplines, has the potential to be employed efficiently in security systems but has not been fully explored owing to obvious weaknesses. The traditional pen-and-paper system has its pros and cons. The manual attendance marking method is error-prone and time consuming, which is a setback for students. To address this issue, advances have resulted in the widespread usage of biometrics. However, biometric techniques for attendance come at an uncomfortably high cost for users and are very time consuming on the user's part. Face recognition is therefore a very valuable technology, and we develop strategies to incorporate it into our system.

Attendance management with biometrics is being developed and adopted as multi-tech classrooms become more prevalent. In most cases, iris recognition or thumb scanning is used in attendance management. With time, advances are also required to keep up with ever-increasing technology. As marking procedures advance, the urgent need is to remove impediments such as device complexity and delays, and to record genuine attendance.

Unlike traditional systems, which are comparatively slow and error-prone, the InClass system employs face recognition to identify students and note their attendance. Our system requires no equipment other than a camera or laptop. The students' presence is validated via their faces. This method is very effective for recording attendance and keeping the record with the person taking the attendance (instructor, administration). Algorithms are employed to match the students' faces with those in the database. The system also includes a mail function, and attendance is stored on a drive, which reduces paper usage and allows the attendance record to be fetched easily from anywhere whenever it is required.

From our reading of older projects, systems using biometrics such as thumb scanners are very time consuming because they mark attendance one person at a time, and such products are also very difficult to maintain. As we are in the 21st century, the technology needs to be upgraded, which is why we propose the "InClass System" to overcome these problems.

II. Literature Survey

Sajid and colleagues (2014) [1] developed a model for identifying people even when females wear headscarves and males have beards. For face detection, they use a Local Binary Algorithm (LBA), and they use fiducial points for matching the face. The system uses two databases: the first contains previously saved photos, while the second holds the attendance data used to check attendance.

They mark attendance from a captured image. They first remove the background and noise from the image and then, using a Gabor filter, mark 31 fiducial points, which help calculate facial features. The result is matched against the database, and attendance is marked. They capture images three times during a lecture to validate attendance.

Raghuwanshi et al. (2017) [2] compare two feature extraction methods: Principal Component Analysis (PCA) and Linear Discriminant Analysis (LDA). PCA is used to reduce the number of variables in face recognition, and LDA is used to minimize the within-class scatter, which means moving images of the same face closer together. For their comparison, they selected three parameters: time elapsed, subspace projection, and accuracy, evaluated on the ORL database and a class database.

They use two databases: the ORL database, which contains 400 images of 40 individuals, and the class database, which includes 25 images of 5 individuals. They also plot ROC and CMC curves to analyze and compare results on the two databases. The ROC curve shows performance across the different possible cut-off points of a diagnostic test, and the CMC curve measures recognition performance. PCA and LDA work well in normal light, at a distance of 1 to 3 feet from the camera, and with no pose variation.

Winarno et al. (2017) [3] use a 3WPCA (three-level wavelet decomposition Principal Component Analysis) method for face recognition. Initially, they capture images of a person from two cameras, on the left and right. After capturing the images, they normalize them. Normalization is done in two steps: preprocessing and half joint. The half joint is used to minimize the forgery of facial data. Preprocessing consists of RGB-to-gray conversion, cropping, resizing, and adjusting contrast and brightness.

For feature extraction, they use 3WPCA, in which the dimensionality of the images is reduced so that feature extraction using PCA is very fast. For classification, they tested two methods: Euclidean distance and Mahalanobis distance. For testing, they consider two parameters, recognition rate and recognition time, on which the Mahalanobis distance gives better results. They achieve 98% accuracy on a small dataset.
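The difference between the two distance measures can be illustrated with a minimal sketch. It is not the implementation from [3]: the feature vectors, the labels, and the simplification to a diagonal covariance matrix (per-feature variances only) are assumptions made for illustration.

```python
import math

def euclidean(a, b):
    # Straight-line distance; every feature contributes on its raw scale.
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def feature_variances(vectors):
    # Per-feature variance of the gallery (diagonal covariance assumption).
    n = len(vectors)
    dims = len(vectors[0])
    means = [sum(v[d] for v in vectors) / n for d in range(dims)]
    return [sum((v[d] - means[d]) ** 2 for v in vectors) / n + 1e-9
            for d in range(dims)]

def mahalanobis_diag(a, b, variances):
    # Mahalanobis distance with a diagonal covariance: each squared
    # difference is divided by the variance of that feature.
    return math.sqrt(sum((x - y) ** 2 / v for x, y, v in zip(a, b, variances)))

def nearest(probe, gallery, dist):
    # Label of the gallery vector closest to the probe under `dist`.
    return min(gallery, key=lambda label: dist(probe, gallery[label]))

# Hypothetical two-feature gallery; the second feature has a far larger scale.
gallery = {"alice": [1.0, 100.0], "bob": [2.0, 10.0]}
variances = feature_variances(list(gallery.values()))
probe = [1.9, 60.0]

by_euclidean = nearest(probe, gallery, euclidean)
by_mahalanobis = nearest(
    probe, gallery, lambda a, b: mahalanobis_diag(a, b, variances))
```

In this toy gallery the two measures pick different identities because the Mahalanobis distance rescales each feature by its spread, which is one reason it can behave differently from the Euclidean distance on projected face features.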

Soniya et al. (2017) [4] proposed an IoT-based system that uses an Arduino UNO and a camera. The system creates a student database and lets the user add new entries, which helps register new users quickly. They use the PCA algorithm for feature detection and face recognition. They also try to establish a feature whereby students who leave the class midway and do not re-enter within 15 minutes are marked absent. For face recognition, they use face tracking and face location: face tracking handles the size, length, and breadth in pixels of the face, and face location detects a suitable location. They plotted the FMR (false match rate, recorded when an impostor is accepted as a match) and the FNMR (false non-match rate, recorded when a genuine user is blocked) on a graph.

In this system, they use a camera with an image resolution of 300k pixels and light sensors that switch on 4 LEDs in the dark. The camera provides sharpness, image control, brightness, and saturation settings. The main drawback is that attendance is taken one student at a time, which is very time consuming for many people.

Nazare et al. (2016) [5] proposed a system using a combination of alignment-free partial face recognition and the Viola-Jones algorithm. The alignment-free partial face algorithm uses MKD (Multi-Keypoint Descriptor), which is used for probe images and dictionary creation. Each image in the dictionary is given a sparse representation, and GTP (Gabor Ternary Pattern) is then used for robust and discriminative face recognition. This makes it easy to detect a person.

To create the data, they provide a registration feature that takes three images of each person: front, left, and right side views. They also place a camera at the middle top of the blackboard to cover the maximum number of faces. They consider a one-hour lecture and take three images of the class at 20-minute intervals so that they get a valid result. To capture images, they use a camera with a resolution of 20 megapixels.

Wagh et al. (2015) [6] base their work on Principal Component Analysis (PCA) and the EigenFace algorithm. They address issues such as head pose and light intensity. For these problems, they use techniques like the illumination-invariant Viola-Jones algorithm. They also use RGB-to-gray conversion, histogram normalization, and skin classification to improve face detection accuracy.

They apply this technique to one person at a time, which is very time consuming and one of the system's drawbacks. They also do not address cases where the person has a beard, wears a mask, etc.

Chintalapati et al. (2013) [7] develop a system using different algorithms for face detection and classification, and their combinations: PCA + Distance Classifier, LDA + Distance Classifier, PCA + Support Vector Machine (SVM), PCA + Bayes, and LBPH + Distance Classifier. To compare these techniques, they use various parameters: occluded faces, false positive rate, recognition rate (real-time video), distance of the object for correct recognition, recognition rate (static image), and training time.

According to their data, PCA with SVM gives excellent results in each aspect. However, they do not address the recognition of faces with beards, scarves, or tonsured heads. In addition, the system recognizes a face only up to an angle of 30 degrees and fails when the face is turned by more than 30 degrees.

Akay et al. (2020) [8] tested two techniques for face detection: HOG (Histogram of Oriented Gradients) and the Haar Cascade algorithm. HOG is based on contrast in different regions, and Haar Cascade is based on light and dark transitions. They evaluated both methods on the following parameters: true positives, true negatives, false positives, precision, recall, F1 score, and training time.

They also introduce medical mask detection in response to COVID-19. For recognition and classification, they use a CNN and a Support Vector Machine (SVM), respectively. According to their comparison of HOG and Haar Cascade, HOG gives better results on the given parameters and works well in changing lighting conditions.
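The evaluation parameters named in this comparison follow standard definitions, which can be sketched as below. The counts in the example are made up for illustration and are not results from [8].

```python
def precision_recall_f1(tp, fp, fn):
    # Precision: fraction of reported detections that are real faces.
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    # Recall: fraction of real faces that were detected.
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    # F1 score: harmonic mean of precision and recall.
    if precision + recall:
        f1 = 2 * precision * recall / (precision + recall)
    else:
        f1 = 0.0
    return precision, recall, f1

# Hypothetical counts: 8 true positives, 2 false positives, 2 false negatives.
precision, recall, f1 = precision_recall_f1(tp=8, fp=2, fn=2)
```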

Varadharajan et al. (2016) [9] developed a biometric attendance management system using the EigenFace method, which is based on a set of eigenvectors. Each face is represented as an Eigenface, and this face is converted into an eigenvector with an eigenvalue. They use the Jacobi method to calculate these values because its accuracy and reliability are high.

They also evaluate face detection and recognition under different conditions: face with a veil, unveiled face, and beard. The unveiled face gives the highest accuracy: 93% for detection and 87% for recognition.

Rekha et al. (2017) [10] integrated two techniques: Principal Component Analysis (PCA) and an Eigenface database. They address various issues such as image size, image quality, varying light intensity, and face angle. To create the EigenFace database, they took ten images each of 15 people. To compare the training and testing images, they use Euclidean distance in the recognition part. MATLAB is used to create the GUI and train the algorithms.

Y. Sun et al. (2020) [11] test various CNN models with different parameters. They tested these models on CIFAR10 and CIFAR100, on a system with a GPU. The models tested include manually designed, automatic + manually tuned, and fully automatic architectures. According to their results, CNN-GA works well on all parameters and on the GPU.

Ammar et al. (2021) [12] compare three object detection methods: Faster R-CNN, YOLOv3, and YOLOv4. The comparison uses the PSU (Prince Sultan University) dataset and the Stanford dataset. For comparison, they use parameters such as input size, AP, TP, FN, FP, F1 score, FPS, inference time, precision, and recall. For feature extraction, Faster R-CNN, YOLOv3, and YOLOv4 use Inception-v2, Darknet-53, and CSPDarknet-53, respectively. The comparison targets vehicle detection.

S. Gidaris et al. (2015) [13] address object detection using a CNN model, focusing mainly on two factors. The first is the diversification of the discriminative appearance, and the second is the encoding of semantic segmentation-aware features. The first factor is used to focus on the various regions of the object.

Yuhan et al. [14] focus on improving the cross-domain robustness of object detection. They consider two factors: image-level shift and instance-level shift. They use the state-of-the-art Faster R-CNN model and address these two factors to reduce the domain discrepancy. Image-level shift covers image style and illumination; instance-level shift covers the appearance of objects in the image and the size of the image.

D. Varshni et al. (2019) [15] apply object detection in the medical field using pre-trained CNN models. For classification, they use different classifiers such as Support Vector Machine (SVM), k-nearest neighbours, Random Forest, and Naïve Bayes. For feature extraction, they use Xception, VGG-16, VGG-19, ResNet-50, DenseNet-121, and DenseNet-169. They tested all combinations, and according to their results, the combination of DenseNet-169 and SVM is best for X-ray images.

III. Methodology

This is a prototype of a real-time face detection and identification algorithm. The system consists of a camera mounted in the classroom that captures video frames and detects multiple faces in them. The amount of data to be processed is reduced by cropping these faces and converting them to grayscale. The faces are then matched against those in the database, and the outcome is shown along with the attendance.
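The cropping and grayscale step described above can be sketched in plain Python. This is only an illustration under stated assumptions: frames are represented as nested lists of (R, G, B) tuples, the face box coordinates are hypothetical, and the luma weights 0.299, 0.587, and 0.114 are the common ITU-R BT.601 choice rather than values given in this paper.

```python
def crop(image, top, left, height, width):
    # Keep only the rows and columns inside the face bounding box.
    return [row[left:left + width] for row in image[top:top + height]]

def to_gray(image):
    # Collapse three colour channels into one intensity value per pixel,
    # which cuts the data to process to roughly a third.
    return [[round(0.299 * r + 0.587 * g + 0.114 * b) for (r, g, b) in row]
            for row in image]

# Hypothetical 4x4 frame: black border, red 2x2 "face" in the middle.
black, red = (0, 0, 0), (255, 0, 0)
frame = [
    [black, black, black, black],
    [black, red,   red,   black],
    [black, red,   red,   black],
    [black, black, black, black],
]

face = crop(frame, top=1, left=1, height=2, width=2)
gray_face = to_gray(face)
```

A real deployment would typically rely on an image library for both operations; the sketch only shows why the two steps reduce the amount of data passed to the matcher.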

3.1. Face Database Creation of Students:

Each student can register on the system, at which point their information is gathered. This information is stored in the database, and their photos are stored on the computer itself. All attendance records are also stored on the server.

3.2. Face Recognition:

The recognition technique revolves around face recognition, a computer vision technology that analyses a person's facial characteristics to determine their identity. Face recognition has two components: detection and matching. It takes as input a face photograph or video feed. If a human face is present, the system determines the position, size, and placement of each major facial feature. To determine the identity of each face, the identifying attributes of the face are extracted and matched against known faces.

Face recognition is a form of biometric recognition that comprises four steps: face image collection, face image preprocessing, face image feature extraction, and matching and recognition.

3.2.1 Neural network:

In pattern recognition, the neural network is a widely used approach. Its concept is to construct a hierarchical structure out of a huge number of simple computing units. Each basic unit can only perform simple calculations, but a system of units arranged in a complex structure can solve difficult problems. The neural network approach has also been successful in face recognition. In theory, given enough training examples, all faces can be recognized as long as the network is large enough. Commonly used examples include BP (back-propagation) networks, self-organizing networks, and convolutional networks.

While neural networks have some advantages in face recognition, they also have significant drawbacks. Neural networks have a large and complex structure and need a large sample library for training. Training for days or even months is not uncommon, and the resulting speed can be insufficient. As a result, neural networks can be impractical for facial recognition when training data or computing power is limited.

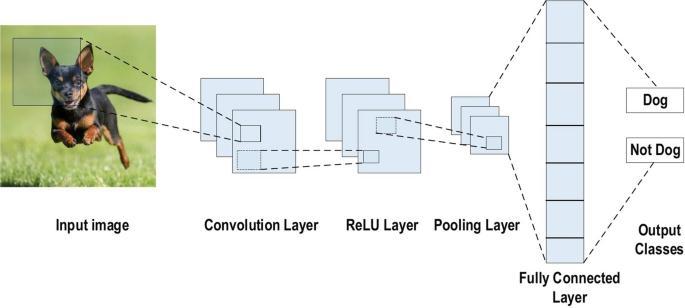

3.2.1.1 CNN:

A CNN is a modified feed-forward ANN that can extract significant properties from input pictures to characterize each one. Manual feature extraction is not required with a CNN: it learns to extract significant features from the input photos during the training process. A CNN keeps vital details while also accounting for spatial and temporal dependencies. It is designed to be robust to rotation, translation, compression, scaling, and other geometric changes.

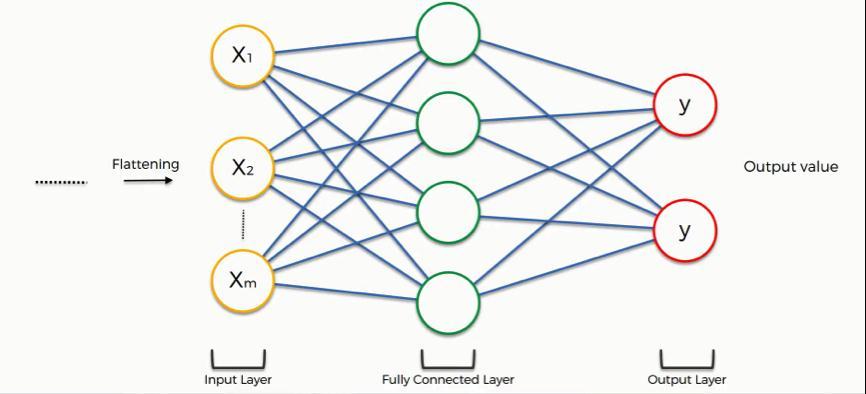

Scaling, shifting, and distortion invariance are three design properties built into the CNN. Back-propagation is used to train the CNN. The CNN uses a set of connected layers to classify the input. For face recognition, we use a CNN architecture with five convolutional layers, a pooling layer, and fully connected layers, as illustrated in the diagram.


Fig. 1 CNN Architecture

In the convolutional layer, a convolution is performed between the input picture and a 3x3 kernel. The output of the convolutional layer is a feature map of reduced size. Because convolution is a linear operation, the feature map is linear in the input, so a non-linearity must be applied to it afterwards. Many types of filters may be used to make a convolutional mask.
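The size reduction produced by a 3x3 kernel can be seen in a minimal sketch. It assumes stride 1 and no padding, and it computes the sliding-window sum used in CNN libraries (technically cross-correlation); the input values and the kernel are illustrative and are not taken from the trained detector.

```python
def conv2d_valid(image, kernel):
    # Slide the kernel over every position where it fits entirely inside
    # the image (stride 1, no padding) and sum the element-wise products.
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + di][j + dj] * kernel[di][dj]
                for di in range(kh) for dj in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]

# Hypothetical 4x4 single-channel input with values 0..15.
image = [[4 * i + j for j in range(4)] for i in range(4)]

# A kernel that copies the centre pixel, so the result is easy to check.
identity_kernel = [[0, 0, 0], [0, 1, 0], [0, 0, 0]]

feature_map = conv2d_valid(image, identity_kernel)
```

A 4x4 input yields a 2x2 feature map here, which is the reduction in size described above.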

Fig. 3 Fully Connected layer CNN

To reduce over-fitting, max pooling limits the number of usable parameters. Mean pooling is a type of sub-sampling in which the average value of each window is used. Flattening converts a two-dimensional feature map into a single one-dimensional vector. This vector is fed into the fully connected layer, which is itself an artificial neural network (ANN). In fully connected layers, each neuron in one layer is connected to every neuron in the following layer. Softmax is used to predict one class out of a set of mutually exclusive classes.
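The flatten, fully connected, and softmax stages described above can be sketched as follows. The weights, biases, and the two-class setup are placeholder values chosen for illustration, not parameters of the trained network.

```python
import math

def flatten(feature_map):
    # Turn a 2-D feature map into a single 1-D vector, row by row.
    return [value for row in feature_map for value in row]

def dense(inputs, weights, biases):
    # Fully connected layer: every output neuron sees every input value.
    return [sum(w * x for w, x in zip(neuron_weights, inputs)) + b
            for neuron_weights, b in zip(weights, biases)]

def softmax(logits):
    # Convert raw scores into probabilities over mutually exclusive classes.
    # Subtracting the maximum keeps the exponentials numerically stable.
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical pooled feature map and a two-class output layer.
pooled = [[1, 2], [3, 4]]
vector = flatten(pooled)
logits = dense(vector, weights=[[1, 0, 0, 0], [0, 0, 0, 1]], biases=[0, 0.5])
probabilities = softmax(logits)
predicted_class = probabilities.index(max(probabilities))
```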

3.3. Comparison/Recognition:

The face with the highest degree of correlation is recognized as the matched face, and the name associated with that face is retrieved from the database using the classifier.
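A minimal sketch of this matching rule is given below. It assumes each enrolled student is represented by a numeric feature vector and uses the Pearson correlation as the similarity score; the registration IDs, the vectors, and the 0.8 acceptance threshold are hypothetical and are not specified in this paper.

```python
import math

def correlation(a, b):
    # Pearson correlation between two equal-length feature vectors.
    mean_a = sum(a) / len(a)
    mean_b = sum(b) / len(b)
    num = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    den = math.sqrt(sum((x - mean_a) ** 2 for x in a)) * \
          math.sqrt(sum((y - mean_b) ** 2 for y in b))
    return num / den if den else 0.0

def best_match(probe, database, threshold=0.8):
    # Return the enrolled ID with the highest correlation, or None when
    # even the best score is below the (assumed) acceptance threshold.
    student_id, score = max(
        ((sid, correlation(probe, features)) for sid, features in database.items()),
        key=lambda pair: pair[1],
    )
    return (student_id, score) if score >= threshold else (None, score)

# Hypothetical enrolled feature vectors keyed by registration ID.
database = {"S001": [1.0, 2.0, 3.0, 4.0], "S002": [4.0, 3.0, 2.0, 1.0]}

matched_id, matched_score = best_match([1.0, 2.0, 3.0, 4.0], database)
unknown_id, unknown_score = best_match([1.0, -1.0, 1.0, -1.0], database)
```

Rejecting low scores is a design choice added here so that an unregistered face is not forced onto the nearest enrolled student.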

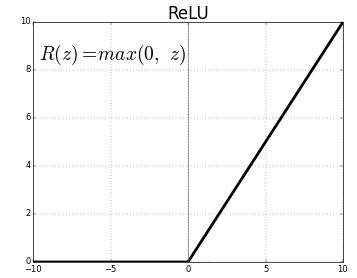

Fig. 2 ReLU

The Rectified Linear Unit (ReLU) is used to increase the non-linearity of a feature map, since a purely linear function makes the system unstable. The pooling layer reduces the image size by grouping neighbouring values while keeping the image's important properties. Several types of pooling exist, including mean, maximum, minimum, and sub-sampling. Min pooling takes the minimum value of each group; its down-sampling process is otherwise the same as max pooling.
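ReLU and the pooling variants mentioned above can be sketched on a small feature map. The 2x2 non-overlapping window and the input values are assumptions made for illustration.

```python
def relu(feature_map):
    # Replace every negative activation with zero; positives pass through.
    return [[max(0.0, value) for value in row] for row in feature_map]

def mean(values):
    return sum(values) / len(values)

def pool2d(feature_map, size=2, reduce=max):
    # Split the map into non-overlapping size x size windows and keep one
    # number per window, chosen by `reduce` (max, min, or mean).
    pooled = []
    for i in range(0, len(feature_map) - size + 1, size):
        row = []
        for j in range(0, len(feature_map[0]) - size + 1, size):
            window = [feature_map[i + di][j + dj]
                      for di in range(size) for dj in range(size)]
            row.append(reduce(window))
        pooled.append(row)
    return pooled

# Hypothetical 4x4 feature map with mixed signs.
feature_map = [
    [-1, 2, 3, -4],
    [5, -6, 7, 8],
    [9, 10, -11, 12],
    [13, 14, 15, -16],
]

activated = relu(feature_map)
max_pooled = pool2d(activated, reduce=max)
min_pooled = pool2d(activated, reduce=min)
mean_pooled = pool2d(activated, reduce=mean)
```

Each pooling variant halves the width and height of the 4x4 map; only the value kept per window differs.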

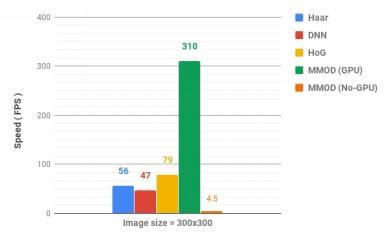

3.3.1 Speed comparison of the methods

We used a 300×300 image to compare the following methods:

We run each method 1000 times on the given image, repeat this for 10 iterations, and record the time taken. Here we run MMOD on both a normal CPU and an NVIDIA GPU.
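A timing harness following this protocol might look like the sketch below. The detector passed in is a hypothetical stand-in that does no real work, so the measured numbers are meaningless; only the structure of 1000 runs repeated over 10 iterations reflects the procedure described above.

```python
import time

def time_detector(detect, image, runs=1000, iterations=10):
    # For each iteration, time `runs` consecutive calls of the detector
    # and record the total elapsed seconds for that iteration.
    per_iteration = []
    for _ in range(iterations):
        start = time.perf_counter()
        for _ in range(runs):
            detect(image)
        per_iteration.append(time.perf_counter() - start)
    return per_iteration

def dummy_detector(image):
    # Hypothetical placeholder for a real face detector; returns no faces.
    return []

# Blank 300x300 single-channel image as nested lists.
image = [[0] * 300 for _ in range(300)]

timings = time_detector(dummy_detector, image)

# Average time per single detection call, in milliseconds.
average_ms = 1000 * sum(timings) / (len(timings) * 1000)
```

Using `time.perf_counter` rather than wall-clock time gives a monotonic, high-resolution timer suited to short measurements.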

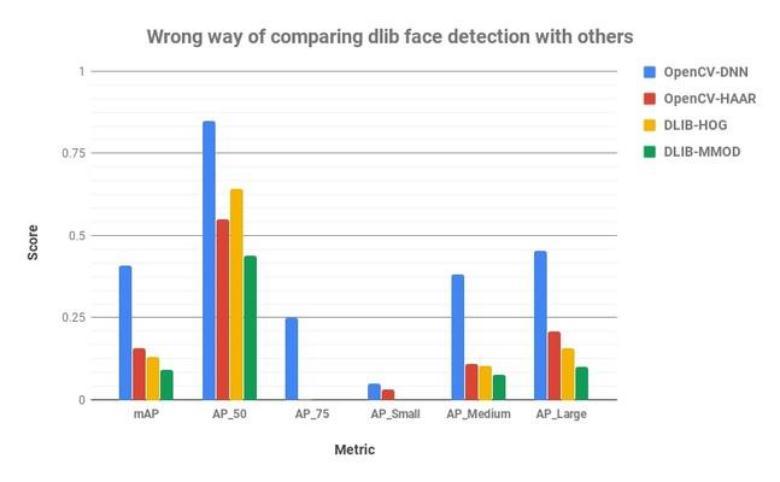

AP_Small=AveragePrecisionforsmallsizefaces(Average ofIoU=50%to95%)

AP_medium = Average Precision for medium size faces ( AverageofIoU=50%to95%)

AP_Large=AveragePrecisionforlargesizefaces(Average ofIoU=50%to95%)

mAP=AverageprecisionacrossdifferentIoU( Averageof IoU=50%to95%)

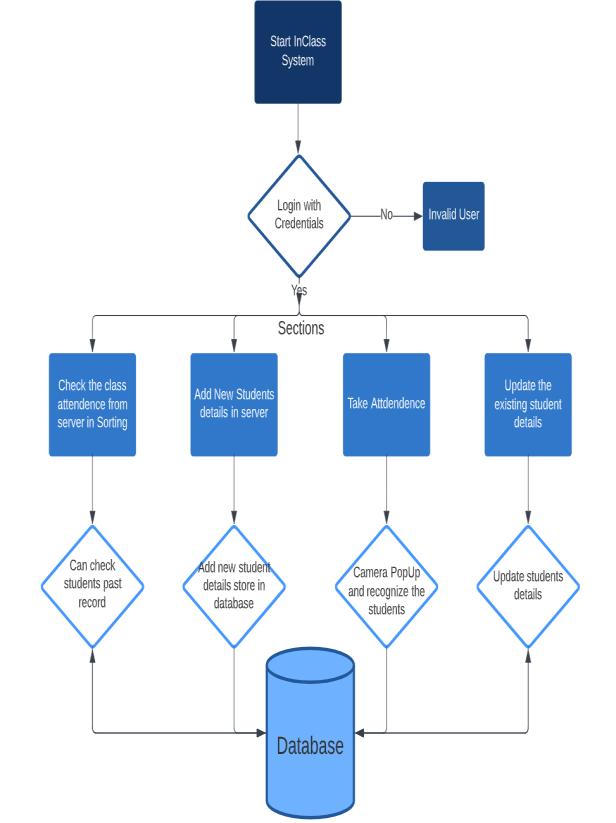

IV. Flow chart

Fig.4SpeedComparison

3.3.2 Accuracy Comparison:

Wecomparethese4modelsontheFDDBdatabase. Given belowarethePrecisionscoresforthe4methods.

Fig.6Flowchart

V. Result

Fig.5AccuracyComparison

Where,

AP_50 = Precision when overlap between Ground Truth andpredictedboundingboxisatleast50%(IoU=50%)

AP_75 = Precision when overlap between Ground Truth andpredictedboundingboxisatleast75%(IoU=75%)

© 2022, IRJET | Impact Factor value: 7.529 | ISO 9001:2008 Certified Journal

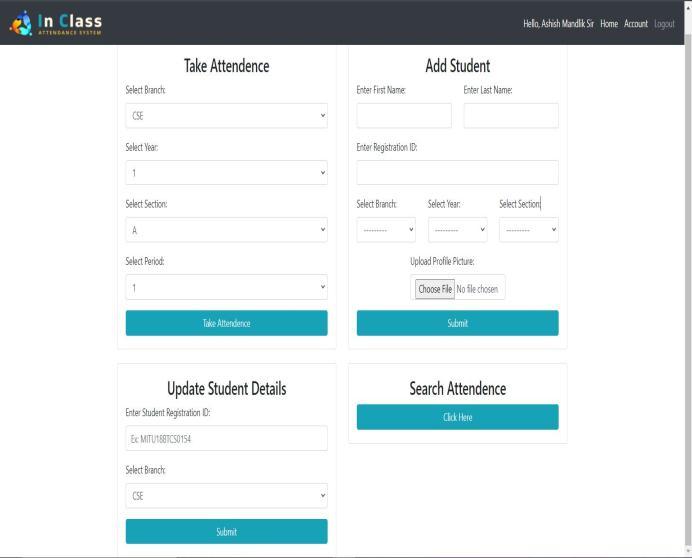

When we log in to the system, the following page appears, with four sections:

1. Take attendance
2. Add new student details
3. Update existing student details
4. Search attendance


Fig. 7 UI

1. Take attendance:

After filling in all the details in the fields, we click the take attendance button.

When we click the take attendance button, the camera opens and starts recognizing students.

2. Add students:

In this section, we add new student details such as the student's name, registration ID, branch, class, year, and profile photo, and store them in the databases.

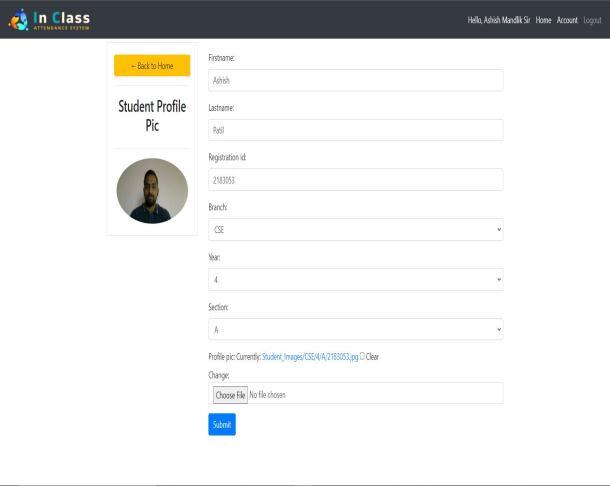

3. Update student details:

This section updates student details such as profile photo, branch, etc.

Fig. 8 Update section

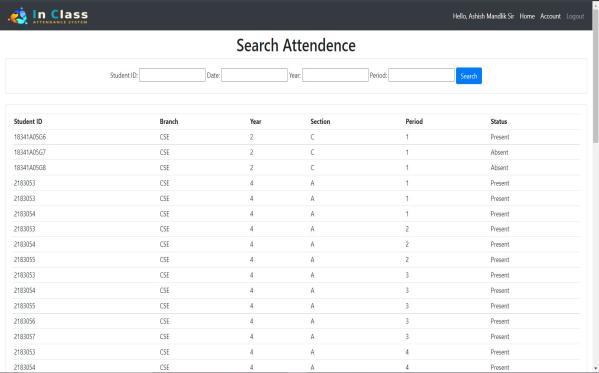

4. Search attendance:

In this section, we search the student's attendance using various filters such as registration ID, name, date, section, and year.

Fig. 9 Search Students Attendance

VI. Challenges

Human faces are unique and rigid objects. Several aspects influence the appearance of the structure of faces. The sources of diversity in facial appearance can be classified into two kinds: intrinsic factors and extrinsic factors. Intrinsic factors relate purely to the physical traits of the face and are independent of the observer. They are further split into two categories: intrapersonal and interpersonal. Intrapersonal factors affect the facial appearance of the same person; examples include age, facial paraphernalia such as glasses, facial hair, cosmetics, and so forth, and facial expression. Interpersonal factors, on the other hand, are responsible for the variance in facial appearance between different people, for example ethnicity and gender. Extrinsic factors change the appearance of the face through the interplay of light with the face and the observer. These elements include resolution, imaging, noise, and focus.

Assessments of state-of-the-art techniques undertaken in recent years, such as FRVT 2002 as well as FAT 2004, have demonstrated that lighting, age, and pose variation are three fundamental issues with current facial recognition.

VII. Conclusion

The primary goal in establishing this system was to eliminate the shortcomings of handling attendance manually. The face recognition attendance system is designed to solve the issues with manual methods. We used face recognition to track student attendance and constructed a web-based application to improve the user experience and the system. The method works effectively across a variety of poses and variations. It may be argued that using face recognition technology in a classroom to automate student attendance works reasonably effectively. It can certainly be enhanced to produce a better outcome, especially by paying attention to the feature extraction or identification process.

We used a CNN face detector that is both highly accurate and very robust, capable of detecting faces across varying viewing angles, lighting conditions, and occlusion. The face detector can also run on an NVIDIA GPU, which makes it very fast, so we required devices with high-end computation power to achieve greater accuracy. This system can be used in various settings, such as validation of employees in offices, security, and criminal detection. Because we use a pre-trained model with predefined features for recognition, our system may fail in some cases. For example, our model is trained on human faces, so it is not suited to car detection.

VIII. Reference

[1] M. Sajid, R. Hussain and M. Usman, "A conceptual model for automated attendance marking system using facial recognition," Ninth International Conference on Digital Information Management (ICDIM 2014), 2014, pp. 7-10, doi: 10.1109/ICDIM.2014.6991407.

[2] A. Raghuwanshi and P. D. Swami, "An automated classroom attendance system using video based face recognition," 2017 2nd IEEE International Conference on Recent Trends in Electronics, Information & Communication Technology (RTEICT), 2017, pp. 719-724, doi: 10.1109/RTEICT.2017.8256691.

[3] E. Winarno, W. Hadikurniawati, I. H. Al Amin and M. Sukur, "Anti-cheating presence system based on 3WPCA-dual vision face recognition," 2017 4th International Conference on Electrical Engineering, Computer Science and Informatics (EECSI), 2017, pp. 1-5, doi: 10.1109/EECSI.2017.8239115.

[4] V. Soniya, R. S. Sri, K. S. Titty, R. Ramakrishnan and S. Sivakumar, "Attendance automation using face recognition biometric authentication," 2017 International Conference on Power and Embedded Drive Control (ICPEDC), 2017, pp. 122-127, doi: 10.1109/ICPEDC.2017.8081072.

[5] N. K. Jayant and S. Borra, "Attendance management system using hybrid face recognition techniques," 2016 Conference on Advances in Signal Processing (CASP), 2016, pp. 412-417, doi: 10.1109/CASP.2016.7746206.

[6] P. Wagh, R. Thakare, J. Chaudhari and S. Patil, "Attendance system based on face recognition using eigen face and PCA algorithms," 2015 International Conference on Green Computing and Internet of Things (ICGCIoT), 2015, pp. 303-308, doi: 10.1109/ICGCIoT.2015.7380478.

[7] S. Chintalapati and M. V. Raghunadh, "Automated attendance management system based on face recognition algorithms," 2013 IEEE International Conference on Computational Intelligence and Computing Research, 2013, pp. 1-5, doi: 10.1109/ICCIC.2013.6724266.

[8] E. O. Akay, K. O. Canbek and Y. Oniz, "Automated Student Attendance System Using Face Recognition," 2020 4th International Symposium on Multidisciplinary Studies and Innovative Technologies (ISMSIT), 2020, pp. 1-5, doi: 10.1109/ISMSIT50672.2020.9255052.

[9] E. Varadharajan, R. Dharani, S. Jeevitha, B. Kavinmathi and S. Hemalatha, "Automatic attendance management system using face detection," 2016 Online International Conference on Green Engineering and Technologies (IC-GET), 2016, pp. 1-3, doi: 10.1109/GET.2016.7916753.

[10] E. Rekha and P. Ramaprasad, "An efficient automated attendance management system based on Eigen Face recognition," 2017 7th International Conference on Cloud Computing, Data Science & Engineering - Confluence, 2017, pp. 605-608, doi: 10.1109/CONFLUENCE.2017.7943223.


[11] Y. Sun, B. Xue, M. Zhang, G. G. Yen and J. Lv, "Automatically Designing CNN Architectures Using the Genetic Algorithm for Image Classification," in IEEE Transactions on Cybernetics, vol. 50, no. 9, pp. 3840-3854, Sept. 2020, doi: 10.1109/TCYB.2020.2983860.

[12] Ammar, A.; Koubaa, A.; Ahmed, M.; Saad, A.; Benjdira, B. Vehicle Detection from Aerial Images Using Deep Learning: A Comparative Study. Electronics 2021, 10, 820. https://doi.org/10.3390/electronics10070820

[13] S. Gidaris and N. Komodakis, "Object Detection via a Multi-region and Semantic Segmentation-Aware CNN Model," 2015 IEEE International Conference on Computer Vision (ICCV), 2015, pp. 1134-1142, doi: 10.1109/ICCV.2015.135.

[14] Domain adaptive faster R-CNN for object detection in the wild. (n.d.). Retrieved June 4, 2022, from https://www.researchgate.net/publication/329750553_Domain_Adaptive_Faster_R-CNN_for_Object_Detection_in_the_Wild

[15] D. Varshni, K. Thakral, L. Agarwal, R. Nijhawan and A. Mittal, "Pneumonia Detection Using CNN based Feature Extraction," 2019 IEEE International Conference on Electrical, Computer and Communication Technologies (ICECCT), 2019, pp. 1-7, doi: 10.1109/ICECCT.2019.8869364.
