International Research Journal of Engineering and Technology (IRJET) e ISSN:2395 0056

Volume: 09 Issue: 06 | June 2022 www.irjet.net p ISSN:2395 0072

1,2,3,4 Student, Department of Information Technology, SNIST, Hyderabad, Telangana, India
5 Professor, Department of Information Technology, SNIST, Hyderabad, Telangana, India

Abstract: Safety and security are two of the most significant concerns of today's age, and automation is becoming increasingly important. The major goal of this work is to demonstrate how computer vision and deep learning can be used to detect moving vehicles, classify them, and count them, as well as to perform lane detection. For vehicle detection, the system locates each vehicle in the frame, classifies it using the YOLO deep learning algorithm, and determines whether the vehicle is moving forward or backward from the camera's perspective. Lane detection uses frame masking, Canny edge detection, and the Hough line transform. These systems can be used in self-driving automobiles, driver assistance in smart cars, traffic management and planning, parking management systems, traffic control, and other applications.

Keywords: Lane, Vehicle, Automation, Computer Vision, Deep Learning

At present, the number of vehicles travelling on roads and highways is growing rapidly, and with it the need to track and control vehicles becomes more crucial. Surveillance cameras can be seen in many public places, and large numbers of recordings are saved and archived over time. Vehicle detection and counting have become increasingly important in highway management. However, because vehicles differ in size, their detection remains a challenge that directly affects the accuracy of vehicle counts. In the proposed approach, the highway area in the image is first extracted and divided into a distant area and a nearby area by a newly proposed segmentation method; this step is essential for improving vehicle detection. Then, the two areas are passed to the YOLOv3 network to determine the type and location of each vehicle. Finally, vehicle trajectories are obtained by the ORB algorithm, which can be used to judge a vehicle's driving direction and to obtain the number of different vehicles. Several highway surveillance videos are used to verify the proposed methods. Test results confirm that the proposed segmentation method can provide high detection accuracy, especially for small vehicles. In addition, the method described in this article is highly effective in judging driving direction and counting vehicles. This work has practical value for the control and management of highway scenes.

At present, object-based vehicle detection is divided into traditional machine vision methods and deep learning methods. Traditional machine vision methods use the motion of a vehicle to separate it from a static background image. These methods can be divided into three categories: background subtraction, continuous video frame difference, and optical flow. With the video frame difference method, the difference is calculated from the pixel values of two or three consecutive video frames, and the moving foreground region is then separated by a threshold.

Vehicle detection and statistics in highway monitoring video are extremely important for highway traffic control and management. With the widespread use of traffic surveillance cameras, a huge library of traffic video footage has been created and gathered for analysis. When a scene is viewed from a high vantage point, a more distant road surface comes into view. At this viewing angle the size of the target vehicle varies dramatically, and the detection accuracy for a small object far from the camera is low. In the face of complex camera scenes, it is important to deal with these challenges effectively and to put the solutions into practice.

Many researchers have published papers describing applications of OpenCV, NumPy, YOLO, and various detection algorithms. The paper by Aharon Bar Hillel, Ronen Lerner, Dan Levi, and Guy Raz, titled "Recent progress in road and lane detection: a survey" [1], surveys research on road and lane perception over the five years preceding its publication and discusses the techniques in detail. The paper "A review of lane detection methods based on deep learning" by Jigang Tang, Songbin Li, and Peng Liu [2] discusses various methods and approaches to lane detection. The paper "Robust Lane Detection and Tracking in Challenging Scenarios" [3] by ZuWhan Kim addresses some of the challenges faced in traditional lane detection, such as lane curvature, worn lane markings, and lane changes. The paper by Abdulhakam AM. Assidiq, Othman O. Khalifa, Md. Rafiqul Islam, and Sheroz Khan, "Real-time lane detection for autonomous vehicles" [4], presents a vision-based lane detection approach that works in real time and is robust to illumination changes and shadows.

The paper by Kuo-Yu Chiu and Sheng-Fuu Lin, "Lane detection using color-based segmentation" [5], proposes a strategy based on color information that can be used in complex situations. The method first selects a region of interest and finds a color threshold using a mathematical method; the threshold is then used to distinguish possible lane boundaries from the road surface. The paper "A novel system for robust lane detection and tracking" [6] by Yifei Wang, Naim Dahnoun, and Alin Achim introduces a lane detection and tracking system based on a new feature extraction approach and a Gaussian Sum Particle Filter (GSPF). In the paper "Lane Detection with Moving Vehicles in the Traffic Scenes" [7] by Hsu-Yung Cheng, Bor-Shenn Jeng, Pei-Ting Tseng, and Kuo-Chin Fan, lane markings are first extracted based on color information. Next, for vehicles that share the color of the lane markings, size, shape, and motion information is used to distinguish the actual lane markings. Finally, the pixels in the lane-marking mask are accumulated to find the lane boundary lines.

The paper "An Improved YOLOv2 for Vehicle Detection" [8] by Jun Sang, Zhongyuan Wu, Pei Guo, Haibo Hu, Hong Xiang, Qian Zhang, and Bin Cai proposes a new detection model, YOLOv2_Vehicle, based on YOLOv2, to address problems of existing vehicle detection methods such as low accuracy and slow speed. "A real-time oriented system for vehicle detection" [9] by Massimo Bertozzi, Alberto Broggi, and Stefano Castelluccio presents a system for vehicle detection in monocular video. It is composed of two engines: PAPRICA, a parallel architecture, and a serial architecture that runs medium-level tasks aimed at detecting the position of vehicles. The paper "Vehicle Detection and Tracking Techniques: A Concise Review" [10] by Raad Ahmed Hadi, Ghazali Sulong, and Loay Edwar George gives an overview of the image processing techniques and analytical tools used to build traffic monitoring systems.

In the paper "Real-time multiple vehicle detection and tracking from a moving vehicle" [11] by Margrit Betke, Esin Haritaoglu, and Larry S. Davis, a real-time vision system is developed that analyzes color video from a forward-facing camera on a moving vehicle. The system uses a combination of color, edge, and motion information to detect and track road boundaries, road signs, and other vehicles on the road. The paper "A survey of vision-based vehicle detection and tracking techniques in ITS" by Yuqiang Liu, Bin Tian, Songhang Chen, Fenghua Zhu, and Kunfeng Wang [12] presents a comprehensive review of video processing techniques for vehicle detection and tracking, together with directions for future research. The paper "Vehicle Detection and Classification: A Review" [13] by V. Keerthi Kiran, Priyadarsan Parida, and Sonali Dash presents a detailed review of vehicle detection and classification methods and discusses vehicle detection in adverse weather conditions. It also discusses the datasets used to evaluate the proposed techniques.

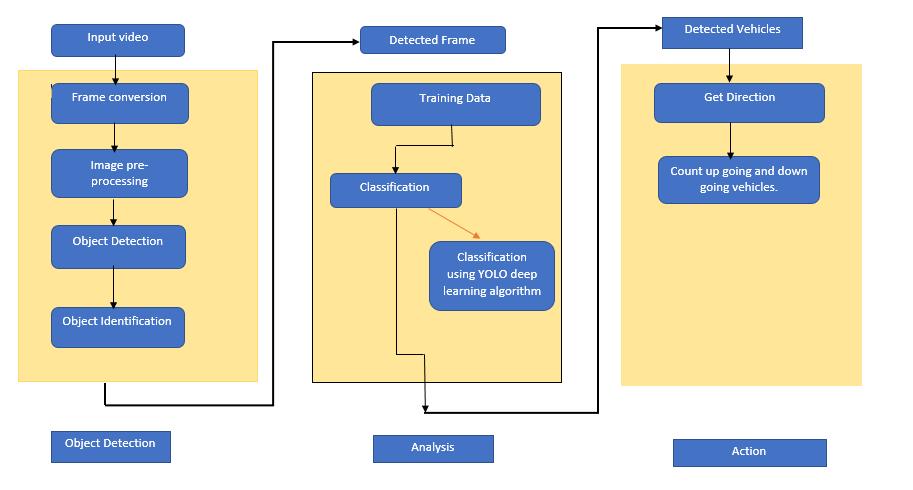

Fig 1: Flowchart for Lane Detection, Vehicle Detection and Classification.

We perform vehicle detection and classification using OpenCV and Python modules. We use the YOLOv3 model along with OpenCV-Python, a library of Python bindings designed to solve computer vision problems. YOLO stands for You Only Look Once and is an algorithm for detecting objects in real time. YOLO works by dividing the image into N grid cells, each covering a region of equal size S x S. Each of the N cells handles the detection and localization of the objects it contains.
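The grid division described above can be illustrated with a minimal sketch. It assumes square cells with a fixed size in pixels; `grid_shape`, `grid_cell`, and the 416 x 416 input with 32-pixel cells are illustrative choices, not part of YOLO or OpenCV. YOLOv3 itself predicts on several grids of different resolution, which this sketch does not model.

```python
import math

def grid_shape(img_w, img_h, cell_size):
    """Number of grid rows and columns when the image is tiled by square cells."""
    return math.ceil(img_h / cell_size), math.ceil(img_w / cell_size)

def grid_cell(cx, cy, cell_size):
    """Row and column of the cell that contains the point (cx, cy)."""
    return int(cy // cell_size), int(cx // cell_size)

# Assumed example: a 416 x 416 input tiled by 32 x 32 pixel cells.
rows, cols = grid_shape(416, 416, 32)
print("number of cells N:", rows * cols)
print("cell for an object centred at (100, 250):", grid_cell(100, 250, 32))
```

The cell returned by `grid_cell` is the one that would be responsible for an object whose center falls at that point.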

In this project, we detect and classify the cars moving on the road and also count the number of cars passing along it. We need to create two programs for this project. The first tracks the cars using OpenCV and keeps track of every detected vehicle on the road; the second is the main detection program. The prerequisites for working with this project are Python 3.0, OpenCV 4.4.0, NumPy, and the YOLOv3 pre-trained model weights and config files.

Vehicle counting proceeds in several steps. In the first step, we import the necessary packages and initialize the network. We import the tracker package, which tracks an object using the Euclidean distance, and we initialize the line positions that will be used to count vehicles. YOLOv3 is trained on the COCO dataset, so we read the file that contains all class names and store the names in a list. The COCO dataset consists of 80 different classes. We configure the network using the cv2.dnn.readNetFromDarknet() function. If you are using a GPU, set the DNN backend to CUDA. We use the randint function from the random module to generate a random color for each class in the dataset. With these colors, we draw the rectangles around the objects.
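The paper does not list the tracker package itself. The following is a minimal sketch of how a tracker based on Euclidean distance can work, assuming each detection is matched to the nearest previously seen center. The class name `EuclideanTracker`, the `max_distance` value, and the `(x, y, w, h)` box format are assumptions made for illustration.

```python
import math

class EuclideanTracker:
    """Assigns persistent IDs to detections by nearest-center matching."""

    def __init__(self, max_distance=25.0):
        self.max_distance = max_distance  # pixels; assumed value
        self.centers = {}                 # object ID -> last known (cx, cy)
        self.next_id = 0

    def update(self, boxes):
        """boxes: list of (x, y, w, h). Returns a list of (x, y, w, h, object_id)."""
        tracked = []
        new_centers = {}
        for (x, y, w, h) in boxes:
            cx, cy = x + w / 2.0, y + h / 2.0
            # Look for the closest known center that is within max_distance
            # and has not already been claimed in this frame.
            best_id, best_dist = None, self.max_distance
            for obj_id, (px, py) in self.centers.items():
                dist = math.hypot(cx - px, cy - py)
                if dist < best_dist and obj_id not in new_centers:
                    best_id, best_dist = obj_id, dist
            if best_id is None:
                # No match: treat the detection as a new object.
                best_id = self.next_id
                self.next_id += 1
            new_centers[best_id] = (cx, cy)
            tracked.append((x, y, w, h, best_id))
        # Objects that were not matched in this frame are forgotten.
        self.centers = new_centers
        return tracked

tracker = EuclideanTracker()
frame1 = tracker.update([(10, 10, 20, 20), (100, 100, 20, 20)])
frame2 = tracker.update([(104, 102, 20, 20), (12, 14, 20, 20)])
print(frame1)
print(frame2)
```

In this sketch an object keeps its ID as long as its center moves less than `max_distance` between consecutive frames; a real program would tune that value to the frame rate and vehicle speed.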

In the second step, we read the frames from the video file. We first open the video through a video capture object; by calling its read method, we read each frame from the capture object. We then resize each frame to 50 percent of its original size. The line function is used to draw the crossing lines on a frame, and the imshow() function shows the output image. In the third step, we preprocess the frames and run the detection. The network's forward method feeds the image as input and returns the output. We then call the postprocess() function to post-process the output. In the fourth step, we perform the post-processing of the output data.

The network's forward pass has 3 outputs. First, we define an empty list in which we store all classes detected in a frame. Using two for loops, we iterate through each output vector and collect the confidence score and the class ID index. If the confidence score is greater than our defined confThreshold, we collect information about the class and store the box coordinates, class ID, and confidence score in three separate lists. YOLO sometimes provides multiple bounding boxes for a single object, so we reduce the number of detection boxes and keep only the best detection box for each object. We use the NMSBoxes() method for this purpose. Using cv2.rectangle(), we draw a bounding box around each detected object.
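The filtering described above can be sketched in plain Python. This is a simple greedy non-maximum suppression written for illustration, similar in spirit to what OpenCV's NMSBoxes() does but not a reproduction of it. The function names and the two threshold values of 0.2 are assumptions.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x, y, w, h)."""
    ax2, ay2 = a[0] + a[2], a[1] + a[3]
    bx2, by2 = b[0] + b[2], b[1] + b[3]
    inter_w = max(0.0, min(ax2, bx2) - max(a[0], b[0]))
    inter_h = max(0.0, min(ay2, by2) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = a[2] * a[3] + b[2] * b[3] - inter
    return inter / union if union > 0 else 0.0

def filter_detections(boxes, scores, conf_threshold=0.2, nms_threshold=0.2):
    """Return the indices of the boxes kept after thresholding and greedy NMS."""
    # Keep only detections whose confidence exceeds the threshold,
    # ordered from the most to the least confident.
    candidates = [i for i, s in enumerate(scores) if s > conf_threshold]
    candidates.sort(key=lambda i: scores[i], reverse=True)
    kept = []
    for i in candidates:
        # Keep a box only if it does not overlap too much with a better box.
        if all(iou(boxes[i], boxes[k]) <= nms_threshold for k in kept):
            kept.append(i)
    return kept

boxes = [(10, 10, 50, 50), (12, 12, 50, 50), (200, 200, 40, 40), (300, 300, 10, 10)]
scores = [0.9, 0.8, 0.7, 0.1]
print(filter_detections(boxes, scores))
```

In the example, the second box overlaps the first almost completely and is suppressed, and the last box falls below the confidence threshold.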

In the fifth step, we track and count all vehicles on the road. After getting all detections, we keep track of the objects using a tracker object. The tracker.update() function keeps track of every detected object and updates the positions of the objects. count_vehicle is a custom function that counts the number of vehicles that crossed the road. We then create two empty lists to store the IDs of objects that enter the crossing lines. up_list and down_list are used to count the vehicle classes on the up route and the down route. The find_center function returns the center point of a rectangular box. In this part, we keep track of each vehicle's position and its corresponding ID. First, we check whether the object is between the up crossing line and the middle crossing line; if so, the ID of the object is temporarily stored in the up list for up-route vehicle counting. For down-route vehicles, we check whether the object has crossed the down line or not. If the object has crossed the down line, the ID of the object is counted as an up route, and we add 1 to the counter for its class. We use the circle() method to draw a circle in the frame. Finally, we draw the counts on the frame to show the vehicle counts in real time.
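The counting logic can be sketched as follows. This is a hedged illustration, not the paper's program: the class `LineCounter`, its attribute names, and the line positions are assumptions. The direction labels follow image coordinates, so a vehicle first seen near the up line that later passes the down line is labelled `down`; the paper's program may name the routes differently.

```python
def find_center(x, y, w, h):
    """Center point of a rectangle given as (x, y, w, h)."""
    return x + w // 2, y + h // 2

class LineCounter:
    """Counts objects per class once they pass from one zone across the far line."""

    def __init__(self, up_line, middle_line, down_line):
        self.up_line = up_line
        self.middle_line = middle_line
        self.down_line = down_line
        self.temp_up = set()    # IDs first seen between the up and middle lines
        self.temp_down = set()  # IDs first seen between the middle and down lines
        self.counts = {"up": {}, "down": {}}

    def _count(self, direction, class_name):
        per_class = self.counts[direction]
        per_class[class_name] = per_class.get(class_name, 0) + 1

    def update(self, box, obj_id, class_name):
        _, cy = find_center(*box)
        if self.up_line < cy < self.middle_line:
            if obj_id not in self.temp_down:
                self.temp_up.add(obj_id)
        elif self.middle_line < cy < self.down_line:
            if obj_id not in self.temp_up:
                self.temp_down.add(obj_id)
        elif cy < self.up_line:
            # Started in the lower zone and has now passed the up line.
            if obj_id in self.temp_down:
                self.temp_down.remove(obj_id)
                self._count("up", class_name)
        elif cy > self.down_line:
            # Started in the upper zone and has now passed the down line.
            if obj_id in self.temp_up:
                self.temp_up.remove(obj_id)
                self._count("down", class_name)

counter = LineCounter(up_line=200, middle_line=250, down_line=300)
for y in (210, 260, 300):           # object 1 moves down the image
    counter.update((0, y, 20, 20), 1, "car")
for y in (270, 220, 180):           # object 2 moves up the image
    counter.update((0, y, 20, 20), 2, "truck")
print(counter.counts)
```

Removing an ID from the temporary set when it is counted prevents the same vehicle from being counted again in later frames.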

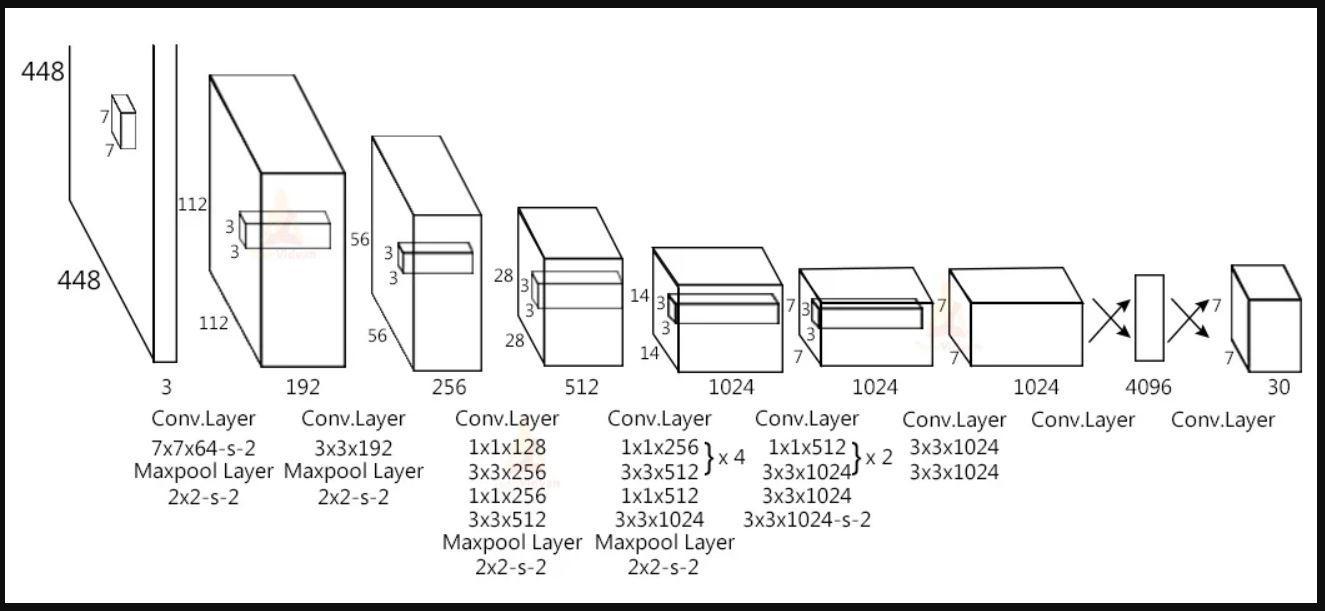

3.1.2. Architecture

Fig 2:YOLOArchitecture

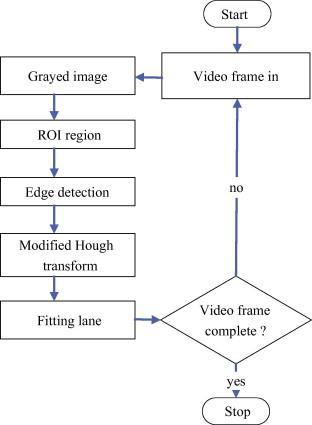

3.2. Lane Detection Methodology

Fig 3:MethodologyforLaneDetection

Canny Edge Detection is a widely used algorithm available in the OpenCV module. As the name suggests, it is a method for detecting edges in a given image. It was developed by John F. Canny in 1986. The algorithm is a multi-stage process; its stages are described below.

Noise Reduction: Edge detection is always susceptible to noise, so the first step in the algorithm is to remove noise using a 5x5 Gaussian filter, which smooths the image.

Intensity Gradient Calculation: After the image is smoothed and the noise is removed, it is filtered with a Sobel kernel in both the horizontal and the vertical direction. This gives the first derivative in the horizontal direction ($G_x$) and the first derivative in the vertical direction ($G_y$). From these we can calculate the edge gradient and direction of each pixel using the formulae given below:

$$G = \sqrt{G_x^2 + G_y^2}$$

$$\theta = \tan^{-1}\left(\frac{G_y}{G_x}\right)$$

The gradient direction is always perpendicular to the edges, and it is rounded to one of four angles (0, 45, 90, 135 degrees) representing the horizontal, vertical, and two diagonal directions.
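As a worked illustration of the gradient step, the sketch below applies the standard 3x3 Sobel kernels to a single 3x3 patch and rounds the direction to one of the four angles 0, 45, 90, or 135 degrees. The function names are illustrative, and `atan2` is used in place of a plain inverse tangent so that the case where the horizontal derivative is zero is handled.

```python
import math

# Standard 3x3 Sobel kernels for the horizontal and vertical derivatives.
SOBEL_X = ((-1, 0, 1), (-2, 0, 2), (-1, 0, 1))
SOBEL_Y = ((-1, -2, -1), (0, 0, 0), (1, 2, 1))

def sobel_at(patch):
    """Horizontal and vertical derivatives at the center of a 3x3 patch."""
    gx = sum(SOBEL_X[r][c] * patch[r][c] for r in range(3) for c in range(3))
    gy = sum(SOBEL_Y[r][c] * patch[r][c] for r in range(3) for c in range(3))
    return gx, gy

def gradient(gx, gy):
    """Gradient magnitude and direction rounded to 0, 45, 90 or 135 degrees."""
    magnitude = math.hypot(gx, gy)
    # Fold the angle into [0, 180) because opposite directions are equivalent.
    angle = math.degrees(math.atan2(gy, gx)) % 180.0
    quantized = (int(round(angle / 45.0)) % 4) * 45
    return magnitude, quantized

# A vertical edge: dark on the left, bright on the right.
patch = [[0, 0, 255],
         [0, 0, 255],
         [0, 0, 255]]
gx, gy = sobel_at(patch)
print(gradient(gx, gy))
```

For the vertical edge in the example the gradient points horizontally, which matches the statement that the gradient direction is perpendicular to the edge.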

Non-Maximum Suppression: After finding the gradient magnitude and direction, we perform a full scan of the image to remove unwanted pixels that do not constitute an edge. Each pixel is checked to see whether it is a local maximum in its neighborhood along the gradient direction; if it is not, it is suppressed. The result is a binary image with "thin edges".

Hysteresis Thresholding: The last step in the process is hysteresis thresholding. To complete Canny edge detection, this step uses two threshold values, a lower threshold and an upper threshold, also referred to as MinVal and MaxVal.

The intensity gradient ($G$) of each pixel is checked against both threshold values in order to distinguish edge pixels from non-edge pixels. For each pixel (p):

a. If $G$ > MaxVal, then it is considered an edge pixel.

b. If $G$ < MinVal, then it is considered a non-edge pixel and is discarded.

c. If $G$ > MinVal and $G$ < MaxVal, then the pixel is classified by its connectivity: if p is connected to a 'sure edge' pixel, then p is accepted; otherwise p is rejected and discarded.
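The three rules above can be sketched as a small flood fill over a grid of gradient magnitudes. This is an illustrative sketch, not OpenCV's implementation: the function name is hypothetical, pixels are assumed to be connected through their 8 neighbors, and a pixel between the thresholds is accepted if it is linked to a sure edge through a chain of such pixels.

```python
from collections import deque

def hysteresis(grad, min_val, max_val):
    """Return a boolean edge map from a 2D list of gradient magnitudes."""
    rows, cols = len(grad), len(grad[0])
    edges = [[False] * cols for _ in range(rows)]
    queue = deque()
    # Rule a: pixels above MaxVal are sure edges and seed the search.
    for r in range(rows):
        for c in range(cols):
            if grad[r][c] > max_val:
                edges[r][c] = True
                queue.append((r, c))
    # Rule c: grow from sure edges into neighbors that are not below MinVal.
    while queue:
        r, c = queue.popleft()
        for dr in (-1, 0, 1):
            for dc in (-1, 0, 1):
                nr, nc = r + dr, c + dc
                if (0 <= nr < rows and 0 <= nc < cols
                        and not edges[nr][nc] and grad[nr][nc] >= min_val):
                    edges[nr][nc] = True
                    queue.append((nr, nc))
    # Rule b: everything never reached stays False and is discarded.
    return edges

grad = [[200, 120, 120, 10, 120]]
print(hysteresis(grad, min_val=100, max_val=150))
```

In the example the last pixel lies between the two thresholds but is cut off from the sure edge by a weak pixel, so it is rejected.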

The Hough line transform is used to detect straight lines in an image or video. Before the transform is applied to an image, it is recommended to run an edge detection pre-processing step.

Lines in image space can be expressed with two variables:

a. In the Cartesian coordinate system: parameters $(m, b)$.

b. In the polar coordinate system: parameters $(r, \theta)$.

We use the polar coordinate system to express lines in the Hough line transform. The equation of a line is then

$$y = \left(-\frac{\cos\theta}{\sin\theta}\right)x + \frac{r}{\sin\theta}$$

Rearranging the above formula gives

$$r = x\cos\theta + y\sin\theta$$

For each point $(x_0, y_0)$, this gives in general

$$r_\theta = x_0\cos\theta + y_0\sin\theta$$

This means that each pair $(r_\theta, \theta)$ represents a line passing through $(x_0, y_0)$.

If, for a given point $(x_0, y_0)$, the family of lines passing through it is plotted, we obtain a sinusoid in the $\theta$-$r$ plane.

We consider only the points such that $r > 0$ and $0 < \theta < 2\pi$.

We continue the process for the other points in the image. If the curves of two points intersect in the $\theta$-$r$ plane, both points belong to the same line.

These intersections in the plane help us detect straight lines: the more curves intersect at one location, the more points lie on the same line. To decide whether a detected object is a line, a threshold can be defined that specifies the minimum number of intersecting curves required. The Hough line transform keeps track of the intersections of curves in the plane for every point in the image; when the number of intersections exceeds the threshold, it declares a line with the parameters $(\theta, r_\theta)$ of the intersection point.
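The voting procedure can be sketched in plain Python. The sketch below assumes a one-degree step for the angle and rounds the distance to the nearest pixel; `hough_lines` and its parameters are illustrative names, and the angle range includes 0 so that vertical lines are also represented. Library implementations differ in resolution and performance.

```python
import math
from collections import Counter

def hough_lines(points, threshold, theta_step_deg=1):
    """Vote in (theta, r) space and return the bins with at least `threshold` votes."""
    votes = Counter()
    for (x, y) in points:
        # Each point votes for every line that could pass through it.
        for theta_deg in range(0, 360, theta_step_deg):
            theta = math.radians(theta_deg)
            r = x * math.cos(theta) + y * math.sin(theta)
            if r > 0:  # keep only positive distances, as in the text
                votes[(theta_deg, int(round(r)))] += 1
    return {line: n for line, n in votes.items() if n >= threshold}

# Ten edge points on the horizontal line y = 5.
points = [(x, 5) for x in range(10)]
lines = hough_lines(points, threshold=10)
print(lines.get((90, 5)))
```

Every point on the line y = 5 votes for the bin at an angle of 90 degrees and a distance of 5, so that bin collects one vote per point. Neighboring bins can also reach the threshold because of rounding, which is why practical implementations usually keep only local maxima.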

First, we read the video from the camera input. The next step is to perform Canny edge detection on every frame of the video. Each frame is first converted to grayscale, then Gaussian smoothing (blur) is applied to the grayscale image, and finally the actual Canny edge detection is performed so that the edges of the whole image are detected. The next step is to identify the region of interest (ROI) according to the requirements of the project. We then apply the Hough transform within the ROI. After that, we find the average slope of the detected line segments, extrapolate the lane lines, and overlay them on the video frames to show the detected lanes in the input video.
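The averaging and extrapolation step can be sketched as follows, assuming the Hough transform returns line segments as `(x1, y1, x2, y2)` tuples in image coordinates with y increasing downward. In that convention the left lane line has a negative slope and the right lane line a positive one. The function name and the choice of averaging slope and intercept separately are assumptions made for illustration.

```python
def average_lane_lines(segments, y_bottom, y_top):
    """Average segments into one left and one right lane line.

    Returns two (x1, y1, x2, y2) tuples spanning y_bottom to y_top,
    or None for a side on which no segment was found.
    """
    left, right = [], []
    for (x1, y1, x2, y2) in segments:
        if x1 == x2 or y1 == y2:
            continue  # skip vertical and horizontal segments
        slope = (y2 - y1) / (x2 - x1)
        intercept = y1 - slope * x1
        # With y pointing down, negative slopes belong to the left lane line.
        (left if slope < 0 else right).append((slope, intercept))

    def extrapolate(lines):
        if not lines:
            return None
        slope = sum(s for s, _ in lines) / len(lines)
        intercept = sum(b for _, b in lines) / len(lines)
        # Solve y = slope * x + intercept for x at the two chosen heights.
        x_bottom = int(round((y_bottom - intercept) / slope))
        x_top = int(round((y_top - intercept) / slope))
        return (x_bottom, y_bottom, x_top, y_top)

    return extrapolate(left), extrapolate(right)

segments = [(100, 400, 200, 300), (150, 350, 250, 250), (400, 300, 500, 400)]
left_line, right_line = average_lane_lines(segments, y_bottom=500, y_top=300)
print(left_line, right_line)
```

The two returned lines can then be drawn on the frame between the bottom of the image and the top of the region of interest.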

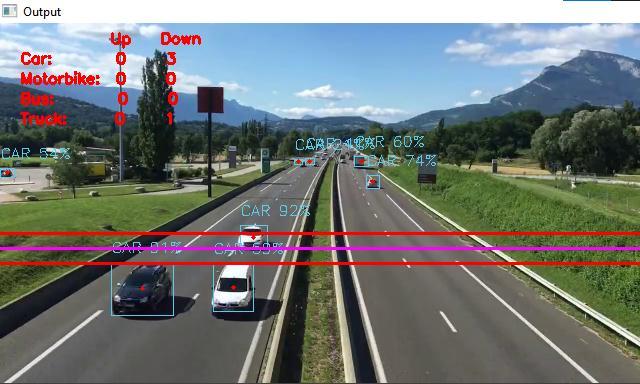

As shown in Fig. 4, the detected vehicles are marked by rectangular bounding boxes. Each detected vehicle is classified, and the percentage of accuracy is displayed on top of its bounding box. The two red lines represent the up and down lines; a vehicle crossing one of these lines is counted as an up-going or down-going vehicle. The pink line is the threshold line, which is used to distinguish up-going from down-going vehicles.

The detected line is represented in reddish-orange color in Fig. 5.

Fig 5: Lane detection output

[1] Aharon Bar Hillel, Ronen Lerner, Dan Levi, and Guy Raz, "Recent progress in road and lane detection: a survey," Science Direct.

[2] Jigang Tang, Songbin Li, and Peng Liu, "A review of lane detection methods based on deep learning," Elsevier.

[3] ZuWhan Kim, "Robust Lane Detection and Tracking in Challenging Scenarios," IEEE.

[4] Abdulhakam AM. Assidiq, Othman O. Khalifa, Md. Rafiqul Islam, and Sheroz Khan, "Real-time lane detection for autonomous vehicles," IEEE.

[5] Kuo-Yu Chiu and Sheng-Fuu Lin, "Lane detection using color-based segmentation," IEEE.

[6] Yifei Wang, Naim Dahnoun, and Alin Achim, "A novel system for robust lane detection and tracking," Elsevier.

[7] Hsu-Yung Cheng, Bor-Shenn Jeng, Pei-Ting Tseng, and Kuo-Chin Fan, "Lane Detection with Moving Vehicles in the Traffic Scenes," IEEE.

[8] Jun Sang, Zhongyuan Wu, Pei Guo, Haibo Hu, Hong Xiang, Qian Zhang, and Bin Cai, "An Improved YOLOv2 for Vehicle Detection," MDPI.

[9] Massimo Bertozzi, Alberto Broggi, and Stefano Castelluccio, "A real-time oriented system for vehicle detection," Science Direct.

[10] Raad Ahmed Hadi, Ghazali Sulong, and Loay Edwar George, "Vehicle Detection and Tracking Techniques: A Concise Review," arXiv.

[11] Margrit Betke, Esin Haritaoglu, and Larry S. Davis, "Real-time multiple vehicle detection and tracking from a moving vehicle," Springer.

[12] Yuqiang Liu, Bin Tian, Songhang Chen, Fenghua Zhu, and Kunfeng Wang, "A survey of vision-based vehicle detection and tracking techniques in ITS," IEEE.

[13] V. Keerthi Kiran, Priyadarsan Parida, and Sonali Dash, "Vehicle Detection and Classification: A Review," Springer.