International Research Journal of Engineering and Technology (IRJET) e-ISSN:2395-0056

Volume: 09 Issue: 06 | June 2022 www.irjet.net p-ISSN:2395-0072

1 , Dr. D. Jagadeesan2, Mr. V Maruthi Prasad3

1Student, IV CSE, Dept. of CSE, Madanapalle Institute of Technology & Science, Madanapalle, AP, India

2Asst. Professor, Dept. of CSE, Madanapalle Institute of Technology & Science, Madanapalle, AP, India

3Asst. Professor, Dept. of CST, Madanapalle Institute of Technology & Science, Madanapalle, AP, India

The rapid growth of commercial and recreational drones, and the associated risk to airspace safety, has prompted this line of research in recent years. This paper presents a comprehensive review of the current literature on drone detection and classification using machine learning with different modalities. Drone technology has been used in practically every aspect of daily life, including food delivery, traffic control, emergency response, and surveillance. In this study, drone detection and classification are carried out using machine learning and image processing approaches. The major focus of the research is on conducting surveillance in high-risk areas and in locations where manned surveillance is impossible. In such situations, an unmanned aerial vehicle enters the scene and solves the problem. Radar, optical, acoustic, and radio-frequency sensor devices are among the technologies addressed. The overall conclusion of this study is that machine learning based drone classification appears promising, with a number of effective individual contributions. However, the majority of the research is experimental, so it is difficult to compare the findings of different articles. For the challenge of drone detection and classification, there is still a lack of a common requirement-driven specification, as well as of reference datasets to aid in the evaluation of different solutions.

Keywords: Drone Detection, Machine Learning, Airspace safety, Radar, Acoustic, Airspace vehicle, surveillance, Motion Detection, Image Processing

Every day we come across many images and videos captured by drones. Nowadays drones are used in many ways, such as filming events and delivering food with automated drones, and many more uses are emerging. Why, then, can a drone not be used for security and surveillance? This is where the problem arises.

A good drone detection system must be able to cover a broad area while still distinguishing drones from other objects. One approach is to use a combination of wide and narrow field-of-view cameras. Another strategy is to use an array of high-resolution cameras. In this thesis there is no way to have a fisheye (wide-angle) infrared sensor or an array of such sensors, because only one infrared sensor with a fixed field of view is available. To obtain the required volume coverage, the IR sensor is installed on a moving platform. At different points in time, this platform can either be assigned objects or search on its own.

This is an era of advancement and technology. Along with this technology and advancement, crime and criminal attacks have also increased. To reduce them and protect citizens, proper security and surveillance are required. Several organizations are trying to develop advanced machines and techniques. One of these is the aerial vehicle technique.

In this technique, drones are used for the prevention of crime and the protection of the citizens of a particular place. The method is used to observe and conduct surveillance at the border of a selected place.

This project is titled "Drone detection and classification" because in this study a drone is used to detect another drone, and the algorithm running on this drone is used to find and classify the object passing by it.

The purpose of the paper is to detect drones and to distinguish them from other moving objects. Our machine learning model is based on the motion of the objects and on mapping the objects against the dataset, and it provides the final output. The machine learning algorithm is developed to map the moving object and create an accurate representation of it. The most important objective of the project is the security and surveillance of border areas.

Detecting the motion of a drone and capturing its movement is possible, and it can be done with a machine learning algorithm. Since the system works on a drone, the main limitations of the project are the weather conditions and the battery backup.

Unmanned Aerial Vehicles (UAVs), commonly referred to as drones, raise a variety of concerns for airspace safety and may injure people and damage property, despite gaining widespread attention in a variety of civic and commercial uses. While such threats can range from pilot inexperience to premeditated attacks, in terms of the assailants' aims and intelligence, they all have the potential to cause significant disruption. Drone sightings are becoming more common: in the last few months of 2019, for example, many airports in the United States, the United Kingdom, Ireland, and the United Arab Emirates faced severe operational disruptions as a result of drone sightings [1]. A sensor or sensor system is required to detect flying drones automatically. For the drone detection problem, fusing information from several sensors is sensible, i.e., using several sensors in conjunction to obtain more accurate findings than those obtained from single sensors while compensating for their individual shortcomings [7].

The proposed approach and the automated drone detection system are described, first at a high level and then in further depth, both in terms of the hardware components and how they are connected to the main computing resource, and in terms of the software that is used [3]. Because MATLAB is the main development environment, all programs are called scripts, and a thread is referred to as a worker in the thesis material [4][5]. Using an array of high-resolution cameras, as demonstrated in [7], is another option. There is no way to have a wide-angle infrared sensor, or an array of such sensors, because this thesis only has one infrared sensor with a fixed field of view. To achieve the requisite volume coverage, the IR sensor is installed on a moving platform. When the sensors on the platform are not busy identifying and classifying objects, the platform can be assigned objects or search on its own [6].

Low-cost frequency-modulated continuous-wave radars are, in contrast to optical detection, resistant to fog, cloud, and dust, and are less susceptible to noise than acoustic detection [6]. Acoustic detection operates in low-visibility conditions since it does not require a line of sight (LOS), and depending on the microphone arrays used, its cost is low [5]. Optical detection is low cost due to the use of cameras and optical sensors, as well as the reuse of existing surveillance cameras. RF sensors are low cost, offer a long detection range, and require no LOS [7].

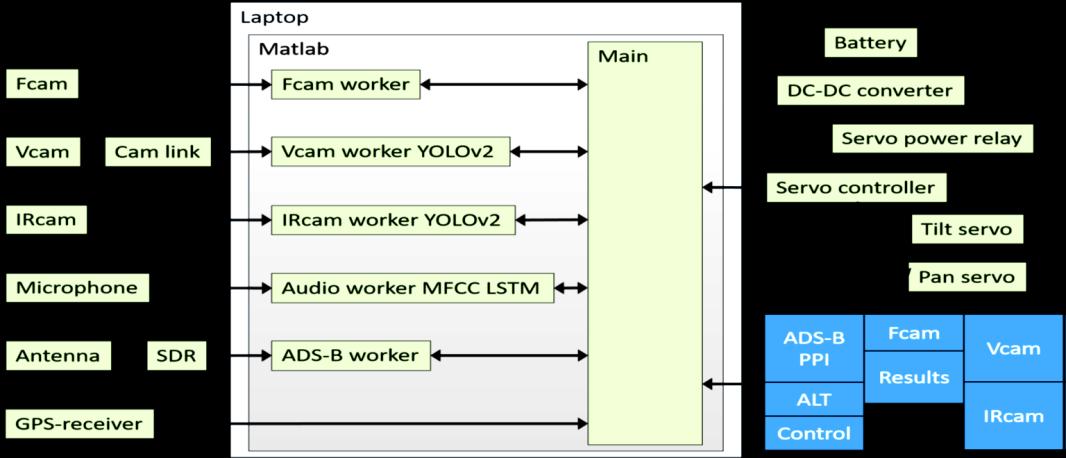

The system uses a thermal infrared camera and a video camera as its principal electro-optical sensors for detection and classification. The system also makes use of ADS-B data in order to keep track of cooperative aircraft in the airspace. When a drone or a helicopter enters the system's area of operation, audio data is used to determine its presence from its distinctive sound. The system's computations are performed on an ordinary laptop, which is also where the user sees the detection results. The following is the system architecture, which includes the main layout of the graphical user interface (GUI):

The IRcam is mounted beside the Vcam on a pan/tilt platform. Because their field of view is limited, the platform is steered using data from a fisheye lens camera (Fcam) that covers 180° horizontally and 90° vertically. If the Fcam detects and tracks nothing, the platform can be programmed to scan the sky around the system in two distinct search patterns. The key components of the system are depicted in Figure 3.2.

The key components of the drone detection system. The microphone is on the lower left, and the fisheye lens camera is above it. The IR and video cameras are mounted on the central pan and tilt platform. A holder for the servo controller and power relay boards is located behind the pan servo, inside the metal mounting channel. Apart from the laptop, all hardware components are mounted on a standard surveyor's tripod to provide a stable foundation for the system. This also makes it easy to deploy the system outdoors, as shown in the figure. Given the nature of the technology, the system has to be easily portable to and from any location. As a result, a transport solution was designed in which the system can be disassembled into a few major components and placed in a transport box.

As in the design of any embedded system, the power consumption of the system components has been taken into account in order to avoid overloading the laptop's built-in ports and the USB hub. For this purpose, a Ruideng AT34 tester was used to measure the current drawn by all components.

A FLIR Breach PTQ-136 thermal infrared camera with a Boson detector of 320x256 pixels was employed. The field of view of the IR camera is 24° horizontally and 19° vertically. Figure 3.4 shows an image from the IRcam video stream.

The Boson sensor of the FLIR Breach has a better resolution than, for example, the FLIR Lepton sensor, which has a resolution of 80x60 pixels. With that sensor, researchers were able to distinguish three different drone types up to a distance of at least [...] meters. However, detection in that investigation was performed manually by a human watching the live video stream, rather than by a trained, embedded, and intelligent system as in this thesis.

The IRcam can output two types of image: a raw 320x256 pixel image (Y16, 16-bit grayscale) and an interpolated 640x512 pixel image in I420 format (12 bits per pixel). The colour palette of the interpolated image format can be modified, and a variety of other image processing options are available. The raw format is used in the system to avoid having extra text information overlaid on the interpolated image. Because the image processing operations are implemented in MATLAB rather than Python, this choice also gives more control over them.

The output of the IRcam is sent to the laptop through a USB-C port at 60 frames per second (FPS). The IRcam is also powered via the USB connection.

A Sony HDR-CX405 video camera was used to capture scenes in the visible spectrum. Because the Vcam's output is an HDMI signal, an Elgato Cam Link 4K frame grabber is used to supply the laptop with a 1280x720 video stream in YUY2 format (16 bits per pixel) at 50 frames per second. Because the Vcam's zoom lens can be adjusted, its field of view can be made broader or narrower than the IRcam's. The Vcam is set to have about the same field of view as the IRcam.
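The stream formats quoted above imply uncompressed data rates that the laptop's ports must sustain. The following Python sketch (not part of the MATLAB system; the function names are illustrative) works through that arithmetic from the resolutions, bit depths, and frame rates given in the text. The frame rate of the interpolated I420 stream is not stated, so only its frame size is computed.

```python
def frame_bytes(width, height, bits_per_pixel):
    """Size of one uncompressed frame in bytes."""
    return width * height * bits_per_pixel // 8


def stream_rate(width, height, bits_per_pixel, fps):
    """Uncompressed data rate in bytes per second."""
    return frame_bytes(width, height, bits_per_pixel) * fps


# Figures taken from the text.
ircam_raw_rate = stream_rate(320, 256, 16, 60)     # IRcam raw Y16 stream
vcam_rate = stream_rate(1280, 720, 16, 50)         # Vcam YUY2 via frame grabber
ircam_interp_frame = frame_bytes(640, 512, 12)     # one interpolated I420 frame

print(f"IRcam raw: {ircam_raw_rate / 1e6:.2f} MB/s")
print(f"Vcam:      {vcam_rate / 1e6:.2f} MB/s")
print(f"IRcam interpolated frame: {ircam_interp_frame} bytes")
```

Under these figures the visible-light stream is roughly nine times heavier than the raw infrared stream, which is one reason to keep an eye on port and hub load.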

To monitor a larger area of the system's surroundings, an ELP 8-megapixel 180° fisheye lens camera is used. It delivers a 1024x768 MJPG video stream at 30 frames per second over a USB port.

A small cardioid directional microphone, the Boya BY-MM1, is also connected to the laptop to identify the sound of the drone.

As indicated in the system architecture specification, a RADAR module is not included in the final design. However, because one was available, it was decided that it should be described in this section. The RADAR module's performance is described, and its exclusion is justified by its short practical detection range.

The computing component of the system is an HP laptop. The GPU is an Nvidia MX150, and the CPU is an Intel i7-9850H. The laptop is connected to the sensors and the servo controller via its built-in ports and an additional USB port.

The software used in the thesis has two parts. First, there is the software that runs in the system when it is deployed. In addition, a set of support tools is available for tasks such as creating datasets and training the setup.

When the drone detector is running, the software consists of a main script and five "workers", which are parallel threads made possible by the MATLAB parallel computing tools. Messages are passed between the main program and the workers using data queues. Using a background-subtraction detector and a multi-object Kalman filter tracker, the Fcam worker establishes the position of the best-tracked target and communicates its azimuth and elevation angles to the main program. The main program can then use the servo controller to drive the pan/tilt platform servos, allowing the IR and video cameras to analyze the moving object further.

The audio worker sends information about the classes and confidence to the main script. Two track displays are used: one is made up of history tracks, while the other is made up of current tracks, so that the display clearly depicts the variations in altitude of the targets.

All of the above workers also confirm the main script's command to run the detector/classifier or to be idle. The number of frames per second currently being processed is also passed to the main script. An analysis of the output categories or labels that the main script can receive from the workers shows that not all sensors can output all of the target categories used in this thesis. In addition, the audio worker has a background category, and the ADS-B worker can output a "No Data" class if the vehicle category field of the received message is missing.

Table 3.1: Observations of different cameras and modules

The main script is the brain of the software; it not only starts the five workers (threads) and establishes communication queues for them, but also sends commands to the servo controller and gets data from the GPS receiver. Following the start-up procedure, the script enters a loop that runs until the user closes the application through the graphical user interface (GUI).
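As a rough illustration of how a main script and a worker can end up with a queue in each direction, the following Python sketch uses `threading` and `queue` from the standard library. It is an analogue under stated assumptions, not the MATLAB implementation: the function names, message tuples, and the placeholder detection result are all hypothetical. The main side creates a queue and hands it to the worker, and the worker answers by sending back a second queue on which it will listen for commands.

```python
import queue
import threading


def ircam_worker(to_main):
    """Hypothetical worker: creates its own command queue and hands it back."""
    commands = queue.Queue()
    to_main.put(("queue", commands))       # give main a way to reach the worker
    while True:
        cmd = commands.get()               # block until main sends a directive
        if cmd == "stop":
            to_main.put(("status", "stopped"))
            break
        if cmd == "run":
            # Placeholder result; a real worker would run its detector here.
            to_main.put(("detection", {"label": "drone", "confidence": 0.9}))


def start_worker():
    """Start the worker thread and complete the queue handshake."""
    to_main = queue.Queue()
    thread = threading.Thread(target=ircam_worker, args=(to_main,), daemon=True)
    thread.start()
    kind, to_worker = to_main.get(timeout=5)   # first message carries the queue
    if kind != "queue":
        raise RuntimeError("worker did not send its command queue first")
    return thread, to_main, to_worker


thread, from_worker, to_worker = start_worker()
to_worker.put("run")
detection = from_worker.get(timeout=5)
to_worker.put("stop")
status = from_worker.get(timeout=5)
thread.join(timeout=5)
print(detection, status)
```

After the handshake, the same pair of queues carries both detection results and run/idle directives for the lifetime of the worker.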

The most common actions performed on each iteration of the loop are refreshing the user interface and reading user inputs. The main script also communicates with the servo controller and the workers at regular intervals. Servo positions and queues are polled ten times per second. The system results, such as the output labels and confidence, are also calculated here using the most recent worker outputs. Furthermore, new commands are sent to the servo controller for execution at a rate of 5 Hz. The ADS-B plot is updated every two seconds. Having different intervals for different tasks makes the script more efficient: for example, because an aeroplane transmits its position every second via ADS-B, updating the ADS-B plots too frequently would be a waste of processing resources.
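The idea of running different tasks at different intervals inside one loop can be sketched as follows. This Python example is illustrative only: the `Periodic` class and task names are hypothetical, and a simulated millisecond clock replaces real time so that the result is deterministic. The periods (100 ms, 200 ms, 2000 ms) correspond to the 10 Hz polling, 5 Hz servo commands, and two-second ADS-B plot update described above.

```python
class Periodic:
    """Runs a callback whenever at least `period_ms` has passed since the last run."""

    def __init__(self, period_ms, callback):
        self.period_ms = period_ms
        self.callback = callback
        self.next_due_ms = 0

    def poll(self, now_ms):
        if now_ms >= self.next_due_ms:
            self.callback()
            self.next_due_ms = now_ms + self.period_ms


counts = {"poll_queues": 0, "servo_command": 0, "adsb_plot": 0}


def counter(name):
    """Return a callback that counts how often the named task ran."""
    def _run():
        counts[name] += 1
    return _run


tasks = [
    Periodic(100, counter("poll_queues")),     # 10 times per second
    Periodic(200, counter("servo_command")),   # 5 Hz
    Periodic(2000, counter("adsb_plot")),      # every two seconds
]

# Simulated main loop: one iteration every 10 ms for 10 seconds.
for step in range(1000):
    now_ms = step * 10
    for task in tasks:
        task.poll(now_ms)

print(counts)
```

Each loop iteration stays cheap because most tasks are skipped most of the time; only the user-interface work would run on every pass.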

When it starts up for the first time, the IRcam worker attaches to a queue that it gets from the main script through its function definition. Because the main script can only set up a queue in the direction from the worker, the IRcam worker uses this queue to send the main script a second queue for the opposite direction. This establishes bidirectional communication, allowing the worker to provide information about detections as well as receive directives, such as when the detector should run and when it should be idle. When the IRcam worker function is started without a queue

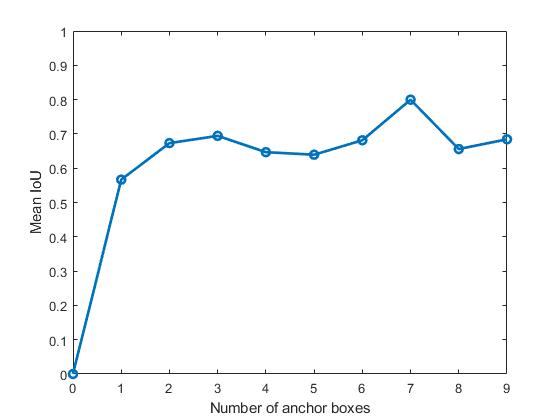

The trade-off to consider when deciding on the number of anchor boxes is that a high IoU ensures that the anchor boxes overlap well with the bounding boxes of the training data, but using more anchor boxes increases the computational cost and the risk of overfitting. Three anchor boxes are chosen after reviewing the plot, and their sizes are taken from the output of the estimateAnchorBoxes function, with a width scaling ratio of 0.8 applied to match the shrinkage of the input layer from 320 to 256 pixels.
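To make the trade-off concrete, the sketch below computes the mean IoU between a set of training boxes and candidate anchor sets, and then applies the 0.8 width scaling (256/320). It is a Python illustration, not the MATLAB `estimateAnchorBoxes` function: the box sizes and anchor values are invented for the example, and boxes are compared by width and height only, aligned at a common corner, which is the usual convention for anchor estimation.

```python
def iou_wh(box, anchor):
    """IoU of two boxes given as (width, height), aligned at a common corner."""
    intersection = min(box[0], anchor[0]) * min(box[1], anchor[1])
    union = box[0] * box[1] + anchor[0] * anchor[1] - intersection
    return intersection / union


def mean_iou(boxes, anchors):
    """Average, over all boxes, of the IoU with the best-matching anchor."""
    return sum(max(iou_wh(b, a) for a in anchors) for b in boxes) / len(boxes)


def scale_widths(anchors, ratio):
    """Scale only the anchor widths, leaving heights unchanged."""
    return [(w * ratio, h) for (w, h) in anchors]


# Hypothetical (width, height) training boxes in pixels.
boxes = [(10, 8), (12, 10), (40, 30), (44, 28), (90, 60), (100, 70)]
one_anchor = [(40, 30)]
three_anchors = [(11, 9), (42, 29), (95, 65)]

print("mean IoU, 1 anchor: ", round(mean_iou(boxes, one_anchor), 3))
print("mean IoU, 3 anchors:", round(mean_iou(boxes, three_anchors), 3))

width_ratio = 256 / 320                      # input layer width shrinkage
scaled_anchors = scale_widths(three_anchors, width_ratio)
print(scaled_anchors)
```

More anchors raise the mean IoU on this toy data, which is the benefit that has to be weighed against the extra computation and overfitting risk.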

After the evaluation data has been selected and set aside, the detector is trained using data from the available dataset. The training set of the IRcam YOLOv2 detector contains 120 video clips, each lasting a little more than 10 seconds and evenly distributed over all class and distance bins, totaling 37428 annotated images. The detector is trained for five epochs with an initial learning rate of 0.001, using the stochastic gradient descent with momentum (SGDM) optimizer.

Because the training data is supplied as a table rather than in the datastore format, the trainYOLOv2ObjectDetector function performs preprocessing augmentation automatically. Reflection, scaling, and changes in brightness, color, saturation, and contrast are among the augmentations used.

With a few exceptions, the Vcam worker looks a lot like the IRcam worker. The input image from the Vcam is 1280x720 pixels, and it is resized to 640x512 pixels directly after the snapshot is acquired. This is the only image processing step applied to the visible video image. The input layer of the YOLOv2 detector is 416x416x3. Compared with the detector of the IRcam worker, the larger size of this detector is immediately evident in its FPS performance. The training time is also longer than in the IR case because of the larger image size needed to train the detector. On a system with an Nvidia GeForce RTX 2070 8GB GPU, one epoch takes 2 h 25 min. The training set contains 37519 images, and the detector, like that of the IRcam worker, is trained for five epochs.

The fisheye lens camera was originally configured to look upwards, but this caused significant image distortion in the area just above the horizon, which is where the interesting targets appear.

After rotating the camera forward, the motion detector is less affected by image distortion, and since half of the field of view would otherwise go unused, this is a feasible approach. The image was initially cropped to remove the area hidden by the pan/tilt platform. Now, however, the part of the image below the horizon in front of the system is no longer evaluated either.

The Fcam worker, like the IRcam and Vcam workers, establishes queues and connects to the camera. The input image from the camera is 1024x768 pixels, and directly after the snapshot is acquired the lower part of the image is cropped away, leaving 1024x384 pixels.

The image is subsequently analyzed using the ForegroundDetector function of the Computer Vision Toolbox. This uses a background subtraction technique based on Gaussian Mixture Models (GMM). The function outputs a binary mask in which moving objects are ones and the background is zeros. The mask is then processed with the imopen function, which performs morphological erosion followed by dilation. The structuring element of the imopen function is set to 3x3 to ensure that only very small objects are removed.
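The effect of the opening step on a binary mask can be shown with a small pure-Python sketch. It does not reproduce the GMM background subtraction and is not the MATLAB implementation; it only illustrates erosion followed by dilation with a 3x3 square structuring element. Treating pixels outside the image as background is an assumption of this sketch, and the example mask is invented.

```python
NEIGHBOURHOOD = [(dy, dx) for dy in (-1, 0, 1) for dx in (-1, 0, 1)]


def _value(mask, y, x):
    """Pixel value, with everything outside the image treated as background."""
    if 0 <= y < len(mask) and 0 <= x < len(mask[0]):
        return mask[y][x]
    return 0


def erode(mask):
    """3x3 erosion: a pixel survives only if its whole neighbourhood is set."""
    return [[int(all(_value(mask, y + dy, x + dx) for dy, dx in NEIGHBOURHOOD))
             for x in range(len(mask[0]))] for y in range(len(mask))]


def dilate(mask):
    """3x3 dilation: a pixel is set if any pixel in its neighbourhood is set."""
    return [[int(any(_value(mask, y + dy, x + dx) for dy, dx in NEIGHBOURHOOD))
             for x in range(len(mask[0]))] for y in range(len(mask))]


def opening(mask):
    """Morphological opening: erosion followed by dilation."""
    return dilate(erode(mask))


# A 3x3 moving blob plus one isolated noise pixel at row 5, column 5.
mask = [
    [0, 0, 0, 0, 0, 0, 0],
    [0, 1, 1, 1, 0, 0, 0],
    [0, 1, 1, 1, 0, 0, 0],
    [0, 1, 1, 1, 0, 0, 0],
    [0, 0, 0, 0, 0, 0, 0],
    [0, 0, 0, 0, 0, 1, 0],
    [0, 0, 0, 0, 0, 0, 0],
]

opened = opening(mask)
for row in opened:
    print("".join(str(v) for v in row))
```

The isolated pixel disappears while the 3x3 blob is restored to its original shape, which matches the stated goal of removing only very small objects.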

The output of the Fcam worker is the FPS status, as well as the elevation and azimuth angles of the best track, if one exists at the time. Of all the workers, the Fcam worker has the most tuning parameters. The image processing operations, the foreground detector, the blob analysis, and the parameters of the multi-object Kalman filter tracker must all be chosen and tuned.

The audio worker captures acoustic data using the attached directional microphone and stores it in a one-second buffer (44100 samples) that is updated 20 times per second. The mfcc function from the Audio Toolbox is used to extract features from the sound in the buffer. Based on empirical trials, the parameter LogEnergy is set to Ignore, and the extracted features are then passed to the classifier.
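A one-second buffer refreshed 20 times per second means that each update brings in 44100 / 20 = 2205 new samples and discards the oldest 2205. The Python sketch below illustrates that rolling behaviour with `collections.deque`; the class name and the dummy sample values are hypothetical, and feature extraction and classification are left out.

```python
from collections import deque

SAMPLE_RATE = 44100                         # samples in the one-second buffer
UPDATES_PER_SECOND = 20
HOP = SAMPLE_RATE // UPDATES_PER_SECOND     # new samples per update


class RollingBuffer:
    """Fixed-length buffer that always holds the most recent second of audio."""

    def __init__(self, size=SAMPLE_RATE):
        # A deque with maxlen silently drops the oldest samples on overflow.
        self.samples = deque([0.0] * size, maxlen=size)

    def push(self, chunk):
        self.samples.extend(chunk)

    def snapshot(self):
        return list(self.samples)


buffer = RollingBuffer()
for update in range(UPDATES_PER_SECOND):
    # Dummy chunk: every sample carries the index of the update it came from.
    buffer.push([float(update)] * HOP)

window = buffer.snapshot()
print(HOP, len(window), window[0], window[-1])
```

Consecutive windows therefore overlap by 95 percent, so the classifier sees a smoothly sliding view of the sound rather than disjoint one-second blocks.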

The LSTM classifier consists of an input layer, two bidirectional LSTM layers with a dropout layer in between, a fully connected layer, a softmax layer, and a classification layer. The classifier builds on an earlier two-class design, with the addition of a dropout layer between the bidirectional LSTM layers and an increase in the number of classes from two to three. Each of the output classes has 30 ten-second clips in the audio database, and five clips from each class are set aside for evaluation.

The remaining audio clips are used for training. The final second of every ten-second clip is used for validation, while the rest is used for training. The classifier is first trained for 250 epochs, reaching a near-zero training set error and a slightly increasing validation loss.
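The split described above can be sketched in a few lines of Python. The class names and clip identifiers are placeholders, and which five clips are held out is an assumption (here simply the first five), since the text does not say how they are chosen.

```python
CLIPS_PER_CLASS = 30
EVAL_PER_CLASS = 5
CLIP_SECONDS = 10
CLASS_NAMES = ["class_a", "class_b", "class_c"]   # placeholder names


def split_clips(class_name):
    """Return (training pool, evaluation set) of clip identifiers for one class."""
    clips = [f"{class_name}_{i:02d}" for i in range(CLIPS_PER_CLASS)]
    # Assumption: the first five clips are the ones held out for evaluation.
    return clips[EVAL_PER_CLASS:], clips[:EVAL_PER_CLASS]


def split_seconds(clip_seconds=CLIP_SECONDS):
    """Return (training seconds, validation seconds) within one clip."""
    seconds = list(range(clip_seconds))
    return seconds[:-1], seconds[-1:]     # last second is kept for validation


train_pool, eval_set = split_clips(CLASS_NAMES[0])
train_seconds, val_seconds = split_seconds()

total_train_seconds = len(CLASS_NAMES) * len(train_pool) * len(train_seconds)
total_val_seconds = len(CLASS_NAMES) * len(train_pool) * len(val_seconds)
print(len(train_pool), len(eval_set), total_train_seconds, total_val_seconds)
```

With three classes this leaves 75 clips in the training pool, giving 675 seconds of training audio and 75 seconds of validation audio.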

Unlike the YOLOv2 networks, the audio worker is a classifier rather than a detector, so it is trained with a separate class of general background sounds. These were recorded outdoors in the system's typical deployment environment and include some clips of the servos moving the pan/tilt platform. The output, like those of the other workers, is a class label and a confidence score.

As previously mentioned, not every aircraft broadcasts its vehicle category as part of the ADS-B squitter message. ADS-B message decoding can be implemented in two ways. The first approach is to use the Dump1090 software to decode the data and then import it into MATLAB, with the worker just sorting it for the main script. The other option is to implement the ADS-B decoding in MATLAB using the Communications Toolbox.

The difference between the two approaches is that Dump1090 requires fewer computational resources, but the vehicle category field of the ADS-B squitter is not included in its JSON-format output message, even if it is filled in.

The MATLAB approach, on the other hand, outputs all available information about the aircraft but consumes more computational resources, slowing down the overall performance of the system.

To find the best option, data was collected to identify the percentage of aircraft that send out vehicle category information. A script was created as part of the support software to collect basic statistics, and it was found that 328 of the 652 aircraft, or slightly more than half, sent out vehicle category information. The vehicle category for the rest of these aircraft was set to "No Data". Given that around half of all aircraft send out their vehicle category, and that one of the main pillars of this work is to detect and track other flying objects that could be mistaken for drones, the vehicle category is used despite the computational burden.

All of the vehicle categories that are subclasses of the aeroplane target label are grouped together. These are the classes "Light", "Medium", "Heavy", "High Vortex", "Very Heavy", and "High Performance Highspeed". The class "Rotorcraft" is translated into helicopter. Interestingly, there is also a "UAV" category. This is also implemented in the ADS-B worker, where the label is translated into drone.

One might wonder if such aircraft exist, and if so, whether they fall under the UAV vehicle category. Take a look at the Flightradar24 service for an example. One such drone may be seen flying near Gothenburg City Airport, one of the locationswherethedatasetforthisthesiswascollected.

If the vehicle category message has been received, the confidence level of the classification is set to 1. If it has not, the label is set to aeroplane, which is the most common aircraft type, with a confidence level of 0.75, so that any of the other sensors can still influence the final classification.

The support software consists of a set of scripts for creating training datasets, configuring the YOLOv2 network, and training it. There are also scripts that import a previously trained network and perform additional training on it. The following are some of the tasks that the support software routines handle:

ADS-B statistics are gathered.

Transformations and recordings of audio and video

The training datasets are composed from the available data.

Estimating the number and size of anchor boxes needed to set up the detectors
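The ADS-B label and confidence rules described earlier for the ADS-B worker, together with the statistic gathered by the support software, can be summarized in a short Python sketch. The function name is hypothetical and the category strings are taken as they appear in the text. Treating any category that is not listed (including a missing one) as the default aeroplane case is an assumption of this sketch.

```python
AEROPLANE_CATEGORIES = {
    "Light", "Medium", "Heavy", "High Vortex",
    "Very Heavy", "High Performance Highspeed",
}


def adsb_label(vehicle_category):
    """Map an ADS-B vehicle category (or None) to a (label, confidence) pair."""
    if vehicle_category in AEROPLANE_CATEGORIES:
        return ("aeroplane", 1.0)
    if vehicle_category == "Rotorcraft":
        return ("helicopter", 1.0)
    if vehicle_category == "UAV":
        return ("drone", 1.0)
    # No usable category: fall back to the most common aircraft type with a
    # lower confidence, leaving room for the other sensors to decide.
    return ("aeroplane", 0.75)


# Share of aircraft that sent a vehicle category, from the figures in the text.
share_with_category = 328 / 652

print(adsb_label("Heavy"), adsb_label("UAV"), adsb_label(None))
print(f"{share_with_category:.1%} of aircraft sent a vehicle category")
```

The lower default confidence is what allows a camera or audio detection to override the ADS-B guess when the category field is empty.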

The rising use of drones, and the resulting safety and security concerns, underline that drone detection technologies that are both efficient and dependable are in high demand. This thesis looks at the possibilities for designing and building a multi-sensor drone detection system employing state-of-the-art machine learning techniques and sensor fusion. Individual sensor performance is evaluated using the F1-score and mean average precision (mAP).

According to the study, machine learning algorithms can analyze the data from thermal imaging, making it well suited to drone detection. With an F1-score of 0.7601, the infrared detector performs similarly to a regular camera sensor, which has an F1-score of 0.7849. The F1-score of the audio classifier is 0.9323. In addition, compared with previous studies on the use of an infrared sensor, this thesis increases the number of target classes used in the detectors. The thesis also includes a first-of-its-kind analysis of detection performance as a function of sensor-to-target distance. The Johnson-based Detect, Recognize, and Identify (DRI) criteria were used to create the distance bin division.

Compared with any of the sensors working alone, the proposed sensor fusion improves detection and classification accuracy. It also highlights the value of sensor fusion in reducing false detections.
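For reference, the F1-score used to report these results is the harmonic mean of precision and recall. The Python sketch below shows the standard definition; the detection counts in the example are invented and are not the data behind the scores reported above.

```python
def precision_recall(true_pos, false_pos, false_neg):
    """Precision and recall from detection counts."""
    precision = true_pos / (true_pos + false_pos) if true_pos + false_pos else 0.0
    recall = true_pos / (true_pos + false_neg) if true_pos + false_neg else 0.0
    return precision, recall


def f1_score(precision, recall):
    """Harmonic mean of precision and recall (0 when both are 0)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Hypothetical counts: 8 correct detections, 2 false alarms, 2 misses.
precision, recall = precision_recall(true_pos=8, false_pos=2, false_neg=2)
example_f1 = f1_score(precision, recall)
print(precision, recall, example_f1)
```

Because it is a harmonic mean, the F1-score stays low whenever either false alarms or missed targets are frequent, which makes it a reasonable single number for comparing the sensors.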

1. J. Sanfridsson et al. Drone delivery of an automated external defibrillator: a mixed method simulation study of bystander experience. Scandinavian Journal of Trauma, Resuscitation and Emergency Medicine, 27, 2019.

2. S. Samaras et al. Deep learning on multi-sensor data for counter-UAV applications: a systematic review. Sensors, 19(4837), 2019.

3. E. Unlu et al. Deep learning-based strategies for the detection and tracking of drones using several cameras. IPSJ Transactions on Computer Vision and Applications, 11, 2019.

5. H. Liu et al. A drone detection with aircraft classification based on a camera array. IOP Conference Series: Materials Science and Engineering, 322(052005), 2018.

6. P. Andrasi et al. Night-time detection of UAVs using thermal infrared camera. Transportation Research Procedia, 28:183-190, 2017.

7. M. Mostafa et al. Radar and visual odometry integrated system aided navigation for UAVs in GNSS denied environment. Sensors, 18(9), 2018.

8. Schumann et al. Deep cross-domain flying object classification for robust UAV detection. 14th IEEE International Conference on Advanced Video and Signal Based Surveillance (AVSS), 68, 2017.

9. RFbeam Microwave GmbH. Datasheet of K-MD2. https://www.rfbeam.ch/files/products/21/downloads/Datasheet_K-MD2.pdf, November 2019.

10. MathWorks. https://se.mathworks.com/help/vision/examples/create-yolo-v2-object-detection-network.html, October 2019.

11. MathWorks. https://se.mathworks.com/help/audio/examples/classify-gender-using-long-short-term-memory-networks.html, October 2019.

12. M. Robb. Github page. https://github.com/MalcolmRobb/dump1090, December 2019.

13. Flightradar24. https://www.flightradar24.com, April 2020.

14. Everdrone AB. https://www.everdrone.com/, April 2020.