International Research Journal of Engineering and Technology (IRJET) e ISSN: 2395 0056

Volume: 09 Issue: 05 | May 2022 www.irjet.net p ISSN: 2395 0072

Soham Bhagwat1, Pratik Dharu2, Abhishek Dixit3, Bhadrayu Godbole4

1,2,3,4 Dept. of Computer Engineering, P.E.S. Modern College of Engineering, Maharashtra, India

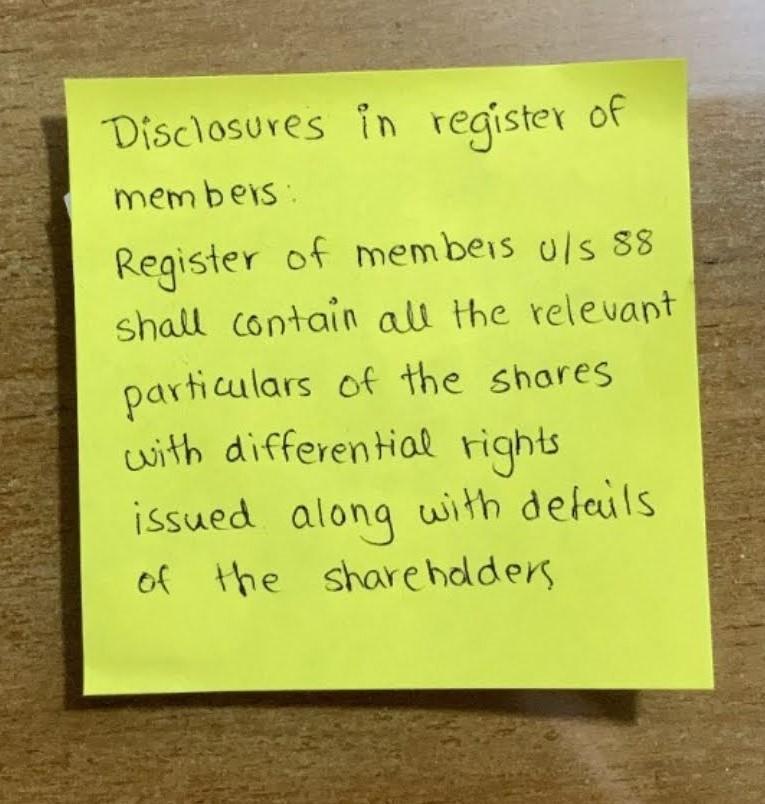

Abstract - This project focuses on the creation of OCR software for the offline recognition of handwriting. OCR programs can recognize printed text with nearly perfect accuracy, but the recognition of handwriting is harder because of the many different styles and the inconsistent nature of handwriting. Handwritten text recognition (HTR) is an open field of research and a relevant problem that helps automatically process historical documents.

In recent years, great advances in deep learning and computer vision have allowed improvements in document and image processing, including HTR. Handwritten text recognition plays an important role in the processing of vital information: even though a lot of information is still available only on paper, processing digital files is cheaper than processing traditional paper files.

The aim of OCR software is to convert handwritten text into machine-readable formats. Despite the advances in this field, little has been done to produce open-source projects that address this problem, or methods that utilize graphics processing units (GPUs) to speed up the training phase.

Key Words: OCR, HTR, GPU, CNN, BRNN, CTC, DIA, MRZ

Optical Character Recognition (OCR) deals with the recognition of different characters from a given input, which might be an image, a real-time video, or a manuscript/document. Using OCR, one can transform text into a digital format, allowing rapid scanning and digitization of documents in physical format as well as real-time text recognition (in the case of videos). The OCR methodology followed in this project involves pre-processing of the input, text area detection, application of the best pre-trained model and, finally, output of the detected text. All of this is made available through an offline software UI compatible with the Windows operating system.

To develop Optical Character Recognition (OCR) software for the recognition of handwriting using the following algorithms:

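Convolutional Neural Network (CNN)

Bidirectional Recurrent Neural Network (BRNN)

Connectionist Temporal Classification (CTC)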

Presently, OCR is capable of reading screenshots, which has facilitated the transfer of information between incompatible technologies. By using OCR for handwritten text, manual entries on paper also become legible to computer systems. Secondly, OCR can be used to perform Document Image Analysis (DIA) by reading and recognizing text in research, governmental, academic, and business organizations that hold a large pool of documents and scanned images. Thirdly, OCR can be used to automate documentation and security processes at airports by automatically reading the Machine Readable Zone (MRZ) and other relevant parts of a passport. In this way, OCR has scope in a wide range of applications.

Despite these benefits, OCR software also comes with its own shortcomings. To begin with, plain OCR without additional AI or technology specifically trained to recognize ID types will lack the accuracy needed to deliver a good user experience; structuring the extracted data therefore involves more than just OCR. Secondly, pictures of ID documents usually need to be de-skewed, if the image was not aligned properly, and reoriented so that the OCR technology can extract the data correctly; OCR must therefore be combined with image rectification. Lastly, when there is glare or blur in the ID image, the probability of data-extraction mistakes is significantly higher.
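The paper does not say how glare or blur is detected. One common heuristic, sketched below under that assumption, scores sharpness by the variance of a discrete Laplacian: a blurred image has weak edges and therefore a low variance. The function names and the `threshold` value are hypothetical and would need tuning on real scans; with OpenCV the same score is often computed as `cv2.Laplacian(gray, cv2.CV_64F).var()`.

```python
import numpy as np

def laplacian_variance(gray: np.ndarray) -> float:
    """Variance of the 4-neighbour Laplacian of a 2-D grayscale image."""
    g = gray.astype(np.float64)
    # Laplacian on interior pixels: up + down + left + right - 4 * centre
    lap = (g[:-2, 1:-1] + g[2:, 1:-1] + g[1:-1, :-2] + g[1:-1, 2:]
           - 4.0 * g[1:-1, 1:-1])
    return float(lap.var())

def is_blurry(gray: np.ndarray, threshold: float = 100.0) -> bool:
    """Flag an image as blurry when its sharpness score is below `threshold`.

    The default threshold is a hypothetical value, not taken from the paper.
    """
    return laplacian_variance(gray) < threshold
```

A flagged image could be rejected with a request to re-scan before the slower recognition stages run.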

Mentioned below are some requirement specifications for the efficient working of the OCR software.

Before the commencement of the project, the following assumptions were made:

The input image selected by the user is in JPG or PNG format.

The text to be detected and recognized is in English.

The input image provided by the user is upright.
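Since the project assumes JPG/PNG input, the upload step could reject other files before any processing. The sketch below checks a file's leading magic bytes rather than trusting its extension; the PNG and JPEG signatures are the standard ones, while `detect_format` and `is_supported_image` are hypothetical helper names.

```python
from pathlib import Path
from typing import Optional, Union

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"  # standard 8-byte PNG file signature
JPEG_SIGNATURE = b"\xff\xd8\xff"      # JPEG start-of-image marker plus next marker byte

def detect_format(header: bytes) -> Optional[str]:
    """Return "png", "jpg" or None from the first bytes of a file."""
    if header.startswith(PNG_SIGNATURE):
        return "png"
    if header.startswith(JPEG_SIGNATURE):
        return "jpg"
    return None

def is_supported_image(path: Union[str, Path]) -> bool:
    """True if the file at `path` starts like a PNG or JPEG file."""
    with Path(path).open("rb") as f:
        return detect_format(f.read(len(PNG_SIGNATURE))) is not None
```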

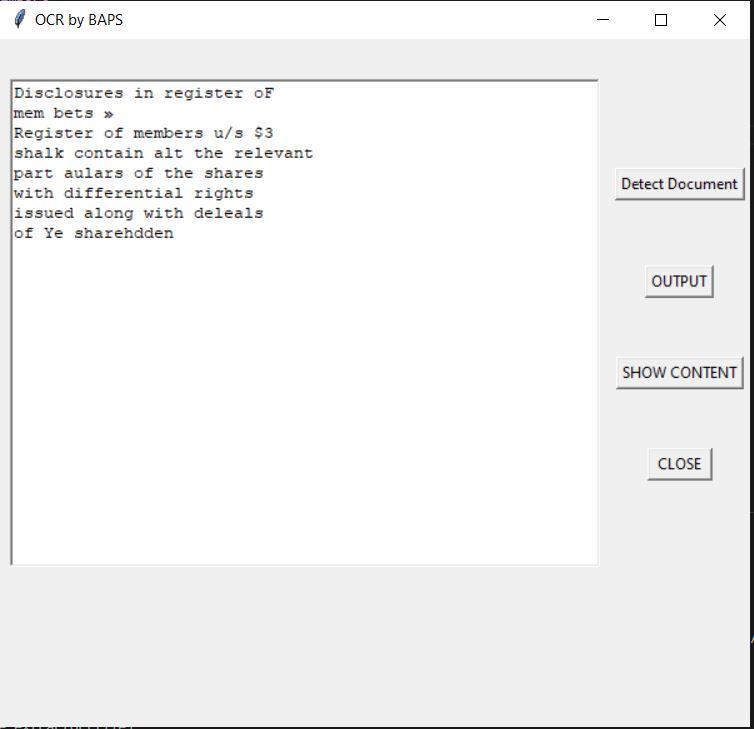

The user should be able to choose and upload an image of their choice through the file browser window by clicking the upload image button on the software UI.

The user should get the output for the chosen image in the output window, and should also be shown the intermediate stages of output detection, for better understanding, by clicking the buttons on the software UI in succession.

The software is a single-screen display where the user uploads an image of interest.

The image is cropped and resized, and the detected text output is displayed after the user presses the indicated buttons.

The software is designed to run on any PC with Windows 8 or later and Python 3.9 installed.

The front end is built using the Tkinter library of Python, while the back end is handled by the os library of Python.

The software runs on saved model files that have been trained on Google Colaboratory, a cloud infrastructure.

Since the models have already been trained and optimized on Google Colaboratory GPUs beforehand, the user's hardware requirements have been reduced to a minimum of 8 GB of RAM, a suitable quad-core processor and at least 5 GB of free disk space for the whole software suite.

With the above hardware specifications, the user receives the output in the output window of the UI in around 30 to 50 seconds.

As no user data is collected, there are no user-data security concerns.

As the software runs offline, it is not exposed to network-based attacks.

All traces of data are deleted once the user closes the UI.
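One way to meet the last requirement, assuming the intermediate stages (cropped image, bounding-box image, recognized text) are written to disk, is to keep them in a per-session temporary directory that is deleted when the window closes. `OcrSession` and `save_stage` are hypothetical names; `tempfile.TemporaryDirectory` is the standard-library class that removes the directory and all its contents on `cleanup()`.

```python
import tempfile
from pathlib import Path

class OcrSession:
    """Holds the intermediate files of one UI session (hypothetical class)."""

    def __init__(self) -> None:
        self._tmp = tempfile.TemporaryDirectory(prefix="ocr_session_")
        self.workdir = Path(self._tmp.name)

    def save_stage(self, name: str, data: bytes) -> Path:
        """Write one intermediate result (e.g. a cropped image) into the session dir."""
        path = self.workdir / name
        path.write_bytes(data)
        return path

    def close(self) -> None:
        """Delete the session directory and every file written into it."""
        self._tmp.cleanup()
```

In a Tkinter front end, `session.close()` could be called from a handler registered with `root.protocol("WM_DELETE_WINDOW", on_close)`, followed by `root.destroy()`.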

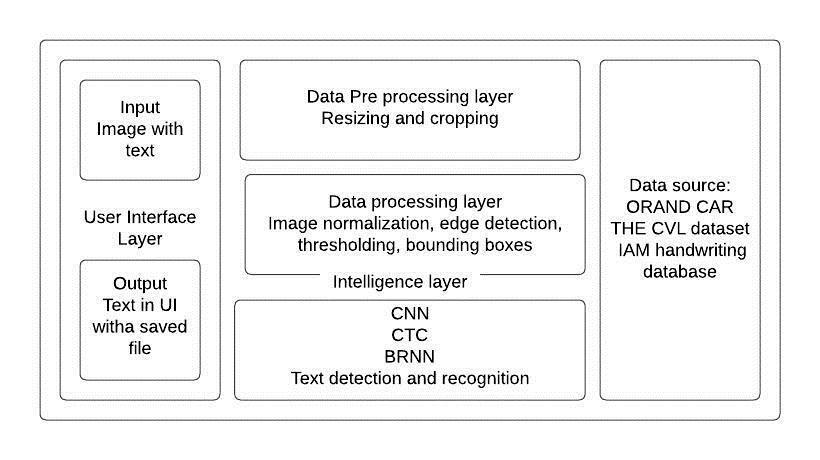

3.1 System Architecture

Fig 1: System architecture diagram

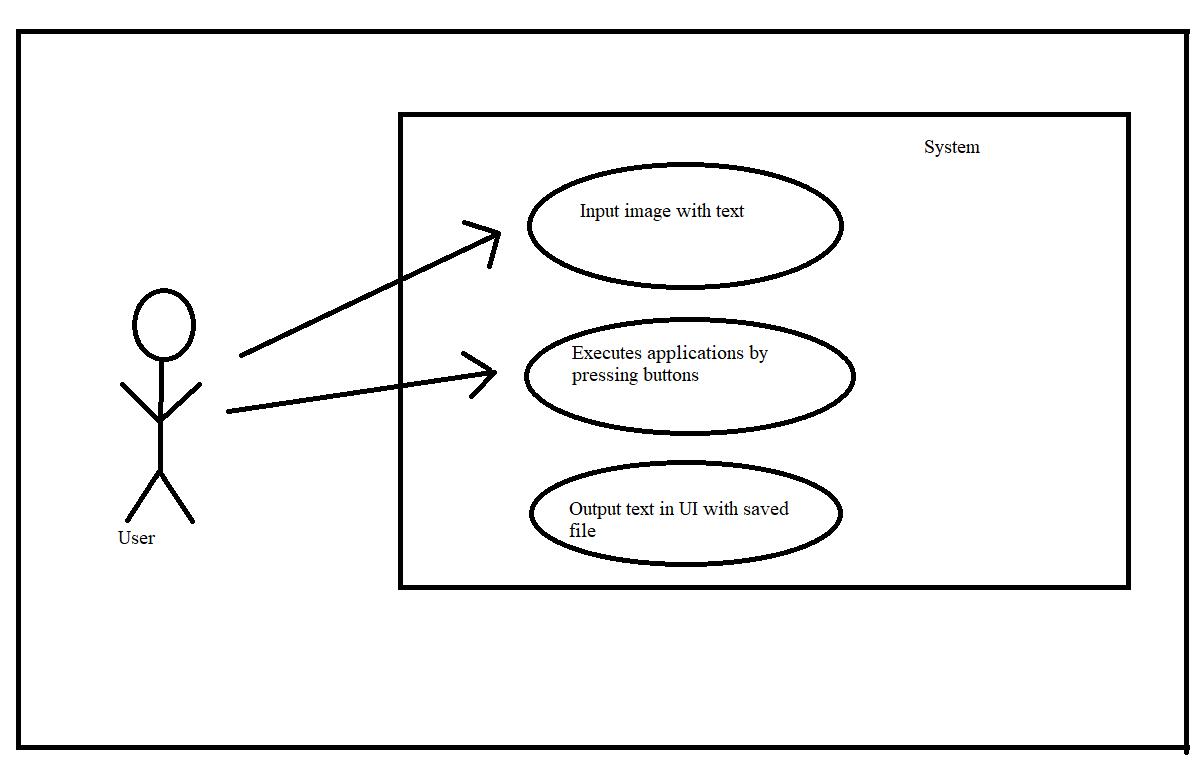

3.2 Use Case Diagram

Fig 2: Use case diagram

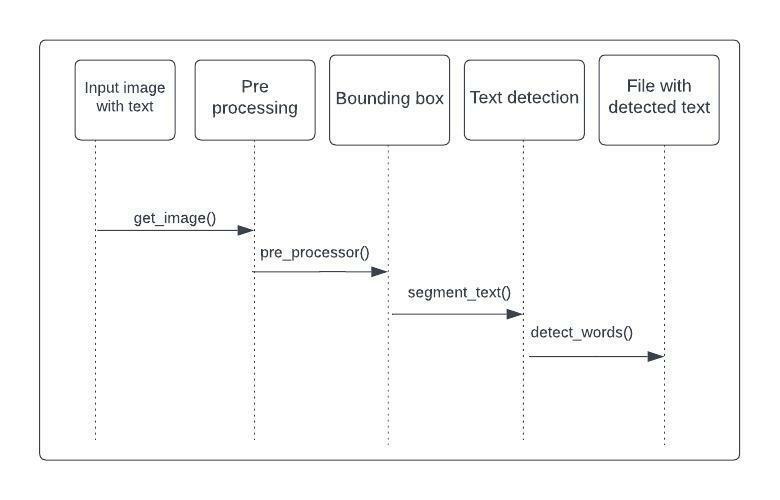

3.3 Sequence Diagram

Fig 3: Sequence diagram

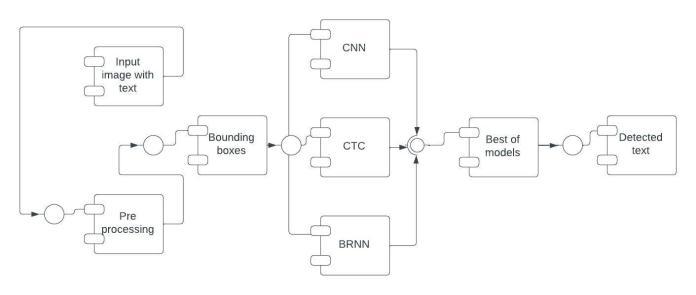

Fig 4: Component diagram

Document Detection: This module detects the document present in the image. It then crops the image by removing the background so that only the document is visible, and resizes the image to make it usable by the other modules.
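The paper does not list the exact cropping and resizing calls. As a simplified sketch, assuming the document stands out from a uniform background colour (a dark desk by default here), the crop keeps the bounding box of non-background pixels and the resize maps the result to a fixed size by nearest-neighbour sampling. Both helper names and the defaults `background=0` and `tol=10` are illustrative assumptions; a perspective-correct crop would instead use the detected document corners.

```python
import numpy as np

def crop_to_content(gray: np.ndarray, background: int = 0, tol: int = 10) -> np.ndarray:
    """Crop rows/columns whose pixels are all within `tol` of the background value."""
    mask = np.abs(gray.astype(np.int32) - background) > tol
    if not mask.any():
        return gray  # image is all background; leave it unchanged
    rows = np.where(mask.any(axis=1))[0]
    cols = np.where(mask.any(axis=0))[0]
    return gray[rows[0]:rows[-1] + 1, cols[0]:cols[-1] + 1]

def resize_nearest(img: np.ndarray, height: int, width: int) -> np.ndarray:
    """Nearest-neighbour resize of the first two axes to (height, width)."""
    h, w = img.shape[:2]
    r = np.arange(height) * h // height  # source row for each output row
    c = np.arange(width) * w // width    # source column for each output column
    return img[r[:, None], c]
```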

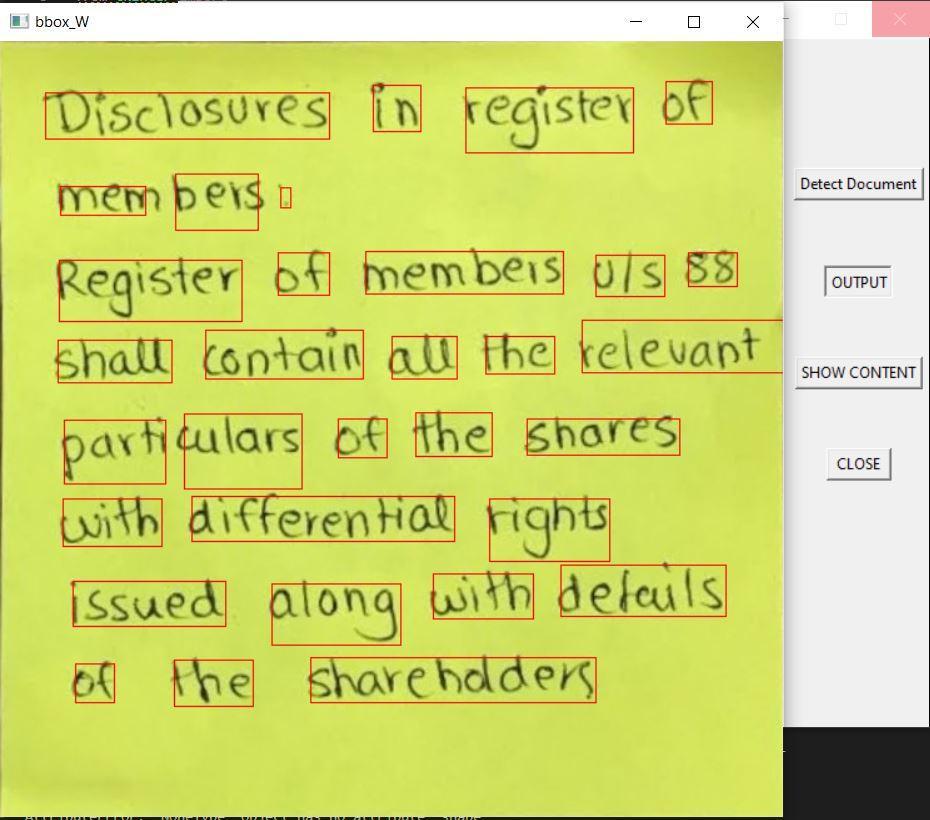

Text Area Detection: This module scans over the areas in the image and makes a rough estimate of the text areas that might be present. It then draws bounding boxes over the detected text areas in the image.
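The paper only says that text areas are found using Python packages. As a stand-in, the sketch below uses a classical horizontal projection profile on a binarized image: consecutive rows that contain ink form a text line, and the ink columns inside that band give its left and right edges. This is a swapped-in technique for illustration, not necessarily the module's actual method, and `line_boxes` is a hypothetical name.

```python
import numpy as np

def line_boxes(binary: np.ndarray, min_height: int = 1) -> list[tuple[int, int, int, int]]:
    """Return (top, bottom, left, right) boxes, inclusive, for each band of ink rows.

    `binary` is a 2-D array where True/1 marks ink (text) pixels.
    """
    ink = binary.astype(bool)
    row_has_ink = ink.any(axis=1)  # horizontal projection profile
    boxes = []
    top = None
    # Append a False sentinel so a band touching the bottom edge is also closed.
    for y, has_ink in enumerate(np.append(row_has_ink, False)):
        if has_ink and top is None:
            top = y  # a new text band starts
        elif not has_ink and top is not None:
            if y - top >= min_height:
                cols = np.where(ink[top:y].any(axis=0))[0]
                boxes.append((top, y - 1, int(cols[0]), int(cols[-1])))
            top = None
    return boxes
```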

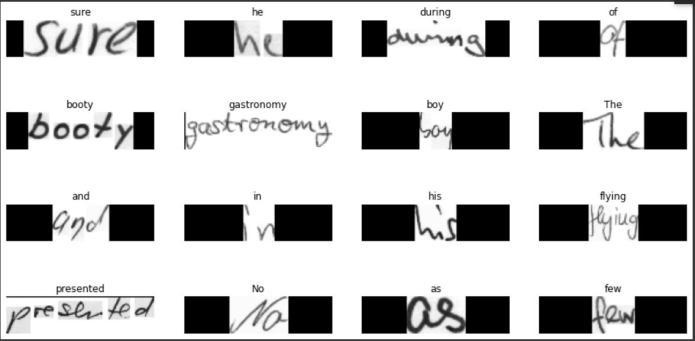

Text Recognition: This module scans over the bounding boxes in the image and gives an estimate of the text that might be present in each bounding box. This is done with the help of different pre-trained models.
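A model trained with CTC outputs, at each time step, a probability distribution over the characters plus a special blank symbol, and these outputs must be decoded into text. The simplest decoder, best-path (greedy) decoding, takes the most likely class at each step, merges repeated classes and drops blanks. The sketch assumes the blank is the last class index, which is the default convention of TensorFlow's CTC ops; the alphabet and function name are illustrative. Beam-search decoding is a common, more expensive alternative.

```python
import numpy as np

def ctc_greedy_decode(probs: np.ndarray, alphabet: str) -> str:
    """Best-path CTC decoding of a (time_steps, len(alphabet) + 1) score matrix.

    Assumes class index len(alphabet) is the CTC blank.
    """
    if probs.shape[1] != len(alphabet) + 1:
        raise ValueError("expected one column per character plus one blank column")
    blank = len(alphabet)
    best_path = np.argmax(probs, axis=1)  # most likely class at each time step
    chars = []
    prev = None
    for k in best_path:
        k = int(k)
        # Keep a class only if it differs from the previous step and is not blank;
        # a blank between two identical characters lets both survive (e.g. "ll").
        if k != prev and k != blank:
            chars.append(alphabet[k])
        prev = k
    return "".join(chars)
```

For example, with alphabet `"ehlo"` a best path of h, h, blank, e, l, blank, l, o decodes to `"hello"`.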

Output: This module stores the text that has been recognized from the image. It then displays the output in the output window and writes it to a text file.

The algorithm for detecting straight lines (the Hough transform) can be divided into the following steps:

Edge detection, e.g. using the Canny edge detector.

Mapping of edge points to the Hough space and storage in an accumulator.

Interpretation of the accumulator to yield lines of infinite length. The interpretation is done by thresholding and possibly other constraints.

Conversion of infinite lines to finite lines. The finite lines can then be superimposed back on the original image.
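The accumulator and thresholding steps can be sketched in NumPy as follows, assuming a binary edge map (e.g. from a Canny detector) is already available. Each edge pixel votes for every line rho = x*cos(theta) + y*sin(theta) passing through it, and cells with at least `threshold` votes are kept. The function names, the 1-degree theta resolution and the 1-pixel rho bins are illustrative choices; OpenCV's `cv2.HoughLines` implements the standard transform, and `cv2.HoughLinesP` a probabilistic variant that returns finite segments.

```python
import numpy as np

def hough_accumulator(edges: np.ndarray, n_theta: int = 180):
    """Vote every edge pixel into a (rho, theta) accumulator."""
    h, w = edges.shape
    diag = int(np.ceil(np.hypot(h, w)))                       # bound on |rho|
    thetas = np.deg2rad(np.arange(n_theta) * 180.0 / n_theta)  # angles in [0, 180) degrees
    rhos = np.arange(-diag, diag + 1)                          # 1-pixel rho bins
    acc = np.zeros((len(rhos), n_theta), dtype=np.int64)
    cos_t, sin_t = np.cos(thetas), np.sin(thetas)
    theta_idx = np.arange(n_theta)
    ys, xs = np.nonzero(edges)
    for x, y in zip(xs, ys):
        # rho of the line through (x, y) at every theta, rounded to the nearest bin
        rho = np.round(x * cos_t + y * sin_t).astype(int)
        acc[rho + diag, theta_idx] += 1
    return acc, rhos, thetas

def strongest_lines(acc, rhos, thetas, threshold: int):
    """Thresholding step: (rho, theta) of every cell with >= threshold votes."""
    r_idx, t_idx = np.nonzero(acc >= threshold)
    return [(int(rhos[r]), float(thetas[t])) for r, t in zip(r_idx, t_idx)]
```

A short line also collects nearly all of its votes at neighbouring angles, so a practical detector would add non-maximum suppression as one of the "other constraints".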

This in turn helps in detecting the document present in the image.

The detected lines are then used to crop and resize the image, removing the background so that only the text region remains.
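One way to turn detected lines into a crop region, assumed here rather than stated in the paper, is to intersect the document's edge lines pairwise to obtain its corners. For two lines in the (rho, theta) form used by the Hough transform, this is a 2x2 linear system. The corners could then be passed to a perspective warp such as OpenCV's `cv2.getPerspectiveTransform` and `cv2.warpPerspective`; the `intersect` helper below is hypothetical.

```python
from typing import Optional

import numpy as np

def intersect(line1, line2) -> Optional[tuple[float, float]]:
    """Intersection (x, y) of two lines given as (rho, theta), or None if parallel.

    Solves  x*cos(t1) + y*sin(t1) = r1  and  x*cos(t2) + y*sin(t2) = r2.
    """
    (r1, t1), (r2, t2) = line1, line2
    a = np.array([[np.cos(t1), np.sin(t1)],
                  [np.cos(t2), np.sin(t2)]])
    if abs(np.linalg.det(a)) < 1e-9:
        return None  # (near-)parallel lines never meet
    x, y = np.linalg.solve(a, np.array([r1, r2], dtype=float))
    return float(x), float(y)
```

For instance, the vertical line x = 3 is (3, 0) and the horizontal line y = 7 is (7, pi/2); their intersection is the corner (3, 7).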

The algorithm for text recognition through the different models can be divided into the following common steps:

Perform pre-processing on the image by removing noise and the document background using the Hough line detector mentioned above.

Detect text areas in the image and draw bounding boxes over them using Python packages.

Use feature extraction/labelling on existing datasets for supervised learning.

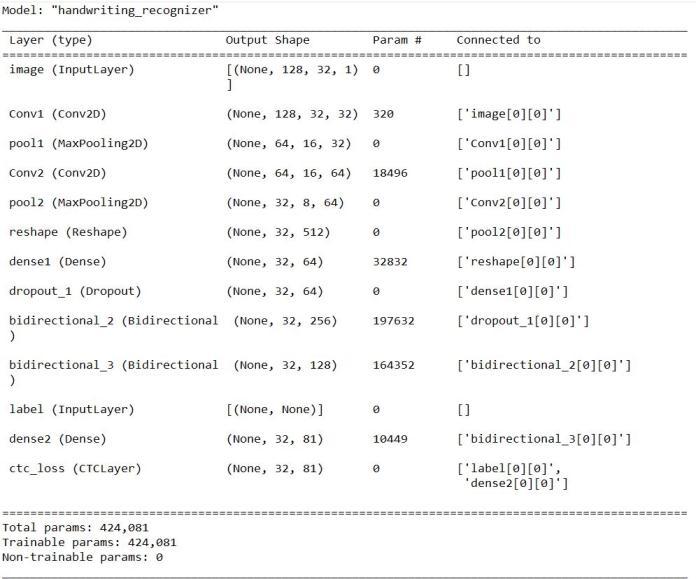

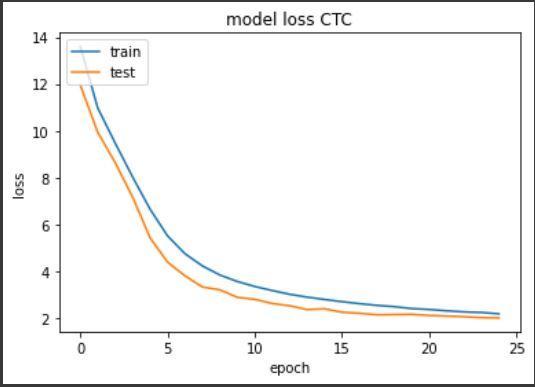

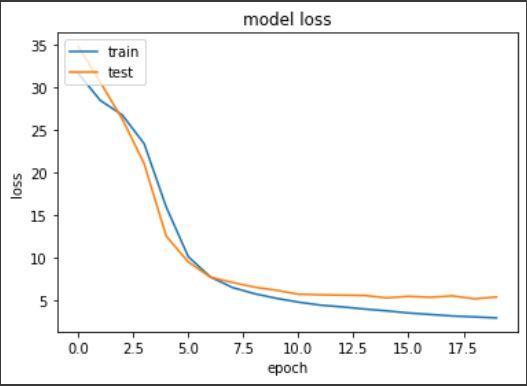

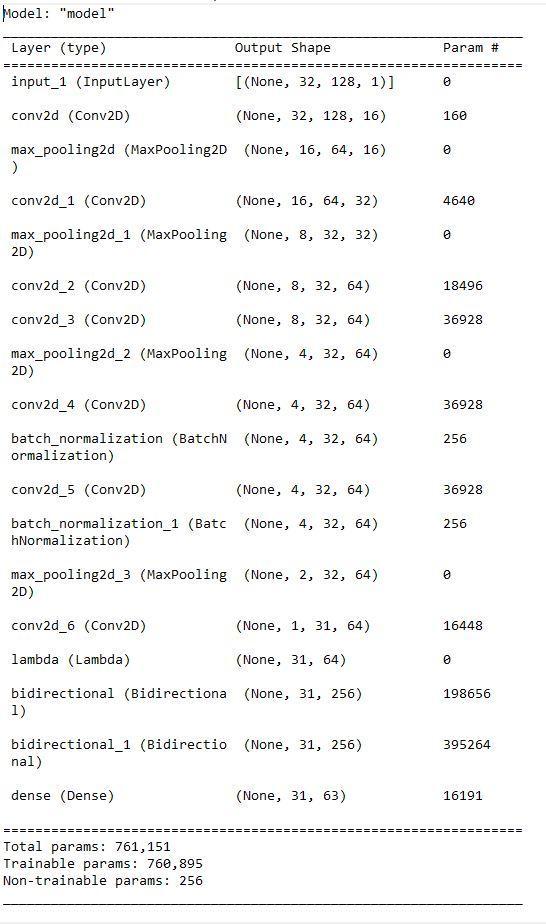

Build different neural networks, viz. CNN, BRNN and CTC, and train them with the pre-processed data, obtaining outputs with varied accuracies on test data.

Record observations using different models and images, and choose the one with the best accuracy for text recognition.
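The paper does not name the accuracy measure used to pick the best model. Character error rate (CER), the edit distance between recognized and reference text divided by the reference length, is a common choice in HTR and is sketched below as an assumption; `pick_best_model` and the model names in the example are hypothetical.

```python
def edit_distance(a: str, b: str) -> int:
    """Levenshtein distance: minimum insertions, deletions and substitutions."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        cur = [i]
        for j, cb in enumerate(b, start=1):
            cur.append(min(prev[j] + 1,                # delete ca
                           cur[j - 1] + 1,             # insert cb
                           prev[j - 1] + (ca != cb)))  # substitute (free if equal)
        prev = cur
    return prev[-1]

def character_error_rate(reference: str, hypothesis: str) -> float:
    """CER = edit distance / number of reference characters (lower is better)."""
    if not reference:
        raise ValueError("reference text must be non-empty")
    return edit_distance(reference, hypothesis) / len(reference)

def pick_best_model(outputs: dict[str, list[str]], references: list[str]) -> str:
    """Name of the model with the lowest mean CER over the test references."""
    def mean_cer(hyps: list[str]) -> float:
        pairs = zip(references, hyps)
        return sum(character_error_rate(r, h) for r, h in pairs) / len(references)
    return min(outputs, key=lambda name: mean_cer(outputs[name]))
```

For example, `pick_best_model({"cnn": ["hallo"], "crnn_ctc": ["hello"]}, ["hello"])` returns `"crnn_ctc"`, since its CER is 0 against 0.2.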

This helps in detecting and recognizing text from the document present in the given image.

This output is then shown on the UI, and a text file is generated for the user.

Fig 5: CTC model summary

In recent years, great advances in deep learning and computer vision have allowed improvements in document and image processing and in HTR. Processing digital files is cheaper than processing traditional paper files. The aim of OCR software is to convert handwritten text into machine-readable formats. By making use of data pre-processing that involves cropping, resizing, normalization and thresholding of an image, followed by splitting of datasets and application of models, viz. CNN, CTC and BRNN, we are able to perform Optical Character Recognition of text through the provided UI. The aforesaid models can be further optimized to improve the accuracy of the detected text.
