International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 09 Issue: 05 | May 2022 www.irjet.net p-ISSN: 2395-0072

Kalaichelvi K1, Tamilarasan R2, Sureshbabu N2, Ravi V2, Santhosh Kumar S M2

1 Associate Professor, Department of Electronics and Communication, V.S.B Engineering College, Karur 639004, Tamilnadu, India

2 Department of Electronics and Communication, V.S.B Engineering College, Karur 639004, Tamilnadu, India

Abstract COVID-19 is a highly contagious disease that spreads from an infected person to others through droplets when that person sneezes or coughs. The effective way to reduce the spread of the virus is to wear face masks properly, as enforced by governments, and to follow social distancing. Survey reports also emphasize the effectiveness of masks and social distancing in reducing the spread of COVID-19. Even though the situation is well handled by the government with people's support, the disease has not yet been brought under control. Precautionary steps are therefore needed to monitor whether people follow the rules. To automatically recognise people who are not wearing face masks or who are violating social distancing, we can use deep learning with the YOLOv3 algorithm, which is highly useful in object and image recognition and classification applications. Here we implement it for both face mask and social distancing monitoring. The datasets used for training the model are collected from freely available sources on the internet.

Keywords : IoT, Deep learning, Image processing, YOLOv3.

During the COVID-19 pandemic, wearing a mask is mandatory in many countries, and it does reduce fatality rates. As long as the vaccine is not widely used and is not fully protective for every person, wearing a mask is crucial. Because it is important to control the spread, the authorities need a way to monitor public places such as subway stations and shopping centers. Therefore, there is a need to detect masked and unmasked faces. Because face detection is a vital part of this process, doing it manually requires a large amount of time and resources and increases the chance of mistakes in detecting unmasked faces. Machine learning and computer vision techniques can help automate this process.

It is difficult for the government to control the spread unless people follow the instructions. Transmission of this disease can only be reduced with the right cooperation from the public. Maintaining physical distance, repeated hand sanitization, and face masks have all been shown to be effective in preventing the virus from spreading, but not everyone is following the rules. The spread of the coronavirus would be difficult for India to control. The most effective means to prevent transmission are face masks and hand sanitizers, which have had positive benefits in terms of minimizing disease transmission.

In the discipline of computer vision and pattern recognition, face recognition is a crucial component. Earlier methods have a number of disadvantages, including a high level of feature complexity and low detection accuracy. Face recognition approaches based on deep convolutional neural networks (CNNs) have been widely developed in recent years to increase detection accuracy.

Several authors have employed predefined standard models such as VGG-16, ResNet, and MobileNet, which need a lot of memory and computing time. In this study, an effort was made to modify the model in order to reduce memory size and processing time and to enhance the accuracy of the model's predictions. This research provides an implementation of a deep learning based face mask and social distancing detection system.

Object identification using deep learning techniques has become much more efficient at solving challenging tasks in recent years when compared to shallow models [10]. Developing a real-time system capable of recognising whether persons in public places have worn a mask or not is one of the use cases.

Real-time deep learning was used by Shaik and Ahlam [7] to classify and recognise emotions, with VGG-16 used to classify seven facial expressions. This technique has proved useful during the current COVID-19 lockdown period for limiting the spread. Furthermore, Ejaz et al. [12] employed principal component analysis to distinguish people with masked faces from people with unmasked faces.

Another use of face recognition was presented by Li et al. [11]. They created the HGL approach, which uses color texture analysis of pictures together with line portraits to classify the head poses of faces wearing masks. In an effort to track and enforce compliance with health guidelines, a CNN (convolutional neural network) was used to distinguish whether an individual was wearing a mask or not. The accuracy for front views was 93.64 percent, while the accuracy for side views was 87.17 percent.

Using the condition identification approach, Qin and Li [3] created a face mask recognition design. Their approach has four main components: preprocessing the image, cropping the facial regions, a super-resolution operation, and estimating the end state.

The suggested face mask system is implemented using the YOLOv3 algorithm. Deep learning is generally implemented using both artificial intelligence and machine learning techniques. When compared to conventional machine learning, this method, which is inspired by brain neurons, has been shown to be more flexible and to produce more accurate models. The suggested system uses two sets of images, one of faces without a mask and the other of faces wearing a mask. Detection is done in real time, within a specific distance, using a web camera to capture and recognise images. The outcome is saved to the Internet of Things (IoT), which aids in organizing the process.

The study uses YOLO to detect in real time whether face masks are being worn, coupled with an alarm system, and to observe social distancing among people walking in the area. The VGG-16 model for detecting face masks is both precise and fast. The trained model was able to achieve a 97 percent accuracy rate. In YOLOv3, an image is first divided into a grid. For each grid cell, a number of anchor boxes (also known as bounding boxes) are predicted around objects that score highly for the specified classes.
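The grid division described above can be illustrated with a small sketch. It assumes a 416×416 network input (a common Darknet default, not stated in this paper) and relies on the YOLOv3 design of three detection scales with strides 32, 16, and 8 and three anchor boxes per cell. The function names are hypothetical and chosen only for illustration.

```python
def responsible_cell(cx, cy, image_size=416, stride=32):
    """Return (row, col) of the grid cell that contains the box centre (cx, cy), in pixels."""
    grid = image_size // stride
    col = min(int(cx // stride), grid - 1)
    row = min(int(cy // stride), grid - 1)
    return row, col


def output_depth(num_classes, anchors_per_cell=3):
    """Values predicted per cell: each anchor has 4 box offsets, 1 objectness score, and class scores."""
    return anchors_per_cell * (5 + num_classes)


# Grid sizes at the three YOLOv3 detection scales for the assumed 416x416 input.
for stride in (32, 16, 8):
    print(stride, 416 // stride, responsible_cell(208, 100, stride=stride))

# With the three classes used later in this paper, each cell predicts 24 values.
print(output_depth(3))
```

A face centred at pixel (208, 100) therefore falls in row 3, column 6 of the coarsest 13×13 grid, and that cell's anchors are the ones expected to predict it at that scale.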

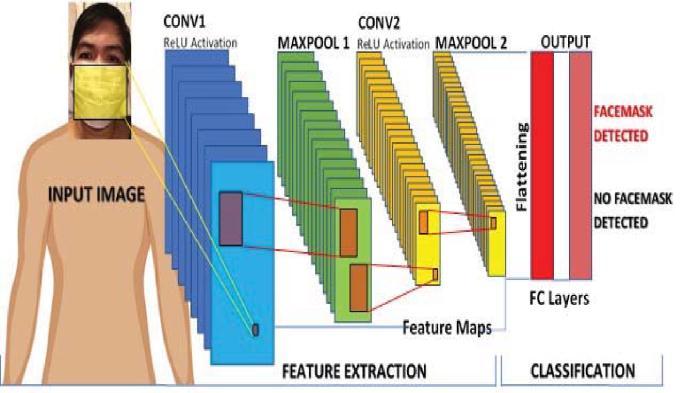

The image is first processed before any further steps, which is called preprocessing. Image segmentation techniques are used for the necessary detection of boundaries and objects. The relationship between the objects and the different categories must be known in order to classify them. To achieve this, the model must be trained with a proper dataset. Here we also use deep learning technology along with OpenCV and computer vision.

The camera should be calibrated so that pixel distances in the captured images can be mapped to real distances. This can be achieved by calibrating it according to the required internal (intrinsic) and external (extrinsic) parameters. Even though this produces accurate results, it is harder to implement than the other available method, triangle similarity calibration, which is simpler but less accurate.
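As a rough illustration of the triangle similarity method mentioned above, the sketch below first estimates an apparent focal length from one reference measurement and then reuses it to estimate distance. The reference values (object width, distance, and pixel widths) are made-up numbers, and the function names are hypothetical; the paper does not give its calibration constants.

```python
def focal_length_px(known_distance_cm, real_width_cm, width_in_pixels):
    """Apparent focal length F = (P * D) / W from one reference image."""
    return (width_in_pixels * known_distance_cm) / real_width_cm


def distance_to_camera_cm(real_width_cm, focal_px, width_in_pixels):
    """Distance D' = (W * F) / P' for an object of known real width."""
    return (real_width_cm * focal_px) / width_in_pixels


# Reference shot (assumed values): a 50 cm wide object, 100 cm away, appears 200 px wide.
focal = focal_length_px(known_distance_cm=100, real_width_cm=50, width_in_pixels=200)

# Later the same object appears 100 px wide, so it is estimated to be twice as far away.
print(focal, distance_to_camera_cm(50, focal, 100))
```

The method needs only one reference photo, which is why it is easier to set up than a full intrinsic and extrinsic calibration, at the cost of ignoring lens distortion and viewing angle.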

For the face mask detection application we use YOLOv3, which is efficient and commonly used for real-time object detection systems. Compared with other available techniques, it is fast and accurate. The YOLOv3 feature extractor, Darknet-53, consists of 53 layers trained on ImageNet. For detection, 53 more layers are stacked on top of it, giving a 106-layer network that we use for face mask detection. The dataset required for training the model is obtained from the internet and includes various image formats such as PNG and JPEG. Many datasets are available, including Omkar Gaurav's Face Mask Detection dataset on Kaggle. All the photos come from open-source resources; some resemble real-world scenarios, and others were artificially created by placing a mask on the face.

Omkar Gaurav's dataset gathered pictures of faces and applied facial landmarks to locate each individual's facial characteristics in the image. Major facial landmarks include the eyes, nose, chin, lips, and eyebrows. A mask is then drawn onto a non-masked image, which intelligently creates the masked part of the dataset. Finally, the dataset was divided into two classes or labels, 'with_mask' and 'without_mask', and the curated images total around 4000.

The quality of the dataset affects the accuracy of a model. The first step in the data cleanup process is to delete all the incorrect images found in the dataset. The photos are resized to a fixed size of 96×96 pixels, which reduces the load on the machine during training and helps achieve the best possible outcomes. Following that, the photos are labeled as having masks or not. To accelerate processing, the image array is converted to a NumPy array, and MobileNetV2's preprocess_input function is applied. Data augmentation is then used to expand the size and quality of the data sample. To create multiple variants of the same image, we use the ImageDataGenerator feature with the required settings for rotation, zoom, and horizontal or vertical flip.

To avoid overfitting, the number of training samples has been increased. This improves the generalization and robustness of the trained model. The dataset is then split into training and test data in a ratio of 8:2 by randomly selecting photos from the dataset.

In the training and test datasets, the stratification variable is used to maintain the same proportion of classes as in the original dataset. We used Google Colab to carry out this work. The model was trained on Colab with a GPU, while the preprocessing steps were run on a laptop because they were not computationally intensive.
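A stratified 8:2 split of the kind described above can be sketched in plain Python as follows. This is only an illustration under assumed file names and a hypothetical helper name; in practice a library routine such as scikit-learn's train_test_split with its stratify argument would typically be used instead.

```python
import random
from collections import defaultdict


def stratified_split(samples, test_fraction=0.2, seed=42):
    """Split (path, label) pairs so each label keeps the same share in train and test."""
    by_label = defaultdict(list)
    for path, label in samples:
        by_label[label].append((path, label))

    rng = random.Random(seed)  # fixed seed so the split is reproducible
    train, test = [], []
    for label in sorted(by_label):
        items = by_label[label]
        rng.shuffle(items)  # random selection within each class
        n_test = round(len(items) * test_fraction)
        test.extend(items[:n_test])
        train.extend(items[n_test:])
    return train, test


# Toy data: 50 images per class (file names are placeholders).
samples = [(f"img_{i}.png", "with_mask") for i in range(50)]
samples += [(f"img_{i}.png", "without_mask") for i in range(50, 100)]

train, test = stratified_split(samples)
print(len(train), len(test))
```

Splitting per class rather than over the whole list is what keeps the class proportions of the original dataset in both subsets.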

To train our model, we need to convert the .xml files into .txt files in the YOLO format after downloading the dataset. Before we begin training, we need to be certain that the conversion has been successful and that we will provide accurate data to our network.
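The conversion can be sketched as below, assuming the .xml annotations follow the Pascal VOC layout (a size element plus one object element per face with a bndbox); the paper does not state the annotation schema, so this is an assumption, as are the class names in the example. Each YOLO line holds the class index followed by the box centre, width, and height, all normalised by the image size.

```python
import xml.etree.ElementTree as ET


def voc_to_yolo(xml_text, classes):
    """Convert one Pascal VOC style annotation into YOLO label lines."""
    root = ET.fromstring(xml_text)
    size = root.find("size")
    img_w = float(size.find("width").text)
    img_h = float(size.find("height").text)

    lines = []
    for obj in root.iter("object"):
        class_id = classes.index(obj.find("name").text)
        box = obj.find("bndbox")
        xmin, ymin, xmax, ymax = (
            float(box.find(tag).text) for tag in ("xmin", "ymin", "xmax", "ymax")
        )
        # YOLO stores the normalised centre and size instead of corner coordinates.
        x_c = (xmin + xmax) / 2 / img_w
        y_c = (ymin + ymax) / 2 / img_h
        w = (xmax - xmin) / img_w
        h = (ymax - ymin) / img_h
        lines.append(f"{class_id} {x_c:.6f} {y_c:.6f} {w:.6f} {h:.6f}")
    return lines


# Minimal made-up annotation used only to check the conversion.
SAMPLE_XML = """<annotation>
  <size><width>400</width><height>200</height></size>
  <object>
    <name>no_mask</name>
    <bndbox><xmin>100</xmin><ymin>50</ymin><xmax>200</xmax><ymax>150</ymax></bndbox>
  </object>
</annotation>"""

print(voc_to_yolo(SAMPLE_XML, ["mask", "no_mask", "not_proper"]))
```

A simple way to verify the conversion before training is to check that every coordinate lies between 0 and 1 and to draw a few converted boxes back onto their images.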

Faces can be detected in both static images and real-time video streams using this face detection algorithm. Overall, this model is quick, precise, and resource-efficient. Now that the model has been trained, it can be used to detect the presence of masks in any image. The given image is first fed into a face detection model, which detects all of the faces in it. Subsequently, these faces are passed to a CNN-based face mask detection algorithm. The model identifies the hidden patterns and features of the picture and categorizes it as "Mask" or "No Mask."

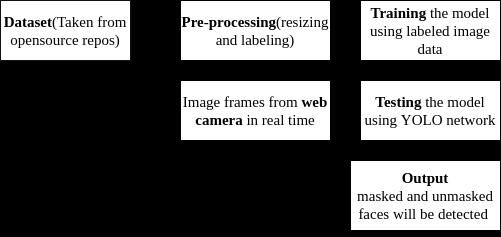

Fig.1 Proposed system block diagram

We have to split our data into two sets, the training and validation sets, to train and validate our model during the training process. The split was 90% to 10%. So we made two new folders, one for the test set and the other for the training set, and placed 85 photographs with their accompanying annotations in the test folder and the remaining 768 in the train_folder directory.

The Darknet model is used for this specific face detection case. To use it for detection, the additional weights present in the YOLOv3 network are randomly initialized prior to training. They acquire their proper values during the training phase. We need to create 5 files in order to complete our preparations and start training the model.

1. sample.names: A file with the .names extension that lists all three classes specific to our problem: mask, no_mask, and not_proper.

2. sample.data: A file with the .data extension that includes the relevant information for the problem, which will be used for training.

3. fmask.cfg: We need to copy yolov3.cfg and rename it to this .cfg file to set our required configuration.

4. Text files for train and test: The paths to all individual image files are listed in these two .txt files.
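The contents of the files listed above can be sketched as follows. The file names sample.names, sample.data, and fmask.cfg come from the text; the names train.txt, test.txt, and the backup directory are assumptions added for illustration, as are the helper function names. The filters value follows the usual YOLOv3 rule of (classes + 5) × 3 for the convolutional layer placed before each [yolo] layer in the .cfg file.

```python
def build_names(classes):
    """Contents of the .names file: one class label per line."""
    return "\n".join(classes) + "\n"


def build_data(num_classes, train_list, valid_list, names_file, backup_dir):
    """Contents of the .data file that Darknet reads when training starts."""
    return (
        f"classes = {num_classes}\n"
        f"train = {train_list}\n"
        f"valid = {valid_list}\n"
        f"names = {names_file}\n"
        f"backup = {backup_dir}\n"
    )


def yolo_filters(num_classes, anchors_per_scale=3):
    """Filters for the convolutional layer before each [yolo] layer in the .cfg file."""
    return (num_classes + 5) * anchors_per_scale


classes = ["mask", "no_mask", "not_proper"]
names_text = build_names(classes)
# train.txt, test.txt and backup/ are placeholder names, not taken from the paper.
data_text = build_data(len(classes), "train.txt", "test.txt", "sample.names", "backup/")

print(names_text)
print(data_text)
print("filters =", yolo_filters(len(classes)))
```

For the three classes used here, each [yolo] section of fmask.cfg would be set to classes=3 and the preceding convolutional layer to filters=24.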

The steps followed during the entire process are shown in Fig. 1. After getting all the required files and data, the preprocessing step takes place, where we change image parameters such as dimensions as required and convert the image into a NumPy array. After that, the pixel intensities are scaled. The resulting image data is reduced to more manageable groups by feature extraction (Fig. 2), carried out by the deep learning model we are using. The real-time image frames captured from the camera are processed by the trained model to produce the output.

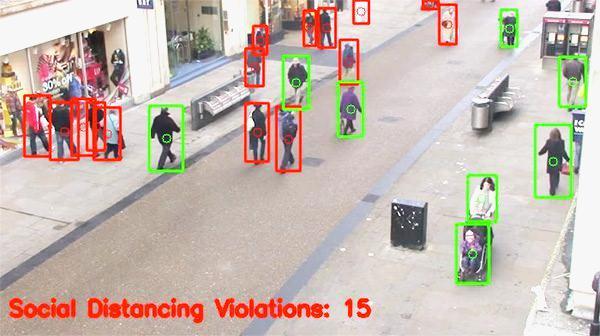

The following are the stages to develop a social distancing detector.

1. Only persons in an image are detected using object recognition.

2. The pairwise distance algorithm is used to determine the distance between the persons after all of the people in the image have been identified.

3. According to the acquired results, the distance criterion is violated if any two people are less than N pixels apart, which is the threshold that we set earlier.

Suppose there are at least two people in the frame. We calculate the Euclidean distance between all pairs of centroids. As the distance matrix is symmetric, we only have to loop over its upper triangle and check whether each distance falls below the minimum social distance required by the government and health care professionals.
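A minimal sketch of this pairwise check is given below. The centroid coordinates and the 75-pixel threshold are made-up values, and the function name is hypothetical. Iterating over index pairs with i < j corresponds to visiting only the upper triangle of the symmetric distance matrix.

```python
from itertools import combinations
from math import dist


def find_violations(centroids, min_distance_px):
    """Return the indices of people closer than min_distance_px to someone else."""
    violating = set()
    # combinations() yields each unordered pair once, i.e. the upper triangle only.
    for (i, a), (j, b) in combinations(enumerate(centroids), 2):
        if dist(a, b) < min_distance_px:
            violating.update((i, j))
    return violating


# Three detected people; the first two are 50 px apart, the third is far away.
centroids = [(100, 100), (130, 140), (400, 300)]
print(find_violations(centroids, min_distance_px=75))
```

The returned indices can then be used to choose the colour of each person's bounding box when drawing the output frame.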

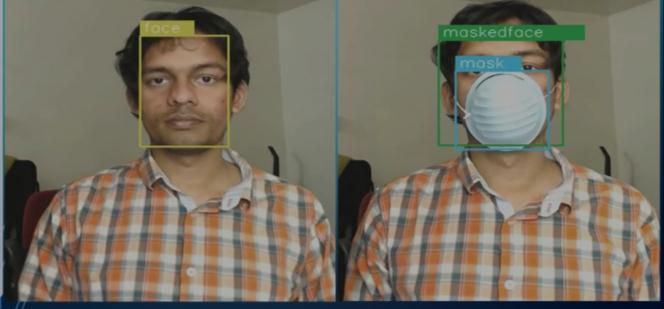

If a person is not wearing a mask, the bounding box displayed over the face will be red or yellow. Otherwise it will be green. This is illustrated in Fig. 3. Similarly, people who are violating social distancing are marked in red in the real-time image, as shown in Fig. 4.

While the COVID-19 outbreak poses a number of major hazards to the world, it also serves as a reminder that we must take care to prevent the virus from spreading. By analyzing the photos, YOLO-based techniques proved their ability to recognise and classify objects. The suggested system can detect the presence or absence of a mask as well as social distancing, and the model delivers accurate and quick results using queries, OpenCV, and CNN. With regard to the speed of detection and the accuracy of the model, the proposed scheme yields admirable results.

[1] Viola, P., Way, O.M., Jones, M.J.: Robust real-time face detection. Int. J. Comput. Vision 57(2), 137-154 (2004)

[2] Forsyth, D.: Object detection with discriminatively trained part-based models. IEEE Trans. Pattern Anal. Mach. Intell. 32(9), 1627-1645 (2010)

[3] Ning X, Duan P, Li W, Zhang S (2020) Real-time 3D face alignment using an encoder-decoder network with an efficient deconvolution layer. IEEE Signal Process Lett 27:1944-1948. https://doi.org/10.1109/LSP.2020.3032277

[4] Girshick, R.: Fast R-CNN. Comput. Sci. (2015)

[5] Ren, S., He, K., Girshick, R., et al.: Faster R-CNN: towards real-time object detection with region proposal networks (2015)

[6] Liu, W., Anguelov, D., Erhan, D., et al.: SSD: Single Shot MultiBox Detector. In: European Conference on Computer Vision, pp. 21-37. Springer, Cham (2016)

[7] Hussain SA, Al Balushi ASA (2020) A real time face emotion classification and recognition using deep learning model. In: Journal of physics: Conference series, vol 1432. IOP Publishing, Bristol, p 012087

[8] Redmon, J., Farhadi, A.: YOLO9000: better, faster, stronger. In: 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), pp. 6517-6525 (2017)

[9] Redmon J, Farhadi A.: YOLOv3: an incremental improvement (2018)

[10] Adusumalli, H., Kalyani, D., Sri, R.K., Pratap Teja, M., & Rao, P.B.R.D.P. (2021). Face Mask Detection Using OpenCV. 2021 Third International Conference on Intelligent Communication Technologies and Virtual Mobile Networks (ICICV). doi:10.1109/icicv50876.2021.9388375

[11] Li S, Ning X, Yu L, Zhang L, Dong X, Shi Y, He W (2020) Multi-angle head pose classification when wearing the mask for face recognition under the covid-19 coronavirus epidemic. In: 2020 International conference on high performance big data and intelligent systems. IEEE, pp 1-5. https://doi.org/10.1109/HPBDIS49115.2020.9130585

[12] Ejaz, M.S., Islam, M.R., Sifatullah, M., Sarker, A.: Implementation of principal component analysis on masked and non masked face recognition. In: 2019 1st International Conference on Advances in Science, Engineering, and Robotics Technology (ICASERT). pp. 1-5 (2019)

© 2022, IRJET | Impact Factor value: 7.529 | ISO 9001:2008 Certified Journal