International Research Journal of Engineering and Technology (IRJET) e ISSN: 2395 0056

Volume: 09 Issue: 05 | May 2022 www.irjet.net p ISSN: 2395 0072


Sumukha S Puranik1, Tejas S2, Vaishnavi P3, Vivek A Arcot4, Paramesh R5

1,2,3,4 Student, Computer Science and Engineering, Global Academy of Technology, Karnataka, India

5Associate Professor, Computer Science and Engineering, Global Academy of Technology, Karnataka, India

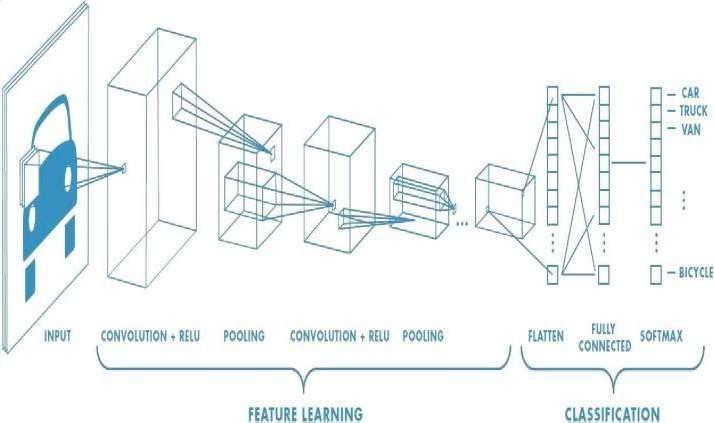

Abstract - Real-time object detection is a diverse and complex area of computer vision. If there is a single object to be detected in an image, the task is known as object localization; if there are multiple objects in an image, it is object detection, which detects semantic objects of given classes in digital images and videos. Applications of real-time object detection include object tracking, video surveillance, pedestrian detection, people counting, self-driving cars, face detection, ball tracking in sports and many more. Convolutional Neural Networks (CNNs) are a representative deep learning tool for detecting objects with OpenCV (Open Source Computer Vision), a library of programming functions aimed mainly at real-time computer vision. This project proposes an integrated guidance system involving computer vision and natural language processing. A system equipped with vision, language and intelligence capabilities is attached to the blind person to capture images of the surroundings, and is connected to a central server running a Faster Region-based Convolutional Neural Network (Faster R-CNN) algorithm and an image detection algorithm to recognize images and multiple obstacles. The server sends the results back to the smartphone, where they are converted into speech to guide the blind person. Existing systems help visually impaired people, but they are not effective enough and are also expensive. We aim to provide a more effective and cost-efficient system that not only makes their lives easier and simpler but also enhances mobility in unfamiliar terrain.

Key Words: OpenCV, Artificial Intelligence, YOLO v3, Convolutional neural network

Vision impairment is one of the major health problems in the world. Vision impairment, or vision loss, reduces the ability to see or perceive and cannot be cured by wearing glasses. Nearly 37 million people across the globe are blind, of whom over 15 million are from India. People with visual disabilities often depend mainly on other people, trained dogs, or special electronic devices as support systems for making their decisions. Navigation becomes more difficult in places that are unfamiliar. Even with existing devices, objects at a sudden depth, obstacles above waist level, or stairs create considerable risks. Thus, we were motivated to develop a smart system to overcome these limitations.

A few recent works on real-time object detection have been reported, and their contents are weighed here in terms of their benefits and drawbacks. Blind people's movements are traditionally guided by a walking stick and guide dogs, which take a long time to get used to. There are a few smart systems on the market that use electronic sensors mounted on the cane, but these systems have several drawbacks. As technology has advanced, smart jackets and walking sticks have been investigated, including sensors embedded in the walking sticks. The model is a simple jacket that must be worn by the blind person. Any obstruction notification is supplied via sensor-specific voice messages, which are tailored to the recipient. [4] Researchers have also designed and developed a prototype of smart rehabilitative shoes and spectacles to facilitate safe navigation and mobility of blind individuals. The system has been designed for individuals with visual loss requiring enhancement and substitution aids. Three pairs of ultrasonic transducers (transmitter and receiver) are positioned on the medial, central, and lateral portions of the toe cap of each shoe. A microcontroller-based belt-pack device powered by a 12 V, 2500 mAh NiMH battery controls the transmitted signal of each of the three transducers and relays the reflected signal through an activation signal to the associated tactile output. Obstructions at head level are detected with Ray-Ban (Luxottica Group S.p.A., Milan, Italy) spectacles, which are equipped with a pair of ultrasonic transducers positioned in the center of the bridge and a buzzer mounted on one of the temples.

The University of Michigan is working on the creation of a sophisticated computerized Electronic Travel Aid (ETA) for blind and visually impaired people. The device will consist of a belt containing a small computer, ultrasonic and other sensors, and support electronics. The signals from the sensors will be processed by a proprietary algorithm before being communicated to the user via headphones. The NavBelt device will allow a blind person to walk through unfamiliar, obstacle-filled environments quickly and safely.


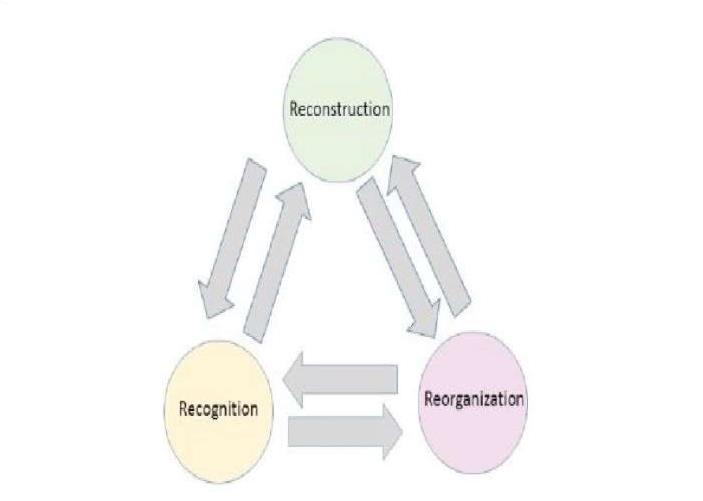

The three R's, namely reconstruction, recognition, and rearrangement, can be used to summarize computer vision (CV) activities. Reconstruction entails estimating the three-dimensional (3D) scene that produced a given visual representation. Recognition labels the objects in the image. [2]

An Android smartphone is connected to a Linux server, and image identification is performed using a deep learning algorithm. When the system is in operation, the smartphone sends images of the scene in front of the user to the server over a 4G or Wi-Fi network. The server then runs the recognition process and sends the final results back to the smartphone. Through audio notifications, the system notifies the user of the location of obstacles and how to avoid them. The disadvantages of this system are the continuous need for internet access and the cost of hosting an advanced, high-performance server. [2]

Another line of research focuses mainly on creating an artificially intelligent, fully automated assistive technology that allows visually impaired people to sense items in their environment and provides obstacle-aware navigation through real-time auditory outputs. Sensors and computer vision algorithms are combined into a practical travel aid that allows visually impaired persons to view various things, detect impediments, and prevent collisions. The entire framework is self-contained and built for low-cost processors, so visually impaired people can use it without internet access. The detection output is also designed for faster frame processing and extraction of more information, i.e., counting items in a shorter time period. Compared with previously developed methods, which focused primarily on obstacle identification and location tracking with simple sensors and did not incorporate deep learning, the proposed methodology can make a substantial contribution to assisting visually impaired persons. [1]


YOLO is a novel approach to object detection. Previous work on object detection repurposed classifiers to perform detection. Instead, YOLO frames object detection as a regression problem to spatially separated bounding boxes and associated class probabilities. A single neural network predicts bounding boxes and class probabilities directly from full images in one evaluation. Because the whole detection pipeline is a single network, it can be optimized end to end directly on detection performance. This unified architecture is extremely fast: the base YOLO model processes images in real time at 45 frames per second, and Fast YOLO, a smaller version of the network, processes 155 frames per second while still achieving twice the mAP of other real-time detectors. The architecture consists of 24 convolutional layers followed by two fully connected layers, with alternating 1×1 convolutional layers reducing the feature space from preceding layers. The convolutional layers are trained on the ImageNet classification task at half resolution (224×224 input images), and the resolution is then doubled for detection. [7] In YOLO-based object detection [34], the input image is divided into an S×S grid, where S denotes the number of grid cells along each axis.
Each grid cell is responsible for detecting any target that falls within it. Each grid cell then predicts B bounding boxes, each with a corresponding confidence score. The confidence score reflects how closely a predicted box matches the sought object, so a higher probability corresponds to a higher confidence score for the associated object. [1] Darknet-53, which is made up of 53 convolutional layers, serves as the feature extractor for YOLO v3. It achieves the highest measured floating-point operations per second, which implies that the network makes better use of GPU resources. The framework was linked to a live video feed, from which image frames were collected. The captured frames were pre-processed and fed into the trained model; if any object that the model had been trained on was spotted, a bounding box was drawn around it and a label was generated for it. After all objects were detected, the text label was converted into voice (or a recorded audio label was played), and the next frame was processed.
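The grid formulation and confidence score described above can be made concrete with a small Python sketch. It follows the original YOLO paper [7], in which each of the S×S cells predicts B boxes (x, y, w, h, confidence) plus C class probabilities, giving an S×S×(B·5+C) output; the cell containing an object's centre is responsible for that object, and the confidence target is Pr(Object) multiplied by the IoU between the predicted and ground-truth boxes. YOLO v3, which this project uses, differs (it predicts at three scales using anchor boxes), so this is only an illustration of the idea, not the project's code, and all function names are hypothetical.

```python
from typing import Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def yolo_output_size(S: int, B: int, C: int) -> int:
    """Number of values in YOLO v1's S x S x (B*5 + C) prediction tensor.

    Each of the B boxes per cell carries (x, y, w, h, confidence);
    each cell also carries C conditional class probabilities.
    """
    return S * S * (B * 5 + C)


def responsible_cell(x_center: float, y_center: float, S: int) -> Tuple[int, int]:
    """(row, col) of the grid cell containing a box centre normalised to [0, 1]."""
    col = min(int(x_center * S), S - 1)  # clamp so a centre at 1.0 stays on the grid
    row = min(int(y_center * S), S - 1)
    return row, col


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def box_confidence(p_object: float, predicted: Box, truth: Box) -> float:
    """YOLO's confidence target: Pr(Object) * IoU(predicted box, ground truth)."""
    return p_object * iou(predicted, truth)


if __name__ == "__main__":
    print(yolo_output_size(7, 2, 20))     # 1470
    print(responsible_cell(0.5, 0.5, 7))  # (3, 3)
    print(box_confidence(0.9, (0, 0, 2, 2), (1, 0, 3, 2)))
```

With S = 7, B = 2 and C = 20 (the PASCAL VOC setting in the YOLO paper), the prediction tensor is 7×7×30.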

The existing systems currently available for the visually impaired come at a cost. Most of them are not integrated into wearable products, and others rely on expensive cameras and sensors that not everyone can afford.

A blind stick is an advanced stick that allows visually challenged people to navigate with ease using advanced technologies: it integrates an ultrasonic sensor along with light, water and other sensors. If an obstacle is not close, the circuit does nothing. If an obstacle is close, the microcontroller sends a signal to sound an alert. The blind stick is not capable of telling what object is in front. It is also not wearable and must be carried by hand.
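The threshold behaviour of such a stick can be sketched in a few lines of Python, assuming a typical ultrasonic ranger that reports the round-trip echo time in microseconds. The 100 cm alert threshold and the function names are hypothetical; the conversion uses the speed of sound in air (about 343 m/s, i.e. 0.0343 cm/µs) and halves the result because the pulse travels to the obstacle and back.

```python
# Approximate speed of sound in air at room temperature, in cm per microsecond.
SPEED_OF_SOUND_CM_PER_US = 0.0343


def echo_to_distance_cm(echo_us: float) -> float:
    """Convert an ultrasonic round-trip echo time (microseconds) to distance in cm.

    The pulse travels to the obstacle and back, so the one-way distance is half.
    """
    return echo_us * SPEED_OF_SOUND_CM_PER_US / 2


def should_alert(echo_us: float, threshold_cm: float = 100.0) -> bool:
    """Stay silent for distant obstacles; alert only within threshold_cm (hypothetical value)."""
    return echo_to_distance_cm(echo_us) <= threshold_cm


if __name__ == "__main__":
    for echo in (1000, 10000):
        print(echo, round(echo_to_distance_cm(echo), 2), should_alert(echo))
```

The output is only a distance and a yes/no decision; as noted above, nothing in this logic can tell the user what the obstacle is.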

Our system is designed to provide better efficiency and accuracy and can be embedded in a wearable device. It provides real-time three-dimensional object detection and reports any obstacle detected within the specified distance. The output for each detected object is passed to a Google text-to-speech API, so notifications are delivered as voice messages. The objective is to develop a scientific application or model that can be embedded in a daily wearable and provide accurate real-time three-dimensional object detection, supported by voice-message notifications whenever an obstacle is detected within the specified distance.


Table 1: Summary of Literature Survey.

Sl No. Title of the paper, Journal Name and Year

1. Efficient Multi-Object Detection and Smart Navigation Using Artificial Intelligence for Visually Impaired People, Entropy, 2020

2. PARTHA: A Visually Impaired Assistance System, 2020 3rd International Conference on Communication System, Computing and IT Applications (CSCITA)

3. Integrating Computer Vision and Natural Language, Kyambogo University, 2019

Methodology

Darknet, YOLO v3, OpenCV, COCO

Advantages Disadvantages

Higher accuracy in object detection and can get better with use

Image Processing, Arduino, Indoor Navigation, Speech Commands, indoor localization, Ultrasonic sensors, Tiny YOLOv2

IR sensor and ultrasonic sensor working on Sonic Pathfinder. Google Pixel 3XL. Microsoft Kinect Sensor.

Low-light detection, security and real-time location sharing.

Expensive for now. Integration problem.

Limited scope and functionality, cost inefficiency, systems not being portable

IR sensor and ultrasonic sensor working on Sonic Pathfinder. Google Pixel 3XL. Microsoft Kinect Sensor.

Expensive camera and sensors.

4. Design and Implementation of Mobility Aid for Blind People, 2019

5. Design and Implementation of Smart Navigation System for Visually Impaired, International Journal of Engineering Trends and Technology

6. Blind Assist System, International Journal of Advanced Research in Computer and Communication Engineering, 2017

7. You Only Look Once: Unified, Real-Time Object Detection, University of Washington

8. Design and Development of a Prototype Rehabilitative Shoes and Spectacles for the Blind, 2012 5th International Conference on Biomedical Engineering and Informatics

9. Wearable Assistive Devices for the Blind, Issues and Characterization, LNEE 75, Springer, pp. 331-349, 2010.

Ultrasonic sensor and Arduino Uno.

Arduino Nano R3, Raspberry Pi V3, RF module, GPS, GSM module, Proteus 8.6, Arduino Software 1.8.2 and Python 2.7

IR Sensors, ATmega32 8-bit microcontroller.

Better low-level object detection and low cost. Objects in elevated areas are not detected.

Practical, cost-efficient, voice recognition, SMS alert.

Limited scope and functionality, small-box detection due to inappropriate localization

Low cost, audio and vibration feedback. Bluetooth connectivity and less accurate

Fast YOLO, YOLO, R-CNN

Ultrasonic transducers, a 12 V, 2500 mAh NiMH battery-operated, microcontroller-based belt pack unit

Survey of assistive devices that can be used to guide the visually impaired.

Combining R-CNN with YOLO

Small-box detection due to inappropriate localization

Low-level obstacle detection (up to 90 cm). Objects in elevated areas not detected

Use of vests, belts, actuators, servomotors and sensors.

Sensory overload, long learning/training time, acoustical feedback, tactile feedback

2022, IRJET | Impact Factor value: 7.529 | ISO 9001:2008 Certified Journal


Considering all the other literature, we have come up with a methodology that aims to provide a scientific application or model that can be embedded in a daily wearable. A real-world scene is fed live through a webcam, and the system provides accurate real-time three-dimensional object detection. The processed output is then given as text, which is converted into speech by a speech API, with notifications delivered as voice messages if any obstacle is detected within a specific distance. The messages are made specific so that the user can track the position and distance of the obstacle properly and avoid any inconvenience during daily activities. This speech is given to the user as audio output.
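A minimal sketch of this message step, assuming the detector returns a label, a score and a horizontal box centre for each object, and that a separate distance sensor supplies one reading per frame. The left/ahead/right split into thirds, the 2 m range, the 0.5 score cut-off and all function names are hypothetical choices; `detect`, `read_distance_m` and `speak` are placeholders (for example, a YOLO v3 model loaded through OpenCV's `dnn` module and a text-to-speech call).

```python
from typing import Callable, Iterable, List, Optional, Tuple

# (label, score, x_center_px) as returned by some detector; hypothetical format.
Detection = Tuple[str, float, float]


def horizontal_position(x_center: float, frame_width: float) -> str:
    """Coarse direction: split the frame into left, centre and right thirds."""
    ratio = x_center / frame_width
    if ratio < 1 / 3:
        return "on your left"
    if ratio > 2 / 3:
        return "on your right"
    return "ahead"


def compose_message(label: str, x_center: float, frame_width: float,
                    distance_m: float, max_distance_m: float = 2.0) -> Optional[str]:
    """Build a voice-message string, or None if the obstacle is beyond range."""
    if distance_m > max_distance_m:
        return None
    direction = horizontal_position(x_center, frame_width)
    return f"{label} {direction}, about {distance_m:.1f} metres"


def run_pipeline(frames: Iterable[object],
                 frame_width: float,
                 detect: Callable[[object], List[Detection]],
                 read_distance_m: Callable[[], float],
                 speak: Callable[[str], None],
                 min_score: float = 0.5) -> None:
    """Per frame: detect objects, keep confident ones, announce those within range."""
    for frame in frames:
        distance_m = read_distance_m()  # one sensor reading per frame (assumption)
        for label, score, x_center in detect(frame):
            if score < min_score:
                continue  # drop low-confidence detections
            message = compose_message(label, x_center, frame_width, distance_m)
            if message is not None:
                speak(message)  # e.g. hand the text to a text-to-speech engine


if __name__ == "__main__":
    run_pipeline(
        frames=[None],
        frame_width=640,
        detect=lambda frame: [("person", 0.9, 600.0), ("dog", 0.1, 100.0)],
        read_distance_m=lambda: 1.2,
        speak=print,
    )
```

Using a single distance reading for every object in the frame is a simplification; a per-object estimate would need depth data or sensors aligned with each direction.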

This project proposes a system to assist blind people in their navigation. With the proposed design and architecture, blind and visually impaired people will be able to travel independently in unfamiliar environments. Deep-learning-based object detection, assisted by various distance sensors, is used to make the user aware of obstacles and to provide safe navigation, with all information delivered to the user as audio. The proposed system is more useful and efficient than conventional ones and can provide information about the surroundings. It is practical, cost-efficient and extremely useful. To sum up, the system provides numerous additional features that competing systems do not fully provide, and it can serve as an alternative to, or a strong enhancement of, the conventional ones.

[1] Rakesh Chandra Joshi, Saumya Yadav: Efficient Multi-Object Detection and Smart Navigation Using Artificial Intelligence for Visually Impaired People, Entropy, 2020.

[2] Devashish Pradeep Khairnar, Apurva Kapse: A Visually Impaired Assistance System, 2020.

[3] Lenard Nkalubo: Integrating Computer Vision and Natural Language, Position Papers of the Federated Conference on Computer Science and Information Systems, Volume 19, 2019.

[4] B. S. Sourab, Sachith D'Souza: Design and Implementation of Mobility Aid for Blind People, International Association for Pattern Recognition, 2019.

[5] Md. Mohsinur Rahman Adnan: Design and Implementation of Smart Navigation System for Visually Impaired, International Journal of Engineering Trends and Technology, Volume 18, 2018.

[6] Deepak Gaikwad, Tejas Ladage: Blind Assist System, International Journal of Advanced Research in Computer and Communication Engineering, Volume 6, Issue 3, pp. 442-444, 2017.

[7] Joseph Redmon, Santosh Divvala: You Only Look Once: Unified, Real-Time Object Detection, University of Washington, Allen Institute for AI, 2016.

[8] Paul Ibrahim, Anthony Ghaoui: Design and Development of a Prototype Rehabilitative Shoes and Spectacles for the Blind, International Conference on Biomedical Engineering and Informatics, 2012.

[9] R. Velazquez: Wearable Assistive Devices for the Blind, LNEE 75, Springer, pp. 331-349, 2010.
