International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 09 Issue: 05 | May 2022 www.irjet.net p-ISSN: 2395-0072


1Student of computer engineering, Smt. Indira Gandhi College of Engineering, Maharashtra, India.

2Student of computer engineering, Smt. Indira Gandhi College of Engineering, Maharashtra, India.

3Student of computer engineering, Smt. Indira Gandhi College of Engineering, Maharashtra, India.

***

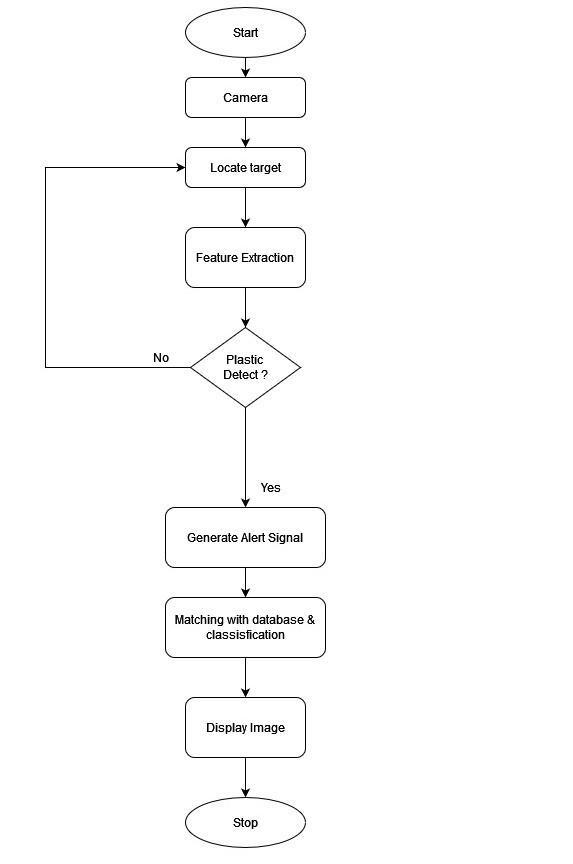

Abstract: Although plastic pollution is one of the most significant environmental issues today, there is still a gap in information about the local distribution of plastics, which is needed to prevent its negative effects and to plan mitigation measures. Plastic waste management is a global challenge. Manual waste sorting is a complex and expensive process, which is why researchers develop and study automated sorting methods that increase the efficiency of recycling. Plastic waste can be picked automatically from a sorting belt using image processing techniques and artificial intelligence, especially deep learning, to improve the recycling process. Existing waste sorting techniques and procedures are applied to broad groups of materials such as paper, plastic, metal, and glass. However, the biggest challenge is to differentiate between types of objects within a group, for example, to sort different colors of glass or types of plastic. This matters because certain types of plastic can be recycled (for example, PET can be converted into polyester material). Therefore, we must look at ways to separate this waste. One option is the use of deep learning and convolutional neural networks. In household waste, the main problem is plastic components, and the main types are polyethylene, polypropylene, and polystyrene. The main problem considered in this article is to create an automated plastic waste sorting system that can categorize waste into four categories, PET, PP, HDPE, and LDPE, and can work in a sorting plant or in a citizen's home. We propose a method that can run on mobile devices to identify waste, which can be useful in solving urban waste problems.

Plastic pollution has become one of the most important environmental problems of our time. Since the 1950s, when it was introduced as a clean and inexpensive material, plastic has replaced paper and glass in food packaging, wood in furniture, and metal in automobile production. Global plastic production has increased year on year, reaching approximately 360 million tons by 2018. Only nine percent of the nine billion tons of plastic ever produced has been recycled. Effective measures to prevent the harmful effects of plastics require an understanding of their origin, pathways, and trends.

One way to reduce waste is to recycle it. Its primary function is to maximize the reuse of the same materials while reducing processing costs. Recycling takes place in two areas: the production of goods and the subsequent generation of waste. Its assumptions include fostering appropriate attitudes among producers, associated with the production of highly recoverable materials, and encouraging appropriate behavior among recipients. Recycling waste into new products is possible, among other things, through the secondary use of raw materials combined with changes in their structure and composition. To do this, it is important to sort the waste, and not just into fractions such as metal, bio, plastic, paper, or glass. Advanced techniques are needed to separate a given type of material into individual groups, because not all of them are suitable for reuse today. For example, PET plastic is easy to reprocess and recycle. Four types of plastics dominate household waste: PET, HDPE, PP, PS. Separating them into individual types will allow some of them to be reused. One of the options is the use of computer image recognition techniques combined with artificial intelligence. We propose a method that can run on mobile devices to identify waste, which can be useful in solving urban waste problems. The device can be used both at home and at waste sorting plants; when run on a microcomputer with a small camera, it presents the result with an LED diode and the user places the waste in the appropriate bin by hand.

© 2022, IRJET | Impact Factor value: 7.529 | ISO 9001:2008

In computer vision, data sets are divided into two main parts: the training set, used to train the chosen algorithm, and the test set, used to evaluate it. The proportion chosen for the split affects the whole pipeline and is the first step in approaching this challenging task. The work presented in this report uses a 50% split in all tested data sets. In addition, each training and evaluation set is further subdivided into positive and negative examples, so that the algorithm learns what to look for in an image and what to ignore. At the end of testing, all accuracy figures must be considered together to arrive at a complete analysis of the pipeline. This requirement emphasizes that the system learns in order to be better prepared.

The project builds on a range of high-level approaches developed over the years, in order to compare and analyze the most accurate feature extractors for classification and to provide up-to-date testing of advanced and effective ideas for the task of classifying materials. Our research objective is to develop a better understanding of the best-suited techniques for the segregation process, to conduct a comprehensive overview of nine CNN architectures, including the latest models, and to compare and analyze the features that lead to better classification of the data, with attention to material separation and how it affects the whole system. The focus of this work is on obtaining high-quality classification of input images into 4 plastic classes.

Abdellah El zaar, Ayoub Aoulalay, Nabil Benaya, Abderrahim El mhouti, Mohammed Massar, and Abderrahim El allati examined how plastic materials accumulate in the Earth's environment and harm wildlife. In many cases, especially in developing countries, plastic waste is dumped into rivers, streams, and oceans, and large amounts of plastic end up at landfills and uncontrolled dumping sites. In their work, they use the power of deep learning in image processing and classification to identify plastic waste. The aim is to recognize the texture of plastic and plastic objects in images in order to reduce plastic waste at sea and to facilitate waste management. They assume that the VGGNet model trained on ImageNet has learned a good image representation and that the learned weights can be reused in other tasks such as waste classification. Two methods are used: in the first, the CNN pre-trained on ImageNet serves as a feature extractor and an SVM classifier is then trained on the extracted features; the second strategy is based on fine-tuning the pre-trained CNN model. The approach was trained and tested using two challenging data sets, one a recognition data set and the other an object detection data set, and it achieves very satisfactory results with both deep learning strategies for plastic and natural materials, especially in the deep sea. The two data sets were used to evaluate and improve the effectiveness of the approach. The proposed method achieves high accuracy and can be used with challenging data sets.

In this system, dry-waste bottles are classified according to the characteristics of their class using a deep learning algorithm. Convolutional Neural Networks (CNNs) are used to classify plastic bottles into various classes such as water, juice, and syrup bottles. Three types of plastic are considered, PP, HDPE, and PET, and these types are used for sorting. Feature extraction is used to identify the characteristics of an object in the image and to compute its feature vector. Images of the recyclable material were collected in three data sets: Polypropylene, Polyethylene Terephthalate, and High Density Polyethylene, each containing 900 images. The CNN consists of a series of convolutional layers, max pooling layers, and activation layers, with each layer connected to the preceding one. There are 6 types of bottles in each class, resulting in 16,200 images (3 classes × 6 bottle types × 900 images, which suggests that the figure of 900 applies per bottle type). A technique called Speeded Up Robust Features (SURF) was used to extract image features in three steps: extraction, description, and matching.

In the proposed system, an RGB camera and a small computer with computer vision software are used to analyze plastic waste. The home version will include a Raspberry Pi computer that recognizes objects and lets the user place the waste in the matching container. With 15 layers, this network uses standard functions, which means fewer features will be used for recognition. With images of 120 × 120 pixels, the network performs better than a 23-layer network with 227 × 227 pixel images. Using the WaDaBa database, the researchers used five data sets containing 2,000 images each, 10,000 images in total. A Convolutional Neural Network (CNN) is a mathematical model of an artificial neural network. The proposed experiments achieved an average efficiency of 74%, with FRR = 10% and FAR = 16%.
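The two error rates quoted above can be made concrete with a short sketch. It assumes the usual accept/reject definitions, which the text does not spell out: FAR is the share of samples that do not belong to a class but are accepted as it, and FRR is the share of samples that do belong to the class but are rejected. The function name `far_frr` and the toy labels are hypothetical.

```python
def far_frr(y_true, y_pred, target):
    """Return (FAR, FRR) for one target class, treated as accept/reject.

    Assumed definitions (not taken from the paper):
      FAR = wrongly accepted non-target samples / all non-target samples
      FRR = wrongly rejected target samples / all target samples
    """
    genuine = [p for t, p in zip(y_true, y_pred) if t == target]
    impostor = [p for t, p in zip(y_true, y_pred) if t != target]
    false_rejects = sum(1 for p in genuine if p != target)
    false_accepts = sum(1 for p in impostor if p == target)
    frr = false_rejects / len(genuine) if genuine else 0.0
    far = false_accepts / len(impostor) if impostor else 0.0
    return far, frr


# Toy labels for illustration only.
y_true = ["PET", "PET", "PET", "PET", "PP", "PP", "HDPE", "HDPE", "PS", "PS"]
y_pred = ["PET", "PET", "PET", "PP", "PET", "PP", "HDPE", "HDPE", "PS", "PS"]
far, frr = far_frr(y_true, y_pred, "PET")
print(f"FAR = {far:.3f}, FRR = {frr:.3f}")
```

In this toy case one of four PET samples is rejected and one of six non-PET samples is accepted as PET.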

The contribution of this research article is to conduct a large number of experimental tests on training and evaluation data sets, to improve understanding of the best way to classify objects, and to consider whether transfer learning can improve outcomes on the selected data. Because the system returns a ranked list of scores and items, a good summary of the precision-recall curve is needed. A good classifier ranks the relevant images at the top of the returned list. The main summary measure for precision-recall is average precision (AP), which is used on three of the four selected data sets. Compared with a full precision-recall analysis, average precision is simpler, as it returns a single number that reflects the performance of the classifier.
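As an illustration of how average precision condenses a ranked list into a single number, the following minimal sketch uses the common non-interpolated definition: the mean of precision@k over the ranks k that hold a relevant item. The paper does not state which AP variant it uses, so this choice, the function name, and the toy scores are assumptions.

```python
def average_precision(scores, relevant):
    """Non-interpolated AP of a ranking.

    scores   -- classifier scores, higher means ranked earlier
    relevant -- booleans, True where the item truly belongs to the class
    """
    ranked = sorted(zip(scores, relevant), key=lambda pair: pair[0], reverse=True)
    hits = 0
    precisions = []
    for rank, (_, is_relevant) in enumerate(ranked, start=1):
        if is_relevant:
            hits += 1
            precisions.append(hits / rank)  # precision at this rank
    return sum(precisions) / len(precisions) if precisions else 0.0


# Toy ranking: relevant items sit at ranks 1 and 3.
scores = [0.9, 0.8, 0.7, 0.6, 0.5]
relevant = [True, False, True, False, False]
ap = average_precision(scores, relevant)
print(f"AP = {ap:.4f}")
```

Here the precisions at the two relevant ranks are 1/1 and 2/3, so AP is their mean.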

We faced many challenges while collecting data. Data for certain categories was not available online, so we collected samples from local stores, plastic trash, and similar sources. A total of 720 photographs of local plastic items were taken with mobile phones, covering four classes (labels). The images were captured against a white background, and the necessary pre-processing steps were applied. The images are used for the analysis and classification of waste. The data is almost equally divided among the four classes, which helps to reduce data bias. The total number of images is given in Table I. The next step after data collection is the pre-processing needed to clean the data. Various pre-processing steps can be used to clean the data and make it ready to enter the network. Real-world data is unstructured and contains a lot of noise. Image data feeds the network without performing the first step of the data
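The text does not list the individual pre-processing steps. As a hedged illustration, the sketch below shows two steps that are commonly applied before images enter a network: resizing to a fixed size and scaling pixel values to the range [0, 1]. Both steps, the helper names, and the tiny grayscale image are assumptions for illustration, not the authors' pipeline.

```python
def resize_nearest(image, out_h, out_w):
    """Nearest-neighbour resize of a 2-D list of pixel values."""
    in_h, in_w = len(image), len(image[0])
    return [
        [image[r * in_h // out_h][c * in_w // out_w] for c in range(out_w)]
        for r in range(out_h)
    ]


def scale_to_unit(image):
    """Map 8-bit intensities (0-255) to floats in [0, 1]."""
    return [[pixel / 255.0 for pixel in row] for row in image]


# A 4x4 toy grayscale image: white (255) and black (0) blocks.
raw = [
    [255, 255, 0, 0],
    [255, 255, 0, 0],
    [0, 0, 255, 255],
    [0, 0, 255, 255],
]
small = scale_to_unit(resize_nearest(raw, 2, 2))
print(small)
```

In practice an image library would handle colour channels and interpolation; the pure-Python version only makes the two operations explicit.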

Feature extraction is performed to extract interesting features from new samples using the representations learned by a previously trained network. We took the convolutional base of a pre-trained network, ran the new data through it, and then trained a new classifier on top of the model. In the feature extraction process, we remove the last three layers of the pre-trained network, add our own fully connected layers, and train them on our data set.

II. Fine tuning

Another method of reusing a model, complementary to feature extraction, is called fine tuning. All layers except the last ones are frozen, and a custom classifier is built on top. At the end of the network, two dense layers with the ReLU activation function are added, followed by a final layer with the Softmax activation function. The convolutional layers and the newly added classifier are then trained jointly, which improved the performance of the model after fine tuning.
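The classifier head described above (two dense ReLU layers followed by a Softmax output) can be sketched as a forward pass in plain Python. The frozen convolutional base is represented only by its output feature vector. The layer sizes, the random initial weights, and all names are assumptions chosen to keep the example small; they are not the dimensions used in the paper.

```python
import math
import random


def dense(inputs, weights, biases):
    """Fully connected layer: one weighted sum plus bias per output unit."""
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]


def relu(values):
    return [max(0.0, v) for v in values]


def softmax(values):
    peak = max(values)  # subtract the maximum for numerical stability
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def init_layer(rng, n_out, n_in):
    weights = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(n_out)]
    return weights, [0.0] * n_out


rng = random.Random(0)
FEATURES, HIDDEN, CLASSES = 8, 6, 4  # assumed sizes; 4 plastic classes
w1, b1 = init_layer(rng, HIDDEN, FEATURES)
w2, b2 = init_layer(rng, HIDDEN, HIDDEN)
w3, b3 = init_layer(rng, CLASSES, HIDDEN)


def classify(frozen_features):
    """Head only: the frozen base is assumed to have produced the features."""
    hidden = relu(dense(frozen_features, w1, b1))
    hidden = relu(dense(hidden, w2, b2))
    return softmax(dense(hidden, w3, b3))


features = [rng.random() for _ in range(FEATURES)]  # stand-in for base output
probabilities = classify(features)
print(probabilities)
```

During fine tuning only the head weights (and any unfrozen final convolutional layers) would receive gradient updates; the sketch omits training and shows the data flow only.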

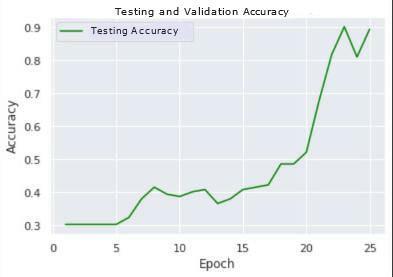

The research consisted of training the prepared networks and determining the classification accuracy for different divisions of the data between training and testing. The data were prepared in four splits: 90% (training data) / 10% (test data), 80% / 20%, 70% / 30%, and 60% / 40%.
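The four train/test proportions can be produced with a small splitting routine. The sketch below splits each class separately (a stratified split) so that class balance is preserved; whether the authors stratified their splits is not stated, and the even count of 180 images per class is an assumption derived from 720 images in four nearly equal classes.

```python
import random
from collections import defaultdict


def stratified_split(labels, train_fraction, seed=0):
    """Return (train_indices, test_indices), splitting each class separately."""
    by_class = defaultdict(list)
    for index, label in enumerate(labels):
        by_class[label].append(index)
    rng = random.Random(seed)
    train, test = [], []
    for indices in by_class.values():
        rng.shuffle(indices)
        cut = round(len(indices) * train_fraction)
        train.extend(indices[:cut])
        test.extend(indices[cut:])
    return sorted(train), sorted(test)


# Assumed layout: 4 classes x 180 images = 720 images.
labels = [name for name in ("PET", "PP", "HDPE", "LDPE") for _ in range(180)]
splits = {f: stratified_split(labels, f) for f in (0.9, 0.8, 0.7, 0.6)}
for fraction, (train, test) in splits.items():
    print(f"{fraction:.0%} train: {len(train)} images, test: {len(test)} images")
```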

The networks were trained on the data sets described above. Training was carried out for two networks, with two input image sizes, at resolutions of 156 × 156 and 256 × 256 pixels. Training used a variable learning rate, starting from 0.001 and decreasing every 4 epochs, with 1064 iterations per epoch. The tests showed the best accuracy and loss, obtained over successive iterations, for the 90% / 10% split with an input image resolution of 156 × 156 pixels; the charts were made after 10 epochs. We used 500 images for training and 168 images for testing accuracy.
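A learning rate that starts at 0.001 and is lowered every 4 epochs corresponds to a step-decay schedule. The sketch below is a minimal version; the decay factor of 0.1 is an assumption, since the text gives only the starting value and the interval.

```python
def step_decay(epoch, initial_rate=0.001, drop_every=4, factor=0.1):
    """Learning rate for a zero-based epoch index under step decay.

    factor=0.1 is an assumed value; the source states only the initial
    rate (0.001) and the interval (every 4 epochs).
    """
    return initial_rate * factor ** (epoch // drop_every)


schedule = [step_decay(epoch) for epoch in range(10)]
for epoch, rate in enumerate(schedule):
    print(f"epoch {epoch}: learning rate {rate:.6f}")
```

Under these assumptions, epochs 0 to 3 use 0.001, epochs 4 to 7 use 0.0001, and epochs 8 and 9 use 0.00001.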

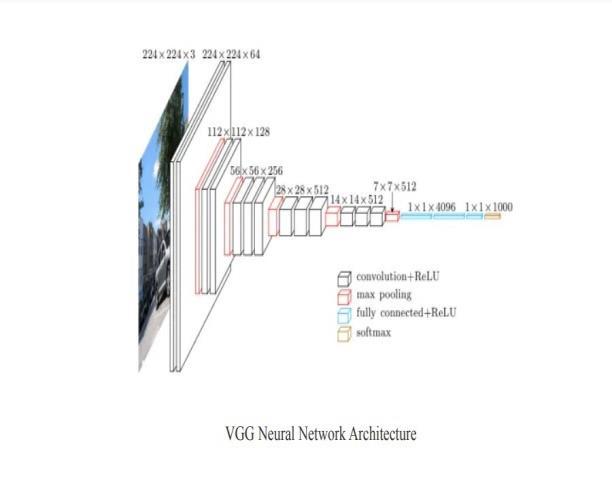

VGG stands for Visual Geometry Group; it is a standard deep Convolutional Neural Network (CNN) architecture with multiple layers. "Deep" refers to the number of layers, with VGG-16 and VGG-19 consisting of 16 and 19 layers respectively. The VGG architecture serves as the basis of the underlying models. Developed as a deep neural network, VGGNet also surpasses baselines on many tasks and data sets beyond ImageNet. In addition, it is now one of the most popular image recognition architectures.

Architecture:

Input: VGGNet takes an input image of size 224 × 224. In the ImageNet competition, the creators of the model cropped a 224 × 224 center patch from each image to keep the input size consistent.

Convolutional Layers: The VGG convolutional layers use a small receptive field, i.e., 3 × 3, the smallest possible size that still captures up/down and left/right. In addition, there are 1 × 1 convolution filters that act as a linear transformation of the input. Each is followed by a ReLU unit, an innovation from AlexNet that reduces training time. ReLU stands for the rectified linear unit activation function; it is a piecewise linear function that outputs the input if it is positive and zero otherwise. The convolution stride is fixed at 1 pixel to preserve the spatial resolution after convolution (the stride is the number of pixels the filter shifts over the input matrix).

Hidden Layers: All hidden layers in the VGG network use ReLU. VGG rarely uses Local Response Normalization (LRN), as it increases memory usage and training time. Moreover, it does not improve overall accuracy.

Fully Connected Layers: VGGNet has three fully connected layers. Of the three, the first two have 4096 channels each, and the third has 1000 channels, 1 per class.

Fig. No. 2: VGG neural network
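The effect of the stride on spatial resolution can be checked with the standard output-size formula for a convolution, out = (in + 2·padding − kernel) / stride + 1, shown here together with ReLU. The padding of 1 pixel for the 3 × 3 filters is an assumption added for the example, since the text above mentions only the stride.

```python
def conv_output_size(size, kernel, stride=1, padding=0):
    """Spatial size after a convolution along one dimension."""
    return (size + 2 * padding - kernel) // stride + 1


def relu(x):
    """Rectified linear unit: pass positive inputs, output zero otherwise."""
    return x if x > 0 else 0.0


# 3x3 filter, stride 1, assumed padding 1: resolution is preserved.
same_3x3 = conv_output_size(224, kernel=3, stride=1, padding=1)
# 1x1 filter, stride 1, no padding: resolution is preserved as well.
same_1x1 = conv_output_size(224, kernel=1, stride=1, padding=0)
# Without padding a 3x3 filter would shrink the map by 2 pixels.
shrunk = conv_output_size(224, kernel=3, stride=1, padding=0)

print(same_3x3, same_1x1, shrunk)
print(relu(-2.5), relu(3.0))
```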

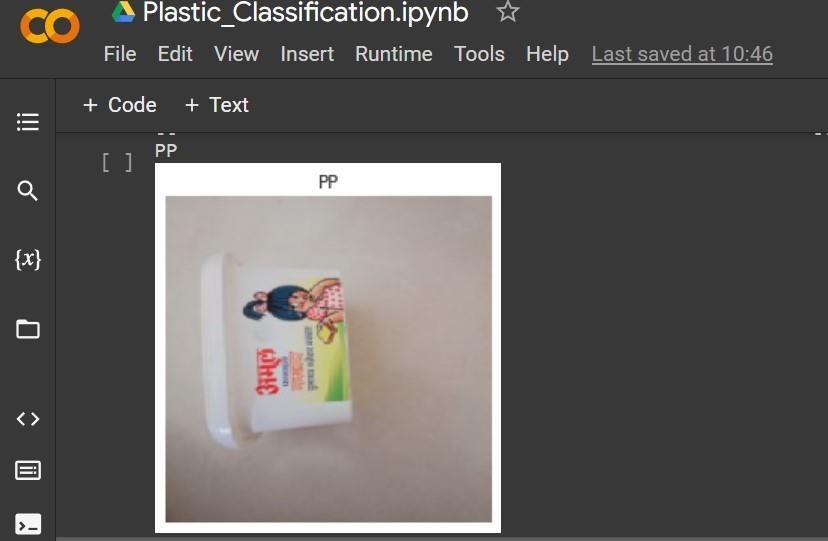

Colab Notebook: Jupyter notebooks that run in the cloud and are highly integrated with Google Drive, making them easy to set up, access, and share. They are suited to machine learning, data analysis, and education.

We started our project with the YOLO model. The YOLO model is able to detect plastic material, but it is not able to classify plastic into its types. For classification we started working with the VGG16 model. Analyzing the test results, it can be seen that, in the case of our 15-layer network with 120 × 120 images, 4 epochs are sufficient to reach a tolerable level. Further training with a lower learning rate does not yield significant gains. An accuracy of 77% after 4 epochs is a good result. Continued training up to 25 epochs increases accuracy to almost 100%. In the case of 256 × 256 pixels, the computation time doubled and the accuracy reached 91.72%. This is not acceptable in a system operating in the real world [19-21]. In the case of the 23-layer network, the learning process was different. The network achieved 99.23% accuracy in the first data split with images of 256 × 256 pixels.

Plastic Detection:


In conclusion, we have developed a plastic separation system that is able to classify different pieces of plastic into types and risks using a deep learning neural network. This system can be used to separate waste automatically, helping to reduce human intervention and to prevent infection and contamination. If more images are added to the database, system accuracy can be improved. In the future, we intend to improve our system so that it can differentiate many more waste products by changing some of the parameters used.

1. A Deep Learning Approach to Manage and Reduce Plastic Waste in the Ocean. E3S Web of Conferences 336, 00065 (2022), ICEGC'2021. https://doi.org/10.1051/e3sconf/202233600065

2. Automatic Plastic Waste Segregation And Sorting Using Deep Learning Model. https://www.researchgate.net/publication/349683316_Automatic_Plastic_Waste_Segregation_And_Sorting_Using_Deep_Learning_Model

3. Deep Learning for Plastic Waste Classification System. Volume 2021, Article ID 6626948, 7 pages. https://doi.org/10.1155/2021/6626948

4. Plastics Europe. Available online: https://www.plasticseurope.org/application/files/9715/7129/9584/FINAL_web_version_Plastics_the_facts2019_14102019.pdf (accessed on 27 April 2020).

5. United Nations Environment Program. Available online: https://www.unenvironment.org/news-and-stories/press-release/un-declares-war-ocean-plastic-0 (accessed on 27 April 2020).

6. United Nations Environment Program. The state of plastic. Available online: https://wedocs.unep.org/bitstrea

7. Jambeck, J.R.; Hardesty, B.D.; Brooks, A.L.; Friend, T.;

8. The Guardian. Available online: https://www.theguardian.com/science/2017/nov/05/terrawatch-the-rivers-taking-plastic-to-the-oceans (accessed on 27 April 2020).

9. Eriksen, M.; Lebreton, L.C.M.; Carson, H.S.; Thiel, M.; Moore, C.J.; Borrero, J.C.; Galgani, F.; Ryan, P.G.; Reisser, J. Plastic pollution in the world's oceans: More than 5 trillion plastic pieces weighing over 250,000 tons afloat at sea. PLoS ONE 2014, 9, e111913.

10. Jambeck, J.R.; Geyer, R.; Wilcox, C.; Siegler, T.R.; Perryman, M.; Andrady, A.; Narayan, R.; Law, K.L. Plastic waste inputs from land into the ocean. Science 2015, 347, 768-771.

11. OSPAR Commission. Guideline for Monitoring Marine Litter on the Beaches in the OSPAR Monitoring Area. Available online: https://www.ospar.org/documents?v=7260 (accessed on 22 April 2020).

12. Hardesty, B.D.; Lawson, T.J.; van der Velde, T.; Lansdell, M.; Wilcox, C. Estimating quantities and sources of marine debris at a continental scale. Front. Ecol. Environ. 2016, 15, 18-25.

13. Opfer, S.; Arthur, C.; Lippiatt, S. NOAA Marine Debris Shoreline Survey Field Guide, 2012. Available online: https://marinedebris.noaa.gov/sites/default/files/ShorelineFieldGuide2012.pdf (accessed on 25 April 2020).

14. Cheshire, A.C.; Adler, E.; Barbière, J.; Cohen, Y.; Evans, S.; Jarayabhand, S.; Jeftic, L.; Jung, R.T.; Kinsey, S.; Kusui, E.T.; et al. UNEP/IOC Guidelines on Survey and Monitoring of Marine Litter. UNEP Regional Seas Reports and Studies 2009, No. 186; IOC Technical Series No. 83: xii + 120 pp. Available online: https://www.nrc.govt.nz/media/10448/unepioclittermonitoringguidelines.pdf (accessed on 25 April 2020).

15. Ribic, C.A.; Dixon, T.R.; Vining, I. Marine Debris Survey Manual. Noaa Tech. Rep. Nmfs 1992, 108, 92.

16. Kooi, M.; Reisser, J.; Slat, B.; Ferrari, F.F.; Schmid, M.S.; Cunsolo, S.; Brambini, R.; Noble, K.; Sirks, L.A.; Linders, T.E.W.; et al. The effect of particle properties on the depth profile of buoyant plastics in the ocean. Sci. Rep. 2016, 6, 33882.
