Birds Identification System using Deep Learning

Abstract

Many bird species are disappearing or threatened with extinction today. Many of these species are rarely seen, and distinguishing bird species is difficult because birds vary in size and color and are viewed by humans from different angles.

Although humans find it easy to spot a bird in a picture, telling one species from another is much harder. In this study we focused on creating a model that can distinguish different species of birds using only their images. For this, we used a deep convolutional neural network (DCNN) based on the ResNet 18 model. Transfer learning with ResNet 18 improved the accuracy of our model.

We used the Caltech UCSD Birds 200 dataset [CUB 200 2011] [1] for both training and testing. Training and testing of the DCNN were performed on Google Colab using the PyTorch library developed by Facebook. The final results show that with transfer learning we achieved a test accuracy of 78.8 percent.

Keywords: Convolutional neural network (CNN), ResNet 18, Inception V3, VGGNet, Transfer Learning.

1. INTRODUCTION

Everyday life is often fast, busy, and filled with many activities. Bird watching is a hobby that offers a break from everyday life and can help people face daily challenges. It can also provide health benefits and the joy of being in nature. Many people visit bird sanctuaries to see different species of birds and their beautiful feathers, observing the differences between species and their characteristics. Understanding such differences can enhance our knowledge of rare birds and their ecosystems. However, because of observer constraints such as location, distance, and equipment, how a bird appears to the observer depends on several factors.

As we all know, birds play a key role in maintaining environmental balance. The presence of different species of birds in the natural environment is important for a variety of environmental reasons. Studying birds can tell us a lot about the world and the environment and can help capture important information about nature. Environmental scientists often use birds as indicators of climate change, because birds are sensitive to environmental changes and can be used to understand the ecosystem around us. Various real-world applications rely on birds, such as land pollution monitoring. This is another area where our classification system can be used.

However, collecting information about birds requires a great deal of human effort and is very expensive. A robust approach is therefore needed that can process bird information comprehensively and serve as a tool for professionals, agencies, and others. Similarly, the characteristics of a species play an important role in determining the type of bird. Ornithologists (scientists who study birds) have struggled for decades to distinguish between different species of birds. To do so, they must learn many details about the birds, such as their climate, genetics, and distribution. Even professional birdwatchers sometimes disagree about which species a given bird belongs to.

This is where machine learning and artificial intelligence come in. Self-driving cars and Siri are examples of machine learning and artificial intelligence used in the real world. So why not apply artificial intelligence to bird watching? Machine learning, artificial intelligence, and mathematical modeling can help identify many species of birds from their pictures. Image classification has become popular and is now one of the main fields of machine learning and deep learning. Identifying birds from an image is challenging because bird species vary greatly in shape and appearance, and because of background clutter, lighting conditions in photos, and extreme variation in pose. In our project, we have tried to classify images of different species of birds using Deep Convolutional Neural Networks (DCNN) and transfer learning.

2. LITERATURE REVIEW

The studies [2,3] have focused on transfer learning techniques for classification. Currently, CNNs are capable of analyzing birds from different angles and positions and have an accuracy of about 85%.

Recently, there has been some development in visual categorization methods for species identification [4], which extends their application to many domains. [5] presents an approach for generic object recognition.

In [6], Fagerlund and Härmä developed signal processing techniques using Mel Frequency Cepstral Coefficients (MFCCs). They achieved an accuracy of 71.3%.

Vilches et al. [7] considered the identification of distinctive features to be crucial and therefore used data mining algorithms such as ID3, J4.8, and Naïve Bayes.

[8] employs a global decision tree with a Support Vector Machine (SVM) classifier at each node to separate two species. A. Marini [9] identified species using both visual and vocal features of the bird, using the CUB 200 2011 dataset and taking the audio samples from the Xeno Canto dataset.

The part-based R-CNN of Zhang et al. [12] and the bilinear CNN of Lin et al. employed a two-stage pipeline of part detection and part-based object classification.

[12] trains individual CNNs on unique properties by detecting the locations of parts of the object, making it more efficient than R-CNN. On the other hand, Lin et al. [13] proposed a bilinear model having two streams with interchangeable roles.

The authors of [14] developed a model to detect almost 200 types of objects (birds) with an accuracy of 71.5%, using the CUB 200 2011 dataset. In [15], the authors used a region-based CNN method (Faster R-CNN) that detects birds based on the full image. The results show that Faster R-CNN works well compared to other CNN models. In [16], Faster R-CNN is improved using convolution and filter pruning techniques, and the reported results improve considerably.

In [17], a cascaded convolutional neural network for object detection is introduced. It uses a three-level deep CNN that performs object detection in a coarse-to-fine manner. In [18], the authors used a CNN with transfer learning to detect areas where snow is visible, using publicly available CCTV footage from a Japanese website.

In [19], the authors used transfer learning to provide coarseness to the system and showed that a granular network is more effective. For this, they used the COCO image dataset. In [20], the authors evaluated R-CNN and SSD for detecting objects in manga (Japanese comics) and showed that Fast R-CNN works well for character face and text detection. In [21], 360-degree panoramic images are used, and post-processing is applied in the model to fine-tune the overall result.

In [22], the authors worked on the detection of harmful objects with the TensorFlow API and used the Faster R-CNN algorithm for the experiment.

PROBLEM STATEMENT

Many species of birds are extinct or endangered, and many birds that were once easily identified are rarely seen today, which is what motivated this topic. Humans cannot tell every species of bird apart because many species look similar. Identifying bird species can be a challenge for humans, which is why we use algorithms to perform the task for us.

The dataset was released in 2010 and contained about 6,000 photographs of 200 classes of birds. It came with additional label data, including bounding boxes, rough segmentations, and additional attributes. It was revised in 2011 to add more images, bringing the total number of images in the dataset to about 12,000. The available annotations were also updated to include 15 part locations, 312 binary attributes, and a bounding box for each image. For the most part, we simply use the pictures and class labels to develop and train bird prediction networks.

PROBLEM OBJECTIVE

In this project, we have used a deep convolutional neural network to classify images of birds. The bird species classifier is trained and tested using the Caltech UCSD Birds 200 dataset. We applied Deep Convolutional Neural Networks (DCNN) to images converted into greyscale format to generate an autograph using the PyTorch library, in which multiple nodes are generated. These nodes are then compared with the testing dataset, and the scores are saved in a sheet. After analyzing this score sheet, we used the algorithm with the highest prediction accuracy in our desktop application.

The major objectives of this model are:

• To recognize birds using Deep Convolutional Neural Networks and image morphology.

• To develop a real-time application that can be used to detect various species of birds.

• To display all the information related to the bird.

The block diagram represents our approach to the problem. The description of each block of the diagram is given below:

• Image: In this step, the input image is taken from the user and fed to the trained system.

• Training Image set: This is the part of the dataset used to train the model. It can contain around 7000 images of 200 species of birds, which are used to train the CNN.

• Pre-processing: This step processes the image according to the algorithm's needs. It aims to remove unwanted distortions and enhance the image so that our model can benefit from the improved data.

• Feature Extraction: This is a process of dimensionality reduction in which a set of raw data is reduced to data that can be used for processing. In other words, it aims to extract the meaningful data from the image without losing important information.

• Convolutional Neural Network: As mentioned above, this is a deep learning model used as an image classifier to classify images based on their features.

• Web scraping: This is a technique used to extract data from websites. The data taken from the websites is stored in a database, which is later used to retrieve all the information about the birds.

• Output: The output contains all the data related to the species of the bird.

Working

a) The user provides an image of the bird in order to identify the name and other details of its species.

b) The image is transformed and analyzed using the Deep Convolutional Neural Network, and the class of the bird is predicted.

c) This class is used to retrieve the details of the bird.

d) The name and other details are displayed on the user's screen.
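The four steps above can be sketched as a small pipeline. This is only an illustration under stated assumptions: `classify`, `BIRD_INFO`, and `identify_bird` are hypothetical names, the classifier is a stub that returns a fixed label, and the description text is a placeholder rather than real data.

```python
from dataclasses import dataclass

# Hypothetical stand-in for the bird description database.
BIRD_INFO = {
    "Painted Bunting": {"description": "placeholder description text"},
}


@dataclass
class Prediction:
    species: str
    confidence: float


def classify(image_bytes: bytes) -> Prediction:
    """Stub for the trained DCNN; a real model would inspect the image."""
    return Prediction(species="Painted Bunting", confidence=0.91)


def identify_bird(image_bytes: bytes) -> dict:
    # (a) the user supplies an image; (b) the classifier predicts a class
    prediction = classify(image_bytes)
    # (c) the predicted class is the key used to look up the details
    details = BIRD_INFO.get(prediction.species, {})
    # (d) everything the user interface needs is returned in one record
    return {
        "species": prediction.species,
        "confidence": prediction.confidence,
        **details,
    }


result = identify_bird(b"fake image bytes")
print(result["species"], result["description"])
```

Keeping the classifier and the lookup behind separate functions mirrors the module split described in the next section, so either part could be replaced without touching the other.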

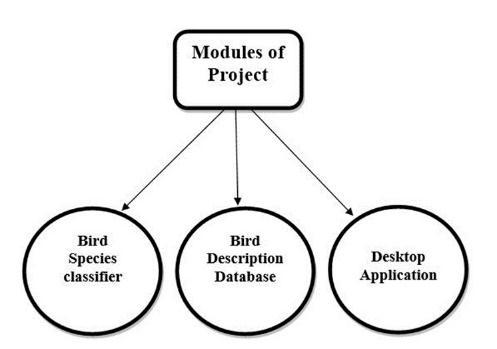

4. PROJECT MODULE

There are three modules in our project, which are used to develop the application for bird detection. They are:

Bird Species Classifier

Bird Description Database

Desktop Application

Bird Species Classifier - Under this module, we perform the operations our application needs to classify the various birds: image preprocessing, feature extraction, and applying a convolutional neural network (CNN) to classify the image. In the image preprocessing step, we improve the image by suppressing unwanted distortions and enhancing important image features so that our models can benefit from the improved data and give better accuracy. This includes steps such as resizing the image, denoising, segmentation, and morphology, after which we feed the image to the model. Next, in the feature extraction step, we reduce the dimensionality of the image by extracting its meaningful features without losing important information. Finally, we apply the CNN model to analyze our images; once trained, it can classify the various species into different classes. As defined before, a CNN is a deep learning algorithm that takes an input image and differentiates images based on various aspects or objects in them.

Bird Description Database - Image classification requires a large dataset of images with clear distinctions among classes. The classes are the categories into which individual images are sorted based on their features. The dataset used by us is Caltech UCSD Birds 200, released in 2011. It is the successor of the CUB 200 dataset and contains almost double the number of images per class. The CUB 200 dataset released in 2010 has around 6,000 images of 200 bird species, whereas the dataset we used has 11,788 images of 200 bird species, at a rate of about 60 images per species.

Images in the dataset are organized into subdirectories based on their species. Each image has 15 part locations and 312 binary attribute labels. The dataset consists mostly of North American bird species.

We used this dataset because its size suits our development: it contains enough images for the model to be trained to good accuracy, yet it is not so large that training would require high computing resources and be very time-consuming.

5. RESULT ANALYSIS AND DISCUSSION

Training a new image classification model from scratch is a difficult and time-consuming task. Configuring the different layers of the model and arranging them so that they give the highest possible accuracy can take a lot of time. It also needs a lot of resources and a lot of data for training and testing the model. It is therefore difficult for everyone to build their own artificial intelligence model for every problem.

Transfer learning offers a solution to this problem. In transfer learning, a pre-trained model is applied to the current problem for classification. A model is first trained on another problem that is similar to the one to be solved, and that pre-trained model is then applied to the new problem. This helps boost the accuracy of the model and makes it fit for the classification of images. This type of training also needs much less time and fewer resources.

However, choosing the right pre-trained model for image classification is a difficult task, because every model can classify images into different classes but with different losses and different accuracies. Therefore, to find the best fit for our project, we tried and tested three different models.

Desktop Application - In this module of our project, we have developed a desktop application that takes input from the user and displays the output on the user's screen. We used Python's PyQt5 library to develop the user interface of the application. The IDE we used for development is PyCharm.

PyCharm: PyCharm is a dedicated Python Integrated Development Environment (IDE). It provides a wide range of essential tools for Python developers.

PyQt5: PyQt5 is a cross-platform GUI toolkit, a set of Python bindings for Qt version 5. Its tools and simplicity make it easy to develop interactive desktop applications. The UI of this application was made with Qt Designer, a tool available with PyQt5 for designing the front end of an application quickly and easily.

The models we used are VGGNet, ResNet, and Inception V3. These models are all trained on the ImageNet dataset and performed well in the ImageNet Large Scale Visual Recognition Challenge (ILSVRC). The dataset on which they are trained contains 1.2 million images of different objects, to be categorized into 1000 different classes. They are validated and tested on 50,000 and 100,000 images respectively. We tried all these models separately on our dataset and used the one that gave the best accuracy.

a) Image Preprocessing - Before feeding the data to the algorithms, we have to preprocess the input so that the model can learn more from the data and reach higher accuracy. We applied different preprocessing to the training and test sets.
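One preprocessing step the paper names explicitly (in the VGGNet subsection) is per-channel mean subtraction with the values [0.485, 0.456, 0.406]. The sketch below illustrates that step in plain Python. It assumes the 8-bit pixel values are first scaled to the range 0 to 1, since the quoted means are on that scale; the function names are hypothetical, and the authors' actual pipeline is not shown in the paper.

```python
# Channel means quoted in the paper for the ImageNet training set.
IMAGENET_MEAN = (0.485, 0.456, 0.406)


def normalize_pixel(rgb):
    """Scale an 8-bit RGB pixel to [0, 1] and subtract the channel means."""
    return tuple(value / 255.0 - mean for value, mean in zip(rgb, IMAGENET_MEAN))


def normalize_image(image):
    """Apply normalize_pixel to every pixel of a row-major list of rows."""
    return [[normalize_pixel(pixel) for pixel in row] for row in image]


# A 1 x 2 toy image: one black pixel and one white pixel.
tiny_image = [[(0, 0, 0), (255, 255, 255)]]
normalized = normalize_image(tiny_image)
print(normalized)
```

PyTorch pipelines commonly also divide each channel by a standard deviation after subtracting the mean; the paper does not mention this, so it is left out here.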

b) Result Analysis of Different Models - We tried three different models for training and testing on our classification problem, examining how to control model complexity for each of them and what their advantages and disadvantages are. After finding the accuracies of all three models, we chose the model that achieved the highest top 1 accuracy. We have to predict 200 different classes, or species, of birds from 11,788 images. We divided these 11,788 images into train and test folders. We trained our model on 5,990 images and then used 5,790 images for testing.
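A roughly even split per species, like the one described above, can be sketched as follows. The paper does not say how the images were assigned to the two folders, so the per-class shuffle, the 50% fraction, the seed, and the function name `split_per_class` are all assumptions made for illustration.

```python
import random
from collections import defaultdict


def split_per_class(samples, train_fraction=0.5, seed=0):
    """Split (image_path, species) pairs into train and test lists per class."""
    by_class = defaultdict(list)
    for path, species in samples:
        by_class[species].append(path)

    rng = random.Random(seed)  # fixed seed keeps the split reproducible
    train, test = [], []
    for species in sorted(by_class):
        paths = sorted(by_class[species])
        rng.shuffle(paths)
        cut = round(len(paths) * train_fraction)
        train += [(path, species) for path in paths[:cut]]
        test += [(path, species) for path in paths[cut:]]
    return train, test


# Toy data: two hypothetical species with 60 images each.
samples = [
    (f"{species}/{index:03d}.jpg", species)
    for species in ("sparrow", "warbler")
    for index in range(60)
]
train, test = split_per_class(samples)
print(len(train), len(test))
```

Splitting inside each class keeps every species represented in both folders, which matters when there are only about 60 images per species.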

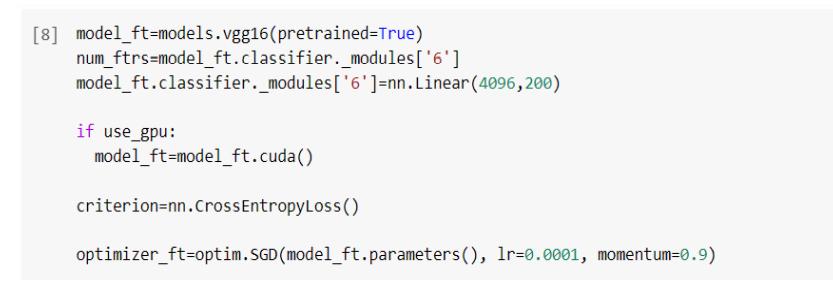

1) VGGNet - VGGNet is a convolutional neural network architecture proposed by Karen Simonyan and Andrew Zisserman in 2014. We used the VGG 16 architecture for classification. The input to the VGG convolutional network is a 224 × 224 RGB image. As a preprocessing step, we take a picture in RGB format with pixel values in the range 0 to 255 and subtract from it the average values calculated over the ImageNet training set.

The average values used for VGGNet are [0.485, 0.456, 0.406], one for each of the 3 image channels. After preprocessing, the input images pass through the weight layers. There are a total of 13 convolutional layers and 3 fully connected layers in the VGG16 architecture. VGG uses smaller filters (3 × 3) with more depth instead of larger filters. A stack of three such layers ends up with the same receptive field as a single 7 × 7 convolutional layer. Loading the model is shown below.
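As an aside on the filter-size claim above (this is not the model-loading code the paragraph refers to), the receptive-field arithmetic can be checked with a short sketch. The helper names are hypothetical, and the weight count assumes equal input and output channel counts and ignores biases.

```python
def receptive_field(kernel_sizes, strides=None):
    """Receptive field, in input pixels, of a stack of convolutional layers."""
    strides = strides or [1] * len(kernel_sizes)
    field, jump = 1, 1
    for kernel, stride in zip(kernel_sizes, strides):
        field += (kernel - 1) * jump  # each layer widens the field
        jump *= stride                # strides spread later layers further
    return field


def conv_weights(kernel, channels, layers=1):
    """Weights (no biases) of `layers` convolutions with `channels` in and out."""
    return layers * kernel * kernel * channels * channels


stacked_field = receptive_field([3, 3, 3])
single_field = receptive_field([7])
stacked_weights = conv_weights(3, 64, layers=3)
single_weights = conv_weights(7, 64)
print(stacked_field, single_field, stacked_weights, single_weights)
```

Three stacked 3 × 3 layers cover the same 7 × 7 window with fewer weights, and they apply a nonlinearity after every layer, which is the usual argument for the deeper design.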

The pre-trained VGG 16 is downloaded. Since it is trained on the ImageNet dataset, which contains 1000 output classes, its output layer is configured for those classes. So we modified the last layer of the VGG16 network according to our needs.
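The size of the change can be estimated with a few lines of arithmetic. The sketch assumes the widths of the final classifier layer found in torchvision's reference implementations (4096 inputs for VGG16, 512 for ResNet 18, 2048 for Inception V3); the paper does not state which implementation was used, so treat these widths as assumptions.

```python
def linear_params(in_features, out_features):
    """Weights plus biases of a fully connected layer."""
    return in_features * out_features + out_features


# Assumed input widths of the final layer in each architecture.
HEAD_INPUTS = {"vgg16": 4096, "resnet18": 512, "inception_v3": 2048}

head_sizes = {}
for name, width in HEAD_INPUTS.items():
    original = linear_params(width, 1000)  # ImageNet head, 1000 classes
    replaced = linear_params(width, 200)   # new head, 200 bird species
    head_sizes[name] = (original, replaced)
    print(f"{name}: {original} -> {replaced} parameters in the last layer")
```

Only this last layer has to be created from scratch; the remaining layers keep their ImageNet weights, which is what makes transfer learning cheap.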

We configured its output so that it classifies images into only 200 classes. The loss criterion we use for training our model is ‘CrossEntropyLoss’, which combines LogSoftmax and NLLLoss in a single class and is useful in classification problems. We use an SGD optimizer for our model with a learning rate of 0.0001 and a momentum of 0.9.
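What the loss and optimizer settings above compute can be written out in plain Python. This is a minimal sketch, not the authors' training code: the function names are hypothetical, the loss handles a single sample, and the update follows the momentum form documented for PyTorch's SGD (velocity = momentum × velocity + gradient, then parameter = parameter − learning rate × velocity) with no dampening or weight decay.

```python
import math


def log_softmax(logits):
    """Numerically stable log of the softmax probabilities."""
    peak = max(logits)
    log_total = peak + math.log(sum(math.exp(z - peak) for z in logits))
    return [z - log_total for z in logits]


def cross_entropy(logits, target):
    """Negative log-likelihood of the target class after log-softmax."""
    return -log_softmax(logits)[target]


def sgd_momentum_step(params, grads, velocity, lr=0.0001, momentum=0.9):
    """One SGD-with-momentum update using the paper's hyperparameters."""
    new_velocity = [momentum * v + g for v, g in zip(velocity, grads)]
    new_params = [p - lr * v for p, v in zip(params, new_velocity)]
    return new_params, new_velocity


# An untrained 200-way classifier with equal scores has a loss of ln(200).
uniform_loss = cross_entropy([0.0] * 200, target=5)
loss = cross_entropy([2.0, 1.0, 0.1], target=0)

# Two updates on a single toy parameter with a constant gradient of 0.5.
params, velocity = sgd_momentum_step([1.0], [0.5], [0.0])
params, velocity = sgd_momentum_step(params, [0.5], velocity)
print(uniform_loss, loss, params, velocity)
```

With a learning rate this small each step moves the weights very little, which suits fine-tuning a pre-trained network where the existing weights are already useful.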


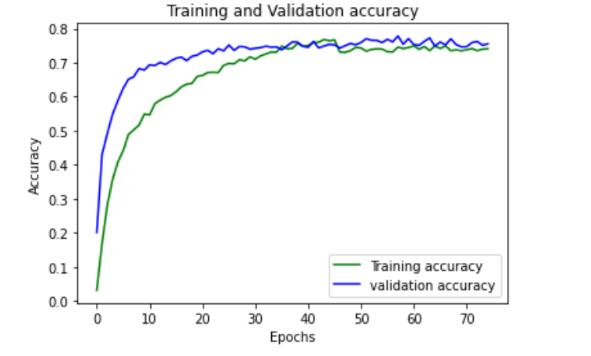

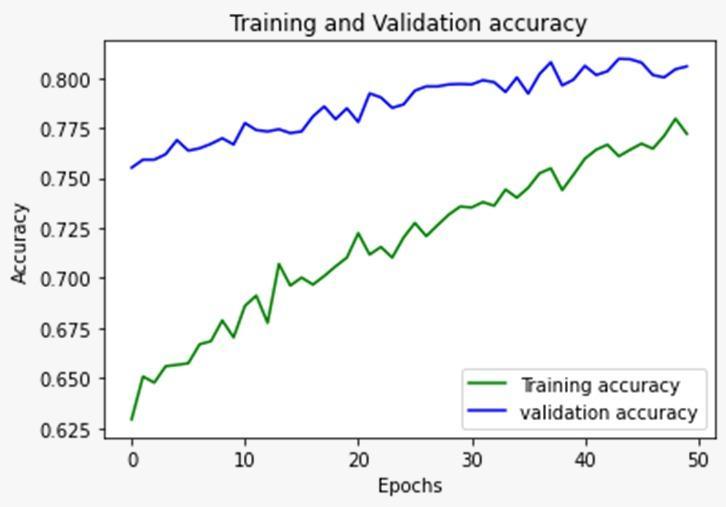

Fig.3

The above graph depicts the accuracy of the model at every epoch; the model is tested after every training epoch.

The top 1 and top 5 accuracies of the model are:

Top1 76.12

Top5 93.81
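For readers unfamiliar with the metric, top k accuracy counts a prediction as correct when the true class is among the k highest-scoring classes. The sketch below computes it on a toy example; the scores and labels are made up for illustration and are unrelated to the results reported here.

```python
def top_k_accuracy(scores, labels, k):
    """Percentage of samples whose true label is among the k highest scores."""
    hits = 0
    for row, label in zip(scores, labels):
        ranked = sorted(range(len(row)), key=lambda c: row[c], reverse=True)
        hits += label in ranked[:k]
    return 100.0 * hits / len(labels)


# Four toy samples over three classes.
scores = [
    [0.1, 0.7, 0.2],  # true class 1 is ranked first
    [0.5, 0.3, 0.2],  # true class 1 is ranked second
    [0.6, 0.3, 0.1],  # true class 2 is ranked third
    [0.2, 0.2, 0.6],  # true class 2 is ranked first
]
labels = [1, 1, 2, 2]

top1 = top_k_accuracy(scores, labels, k=1)
top2 = top_k_accuracy(scores, labels, k=2)
print(top1, top2)
```

Top 5 accuracy is always at least as high as top 1 accuracy, which is why both are usually reported for fine-grained tasks with many similar classes.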

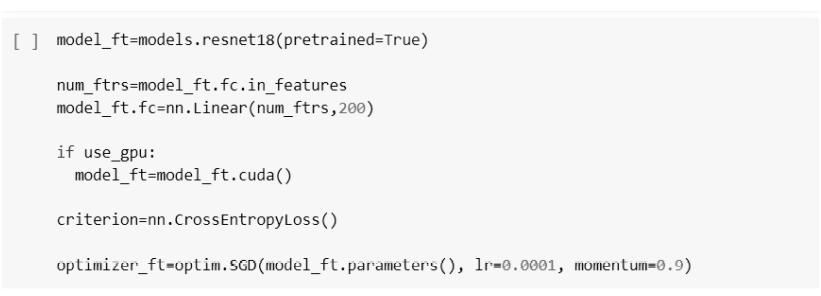

2) ResNet 18 - ResNet stands for Residual Network. ResNet 18 is a CNN model that uses skip connections, or shortcuts, to jump over some layers, which makes it possible to build deeper neural networks. The 18 in the name is the number of layers it contains, which means it is an 18-layer-deep neural network. ResNet addresses a major problem in the neural network field: as the depth of a network increases, its accuracy decreases, exposing a degradation problem. ResNet solves this problem by using shortcuts to jump over some layers. It is also trained on the ImageNet dataset with 1000 classes, so we have to modify its last layer as well, as shown in the figure below:

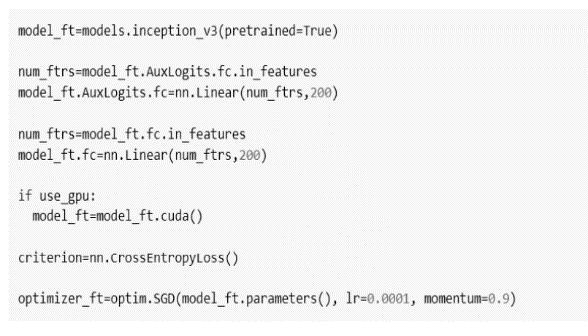

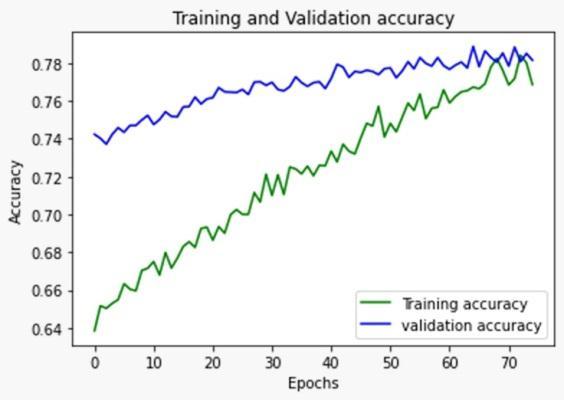
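The skip connections described for ResNet 18 can be illustrated with a toy example. It is a sketch of the idea only, using plain lists instead of tensors, and the names `residual_block`, `zero_layer`, `plain_stack`, and `residual_stack` are hypothetical.

```python
def residual_block(x, transform):
    """Return transform(x) + x, the identity-shortcut form used by ResNet."""
    transformed = transform(x)
    return [t + xi for t, xi in zip(transformed, x)]


def zero_layer(x):
    """A degenerate layer whose output carries no signal at all."""
    return [0.0 for _ in x]


def plain_stack(x, layers):
    for layer in layers:
        x = layer(x)
    return x


def residual_stack(x, layers):
    for layer in layers:
        x = residual_block(x, layer)
    return x


signal = [1.0, -2.0, 3.0]
layers = [zero_layer] * 18

plain_output = plain_stack(signal, layers)        # the signal is lost
residual_output = residual_stack(signal, layers)  # the shortcut preserves it
print(plain_output, residual_output)
```

Because each block only has to learn a correction on top of its input, adding more blocks cannot easily make the network worse than a shallower one, which is how the shortcuts counter the degradation problem.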

As we can see in the figure, the last layer of the network is changed into a linear layer with 200 classes. The loss we used here is CrossEntropyLoss with an SGD optimizer configured with a momentum of 0.9 and a learning rate of 0.0001. After training it for 75 epochs, we got the following results.


The model is pre-trained, and the last layer is changed as per our needs.

After training the model for fifty epochs, we get the following results.

Fig.4

The top 1 and top 5 accuracies of the model are given below:

Top1 79.23

Top5 95.18

3) Inception V3 - Inception V3 is based on the original paper "Rethinking the Inception Architecture for Computer Vision" by Szegedy et al. The model is made up of symmetric and asymmetric building blocks, including max pooling, average pooling, concatenations, dropouts, and fully connected layers. Batch normalization is used throughout the model and is applied to the activation inputs. The loss is computed via the Softmax function. In the Inception module, the network is "wider" rather than "deeper": filters of multiple sizes operate at the same level, which makes the network wider. The model is downloaded and modified in the way shown below:

Fig.5

The top 1 and top 5 accuracies of the model are given below:

Top1 77.35

Top5 94.35
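The selection rule stated earlier (keep the model with the highest top 1 accuracy) can be applied to the three reported pairs of figures. The sketch assumes that the second pair (79.23 and 95.18) belongs to ResNet 18, as its position in the text suggests; the dictionary and variable names are hypothetical.

```python
# (top 1, top 5) accuracies as reported in this section, in percent.
REPORTED = {
    "VGG 16": (76.12, 93.81),
    "ResNet 18": (79.23, 95.18),  # attribution assumed from its position
    "Inception V3": (77.35, 94.35),
}

# Pick the model with the highest top 1 accuracy.
best = max(REPORTED, key=lambda name: REPORTED[name][0])

for name, (top1, top5) in REPORTED.items():
    marker = "  <- selected" if name == best else ""
    print(f"{name:<13} top 1 = {top1:5.2f}  top 5 = {top5:5.2f}{marker}")
```

In this case the same model also has the highest top 5 accuracy, so the choice does not depend on which of the two metrics is used.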

6. CONCLUSION

The application we made uses deep learning algorithms to address the problem of identifying bird species. It classifies bird species using only images of the birds, from which it extracts features. These features are then used by the classifier to predict the classes, or in our case the species, of the images. The results indicate that the techniques we used reduce the time and space needed to predict the bird species.

We proposed an application that is capable of identifying different bird species. This type of application can be very useful for amateur bird watchers who are starting out in the field and for researchers who study birds. It can also be useful for environmentalists, as they use birds as an environmental indicator.

To develop this application, we used the Caltech UCSD Birds 200 dataset for training and testing our model. We trained our model on Google's Colab platform using the PyTorch library and used transfer learning to boost the accuracy of our model. Transfer learning helped us achieve much higher accuracy than we expected. After trying three different models, we chose the pre-trained ResNet 18 architecture. The model we trained using ResNet 18 achieved an accuracy of 78.86 percent.

International Research Journal of Engineering and Technology (IRJET), e-ISSN: 2395-0056, p-ISSN: 2395-0072, Volume: 09, Issue: 04 | Apr 2022, www.irjet.net

To keep our application lightweight, so that users do not have to download the whole model, we have uploaded our model and the details of the various birds to the cloud platform Heroku as an API. This reduced the size of our application considerably. These APIs can also be used by any application or model from anywhere. We make requests to the APIs, and they return JSON objects containing the complete information that we need.
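How a client might handle such a JSON response can be sketched as follows. The paper does not document the API, so the field names (`species`, `confidence`, `description`), the sample body, and the function name are all hypothetical; no network request is made.

```python
import json

# Hypothetical response body; the real API's fields are not documented.
SAMPLE_RESPONSE = (
    '{"species": "Painted Bunting", "confidence": 0.91, '
    '"description": "placeholder text"}'
)

REQUIRED_FIELDS = {"species", "description"}


def parse_bird_response(body: str) -> dict:
    """Decode the JSON body and check that the expected fields are present."""
    data = json.loads(body)
    missing = REQUIRED_FIELDS - set(data)
    if missing:
        raise ValueError(f"response is missing fields: {sorted(missing)}")
    return data


info = parse_bird_response(SAMPLE_RESPONSE)
print(info["species"], info["confidence"])
```

Validating the fields before use lets the desktop application show a clear error when the service returns something unexpected, instead of failing later in the user interface.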

7. FUTURE SCOPE

Improving Performance: The performance of our bird species classifier can be improved by using a more advanced algorithm.

Adding Species: Currently, we are able to classify only 200 species of birds. This number can be increased so that the model can classify more species.

Adding More Data: The accuracy increases with the size of the dataset. Therefore, if we have more images per class, accuracy will increase.

Making an iOS/Android Application: An Android or iOS application can be made using the model in the future so that it is much easier to carry and more people can access it easily.

8. REFERENCES

[1] Ciregan, Dan, Ueli Meier, and Jürgen Schmidhuber. "Multi-column deep neural networks for image classification." In 2012 IEEE Conference on Computer Vision and Pattern Recognition, pp. 3642-3649. IEEE, 2012.

[2] Branson, Steve, Grant Van Horn, Serge Belongie, and Pietro Perona. "Bird species categorization using pose normalized deep convolutional nets." arXiv preprint arXiv:1406.2952 (2014).

[3] Alter, Anne L., and Karen M. Wang. "An Exploration of Computer Vision Techniques for Bird Species Classification." (2017).

[4] Krause, J., Jin, H., Yang, J., and Fei-Fei, L. (2015). Fine-grained recognition without part annotations. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 5546-5555.

[5] Gavves, E., Fernando, B., Snoek, C. G., Smeulders, A. W., and Tuytelaars, T. (2015). Local alignments for fine-grained categorization. International Journal of Computer Vision, 111(2):191-212.

[6] P. Somervuo, A. Härmä and S. Fagerlund, "Parametric Representations of Bird Sounds for Automatic Species Recognition", IEEE Trans. Audio, Speech, Lang. Process., Vol. 14, No. 6, pp. 2252-2263, November 2006.

[7] E. Vilches, I.A. Escobar, E.E. Vallejo, and C.E. Taylor, "Data Mining Applied to Acoustic Bird Species Recognition", IEEE Int. Conf. on Patt. Recog., Hong Kong, China, pp. 400-403, 2006.

[8] S. Fagerlund, "Bird Species Recognition Using Support Vector Machines", EURASIP J. Adv. Signal Process., Vol. 2007, pp. 1-8, 2007.

[9] Marini, Andreia & Facon, Jacques & Koerich, Alessandro. (2013). Bird Species Classification Based on Color Features. Proceedings 2013 IEEE International Conference on Systems, Man, and Cybernetics, SMC 2013, 4336-4341.

[10] O'Shea, Keiron & Nash, Ryan. (2015). 'An Introduction to Convolutional Neural Networks'. ArXiv e-prints.

[11] Hubel, David H., and Torsten N. Wiesel. "Receptive fields, binocular interaction and functional architecture in the cat's visual cortex." The Journal of Physiology 160, no. 1 (1962): 106

[12] N. Zhang, J. Donahue, R. Girshick, and T. Darrell. Part-based R-CNNs for fine-grained category detection. In Computer Vision ECCV 2014, pages 834-849. Springer, 2014.

[13] T.-Y. Lin, A. RoyChowdhury, and S. Maji. Bilinear CNN models for fine-grained visual recognition. In Proceedings of the IEEE International Conference on Computer Vision, pages 1449-1457, 2015.

[14] Ümit BUDAK, Abdulkadir ŞENGÜR, Aslı Başak DABAK and Musa ÇIBUK, Transfer Learning Based Object Detection and Effect of Majority Voting on Classification Performance, 2019 International Artificial Intelligence and Data Processing Symposium (IDAP)


[15] Wang, C., & Peng, Z. (2019). Design and Implementation of an Object Detection System Using Faster R-CNN. 2019 International Conference on Robots & Intelligent System (ICRIS). DOI:10.1109/icris.2019.00060

[16] Qian, L., Fu, Y., & Liu, T. (2018). An Efficient Model Compression Method for CNN Based Object Detection. 2018 IEEE 9th International Conference on Software Engineering and Service Science (ICSESS). DOI:10.1109/icsess.2018.8663809.

[17] Zhang, W., Li, J., & Qi, S. (2018). Object Detection in Aerial Images Based on Cascaded CNN. 2018 International Conference on Sensor Networks and Signal Processing (SNSP). DOI:10.1109/snsp.2018.00088

[18] Parintorn Pooyoi; Punyanuch Borwarnginn; Jason H. Haga; Worapan Kusakunniran… Snow Scene Segmentation Using CNN-Based Approach With Transfer Learning, 2019 16th International Conference on Electrical Engineering/Electronics, Computer, Telecommunications and Information Technology (ECTI-CON).

[19] Wendi Cai; Jiadie Li; Zhongzhao Xie; Tao Zhao; Kang LU, Street Object Detection Based on Faster R-CNN… 2018 37th Chinese Control Conference (CCC)… 10.23919/ChiCC.2018.8482613

[20] Hideaki Yanagisawa; Takuro Yamashita; Hiroshi Watanabe… A study on object detection method from manga images using CNN… 2018 International Workshop on Advanced Image Technology (IWAIT)

[21] Yiming Zhang; Xiangyun Xiao; Xubo Yang… Real Time Object Detection for 360 Degree Panoramic Image Using CNN… 2017 International Conference on Virtual Reality and Visualization (ICVRV)
