International Research Journal of Engineering and Technology (IRJET)

e-ISSN:2395-0056

Volume: 09 Issue: 12 | Dec 2022 www.irjet.net p-ISSN:2395-0072


1,5 Professor, Dept. of Computer Science and Engineering, SNIST, Hyderabad-501301, India; 2,3,4 B.Tech Scholars, Dept. of Computer Science and Engineering, Hyderabad-501301, India

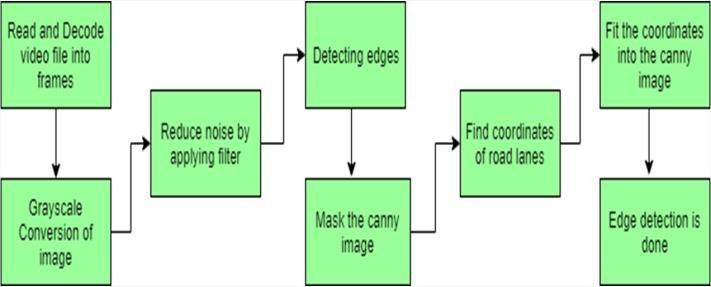

Abstract: Autonomous vehicles are advancing quickly to make driving easier for people. To achieve this, the vehicles must be able to recognize the road lanes in order to maintain their position and avoid accidents. In this article, we use the OpenCV library to implement and improve a computer vision system for lane detection in both images and videos. This is accomplished using a well-known line detection technique, the probabilistic Hough transform. Grayscale conversion, camera calibration, a masking filter, and, later on, Canny edge detection are used as preprocessing steps before the Hough transform. We first build our method on images before moving on to video, which is a more realistic case study. We then display our detections as a red line on the screen.

Keywords: Gray Scaling, Hough Transformation, Gaussian Smoothing, Canny Edge Detection

As traffic in cities increases, road safety becomes more and more important. Most road accidents are caused by drivers leaving their lanes improperly, and most of these result from the driver's negligence or inconsistent and slow behavior.[1]

Both automobiles and pedestrians must maintain lane discipline when using the road. Computer vision is a technology that enables cars to understand their surroundings; it is a subset of artificial intelligence that helps software comprehend image and video input. Here, the technology aims to find the lane markings, with the objective of making the environment safer and improving the traffic situation. The proposed system's functionality can range from showing the position of the road lines on an outdoor display to more complex applications, such as automatic lane switching in the near future, that reduce traffic-related injuries. Accurately recognizing road lanes is critical in lane recognition and departure warning systems.[2] A lane-border prediction system installed in an automobile warns the driver when the vehicle crosses a lane boundary and directs them to prevent collisions. However, lane borders are not always clearly visible to these intelligent systems: the system may have trouble detecting lanes because of poor road conditions, a lack of paint defining the lane limits, or other circumstances. Other factors include environmental effects such as fog, varying lighting conditions, day and night conditions, shadows cast by objects like trees or other vehicles, and streetlights. These elements make it difficult to distinguish a road lane from the background of a captured picture.[3]

Statistics on road accidents show that 95% of the causes that led to an accident were human-related, and that driver error was a factor in up to 73% of incidents. This further suggests that driver negligence is the primary cause of traffic accidents.[1]

Given such serious traffic safety issues, vehicle safety technology has emerged as a crucial area of research in the effort to lower the number of people hurt or killed in auto accidents. Today's cutting-edge auto safety technology improves driving safety primarily through computer, automatic control, and information fusion technologies, which also give the automobile more intelligence while driving.[2]

In intelligent transportation systems, smart automobiles are in the lead. In addition to environment sensing, planning, and decision-making, they carry out multilevel assisted-driving operations, which makes them an entirely new, cutting-edge system. Smart cars can comprehend the information about their surroundings using their own sensors, which is necessary for their other operations.[3] However, no two roads on the planet are the same, because too many factors affect the state of a road. When natural and artificial conditions change, drivers can easily make poor decisions based on the road condition information available to them, which causes traffic accidents. Such accidents can be avoided if the driver can accurately assess the road conditions and is given timely guidance to correct any erroneous driving behavior. Right now, the smart car system must effectively assess the state of the roads and provide the driver with precise driving instructions.[4]

Global trials for unmanned vehicles have already started. Present research on autonomous vehicles focuses on how to detect and recognize road signs, obstructions, and pedestrians in an unfamiliar area accurately and instantly. In short, a thorough investigation of lane detection and recognition technologies offers significant practical utility.[5]

To record the road scene, a CCD camera is fixed to the front-view mirror. The baseline is assumed to be horizontal, which simplifies the problem and ensures that the image's horizon is parallel to the x-axis; if it is not, the calibration data can be used to correct the camera's image. Each lane boundary marking consists of two edge lines, so a marking is approximately a rectangle.[1]

In this study, the algorithm was designed to receive a 620x480 RGB color image as its input. To reduce processing time, the algorithm first converts the image into a grayscale image.[2] Second, noise in the image makes it difficult to detect edges correctly, so the F.H.D algorithm from the work of Mohamed Roushdy (2007) was used to improve edge detection. The edge detector was then used to create an edge image by applying a Canny filter, which obtains the edges automatically. This significantly simplifies the image edges and reduces the amount of data needed.[3]

Next, after receiving the edge image, the line detector produces a right and a left lane boundary segment.

The horizon was identified as the projected point at which these two line segments would intersect. The information in the edge image picked up by the Hough transform was used to carry out the lane boundary scan, which produced a list of right-side and left-side points. The lane borders were finally represented by a pair of hyperbolas fitted to these data points, and the hyperbolas are drawn on the original color image for viewing.[4]

A 2-D convolution operator called the Gaussian smoothing operator is used to "blur" images and remove noise and detail. In this respect it is comparable to the mean filter, but it uses a different kernel that has the shape of a Gaussian ("bell-shaped") hump. This blurring technique produces a smooth blur that looks like seeing through a translucent screen, which is noticeably different from the bokeh effect created by an out-of-focus lens or the shadow of an object under normal lighting.
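The difference between the mean filter and the Gaussian "hump" is easiest to see by building the kernel by hand. The NumPy sketch below is illustrative only: the helper names gaussian_kernel and smooth, the 5x5 size, and sigma = 1.0 are assumptions, and in practice OpenCV's cv2.GaussianBlur does this work.

```python
import numpy as np

def gaussian_kernel(size: int = 5, sigma: float = 1.0) -> np.ndarray:
    """Normalized size x size Gaussian ("bell-shaped") kernel (hypothetical helper)."""
    half = size // 2
    ax = np.arange(-half, half + 1, dtype=np.float64)
    xx, yy = np.meshgrid(ax, ax)
    kernel = np.exp(-(xx**2 + yy**2) / (2.0 * sigma**2))
    return kernel / kernel.sum()  # weights sum to 1, so overall brightness is preserved

def smooth(image: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """Replace each pixel by the kernel-weighted average of its neighborhood.

    Borders are handled by repeating edge pixels. Because the Gaussian kernel is
    symmetric, this correlation is identical to a convolution.
    """
    k = kernel.shape[0] // 2
    h, w = image.shape
    padded = np.pad(image.astype(np.float64), k, mode="edge")
    out = np.zeros((h, w), dtype=np.float64)
    for dy in range(kernel.shape[0]):
        for dx in range(kernel.shape[1]):
            out += kernel[dy, dx] * padded[dy:dy + h, dx:dx + w]
    return out

# A mean filter of the same size uses uniform weights instead of a hump
mean_kernel = np.full((5, 5), 1.0 / 25)
gauss = gaussian_kernel(5, sigma=1.0)
```

Unlike mean_kernel, gauss gives the center pixel the largest weight and lets the weights fall off smoothly with distance, which is why the result looks like a soft, even blur rather than a boxy average.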

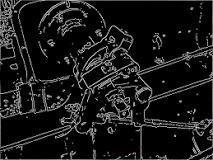

Canny edge detection drastically reduces the amount of data that needs to be processed while still extracting meaningful structural information from various vision objects. It is frequently used in many computer vision systems.

Canny edge detection finds the edges in a picture. It is a multistage method that accepts a grayscale image as input. In OpenCV's Java API this operation is performed by the Canny() method of the Imgproc class; the Python equivalent, used later in this paper, is cv2.Canny(image, threshold1, threshold2).

The objectives of the Canny operator are clearly defined. Good detection means identifying and marking all actual edges. Good localization means there is little separation between a detected edge and the actual edge. Finally, there should be only one response for each edge.

Fig 2. Canny Edge Detection


Lane detection technology is used extensively in automated driving. This study uses the Hough transform to identify the relevant, near-vertical straight lane lines. First, image preprocessing extracts the image's edge features; the region of interest in the image is then extracted.

Two edge points that lie on the same line produce sinusoidal curves in Hough space that cross at a particular $(\rho, \theta)$ pair. As a result, the Hough Transform algorithm identifies lines by locating $(\rho, \theta)$ pairs that have more intersections than a predetermined threshold.

The Hough transform is a feature extraction technique used in image analysis, computer vision, and digital image processing. Its purpose is to find imperfect instances of objects within a certain class of shapes by a voting procedure.
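The voting procedure can be written out directly. The NumPy sketch below is an illustration under stated assumptions (1-pixel $\rho$ bins, 1-degree $\theta$ bins, and the hypothetical helper names hough_accumulator and strongest_lines); it is the standard Hough transform, whereas the paper itself relies on OpenCV's probabilistic variant, cv2.HoughLinesP.

```python
import numpy as np

def hough_accumulator(edges: np.ndarray, n_theta: int = 180):
    """Vote in (rho, theta) space for every nonzero pixel of a binary edge image."""
    h, w = edges.shape
    diag = int(np.ceil(np.hypot(h, w)))                          # largest possible |rho|
    thetas = np.deg2rad(np.arange(n_theta) * 180.0 / n_theta)    # 0 <= theta < pi
    rhos = np.arange(-diag, diag + 1)                            # 1-pixel rho bins
    acc = np.zeros((len(rhos), n_theta), dtype=np.int64)
    ys, xs = np.nonzero(edges)
    cols = np.arange(n_theta)
    for x, y in zip(xs, ys):
        # Each edge point traces the sinusoid rho = x cos(theta) + y sin(theta)
        r = np.round(x * np.cos(thetas) + y * np.sin(thetas)).astype(int)
        acc[r + diag, cols] += 1                                 # one vote per theta bin
    return acc, rhos, thetas

def strongest_lines(acc, rhos, thetas, threshold):
    """(rho, theta) pairs whose vote count exceeds the threshold."""
    r_idx, t_idx = np.nonzero(acc > threshold)
    return [(int(rhos[i]), float(thetas[j])) for i, j in zip(r_idx, t_idx)]
```

For a vertical edge at x = 10 in a 50x50 image, all 50 edge points vote for the same bin, $\rho = 10$, $\theta = 0$, so that is the only line whose count exceeds a threshold of 49.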

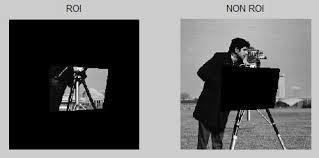

An image's region of interest (ROI) is a section you want to filter or otherwise manipulate. An ROI can be represented as a binary mask image: pixels in the ROI are set to 1 in the mask, while pixels outside the ROI are set to 0.

The image window is expanded to include a default rectangular region with the associated colour. After that, each vertex of the ROI polygon is clicked.

Fig 3. Hough Transformation

Grayscaling is the process of converting an image from another color space, such as RGB, CMYK, or HSV, into shades of gray ranging between black and white.

Grayscale refers to a digital image in which each pixel contains only information about the light's intensity. Such images usually show only the range from deepest black to brightest white; in other words, the only colors present are black, white, and gray, the last of which includes several shades.

The resulting equation for the new grayscale image is

$$\text{Gray} = 0.3R + 0.59G + 0.11B$$

This equation shows that red contributes 30%, green contributes 59% (the highest of the three colors), and blue contributes 11%.
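The weighted sum can be applied to a whole RGB array in one matrix product. This is a minimal NumPy sketch using the 0.3/0.59/0.11 weights given above; the function name to_grayscale is hypothetical. Note that OpenCV's cv2.cvtColor uses the slightly different weights 0.299, 0.587, and 0.114.

```python
import numpy as np

def to_grayscale(rgb: np.ndarray) -> np.ndarray:
    """Gray = 0.3 R + 0.59 G + 0.11 B for an H x W x 3 uint8 RGB image."""
    weights = np.array([0.3, 0.59, 0.11])
    gray = rgb[..., :3].astype(np.float64) @ weights   # per-pixel weighted sum
    return np.clip(np.round(gray), 0, 255).astype(np.uint8)
```

Because the weights sum to 1, pure white stays at 255, while a pure green pixel (255 in G only) maps to 150 and a pure blue one to 28, reflecting the much larger contribution of green.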

The project involves finding lane lines in a picture using Python and OpenCV. OpenCV stands for "Open Source Computer Vision"; it is a library of software tools for image analysis.

The goal of edge detection is to identify object borders in images: it looks for regions where the intensity changes sharply. An image can be treated as a matrix, or collection, of pixels, where each pixel represents the amount of light at a particular location. In an 8-bit grayscale image, each pixel's intensity is a numeric value from 0 to 255: a value of 0 means completely black, and 255 means completely white. A gradient is a change in brightness across neighboring pixels: a small gradient denotes a shallow change, while a large gradient denotes a steep change.

Additionally, the gradient image contains a bright pixel wherever there is a strong gradient, that is, wherever there is an abrupt change in intensity (a rapid change in brightness). By tracing out these pixels, we obtain the edges. We use this idea to find the edges in our road image.
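A tiny synthetic example makes the "bright pixel at a strong gradient" idea concrete. The sketch below assumes a made-up 5x10 image of dark asphalt (value 40) with a bright lane marking (value 220) and uses NumPy's central-difference np.gradient; it is not the exact derivative filter Canny uses internally.

```python
import numpy as np

# Synthetic 8-bit image: dark asphalt (40) with a bright lane marking (220)
image = np.full((5, 10), 40, dtype=np.uint8)
image[:, 4:6] = 220

# Finite-difference derivatives along y (rows) and x (columns)
gy, gx = np.gradient(image.astype(np.float64))
magnitude = np.hypot(gx, gy)

# Large gradient magnitudes mark abrupt intensity changes, i.e. edges
edge_columns = np.unique(np.nonzero(magnitude > 50)[1])
```

The magnitude is zero over the flat asphalt and large only in the columns bordering the marking (columns 3 to 6), which are exactly the pixels that would appear white in a gradient image.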

The image is then converted to grayscale:

```python
gray_image = cv2.cvtColor(image, cv2.COLOR_RGB2GRAY)
```

The picture has now been converted to grayscale:

Each pixel in a grayscale image is represented by a single number that represents its brightness. A typical way to smooth an image is to change each pixel's value to the average of the intensities of the surrounding pixels; averaging the pixels with a kernel in this way lowers the noise. Here the entire image is smoothed with a kernel of normally distributed weights, so that each pixel's value is set to the weighted average of its neighbors (for a neighborhood of pixel values such as np.array([[1,2,3],[4,5,6],[7,8,9]]), the center value is replaced by a weighted average of all nine). In this instance, a 5x5 Gaussian kernel is used:

```python
blur = cv2.GaussianBlur(gray_image, (5, 5), 0)
```

The image with less noise is shown below.

In a convolution-based method, the line detector operator consists of a convolution mask tailored to detect the presence of lines with a specific width n and orientation. Four such masks are used to detect horizontal, oblique (+45 degrees), oblique (-45 degrees), and vertical lines in a picture.
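The paper does not reproduce its four masks, so the sketch below assumes the standard 3x3 line-detection masks found in common image-processing textbooks; the names MASKS, mask_response, and dominant_orientation are hypothetical. Each mask rewards a one-pixel-wide bright line in its orientation and sums to zero, so it gives no response on flat regions.

```python
import numpy as np

# Standard 3x3 line-detection masks (assumed; each sums to zero)
MASKS = {
    "horizontal": np.array([[-1, -1, -1], [2, 2, 2], [-1, -1, -1]]),
    "+45":        np.array([[-1, -1, 2], [-1, 2, -1], [2, -1, -1]]),
    "vertical":   np.array([[-1, 2, -1], [-1, 2, -1], [-1, 2, -1]]),
    "-45":        np.array([[2, -1, -1], [-1, 2, -1], [-1, -1, 2]]),
}

def mask_response(image: np.ndarray, mask: np.ndarray) -> np.ndarray:
    """Correlate a 3x3 mask with every interior pixel of the image (no padding)."""
    img = image.astype(np.int64)
    h, w = img.shape
    out = np.zeros((h - 2, w - 2), dtype=np.int64)
    for dy in range(3):
        for dx in range(3):
            out += mask[dy, dx] * img[dy:dy + h - 2, dx:dx + w - 2]
    return out

def dominant_orientation(image: np.ndarray) -> str:
    """Name of the mask with the largest absolute response anywhere in the image."""
    return max(MASKS, key=lambda name: np.abs(mask_response(image, MASKS[name])).max())
```

For example, an image containing a single bright column is classified as "vertical", and the main diagonal of np.eye(7), which runs from top-left to bottom-right, is classified as "-45".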

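```python
# color_thresholds is True for pixels that fail the color test; region_thresholds is True inside the region
color_select[color_thresholds | ~region_thresholds] = [0, 0, 0]
# Highlight pixels that pass the color test and lie inside the region: red (RGB), as in the plot title
line_image[~color_thresholds & region_thresholds] = [255, 0, 0]
```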
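The two assignments above assume that color_thresholds and region_thresholds already exist. A self-contained NumPy sketch of how they might be built is given below; the 200/200/200 RGB threshold, the default triangle (bottom corners plus the image centre), and the function name select_lane_pixels are all assumptions for illustration, not values from the paper.

```python
import numpy as np

def select_lane_pixels(image, rgb_threshold=(200, 200, 200), triangle=None):
    """Return (color_select, line_image) for an H x W x 3 uint8 RGB image.

    triangle: three (x, y) vertices of the region of interest; the default
    (bottom-left, bottom-right, image centre) is a hypothetical choice.
    """
    h, w = image.shape[:2]
    if triangle is None:
        triangle = ((0, h - 1), (w - 1, h - 1), (w // 2, h // 2))
    (x0, y0), (x1, y1), (x2, y2) = triangle

    # True where any channel is below its threshold, i.e. the pixel is too dark
    color_thresholds = ((image[:, :, 0] < rgb_threshold[0]) |
                        (image[:, :, 1] < rgb_threshold[1]) |
                        (image[:, :, 2] < rgb_threshold[2]))

    # Inside the triangle when the pixel is on the same side of all three edges
    yy, xx = np.mgrid[0:h, 0:w]

    def side(ax, ay, bx, by):
        return (bx - ax) * (yy - ay) - (by - ay) * (xx - ax)

    s1, s2, s3 = side(x0, y0, x1, y1), side(x1, y1, x2, y2), side(x2, y2, x0, y0)
    region_thresholds = (((s1 >= 0) & (s2 >= 0) & (s3 >= 0)) |
                         ((s1 <= 0) & (s2 <= 0) & (s3 <= 0)))

    color_select = np.copy(image)
    line_image = np.copy(image)
    color_select[color_thresholds | ~region_thresholds] = [0, 0, 0]
    line_image[~color_thresholds & region_thresholds] = [255, 0, 0]  # red in RGB
    return color_select, line_image
```

A bright pixel inside the triangle is kept in color_select and painted red in line_image, while a bright pixel outside the triangle is blacked out in color_select and left unchanged in line_image.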


An edge is a spot in a picture where the contrast between adjacent pixels varies drastically. A steep gradient is considered large, as opposed to a shallow one. In this sense, an image can be treated as a matrix with rows and columns of intensities. It can also be represented in 2-D coordinate space, with the x axis running across the image width (columns) and the y axis running down the image height (rows). The Canny function computes derivatives along the x and y axes to determine the brightness difference between adjacent pixels; in other words, it computes the gradient (the change in brightness) in every direction. It then traces the strongest gradients as a series of white pixels.

```python
canny_image = cv2.Canny(blur, 100, 120)  # low threshold 100, high threshold 120
```

Here is the result of applying the Canny function to the image:

The triangular region of interest in the mask is filled with 255, and a bitwise AND operation between the Canny image and this mask then yields our final region of interest.

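```python
# Blend the color-selected edge image with the highlighted lane pixels
lines_edges = cv2.addWeighted(color_edges, 0.8, line_image, 1, 0)
# Draw the region of interest as a closed polygon (blue when displayed as RGB), 10 px thick
lines_edges = cv2.polylines(lines_edges, vertices, True, (0, 0, 255), 10)
```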

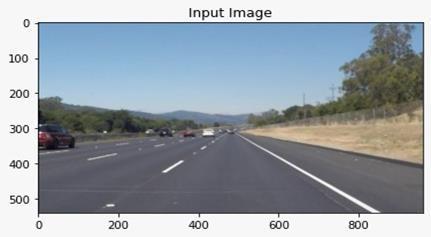

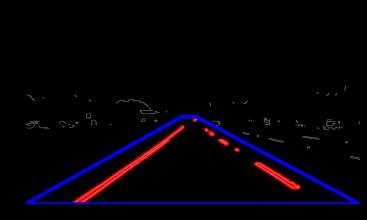

```python
import matplotlib.pyplot as plt

plt.imshow(image)
plt.title("Input Image")
plt.show()

plt.imshow(lines_edges)
plt.title("Colored Lane line [In RED] and Region of Interest [In Blue]")
plt.show()
```

The masked image, obtained by performing the bitwise operation on the Canny image and the mask, is shown below:

The low and high thresholds determine which pixels along the strongest gradients are kept as edges. If the gradient exceeds the upper threshold, the pixel is accepted as an edge pixel; if it falls below the lower threshold, it is discarded. If it falls between the two thresholds, it is accepted only if it is connected to a strong edge.
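This double-threshold rule (hysteresis) can be sketched in a few lines of NumPy. The sketch deliberately skips the gradient computation and non-maximum suppression that cv2.Canny also performs; the function name hysteresis is hypothetical, 8-connectivity is assumed, and the thresholds 100 and 120 simply mirror the cv2.Canny(blur, 100, 120) call used in this paper.

```python
import numpy as np

def hysteresis(gradient: np.ndarray, low: float, high: float) -> np.ndarray:
    """Keep strong pixels (> high) plus weak pixels (>= low) 8-connected to them."""
    strong = gradient > high
    weak = (gradient >= low) & ~strong
    edges = strong.copy()
    h, w = edges.shape
    while True:
        # Dilate the current edge set by one pixel in all 8 directions
        padded = np.pad(edges, 1)          # pads with False
        grown = np.zeros_like(edges)
        for dy in range(3):
            for dx in range(3):
                grown |= padded[dy:dy + h, dx:dx + w]
        new_edges = edges | (grown & weak)  # accept weak pixels touching an edge
        if np.array_equal(new_edges, edges):
            return new_edges
        edges = new_edges
```

With low = 100 and high = 120, a weak value of 110 next to a strong value of 150 survives, while an isolated 110 elsewhere in the row is discarded.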

The entirely black areas correspond to small intensity variations between adjacent pixels, whereas the white lines show spots in the image where the change in intensity is above the threshold.

The dimensions of the region were selected to cover the lanes on the road, outlining a triangle as our area of attention.

Then, a mask that has the same dimensions as the image and is essentially an array of all zeros is created, so that the triangular region of interest in it can be made white.

Now we use the Hough transform to identify the lane lines in the image by looking for straight lines.

The following equation describes a straight line: $y = mx + b$

The slope of a line is simply rise over run. If the slope and y-intercept are specified, the line can be represented as a single dot in Hough space. Conversely, many lines, each with a different m and b value, can pass through a single point in the image, so each image point corresponds to a line in Hough space. However, only one line runs through two given points. We can determine it by examining where the two corresponding lines intersect in Hough space, since that point gives the m and b values of the line that crosses both points.
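The intersection described above is a small piece of algebra: the image point $(x, y)$ becomes the line $b = y - xm$ in $(m, b)$ space, and setting the two such lines equal gives $m = (y_2 - y_1)/(x_2 - x_1)$. The sketch below (hypothetical helper line_through, assuming integer pixel coordinates) uses exact fractions and also shows why vertical lines break this parameterization.

```python
from fractions import Fraction

def line_through(p1, p2):
    """Intersect the Hough-space lines b = y - x*m of two integer image points.

    The intersection (m, b) is the slope and intercept of the unique image line
    through both points.
    """
    (x1, y1), (x2, y2) = p1, p2
    if x1 == x2:
        # The two Hough-space lines are parallel: the image line is vertical
        raise ValueError("vertical line: slope is undefined in (m, b) space")
    # Solve y1 - x1*m = y2 - x2*m for m, then back-substitute for b
    m = Fraction(y2 - y1, x2 - x1)
    b = y1 - x1 * m
    return m, b
```

For the points (1, 3) and (3, 7), the two Hough-space lines meet at m = 2, b = 1, i.e. the image line y = 2x + 1.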

Before we can identify the lines, we must first create a grid in our Hough space.


Each bin in the grid represents a slope and y-intercept value of a line. Each point of intersection in Hough space casts a vote for the bin it belongs to, and we draw our line from the bin with the most votes. However, a vertical line has an infinitely steep slope, so we use polar coordinates rather than Cartesian coordinates to express lines. Thus, the equation for our line is as follows:

$$\rho = x\cos\theta + y\sin\theta$$

where $\rho$ is the perpendicular distance from the origin to the line and $\theta$ is the angle of that perpendicular.
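To see that the polar form handles vertical lines, the sketch below converts a segment's two endpoints into $(\rho, \theta)$. The helper name segment_to_polar and the convention $0 \le \theta < \pi$ (with $\rho$ allowed to be negative) are assumptions; the normal direction is taken perpendicular to the segment.

```python
import math

def segment_to_polar(x1, y1, x2, y2):
    """(rho, theta) of the line through two points, with 0 <= theta < pi.

    The unit normal (cos theta, sin theta) is perpendicular to the segment
    direction (dx, dy), so dx*cos(theta) + dy*sin(theta) = 0.
    """
    dx, dy = x2 - x1, y2 - y1
    theta = math.atan2(dx, -dy) % math.pi    # normal is parallel to (-dy, dx)
    rho = x1 * math.cos(theta) + y1 * math.sin(theta)
    return rho, theta
```

The vertical line x = 5, whose slope m would be infinite, maps to the finite pair $\rho = 5$, $\theta = 0$, while the horizontal line y = 3 maps to $\rho = 3$, $\theta = \pi/2$.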

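```python
import cv2
import numpy as np

def draw_the_lines(img, lines):
    img = np.copy(img)
    # Blank 3-channel canvas the same size as the input image
    blank_image = np.zeros((img.shape[0], img.shape[1], 3), dtype=np.uint8)
    if lines is not None:  # HoughLinesP returns None when no line is found
        for line in lines:
            for x1, y1, x2, y2 in line:
                cv2.line(blank_image, (int(x1), int(y1)), (int(x2), int(y2)),
                         (0, 255, 0), thickness=4)
    # Overlay the drawn segments on the original image
    img = cv2.addWeighted(img, 0.8, blank_image, 1, 0.0)
    return img

lines = cv2.HoughLinesP(cropped_image, rho=2, theta=np.pi / 60, threshold=50,
                        lines=np.array([]), minLineLength=40, maxLineGap=80)
image_with_lines = draw_the_lines(image, lines)
```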

Here are the resulting pictures:

Now, using the video capture feature and reading each frame of the video in a while loop, the same process can be applied to a video, which consists of a number of frames (images), as follows:

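```python
import cv2

cap = cv2.VideoCapture('test_video.mp4')
while cap.isOpened():
    ret, frame = cap.read()
    if not ret:  # end of the video or a read error
        break
    frame = process(frame)  # the per-image lane-detection steps described above
    cv2.imshow('frame', frame)
    if cv2.waitKey(1) & 0xFF == ord('q'):  # press 'q' to stop
        break
cap.release()
cv2.destroyAllWindows()
```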


We use a modular implementation strategy because it makes updating algorithms easy and allows us to continue developing the model in the future. We place the model's pickle file in the appropriate locations so that it can easily be moved into products; as a result, the entire large codebase does not need to be recompiled. Another way to strengthen the concept is to extend it so that the road can be seen at night or in the dark. Color selection and recognition work quite efficiently in daylight. Although shadows make the input somewhat noisier, driving at night or in low visibility (e.g., heavy fog) is more difficult still. Furthermore, this study can only identify lanes on bituminous roads; loamy soil roads, which are common in Indian villages, are not covered. This project can be extended to detect lanes and help prevent accidents on the loamy soil roads found in such communities.

The proposed system needs no further data, such as lane width, time to lane crossing, or the offset from the center of the lane.

Additionally, coordinate transformation and camera calibration are not needed.

The suggested lane detection technology can be used in a variety of weather conditions on straight, painted, and unpainted roadways.

We used the Canny function from the OpenCV package to perform edge detection. Then, using a zero-intensity mask and a bitwise operation, we mapped our region of interest. Next, the straight lines in the image, including the lane lines, were located using the Hough transform. Since vertical lines have an infinite slope in Cartesian (slope-intercept) form, we used polar coordinates instead. Finally, to display the lane lines, we combined the original image with our zero-intensity line image.

We would like to extend our gratitude to P Ramu for his astute suggestions, wise advice, and moral support throughout the writing of this work.

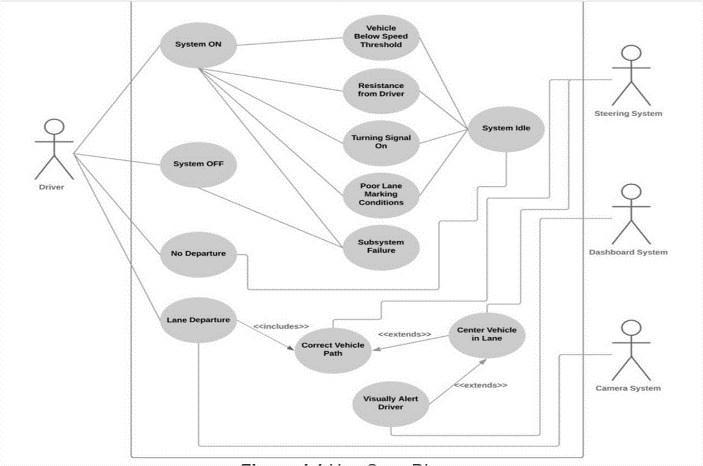

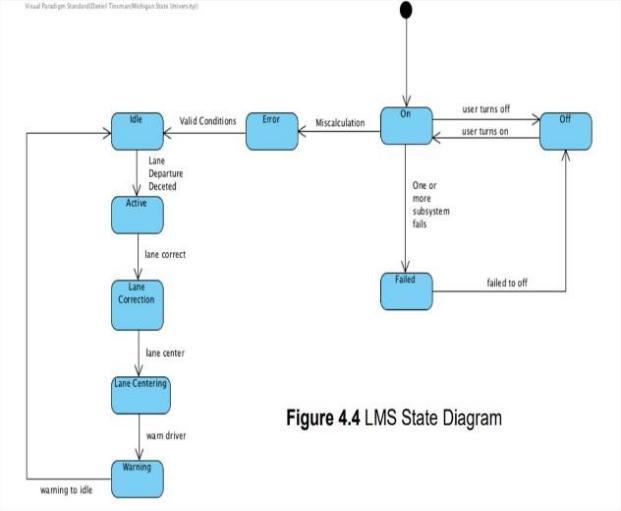

1. A. Berninger, J. Schroeder, D. Somary, D. Tinsman and M. Wojno, “Lane Management System – Team 1”, Michigan State University, October 2018. http://www.cse.msu.edu/~wojnomat/

2. A. Berninger, J. Schroeder, D. Somary, D. Tinsman and M. Wojno, “Requirements Outline”, Michigan State University, October 2018.

3. “How does lane departure warning work?”, Ziff Davis, LLC. PCMag Digital Group, 2018. https://www.extremetech.com/extreme/165320-what-is-lane-departurewarningand-how-does-it-work

4. “Lane Departure Warning”, National Safety Council Media Room, 2018. https://mycardoeswhat.org/safetyfeatures/lane-departure-warning/

5. “Lane Departure Warning System”, Robert Bosch GmbH., 2018. https://www.boschmobilitysolutions.com/en/products-and-services/passenger-carsand-light-commercialvehicles/driverassistancesystems/lane-departurewarning/


6. “Lane Keeping Assist”, National Safety Council Media Room, 2018. https://mycardoeswhat.org/safetyfeatures/lane-keeping-assist/

7. “Lane Keeping System”, Ford Motor Company, 2018. https://owner.ford.com/support/howtos/safety/driver-assisttechnology/driving/how-touse-lanekeeping-system.html

8. “Lane Keeping System”, Robert Bosch GmbH., 2018. https://www.boschmobilitysolutions.com/en/products-and-services/passenger-carsand-light-commercialvehicles/driverassistance-systems/lane-keeping-support/

9. “Toyota Corolla Lane-Centering Tech A Step Toward to Self-Driving Cures Annoying 'Ping-Pong'”, Forbes Media LLC, 2018. https://www.forbes.com/sites/doronlevin/2018/04/13/toyota-corolla-lanecenteringtecha-step-toward-to-self-driving-curesannoying-ping-pong/#39e17fbc7b26

10. Monticello, Mike. “Guide to Lane Departure Warning & Lane Keeping Assist,” Consumer Report,