International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 09 Issue: 12 | Dec 2022 www.irjet.net p-ISSN: 2395-0072

Abstract - The field of emotion recognition has advanced significantly and now has a major impact on human-computer interaction (HCI). Any facial expression recognition system must first extract the facial features that can be used to detect an expression. This paper describes the scope and uses of automatic emotion recognition systems across several industries and examines a number of parameters that can improve a system's precision, security, and effectiveness. It also considers how computers can recognise images in a way similar to humans. Handwriting is one kind of content that can be recognised from an image, which is useful for processing handwritten forms and for labour-intensive tasks such as check analysis. In image recognition, the result is influenced by the viewing angle, the lighting, and the clarity of the captured image. The method for offline handwritten digit recognition presented in this work is based on various machine learning techniques. The major goal of this study is to provide efficient and trustworthy methods for handwritten digit recognition.

Visually perceptible facial emotions are all around us. They are natural cues that help people understand the emotions of anyone in front of them or in pictures and videos. While these emotions are simple for humans to comprehend, they are extremely complex and difficult for machines to interpret. Human facial emotion recognition has been used extensively in human-computer interaction, including smartphones, affective computing, intelligent control systems, psychological research, behavioural analysis, pattern searching, defence, social media, robotics, and other areas. By evaluating these feelings, systems could offer users a better experience and better feedback, improving present technologies. Computer vision and deep learning are the main tools for this task, and several Facial Emotion Recognition (FER) systems have been created and evaluated for encoding and transmitting information from facial representations.

OCR is the process of classifying the optical patterns in a digital image into the corresponding characters. Character recognition is achieved through two important steps: feature extraction and classification. An OCR system simulates the human capability to recognise printed text, and it has become one of the most successful applications in the field of object recognition. Applications of OCR include reading vehicle registration numbers from images of number plates to help control traffic, converting printed academic records into text for storage in an electronic database, decoding ancient scripts, and automatic data entry by optical scanning of cards, bank checks, etc. OCR systems save time and prevent typing errors, and neural networks can be used to build them. OCR has been the subject of extensive investigation during the last 30 years; as a result, document image analysis (DIA), multilingual, handwritten, and omni-font OCR systems have become popular. Despite these considerable research efforts, a machine's capacity for accurate text reading is still far lower than that of a human. Contemporary OCR research therefore focuses on improving the accuracy and speed of OCR for documents printed in a variety of styles and written in unconstrained conditions. Open-source and commercial software for difficult scripts such as Urdu and Sindhi remains scarce.

Rapid shifts of visual attention between components of the current task and other contextual cues are caused by an eager incentive to prevent errors of omission (i.e., promotion focus). These quick adjustments in focus should make it easier to perform the usual task of recognising facial emotions. Our hypothesis, which is based mostly on the regulatory focus literature, is also consistent with the body of research on facial emotion identification.

There are numerous OCR-based programmes that can extract text from images, but they are not accurate enough to do the same for handwriting.

In the age of artificial intelligence and the Internet of Things, emotion recognition is essential. It has enormous potential for behavioural modelling, robotics, healthcare, biometric security, and human-computer interfaces.

The global market for optical character recognition is expected to expand quickly in the coming years. In 2021, the OCR market was estimated to be worth USD 8.93 billion, and a compound annual growth rate (CAGR) of 15.4% is anticipated between 2022 and 2030. This expansion is driven by the rising need for OCR across a range of end-use industries, including healthcare, automotive, and others.

The subtle yet complex signals in our facial expressions frequently reveal a wealth of information about how we are feeling. Facial emotion recognition makes it possible to assess, easily and inexpensively, the effects that content and services have on audiences and users.

Optical character recognition (OCR) is a well-known problem in artificial intelligence and machine learning. Although most people think the task is clear-cut, it becomes difficult when the available data is vague and unconstrained, which is precisely the case in handwritten text recognition.

Facial Emotion Recognition is a technology that analyses emotions from a variety of sources, including images and videos. It belongs to the group of technologies known as "affective computing", a multidisciplinary field that studies the capacity of computers to recognise and understand human emotions and affective states, and that frequently relies on Artificial Intelligence technology.

Human emotions can be inferred from facial expressions, which are a form of non-verbal communication. Decoding these emotional expressions has long been of interest to researchers in both the human-computer interaction and psychology fields (Lang et al. 1993; Cowie et al. 2001; Ekman and Friesen 2003; Abdat et al. 2011). The widespread use of cameras, together with recent advances in machine learning, pattern recognition, and biometric analysis, has been a significant factor in the development of FER technology.

The fact that so many businesses invest in the technology, from corporate giants such as NEC and Google to smaller companies such as Affectiva and Eyeris, demonstrates its growing significance. A number of projects under the EU's Horizon 2020 research and innovation programme are investigating the use of the technology.

FER analysis consists of three steps: face detection, facial expression detection, and classification of the expression into an emotional state. Emotion detection can be based on analysing the positions of facial landmarks (e.g. the end of the nose or the eyebrows). In video recordings, changes in these positions are also examined to spot facial muscle contractions (Ko 2018). Depending on the algorithm, faces can be classified into basic emotions (such as anger, disgust, fear, joy, sadness, and surprise) or compound emotions (such as happily sad, happily surprised, happily disgusted, sadly fearful, sadly angry, and sadly surprised) (Du et al. 2014). In other cases, facial expressions may be related to a person's physiological or mental state (e.g. tiredness or boredom).
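
The landmark-based analysis described above can be illustrated with a minimal sketch. It assumes that a separate detector has already located named landmarks as (x, y) pixel coordinates; the landmark names used here (`left_eye`, `right_eye`, `brow_inner`) are hypothetical, and the function is an illustration rather than the method of any cited system. It measures how far each landmark has moved from a neutral frame, scaled by the inter-ocular distance so that the values do not depend on face size.

```python
import math
from typing import Dict, Tuple

Point = Tuple[float, float]


def landmark_displacements(neutral: Dict[str, Point],
                           current: Dict[str, Point],
                           left_eye: str = "left_eye",
                           right_eye: str = "right_eye") -> Dict[str, float]:
    """Movement of each landmark since the neutral frame, in inter-ocular units.

    Landmark names are hypothetical; any detector output keyed by name works.
    """
    (lx, ly), (rx, ry) = neutral[left_eye], neutral[right_eye]
    scale = math.hypot(rx - lx, ry - ly)  # normalises for face size and distance
    if scale == 0:
        raise ValueError("eye landmarks coincide; cannot normalise")
    return {
        name: math.hypot(current[name][0] - x0, current[name][1] - y0) / scale
        for name, (x0, y0) in neutral.items()
    }


# Example: the inner brow rises by 4 px on a face whose eyes are 40 px apart.
neutral = {"left_eye": (30, 40), "right_eye": (70, 40), "brow_inner": (50, 30)}
current = {"left_eye": (30, 40), "right_eye": (70, 40), "brow_inner": (50, 26)}
moves = landmark_displacements(neutral, current)  # brow_inner -> 0.1
```

A sequence of such displacement values, one set per video frame, is the kind of signal in which a muscle contraction such as a raised brow appears as a large value for particular landmarks.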

Surveillance cameras, cameras near billboards in businesses, social media, streaming services, and personal devices are some of the sources of the photos and videos used as input by FER algorithms.

Early computer programmes could read handprinted text with separated characters, but cursive handwriting, in which characters are joined, posed Sayre's paradox, a character segmentation problem. The first applied pattern recognition programme was created in 1962 by Shelia Guberman, who was then living in Moscow.

Commercial examples came from organisations such as IBM and Communications Intelligence Corporation. In the early 1990s, two companies, Paragraph International and Lexicus, developed technologies that could recognise cursive handwriting. Paragraph was based in Russia and was founded by computer scientist Stepan Pachikov, while Lexicus was established by two Stanford University students, Ronjon Nag and Chris Kortge. The Paragraph CalliGrapher technology was implemented in the Apple Newton systems, while the Lexicus Longhand system was made commercially available for the PenPoint and Windows operating systems. After being acquired by Motorola in 1993, Lexicus went on to develop Chinese handwriting recognition and predictive text technologies for the company. SGI purchased Paragraph in 1997, and its handwriting recognition team formed the P&I division, which Vadem later acquired from SGI. In 1999, Microsoft purchased the CalliGrapher handwriting recognition technology and other digital ink technologies developed by P&I from Vadem.

The FER process is a composite activity comprising several phases, as follows:

The face is made up of skin, facial muscles, and bones. When these muscles contract, the facial features are deformed. Facial expressions are the quickest way to convey many kinds of information, and facial expression detection software could enable a user-friendly human-machine interface. According to research by Ekman and Friesen (1978), facial expressions act as rapid signals that change with the contraction of facial features such as the lips, eyes, cheeks, and brows, and these changes affect recognition accuracy. In addition, six basic expressions are universally recognised: happy, sad, fearful, disgusted, angry, and surprised. Recognising facial expressions involves three steps: face detection, feature extraction, and expression classification.

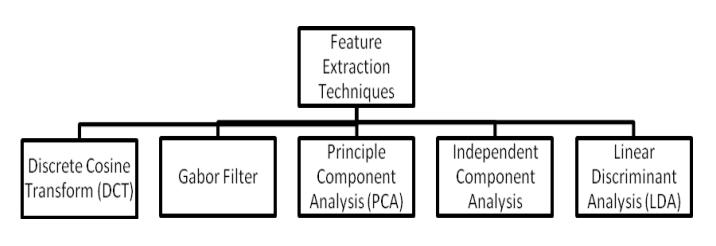

Feature extraction transforms pixel data into a higher-level representation of the shape, motion, colour, texture, and spatial arrangement of the face or its components. In general, feature extraction reduces the dimensionality of the input space. Because this is a crucial task in a pattern recognition system, the reduction should preserve the essential information. Different methods can be used for feature extraction.
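
As one concrete example of feature extraction, the sketch below computes a Local Binary Pattern (LBP) histogram, a texture descriptor that is common in the FER literature but is not named in this paper. It assumes a grayscale face crop given as a list of rows of integer intensities. Each interior pixel is encoded by comparing it with its eight neighbours, and the codes are pooled into a 256-bin histogram, so a 48x48 crop (2,304 pixel values) is reduced to 256 numbers.

```python
from typing import List

# Neighbour offsets (dy, dx), clockwise from the top-left; each sets one bit.
OFFSETS = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]


def lbp_histogram(img: List[List[int]]) -> List[float]:
    """Normalised 256-bin histogram of 8-neighbour Local Binary Pattern codes.

    img is a grayscale image given as a list of rows; border pixels are skipped.
    """
    h, w = len(img), len(img[0])
    hist = [0] * 256
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            centre = img[y][x]
            code = 0
            for bit, (dy, dx) in enumerate(OFFSETS):
                if img[y + dy][x + dx] >= centre:
                    code |= 1 << bit
            hist[code] += 1
    total = sum(hist)
    return [c / total for c in hist] if total else [0.0] * 256
```

In practice the crop is often divided into a grid of cells with one histogram per cell, so that some spatial arrangement is kept; that variant is omitted here for brevity.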

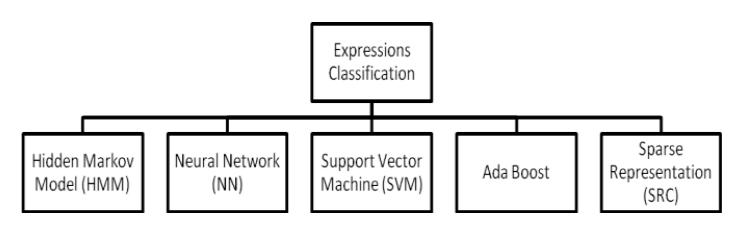

Expression classification is performed by a classifier that is coupled to a decision process and frequently includes models of pattern distribution. Ekman identified two primary classes for use in facial expression recognition: action units and prototypical facial expressions. A variety of classification techniques are applied to recognise expressions.
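
A minimal classifier of this kind can be sketched as a nearest-centroid rule: each expression class is modelled by the mean of its training feature vectors, and a new face is assigned to the class with the closest mean. This is an illustrative stand-in chosen for brevity, not the classifier used in this work; the feature vectors are assumed to come from any feature extraction step, and the labels are assumed to be strings such as "happy".

```python
import math
from typing import Dict, List, Sequence


def fit_centroids(features: Sequence[Sequence[float]],
                  labels: Sequence[str]) -> Dict[str, List[float]]:
    """Mean feature vector of each expression class."""
    sums: Dict[str, List[float]] = {}
    counts: Dict[str, int] = {}
    for vec, label in zip(features, labels):
        acc = sums.setdefault(label, [0.0] * len(vec))
        for i, v in enumerate(vec):
            acc[i] += v
        counts[label] = counts.get(label, 0) + 1
    return {lab: [s / counts[lab] for s in acc] for lab, acc in sums.items()}


def predict(centroids: Dict[str, List[float]], vec: Sequence[float]) -> str:
    """Assign the class whose centroid is nearest in Euclidean distance."""
    return min(centroids, key=lambda lab: math.dist(centroids[lab], vec))
```

The rule is deliberately simple; the same interface could wrap a support vector machine or a neural network once more training data is available.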

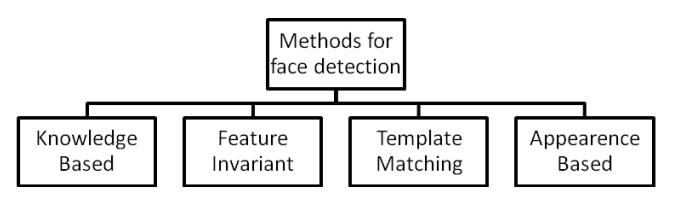

Face detection is the preliminary processing stage of facial expression recognition. To transform a picture into a normalised, face-only image for feature extraction, the following steps are taken: find the feature points, rotate the image to align them, locate the face region, and crop it with a rectangle according to the face model. Face detection techniques involve finding faces in a single image.
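
The alignment and cropping steps can be sketched as follows, assuming the two eye centres have already been found as feature points and that the face model is simply a square centred on the midpoint between the eyes (both assumptions for illustration). The image is rotated about that midpoint so the eyes lie on a horizontal line, using inverse mapping with nearest-neighbour sampling, and a square of side `2 * half_size` is cut out.

```python
import math
from typing import List, Tuple


def align_and_crop(img: List[List[int]], left_eye: Tuple[float, float],
                   right_eye: Tuple[float, float], half_size: int) -> List[List[int]]:
    """Rotate about the eye midpoint so the eyes are level, then crop a square.

    img is a grayscale image as rows of pixel values; eye points are (x, y).
    Output pixels that map outside the input are filled with 0.
    """
    (lx, ly), (rx, ry) = left_eye, right_eye
    angle = math.atan2(ry - ly, rx - lx)       # tilt of the inter-ocular line
    cx, cy = (lx + rx) / 2.0, (ly + ry) / 2.0  # rotation centre
    cos_a, sin_a = math.cos(angle), math.sin(angle)
    h, w = len(img), len(img[0])
    out = []
    for oy in range(-half_size, half_size):
        row = []
        for ox in range(-half_size, half_size):
            # Inverse mapping: rotate the output offset by the tilt angle to
            # find the source pixel, then take the nearest one.
            sx = round(cx + ox * cos_a - oy * sin_a)
            sy = round(cy + ox * sin_a + oy * cos_a)
            row.append(img[sy][sx] if 0 <= sy < h and 0 <= sx < w else 0)
        out.append(row)
    return out
```

A production pipeline would normally use a library routine for the affine warp and would also rescale the crop so that the inter-ocular distance is fixed; the loop here only makes the geometry explicit.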

2022, IRJET | Impact Factor value: 7.529 | ISO 9001:2008 Certified Journal | Page845

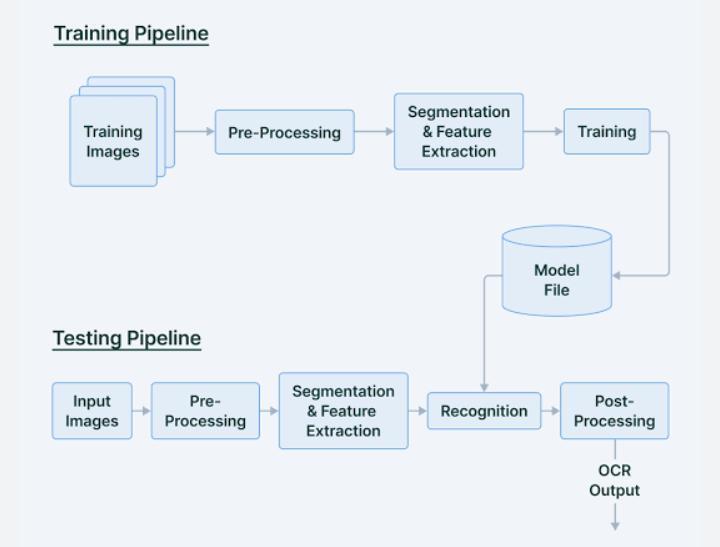

The OCR process is a composite activity comprising several phases, as follows:

The first step in OCR, image acquisition, consists of obtaining a digital image and converting it into a format that a computer can easily process. This may involve both quantization and compression of the image. Binarization, which uses only two intensity levels, is a special case of quantization, and a binary image is usually sufficient to describe a document image. Compression can be either lossy or lossless; overviews of the various image compression methods are available in the literature.
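
Quantization, and binarization as its two-level special case, can be sketched as below for an 8-bit grayscale image stored as a list of rows. The evenly spaced levels are an assumption for illustration: with `levels=2` the split falls at intensity 128, whereas the thresholding step of pre-processing would normally choose the cut-off from the image itself.

```python
from typing import List


def quantize(img: List[List[int]], levels: int, max_value: int = 255) -> List[List[int]]:
    """Map 0..max_value intensities onto `levels` evenly spaced grey levels."""
    if levels < 2:
        raise ValueError("need at least two levels")
    step = (max_value + 1) / levels   # width of each input bin
    scale = max_value / (levels - 1)  # spacing of the output grey levels
    return [[round(min(int(p / step), levels - 1) * scale) for p in row]
            for row in img]


binary = quantize([[0, 127, 128, 255]], levels=2)  # two-level (binary) image
```
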

Pre-processing follows image acquisition and aims to improve the image quality. Thresholding is one pre-processing method; it binarizes the image according to a threshold value, which can be set globally or locally. A variety of filters can also be used, including averaging, min, and max filters. Alternatively, morphological operations such as erosion, dilation, opening, and closing can be carried out.
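
Two of the pre-processing operations above can be sketched as follows. The threshold is chosen globally with Otsu's method, a standard technique that the paper does not name, which picks the value that best separates the intensity histogram into two classes; a 3x3 erosion and dilation are then combined into opening and closing. The sketch assumes an 8-bit grayscale page with dark ink on light paper and marks ink pixels as 1.

```python
from typing import List

Image = List[List[int]]


def otsu_threshold(img: Image, max_value: int = 255) -> int:
    """Global threshold t that maximises between-class variance (Otsu's method)."""
    hist = [0] * (max_value + 1)
    for row in img:
        for p in row:
            hist[p] += 1
    total = sum(hist)
    sum_all = sum(i * c for i, c in enumerate(hist))
    best_t, best_var = 0, -1.0
    w0 = sum0 = 0
    for t in range(max_value + 1):
        w0 += hist[t]        # number of pixels with value <= t
        sum0 += t * hist[t]
        w1 = total - w0      # number of pixels with value > t
        if w0 == 0 or w1 == 0:
            continue
        mu0, mu1 = sum0 / w0, (sum_all - sum0) / w1
        between = w0 * w1 * (mu0 - mu1) ** 2
        if between > best_var:
            best_t, best_var = t, between
    return best_t


def binarize(img: Image, t: int) -> Image:
    """Dark ink on light paper: pixels <= t become 1 (ink), the rest 0."""
    return [[1 if p <= t else 0 for p in row] for row in img]


def _morph(binary: Image, erode: bool) -> Image:
    """3x3 erosion (all neighbours set) or dilation (any neighbour set).

    The window is clipped at the image border.
    """
    h, w = len(binary), len(binary[0])
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            window = [binary[j][i]
                      for j in range(max(0, y - 1), min(h, y + 2))
                      for i in range(max(0, x - 1), min(w, x + 2))]
            out[y][x] = int(all(window) if erode else any(window))
    return out


def opening(binary: Image) -> Image:
    """Erosion then dilation: removes specks smaller than the 3x3 window."""
    return _morph(_morph(binary, erode=True), erode=False)


def closing(binary: Image) -> Image:
    """Dilation then erosion: fills small gaps inside strokes."""
    return _morph(_morph(binary, erode=False), erode=True)
```

Opening removes isolated noise pixels smaller than the structuring element, while closing bridges small gaps in strokes; which one helps more depends on whether a scan suffers from specks or from broken characters.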

Estimating the document's skew is a crucial pre-processing step. Projection profiles, the Hough transform, and nearest-neighbour methods are among the skew estimation methods. The image is sometimes also thinned before the following steps are applied. As a final pre-processing step, the text lines present in the document can also be identified, which may be accomplished through pixel clustering or projections.
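
Projection-profile skew estimation and projection-based line finding can be sketched as below for a binary image (1 = ink). For each candidate angle, the ink pixels are projected onto rows after undoing that skew; well-aligned text lines produce a few tall peaks, so the angle that maximises the sum of squared row counts is taken as the skew. The candidate angle list and this particular scoring function are assumptions of the sketch.

```python
import math
from typing import Iterable, List, Tuple

Image = List[List[int]]


def estimate_skew(binary: Image, candidates_deg: Iterable[float]) -> float:
    """Projection-profile skew estimate.

    Positive angles mean text descending to the right (y grows downwards).
    """
    ink = [(x, y) for y, row in enumerate(binary) for x, v in enumerate(row) if v]
    best_angle, best_score = 0.0, -1
    for angle in candidates_deg:
        slope = math.tan(math.radians(angle))
        profile = {}
        for x, y in ink:
            r = round(y - x * slope)  # row index once this skew is undone
            profile[r] = profile.get(r, 0) + 1
        score = sum(c * c for c in profile.values())  # sharp peaks score high
        if score > best_score:
            best_angle, best_score = angle, score
    return best_angle


def text_lines(binary: Image) -> List[Tuple[int, int]]:
    """(first_row, last_row) of each run of rows that contains any ink."""
    lines, start = [], None
    for y, row in enumerate(binary):
        if any(row) and start is None:
            start = y
        elif not any(row) and start is not None:
            lines.append((start, y - 1))
            start = None
    if start is not None:
        lines.append((start, len(binary) - 1))
    return lines
```
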

In this stage, the image is divided into characters before moving on to the classification phase. Segmentation can be performed explicitly, or implicitly as a by-product of the classification process. In addition, the other stages of OCR can supply contextual information that is useful for image segmentation.
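
One common way to perform explicit segmentation is connected-component labelling, sketched below for a binary image (1 = ink): each group of 8-connected ink pixels becomes one candidate character, returned as a bounding box and ordered left to right. This is an illustrative choice, not necessarily the segmentation used in this work.

```python
from collections import deque
from typing import List, Tuple


def segment_characters(binary: List[List[int]]) -> List[Tuple[int, int, int, int]]:
    """Bounding boxes (x0, y0, x1, y1) of 8-connected ink blobs, left to right."""
    h, w = len(binary), len(binary[0])
    seen = [[False] * w for _ in range(h)]
    boxes = []
    for y in range(h):
        for x in range(w):
            if not binary[y][x] or seen[y][x]:
                continue
            # Breadth-first flood fill collects one connected component.
            queue = deque([(x, y)])
            seen[y][x] = True
            x0 = x1 = x
            y0 = y1 = y
            while queue:
                cx, cy = queue.popleft()
                x0, x1 = min(x0, cx), max(x1, cx)
                y0, y1 = min(y0, cy), max(y1, cy)
                for dy in (-1, 0, 1):
                    for dx in (-1, 0, 1):
                        nx, ny = cx + dx, cy + dy
                        if (0 <= nx < w and 0 <= ny < h
                                and binary[ny][nx] and not seen[ny][nx]):
                            seen[ny][nx] = True
                            queue.append((nx, ny))
            boxes.append((x0, y0, x1, y1))
    return sorted(boxes, key=lambda b: b[0])
```

Because joined cursive letters form a single component, this approach runs straight into the segmentation problem described by Sayre's paradox; implicit, recognition-driven segmentation is one way around it.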

Various character features are extracted at this stage, and characters are identified on the basis of these features. An important research question is how to choose the best features and how many features to use overall. A variety of features can be used, including the image itself, geometric features (loops, strokes), and statistical features (moments). Finally, a variety of methods, including principal component analysis, can be employed to reduce the dimensionality of the image representation.
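
Principal component analysis can be sketched in plain Python as below, using power iteration with deflation on the sample covariance matrix. The input is assumed to be a list of equal-length feature vectors (for example, flattened character images) with at least two samples; `pca` returns the mean and the top `k` unit-length principal directions, and `project` maps a vector to its `k` reduced coordinates. A real system would normally call an optimised linear-algebra library instead.

```python
import math
import random
from typing import List, Sequence, Tuple


def pca(data: Sequence[Sequence[float]], k: int,
        iters: int = 200, seed: int = 0) -> Tuple[List[float], List[List[float]]]:
    """Mean vector and top-k principal components (unit vectors)."""
    n, d = len(data), len(data[0])
    mean = [sum(row[j] for row in data) / n for j in range(d)]
    centred = [[row[j] - mean[j] for j in range(d)] for row in data]
    cov = [[sum(r[i] * r[j] for r in centred) / (n - 1) for j in range(d)]
           for i in range(d)]
    rng = random.Random(seed)
    components = []
    for _ in range(k):
        v = [rng.random() + 0.1 for _ in range(d)]
        norm = math.sqrt(sum(x * x for x in v))
        v = [x / norm for x in v]
        for _ in range(iters):
            w = [sum(cov[i][j] * v[j] for j in range(d)) for i in range(d)]
            norm = math.sqrt(sum(x * x for x in w))
            if norm == 0:  # no variance left in this subspace
                break
            v = [x / norm for x in w]
        eigval = sum(v[i] * cov[i][j] * v[j] for i in range(d) for j in range(d))
        components.append(v)
        # Deflation: remove the found direction so the next pass finds the next one.
        cov = [[cov[i][j] - eigval * v[i] * v[j] for j in range(d)] for i in range(d)]
    return mean, components


def project(vec: Sequence[float], mean: Sequence[float],
            components: Sequence[Sequence[float]]) -> List[float]:
    """Coordinates of vec in the reduced k-dimensional space."""
    return [sum((x - m) * c for x, m, c in zip(vec, mean, comp)) for comp in components]
```
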

Classification is the process of assigning a character to the correct category. The structural approach to classification is based on the relationships between the components of the picture. Statistical methods classify the image using a discriminant function; examples include the Bayesian classifier, decision trees, neural networks, and nearest-neighbour classifiers. Finally, some classifiers use a syntactic approach, which applies a grammatical technique to build a picture from its constituent parts.
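
A nearest-neighbour classifier, one of the statistical methods listed above, can be sketched as follows. Each training sample is assumed to be a pair of a feature vector and a character label; a query is labelled by majority vote among its `k` closest samples in Euclidean distance, with ties resolved in favour of the label whose sample is nearest. The toy training data is made up for illustration.

```python
import math
from collections import Counter
from typing import Sequence, Tuple

Sample = Tuple[Sequence[float], str]


def knn_predict(train: Sequence[Sample], query: Sequence[float], k: int = 3) -> str:
    """Label query by majority vote among its k nearest training samples."""
    if not train:
        raise ValueError("training set is empty")
    nearest = sorted(train, key=lambda s: math.dist(s[0], query))[:k]
    votes = Counter(label for _, label in nearest)
    top = max(votes.values())
    # Tie-break: the first tied label in distance order wins.
    return next(label for _, label in nearest if votes[label] == top)


# Toy 2-D feature vectors for two digit classes (values are made up).
train = [([0, 0], "1"), ([0, 1], "1"), ([1, 0], "1"),
         ([5, 5], "7"), ([5, 6], "7"), ([6, 5], "7")]
```
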

Once a character has been classified, several methods can be used to increase the accuracy of the OCR results. One strategy is to classify an image with more than one classifier. The classifiers can be applied in a hierarchical, parallel, or cascade manner, and multiple methods can then be used to combine their outputs. Contextual analysis can also be done to improve OCR results: taking into account the geometrical structure and document context of the image reduces the chance of errors. OCR results can also be improved by lexical processing based on Markov models and dictionaries.
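
Dictionary-based lexical post-processing can be sketched as below: each word produced by the recogniser is replaced by the closest entry in a lexicon, measured by Levenshtein edit distance, provided the distance does not exceed a limit. This is a simplified stand-in that covers only the dictionary part; it does not implement Markov-model processing, and the `max_distance` default of 2 is an arbitrary assumption.

```python
from typing import Iterable


def edit_distance(a: str, b: str) -> int:
    """Levenshtein distance: insertions, deletions and substitutions cost 1."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        cur = [i]
        for j, cb in enumerate(b, start=1):
            cur.append(min(prev[j] + 1,                # delete ca
                           cur[j - 1] + 1,             # insert cb
                           prev[j - 1] + (ca != cb)))  # substitute (free if equal)
        prev = cur
    return prev[-1]


def correct_word(word: str, lexicon: Iterable[str], max_distance: int = 2) -> str:
    """Replace an OCR output word by its nearest lexicon entry if it is close enough."""
    best = min(lexicon, key=lambda w: (edit_distance(word, w), w), default=word)
    return best if edit_distance(word, best) <= max_distance else word
```

For example, a misread "rec0gnition" is one substitution away from "recognition" and would be corrected, while a word far from every lexicon entry is left unchanged.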

FER technology can also be used to build profiles of individuals in a variety of circumstances. It could be used to determine whether someone accepts a certain product, advertisement, or idea, or to categorise workplace productivity and resistance to fatigue. The risk is that the person being profiled may not be aware of it and may feel uncomfortable on learning about it. Erroneous profiling, or assumptions made merely because a person is associated with a group of people who share the same feelings, can have further consequences.

Last but not least, FER can cause behavioural changes if people are aware of their exposure to the technology (known as reactivity in psychology). People may change their routines or avoid particular locations where the technology is used in an effort to self-censor and protect themselves. If non-democratic governments used such technology to infer citizens' political attitudes, one can picture the chilling effect it could have on society and the feeling of unease among citizens.

This study proposes an effective method for handwritten character recognition, in which handwritten numerals are recognised by a deep network model. The low identification rate for the digits 3, 7, and 9, which may be caused by overlap among their patterns, can be raised by introducing new kernel approaches. The identification rate can also be increased by introducing a quad-tree based structure that captures each digit pattern at a coarser-grained level. To increase accuracy further, modern methods can be used to investigate topological properties.
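
The quad-tree structure is not specified in this paper, so the sketch below is only one hypothetical interpretation: the binary digit image (1 = ink) is split recursively into quadrants up to a given depth, and the ink density of every node is recorded as a feature. The root node describes the digit at the coarsest level and each deeper level adds finer detail, which is one way a classifier could be given access to a digit's pattern at several granularities.

```python
from typing import List


def quadtree_features(binary: List[List[int]], depth: int) -> List[float]:
    """Ink density of every quad-tree node, listed in depth-first order."""
    features: List[float] = []

    def visit(y0: int, y1: int, x0: int, x1: int, level: int) -> None:
        area = (y1 - y0) * (x1 - x0)
        ink = sum(binary[y][x] for y in range(y0, y1) for x in range(x0, x1))
        features.append(ink / area if area else 0.0)
        if level < depth:
            ym, xm = (y0 + y1) // 2, (x0 + x1) // 2
            # Quadrants: top-left, top-right, bottom-left, bottom-right.
            for a, b, c, d in ((y0, ym, x0, xm), (y0, ym, xm, x1),
                               (ym, y1, x0, xm), (ym, y1, xm, x1)):
                visit(a, b, c, d, level + 1)

    visit(0, len(binary), 0, len(binary[0]), 0)
    return features
```

With depth 2 the vector has 1 + 4 + 16 = 21 entries, regardless of the image size.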

On the front end, the system supports FER and OCR equally; however, the back end uses different technologies.

This research presents a review of the framework for facial expression recognition and surveys the literature on the various methods used to identify facial expressions. These techniques are evaluated on the basis of their recognition rate.

The recognition result is still poor even though the training and input sets were compiled from noise-free images using a third-party image processing programme. Because this test compares the handwriting of two different people, poor matching and recognition are expected. Several factors contribute to the inadequate recognition, some of which are given below:

• The two sets of input and training files may represent the handwriting of two different people. Even if the same individual contributed both sets, it is very unlikely that the letters will be identical to those previously provided, so recognition on a crossed set is expected to be poor. Despite the poor overall recognition score, some letters can still be identified accurately in cross-testing; these include the letters "T" and "E", which have little to no curvature.

We extend our sincere gratitude to Dr. Thomas P John (Chairman), Dr. Suresh Venugopal P (Principal), Dr. Srinivasa H P (Vice-Principal), Ms. Suma R (HOD, CSE Department), Dr. John T Mesia Dhas (Associate Professor and Project Coordinator), Ms. Nikitha V P (Assistant Professor and Project Guide), and the teaching and non-teaching staff of T. John Institute of Technology, Bengaluru 560083.
