Detection of Blindness and its stages caused by Diabetes using CNN

Abstract – Diabetic retinopathy (DR) is an eye condition that occurs in people with diabetes. It arises from the high blood glucose levels caused by diabetes and is the leading cause of new cases of blindness in those aged 20 to 74. If DR is discovered and treated early enough, the vision impairment it causes can be managed or avoided. The Convolutional Neural Network (CNN), a deep-learning technique, is a promising tool in biomedical image processing. In this paper, a deep-learning system that classifies DR grades from fundus images is used to detect early blindness. Sample DR images were divided into five groups based on ophthalmologist expertise. A group of deep CNN models was used to classify the DR stages. ResNet50 achieved state-of-the-art accuracy, which demonstrates the effectiveness of deep CNNs for DR image recognition.

Keywords – AI, ML, Diabetic Retinopathy, Deep-learning-based CNN

I. INTRODUCTION

The retina is a light-sensitive layer in the rear portion of the eye. It transforms incoming light into electrical signals and transmits them to the brain, which converts these signals into images. The retina needs a regular and continuous supply of blood, which it receives through tiny blood vessels. High levels of sugar in the blood damage these tiny vessels, leading to the development of diabetic retinopathy. Vitreous haemorrhage occurs when blood vessels bleed into the jelly that fills the eye, known as the vitreous. Floaters are common in mild cases, but vision loss is possible in more severe cases because the blood in the vitreous prevents light from entering the eye. Bleeding in the vitreous can resolve spontaneously if the retina is not injured. Diabetic retinopathy is responsible for almost 86 percent of blindness in the younger-onset group. In the older-onset group, in which other eye diseases are common, one-third of the cases of legal blindness are due to diabetic retinopathy. The shortage of trained eye doctors and the operator's level of expertise also affect the outcome for the patient. Lack of facilities in rural and semi-rural areas and lack of awareness about the disease contribute largely to the rising number of cases. This grave problem motivated us to develop a highly accurate, state-of-the-art solution based on deep learning that detects the stage of blindness so that the required treatment can be provided.

II. DIABETIC RETINOPATHY

The backs of your eyes are supplied with oxygen and nutrients by some of your body's smallest and most sensitive blood vessels. When these blood vessels are injured, the cells that surround them may begin to die. The retina is in the back of your eye and is responsible for sending "sight messages" to your brain. When light enters your eye, it strikes the retina, where light-sensitive cells relay the signal to the optic nerve. If those cells start to die, your retina will no longer be able to convey a clear image to your brain, resulting in vision loss.

Diabetic retinopathy can be categorized into 5 stages:

1. NPDR (non-proliferative diabetic retinopathy)

2. Mild Non-proliferative Retinopathy

3. Moderate Non-proliferative Retinopathy

4. Severe Non-proliferative Retinopathy

5. PDR (proliferative diabetic retinopathy)

III. SYSTEM DESIGN

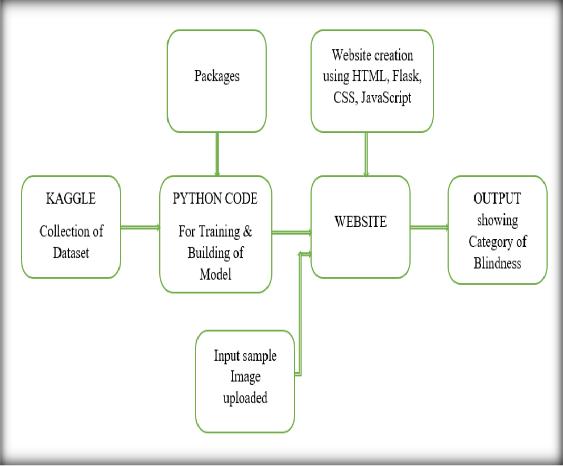

Fig. 1. System Architecture

International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 09 Issue: 12 | Dec 2022 www.irjet.net p-ISSN: 2395-0072

1. Kaggle

The Kaggle dataset contains 88,702 high-resolution photos acquired from various cameras, with resolutions ranging from 433 × 289 pixels to 5184 × 3456 pixels. Each image is assigned to one of five DR stages. Only the ground-truth labels for the training photos are publicly available. Many of the photographs on Kaggle are of poor quality and have inaccurate labels.

2. Python 3 Spyder

We used the Python 3 programming language to build and train the model, working in the Spyder IDE.

3. Anaconda command prompt

The Anaconda command prompt works like the standard command prompt, but it ensures that Anaconda and conda commands can be used without changing directories or paths. When the Anaconda command prompt is launched, it adds (prepends) several locations to the PATH.

4. TensorFlow

For our model, we made use of TensorFlow. It is a free artificial intelligence package that builds models using data flow graphs. It gives programmers the ability to build large-scale neural networks with numerous layers. Classification, perception, understanding, discovery, prediction, and creation are some of the most common applications for TensorFlow. We also used it to obtain the online dataset. It is an end-to-end platform that made it easy for us to build and deploy our model.

5. Keras

Keras is a Python-based deep learning API that runs on top of TensorFlow, a machine learning platform. It was created with the goal of allowing for quick experimentation. When conducting research, it is crucial to be able to go from idea to result as quickly as possible.

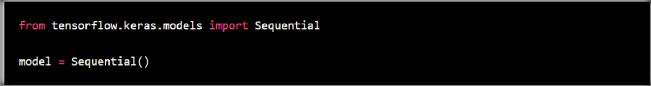

5.1. Keras model

In our project, we adopted the Sequential model. It enables us to build models in a layer-by-layer manner. However, it does not allow us to build models with multiple inputs or outputs; it is best suited to simple stacks of layers with exactly one input tensor and one output tensor. Layers and models are Keras' primary data structures.

5.2. Keras layers

In Keras, layers are the fundamental building blocks of neural networks. A layer consists of a tensor-in, tensor-out computation function (the layer's call method) and some state (the layer's weights) stored in TensorFlow variables. Keras models are assembled from these layers, and each layer is created by calling one of several layer() functions. A layer receives input information, performs some computations on it, and then provides the output. The output of one layer is used as the input of the next layer.
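The idea of a layer as "state plus a call method", and of a sequential model as a chain of such layers, can be illustrated with a small plain-Python sketch. This is a conceptual stand-in only: the class names `DenseLayer` and `SequentialModel` and the weights are hypothetical, and the code does not use or reproduce the Keras API.

```python
# Conceptual sketch only: plain-Python stand-ins, not the Keras classes themselves.

class DenseLayer:
    """A layer = some state (weights, bias) + a tensor-in, tensor-out call."""

    def __init__(self, weights, bias):
        self.weights = weights  # one row of input weights per output unit
        self.bias = bias        # one bias value per output unit

    def __call__(self, inputs):
        # Weighted sum of the inputs for each output unit, followed by ReLU.
        outputs = []
        for row, b in zip(self.weights, self.bias):
            total = sum(w * x for w, x in zip(row, inputs)) + b
            outputs.append(max(0.0, total))
        return outputs


class SequentialModel:
    """Applies layers in order; each layer's output feeds the next layer."""

    def __init__(self, layers):
        self.layers = list(layers)

    def __call__(self, inputs):
        for layer in self.layers:
            inputs = layer(inputs)
        return inputs


# Hypothetical two-layer stack with hand-picked weights.
model = SequentialModel([
    DenseLayer(weights=[[1.0, -1.0], [0.5, 0.5]], bias=[0.0, 1.0]),
    DenseLayer(weights=[[2.0, 1.0]], bias=[-1.0]),
])
prediction = model([3.0, 1.0])
```

In a real Keras model the weights are learned during training rather than written by hand, but the flow of data from one layer to the next follows the same pattern.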

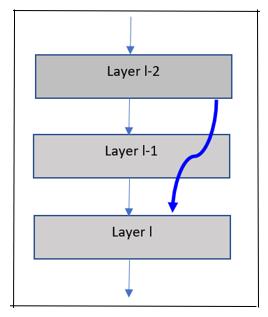

Fig. 3. Canonical form of a Residual Neural Network (ResNet)

6. Web development

6.1. Flask

We used the Python Flask API, which allows us to create web applications. Flask is a Python-based micro web framework. It is referred to as a microframework because it does not require any specific tools or libraries. It has no database abstraction layer, form validation, or other components for which third-party libraries already handle the typical tasks.

6.2. HTML

HTML (Hypertext Markup Language) is the code that defines how a web page and its content are structured. Content can be organized using paragraphs, bulleted lists, graphics, and data tables. HTML and CSS (Cascading Style Sheets) are two of the most important technologies for building web pages. For a range of devices, HTML provides the page structure and CSS provides the (visual and auditory) layout. JavaScript is a text-based programming language that allows you to build interactive web pages on both the client and server sides. Whereas HTML and CSS give web pages structure and style, JavaScript adds interactive components that keep users engaged.

Fig. 2. Sequential Model

IV. IMPLEMENTATION

Rescaling is performed to make sure all pixel values lie between 0 and 1.
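A minimal sketch of this rescaling step, assuming the images are stored as 8-bit arrays (values 0 to 255). The function name and the tiny sample patch are illustrative and not taken from the project code.

```python
import numpy as np

def rescale_to_unit_range(image_uint8):
    """Map 8-bit pixel values (0-255) to floats in the range [0, 1]."""
    return image_uint8.astype(np.float32) / 255.0

# Hypothetical 2x2 RGB patch standing in for a fundus image.
patch = np.array([[[0, 128, 255], [64, 64, 64]],
                  [[255, 255, 255], [10, 20, 30]]], dtype=np.uint8)
scaled = rescale_to_unit_range(patch)
```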

Data augmentation by horizontal flip is used to increase the number of images. The Python Imaging Library (PIL) is imported so that image-editing operations such as loading and cropping images can be performed.

After all the images have been standardized, data augmentation is performed to enlarge the training data and hence improve the quality of the training process. The augmentation is accomplished by flipping each input image vertically. Invariance is a characteristic of a convolutional neural network that allows it to reliably categorize objects even when they are positioned in different orientations.
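Flip-based augmentation can be sketched with NumPy as follows. The helper name `augment_with_flips` is hypothetical, and the sketch returns both a horizontal and a vertical mirror image for illustration; which flips to keep is a design choice for the training pipeline.

```python
import numpy as np

def augment_with_flips(image):
    """Return the original image plus its horizontal and vertical mirror images."""
    horizontal = np.fliplr(image)  # mirror left-right (reverse the columns)
    vertical = np.flipud(image)    # mirror top-bottom (reverse the rows)
    return [image, horizontal, vertical]

# Tiny 2x2 array standing in for an image.
tiny = np.array([[1, 2],
                 [3, 4]])
original, h_flip, v_flip = augment_with_flips(tiny)
```

Both functions also work on colour images of shape (height, width, channels), since they only reverse the row or column axis.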

3. Data Balancing

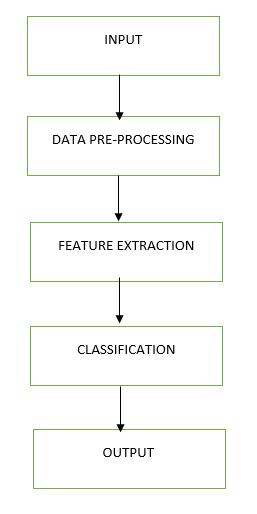

Fig. 4. Implementation of the model

1. Collected database

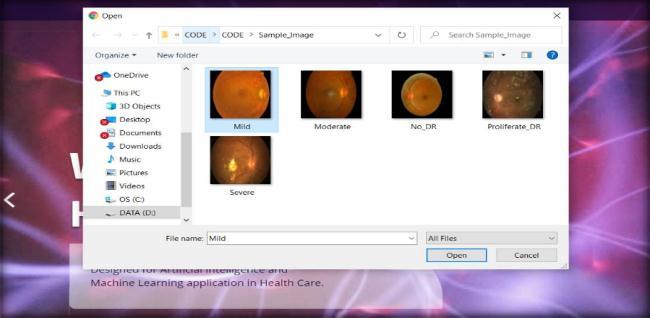

Fundus images were collected in a database to train the model. Typical fundus images from the database represent the different DR stages: No DR, mild, moderate, severe, and proliferative diabetic retinopathy (PDR).

2. Data Pre-processing

Data preprocessing is the most important aspect of our paper. Preprocessing is needed because raw images may not meet the required criteria: the geometry can be distorted, the lighting can be irregular, there can be noise in the images, and so on. Preprocessing algorithms aim to prepare the data (images) so that they can be used efficiently by other types of algorithms.

We analyzed many projects and papers on diabetic retinopathy and found that the key to building a robust system was applying enough data preprocessing steps to improve the quality of the images.

The data preprocessing steps include:

Intensity normalization was done to stretch the pixel values over the full 0 to 255 range. The values in an image may originally span only 50 to 150, for example; spreading them over 0 to 255 gives a better distribution across the RGB channels. This homogenizes the signal intensity of the white and grey matter, which makes the features easier to distinguish and to segment. For this we used the NumPy package so that we can perform operations on the pixels.
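One common way to perform such a stretch is linear min-max normalization. The sketch below assumes a single global stretch over the whole image; the function name `stretch_intensity` and the sample values are illustrative, and the authors' exact procedure may differ.

```python
import numpy as np

def stretch_intensity(image):
    """Linearly stretch pixel values so the darkest maps to 0 and the brightest to 255."""
    pixels = image.astype(np.float64)
    low, high = pixels.min(), pixels.max()
    if high == low:
        # Flat image: nothing to stretch, so return zeros to avoid dividing by zero.
        return np.zeros_like(image, dtype=np.uint8)
    stretched = (pixels - low) / (high - low) * 255.0
    return np.round(stretched).astype(np.uint8)

# Hypothetical patch whose values only span 50 to 150.
narrow = np.array([[50, 70], [130, 150]], dtype=np.uint8)
full_range = stretch_intensity(narrow)
```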

Noise removal was done by cropping, zooming, and rescaling. The image is zoomed in on the region that contains features, and the surrounding area of the eye image, which contains no features, is cropped away, thus removing noise.
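As a rough illustration of the cropping step, the sketch below removes the dark border around the bright region of a grayscale image by keeping the bounding box of pixels above a threshold. The threshold value, the function name `crop_to_retina`, and the synthetic test frame are assumptions made for this example.

```python
import numpy as np

def crop_to_retina(gray_image, threshold=10):
    """Crop away dark borders, keeping the bounding box of pixels brighter than threshold."""
    mask = gray_image > threshold
    if not mask.any():
        return gray_image  # nothing above the threshold, leave the image as is
    rows = np.where(mask.any(axis=1))[0]
    cols = np.where(mask.any(axis=0))[0]
    return gray_image[rows[0]:rows[-1] + 1, cols[0]:cols[-1] + 1]

# Hypothetical 5x6 image: a bright 2x3 region surrounded by a black border.
frame = np.zeros((5, 6), dtype=np.uint8)
frame[1:3, 2:5] = 200
cropped = crop_to_retina(frame)
```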

Imbalanced data is a classification problem in which the number of observations per class is not evenly distributed: there is often a lot of data for one class (referred to as the majority class) and much less for one or more other classes (referred to as the minority classes). As a result, data balancing is the next phase in our model. This step provides the CNN model with an equal split of data for each DR grade, which can help remove bias during the training phase. The training model is given an equal number of images for normal, mild, moderate, severe, and proliferative diabetic retinopathy.
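An equal split per class can be obtained in several ways. The sketch below uses random undersampling, which is an assumption for illustration: the text does not say whether classes were undersampled or oversampled. The function name, file names, and class sizes are hypothetical.

```python
import random
from collections import Counter, defaultdict

def balance_by_undersampling(samples, seed=0):
    """Keep the same number of samples per label by randomly dropping extras.

    samples is a list of (image_id, label) pairs; the per-class count is set
    by the rarest label.
    """
    by_label = defaultdict(list)
    for image_id, label in samples:
        by_label[label].append(image_id)

    target = min(len(ids) for ids in by_label.values())
    rng = random.Random(seed)  # fixed seed so the selection is repeatable
    balanced = []
    for label, ids in by_label.items():
        for image_id in rng.sample(ids, target):
            balanced.append((image_id, label))
    return balanced

# Hypothetical, heavily skewed label list (file names are placeholders).
dataset = ([(f"no_dr_{i}.png", "No DR") for i in range(8)]
           + [(f"mild_{i}.png", "Mild") for i in range(3)]
           + [(f"pdr_{i}.png", "PDR") for i in range(2)])
balanced = balance_by_undersampling(dataset)
counts = Counter(label for _, label in balanced)
```

Undersampling discards data from the majority class; oversampling or augmenting the minority classes is an alternative when the rarest class is very small.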

4. Feature Extraction

Feature extraction is the mathematical and statistical process that extracts quantitative parameters from an image. It captures changes or anomalies that are not visible to the human eye; identifying anomalies is what feature extraction is all about. We decided to take GLCM (texture-based features) out of the equation. The Gray Level Co-occurrence Matrix (GLCM) is built from the probability density function and the frequency of occurrence of similar pixels. The suggested methodology uses the pre-trained ResNet50 CNN model, which has 50 weighted layers, to perform transfer learning. ResNet50 is divided into four stages, each built from blocks of three convolutional layers that are replicated n times. ResNet refers to a residual network in which blocks of convolutional layers are bypassed using shortcut connections. This feature can help the ResNet model learn global features particular to the data. To implement transfer learning, the parameters of the convolutional layers are transferred without alteration, and the fully connected layer (FC1000 layer) is replaced with a shallow classifier with four class labels, reflecting the DR grades.
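For readers unfamiliar with the GLCM mentioned above, the following plain-Python sketch shows how such a matrix can be computed for one pixel offset. It is an illustrative implementation under simple assumptions (a small image already quantized to a few gray levels, a single horizontal offset), not the code used in this work.

```python
def glcm(image, levels, offset=(0, 1)):
    """Count how often gray level i occurs next to gray level j at the given offset.

    image is a 2D list of integers in range(levels); offset is (row_step, col_step).
    Returns a levels x levels matrix of co-occurrence probabilities.
    """
    d_row, d_col = offset
    counts = [[0] * levels for _ in range(levels)]
    n_rows, n_cols = len(image), len(image[0])
    for r in range(n_rows):
        for c in range(n_cols):
            r2, c2 = r + d_row, c + d_col
            if 0 <= r2 < n_rows and 0 <= c2 < n_cols:
                counts[image[r][c]][image[r2][c2]] += 1
    total = sum(sum(row) for row in counts)
    return [[value / total for value in row] for row in counts]

# Hypothetical 3x3 image quantized to 3 gray levels.
tiny_image = [[0, 0, 1],
              [1, 2, 2],
              [0, 1, 1]]
matrix = glcm(tiny_image, levels=3)
```

Texture descriptors such as contrast or homogeneity are then computed as weighted sums over the entries of this matrix.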

5. Classification

We used the following classification stages.

5.1. First-stage classification

To categorize a DR image, we tested two types of shallow classifiers in this stage. The first is a pixel-wise feedforward Neural Network (NN) classifier, which consists of one layer of fully connected weights between the ResNet50 model's feature vector and an output layer whose number of nodes equals the number of DR grades. The second performs a binary linear-kernel Support Vector Machine (SVM) classification based on the selected features. Four class labels are used to train the first-stage classifiers: normal, mild, moderate, and severe/PDR. Because their images look so similar, the DR grades "Severe" and "PDR" were merged into the single label "Severe/PDR" throughout all experiments. To identify the best classifier, the suggested system was evaluated using a conventional performance criterion, namely classification accuracy.
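Classification accuracy is the fraction of images whose predicted grade matches the reference grade. A minimal sketch follows; the label lists are made-up examples for illustration and are not results from this paper.

```python
def classification_accuracy(true_labels, predicted_labels):
    """Fraction of images whose predicted DR grade matches the reference grade."""
    if len(true_labels) != len(predicted_labels):
        raise ValueError("label lists must have the same length")
    if not true_labels:
        raise ValueError("at least one label is required")
    correct = sum(t == p for t, p in zip(true_labels, predicted_labels))
    return correct / len(true_labels)

# Hypothetical labels for five test images (not results from this paper).
reference = ["Normal", "Mild", "Moderate", "Severe/PDR", "Normal"]
predicted = ["Normal", "Mild", "Mild", "Severe/PDR", "Normal"]
accuracy = classification_accuracy(reference, predicted)
```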

5.2. Second-stage classification

Because the two classes (Severe and PDR) are so similar, the original ResNet is unable to distinguish between them among the DR grades. A second-stage ResNet model is therefore introduced to provide higher accuracy. Only the images correctly classified as Severe/PDR in the first stage are fed to this stage, which is trained offline to distinguish only between the two classes (Severe and PDR). This stage's output is either "Severe" or "PDR."
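The control flow of the two-stage scheme can be sketched as below. The two `demo_*` functions are hypothetical stand-ins for the trained models (they simply threshold a fake score), so only the cascade logic in `classify_two_stage` reflects the description above.

```python
def classify_two_stage(image, first_stage, second_stage):
    """Run the four-class model, then refine "Severe/PDR" with the binary model."""
    grade = first_stage(image)
    if grade == "Severe/PDR":
        # Only images flagged as Severe/PDR reach the second model.
        return second_stage(image)
    return grade

# Hypothetical stand-ins for the two trained models: each takes an image
# and returns a label. Here an "image" is just a dict with a fake score.
def demo_first_stage(image):
    score = image["score"]
    if score < 1:
        return "Normal"
    if score < 2:
        return "Mild"
    if score < 3:
        return "Moderate"
    return "Severe/PDR"

def demo_second_stage(image):
    return "PDR" if image["score"] >= 4 else "Severe"

results = [classify_two_stage({"score": s}, demo_first_stage, demo_second_stage)
           for s in (0.5, 2.5, 3.2, 4.7)]
```

Passing the two models in as arguments keeps the cascade independent of how each stage is implemented.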

V. RESULTS

Output – The results are as follows:

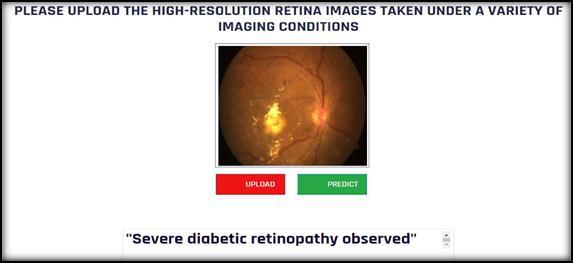

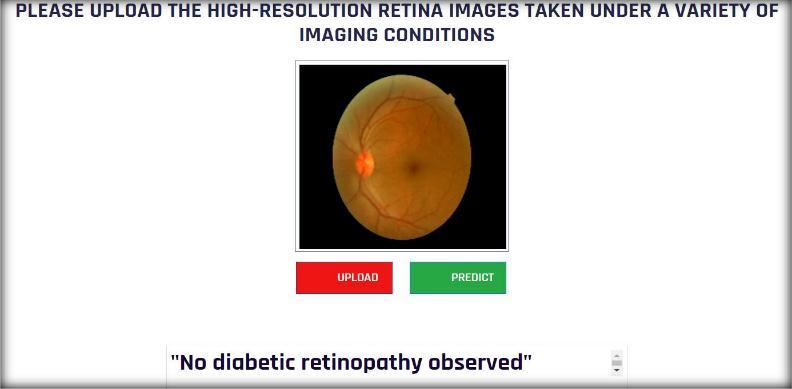

Fig. 6

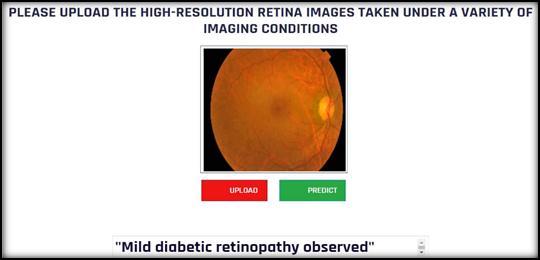

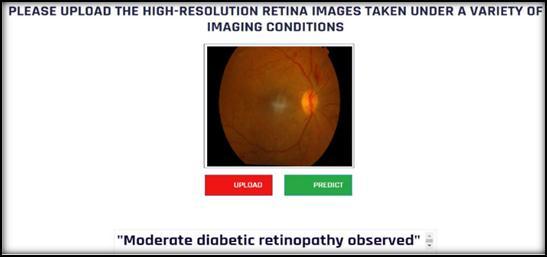

Fig. 5. Developed website using HTML

Fig. 7. Prediction for No DR: "No diabetic retinopathy observed"

Fig. 8. Prediction for Mild DR: "Mild diabetic retinopathy observed"

Fig. 9. Prediction for Moderate DR: "Moderate diabetic retinopathy observed"

Fig. 10. Prediction for Severe DR: "Severe diabetic retinopathy observed"

VI. CONCLUSION

It's worth noting that classifying diabetic retinopathy based on fundoscopic images is not an easy task, even for a highly trained human specialist. The results of our study show that CNNs are beneficial and effective in staging diabetic retinopathy. Although the accuracy of the ResNet50 predictive models is adequate given the small amount of training data, there is still much room for improvement in the future. This publication is a first step in laying the groundwork for additional research into machine-learning-based classifiers for diabetic retinopathy staging. We hope that our efforts will result in a valuable software-based tool for ophthalmologists to assess the severity of diabetes mellitus by recognising distinct stages of diabetic retinopathy, which will aid in the proper management of diabetic retinopathy prognosis. This project takes a fundus image as input and processes it to detect diabetic retinopathy. The project can run on any platform with Python (Anaconda) installed and can handle a stack of fundus images, given one at a time. The result stage diagnoses the type of damage caused to the retina.

VII. ACKNOWLEDGEMENT

The authors sincerely acknowledge the Department of Electronics and Instrumentation, BMSCE, and our instructor, Krishna Murthy K.T, for his guidance.



KRISHNA MURTHY K.T, Assistant Professor, Department of Electronics and Instrumentation, BMSCE