International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 09 Issue: 11 | Nov 2022 www.irjet.net p-ISSN: 2395-0072

1 Assistant Professor, 2,3,4 Student, Bachelor of Engineering in Information Technology, Department of Information Technology, St. John College of Engineering and Management, Palghar, Maharashtra, India

Abstract: According to the 2011 Census, out of India's total population of 121 crore, approximately 2.68 crore people are disabled (2.21% of the total population). Sign language serves as a means for people with special needs to communicate with others, but it is not a simple task. Researchers have been addressing this communication barrier for years. The goal of this study is to demonstrate the experimental performance of the MobileNets model on the TensorFlow platform when training a Sign Language Recognition model, which can substantially reduce the time and space required to classify sign language gestures, and to develop a portable solution for real-time applications. The MobileNet V2 model was trained for this purpose, and an accuracy of 70% was obtained.

Keywords– Gesture Recognition, Deep Learning (DL), Sign Language Recognition (SLR), TensorFlow, MobileNet V2.

Since the beginning of evolution, humans have kept evolving and adapting to their surroundings, and the senses have developed to a major extent. Unfortunately, some people are born special. They are called special because they lack the ability to use all five of their senses simultaneously. According to the WHO, about 6.3% of people in India, i.e. about 63 million, suffer from auditory loss. Research is still going on in this context. According to Census 2011 statistics, India has 26.8 million people who are differently abled, which is roughly 2.21 percent of the population. Of the total disabled population, 69% reside in rural areas and 31% in urban areas. [1] Specially-abled people face various challenges in health facilities, access to education, and employment, and discrimination and social exclusion top them all. Sign language is commonly used to communicate with deaf people.

Sign language was found to be a helpful means of communication because it uses hand gestures, facial expressions, and mild bodily movements to transmit the message. Understanding and interpreting sign language, and framing a meaningful sentence that conveys the correct message, is both extremely important and challenging. The purpose of this work is to contribute to the field of sign language recognition. Humans have been trying hard to adapt to these sign languages to communicate for a long time. Hand gestures are used to express a word, a letter of the alphabet, or a feeling while communicating.

Sign Language Recognition is a multidisciplinary subject on which research has been ongoing for the past two decades, utilising vision-based and sensor-based approaches. Although sensor-based systems provide data that is immediately usable, it is impossible to wear dedicated hardware devices all of the time. The input for vision-based hand gesture recognition could be a static or dynamic image, with the processed output being either a text description for speech-impaired people or an audio response for vision-impaired people. In recent years, we have seen the involvement of machine learning techniques, with the advent of deep learning techniques contributing as well. A dataset is an essential component of every machine learning program. We cannot train a machine to produce accurate results without a good dataset. We created a dataset of sign language images for our project. The photos were taken with a variety of backgrounds. After collecting all of the photos, they were cropped, converted to RGB channels, and labelled.

The benefit of this is that the image size and other supplementary data are minimised, allowing us to process it with the fewest resources possible.

The Convolutional Neural Network (CNN) is a deep learning method inspired by human neurons. A neural network is a collection of artificial neurons, known as nodes in technical terms. A neuron, in simple terms, is a graphical representation of a numeric value. These neurons are connected using weights (numerical values).

Training refers to the process by which a neural network learns the patterns required for performing a task such as classification or recognition. When a neural network learns, the weights between neurons change, which changes the strength of the connections. A typical neural network is made up of several layers. The first layer is called the input layer, and the last is the output layer. In our case of image recognition, the last layer consists of nodes that each represent a different class. We have trained the model to recognize the alphabets A to Z and the numerals 0 to 9. The value of an output neuron gives the likelihood that the image belongs to the class represented by that node.

Generally, a CNN architecture has four kinds of layers: the convolutional layer, the pooling layer, the ReLU correction layer, and the fully connected layer. The convolutional layer is the CNN's first layer, and it works to detect a variety of features. Images are fed into the convolutional layer, which calculates the convolution of each image with each filter. The filters correspond to the features we are looking for in the images. A feature map is created for each (image, filter) pair. The pooling layer is the next tier. It takes a set of feature maps as input and applies the pooling operation to each of them individually. In simple terms, pooling reduces the image size while preserving critical attributes. The output has the same number of feature maps as the input, but they are smaller. This increases efficiency and helps prevent overfitting. The ReLU correction layer replaces any negative input values with zero; it serves as the activation function. The fully connected layer acts as the final layer. It returns a vector whose size equals the number of classes among which the image must be identified.
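The convolution, ReLU, and pooling steps described above can be illustrated with a minimal pure-Python sketch. It is not the implementation used in this work: the 5x5 toy image, the 2x2 filter, and the function names `conv2d`, `relu`, and `max_pool` are assumptions chosen for illustration, and ReLU is applied before pooling, which is the common ordering.

```python
def conv2d(image, kernel):
    """Slide one filter over one channel (no padding, stride 1) to build a feature map."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + a][j + b] * kernel[a][b]
                for a in range(kh) for b in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def relu(feature_map):
    """Replace every negative value with zero."""
    return [[max(0, value) for value in row] for row in feature_map]


def max_pool(feature_map, size=2):
    """Non-overlapping max pooling; rows/columns that do not fill a window are dropped."""
    out_h = len(feature_map) // size
    out_w = len(feature_map[0]) // size
    return [
        [
            max(feature_map[i * size + a][j * size + b]
                for a in range(size) for b in range(size))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


# Toy single-channel image with a bright vertical stripe (illustrative values only).
image = [[0, 0, 1, 1, 0] for _ in range(5)]
# Toy filter that responds where brightness falls from left to right.
kernel = [[1, -1], [1, -1]]

feature_map = conv2d(image, kernel)    # 4x4 feature map
activated = relu(feature_map)          # negatives replaced by zero
pooled = max_pool(activated, size=2)   # 2x2 map, strongest responses kept
print(pooled)
```

The pooled map is a quarter of the size of the feature map yet still marks where the stripe ends, which is the size reduction with preserved attributes that the text describes.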

MobileNet is a CNN architecture that is both faster and smaller. It makes use of a convolutional layer called the depthwise separable convolution.
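One way to see why depthwise separable convolutions make the model smaller is to count weights. The sketch below compares a standard convolution with its depthwise separable counterpart for a single layer. The layer sizes (3x3 kernel, 32 input channels, 64 output channels) and the function names are assumptions for illustration, and biases and batch-normalisation parameters are ignored.

```python
def standard_conv_params(kernel_size, in_channels, out_channels):
    """Each output channel has its own kernel_size x kernel_size filter per input channel."""
    return kernel_size * kernel_size * in_channels * out_channels


def depthwise_separable_params(kernel_size, in_channels, out_channels):
    """One spatial filter per input channel, then a 1x1 convolution that mixes channels."""
    depthwise = kernel_size * kernel_size * in_channels
    pointwise = in_channels * out_channels
    return depthwise + pointwise


# Hypothetical layer sizes, not taken from the trained model.
std = standard_conv_params(3, 32, 64)
sep = depthwise_separable_params(3, 32, 64)
print(std, sep, round(std / sep, 2))  # 18432 2336 7.89
```

For this assumed layer the separable form needs roughly one eighth of the weights, which is consistent with the smaller parameter counts reported for MobileNet in [2].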

In this section, we examine a few similar systems that have been explored and implemented by other researchers in order to have a better understanding of their methods and strategies.

Smart Glove For Deaf And Dumb Patient [3]: The authors' objective in this paper is to help people by way of a glove-based communication interpreter system. Internally, five flex sensors are attached and fastened to the glove. For each specific movement, the flex sensor generates a proportional change in resistance. An Arduino Uno board is used to process these hand motions. The system is an improved combination of a microcontroller and the LabVIEW software. It compares the input signal to predefined voltage values stored in memory. Based on the result, a speaker is used to produce the appropriate sound.

Digital Text and Speech Synthesizer using Smart Glove for Deaf and Dumb [4]: In 2017, the authors presented a system to increase the accuracy. An accelerometer was also incorporated to measure the orientation of the hand. It was pasted on the palm of the glove to determine the glove's orientation. The output voltage of the accelerometer changed with its orientation relative to the earth. Unlike the previous paper, this model had 5 outputs from the flex sensors and 3 from the accelerometer (the values of the X, Y, and Z axes). An Arduino is the controller used in this project. All of the flex sensor and accelerometer values are converted to gestures, and code is then built for all of them. The hardware also includes a Bluetooth device. The data is transmitted by the Bluetooth module across a wireless channel and received by a Bluetooth receiver in the smartphone. Using the MIT App Inventor software, the authors designed a text-to-speech program, according to the user's needs, that receives all the data and converts it to text or the corresponding speech. The Android application proved to be efficient in use, but the weight of the gloves remained a drawback.

Sign Language Recognition [5]: In 2016, the authors proposed a unique approach to assist persons with vocal and hearing difficulties in communicating. They discuss a new method for recognizing sign language and translating speech into signs. Using skin colour segmentation, the system was capable of retrieving sign images from video sequences with minimally cluttered, dynamic backgrounds. It can tell the difference between static and dynamic gestures and extract the appropriate feature vector. Support Vector Machines are used to classify them. Experiments showed satisfactory sign segmentation against a variety of backgrounds, as well as fairly good accuracy in gesture and speech recognition.

Real-Time Recognition of Indian Sign Language [6]: In this work, the authors designed a system for identifying Indian Sign Language (ISL) gestures. The suggested method uses OpenCV's skin segmentation function to locate and track the Region of Interest (ROI). To train on and predict hand gestures, the fuzzy c-means clustering algorithm is used. According to the authors, the proposed system can recognize signs in real time, making it particularly useful for hearing- and speech-challenged individuals to communicate with hearing people.

MobileNets for Flower Classification using TensorFlow [7]: In this paper, the authors experimented with the flower classification problem using Google's MobileNet model architecture. They demonstrated a method for creating a MobileNets application that is smaller and faster. The experimental results show that using the MobileNets model on the TensorFlow platform to retrain on the flower category datasets significantly reduced the time and space required for flower classification, but it compromised marginally on accuracy when compared to Google's Inception V3 model.

Deep Learning for Sign Language Recognition on Custom Processed Static Gesture Images [8]: This paper presents the results of retraining and testing a sign language gestures dataset on a convolutional neural network model using Inception v3. The model consists of multiple convolution filter inputs that are processed on the same input. The validation accuracy attained was better than 90%. The paper also describes multiple earlier attempts at detecting sign language in images using machine learning and depth data.

Gesture Recognition in Indian Sign Language Using Image Processing and Deep Learning [9]: A Microsoft Kinect RGB-D camera was used to obtain the dataset. In this study, the authors proposed a real-time hand gesture recognition system based on the data acquired. They used computer vision techniques such as 3D construction to map between depth and RGB pixels. Once a one-to-one mapping was achieved, the hand gestures were separated from the noise. Convolutional Neural Networks (CNNs) were trained on the 36 static gestures corresponding to Indian Sign Language (ISL) alphabets and digits. Using 45,000 RGB images and 45,000 depth images, the model obtained a training accuracy of 98.81 percent. Training on 1080 videos resulted in an accuracy of 99.08 percent. The model also performed accurately in real time.

Indian Sign Language Recognition [10]: This study outlines a framework for a human-computer interface that can recognize Indian sign language gestures. The paper also suggests using neural networks for recognition. Furthermore, it advocates that the number of fingertips and their distance from the hand's centroid be employed in conjunction with PCA for more robust and efficient results.

Signet: Indian Sign Language Recognition System based on Deep Learning [11]: In this paper, the authors proposed a deep learning-based, signer-independent model. Its purpose was to develop a recognition system for the static Indian alphabet. The paper also reviewed the current sign language recognition techniques and implemented a CNN architecture operating on the binary silhouette of the signer's hand region. The authors also go over the dataset in great depth, covering the CNN training and testing phases. The proposed method achieved a success rate of 98.64 percent, which was higher than the majority of already available methods.

Deep Learning for Static Sign Language Recognition [12]: This system uses explicit skin-colour space thresholding, a skin-colour modelling technique. The skin-colour range is predefined and is used to extract hand pixels from non-hand (background) pixels. The images were fed into a Convolutional Neural Network (CNN) model for image classification. Keras was used to train on the images. Provided with proper lighting and a uniform background, the system obtained an average testing accuracy of 93.67 percent, with 90.04 percent for ASL alphabet recognition, 93.44 percent for number recognition, and 97.52 percent for static word recognition, outperforming a number of other related studies.

Deep Learning-Based Approach for Sign Language Gesture Recognition With Efficient Hand Gesture Representation [13]: The authors' proposed approach combines local hand shape attributes with global body configuration variables to represent the hand gesture, which could be especially useful for complex, structured sign language hand gestures. In this study, the OpenPose framework was employed to detect and estimate hand regions. A robust face detection method and the body parts ratios theory were used to estimate and normalise the gesture space. Two 3DCNN instances were used to learn the fine-grained properties of the hand shape and the coarse-grained features of the overall body configuration. To aggregate and globalise the extracted local features, MLP and autoencoders were used, along with the softmax function.

Common Garbage Classification Using MobileNet [14].

Sign Language Recognition System using TensorFlow Object Detection API [15]: The authors of this paper investigated a real-time method for detecting sign language. Images were captured using a webcam (with Python and OpenCV) for data acquisition, lowering the cost. The developed system has an average confidence of 85.45 percent.

Sign Language Recognition system [16]: Here, the system consists of a webcam that captures a real-time image of the hand, a system that processes and recognizes the sign, and a speaker that outputs sounds.

MobileNets for TensorFlow are a series of mobile-first computer vision models that are designed to maximise accuracy while taking into account the limited resources available for an on-device or embedded application [19].

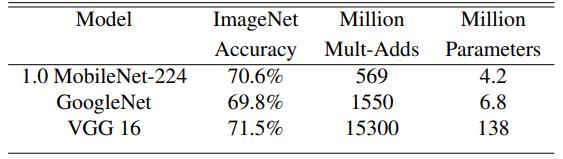

Figure 1. MobileNet parameter and accuracy comparison against GoogleNet and VGG16 [2]

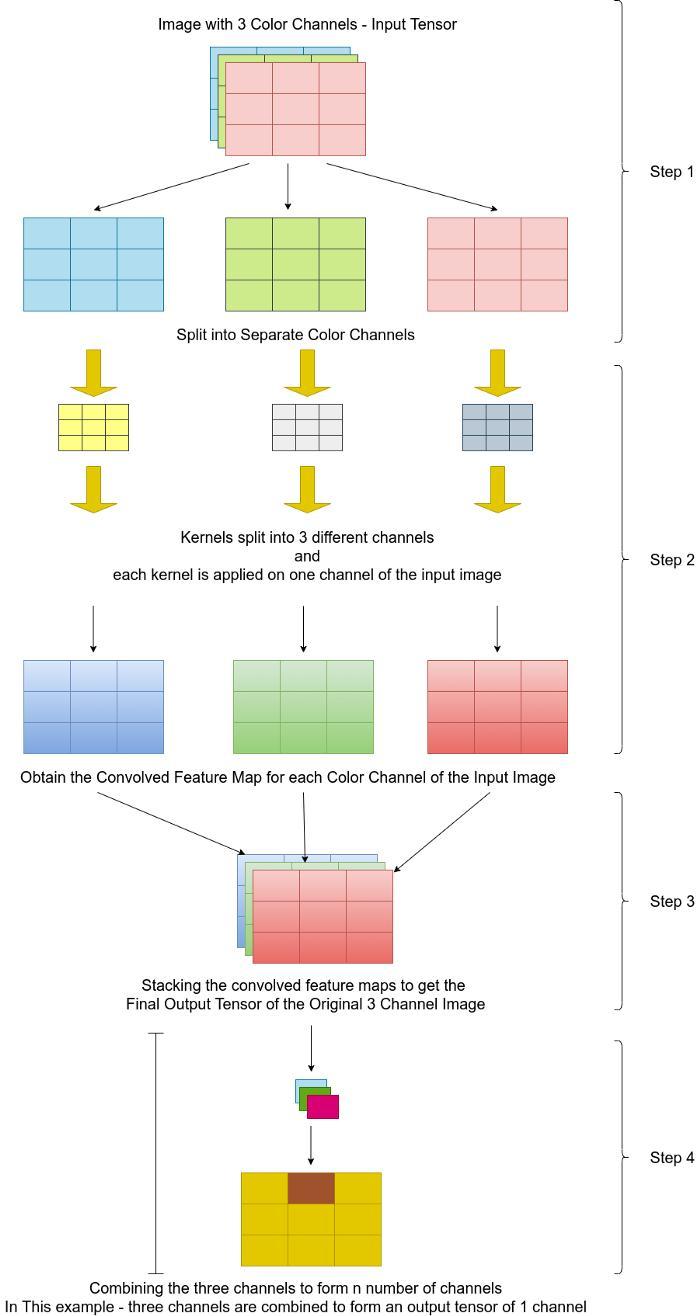

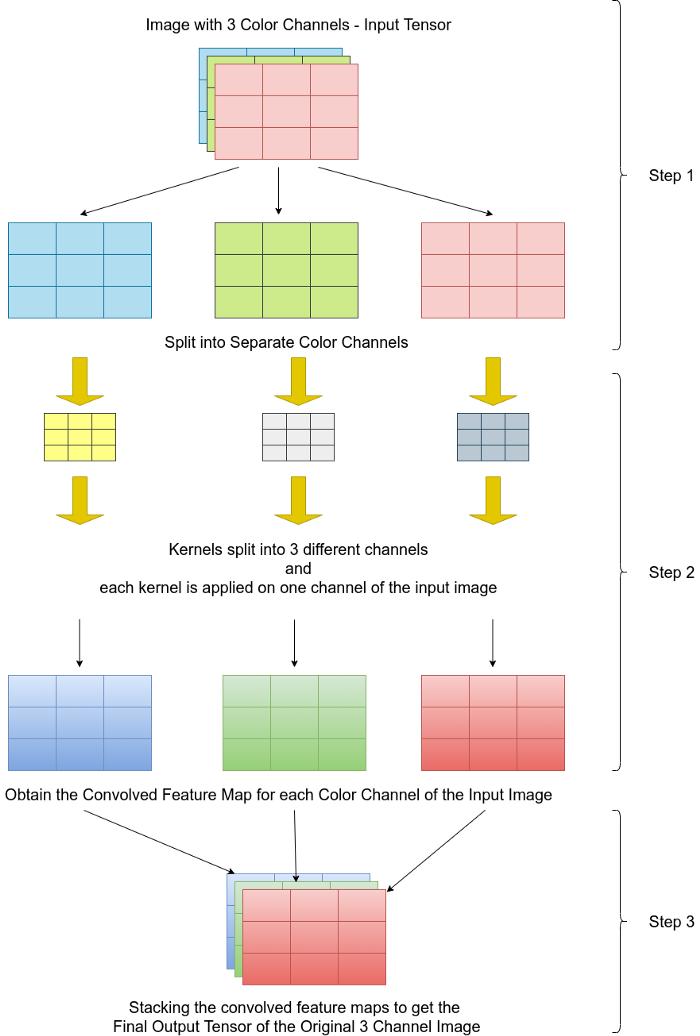

As seen in the above table, MobileNet gives results fairly similar to those of the GoogleNet and VGG16 models, but the number of parameters it requires is significantly smaller, which makes it ideal to use. The main difference between the 2D convolutions in a CNN and depthwise convolutions is that 2D convolutions are performed over multiple channels at once, whereas in depthwise convolutions each channel is kept separate. [2]
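The difference between the two kinds of convolution can be made concrete with a small pure-Python sketch. The depthwise step filters each channel on its own, and a following 1x1 (pointwise) step mixes the channels, as described in [2]. The toy channels, kernels, weights, and function names below are assumptions for illustration, not values from the trained model.

```python
def conv2d_single(channel, kernel):
    """Valid, stride-1 convolution of ONE channel with ONE kernel."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(channel) - kh + 1
    out_w = len(channel[0]) - kw + 1
    return [
        [
            sum(channel[i + a][j + b] * kernel[a][b]
                for a in range(kh) for b in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def depthwise_conv(channels, kernels):
    """Apply one kernel per channel; channels are never mixed in this step."""
    return [conv2d_single(ch, k) for ch, k in zip(channels, kernels)]


def pointwise_conv(channels, weights):
    """1x1 convolution: each output channel is a weighted sum of the input channels."""
    height, width = len(channels[0]), len(channels[0][0])
    return [
        [
            [sum(w * ch[i][j] for w, ch in zip(row, channels)) for j in range(width)]
            for i in range(height)
        ]
        for row in weights
    ]


# Two toy 3x3 input channels and one 2x2 kernel per channel (illustrative values).
channels = [
    [[1, 2, 3], [4, 5, 6], [7, 8, 9]],
    [[1, 1, 1], [1, 1, 1], [1, 1, 1]],
]
kernels = [
    [[1, 0], [0, 1]],
    [[1, 1], [1, 1]],
]
# Three output channels, each defined by one weight per input channel.
weights = [[1, 1], [1, -1], [0, 2]]

depthwise_out = depthwise_conv(channels, kernels)
separable_out = pointwise_conv(depthwise_out, weights)
print(depthwise_out)
print(separable_out)
```

A standard 2D convolution would instead sum over all input channels inside every filter, which is what makes it more expensive for the same number of output channels.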

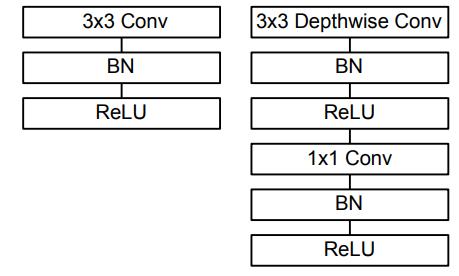

The first layer of MobileNet is a full convolution, while all following layers are depthwise separable convolutional layers. All the layers are followed by batch normalisation and ReLU activations. The final classification layer has a softmax activation.

In terms of our project's scope, our major goal is to create a model that can recognize the numerous signs that define letters, numerals, and gestures using MobileNets. Using the object detection technique, the trained model can recognize the signs in real time. The idea behind this project is to develop an application that is handy and can detect hand gestures (signs) and recognize what the specially-abled person is trying to say, with the aim of easing the effort required for specially-abled people to communicate with others.

Figure 2. Diagrammatic explanation of Depthwise Separable Convolutions [2]

The four phases of the classification model are image preprocessing, training, verification, and testing.

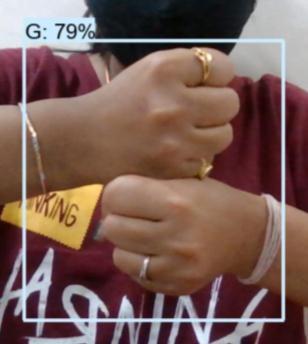

For our project, we built our own dataset of around 15,000 images covering the 26 alphabets (A to Z) and the numerals (0 to 9). After collecting all the images, we labelled them using LabelImg [18]. The collected images are then divided into two categories: Train and Test. The Train dataset is used to train the model, and the verification phase uses the Test dataset to verify the accuracy. The model is then tested in real time.
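The division into Train and Test sets could be sketched as follows. The source does not state the split ratio, so the 80/20 ratio, the fixed random seed, the per-class (stratified) splitting, and the file names are all assumptions made for illustration.

```python
import random
import string

# 26 letters plus 10 digits give the 36 classes described in the text.
LABELS = list(string.ascii_uppercase) + [str(d) for d in range(10)]


def split_dataset(samples, test_fraction=0.2, seed=42):
    """Shuffle and split each class separately so every class appears in both subsets.

    `samples` is a list of (image_path, label) pairs; each class is assumed
    to contain at least two images.
    """
    by_label = {}
    for path, label in samples:
        by_label.setdefault(label, []).append((path, label))

    rng = random.Random(seed)  # fixed seed keeps the split reproducible
    train, test = [], []
    for label in sorted(by_label):
        items = by_label[label]
        rng.shuffle(items)
        n_test = max(1, round(len(items) * test_fraction))
        test.extend(items[:n_test])
        train.extend(items[n_test:])
    return train, test


# Hypothetical file names: 10 images per class, only to demonstrate the split.
samples = [(f"{label}_{i}.jpg", label) for label in LABELS for i in range(10)]
train, test = split_dataset(samples)
print(len(train), len(test))  # 288 72
```

Splitting per class rather than over the whole pool is a design choice that guards against a sign being absent from the Test set when some classes have fewer images than others.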

The dataset was generated for Indian Sign Language, with signs covering the English alphabet and digits. A dataset of approximately 15,000 photos has been developed.

A Windows 10 PC with an Intel i5 7th-generation 2.70 GHz processor, 8 GB of RAM, and a webcam (HP TrueVision HD camera, 0.31 MP, 640x480 resolution) was used for the test. The development environment comprises Python (version 3.8.9), Jupyter Notebook, OpenCV, and the TensorFlow Object Detection API.

The created system can detect Indian Sign Language alphabets and digits in real time. The TensorFlow Object Detection API was used to build the system. The pre-trained model, SSD MobileNet v2 640x640, was taken from the TensorFlow model zoo and trained using transfer learning on the newly created dataset. The dataset contains about 15,000 photos in all, covering every letter of the alphabet and every digit.
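When fine-tuning a model with the TensorFlow Object Detection API, the class names are usually supplied through a label map in protobuf text format, with ids starting at 1. The sketch below only generates such text for the 36 classes. The class ordering and the function name `build_label_map` are assumptions, and the source does not show the label map that was actually used.

```python
import string


def build_label_map(class_names):
    """Return label-map text with one `item` entry per class, ids starting at 1."""
    entries = [
        "item {\n  id: %d\n  name: '%s'\n}" % (index, name)
        for index, name in enumerate(class_names, start=1)
    ]
    return "\n\n".join(entries) + "\n"


# Assumed class order: letters A to Z followed by digits 0 to 9.
class_names = list(string.ascii_uppercase) + [str(d) for d in range(10)]
label_map = build_label_map(class_names)

# Show the first entry of the generated text.
print(label_map.split("\n\n")[0])
```

The generated text would typically be saved to a `.pbtxt` file and referenced from the training configuration; the names used in the label map must match the labels assigned in LabelImg.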

The experimental setup for the Sign Language Recognition model using MobileNet on the TensorFlow framework is covered in this section. The classification model is divided into four phases.

The overall accuracy of the model turned out to be 70%.
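The source reports only the final figure and does not state how accuracy was computed. Assuming it is the fraction of test images whose predicted class matches the labelled class, it could be calculated as in the sketch below. The labels shown are toy values for illustration and are not results from this work.

```python
def accuracy(true_labels, predicted_labels):
    """Fraction of samples whose predicted label equals the true label."""
    if len(true_labels) != len(predicted_labels):
        raise ValueError("label lists must have the same length")
    if not true_labels:
        raise ValueError("at least one sample is required")
    correct = sum(t == p for t, p in zip(true_labels, predicted_labels))
    return correct / len(true_labels)


# Toy example only: three of four predictions match.
true_labels = ["A", "B", "7", "Z"]
predicted_labels = ["A", "B", "1", "Z"]
print(accuracy(true_labels, predicted_labels))  # 0.75
```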

A technique for recognizing Indian Sign Language is presented in this work. The TensorFlow MobileNet V2 model was used to recognize static signs successfully. The model can be improved further by adding more signs and by increasing the variability of the images.

1. Persons with Disabilities (Divyangjan) in India. New Delhi, India: Ministry of Statistics and Programme Implementation, Government of India, 2021.

2. Andrew G. Howard, Menglong Zhu, Bo Chen, Dmitry Kalenichenko, Weijun Wang, Tobias Weyand, Marco Andreetto, Hartwig Adam, “MobileNets: Efficient Convolutional Neural Networks for Mobile Vision Applications,” Computer Vision and Pattern Recognition, Cornell University.

3. P. B. Patel, Suchita Dhuppe, Vaishnavi Dhaye, “Smart Glove For Deaf And Dumb Patient,” International Journal of Advance Research in Science and Engineering, Volume No. 07, Special Issue No. 03, April 2018.

4. Khushboo Kashyap, Amit Saxena, Harmeet Kaur, Abhishek Tandon, Keshav Mehrotra, “Digital Text and Speech Synthesizer using Smart Glove for Deaf and Dumb,” International Journal of Advanced Research in Electronics and Communication Engineering (IJARECE), Volume 6, Issue 5, May 2017.

5. Anup Kumar, Karun Thankachan and Mevin M. Dominic, “Sign Language Recognition,” 3rd Int'l Conf. on Recent Advances in Information Technology (RAIT-2016).

6. Muthu Mariappan H, Dr Gomathi V, “Real-Time Recognition of Indian Sign Language,” Second International Conference on Computational Intelligence in Data Science (ICCIDS-2019).

7. Nitin R. Gavai, Yashashree A. Jakhade, Seema A. Tribhuvan, Rashmi Bhattad, “MobileNets for Flower Classification using TensorFlow,” 2017 International Conference on Big Data, IoT and Data Science (BID), Vishwakarma Institute of Technology, Pune, Dec 20-22, 2017.

8. Aditya Das, Shantanu Gawde, Khyati Suratwala and Dr. Dhananjay Kalbande, “Sign Language Recognition Using Deep Learning on Custom Processed Static Gesture Images,” Department of Computer Engineering, Sardar Patel Institute of Technology, Mumbai, India.

9. Neel Kamal Bhagat, Vishnusai Y, Rathna G N, “Indian Sign Language Gesture Recognition using Image Processing and Deep Learning,” Department of Electrical Engineering, Indian Institute of Science, Bengaluru, Karnataka, ©2019 IEEE.

10. Divya Deora, Nikesh Bajaj, “Indian Sign Language Recognition,” 2012 1st International Conference on Emerging Technology Trends in Electronics, Communication and Networking, ©2012 IEEE.

11. Sruthi C. J. and Lijiya A, “Signet: A Deep Learning based Indian Sign Language Recognition System,” International Conference on Communication and Signal Processing, April 4-6, 2019, India.

12. Lean Karlo S. Tolentino, Ronnie O. Serfa Juan, August C. Thio-ac, Maria Abigail B. Pamahoy, Joni Rose R. Forteza, and Xavier Jet O. Garcia, “Static Sign Language Recognition Using Deep Learning,” International Journal of Machine Learning and Computing, Vol. 9, No. 6, December 2019.

13. Muneer Al-Hammadi, Ghulam Muhammad, Wadood Abdul, Mansour Alsulaiman, Mohammed A. Bencherif, Tareq S. Alrayes, Hassan Mathkour, and Mohamed Amine Mekhtiche, “Deep Learning-Based Approach for Sign Language Gesture Recognition With Efficient Hand Gesture Representation,” IEEE Access, version of November 2, 2020.

14. Stephenn L. Rabano, Melvin K. Cabatuan, Edwin Sybingco, Elmer P. Dadios, Edwin J. Calilung, “Common Garbage Classification Using MobileNet,” IEEE Xplore, 14 March 2019.

15. Sharvani Srivastava, Amisha Gangwar, Richa Mishra, Sudhakar Singh, “Sign Language Recognition System using TensorFlow Object Detection API,” International Conference on Advanced Network Technologies and Intelligent Computing (ANTIC-2021), part of the book series ‘Communications in Computer and Information Science (CCIS)’, Springer.

16. Priyanka C Pankajakshan, Thilagavati B, “Sign Language Recognition system,” IEEE Sponsored 2nd International Conference on Innovations in Information Embedded and Communication Systems, ICIIECS’15.

17. Mohammed Safeel, Tejas Sukumar, Shashank K S, Arman M D, Shashidhar R, Puneeth S B, “Sign Language Recognition Techniques- A Review,” 2020 IEEE International Conference for Innovation in Technology (INOCON), Bengaluru, India, Nov 6-8, 2020.

18. LabelImg - A tool used for Image Annotation of dataset. https://github.com/tzutalin/labelImg.git

19. Google, “MobileNets: Open-Source Models for Efficient On-Device Vision,” Research Blog. [Online]. Available: https://research.googleblog.com/2017/06/mobilenets-open-source-models-for.html

20. https://innovate.mygov.in/

21. https://towardsdatascience.com/sign-language-recognition-using-deep-learning-6549268c60bd

22. https://www.tensorflow.org/api_docs/python/tf/keras/applications/mobilenet_v2/MobileNetV2