International Research Journal of Engineering and Technology (IRJET) e-ISSN:2395-0056

Volume: 09 Issue: 11 | Nov 2022 www.irjet.net p-ISSN:2395-0072

Amity University Noida, Amity Road, Sector 125, Noida, Uttar Pradesh 201301

Abstract - Deep learning is a relatively new field of machine learning (ML) research. It is built on artificial neural networks with numerous hidden layers. The deep learning methodology applies high-level model abstractions and transformations to massive databases. Deep learning architectures have recently made major strides in a variety of domains, and these developments have already had a big impact on artificial intelligence. This paper also discusses the advantages of the layer-based hierarchy and nonlinear operations of the deep learning methodology and contrasts them with those of more traditional techniques in widely used applications. Deep learning also has a significant impact on face recognition methods, as demonstrated by Facebook's highly effective DeepFace technology, which enables users to tag photos.

Artificial neural networks are the foundation of deep learning, which is a branch of AI that mimics the human brain in a way similar to how biological neural systems function. In deep learning, we do not have to explicitly program everything. Deep learning is not a brand-new concept; it has been around for several decades. It is popular these days because, in the past, we did not have as much processing power or data. Deep learning and AI entered the scene as processing power increased tremendously over the last 20 years.

Deep learning is a particular kind of machine learning that achieves great power and flexibility by learning to represent the world as a nested hierarchy of concepts, with each concept defined in relation to simpler concepts, and more abstract representations computed in terms of less abstract ones.

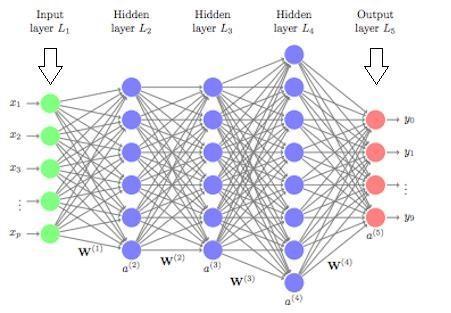

The human brain contains approximately 100 billion neurons. The picture above shows an individual neuron, and each neuron is connected to thousands of its neighbors. The question is how we recreate these neurons in a computer. To do so, we create an artificial structure called an artificial neural network, made up of nodes, or neurons. Some neurons hold the input values, some hold the output values, and in between there may be many interconnected neurons in the hidden layers. [1]
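The structure described above can be sketched in a few lines of Python. This is only a minimal illustration under assumed choices: the layer sizes (3 inputs, 4 hidden neurons, 2 outputs), the sigmoid activation, the random weights, and the helper names `make_layer` and `forward` are all hypothetical and do not come from any particular library.

```python
import math
import random


def sigmoid(x):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def make_layer(n_inputs, n_neurons, rng):
    """Create one fully connected layer as a (weights, biases) pair."""
    weights = [[rng.uniform(-1.0, 1.0) for _ in range(n_inputs)]
               for _ in range(n_neurons)]
    biases = [0.0] * n_neurons
    return weights, biases


def forward(layers, inputs):
    """Pass the input values through every layer in turn."""
    activations = inputs
    for weights, biases in layers:
        activations = [
            sigmoid(sum(w * a for w, a in zip(neuron_weights, activations)) + b)
            for neuron_weights, b in zip(weights, biases)
        ]
    return activations


rng = random.Random(0)  # fixed seed so the sketch is repeatable
# Assumed layout: 3 input values -> 4 hidden neurons -> 2 output neurons.
network = [make_layer(3, 4, rng), make_layer(4, 2, rng)]
outputs = forward(network, [0.5, -0.2, 0.1])
print(outputs)
```

Each hidden neuron computes a weighted sum of all inputs and applies a nonlinearity; the output neurons do the same with the hidden activations. Here the weights are random, whereas a real network would learn them from data.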

Machine learning enables computers to learn from raw data or examples, attempting to extract patterns from the data and make better decisions on their own. Examples of machine learning algorithms include logistic regression, SVM, and so on.

The performance of these machine learning algorithms depends heavily on the representation of the data they are given. Each piece of information included in the representation is known as a feature, and these algorithms learn how to use the features to extract patterns or gain knowledge.

Nevertheless, it can sometimes be challenging to know which features should be extracted. For instance, if we were trying to identify cars in a photograph, we might like to use the presence of a wheel as a feature. However, it is difficult to describe what a wheel looks like in terms of pixel values. One way to solve this problem is to use machine learning to learn the representation itself, rather than relying only on hand-designed features. This approach is called representation learning. Again, if the algorithm learns on its own and with very little human

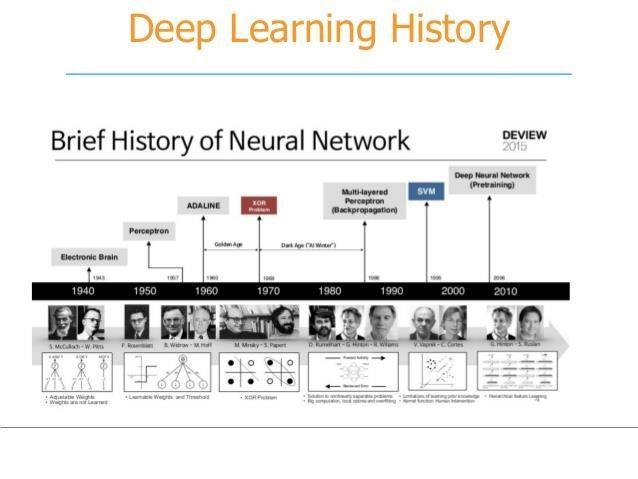

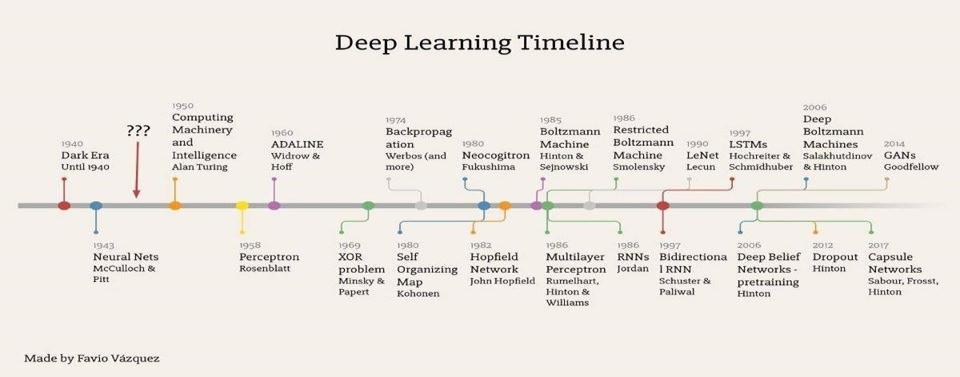

The history of deep learning goes back to 1943, when Warren McCulloch and Walter Pitts created a computer model based on the neural networks of the human brain. They used a combination of algorithms and mathematics, known as threshold logic, to mimic the thought process. Deep learning has evolved considerably since then, and there have been two major breakthrough moments. [6] One of these came in 1960, when Henry J. Kelley developed the basics of a continuous backpropagation model. Later, in 1962, Stuart Dreyfus found a simpler version based on the chain rule. One of the earliest efforts to develop deep learning algorithms came in 1965, when Alexey Grigoryevich Ivakhnenko and Valentin Grigor'evich Lapa used models with polynomial activation functions, which were analyzed statistically.

The development of deep learning, one of the most important and interesting topics in today's world, also faced various hurdles. A major setback came in the 1970s, when a lack of funding meant that research on artificial intelligence and deep learning had to be restricted. Even under such difficult conditions, some individuals continued the research without financial help.

Convolutional neural networks, which we hear about most often today whenever we talk about deep learning, were first used by Kunihiko Fukushima. [6] He designed neural networks using convolutional layers himself. In 1979 he created a multilayered, hierarchical design called the neocognitron, which helped computers learn visual patterns. The networks resembled modern versions and were trained through recurring activation across multiple layers.

Later, the world was introduced to FORTRAN code for backpropagation. It was developed in the 1970s and used errors to train deep learning models.

It became popular later, when a thesis written by Seppo Linnainmaa that included the FORTRAN code became available and known. [5] Even though it was developed in 1970, it was not applied to neural networks until 1985.

Yann LeCun was the first to explain backpropagation and provide a practical demonstration of it, in 1989. He combined convolutional networks with backpropagation in order to read handwritten digits.

As the next decade began, artificial intelligence and deep learning did not make much progress. In 1995, Vladimir Vapnik and Corinna Cortes developed the support vector machine, a system for mapping and recognizing similar data. Long short-term memory (LSTM) for recurrent neural networks was developed in 1997 by Juergen Schmidhuber and Sepp Hochreiter.

Going into the next century, the vanishing gradient problem came to light around the year 2000, when it was discovered that "features" (lessons) formed in lower layers were not being learned by the upper layers, since no learning signal reached these layers. This was not a fundamental problem for all neural networks, however; it was restricted to gradient-based learning methods.
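A rough numerical illustration shows why the learning signal fades with depth. The sketch below assumes a chain of sigmoid neurons, one per layer, each with weight 1.0 and a pre-activation of 0.0; these values and the function name `gradient_scale` are illustrative assumptions, not measurements from a real network. During backpropagation the gradient is multiplied by the weight and the activation's derivative at every layer, and the sigmoid derivative is never larger than 0.25.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def sigmoid_derivative(x):
    s = sigmoid(x)
    return s * (1.0 - s)  # peaks at 0.25 when x == 0


def gradient_scale(n_layers, weight=1.0, pre_activation=0.0):
    """Factor by which a gradient shrinks after passing back through n_layers."""
    scale = 1.0
    for _ in range(n_layers):
        scale *= weight * sigmoid_derivative(pre_activation)
    return scale


for depth in (1, 5, 10, 20):
    print(depth, gradient_scale(depth))
```

Under these assumptions the gradient shrinks by a factor of four per layer, so after ten layers less than one millionth of the original signal remains.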

In 2001, a research report compiled by the META Group (now known as Gartner) described the challenges and opportunities of three-dimensional data growth. This report marked the onset of Big Data and described the increasing volume and speed of data, as well as the increasing range of data sources and types.

Fig -1: Timeline

The question that now arises is why deep learning matters and why we need it. In a word: accuracy. Deep learning has achieved higher recognition accuracy than ever before. This has numerous benefits: it helps meet customer expectations, and it is important for the operation of self-learning devices such as driverless cars.

Recent research shows, and scientists believe, that there are certain tasks at which deep learning even outperforms humans, chiefly image recognition.

Deep learning was first properly introduced in the 1980s and has been evolving ever since. There are two main factors that make it practical today:

1. One of the major needs of deep learning is labelled data. For example, millions of images and hours of video are required for the development of driverless cars.

2. Deep learning requires substantial computing power. High-performance GPUs have a parallel architecture that is efficient for deep learning. When combined with cloud computing, this enables development teams to reduce training time for a deep learning network from weeks to hours or less.

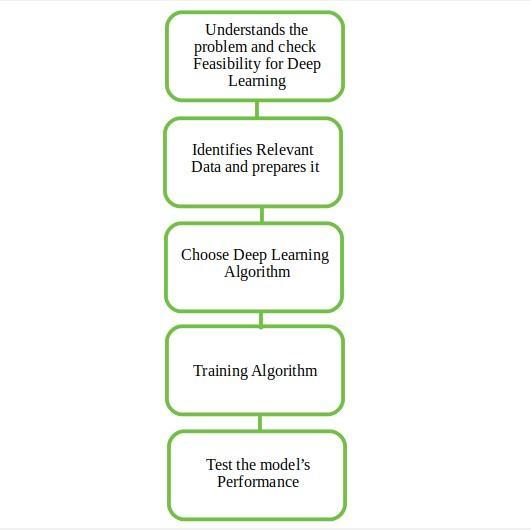

What do you do when you learn about a problem? First, you identify the problem and look for a solution; the feasibility of applying deep learning should also be checked. Second, identify the relevant data that corresponds to the actual problem and prepare it accordingly. Third, pick the appropriate deep learning algorithm. Fourth, use the algorithm to train on the dataset. Fifth, carry out final testing on the dataset.
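The five steps can be walked through on a toy problem. Everything in this sketch is an assumption made for illustration: the task (decide whether a number is greater than 0.5), the synthetic data, the 160/40 train/test split, and above all the model, where a single sigmoid neuron stands in for a deep network so that the example stays short.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


# Steps 1-2: a toy problem and its prepared data (hypothetical):
# the label is 1 when x > 0.5, otherwise 0.
rng = random.Random(0)
samples = [rng.random() for _ in range(200)]
dataset = [(x, 1 if x > 0.5 else 0) for x in samples]
train_set, test_set = dataset[:160], dataset[160:]

# Step 3: pick a model. A single sigmoid neuron stands in for a deep network.
weight, bias = 0.0, 0.0

# Step 4: train with batch gradient descent, using the training set only.
learning_rate = 1.0
for _ in range(2000):
    grad_w = grad_b = 0.0
    for x, y in train_set:
        error = sigmoid(weight * x + bias) - y
        grad_w += error * x
        grad_b += error
    weight -= learning_rate * grad_w / len(train_set)
    bias -= learning_rate * grad_b / len(train_set)

# Step 5: final testing on data the model has not seen during training.
correct = sum((sigmoid(weight * x + bias) > 0.5) == (y == 1) for x, y in test_set)
accuracy = correct / len(test_set)
print(f"test accuracy: {accuracy:.2f}")
```

Keeping the test set separate from the training set is the important design choice here: the final accuracy is only meaningful if it is measured on examples the model never trained on.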

Most deep learning methods use neural network architectures, which is why deep learning models are often referred to as deep neural networks.

The term “deep” usually refers to the number of hidden layers in the neural network. [1] Traditional neural networks contain only 2-3 hidden layers, while deep networks can have as many as 150.

Deep learning models are trained by using large sets of labelled data and neural network architectures that learn features automatically from the data without the need for manual feature extraction.

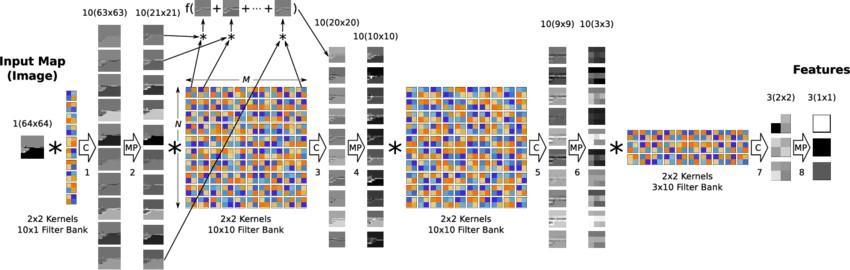

One of the most popular types of deep neural networks is the convolutional neural network (CNN or ConvNet). A CNN convolves learned features with input data and uses 2D convolutional layers, making this architecture well suited to processing 2D data, such as images.

CNNs eliminate the need for manual feature extraction, so you do not need to identify the features used to classify images. [1] The CNN works by extracting features directly from images. The relevant features are not pre-trained; they are learned while the network trains on a set of images. This automated feature extraction makes deep learning models highly accurate for computer vision tasks such as object classification.

CNNs learn to detect different features of an image using tens or hundreds of hidden layers. Every hidden layer increases the complexity of the learned image features. For example, the first hidden layer could learn how to detect edges, and the last learns how to detect more complex shapes specifically catered to the shape of the object we are trying to recognize.
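To make the edge-detection example concrete, the sketch below slides a 3x3 filter over a small image, which is the core operation of a 2D convolutional layer. The tiny image and the hand-written vertical-edge kernel are assumptions chosen for illustration; in a real CNN the kernel values are learned during training rather than set by hand.

```python
def convolve2d(image, kernel):
    """Slide the kernel over the image with no padding (cross-correlation, as CNN layers compute it)."""
    kernel_h, kernel_w = len(kernel), len(kernel[0])
    out_h = len(image) - kernel_h + 1
    out_w = len(image[0]) - kernel_w + 1
    return [
        [
            sum(
                image[i + di][j + dj] * kernel[di][dj]
                for di in range(kernel_h)
                for dj in range(kernel_w)
            )
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


# A 4x6 image: dark on the left (0), bright on the right (1).
image = [
    [0, 0, 0, 1, 1, 1],
    [0, 0, 0, 1, 1, 1],
    [0, 0, 0, 1, 1, 1],
    [0, 0, 0, 1, 1, 1],
]

# Hand-set filter that responds to a dark-to-bright change from left to right.
vertical_edge_kernel = [
    [-1, 0, 1],
    [-1, 0, 1],
    [-1, 0, 1],
]

feature_map = convolve2d(image, vertical_edge_kernel)
print(feature_map)  # [[0, 3, 3, 0], [0, 3, 3, 0]]
```

The feature map is zero over the flat regions and non-zero only where the window straddles the boundary between dark and bright pixels, which is what "detecting an edge" means in practice.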

It is difficult to keep up with everything happening in the world of artificial intelligence. With so many papers being released, it can be difficult to distinguish what exists at present from what is a future prospect.

Designing neural network architectures requires more art than science. Most people just grab a popular architecture off the shelf. How were these cutting-edge architectures discovered? The answer is simple: trial and error using powerful GPU computers.

The decision of when to use max-pooling, which convolution filter size to use, and where to add dropout layers is largely guesswork.

Training deep learning networks is a good way to become acquainted with memory limits. A typical laptop has 16GB of RAM. The latest iPhone has around 4GB of RAM. The VGG16 image classification network, with around 138 million parameters, is more than 500MB in size. Because these networks are so large, it is very difficult to create mobile AI apps and apps that use multiple networks. Having the networks loaded into RAM enables much faster computation.
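The size of a network in memory follows from simple arithmetic. The sketch below assumes that each parameter is stored as a 32-bit (4-byte) floating-point number and that VGG16 has roughly 138 million parameters; the function name `model_size_mb` is a hypothetical helper, and the 4GB figure is the phone memory quoted in the text.

```python
BYTES_PER_FLOAT32 = 4  # assumption: weights stored as 32-bit floats


def model_size_mb(n_parameters, bytes_per_parameter=BYTES_PER_FLOAT32):
    """Approximate storage for the weights alone, in megabytes (10**6 bytes)."""
    return n_parameters * bytes_per_parameter / 1_000_000


vgg16_parameters = 138_000_000  # approximate parameter count
vgg16_mb = model_size_mb(vgg16_parameters)
phone_ram_mb = 4_000  # around 4GB of RAM

print(vgg16_mb)                 # 552.0
print(vgg16_mb / phone_ram_mb)  # share of phone RAM used by one network
```

Under these assumptions a single network takes up more than a tenth of the phone's memory before any activations or other apps are counted, which is why loading several networks at once quickly becomes impractical.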

Research on compressing these networks, such as Deep Compression, works much like JPEG image compression, using quantization and Huffman encoding. Deep Compression can reduce VGG-16 from 552MB to 11.3MB with no loss of accuracy.
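The idea behind quantization can be sketched as follows. This is plain uniform quantization, shown only to illustrate the principle; it is not the trained, clustering-based scheme that Deep Compression itself uses, and the example weights and the function names `quantize` and `dequantize` are made up for the illustration. Each 32-bit weight is replaced by a 4-bit code that indexes one of 16 evenly spaced values.

```python
def quantize(weights, n_bits):
    """Map each weight to the index of the nearest of 2**n_bits evenly spaced levels."""
    levels = 2 ** n_bits
    lowest, highest = min(weights), max(weights)
    step = (highest - lowest) / (levels - 1)
    if step == 0:  # all weights identical: a single level is enough
        return [0] * len(weights), lowest, step
    codes = [round((w - lowest) / step) for w in weights]
    return codes, lowest, step


def dequantize(codes, lowest, step):
    """Recover approximate weights from the integer codes."""
    return [lowest + code * step for code in codes]


weights = [-0.82, -0.31, 0.05, 0.12, 0.47, 0.90]  # made-up example values
codes, lowest, step = quantize(weights, n_bits=4)
restored = dequantize(codes, lowest, step)
max_error = max(abs(w - r) for w, r in zip(weights, restored))

print(codes)
print(max_error)
print(32 / 4)  # storage reduction from 32-bit floats to 4-bit codes
```

Storing 4-bit codes instead of 32-bit floats cuts storage by a factor of eight at the cost of a small rounding error per weight. The much larger reduction quoted above (552MB to 11.3MB, roughly 49 times smaller) comes from combining quantization with further steps such as Huffman encoding of the codes.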

A major challenge while building deep learning models is the dataset. Deep learning models require a lot of data. GANs are a promising generative modelling framework that can generate new data points from a dataset. This can be used to create very large datasets from small ones.

1. Automatic Text Generation – A model learns from a corpus of text and then generates new text word by word. Eventually the model can learn how to spell, form sentences, and use punctuation.

2. Healthcare – Deep learning helps in diagnosing many diseases and supports the treatment process with the help of medical imaging [2].

3. Automatic Machine Translation – Words, sentences, or phrases in one language are translated into another language (deep learning is achieving top results in the areas of text and images).

4. Image Recognition – Recognizing and identifying people and objects in images, as well as understanding content and context. [4] This is already being used in the tourism and gaming industries, to name just a few.

5. Predicting Earthquakes – Deep learning teaches a computer to perform the viscoelastic computations that are used in predicting earthquakes. [6]

We are on the verge of creating, or may already have created, the most intriguing technology in the history of mankind. Artificial intelligence, and deep learning in particular, is thus the most interesting topic in the field of science and technology. According to recent news reports, certain robots appeared to be speaking an altogether different language and were thought to have malfunctioned. However, once engineers monitored the robots, they concluded that the machines had developed a language of their own and were communicating effectively. The robots were later shut down.

There are therefore hundreds of possibilities in the field of artificial intelligence, and especially in deep learning. As technology advances, deep learning can change our lives completely. If we can one day calibrate our brains to a level where we unlock more of their capabilities, it could be breakthrough research for mankind.

A major revolution is underway, and we are a part of it. We must therefore help in whatever way we can and contribute to the technology. Deep learning has a lot of potential, even beyond our imagination. Neuralink, the new company of technology entrepreneur Elon Musk, is conducting in-depth research on deep learning and neural networks. Apple has also been doing a lot of research lately and has filed various patents related to artificial intelligence. When such established companies invest billions of dollars in a technology, we can easily gauge its potential and capabilities.

1. Yann LeCun, Yoshua Bengio & Geoffrey Hinton, Deep learning

2. Jonghoon Kim, Prospects of deep learning for medical imaging

3. S. M. S. Islam, S. Rahman, M. M. Rahman, E. K. Dey and M. Shoyaib, Application of deep learning to computer vision: A comprehensive study

4. Kaiming He, Deep Residual Learning for Image Recognition

5. Deng, L. (2014). A tutorial survey of architectures, algorithms, and applications for deep learning