
International Research Journal of Engineering and Technology (IRJET) e-ISSN:2395-0056

Volume: 09 Issue: 11 | Nov 2022 www.irjet.net p-ISSN:2395-0072

Multi-faceted Wheelchair Control Interface


Abstract - As the population of elderly and disabled people grows, so does the demand for care and support equipment that enhances their quality of life. For the past 20 years the electric powered wheelchair (EPW) has been the most popular mobility aid for people with limited mobility, and more recently the intelligent EPW, also known as an intelligent wheelchair (IW), has attracted significant attention as a technology that can meet users' varied needs. Elderly and disabled people face many difficulties in performing even the simplest day-to-day tasks, and many of them rely on other people or on conventional aids such as wheelchairs to accomplish them. With modern technology and the advent of voice-enabled applications and devices, we can build tools that help them interact with society and move more easily during everyday activities. A major problem they face is reaching the wheelchair in the first place; to address it, we propose a mobile application that lets the user locate the wheelchair and navigate it towards themselves whenever they need it. The primary goal of the interactive user-operated wheelchair system is to provide a user-friendly interface with two ways of operating the wheelchair: choosing a direction on a touch screen (haptic input) and giving voice commands through a speech recognition module.

Key Words: Support; Intelligent wheelchair; Mobile application; Interact; Voice-recognition; Haptic

1. INTRODUCTION

This project is an assistive-technology initiative that aims to make life more independent, fruitful, and joyful for dependent and disabled people. Its primary goal is to provide a user-friendly interface with two ways of operating a wheelchair: choosing a direction on a touch screen (haptic input) and giving voice commands through a speech recognition module. The device is designed to let a person operate a wheelchair with their voice, and it aims to ease movement for older persons who cannot move well and for disabled people. It is hoped that this approach will make the daily necessity of moving around less of a burden for these users. Speech recognition is a crucial technology that will enable new forms of human-machine interaction.


Applying speech recognition technology to wheelchair movement can therefore resolve the issues these users confront, and using a smartphone as the intermediary interface makes this practical. For this project, interfaces have been built to detect speech, control the movement of the chair, and handle graphical commands, so that a motorized wheelchair can be moved using voice commands. It is also crucial for a motorized wheelchair to avoid obstacles automatically and in real time so that it can move quickly. The research and design of the wheelchair control are directed towards safe and effective use that gives the user independence and self-directed mobility.

2. Literature Review

Numerous studies have found that all impaired people can benefit from independent mobility, which includes powered wheelchairs, manual wheelchairs, and walkers. Independent mobility improves educational and employment prospects, lessens dependency on group members, and fosters feelings of independence. For young children, independent mobility is crucial in laying the groundwork for much of their early learning [1]. A lack of exploration and control frequently results in a cycle of deprivation and lack of drive that develops into learned helplessness. For older individuals, independent movement is a crucial component of self-esteem and aids in "ageing in place." Because many activities of daily living (ADL) and instrumental ADL require movement, mobility issues contribute to limitations in these activities [2]. Reduced opportunities for socialization as a result of mobility issues frequently cause social isolation and a variety of mental health issues. While traditional manual or self-automated wheelchairs can often meet the needs of people with impairments, certain members of the disabled community find it difficult or impossible to use wheelchairs independently.

In paper [3], the authors describe a smart wheelchair navigation system that accepts multiple inputs, so that users with different types of disabilities can use the same system. A simple, low-cost ATMega32L from the AVR microcontroller family is used for processing.

The microcontroller compares the measured values with saved threshold values. When a value falls below its threshold, the microcontroller transmits a stop command to the H-bridge motor driver circuit to avoid a possible collision. The controller receives navigational commands from an Android phone, to which it is connected through Bluetooth. The user gives voice commands to the Android device, which compares them with saved commands and passes the matching value to the controller.

In paper [4], the authors describe the design of a voice-controlled wheelchair that lets a paraplegic user move wherever they wish by voice command. The design helps disabled users efficiently because they do not need to exert physical effort to propel the wheelchair; they command it by voice, and a facility to control the wheelchair's speed is also provided. The brain of the proposed system is a microcontroller. A voice-to-text app captures the voice input from the user and passes it to the microcontroller via a Bluetooth EGBT45ML module. The voice input is converted to machine code, which is given to the motor driver to control the movement of the wheelchair.

Paper [5] presents an automatic wheelchair that uses voice recognition. A voice-controlled wheelchair makes movement easier for physically disabled people who cannot control the movements of their hands. The powered wheelchair depends on motors for locomotion and on voice recognition for commands. The circuit comprises an Arduino, an HM2007 voice recognition module, and motors. The HM2007 module recognizes the command spoken by the user and provides the corresponding coded data stored in its memory to the Arduino microcontroller, which is programmed with the respective locomotion commands and controls the locomotion accordingly. The motors are driven through an L293D driver and a relay. The software is written for the Arduino, and the hardware components parse the voice commands and translate them into motor actions.

Paper [6] describes a wheelchair system with a user-friendly touch-screen interface. The device helps disabled users travel automatically to their destination along predefined paths in an indoor environment. A touch screen requires less muscle movement and less muscular pressure than the self-propelled wheelchairs that have been in use for ages. The ability to choose between a manual operating mode and a predefined operating mode lets the wheelchair operate in multiple environments, and an obstacle avoidance facility allows it to be driven safely in unknown as well as dynamic environments.

In paper [7], a wheelchair robot model contains an in-built microcontroller and an eyeball sensing system that performs functions such as right, left, forward, and reverse movement. The wheelchair is designed so that it can move freely without external support or dependency. Through this feature, patients can move their wheelchair as they desire.

In paper [8], the design of an automated wheelchair for people suffering from total or partial paralysis is presented. The design allows self-control using an infrared sensor and microcontroller (Arduino Uno) based circuitry with an emergency message transmitting system. It not only prevents stress on the body during movement in all four directions but is also affordable for low-income households.

Robotic wheelchairs have enhanced manual wheelchairs by introducing locomotion controls. These devices can ease the lives of many disabled people, particularly those with severe impairments, by increasing their range of mobility [9]. These robotic enhancements benefit people who cannot use their hands and legs. In this project we have developed a voice-controlled wheelchair that aims to counter the above problems. The wheelchair can be controlled using a joystick as well as voice commands: the user just needs to say the direction or press the button for that direction, and the wheelchair moves in the desired direction. In hardware development, we are using the HM2007 voice recognition module, which correlates commands to do speech processing and gives the result to the Arduino, which is further programmed with the respective locomotion commands [10].

3. Gap analysis

Researchers have previously designed wheelchairs that require extensive hardware, using heavy and complex components such as a 2-axis joystick and large brakes and tires. Together, these components make mobility difficult for the person using the wheelchair. With dedicated hardware peripherals, elderly and disabled people must exert considerable physical effort to move. Moreover, although such designs are heavy and complicated, their performance is similar to that of lighter, software-based models. Most of the papers described lack the concept of integrating software with the hardware for efficient mobility. With software, we can not only design a lightweight and minimalistic wheelchair but also embed technologies such as artificial intelligence to add newer features like autopilot. Hence, the majority of the proposed models did not perform as efficiently as they could have.

4. Proposed system

4.1 Flowcharts

The wheelchair can be controlled in two ways: by the user's voice and through an Android app.

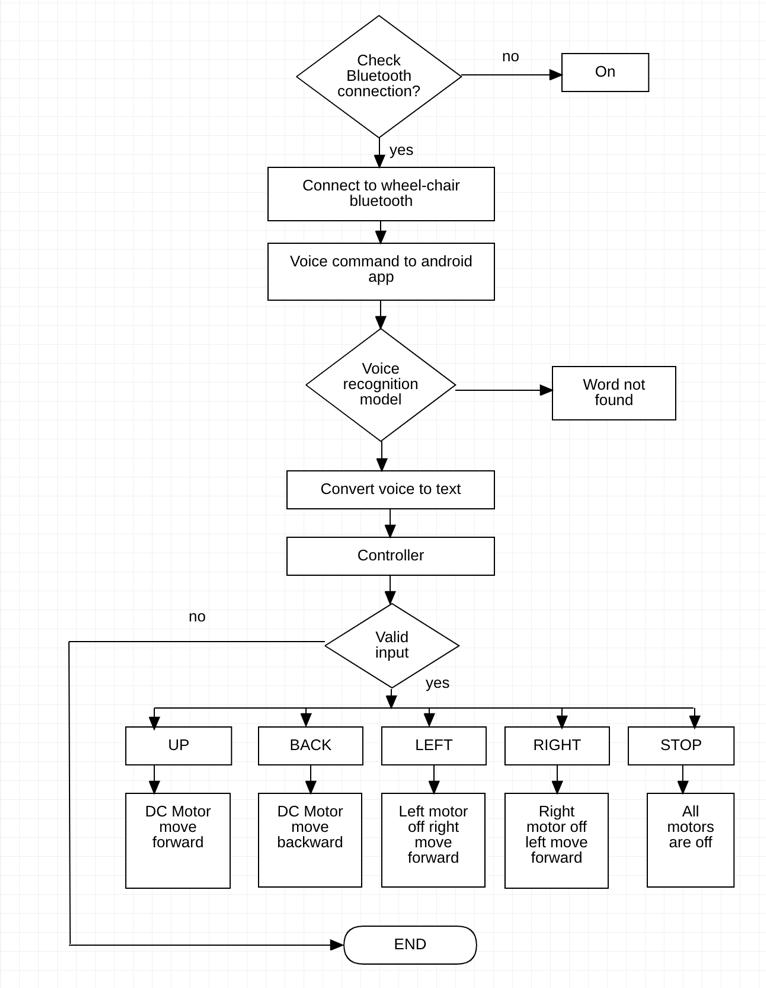

Thefirstflowchartdemonstrateshowvoiceinstructionscan be used to operate a wheelchair. First, a Bluetooth connection is made between the wheelchair and the user voice application. The user is then expected to utilize the applicationtoutterparticularcommands.Thewordisthen

International Research Journal of Engineering and Technology (IRJET) e-ISSN:2395-0056

Volume: 09 Issue: 11 | Nov 2022 www.irjet.net p-ISSN:2395-0072

validated and converted to text using the Google voice service.Thetextformatisthenanalysedbythecontroller, whothenverifiesthattheinputiscorrectbeforeproviding the motor drivers with precise instructions for the movementinthedirectionsofleft,right,straight,backward, orstop.

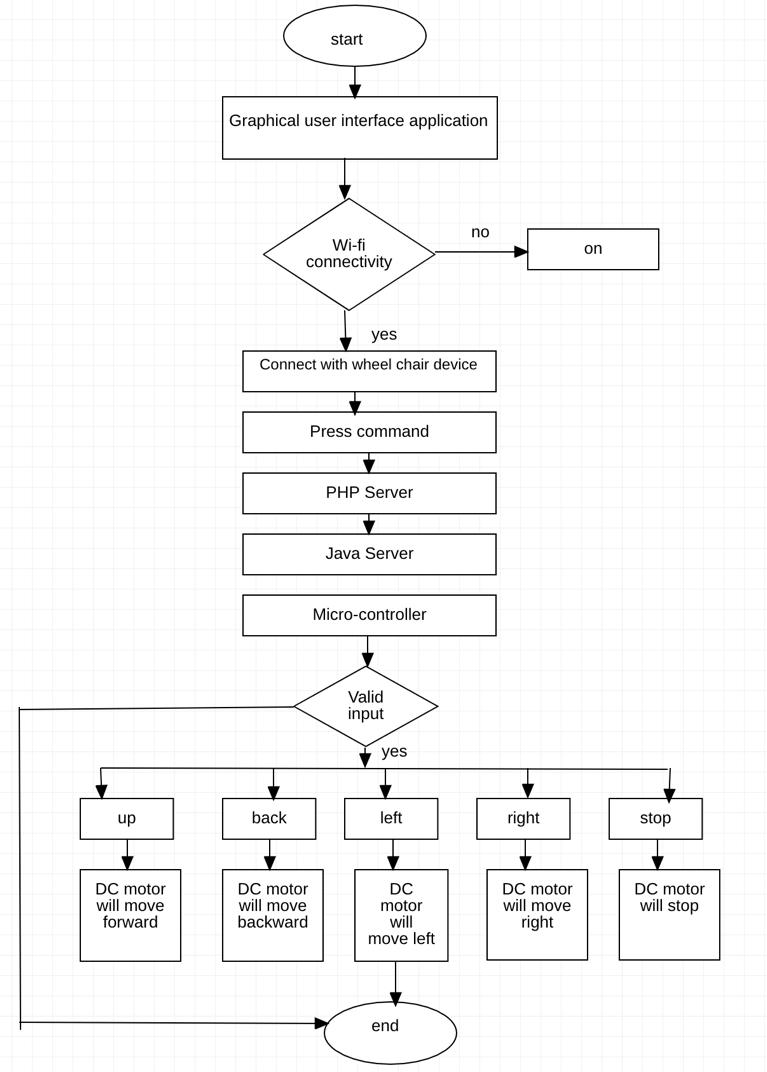

Fig 2: Flowchart for GUI Command

4.2 Block Diagram and explanation

Fig 1: Flowchart for User Voice Command

TheuserwillutilizeanAndroiddevicewithaGUIappinthe secondpartofourprojecttosendcommandsbyclickingona certain button, and the wheelchair will respond as instructed. The wheelchair will move as a result of the commands.TheinternetorWi-Ficonnectionisamustfor thismodule.Themicrocontrollerfirstverifiesthattheinput is genuine before giving the motor drivers specific instructions for moving left, right, straight, backward, or stoppingaltogether.

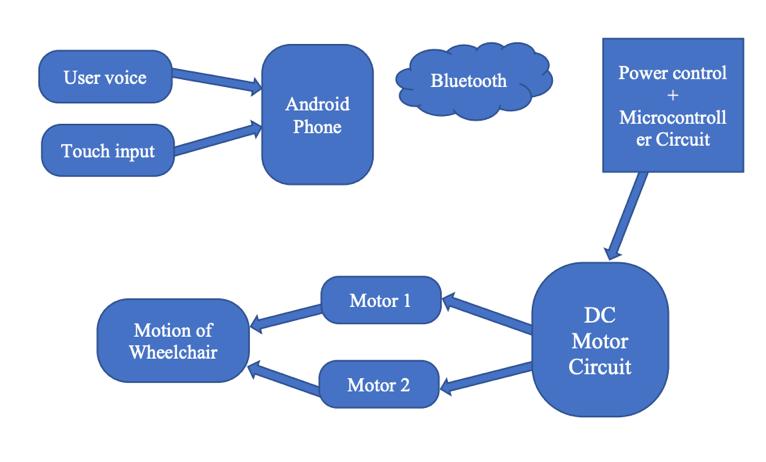

Fig 3: Block diagram for the proposed model

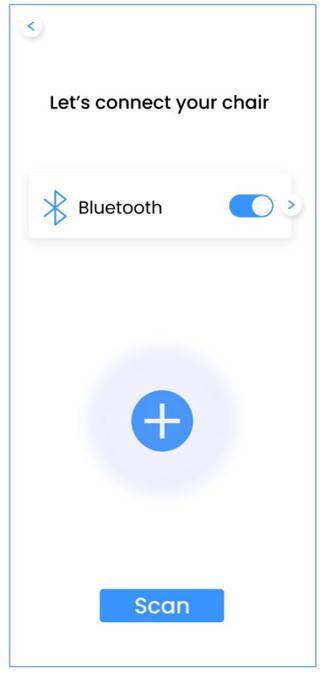

The application runs on an Android device and provides a GUI for sending commands: the user taps a specific button and the wheelchair moves according to that command. A Bluetooth connection is used to transmit the instructions to the actuators in the wheelchair. The microcontroller checks for valid input and then gives the motor drivers the specific instruction to move left, right, straight, or backward; otherwise it stops the wheelchair.
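The validation step described here can be sketched as follows. This is a minimal illustration in Python rather than the project's microcontroller firmware. The one-character command codes, the `MOTOR_STATES` table, and the function name `decode_command` are assumptions made for this example, as is the differential-drive layout with one DC motor per side. Any input that is not a known command falls back to stop.

```python
from typing import Dict, Tuple

# Hypothetical one-character codes sent over the Bluetooth serial link.
# The paper does not specify the encoding; these are illustrative only.
STOP = "S"

# (left motor, right motor): +1 = forward, -1 = reverse, 0 = off.
# Assumes a differential drive with one DC motor per side.
MOTOR_STATES: Dict[str, Tuple[int, int]] = {
    "F": (1, 1),    # straight
    "B": (-1, -1),  # backward
    "L": (-1, 1),   # turn left
    "R": (1, -1),   # turn right
    STOP: (0, 0),
}


def decode_command(raw: str) -> Tuple[int, int]:
    """Return motor directions for a received code; stop on invalid input."""
    code = raw.strip().upper()
    return MOTOR_STATES.get(code, MOTOR_STATES[STOP])


if __name__ == "__main__":
    for received in ["F", "l", "x", ""]:
        print(repr(received), "->", decode_command(received))
```

Treating every unknown code as stop keeps the failure mode safe: a corrupted or unexpected byte can never start a movement.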


Android acts as middleware. The Android device provides connectivity through Bluetooth and sends data wirelessly over the connection. It runs a voice recognition app that receives voice commands, decodes the set of instructions from them, and sends the instructions to the Bluetooth serial module. The microcontroller receives the instructions and compares them with predefined base conditions. If a condition is satisfied, a signal is sent to the driver circuit, which converts the output pulse signal from the microcontroller into the electrical signal that drives the wheels' DC motors. Directions and other controls can also be given by pressing a finger against one of the quadrants in the app, each of which is programmed with a different value for a different direction.
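One possible way to turn a touch position into a direction value is sketched below in Python. The paper does not say how the quadrants are laid out or which values they carry, so everything here is an assumption: the square pad is taken to be split by its two diagonals into top, bottom, left, and right regions, the numeric values 1 to 4 are placeholders, and `quadrant_value` is a hypothetical helper name.

```python
from typing import Optional

# Hypothetical numeric values for each region; the paper only says that
# each quadrant is programmed with a different value.
FORWARD, BACKWARD, LEFT, RIGHT = 1, 2, 3, 4


def quadrant_value(x: float, y: float, size: float) -> Optional[int]:
    """Map a touch at (x, y) on a size-by-size pad to a direction value.

    Assumes the origin is the top-left corner with y growing downward
    (the usual screen convention) and that the pad is divided by its two
    diagonals into top, bottom, left and right regions.
    """
    if not (0 <= x <= size and 0 <= y <= size):
        return None  # touch landed outside the pad
    dx = x - size / 2  # offset from the centre, positive to the right
    dy = y - size / 2  # offset from the centre, positive downward
    if dx == 0 and dy == 0:
        return None  # exact centre is ambiguous
    if abs(dy) >= abs(dx):
        return FORWARD if dy < 0 else BACKWARD
    return LEFT if dx < 0 else RIGHT
```

For example, on a 100-unit pad a touch near the top edge at `(50, 10)` maps to `FORWARD`, and a touch outside the pad returns `None` so that no value is sent.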

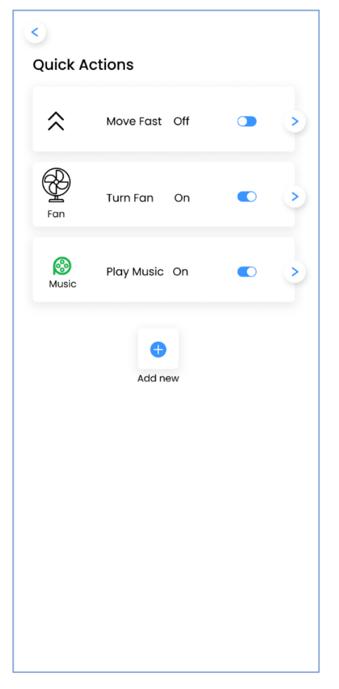

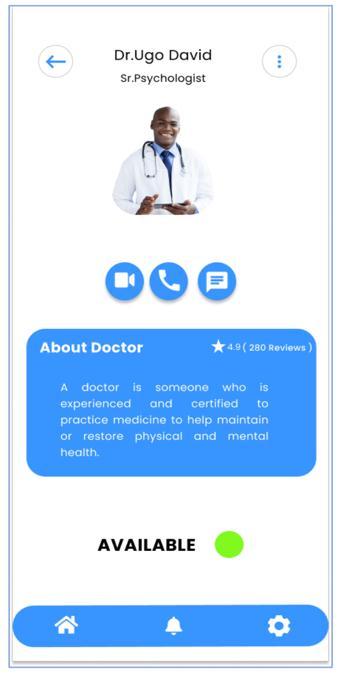

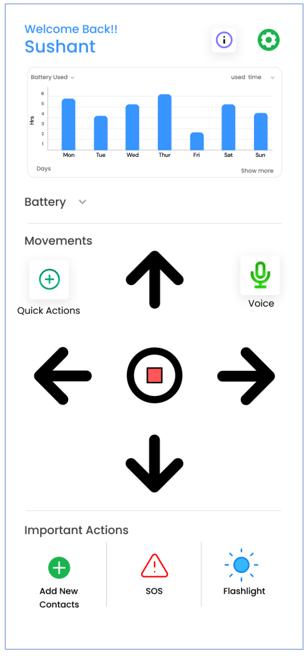

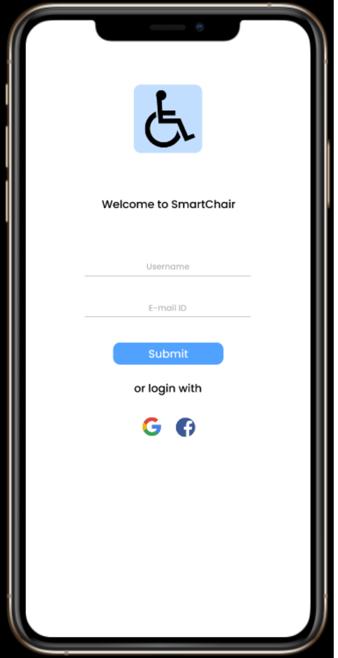

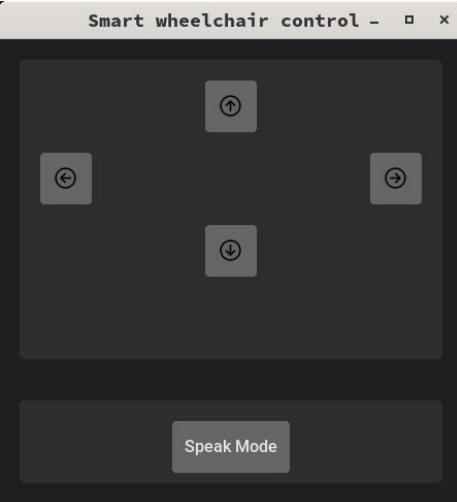

4.3 UI Interface

The user interface is designed in compliance with Shneiderman's eight golden rules, Fitts' law, and Nielsen's ten heuristics for UI design. We use a simple interface that prioritizes the arrow buttons for direction by making them larger. According to Fitts' law, the time the user needs to acquire a direction button falls as the width of the button grows, so larger buttons are faster to reach. The direction options in the user interface are laid out according to real-world directions for ease of use, and the voice option makes the process much simpler for users who have limited or no vision. Standard settings and positions for buttons are used so that the user needs little or no adaptation to the interface.
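The effect of button width can be illustrated with the commonly used Shannon formulation of Fitts' law, MT = a + b log2(D/W + 1), where D is the distance to the target and W is its width. The Python sketch below is only an illustration: the constants `a` and `b` are device-dependent and the default values are placeholders, not measurements from this project, and the function name is hypothetical.

```python
import math


def fitts_movement_time(distance: float, width: float,
                        a: float = 0.1, b: float = 0.15) -> float:
    """Predicted time in seconds to acquire a target (Shannon formulation).

    distance and width share the same unit (e.g. pixels). a and b are
    device-dependent constants; the defaults are placeholders, not
    measurements from this project.
    """
    if distance < 0 or width <= 0:
        raise ValueError("distance must be >= 0 and width must be > 0")
    index_of_difficulty = math.log2(distance / width + 1)
    return a + b * index_of_difficulty


if __name__ == "__main__":
    small = fitts_movement_time(distance=300, width=50)
    large = fitts_movement_time(distance=300, width=100)
    print(f"50 px button:  {small:.3f} s")
    print(f"100 px button: {large:.3f} s")
```

With the same travel distance, doubling the button width lowers the index of difficulty and therefore the predicted acquisition time, which is the reasoning behind the larger arrow buttons.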

Fig 4: Login screen

Fig 5: Home page

Fig 6: Bluetooth Connection

Fig 7: Battery info

Fig 8: Emergency contact

Fig 9: Quick Actions


The user can immediately stop the wheelchair at any point during their journey by speaking or tapping. A user may run into an error, for example if the voice-enabled system is unable to recognize a command. When this happens, the system reads out the five commands that the user can use: LEFT, RIGHT, FORWARD, BACKWARD, and STOP. The user interface implements recognition over recall by presenting the directions on screen rather than asking the user to enter them, so the user only has to recognize and select one. By taking input in multiple ways, the user interface is flexible enough to accommodate users with various types of disability.
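The error-recovery behaviour can be sketched in Python as below. The sketch assumes that the speech service has already produced a text transcript. The names `handle_transcript` and `HELP_PROMPT`, the wording of the prompt, and the choice to accept a command word inside a longer phrase are all assumptions for illustration; the paper only states that the five commands are read out when recognition fails.

```python
import string
from typing import Optional, Tuple

COMMANDS = ("LEFT", "RIGHT", "FORWARD", "BACKWARD", "STOP")
# Hypothetical wording of the prompt that would be read aloud.
HELP_PROMPT = "Command not recognized. Please say one of: " + ", ".join(COMMANDS)


def handle_transcript(transcript: str) -> Tuple[Optional[str], Optional[str]]:
    """Return (command, spoken_prompt) for a speech-to-text transcript.

    Exactly one of the two values is set: a recognized command, or the
    help prompt that the app would read back to the user.
    """
    # Normalize case and drop punctuation attached to words ("stop!").
    words = {w.strip(string.punctuation) for w in transcript.upper().split()}
    matches = words.intersection(COMMANDS)
    if len(matches) == 1:
        return matches.pop(), None
    # No command word, or several conflicting ones: ask the user again.
    return None, HELP_PROMPT
```

A transcript such as "please stop!" yields the STOP command, while "Great day" or a phrase containing two different directions returns the help prompt instead of moving the chair.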

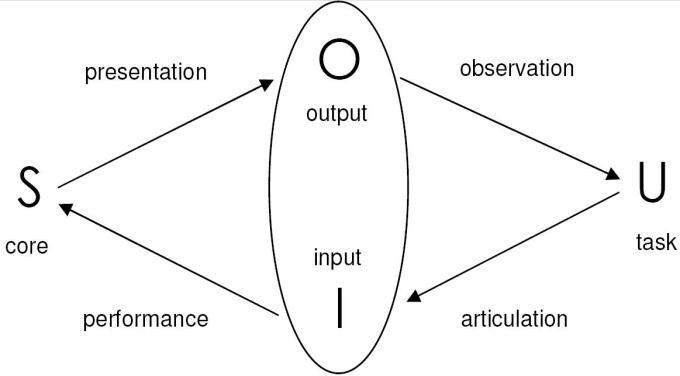

4.4 Interaction Model

Abowd&Beale’sModelisusedasinteractionframework.

5.Result and discussion

Testcase Result

Hapticinput:Left Success

Hapticinput:Right Success

Hapticinput:Forward Success

Hapticinput:Backward Success

Hapticinput:Stop Success

Hapticinput:Multiplekeys Failed

Audioinput:Left Success

Audioinput:Right Success

Audioinput:Forward Success

Audioinput:Backward Success

Audioinput:Stop Success

Audioinput:Noise Failed

Audioinput:“Greatday” Failed

AbowdandBeale’sinteractionframeworkidentifiessystem andusercomponentswhichcommunicateviatheinputand outputcomponentsofaninterface.Similarcyclicalprocesses are taken in this communication from the user's task formulationtothesystem'sexecutionandpresentation of the task to the user's observation of the task's outcomes, fromwhichtheusercanthendesignnewtasks.

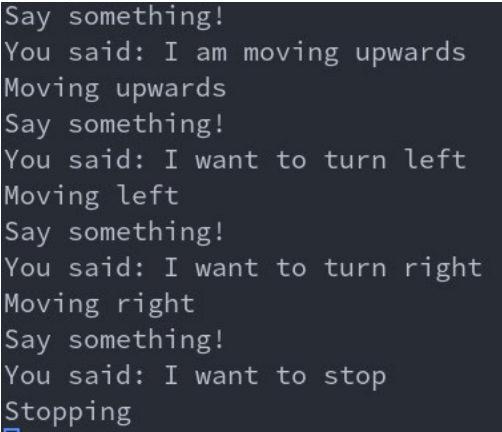

In the proposed system, user is the person using the wheelchairapplicationinterfaceontheirphone.Inputisvia touchscreen (Haptic input) input through smartphone or voice-enabledinput Thesystemtakesinasinputcommands fromtheusertoeithermoveinacertaindirectionorstop themovementofthewheelchair.UsingtheArduinoIDE,we canconvertthecommandstoactualactionsperformedby thewheelsthroughmotor.Theoutputisrequiredmovement ofthewheelchair.

4.5 Testing the model

Unittestingmethod wasemployedforgenerating thetest cases.Unittestinginvolvesisolatingdifferentsectionsofthe code to check for bugs and errors for different possible inputstotheapplication.Variousoutputwereobservedfor differentinputs asaresultofthetest.

Fig 10 : Tkinter GUI Panel for voice recognition
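The reported test cases can be expressed as a small table-driven check, sketched here in Python. This is not the project's test code. It assumes that "Failed" in the results means the input produced no movement command, it models noise as an empty transcript, and the helper names `haptic_command`, `audio_command`, and `run_cases` are hypothetical.

```python
from typing import Iterable, Optional

ORDER = ("left", "right", "forward", "backward", "stop")
COMMANDS = set(ORDER)


def haptic_command(pressed: Iterable[str]) -> Optional[str]:
    """Accept exactly one pressed direction key; reject anything else."""
    keys = [k.lower() for k in pressed]
    if len(keys) == 1 and keys[0] in COMMANDS:
        return keys[0]
    return None


def audio_command(transcript: str) -> Optional[str]:
    """Accept a transcript only if it is exactly one known command word."""
    word = transcript.strip().lower()
    return word if word in COMMANDS else None


# Each case: (description, function under test, input, expected command).
# An expected value of None corresponds to a "Failed" row (assumption).
CASES = (
    [(f"Haptic input: {c.title()}", haptic_command, [c], c) for c in ORDER]
    + [("Haptic input: Multiple keys", haptic_command, ["left", "right"], None)]
    + [(f"Audio input: {c.title()}", audio_command, c, c) for c in ORDER]
    + [
        ("Audio input: Noise", audio_command, "", None),  # noise modelled as ""
        ('Audio input: "Great day"', audio_command, "Great day", None),
    ]
)


def run_cases():
    """Run every case and return (description, 'Success' or 'Failed')."""
    results = []
    for name, func, arg, expected in CASES:
        outcome = func(arg)
        assert outcome == expected, name
        results.append((name, "Success" if outcome else "Failed"))
    return results


if __name__ == "__main__":
    for name, result in run_cases():
        print(f"{name}: {result}")
```

Keeping the cases in one list makes it straightforward to add further inputs, such as additional non-command phrases, without changing the test loop.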

The project was tested for parsing and transmission of user input. With haptic input, the user holds down a button and the movement signal is transmitted via Bluetooth for as long as the button is held; the signal ceases when the button is released. This design is convenient and intuitive to work with. With voice recognition, the user toggles the movement state by saying key activation words such as ‘forward’ and ‘stop’. Once a voice command is recognized, the corresponding movement signal is transmitted continuously until it is interrupted by another user input. Voice recognition performance was found to depend on the quality of the phone's microphone and varied from phone to phone. Using earphone and headphone microphones improved command recognition. The noise tolerance of the system was tested in a room by playing music and having multiple audio sources. The system was found to be quite sensitive to noise: it attempted to parse and recognize words from the surrounding sound, and it failed to recognize commands when the music was about as loud as the tester's voice. The system performed satisfactorily in lightly noisy environments and when the microphone was held close while speaking.

6. Conclusion and Future work

In this project we developed a user-friendly graphical user interface for operating a wheelchair from instructions given through a smartphone. We integrated a simple, minimalistic user interface with a voice recognition feature that moves the chair when the user just speaks a command. In the future we can try to collaborate with mechanical experts to build a fully functional voice-enabled wheelchair. Another of our main goals is to expand the functionality of our software with features such as speed control or AI-powered obstacle detection using the phone's built-in camera. We also seek NGOs that support the cause of providing easy movement to elderly and handicapped people. An implementation of the final product can help disabled people live an easier and better life.

7. References

[1] Chin-Tuan Tan and Brian C. J. Moore, Perception of nonlinear distortion by hearing-impaired people, International Journal of Audiology, 2008, Vol. 47, No. 5, Pages 246-256.

[2] Oberle, S., and Kaelin, A., "Recognition of acoustical alarm signals for the profoundly deaf using hidden Markov models," in IEEE International Symposium on Circuits and Systems (Hong Kong), pp. 2285-2288, 1995.

[3] A. Shawki and Z. J., A smart reconfigurable visual system for the blind, Proceedings of the Tunisian-German Conference on: Smart Systems and Devices, 2001.

[4] Dalsaniya, A. K., & Gawali, D. H. (2016). Smart phone based wheelchair navigation and home automation for disabled. 2016 10th International Conference on Intelligent Systems and Control (ISCO). doi:10.1109/isco.2016.7727033

[5] Leela, R. J., Joshi, A., Agasthiya, B., Aarthiee, U. K., Jameela, E., & Varshitha, S. (2017). Android Based Automated Wheelchair Control. 2017 Second International Conference on Recent Trends and Challenges in Computational Models (ICRTCCM). doi:10.1109/icrtccm.2017.44

[6] Vasundhara G. Posugade, Komal K. Shedge, Chaitali S. Tikhe, Touch-Screen Based Wheelchair System, International Journal of Engineering Research and Applications (IJERA).

[7] Shreyasi Samanta and Mrs. R Dayana, Sensor Based Eye Controlled Automated Wheelchair, International Journal For Research In Emerging Science And Technology.

[8] Nowshin, N., Rashid, M. M., Akhtar, T., & Akhtar, N. (2018). Infrared Sensor Controlled Wheelchair for Physically Disabled People. Advances in Intelligent Systems and Computing, 847–855. doi:10.1007/978-3-030-02683-7_60

[9] R. S. Nipanikar, Vinay Gaikwad, Chetan Choudhari, Ram Gosavi, Vishal Harne, 2013, "Automatic wheelchair for physically disabled persons".

[10] S. D. Suryawanshi, J. S. Chitode, S. S. Pethakar, 2013, "Voice Operated Intelligent Wheelchair."


BIOGRAPHIES

Dr. Deepa Karuppaiah, Assistant Professor (Senior), SCOPE, VIT, Vellore, Tamil Nadu, 9791570158, deepa.k@vit.ac.in

Mr. Srikanth Balakrishna, UG Student, VIT, Vellore, Tamil Nadu

Ms. Prarthana Shiwakoti, UG Student, VIT, Vellore, Tamil Nadu

2022, IRJET | Impact Factor value: 7.529 | ISO 9001:2008 Certified Journal |