International Research Journal of Engineering and Technology (IRJET) e-ISSN:2395-0056

Volume: 09 Issue: 11 | Nov 2022 www.irjet.net p-ISSN:2395-0072

1Harsh Parikh, 2Anirudh Navin, 3Dhairya Panjwani, 4Utkarsh Bhosekar, 5Gunjan Sharma, 6 Lokesh Devnani

1,2,3,4,5Student, Dept. of Information Technology, Thadomal Shahani Engineering College, Mumbai, India
6Student, Dept. of Information Technology, Vivekanand Education Society's Institute of Technology, Mumbai, India

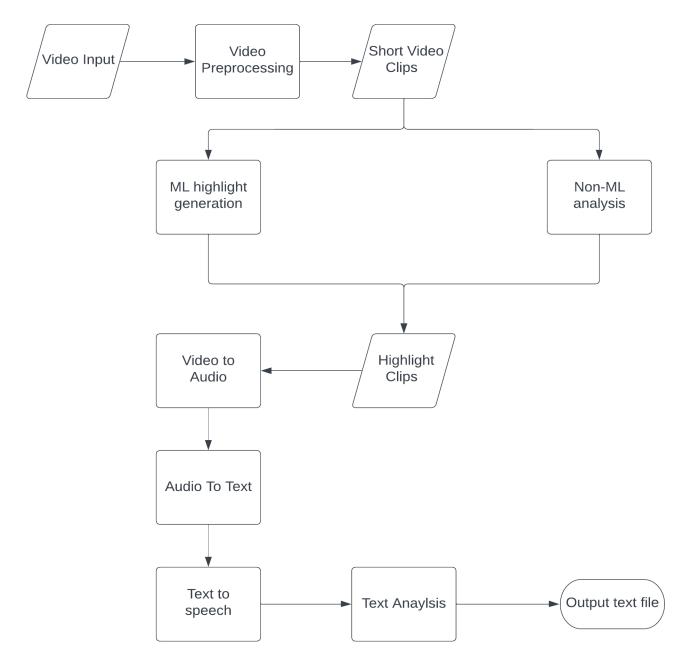

Abstract - Football in particular needs more automation as more nations and clubs build their squads using data-driven strategies. The modern game is continually changing, from the statistics that are collected to the tactics used on the field. The system we propose takes the raw video file of a match as input and can split it into the two halves of the game for better performance. The system then uses algorithms to extract highlights from the match: the output is the set of key moments that occur in the game, leaving out the stretches where little of note happens. We then extract the audio and convert it to text using a speech-to-text model. This text is the final output of the proposed system.

Key Words: Machine Learning, Football, Report Generation, Highlights, Twitter analysis, Voice modulation

Automation in the field of sports, especially football, is the need of the hour, as many clubs and countries are opting for a data-driven approach. From generating statistics to on-field tactics, the modern game is constantly evolving. As the profits from sports keep growing, teams are investing more heavily in gathering statistics on their players. Certain statistics, such as the distance a player ran during a match, the average position of a player on the field, offensive yards per carry, etc., can provide hidden information on a player's performance.

They may help discover hidden talent the player possesses. These statistics are then studied by data analysts and managers to gain insight into a game. They are player-focused statistics that help a team improve the quality of individuals, but when preparing for a game a manager needs a complete summary of multiple games, such as the team's performance in the last few games, the opponents' performance in the last few games, a summary of games the opponent lost, etc. [1][2]

This project aims to make this job easier by summarizing a whole football match into a text file that distils all the important plays that happened in it. If well analysed by the team's coach, such information could be crucial to winning matches. Managers can look at these reports to understand the important moments in a game, and while preparing for a game, players can also find the weak link in the opponent's defence and attack it. Every top manager must go through the process of analysing the opponent, which means examining the opponent's previous games. We propose a model that condenses the action of a match into an easily digested report, reducing the time management has to spend analysing every game. Our project applies not only to the analytics department of a football team but also to the people covering football games: broadcasting companies, sports leagues, and TV channels. Delivering high-quality content to viewers as quickly as possible is their top priority, so media companies are turning to technology to speed up this process. To speed up sports content generation, media providers are looking into ways to have AI analyse game footage and pick out highlight-worthy moments automatically. This could also help sports journalists, who would have a rough summary of the game instead of having to make notes throughout it.

Our proposed system takes as input the raw video file of the game, which usually lasts ninety minutes plus injury time; to improve performance, we can divide the input file into the two halves of the game. The system then extracts highlights from the match using machine learning and non-machine learning algorithms. The output is the set of highlights, or important moments, that happened in the game; this removes the time when the game is slow and nothing important is happening. After that, we remove the video from the highlights so that only their audio remains. When this audio file is converted to a text file using a speech-to-text model, the result is a match summary of all the crucial moments and how they happened, as described by a professional commentator. This is the final output of the whole proposed system.

Before getting into how the pipeline works, we first need to understand a few basic concepts about football and how a game can be broken down into multiple phases. Football is evolving at high speed, and these phases often merge into each other. For our machine learning model, however, we use these phases, and their outcomes decide what goes into the highlights video. [3]

Build-up play means having possession of the ball and trying to build an attack through the opponent's organized defence. Build-up play does not only mean a series of short passes among teammates; a long ball from the goalkeeper to a forward is also build-up. The purpose of this phase is to build an attack slowly and arrive at the opposition box prepared. This is a slow and laborious process that does not make the highlights video.

Transition is the moment in the game when a team loses possession and the opposing team tries to take advantage of the opponents being out of position. The team with the advantage makes quick, well-coordinated moves to change the phase of the game, making the most of the opportunity to score a goal. Transition attacks require a lot of accuracy with every move.

Attack refers to the movement of the team in possession of the ball as it moves through the opponent's midfield and defence and attempts to score a goal. A team in possession that is playing passes and making moves can be said to be building an attack. Any successful attack that leads to a goal or a near-goal opportunity is part of the highlights video.

The counter-attack is one of the quickest ways to score goals in football. It starts with a transition, where a team loses control of the ball and the opponent counters by making key passes that let the strikers make their runs. Because it produces an attempt on goal with a minimal number of passes, the counter-attacking style of football is said to be the most perilous, as it can do maximum damage within a few seconds.

Pressing is when the defending team puts pressure on the opposing team in possession of the ball. The main idea is to give the team in possession as little time as possible to make their attacking moves. Pressing is not just running toward the player with the ball; it is done in a planned and structured manner.

Dead-ball events in a football match are the moments when play is stopped temporarily and the ball is not in motion. This happens when a foul or infringement is committed, for example a handball, a failed tackle, or an offside. In these situations, the opposing team is awarded a free kick at the position on the pitch where the foul was committed. In the case of a foul inside the penalty box, a penalty kick is awarded.

Defence is the action in which the players at the back (the centre-backs and full-backs) try to stop the opposing team's players from scoring. Here the defending team sits back and endures waves of attack from the opponent. Anything fruitful in this phase goes into our highlights video.
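The phase descriptions above imply a simple selection rule: build-up play is dropped, and the other phases are kept only when they produce something (a goal, a near-goal chance, a successful defensive stop). A minimal sketch of that rule as a data structure follows; the `Phase` labels and `is_highlight_candidate` helper are hypothetical names, and how the `fruitful` flag is obtained is an assumption left to the rest of the pipeline.

```python
from enum import Enum


class Phase(Enum):
    """Phases of play described above (the labels are illustrative)."""
    BUILD_UP = "build-up"
    TRANSITION = "transition"
    ATTACK = "attack"
    COUNTER_ATTACK = "counter-attack"
    PRESSING = "pressing"
    DEAD_BALL = "dead-ball"
    DEFENCE = "defence"


def is_highlight_candidate(phase: Phase, fruitful: bool) -> bool:
    """Hypothetical rule of thumb for one classified clip.

    `fruitful` is assumed to come from elsewhere in the pipeline: True when the
    clip ends in a goal, a near-goal opportunity, or a successful defensive stop.
    """
    if phase is Phase.BUILD_UP:
        # Slow, laborious build-up play does not make the highlights on its own.
        return False
    # Every other phase is kept only when it produced something fruitful.
    return fruitful
```

Keeping the rule separate from the classifier makes it easy to tune which phases count without retraining anything.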

We propose a system that takes the whole game and breaks it into chunks of 15-30 second videos; the chunk length is arbitrary and can be changed. The system feeds each chunk to the next step, where we identify the phase of the game and decide whether the chunk makes it into the highlights video. The system is not reliant on one model: a non-machine learning model should also be used to improve accuracy. After that, the video is dropped from the highlights and the audio is extracted, and in the final stage an ANN model generates a report from the audio file. This can be observed in Fig. 1.
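The flow just described can be sketched as a chain of stages over clips represented by (start, end) time pointers. In this sketch the phase classifier and the keep/drop rule are passed in as plain functions because they are placeholders (hypothetical), and the audio and speech-to-text stages are left out; `select_highlights` and `merge_adjacent` are illustrative names, not the authors' code.

```python
from typing import Callable, List, Sequence, Tuple

Clip = Tuple[float, float]  # (start_s, end_s) time pointers into the match video


def select_highlights(
    clips: Sequence[Clip],
    classify_phase: Callable[[Clip], str],
    keep: Callable[[Clip, str], bool],
) -> List[Clip]:
    """Label each chunk with a phase and keep the highlight-worthy ones."""
    return [clip for clip in clips if keep(clip, classify_phase(clip))]


def merge_adjacent(clips: Sequence[Clip], gap_s: float = 0.0) -> List[Clip]:
    """Join kept chunks that touch (or nearly touch) into one segment."""
    merged: List[Clip] = []
    for start, end in sorted(clips):
        if merged and start <= merged[-1][1] + gap_s:
            # Extend the previous segment instead of starting a new one.
            merged[-1] = (merged[-1][0], max(merged[-1][1], end))
        else:
            merged.append((start, end))
    return merged
```

Merging consecutive kept chunks means an attack split across two 20-second chunks comes out as one continuous highlight segment.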

The video is divided into multiple smaller chunks that are processed in parallel; this is one of the primary methods for reducing processing time. It can be built using video time pointers, so the splitting is virtual and does not create actual sub-files. However, the result consists of multiple video files that must be integrated into one. These limitations make the method awkward for the task at hand, especially when neighbouring frames are used for computation. To deal with this, we divided the video with an overlap so that no information was lost at the junction points. Converting the coloured video to black and white is required because it reduces the time needed to generate the highlights from the raw video file, which can be 90 to 120 minutes long. Our goal here is to generate a report of the whole game rather than a highlights video of the whole match, so we can reduce the quality of the video.
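Both preprocessing ideas above (virtual splitting with an overlap at the junctions, and reducing colour and resolution) can be sketched in a few lines. This is an illustration under assumptions: the 20 s chunk and 2 s overlap defaults, the BT.601 luma weights, and the naive pixel-skipping downscale are our choices, and frames are assumed to already be decoded into H x W x 3 NumPy arrays.

```python
import numpy as np


def chunk_boundaries(duration_s, chunk_s=20.0, overlap_s=2.0):
    """Virtual split: (start, end) time pointers, in seconds, covering the
    whole video, with consecutive chunks sharing `overlap_s` seconds."""
    if chunk_s <= 0 or not 0 <= overlap_s < chunk_s:
        raise ValueError("need chunk_s > 0 and 0 <= overlap_s < chunk_s")
    step = chunk_s - overlap_s
    bounds, start = [], 0.0
    while start < duration_s:
        end = min(start + chunk_s, duration_s)
        bounds.append((start, end))
        if end >= duration_s:
            break
        start += step
    return bounds


def to_small_grayscale(frame_rgb, factor=4):
    """Convert an H x W x 3 RGB frame to a smaller uint8 grayscale frame."""
    frame = np.asarray(frame_rgb, dtype=np.float32)
    # ITU-R BT.601 luma weights, a common choice for RGB -> grayscale.
    gray = 0.299 * frame[..., 0] + 0.587 * frame[..., 1] + 0.114 * frame[..., 2]
    # Naive downscaling: keep every `factor`-th pixel in each direction.
    small = gray[::factor, ::factor]
    return np.clip(np.rint(small), 0, 255).astype(np.uint8)
```

Because the chunks are only time pointers, each worker can seek into the same source file; the overlap means a frame near a boundary always has its neighbours available in at least one chunk.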

Video shot identification is a critical component of sports video summarization and highlight generation. A football match can be split into a series of video clips, so events in a match can be thought of as clips that, played in sequence, make up the whole match. Because highlights are typically a compilation of significant occurrences, these clips can be used to generate them. Processing video shots instead of the whole game makes the model more time-efficient, which matters because a football match generally lasts ninety minutes plus some injury time. The clips can be of arbitrary length and are created in the video preprocessing stage; the model then classifies them into 5 possible events. In a football match, a replay is video footage of one or more moments from the match aired more than once. Because replays are regularly used in game highlights, they give crucial indicators of critical occurrences. Replays are also especially significant in football because video assistant referees use them to confirm or re-evaluate a decision. The model can therefore take into account that if an event is replayed, there is a good chance it is an important part of the game and should be part of the highlights.
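One crude way to act on the replay cue is to give every clip a compact visual signature and flag pairs of non-neighbouring clips whose signatures are nearly identical. The sketch below assumes each clip is already a stack of small grayscale frames; `clip_signature`, `find_replays`, the 0.95 threshold and the `min_gap` parameter are all hypothetical. Broadcast replays are often slowed down or shot from another angle, so this would only catch near-identical re-airings and is an illustration, not the paper's method.

```python
import numpy as np


def clip_signature(frames):
    """Average a clip's small grayscale frames into one unit-length vector."""
    sig = np.mean(np.asarray(frames, dtype=np.float64), axis=0).ravel()
    sig -= sig.mean()  # remove overall brightness so only the layout matters
    norm = np.linalg.norm(sig)
    return sig / norm if norm > 0 else sig


def find_replays(signatures, threshold=0.95, min_gap=2):
    """Return (i, j) clip index pairs whose signatures look like repeats.

    `min_gap` skips immediate neighbours, which are naturally similar.
    """
    pairs = []
    for i in range(len(signatures)):
        for j in range(i + min_gap, len(signatures)):
            # Cosine similarity of unit vectors is just their dot product.
            if float(np.dot(signatures[i], signatures[j])) >= threshold:
                pairs.append((i, j))
    return pairs
```

A flagged pair marks the earlier clip as a likely key moment that the main classifier can then boost.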

In the highlights, we generally want successful attacks, dead-ball events, fouls that lead to a yellow or red card, and some unsuccessful attacks. These can come from attacks, counter-attacks, and dead-ball events. Dead-ball events can occur anywhere on the field, but our highlights cut should only include free kicks in the opposition half near the goal and penalty kicks. It would be naive to include only these events in our highlights cut, because an exciting event may happen in the build-up phase, which normally would not be part of the highlights. The most common case is when team A is in the build-up and a poor pass suddenly gives team B possession high up the pitch, resulting in an easy goal-scoring opportunity. Another major clue is the celebration that follows a goal. Celebrations are essential indicators for identifying significant moments in a football match, and we could develop a model to recognize a player's celebration. The positive class would consist of images in which a player has just scored a goal or earned a penalty, and the negative class of images that would ordinarily occur throughout a football match, e.g., an exceptional defensive play or a VAR (Video Assistant Referee) decision being overturned.
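The dead-ball rule in this paragraph (keep penalties, and free kicks only when they are in the opposition half near the goal) can be written as a small filter. The event positions are assumed to come from some tracking or annotation source, and the 105 m pitch length and 30 m "near the goal" cut-off are hypothetical values chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class DeadBallEvent:
    kind: str                     # e.g. "free_kick", "penalty", "corner", "throw_in"
    x_m: float                    # metres from the attacking team's own goal line
    pitch_length_m: float = 105.0  # assumed pitch length


def keep_dead_ball(event: DeadBallEvent, max_dist_to_goal_m: float = 30.0) -> bool:
    """Keep penalties, and free kicks in the opposition half close to goal."""
    if event.kind == "penalty":
        return True
    if event.kind == "free_kick":
        in_opposition_half = event.x_m > event.pitch_length_m / 2
        dist_to_goal = event.pitch_length_m - event.x_m
        return in_opposition_half and dist_to_goal <= max_dist_to_goal_m
    # Other restarts are left to the other signals (crowd, replays, tweets).
    return False
```

Events this filter rejects are not discarded outright; they can still enter the highlights if another signal, such as a celebration, marks them as important.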

A CNN and RNN architecture can be adapted to classify keyframes and to remember previous clips to maintain some continuity. For example, if a phase is divided into multiple clips, the internal memory of the RNN can keep track of the previous clips. In this way, a CNN classifies each clip into one of the phases, and the RNN then looks at the previously classified clips and checks whether the current state of the game is interesting enough to be added to the highlights video. We chose a CNN because it works well for image and video classification, and an RNN because storing the previous plays of the game requires memory. For the CNN we propose an architecture like VGG-19, and for the RNN we suggest a GRU: it has no separate memory cell and fewer parameters than an LSTM, so it is faster to train while typically giving similar performance. [4]
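To show how the GRU carries context from clip to clip, here is a minimal NumPy forward pass of a GRU cell (the Cho et al. 2014 formulation) followed by a logistic "add to highlights" output. It assumes each clip has already been turned into a feature vector by a CNN, for example a VGG-19 penultimate layer. The class name `TinyGRU` is hypothetical, and the weights are random and untrained, so the probabilities are meaningless until the model is fitted on labelled clips.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


class TinyGRU:
    """Minimal untrained GRU cell with a logistic head, for illustration only."""

    def __init__(self, input_dim, hidden_dim, seed=0):
        rng = np.random.default_rng(seed)
        s = 1.0 / np.sqrt(hidden_dim)

        def mat(rows, cols):
            return rng.uniform(-s, s, size=(rows, cols))

        self.hidden_dim = hidden_dim
        self.Wz, self.Uz = mat(hidden_dim, input_dim), mat(hidden_dim, hidden_dim)
        self.Wr, self.Ur = mat(hidden_dim, input_dim), mat(hidden_dim, hidden_dim)
        self.Wh, self.Uh = mat(hidden_dim, input_dim), mat(hidden_dim, hidden_dim)
        self.bz = np.zeros(hidden_dim)
        self.br = np.zeros(hidden_dim)
        self.bh = np.zeros(hidden_dim)
        self.w_out = mat(1, hidden_dim)[0]

    def step(self, x, h):
        z = sigmoid(self.Wz @ x + self.Uz @ h + self.bz)             # update gate
        r = sigmoid(self.Wr @ x + self.Ur @ h + self.br)             # reset gate
        h_cand = np.tanh(self.Wh @ x + self.Uh @ (r * h) + self.bh)  # candidate
        # Blend the previous state and the candidate; no separate memory cell.
        return (1.0 - z) * h + z * h_cand

    def highlight_probs(self, clip_features):
        """One probability per clip, each conditioned on all earlier clips."""
        h = np.zeros(self.hidden_dim)
        probs = []
        for x in clip_features:
            h = self.step(np.asarray(x, dtype=np.float64), h)
            probs.append(float(sigmoid(self.w_out @ h)))
        return probs
```

The GRU has three weight pairs per layer against the LSTM's four, which is where its smaller parameter count comes from.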

The highlight generator should not rely solely on one parameter, such as the model's prediction for a clip. It should also use other methods, such as voice modulation in the audio file (any important event is followed by a change in the amplitude of the commentator's voice) or Twitter analysis of the match. Relying solely on one prediction can lead to incorrect predictions that affect the insights that can be extracted. Even a small Python script that tracks the scoreline and cuts from 1 minute before each goal would capture the build-up and the whole attack. Combining a machine learning model with any of the non-machine learning methods and taking a weighted average of their outputs reduces the risk of misclassification. Non-machine learning algorithms include the following:
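The two ideas in this paragraph, cutting from one minute before each goal and taking a weighted average of several detectors, can be sketched as follows. Goal times are assumed to be known already (for example from a score feed); the 15 s tail after the goal, the detector names and the weights are hypothetical choices for illustration.

```python
from typing import Dict, Iterable, List, Optional, Tuple


def goal_windows(goal_times_s: Iterable[float], lead_s: float = 60.0,
                 tail_s: float = 15.0,
                 match_end_s: Optional[float] = None) -> List[Tuple[float, float]]:
    """Cut from `lead_s` before each goal to `tail_s` after it."""
    windows = []
    for t in sorted(goal_times_s):
        start = max(0.0, t - lead_s)
        end = t + tail_s
        if match_end_s is not None:
            end = min(end, match_end_s)
        windows.append((start, end))
    return windows


def fused_score(scores: Dict[str, float], weights: Dict[str, float]) -> float:
    """Weighted average of per-clip scores in [0, 1] from several detectors.

    Only detectors present in `scores` are averaged, so a missing signal
    (e.g. no tweets collected) simply drops out and the weights renormalize.
    """
    total = sum(weights[name] for name in scores)
    if total <= 0:
        raise ValueError("the weights of the available detectors must sum to > 0")
    return sum(weights[name] * s for name, s in scores.items()) / total
```

Renormalizing over the detectors that actually reported means a match with no usable Twitter data still gets a sensible score from the model and audio alone.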

Techniques for automatically summarizing sports using Twitter analysis have grown in popularity in recent years. When summarizing a sports video, we should consider the viewers' perspective to ensure that they comprehend not only the flow of the game but also its atmosphere, such as the excitement at each incident. A summarized video is referred to as a "highlight video" in this context. Events such as a goal, a play that sets up a goal, and a goal-scoring opportunity thrill viewers because they are directly tied to the game's outcome. [5]

As soon as a major event happens in the game, fans go to their Twitter accounts and tweet about it using a particular hashtag. If we plot a time series of the frequency of tweets received, we get a rough estimate of the key moments of the game. These are the moments that fans have deemed important, and they should be part of the highlights video.
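The tweet-frequency idea can be sketched as a histogram of tweet timestamps with a simple spike threshold. This assumes the tweets for the match hashtag have already been collected and their timestamps converted to seconds from kick-off (collection itself is not shown). The one-minute bins and the "mean plus two standard deviations" threshold are hypothetical choices.

```python
from collections import Counter
from statistics import mean, pstdev


def tweet_peaks(tweet_times_s, bin_s=60, k=2.0):
    """Return start times (s) of bins whose tweet count exceeds mean + k*std."""
    if not tweet_times_s:
        return []
    # Build the time series: number of tweets in each `bin_s`-second bin.
    counts = Counter(int(t // bin_s) for t in tweet_times_s)
    n_bins = max(counts) + 1
    series = [counts.get(i, 0) for i in range(n_bins)]
    threshold = mean(series) + k * pstdev(series)
    return [i * bin_s for i, c in enumerate(series) if c > threshold]
```

For example, if every minute of a ten-minute window gets 5 tweets except minute 4, which gets 100, only the bin starting at 240 s is returned.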

Audio intensity is a valuable indicator for identifying significant moments in a football game. The enthusiasm of the audience, player appeals, and excited commentary are all examples of major audio cues that are frequently connected with key developments in a match. Audio characteristics have been used to identify goals and other situations such as fouls [6]. This method is concerned not only with recognizing what is occurring on the field but also with how the audience reacts to it. By analysing the sound, the model detects anything out of the ordinary, such as the audience cheering or a referee and a player disagreeing, and then selects the appropriate clips for inclusion in the compilation. However, this strategy may not be appropriate for games with quieter audiences, such as chess, where there are no applauding fans. Crowds are not always a reliable signal either: in football, fans chant for a player or sing the club's anthem at random intervals to motivate the home team.
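A basic version of the audio-intensity cue is short-time RMS energy: split the soundtrack into fixed windows and flag windows much louder than the match average. The sketch assumes the audio is already a mono NumPy array of samples; the 1 s window and "mean plus two standard deviations" threshold are hypothetical. As the paragraph notes, crowd chanting can trigger it too, which is why it is meant to be combined with the other signals.

```python
import numpy as np


def loud_windows(samples, sample_rate, window_s=1.0, k=2.0):
    """Start times (s) of windows whose RMS energy exceeds mean + k*std."""
    x = np.asarray(samples, dtype=np.float64)
    win = int(window_s * sample_rate)
    n = len(x) // win
    if n == 0:
        return []
    # One row per window; any trailing partial window is dropped.
    frames = x[: n * win].reshape(n, win)
    rms = np.sqrt(np.mean(frames ** 2, axis=1))
    threshold = rms.mean() + k * rms.std()
    return [float(i * window_s) for i in np.flatnonzero(rms > threshold)]
```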

In the pipeline, we processed the raw video file to generate a highlights video of the whole match using a combination of machine learning and non-machine learning models. In this step, we extract the audio from the highlights, which feeds the next step, where we use Automatic Speech Recognition (ASR) [8] to generate the expected report. Several libraries and techniques are available in Python for converting video to audio [7]. One such library is MoviePy, a Python library for video editing, including slicing, concatenation, title insertion, video compositing, video processing, and effect generation [9]; examples of its use can be found in its documentation gallery. The Python script required for this step is straightforward: we access the audio member of the VideoFileClip object we created to extract the audio, and then write it to a new file whose name must be supplied.
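The extraction step described above amounts to opening the clip, taking its `audio` attribute and writing it out. The sketch below assumes MoviePy is installed; because MoviePy 2.x removed the `moviepy.editor` module, it tries the 2.x import first and falls back to the 1.x path. The function name `extract_audio` is hypothetical. Writing a `.wav` file keeps the output readable by common speech-recognition tools.

```python
def extract_audio(video_path, audio_path):
    """Write the audio track of `video_path` to `audio_path` (e.g. a .wav file)."""
    try:
        from moviepy import VideoFileClip  # MoviePy 2.x
    except ImportError:
        from moviepy.editor import VideoFileClip  # MoviePy 1.x
    clip = VideoFileClip(video_path)
    try:
        if clip.audio is None:
            raise ValueError(f"{video_path} has no audio track")
        # The audio member is an AudioFileClip that can be written to disk.
        clip.audio.write_audiofile(audio_path)
    finally:
        clip.close()  # release the ffmpeg reader processes
    return audio_path
```

Usage: `extract_audio("highlights.mp4", "highlights.wav")`, where both file names are placeholders.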

At its most fundamental, speech is nothing more than a sound wave. The acoustic properties of these sound waves, or audio signals, include amplitude, crest (peak), trough, wavelength, frequency, and cycle. Many speech recognition systems, particularly classical ones, use what is known as a Hidden Markov Model (HMM). This method is based on the idea that, when analysed over a short enough duration (say, ten milliseconds), a speech signal can be represented as a stationary process, that is, a process whose statistical features do not vary over time. Speech recognition, or converting the audio file received from the previous step into a report, can be done by creating an artificial neural network or by simply using a Python library.
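The short-window assumption translates directly into arithmetic on samples: at a 16 kHz sampling rate, a 10 ms frame is 16000 × 0.010 = 160 samples, so one second of audio gives 100 frames. The sketch below splits a signal into such non-overlapping frames; the function name `frame_signal` is hypothetical. Practical front ends commonly use overlapping windows instead (for instance 25 ms windows with a 10 ms hop).

```python
import numpy as np


def frame_signal(samples, sample_rate, frame_ms=10.0):
    """Split a 1-D signal into consecutive, non-overlapping frames of `frame_ms`."""
    frame_len = int(round(sample_rate * frame_ms / 1000.0))  # 160 at 16 kHz
    n_frames = len(samples) // frame_len
    # Drop the trailing partial frame, then reshape to (n_frames, frame_len).
    return np.asarray(samples)[: n_frames * frame_len].reshape(n_frames, frame_len)
```

Each row is treated as stationary, so per-frame features (energy, spectrum) summarize it before they are passed to an HMM or neural acoustic model.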

Speech recognition translates spoken words into text. Most modern approaches use deep learning and neural networks. Python offers several packages for speech recognition, such as 'apiai', 'assemblyai', 'google-cloud-speech', 'SpeechRecognition', 'pocketsphinx', and 'wit'. The Google speech recognition API is an easy way to convert speech into text, but it requires an internet connection. We can use the 'SpeechRecognition' module in our use case; its flexibility and ease of use make it an excellent choice for any Python project. SpeechRecognition has a Recognizer class, where everything happens; its instances are used to recognize speech. The class has seven methods for recognizing speech from an audio source, each using a different API: recognize_bing(), recognize_google(), recognize_google_cloud(), recognize_houndify(), recognize_ibm(), recognize_sphinx(), and recognize_wit().
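A minimal transcription loop with the SpeechRecognition package might look like the following. It assumes the extracted audio was saved as WAV (the package's `AudioFile` reads WAV, AIFF and FLAC) and uses `recognize_google()`, which needs an internet connection. Reading the file in 60-second pieces is our own choice to keep individual requests short, and `transcribe_wav` is a hypothetical name.

```python
def transcribe_wav(wav_path, chunk_s=60):
    """Transcribe a WAV file piece by piece and join the recognised text."""
    import speech_recognition as sr

    recognizer = sr.Recognizer()
    pieces = []
    with sr.AudioFile(wav_path) as source:
        while True:
            # Each call continues reading where the previous one stopped.
            audio = recognizer.record(source, duration=chunk_s)
            if not audio.frame_data:
                break  # reached the end of the file
            try:
                pieces.append(recognizer.recognize_google(audio))
            except sr.UnknownValueError:
                # No recognisable speech in this piece (e.g. only crowd noise).
                continue
    return " ".join(pieces)
```

Network failures surface as `sr.RequestError` and are deliberately not caught here. For offline use, `recognize_sphinx()` can be swapped in, at some cost in accuracy.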

This project could be highly useful in the field of sports analysis, as it gives managers and analysts not only a highlights video that distils the whole game into a few minutes but also a report of the whole game in the form of text. Another step could be added to the pipeline in which the generated report goes through text analysis to see how the team won or to find any significant pattern that emerges from it. Model selection and training are another area for improvement. This workflow can be adapted to other sports, such as hockey or rugby, where the score is kept and the phases of play are clear and distinct. Finally, while we have proposed that a combination of CNN and RNN can be useful, exploring other models and architectures that improve classification accuracy and maintain continuity could improve the final product considerably.

[1] Y. Lee, H. Jung, C. Yang, and J. Lee, "Highlight-Video Generation System for Baseball Games," 2020.

[2] P. Shukla et al., "Automatic cricket highlight generation using event-driven and excitement-based features," 2018.

[3] T. Worville, "Phases of play – an introduction," Stats Perform, Apr. 30, 2019. https://www.statsperform.com/resource/phases-of-play-an-introduction/ (accessed Nov. 24, 2022).

[4] A. Karpathy, G. Toderici, S. Shetty, T. Leung, R. Sukthankar, and L. Fei-Fei, "Large-scale video classification with convolutional neural networks," 2014.

[5] "Event Detection based on Twitter Enthusiasm Degree for Generating a Sports Highlight Video," ResearchGate. https://www.researchgate.net/publication/287050282_Event_Detection_based_on_Twitter_Enthusiasm_Degree_for_Generating_a_Sports_Highlight_Video (accessed Nov. 24, 2022).

[6] M. R. Islam, M. Paul, M. Antolovich, and A. Kabir, "Sports highlights generation using decomposed audio information," 2019.

[7] B. Guven, "Extracting Audio from Video using Python," Towards Data Science, Nov. 29, 2020. https://towardsdatascience.com/extracting-audio-from-video-using-python-58856a940fd (accessed Nov. 24, 2022).

[8] K. Doshi, "Audio deep learning made simple: Automatic Speech recognition (ASR), how it works," Towards Data Science, Mar. 25, 2021. https://towardsdatascience.com/audio-deep-learning-made-simple-automatic-speech-recognition-asr-how-it-works-716cfce4c706 (accessed Nov. 24, 2022).

[9] "MoviePy," PyPI. https://pypi.org/project/moviepy (accessed Nov. 24, 2022).

2022, IRJET | Impact Factor value: 7.529 | ISO 9001:2008 Certified