International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 09 Issue: 10 | Oct 2022 www.irjet.net p-ISSN: 2395-0072

1 Associate Professor, Department of Electronics and Computer Engineering, Maharashtra Institute of Technology, Aurangabad, Maharashtra-431010, India

2 Professor, Department of Electronics and Telecommunication, N B Navale Sinhgad College of Engineering, Kaegaon-Solapur-413255, Maharashtra, India

Abstract - Sign language is the principal means of communication for people who are deaf or speech-impaired, who use it to express their thoughts and emotions. A hand gesture is a kind of nonverbal communication that may be used in a number of situations, such as deaf-mute communication, robot control, human-computer interface (HCI), home automation, and medical applications. Research publications on hand gestures have used a variety of approaches, including those based on computer vision and instrumented sensor technologies. A hand sign may further be classified as a posture or a gesture, and as static, dynamic, or a mix of the two. This research reviews the literature on hand gesture techniques and examines their benefits and drawbacks in different contexts. Four key technical elements make up the gesture recognition concept for human-robot interaction: sensor technologies, gesture detection, gesture tracking, and gesture classification. The reviewed approaches are grouped according to these four components. After the technical analysis is presented, a statistical analysis is also provided. This paper offers a thorough analysis of hand gesture systems as well as a brief evaluation of some of their possible uses. The conclusion discusses potential directions for further research.

Key Words: Hand gesture, Sensor-based gesture recognition, Vision-based gesture recognition, Human-robot collaboration, Sensor gloves.

People with speech and hearing impairments use sign language as a means of conveying information. Essentially, sign language is made up of movements of body parts that convey information, emotion, and knowledge. Body language, facial expressions, and a manual system are all employed in sign language as a form of communication. These expressions give sign languages a more natural medium of interaction, and for those who are deaf or hard of hearing, they are the only available form. People who are deaf or speech-impaired can use sign language to communicate without spoken words. Sign language is not a universal language: like the various spoken languages around the globe, it may vary by region, and different nations may use one or several distinct sign languages [1].

There may be several sign languages in certain nations, including India, the United States, the UK, and Belgium. People use sign language as a kind of nonverbal communication to convey their feelings and ideas. Non-impaired individuals find it challenging to communicate with impaired people, so a certified sign language interpreter is needed during any training related to medical or educational fields [2]. The need for interpreters has been rising steadily over time in order to facilitate efficient communication with persons who are deaf or have speech impairments. Hand gestures are the major method used to display signs. A hand gesture may be further divided into global motion and local motion: in a global motion the whole hand moves, while in a local motion only the fingers move [3]. Hand gesture recognition technology is used in many applications today, including surveillance cameras, human-robot communication, smart TVs, controller-free video games, and sign language detection, among others. A gesture is the movement of a body part that carries non-verbal communication. Hand gestures are further divided into a number of types, including oral communication, manipulative, and communicative signs [4][5].

American Sign Language, Indian Sign Language, and Japanese Sign Language are only a few examples of the many sign languages that exist today. While the semantic interpretations of the language may change from region to region, the overall structure of sign language often stays the same. A basic "hello" or "bye" hand motion, for instance, can be the same in practically every sign language [6][7]. When using sign language, gestures are expressed using the hands, fingers, and occasionally the face, depending on the native language. Sign language is widely recognized because it is a significant form of communication and has a significant impact on society. With a robust, functional sign language detection approach, hearing-impaired persons can communicate effectively with non-signers without the use of an interpreter [8]. Due to regional differences, each nation's sign language has its own rules and syntax as well as some visually similar characteristics. For illustration, American Sign Language users in America could not comprehend Indian Sign Language without the necessary understanding of it [9][10].

The use of language is an essential component of our daily lives. Without understanding each other's language, communication can be challenging. Different communities employ a variety of languages for communication. People with hearing impairments use sign language as a means of communication [11]. Sign language is primarily visual, and signs are used to express sound patterns and meanings. Many countries have developed their own sign language standards based on regional and ethnic differences, including Korean Sign Language (KSL), Chinese Sign Language (CSL), Indian Sign Language (ISL), American Sign Language (ASL), British Sign Language (BSL), and Persian Sign Language (PSL) [12]. With sign language recognition technology, people with hearing and speech impairments can communicate effectively with those who are not affected. In a nation like India, sign language recognition systems are enhancing societal, private, and academic communication between people with and without such disabilities [13].

The main issues with ISL are that there is no standardized database for the language, no common format for ISL, and that there are several variants throughout the nation. Translating certain words becomes complicated because they have numerous meanings, such as the word "dear," which is particularly tough to deal with since it cannot be directly translated into a specific sign [14]. The research also demonstrates that English syntax, vocabulary, and structure are all well established in Indian Sign Language. Single-handed and double-handed gestures, which represent the tasks that need the most stretching, are two of the several gesture types employed in ISL [15].

Recognition of sign language is more difficult since it is multimodal. Handshape, movement, and position are the manual components, whereas facial expression, head position, and lip stance are the non-manual components in the recognition process. How sign language relates to English words is one of the primary obstacles to sign language recognition [16]. A straightforward test is to look for English terms with two distinct meanings. If ISL signs represented English words, there would be a sign with the same two senses as the English term. For instance, the English word "right" may signify either the opposite of "left" or the opposite of "wrong," depending on the context. There is no ISL sign that has these two meanings, however. In ISL, they are conveyed by two distinct signs, exactly as they are expressed by two distinct words in French, Spanish, Russian, Japanese, and the majority of other languages [17].

Language is the primary means of intercultural communication. Languages like Hindi, English, Spanish, and French may be used depending on the nation or area that a person is from. Currently, sign language is used as a means of communication by those who are unable to hear and speak. Hand gestures are used to convey ideas and what they want to say [18]. Today, it is hard to envisage a world without sign language, given how challenging it would be for these individuals to live without it. Thanks to sign language, people who are unable to hear or speak now have the chance to live better lives, because they can converse with others. We created an Indian sign language recognition system, which can be deployed in many locations, to enable people to comprehend the language of deaf and speech-impaired people and make their lives more comfortable [19].

Since deaf individuals find it challenging to get by in environments without sign language interpreters, we have developed this system to help solve the issues these people face. The ISL detection method may be used in banks, where a dedicated system can be set up for deaf and speech-impaired customers [20]. A separate screen can be mounted on the wall so that it is visible to all bank employees while they are at work. When such a person enters a bank, they may go straight to the dedicated system and use gestures to present their questions to the ISL recognition system. The gestures will be recognized by the system, and the relevant output will appear on the screen. Customers' inquiries may be answered in this manner. The system may also be used in grocery shops, where consumers can place orders using gestures and the ISL recognition system, and the shopkeeper can also place orders using this framework [21].

There are many ways to use sign language, and sign language interpretation is crucial for people with disabilities. Through the use of computers, this group may communicate with hearing communities. Computer equipment may be installed in banks, railway stations, airports, and other public and private locations so that deaf and speech-impaired people can communicate with hearing people. Computers can act as an interface between these two groups, since sign language is understood by the deaf and speech-impaired community but may not be understood by the hearing community. Such a system allows gestures to be transcribed into text or speech. Soldiers are also able to communicate with one another silently by employing sign language [22][23].

The following are a few uses of the Indian sign language recognition system:

1. With the use of this method, hearing-impaired and speech-impaired individuals may converse with one another.

2. It aids in the development of a sign language lexicon.

3. It also aids in the empowerment of deaf and speech-impaired people.

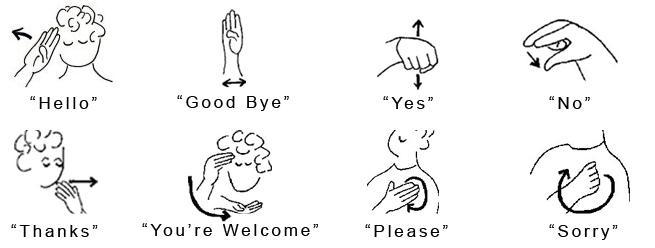

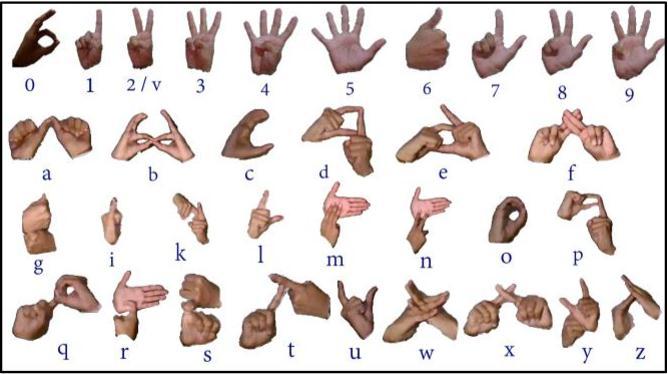

Sign language is one of the most useful forms of communication for those who cannot hear or speak. It also helps those who can hear but cannot talk, or vice versa. Sign language is a collection of hand gestures, body postures, and facial and body movements, which deaf individuals use to convey their ideas [24]. Each hand gesture, facial expression, and body movement has a distinct meaning attributed to it [25]. Different sign languages are used in various regions of the globe, and the spoken language and culture of a given location influence the sign language there. For instance, American Sign Language (ASL) is used in the USA, while research on Indian Sign Language has begun only recently with the standardization of ISL [26]. American Sign Language, which has its own syntax, has over 6000 gestures for commonly used words, together with fingerspelling. Fingerspelling uses a single hand and 26 gestures to represent the 26 English letters. The project's goal is to create a system that can translate American Sign Language into text. Sign language gloves are an effective tool for ensuring that conversations are meaningful [27]. Illustrations of ASL, ISL, and some popular sign languages are given in Figs. 1, 2, and 3, respectively.

Fig - 1: American sign language

Fig - 2: Indian sign language

Humans often use hand gestures to communicate their intentions while interacting with other people or with technology. Through this medium, a computer and a person communicate with one another nonverbally, which makes it an alternative to voice-based interaction. It is a contemporary method that allows computer commands to be executed after a hand gesture has been correctly captured and interpreted. Real-time applications such as automated television control [28], automation [29], intelligent monitoring [30], virtual and augmented reality [31], sign language identification [32], entertainment, and smart interfaces [33][34] often use human-generated motions or gestures. A gesture is a continuous movement that a human can easily perceive but that a computer has a hard time picking up. Human gestures include motions of the head, hands, arms, fingers, etc. Relative to other parts of the human body, the hands are the most understandable and practical mechanism for Human-Machine Interaction (HMI).

Fig - 3: Some popular sign languages

Hand gestures are separated into two categories: static gestures and dynamic gestures. A static gesture is a certain arrangement and stance of the hand that is shown by a single picture, whereas a dynamic gesture combines stance with a time-related video sequence. Every static gesture uses a single frame with minimal metadata and low processing effort. A dynamic gesture, on the other hand, is better suited to real-time applications since it takes the form of video and includes more complex and significant information; it also needs high-end hardware for training and testing. Several researchers have attempted to apply various machine learning techniques to hand gesture detection [35]. This field considers the hand with its fingers, palm, and thumb in order to detect, identify, and recognize the gesture. Hand estimation is particularly challenging because of the hand's small size relative to other body parts, greater identification difficulty, and frequent self- or object occlusions. It is also challenging to build an efficient hand motion detection system. Numerous investigations have been conducted, and hand gesture detection has been reported especially from 2-dimensional video streams captured with digital cameras.

These methods, however, run into issues such as colour sensitivity, opacity, cluttered backgrounds, lighting shifts, and light variance. Investigators can now take 3D scene details into consideration thanks to the recently released version of the Microsoft Kinect camera, which has a realistic and affordable depth sensor. Furthermore, the depth sensor is more robust to variations in light. Recognition has traditionally been carried out by machine learning algorithms after explicit extraction of the spatial-temporal hand gesture features [36]. Many of the features were manually built or "hand-crafted" by the user in accordance with the framework to address particular issues such as hand size variation and variable illumination. Feature extraction in the hand-crafted methodology is problem-oriented. Producing hand-crafted features also requires finding the ideal balance between computational efficiency and precision. Deep learning has brought about a paradigm change in computer vision, however, owing to recent advancements in data and processing resources with powerful hardware. Deep learning-based algorithms have recently been able to infer meaningful information from videos without needing a separate feature extraction algorithm. Interesting results have also been found in a number of tasks, notably hand gesture recognition [37]. A recent review has shed some light on the use of hand gesture recognition in our daily lives. Some of the frequent uses of hand gesture recognition include robot control, gaming, surveillance, and sign language understanding. The main goal of this study is to offer a thorough analysis of hand gesture recognition, which is the core of sign language recognition [38].

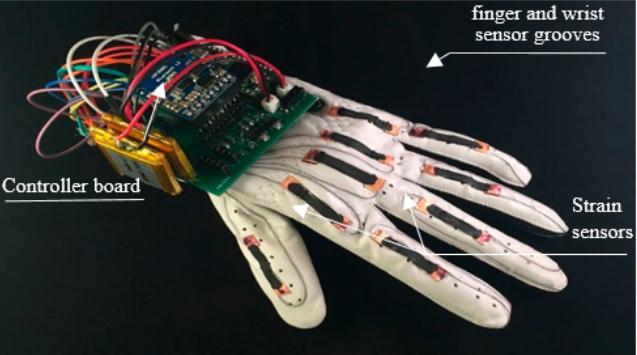

Several hardware methods are used to obtain data about body location. The majority of trackers are device-based or image-based. Gathering the motions is the first phase, and identifying them is the next. The present research attempts to analyze data from flex sensors installed on a glove in order to recognize the gestures that are made. Despite a number of flaws in the system, such as uncertainty and noise, the prediction accuracy of the device is relatively high. The number of people who are speech- and hearing-impaired has increased drastically in recent years. To communicate, these people use their own language.

However, we must comprehend their language, which can be challenging at times. The project seeks to solve this problem and enable two-way communication by employing flex sensors whose output is sent to a microcontroller. The microcontroller processes the signals and converts the analog signals into digital ones. Once the gesture has been analyzed further, the intended output is presented together with spoken output [39].
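As a rough illustration of the flex-sensor idea described above, the sketch below turns five digitized sensor readings into one bent/straight bit per finger and looks the resulting pattern up in a table. It is a minimal sketch, not the cited system: the 10-bit ADC range, the threshold, the finger order, and the gesture table are all assumptions made for the example.

```python
from typing import Dict, Sequence, Tuple

ADC_MAX = 1023          # assumed 10-bit ADC full-scale value
BEND_THRESHOLD = 0.5    # assumed normalized level above which a finger counts as bent

# Hypothetical lookup table: (thumb, index, middle, ring, little) -> label
GESTURES: Dict[Tuple[int, ...], str] = {
    (0, 0, 0, 0, 0): "open hand",
    (1, 1, 1, 1, 1): "fist",
    (1, 0, 1, 1, 1): "point",
}


def quantize(readings: Sequence[int]) -> Tuple[int, ...]:
    """Convert raw ADC counts to one bent (1) / straight (0) bit per finger."""
    return tuple(int(r / ADC_MAX > BEND_THRESHOLD) for r in readings)


def recognize(readings: Sequence[int]) -> str:
    """Return the label for five flex-sensor readings, or 'unknown'."""
    return GESTURES.get(quantize(readings), "unknown")
```

A real glove would need per-user calibration and noise filtering before thresholding; the fixed threshold here only keeps the example short.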

In view of the academic community's growing interest in gesture recognition in general and hand gesture identification in particular during the last two decades, a multitude of relevant approaches and tools have evolved. Although several secondary studies have attempted to cover these solutions, there are no comprehensive studies in this field. The fundamental contribution of this paper is a thorough analysis and compilation of significant findings on hand gesture recognition, supporting technologies, and vision-based hand gesture recognition methods. The primary methods and algorithms for vision-based gesture recognition are also explored. This paper offers a thorough examination of hand gesture analysis methods, taxonomies for hand gesture identification methods, methodologies and programs, implications, unresolved problems, and possible future research areas.

The literature reviews, surveys, and studies on hand gesture recognition are briefly discussed in this subsection. A review of the research on vision-based human motion tracking was done by Moeslund et al. [40]. The review gives a general overview of the four motion capture system procedures of identification, tracking, initialization, and motion analysis. The authors also analyzed a variety of system functioning features and suggested a number of potential future research avenues to develop this field. Derpanis et al. [41] discussed hand gesture recognition from a variety of angles. The study focuses on a number of groundbreaking papers in the subjects under discussion, including feature types, classification strategies, gesture set (linguistic) encoding, and hand gesture recognition implementations. Common issues in the field of vision-based hand gesture identification are discussed in the study's conclusion. The survey by Mitra et al. [42] is an analysis of the prior literature with a primary emphasis on hand gestures and facial expressions. Vision-based techniques for recognizing facial movements and hand gestures are presented. This important paper also identifies current difficulties and potential research directions. The body of work on hand gesture recognition has been reviewed in a literature study by Chaudhary et al. [43]. The primary emphasis of this review is on artificial neural networks, genetic algorithms, and other soft computing-based methodologies. It was found that the majority of researchers use the fingers to recognize hands in appearance-based modelling. Various sensor- and vision-based hand gesture detection algorithms were examined by Corera et al. [44] in addition to current tools. The article covers the design issues and logical difficulties associated with hand gesture recognition methods. The authors also assess the advantages and disadvantages of sensor- and vision-based approaches.

This section of the study highlights the present research needs by specifying the research questions and associated keywords.

The problem of identifying and demonstrating hand gesture recognition techniques is not new. Different strategies, procedures, and techniques have been created over time to demonstrate the components of hand gesture recognition. The following research questions will be addressed by this systematic literature review (SLR):

Q1. What current techniques and supporting technologies are available for hand gesture recognition?

Findings from addressing the research questions. This part addresses the research questions formulated to achieve the objectives of the study. Gestures are a means of interacting and communicating with people. Recognizing a gesture demonstrates one's capacity to discern the message another person is trying to convey. Gesture recognition is the identification of human gestures by computers. The physical movement of one's fingers, arms, hands, or torso may form a gesture and convey a message [45].

In computer science, gesture recognition is used to understand and analyze human gestures using mathematical algorithms. Although they may be made with the whole body, gestures usually originate from the hands or the face. According to Mitra and Acharya [46], gestures are expressive, meaningful bodily motions that include physical actions of the fingers, hands, arms, head, face, or torso. Human perception of body motions is distinct from, and more spontaneous than, a machine's capacity for recognition. Through senses including hearing and vision, humans are better able to detect gestures on the spot.

Compared to other body parts, the hand is the one used most for gesturing. As a result, the hand is the ideal communication tool and candidate for HCI integration. Human hand movements make natural and efficient non-verbal communication with a computer interface possible. Hand gestures are the expressive gesture signs of the hands, arms, or fingers. Hand gesture recognition includes both static gestures against complicated backgrounds and dynamic gestures that interact with both computers and people. The hand serves directly as the machine's input; no intermediary medium is required for the communication function of gesture recognition [47].

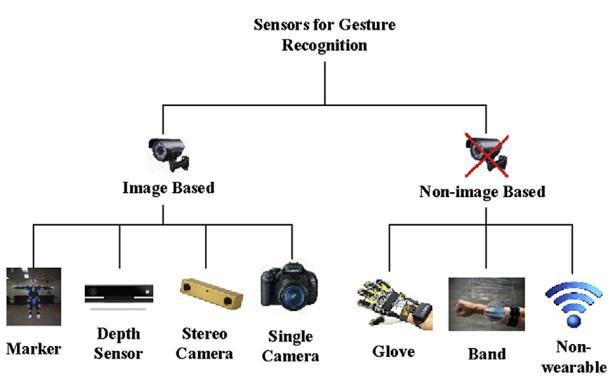

Sensor-based analysis and vision-based analysis are the two main kinds of hand gesture analysis methodologies used to recognize hand gestures. To capture hand gestures, the first approach calls for visual or mechanical sensors integrated into gloves, which carry electrical signals corresponding to the flexing of the fingers. The hand position may be determined using an additional sensor; for this purpose, a glove with signal-receiving capabilities is attached to an acoustic or magnetic sensor. This glove is linked to a software toolkit in order to transmit information pertinent to hand position identification [48]. The second category of methods, referred to as "vision-based analysis," relies on the way in which individuals pick up cues from their surroundings. This approach extracts several features from specific images using a variety of extraction methods. Most methods for recognizing hand gestures use mechanical sensors, which are often employed to regulate signals from the environment and surroundings. Communication systems that utilize only signals include mechanical sensing as well. The application of mechanical sensors to hand gesture identification, on the other hand, is associated with a few problems concerning the accuracy and reliability of the data acquired, as well as potential noise from electromagnetic equipment. The process of gesture interaction may be facilitated by visual perception [49].

Gesture recognition is the process of analyzing and translating hand motions into meaningful instructions. The major objective of hand gesture recognition is therefore to develop a platform that can detect human hand gestures as inputs and then use these gestures to interpret information and generate output data in accordance with the criteria supplied in the input. There are two categories of hand gesture recognition technologies: (1) sensor-based and (2) vision-based methods [50].

Sensor-based technologies depend on tools for user interactions and other real-world objects that relies on technologicaladvancements.Aschematicofvarioussensors forgesturerecognitionhasbeenusedinFig 4.

International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 09 Issue: 10 | Oct 2022 www.irjet.net p-ISSN: 2395-0072

coloured-coded to guide the operation of monitoring the handandfindingthepalmandfingersthatwillsupplythe precisegeometriccharacteristicsthatleadtothefabrication oftheprosthetichand[52].

The glove technique makes use of sensors to record the locationandmovementsofthehands.Themotionsensors onthehandare responsible fordetectingtheuser'shand, while the sensors on the gloves are responsible for determiningthepropercoordinatesofwheretheuser'spalm andfingersarelocated.Thismethodislaborioussincethe user must be physically linked to the computer. An illustration of a sensor-based technique is the data glove [51].Theadvantagesofthisstrategyincludehighlyaccurate and quick response times. In Fig. 5 a glove consisting of sensorshasbeenshown.

Fig - 6: Colourmarkerbasedhandgesturerecognition

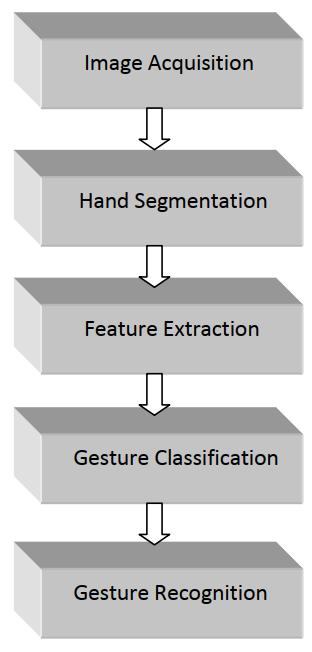

Forarecognitionsystemtofunctionnaturally,acameraisall thatisneededtocaptureapicture.Inreal-timeapplications, thatismorebeneficial[53].Althoughthisapproachseems straightforward, there are several obstacles to overcome when using it, including a complicated backdrop, varying lighting,andotherobjectswithdifferentskintonesholding thehanditem.Technologiesthatrelyonvisiondealswith theaspectsofanimagesuchastextureandcolourthatare necessary for gesture recognition. Following picture preprocessing, various strategies have developed for recognizing hand objects. Steps for the execution of programshavebeenshowninFig.7,whichshowsinitiation fromtheimagecapturingtothegesturerecognitionstep.

The drawback of this strategy is that data gloves are prohibitively costly, need the use of the whole hardware toolbox, and are rigid in character. Therefore, the visionbased technique was developed in order to get over this restriction.

AschematichasbeenaddedinFig. 6relatedtothecoloured marked hand glove recognition system. According to this method, the glove that the human hand will wear is

Fig.-7: Visionbasedhandgesturerecognitionsystem
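The flow of a vision-based system, from a captured image through preprocessing and segmentation to recognition, can be sketched as a chain of small functions. This is a toy illustration under stated assumptions rather than any method from the reviewed papers: the brightness threshold, the three-element feature vector, the nearest-template classifier, and all function names are hypothetical choices made for the example.

```python
import numpy as np


def preprocess(frame):
    """Convert an RGB frame (H, W, 3, uint8) to grayscale values in [0, 1]."""
    return frame.astype(np.float64).mean(axis=2) / 255.0


def segment(gray, threshold=0.5):
    """Binary mask of pixels brighter than the threshold (assumed to be the hand)."""
    return gray > threshold


def extract_features(mask):
    """Feature vector: foreground area fraction and normalized centroid (row, col)."""
    h, w = mask.shape
    rows, cols = np.nonzero(mask)
    if rows.size == 0:
        return np.zeros(3)
    return np.array([rows.size / (h * w), rows.mean() / h, cols.mean() / w])


def classify(features, templates):
    """Return the name of the template closest to the features (Euclidean distance)."""
    return min(templates, key=lambda name: np.linalg.norm(features - templates[name]))


def recognize(frame, templates):
    """Run the full chain: preprocess, segment, extract features, classify."""
    return classify(extract_features(segment(preprocess(frame))), templates)
```

In practice each stage would be replaced by something far more robust (for example skin-colour or depth-based segmentation and a trained classifier); the point of the sketch is only the order of the stages.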

The appearance-based method models the visual appearance of the input hand image using morphological operations, and the extracted features are then matched against those of the stored image. Compared with a 3D model-based technique, this method is simpler and offers real-time performance. Its general aim is to look for skin-coloured areas in a picture. Many recent studies use invariant features, such as those selected by the AdaBoost learning method: rather than modelling the whole hand, one can identify specific locations and regions on the hand by using these invariant properties. The advantage of this approach is that it addresses the occlusion issue [54].
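Looking for skin-coloured areas can be illustrated with an explicit per-pixel colour rule. The sketch below is one simple possibility, not the method of any cited study; the RGB thresholds are illustrative assumptions and would need tuning for different cameras, lighting conditions, and skin tones.

```python
import numpy as np


def skin_mask(rgb):
    """Boolean mask of pixels that satisfy a simple explicit RGB skin rule.

    `rgb` is an (H, W, 3) uint8 array; all thresholds are illustrative assumptions.
    """
    rgb = rgb.astype(np.int32)  # avoid uint8 wrap-around in the differences below
    r, g, b = rgb[..., 0], rgb[..., 1], rgb[..., 2]
    spread = rgb.max(axis=-1) - rgb.min(axis=-1)
    return (
        (r > 95) & (g > 40) & (b > 20)
        & (spread > 15)
        & (np.abs(r - g) > 15) & (r > g) & (r > b)
    )
```

A fixed rule like this is sensitive to illumination changes and to skin-coloured background objects, which is one reason the surveyed work moves towards invariant features and learned classifiers.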

Fig - 8: 3D model-based approaches.

The 3D model-based method uses a 3D model to analyze and simulate the geometry of the hand, as depicted in Fig. 8. A depth parameter is added to the 3D model to increase accuracy, since some data is lost during the 2D projection [3]. Volumetric and skeletal models are the two types of 3D models, and both have limitations in their design. Volumetric models are employed in real-world applications because they deal with the 3D appearance of the user's hand. The fundamental design challenge of such a system is its very high-dimensional parameter space, which forces the designer to work in three dimensions [55].

This design uses several coloured markers, as shown in Fig. 9, to track the movement of the body or of specific body parts. By wearing red, green, blue, and yellow markers, which are used to interact with virtual prototypes, users may zoom, rotate, sketch, and write on on-screen keyboards. This approach offers more flexibility [56].
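Tracking a coloured marker can be reduced, in its simplest form, to finding the pixels close to the marker colour and taking their centroid in each frame. The following is a minimal sketch of that idea only; the function name, the per-channel tolerance, and the use of raw RGB distance are assumptions for illustration and are not taken from the cited work.

```python
import numpy as np


def marker_centroid(rgb, target, tol=40):
    """Centroid (row, col) of pixels within `tol` of `target` on every channel.

    Returns None when no pixel matches. `rgb` is an (H, W, 3) uint8 array and
    `target` an (R, G, B) triple; the tolerance is an illustrative assumption.
    """
    diff = np.abs(rgb.astype(np.int32) - np.asarray(target, dtype=np.int32))
    mask = (diff <= tol).all(axis=-1)
    rows, cols = np.nonzero(mask)
    if rows.size == 0:
        return None
    return float(rows.mean()), float(cols.mean())
```

Calling the function once per marker colour on successive frames yields one trajectory per marker, which an application could then map to actions such as zooming or rotating.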

By restricting the parameters, skeletal modelling fixes the issues with the volumetric model. The sparse erosion results in greater efficiency. The feature optimization is intricate. Compressive sensing is employed to recover sparse signals from a small number of observations, which helps to save resources [57]. A schematic of the 3D skeletal-based model is depicted in Fig. 10.

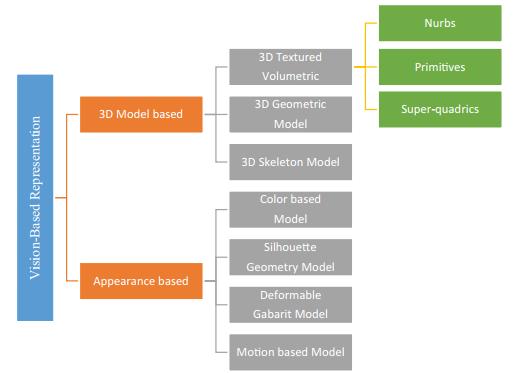

The motions of diverse human body gestures may be described by a variety of models and representations. The 3D model-based (3D prototype) and appearance-based techniques are the two primary methods for representing gestures, as shown in Fig. 11.

International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 09 Issue: 10 | Oct 2022 www.irjet.net p-ISSN: 2395-0072

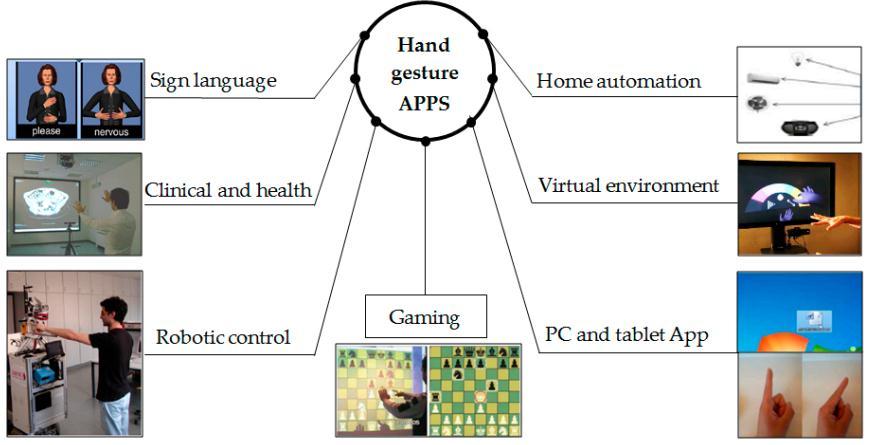

many different fields [59]. Fig. 13 shows the possible applicationofhandgestureinteractionsystem.

Fig -11: Representationofvision-basedhandrecognition techniques

Fig -13: Applicationofhandgestureinteractionsystem

a) Virtual reality: Increasing real-world applications and virtualgesturesbothhavehelpedtodrivesignificantgrowth forcomputerhardware.

b) Surgical Clinic: TheKinecttechnologyisoftenutilizedin hospitals to streamline operations owing to increasing workload.Themanyfunctionalities,suchasmove,modify, and zoom in on Ct scanners, MRIs, and other diagnostic pictures,willbeoperatedbythephysiciansusingthehand gesturesystem.

Fig -12: Statisticaldataoftheuseof3Dprototypeand appearance-basedtechniques

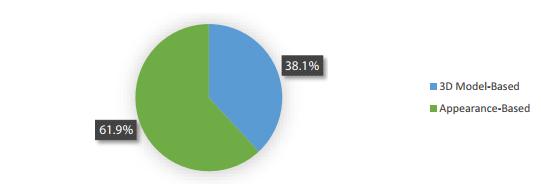

A statistical study of the use of 3D prototype and appearance-based techniques in vision-based gesture identificationisshowninFig.12.Here,itappearsthatthe scientificcommunityfavorsappearance-basedmethodsover 3Dmodelapproachandvision-basedmethodsforgesture detection.38.1%oftheacademicpublicationsdiscussed3D model-based techniques, while 61.9% of the articles discussedappearance-basedtechniques[58].

Q2. What are the many situations in which hand gestures for interactivity are used commercially available programs, equipment, and application domains?

Systems for recognizing hand gestures using vision are crucialforseveraleverydaytasks.

This subsection addresses some disciplines that require gestureinteractionssincegesturerecognitioniscrucial in

c) Programs for desktop and tablet computers: Gestures areutilizedintheseapplicationstoprovideanalternative kindofinteractivitytothatproducedbythekeyboardand mice.

d) Sign Language: The use of signs as a means of communications is seen as an essential component of interpersonal communication. This is due to the fact that sign language is seen to have a stronger structural foundation and is better suited for test beds used in algorithmic computer vision. Furthermore, it may be said that using such algorithms will help persons with impairmentscommunicatewithoneanother.Theliterature haspaidalotofattentiontohowsignlanguageforthedeaf encouragestheutilizationofgestures[60].

According to the current assessment, research on gesture recognition and vision-based approaches typically encounters a number of open problems and difficulties. Research on hand gesture identification now confronts several challenges, including the identification of invariant features, transition models between gestures, minimal sign language recognition units, automatic segmentation of recognition units, recognition approaches that scale with vocabulary size, secondary information, signer independence, and mixed gesture verification.

Vision-based static gesture recognition is thus the current trend in hand gesture recognition, and it primarily faces the following two technological challenges:

1. Difficulties detecting targets: Target detection involves extracting the object of interest from the visual stream when the target is captured against a complicated background. Separating the human hand region from the other background areas in an image is always a challenge for vision-based hand gesture identification systems, mostly because of the variability of the background and unanticipated environmental factors.

2. Challenges with target identification: Hand gesture understanding aims to interpret the high-level meaning conveyed by hand posture and movement. The essential technique for recognizing hand gestures is to extract geometrically invariant features in light of the following properties of hand gestures.

3. Since the hand is a flexible entity, comparable movements may vary greatly from one another while still sharing a great deal of similarity. The human hand has more than 20 degrees of freedom, and it may move in a variety of intricate ways. As a result, the same gesture may differ when it is made by different persons, or by the same person at a different time or location.

4. The hand carries a lot of redundant information, because identifying finger characteristics is the crucial component of hand gesture recognition. The palm feature is one example of such redundant information.
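To make the target detection difficulty in item 1 concrete, the following minimal Python sketch separates candidate hand pixels from the background with a fixed RGB skin-colour rule. It is an illustration only and is not a method taken from the reviewed papers: the function names `skin_mask` and `bounding_box` and all threshold values are assumptions chosen for the example.

```python
import numpy as np


def skin_mask(rgb):
    """Return a boolean mask of pixels that pass a simple RGB skin-colour rule.

    The thresholds are illustrative assumptions, not values from the paper.
    """
    rgb = np.asarray(rgb).astype(np.int32)  # avoid uint8 overflow in r - g
    r, g, b = rgb[..., 0], rgb[..., 1], rgb[..., 2]
    spread = rgb.max(axis=-1) - rgb.min(axis=-1)
    return (
        (r > 95) & (g > 40) & (b > 20)   # bright enough in every channel
        & (spread > 15)                   # not grey
        & (np.abs(r - g) > 15)            # red clearly separated from green
        & (r > g) & (r > b)               # red-dominant
    )


def bounding_box(mask):
    """Return (top, left, bottom, right) of the True pixels, or None if empty."""
    rows = np.flatnonzero(mask.any(axis=1))
    cols = np.flatnonzero(mask.any(axis=0))
    if rows.size == 0:
        return None
    return int(rows[0]), int(cols[0]), int(rows[-1]), int(cols[-1])


if __name__ == "__main__":
    # Synthetic image: blue background with a skin-toned rectangle.
    image = np.zeros((6, 6, 3), dtype=np.uint8)
    image[...] = (20, 40, 200)
    image[2:4, 1:5] = (200, 140, 120)
    print(bounding_box(skin_mask(image)))
```

A fixed rule of this kind works on a clean synthetic image but is expected to break down under exactly the background variability and environmental factors described in item 1, which is why more robust detection remains an open problem.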

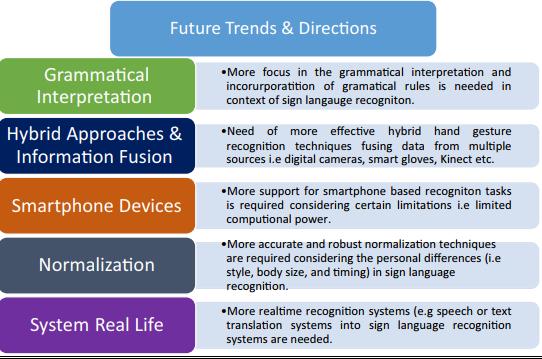

Based on our analysis and inspection of more than 150 papers, we were able to identify a number of potential study avenues and research subjects that may be investigated further. The main developments and directions in gesture recognition research are shown in Fig. 14. First, sign languages (SLs) contain unique grammatical rules that have not been well studied in relation to their equivalent spoken languages. Although there have been relatively few research efforts in this area, it may be a future avenue for hand gesture detection problems. Therefore, research into and incorporation of sign language morphological norms into spoken expressions may be beneficial for prospective sign language recognition systems. Second, creating hybrid techniques is a current trend in many domains that may be researched and used in hand gesture detection.

The development of hybrid systems that incorporate various algorithms and approaches, as well as non-homogeneous sensors such as Kinect, innovative gloves, image sensors, and detectors, is thus urgently needed. This is regarded as another worthwhile study topic in the area of hand gesture recognition.
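One simple way to combine non-homogeneous sensors is late fusion, in which each sensor has its own classifier and only the resulting class probabilities are merged. The Python sketch below is a hedged illustration of that idea and is not a system described in the reviewed literature: the function name `late_fusion`, the sensor names, the weights, and the probability values are all hypothetical.

```python
import numpy as np


def late_fusion(prob_by_sensor, weights):
    """Fuse per-sensor class probabilities with a weighted average.

    prob_by_sensor: dict mapping a sensor name to a list of class probabilities.
    weights: dict mapping the same sensor names to non-negative weights.
    Both inputs are assumptions made for this example.
    """
    names = sorted(prob_by_sensor)
    probs = np.array([prob_by_sensor[n] for n in names], dtype=float)
    w = np.array([weights[n] for n in names], dtype=float)
    w = w / w.sum()                          # normalise the sensor weights
    fused = (w[:, None] * probs).sum(axis=0)
    return fused / fused.sum()               # renormalise to a distribution


if __name__ == "__main__":
    # Hypothetical outputs of a camera-based and a glove-based classifier
    # over the same three gesture classes.
    fused = late_fusion(
        {"camera": [0.6, 0.3, 0.1], "glove": [0.2, 0.7, 0.1]},
        {"camera": 0.5, "glove": 0.5},
    )
    print(fused, int(np.argmax(fused)))
```

Late fusion keeps the sensors independent, so a glove or depth camera can be added or removed without retraining the other classifiers. The price is that correlations between the raw signals are ignored.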

Fig -14: Future research trends and directions

The general public, people with physical disabilities, those playing 3D games, and people communicating nonverbally with computers or other people may all use hand gesture detection systems. Because hand gesture recognition systems are being used more and more, further study is needed. This article provides a critical analysis of the many approaches employed nowadays. The generic key elements and techniques of a hand gesture recognition system are detailed. Methods for classification, tracking, feature extraction, and gesture detection are briefly compared. Developing highly effective and intelligent Human-Computer Interfaces (HCI) requires the use of vision-based gesture recognition algorithms. Techniques for recognizing hand gestures have become a popular kind of HCI.

There has been a noticeable change in study focus toward the understanding of sign language. This research also outlined prospective uses for hand gesture recognition technologies as well as the difficulties in this field from three angles: system, environmental, and gesture-specific difficulties. The findings of this systematic review also suggested several possible future study topics, including the urgent need for a focus on linguistic interpretations, hybrid methodologies, smart phones, standardization, and real-world systems.

[1] G Adithya V., Vinod P. R., Usha Gopalakrishnan, “Artificial Neural Network Based Method for Indian Sign Language Recognition,” IEEE Conference on Information and Communication Technologies (ICT), 2013, pp. 1080-1085.

[2] Rajam, P. Subha and G Balakrishnan, “Real Time Indian Sign Language Recognition System to aid Deaf and Dumb people,” 13th International Conference on Communication Technology (ICCT), 2011, pp. 737-742.

[3] Deepika Tewari, Sanjay Kumar Srivastava, “A Visual Recognition of Static Hand Gestures in Indian Sign Language based on Kohonen Self-Organizing Map Algorithm,” International Journal of Engineering and Advanced Technology (IJEAT), Vol. 2, Dec 2012, pp. 165-170.

[4] G. R. S. Murthy, R. S. Jadon, “A Review of Vision Based Hand Gestures Recognition,” International Journal of Information Technology and Knowledge Management, vol. 2(2), 2009, pp. 405-410.

[5] P. Garg, N. Aggarwal and S. Sofat, “Vision Based Hand Gesture Recognition,” World Academy of Science, Engineering and Technology, Vol. 49, 2009, pp. 972-977.

[6] Fakhreddine Karray, Milad Alemzadeh, Jamil Abou Saleh, Mo Nours Arab, “Human Computer Interaction: Overview on State of the Art,” International Journal on Smart Sensing and Intelligent Systems, Vol. 1(1), 2008,

[7] Appenrodt J, Handrich S, Al-Hamadi A, Michaelis B, “Multi stereo camera data fusion for fingertip detection in gesture recognition systems,” International conference of soft computing and pattern recognition, 2010, pp. 35–40.

[8] Auephanwiriyakul S, Phitakwinai S, Suttapak W, Chanda P, Theera-Umpon N, “Thai sign language translation using scale invariant feature transform and hidden markov models,” Pattern Recogn Lett 34(11), 2013, pp. 1291–1298.

[9] Aujeszky T, Eid M, “A gesture recognition architecture for Arabic sign language communication system,” Multimed Tools Appl 75(14), 2016, pp. 8493–8511.

[10] Bao J, Song A, Guo Y, Tang H, “Dynamic hand gesture recognition based on SURF tracking,” international conference on electric information and control engineering, 2011, pp. 338–341.

[11] Bellarbi A, Benbelkacem S, Zenati-Henda N, Belhocine M, “Hand gesture interaction using color based method for tabletop interfaces,” 7th international symposium on intelligent signal processing, 2011, pp. 1–6.

[12] Dhruva N. and Sudhir Rao Rupanagudi, Sachin S.K., Sthuthi B., Pavithra R. and Raghavendra, “Novel Segmentation Algorithm for Hand Gesture Recognition,” IEEE, 2013, pp. 383-388.

[13] Gaolin Fang, Wen Gao, and Debin Zhao, “Large Vocabulary Continuous Sign Language Recognition Based on Transition-Movement Models,” IEEE Transactions on Systems, Man, and Cybernetics, Part A: Systems and Humans, Vol. 37(1), 2007, pp. 1-9.

[14] Sulochana M. Nadgeri, Dr. S. D. Sawarkar, Mr. A. D. Gawande, “Hand gesture recognition using CAMSHIFT Algorithm,” Third International Conference on Emerging Trends in Engineering and Technology, IEEE, 2010, pp. 37-41.

[15] Vasiliki E. Kosmidou and Leontios J. Hadjileontiadis, “Sign Language Recognition Using Intrinsic-Mode Sample Entropy on sEMG and Accelerometer Data,” IEEE Transactions on Biomedical Engineering, Vol. 56(12), 2009, pp. 2879-2890.

[16] Joyeeta Singha, Karen Das, “Indian Sign Language Recognition Using Eigen Value Weighted Euclidean Distance Based Classification Technique,” International Journal of Advanced Computer Science and Applications, Vol. 4, No. 2, 2013, pp. 188-195.

[17] Dipak Kumar Ghosh, Samit Ari, “A Static Hand Gesture Recognition Algorithm Using K-Mean Based Radial Basis Function Neural Network,” IEEE, 2011, pp. 1-5.

[18] N. D. Binh, T. Ejima, “Real-Time Hand Gesture Recognition Using Pseudo 3-D Hidden Markov Model,” 5th IEEE International Conference on Cognitive Informatics, 2, 2006, pp. 820-824.

[19] Y. Han, “A Low-Cost Visual Motion Data Glove as an Input Device to Interpret Human Hand Gestures,” IEEE Transactions on Consumer Electronics, vol. 56, 2010, pp. 501-509.

[20] L. Dipietro, A. M. Sabatini, P. Dario, “Survey of Glove Based Systems and Their Applications,” IEEE Transaction on Systems, Man and Cybernetics, Part C: Applications and Reviews, vol. 38, 2008, pp. 461-482.

[21] X. Y. Wang, G. Z. Dai, X. W. Zhang, “Recognition of Complex Dynamic Gesture Based on HMM-FNN Model,” Journal of Software, vol. 19, 2008, pp. 2302-2312.

[22] F. Quek, T. Mysliwiec, M. Zhao, “FingerMouse: a Freehand Pointing Interface,” IEEE International Conference on Automatic Face and Gesture Recognition-FGR, 1995, pp. 372-377.

[23] J. Triesch, C. V. D. Malsburg, “A System for Person-independent Hand Posture Recognition against Complex Backgrounds,” IEEE Transaction on Pattern Analysis and Machine Intelligence, vol. 23, 2001, pp. 1449-1453.

[24] B. Stenger, “Template Based Hand Pose Recognition Using Multiple Cues,” Lecture Notes in Computer Science, Vol. 3852, 2006, pp. 551-560.

[25] C. Keskin, F. Kirac and Y. E. Kara, Lale Akarun, “Real Time Hand Pose Estimation Using Depth Sensors,” In Proceedings of the IEEE International Conference on Computer Vision Workshops, 2011, pp. 1228–1234.

[26] J. Davis, M. Shah, “Visual Gesture Recognition,” IEEE Proceedings on Vision, Image and Signal Processing, vol. 141, 1994, pp. 101-106.

[27] Jerome M. Allen, Pierre K. Asselin, Richard Foulds, “American Sign Language Finger Spelling Recognition System,” IEEE, 2003, pp. 285-286.

[28] Nimitha N, Ranjitha R, Santhosh Kumar V, Steffin Franklin, “Implementation of Real Time Health Monitoring System Using Zigbee For Military Soldiers,” International Journal for Research in Applied Science & Engineering Technology (IJRASET), Volume 3, Issue IV, April 2015, pp. 1056-1059.

[29] A. S. Ghotkar, G. K. Kharate, “Hand Segmentation Techniques to Hand Gesture Recognition for Natural Human Computer Interaction,” International Journal of Human Computer Interaction (IJHCI), Computer Science Journal, Malaysia, Volume 3, no. 1, April 2012, pp. 15-25.

[30] U. Rokade, D. Doye, M. Kokare, “Hand Gesture Recognition Using Object Based Key Frame Selection,” International Conference on Digital Image Processing, IEEE Computer Society, 2009, pp. 228-291.

[31] N. D. Binh, E. Shuichi, T. Ejima, “Real time Hand Tracking and Gesture Recognition System,” ICGST International Conference on Graphics, Vision and Image Processing, GVIP 05 Conference, 2005, pp. 362-368.

[32] T. Maung, “Real-Time Hand Tracking and Gesture Recognition System Using Neural Networks,” PWASET, Volume 38, 2009, pp. 470-474.

[33] Feng-Sheng Chen, Chih-Ming Fu, Chung-Lin Huang, “Hand gesture recognition using a real-time tracking method and hidden Markov models,” Image and Vision Computing, vol. 21, 2003, pp. 745-758.

[34] Yu Bo, Chen Yong Qiang, Huang Ying Shu, Xia Chenjei, “Static hand gesture recognition algorithm based on finger angle characteristics,” Proceedings of the 33rd Chinese Control Conference, 2014, pp. 8239-8242.

[35] Palvi Singh, Munish Rattan, “Hand Gesture Recognition Using Statistical Analysis of Curvelet Coefficients,” International Conference on Machine Intelligence Research and Advancement, IEEE, 2013, pp. 435-439.

[36] Liu Yun, Zhang Lifeng, Zhang Shujun, “A Hand Gesture Recognition Method Based on Multi-Feature Fusion and Template Matching,” Procedia Engineering, vol. 29, 2012, pp. 1678-1684.

[37] U. Rokade, D. Doye, M. Kokare, “Hand Gesture Recognition by thinning method,” International Conference on Digital Image Processing, IEEE Computer Society, 2009, pp. 284-287.

[38] Liling Ma, Jing Zhang, Junzheng Wang, “Modified CRF Algorithm for Dynamic Hand Gesture Recognition,” Proceedings of the 33rd Chinese Control Conference, IEEE, 2014, pp. 4763-4767.

[39] C. Wang and S. C. Chan, “A new hand gesture recognition algorithm based on joint color-depth Super-pixel Earth Mover’s Distance,” International Workshop on Cognitive Information Processing, IEEE, 2014, pp. 1-6.

[40] Moeslund TB, Granum E, “A survey of computer vision-based human motion capture,” Comput Vis Image Underst, vol. 81(3), pp. 231–268.

[41] Derpanis KG, “Mean shift clustering,” Lecture notes, vol. 32, 2015, pp. 1-4.

[42] Mitra S, Acharya T, “Gesture recognition: a survey,” IEEE Trans Syst Man Cybern Part C Appl Rev, vol. 37(3), 2007, pp. 311–324.

[43] Chaudhary A, Raheja J, Das K, Raheja S, “Intelligent approaches to interact with machines using hand gesture recognition in natural way: a survey,” Int J Comput Sci Eng Surv, vol. 2(1), 2011, pp. 122–133.

[44] Corera S, Krishnarajah N, “Capturing hand gesture movement: a survey on tools, techniques and logical considerations,” Proceedings of Chi Sparks, 2011, pp. 111.

[45] Hu M, Shen F, Zhao J, “Hidden Markov models based dynamic hand gesture recognition with incremental learning method,” international joint conference on neural networks (IJCNN), 2014, pp. 3108–3115.

[46] Huang D-Y, Hu W-C, Chang S-H, “Vision-based hand gesture recognition using PCA+Gabor filters and SVM,” fifth international conference on intelligent information hiding and multimedia signal processing, 2009, pp. 1–4.


[47] Huang D-Y, Hu W-C, Chang S-H, “Gabor filter-based hand-pose angle estimation for hand gesture recognition under varying illumination,” Expert Syst Appl, vol. 38(5), 2011, pp. 6031–6042.

[48] Huang D, Tang W, Ding Y, Wan T, Wu X, Chen Y, “Motion capture of hand movements using stereo vision for minimally invasive vascular interventions,” sixth international conference on image and graphics, 2011, pp. 737–742.

[49] Ibarguren A, Maurtua I, Sierra B, “Layered architecture for real time sign recognition: Hand gesture and movement,” Eng Appl Artif Intell, vol. 23(7), 2010, pp. 1216–122.

[50] Ionescu B, Coquin D, Lambert P, Buzuloiu V, “Dynamic hand gesture recognition using the skeleton of the hand,” EURASIP J Adv Signal Process, vol. 13, 2005, pp. 2101–2109.

[51] Ionescu D, Ionescu B, Gadea C, Islam S, “An intelligent gesture interface for controlling TV sets and set-top boxes,” 6th IEEE international symposium on applied computational intelligence and informatics (SACI), 2011, pp. 159–164.

[52] Ionescu D, Ionescu B, Gadea C, Islam S, “A multimodal interaction method that combines gestures and physical game controllers,” proceeding, international conference computer communication networks, ICCCN, 2011, pp. 1–6.

[53] Jain AK, “Data clustering: 50 years beyond K-means,” Pattern Recogn Lett, vol. 31(8), 2010, pp. 651–666.

[54] Ju SX, Black MJ, Minneman S, Kimber D, “Analysis of gesture and action in technical talks for video indexing,” Proceedings of IEEE computer society conference on computer vision and pattern recognition, 1997, pp. 595–601.

[55] Just A, “Two-handed gestures for human-computer interaction,” Research report IDIAP, 2006, pp. 31-43.

[56] Kaâniche M, “Gesture recognition from video sequences,” Diss. Université Nice Sophia Antipolis, 2009, pp. 57-69.

[57] Kanungo T, Mount DM, Netanyahu NS, Piatko CD, Silverman R, Wu AY, “An efficient k-means clustering algorithm: analysis and implementation,” IEEE Trans Pattern Anal Mach Intell, vol. 24(7), 2002, pp. 881–892.

[58] Karam M, “A framework for research and design of gesture-based human-computer interactions,” Doctoral dissertation, University of Southampton, 2006, pp. 130-138.

[59] Kausar S, Javed MY, “A survey on sign language recognition,” Front Inf Technol, 2011, pp. 95–98.

[60] Kılıboz NÇ, Güdükbay U, “A hand gesture recognition technique for human–computer interaction,” J Vis Commun Image Represent, vol. 28, 2015, pp. 97–104.

Sunil G. Deshmukh acquired the B.E. degree in Electronics Engineering from Dr. Babasaheb Ambedkar Marathwada University, Aurangabad in 1991 and the M.E. degree in Electronics Engineering from Dr. Babasaheb Ambedkar Marathwada University (BAMU), Aurangabad in 2007. Currently, he is pursuing a Ph.D. at Dr. Babasaheb Ambedkar Marathwada University, Aurangabad, India. He is a professional member of ISTE. His areas of interest include image processing and human-computer interaction.

Shekhar M. Jagade secured the B.E. degree in Electronics and Telecommunication Engineering from Government Engineering College, Aurangabad in 1990, the M.E. degree in Electronics and Communication Engineering from Swami Ramanand Teerth Marathwada University, Nanded in 1999, and the Ph.D. from Swami Ramanand Teerth Marathwada University, Nanded in 2008. He has published nine papers in international journals and three papers in international conferences. He is working as a vice-principal at the N B Navale Sinhgad College of Engineering, Kegaon-Solapur, Maharashtra, India. He is a professional member of ISTE, the Computer Society of India, and the Institution of Engineers India. His fields of interest include image processing, micro-electronics, and human-computer interaction.
