International Research Journal of Engineering and Technology (IRJET) e-ISSN:2395-0056

Volume: 09 Issue: 10 | Oct 2022 www.irjet.net p-ISSN:2395-0072


Abstract - Face recognition is a computer application capable of detecting, tracking, identifying, or verifying human faces in an image or video captured with a digital camera. The human face is a sophisticated multidimensional structure that conveys a great deal of information, including expression, emotion, and facial features. This research paper presents advanced image processing techniques. Face mask detection algorithms have grown rapidly in recent times as part of the effort to tackle the pandemic. We have designed a system that uses numerous algorithms and methods, including several mask detection models, to identify unmasked individuals, who can then be penalized for violating the rules. The proposed system adopts the OpenCV, Keras, and TensorFlow frameworks and deep learning libraries to detect face masks in real time. To detect masks, the model is first trained using the Multi-Task Cascaded Convolutional Neural Network (MTCNN) algorithm. The face is first detected using the OpenCV library, and the facial imagery is stored and passed as input to the mask detection classifier for classification. For face detection, the Viola-Jones algorithm, also known as the Haar Cascade algorithm, is used. Our experimental results show that the method is accurate, reliable, and robust, and that the face recognition system can be practically deployed in a real-life environment as a COVID-19 monitoring system.

Key Words: Mask Detection, Face Recognition, Machine Learning, TensorFlow, OpenCV, Python

In today's scenario, the whole world has been under lockdown for more than a year and a half because of the worldwide spread of the deadly COVID-19 virus. Institutes and organizations, both educational and corporate, have worked remotely for this whole period from the safety of their homes. The only weapons we had against the virus were hand sanitizers and masks, and with these we have survived through these times. Now that vaccines have been produced, the number of infections is gradually decreasing and people have started to return to their organizations physically. Still, even after vaccination, it is mandatory to follow the COVID norms of social distancing and wearing masks. Organizations have the responsibility to check whether employees, or students in the case of educational organizations, are following these rules when entering the premises. Manually checking whether each person is wearing a mask is a hectic task that requires a lot of manpower. This is where our system comes in: it detects face masks and imposes a fine on any person who violates the rule of wearing a mask properly. Such a system can be installed at the entrance of the organization.

Computer vision is a rapidly developing field that is widely used for object detection and in various areas of artificial intelligence research. Both supervised and unsupervised machine learning approaches have been used in the past for object detection in images. An enhanced supervised machine learning technique for object detection has been deployed in this project. To detect masks, a model is first trained using CNN algorithms on the provided face datasets. Faces are first detected using OpenCV (Open Source Computer Vision Library), and those image frames are stored and passed to the mask detection classifier for classification.

For face detection, the Viola-Jones algorithm, also known as the Haar Cascade algorithm, is used. A mask classifier model differentiates faces with masks from faces without masks. If a person without a mask is detected, their face is recognized using face recognition models and compared with the existing database, and a corresponding fine is imposed on that person.
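The detect, classify, recognize, and fine flow described above can be sketched as plain control logic. This is a minimal sketch under stated assumptions, not the system's actual code: `classify_mask` and `recognize_face` are hypothetical stand-ins for the trained mask classifier and the face recognition model, faces are represented as dictionaries with hypothetical `mask_prob` and `identity` keys instead of image crops, and the 0.5 threshold and fine amount of 100 are illustrative values that the paper does not specify.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Set, Tuple

# Illustrative values only: the paper does not specify a threshold or fine amount.
MASK_THRESHOLD = 0.5
FINE_AMOUNT = 100


@dataclass
class DefaulterTable:
    """In-memory stand-in for the defaulters' table kept in the database."""
    fines: Dict[str, int] = field(default_factory=dict)

    def charge(self, person_id: str, amount: int) -> None:
        self.fines[person_id] = self.fines.get(person_id, 0) + amount


def classify_mask(face: dict) -> float:
    """Hypothetical stand-in for the mask classifier: returns P(with mask)."""
    return face["mask_prob"]


def recognize_face(face: dict, registered: Set[str]) -> Optional[str]:
    """Hypothetical stand-in for face recognition: returns a registered ID or None."""
    candidate = face.get("identity")
    return candidate if candidate in registered else None


def process_frame(faces: List[dict], registered: Set[str],
                  table: DefaulterTable) -> List[Tuple[Optional[str], str]]:
    """Classify each detected face; recognize and fine the unmasked ones."""
    results: List[Tuple[Optional[str], str]] = []
    for face in faces:
        if classify_mask(face) >= MASK_THRESHOLD:
            results.append((None, "with_mask"))  # masked: nothing is recorded
            continue
        person_id = recognize_face(face, registered)
        if person_id is None:
            results.append((None, "unmasked_unknown"))  # no match in the database
        else:
            table.charge(person_id, FINE_AMOUNT)
            results.append((person_id, "fined"))
    return results
```

Only unmasked faces reach the recognition step, which mirrors the order described in the text; in a real deployment `DefaulterTable.charge` would write to the database rather than to a dictionary.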

In the proposed system, we build a face recognition and face mask detection ML model integrated with a UI. If a person is detected without a mask, their name/ID is identified using face recognition, and the concerned authority charges them a corresponding fine. Through a suitable UI, the information on registered users and their corresponding fines can be viewed. This data will help control people's misbehavior regarding wearing masks. The system is divided into the following parts:


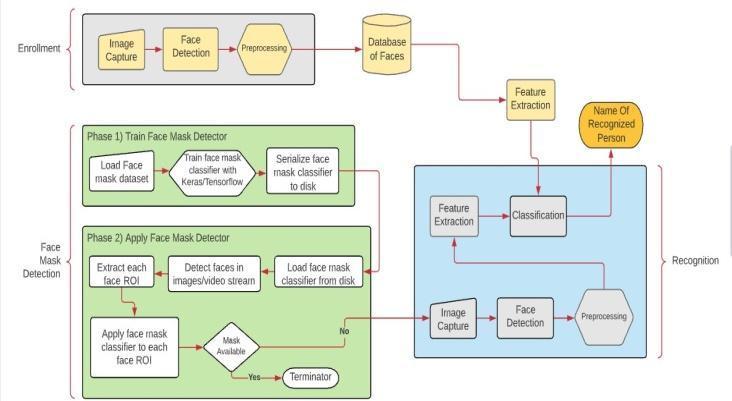

Figure 1. Proposed Architecture

The system consists of the following steps: Image Capture, Face Mask Detection, Face Recognition, Image Comparison, and Fine Implementation, all of which can be achieved with ML techniques. When people in an organization register on the system, their face image data is collected and stored in the database. At the entrance of the organization, people entering are monitored using a suitable camera. If the face mask detection model detects a person without a mask, the face recognition model recognizes that person by matching their face against the images already available in the database, and a fine is charged to them based on this identification. The proposed model involves a two-stage process for detecting whether a person is wearing a face mask using a webcam:

i) Identifying faces in the input frame.

ii) Detecting a mask in the detected face region and classifying it accordingly.

The dataset has been divided into training and testing sets. To train efficiently and effectively, we used 80% of the images as the training set and the remaining 20% as the testing set to measure prediction accuracy. For simplicity, the images in our training data are classified into two categories, "with mask" and "without mask". We used a lightweight image classifier, MobileNetV2, which gives high accuracy and is well suited to mobile devices. In the pre-processing step, we resize the images to 224 × 224 pixels to maintain consistency. The trained model is deployed using OpenCV and applied to real-time video frames captured with a webcam to detect whether the people in the frame are wearing a mask. Face recognition involves the following processes:

i) Face detection,

ii) Facial feature extraction from the captured images,

iii) Classification and matching of images against a database of face images.
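The 80/20 split described above can be made reproducible, and balanced across the "with mask" and "without mask" classes, with a stratified shuffle. This is a sketch under assumptions: samples are hypothetical `(image path, label)` pairs, the seed is arbitrary, and splitting each class separately is a choice made here; the paper only states the 80/20 ratio. Resizing to 224 × 224 would happen when the images are loaded and is not shown.

```python
import random
from collections import defaultdict
from typing import Dict, List, Sequence, Tuple

Sample = Tuple[str, str]  # (image path, label), e.g. ("img_001.jpg", "with_mask")


def stratified_split(samples: Sequence[Sample], train_fraction: float = 0.8,
                     seed: int = 42) -> Tuple[List[Sample], List[Sample]]:
    """Split samples into train/test sets, keeping each label's proportion."""
    if not 0.0 < train_fraction < 1.0:
        raise ValueError("train_fraction must be between 0 and 1")
    rng = random.Random(seed)  # fixed seed so the split is reproducible
    by_label: Dict[str, List[Sample]] = defaultdict(list)
    for sample in samples:
        by_label[sample[1]].append(sample)
    train: List[Sample] = []
    test: List[Sample] = []
    for label in sorted(by_label):  # sorted for a deterministic order
        group = by_label[label]
        rng.shuffle(group)  # shuffles the local copy, not the caller's list
        cut = round(len(group) * train_fraction)
        train.extend(group[:cut])
        test.extend(group[cut:])
    return train, test
```

Splitting per class keeps the test set from being dominated by whichever class happens to be larger after a single global shuffle.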

Transfer learning using pre-trained convolutional neural networks as feature extractors has been applied to face recognition. Two well-known public face databases will be used for face recognition in this system. CNNs are a supervised machine learning technique that can extract deep knowledge from a dataset through rigorous example-based training; this approach is loosely inspired by how the human brain learns. CNNs have been successfully applied to feature extraction, face recognition, classification, and segmentation.
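When a pre-trained CNN is used only as a feature extractor, recognition reduces to comparing the extracted feature vectors (embeddings) with those of registered people. The sketch below assumes the embeddings have already been computed by some pre-trained network; it compares them with cosine similarity and a nearest-neighbour rule, and the acceptance threshold of 0.8 is a hypothetical value, not one taken from this work.

```python
import math
from typing import Dict, Optional, Sequence, Tuple


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two feature vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def identify(query: Sequence[float], gallery: Dict[str, Sequence[float]],
             threshold: float = 0.8) -> Tuple[Optional[str], float]:
    """Return (best matching person ID, similarity), or (None, similarity) below threshold."""
    best_id: Optional[str] = None
    best_sim = -1.0
    for person_id, embedding in gallery.items():
        sim = cosine_similarity(query, embedding)
        if sim > best_sim:
            best_id, best_sim = person_id, sim
    if best_sim < threshold:
        return None, best_sim  # closest match is still too dissimilar
    return best_id, best_sim
```

Cosine similarity ignores the overall magnitude of the feature vectors, which is a common choice when comparing CNN embeddings; Euclidean distance would be an equally valid alternative.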

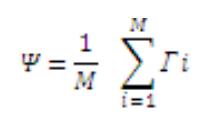

A mathematical model is a description of a system using mathematical concepts and language. A model can help explain a system, study the effects of its different components, and predict its behavior. The mathematical model of our system is as follows:

$S = \{\Sigma, F, \delta, C\}$

where

$S$ = face recognition,

$\Sigma$ = set of input symbols = {video file, image, character information},

$F$ = set of output symbols = {match found, then notify the user; not found},

$\delta$ = processing steps:

1. Start.
2. Read the training set of $N \times N$ images.
3. Reshape each image into an $N^2 \times 1$ vector.
4. Select a training set of dimensions $N^2 \times M$, where $M$ is the number of sample images.
5. Find the average face, subtract it from the faces in the training set, and create the matrix $A$:

$$\Psi = \frac{1}{M}\sum_{i=1}^{M}\Gamma_i, \qquad \Phi_i = \Gamma_i - \Psi, \quad i = 1, 2, \ldots, M, \qquad A = [\Phi_1, \Phi_2, \ldots, \Phi_M]$$

where $\Psi$ is the average image, $M$ is the number of images, and $\Gamma_i$ is the $i$-th image vector.

6. Calculate the covariance matrix $AA^{T}$.
7. Calculate the eigenvectors of the covariance matrix.
8. Select the eigenfaces: the number of eigenvectors kept is the number of training images minus the number of classes (the total number of people), i.e. $M - c$.
9. Create the reduced eigenface space: the selected set of eigenvectors is multiplied by the matrix to create the reduced eigenface space.
10. Calculate the eigenface representation of the image in question.
11. Calculate the Euclidean distances between the image and the eigenfaces.
12. Find the minimum Euclidean distance.
13. Output: the image with the minimum Euclidean distance, or "image unrecognizable".

$C$ = set of constraints = {the system will not process audio data; eigenfaces will generate grayscale images; the algorithm will run only on key frames}.
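The eigenface steps listed in $\delta$ can be sketched with NumPy as follows. This is a sketch under stated assumptions, not the authors' implementation: the function names and the `1e-10` tolerance for discarding zero eigenvalues are hypothetical, and instead of decomposing the large $N^2 \times N^2$ matrix $AA^{T}$ of step 6 directly, it uses the standard shortcut of decomposing the $M \times M$ matrix $A^{T}A$ (if $A^{T}Av = \lambda v$, then $AA^{T}(Av) = \lambda(Av)$, so $Av$ is an eigenvector of $AA^{T}$). Step 11 is interpreted here as the distance between the query's coordinates and each training face's coordinates in eigenface space.

```python
from typing import Dict, Optional, Sequence, Tuple

import numpy as np


def train_eigenfaces(images: Sequence[np.ndarray], labels: Sequence[str],
                     num_components: int) -> Dict[str, object]:
    """Build an eigenface model from equally sized grayscale images (steps 2-9)."""
    # Steps 2-4: flatten each N x N image into an N^2 vector Gamma_i; stack as columns.
    gamma = np.stack([np.asarray(img, dtype=float).ravel() for img in images], axis=1)
    # Step 5: average face Psi and A = [Phi_1, ..., Phi_M] with Phi_i = Gamma_i - Psi.
    psi = gamma.mean(axis=1, keepdims=True)
    a = gamma - psi
    # Steps 6-7: eigenvectors v of the small M x M matrix A^T A; A v is then
    # an eigenvector of A A^T with the same eigenvalue.
    eigvals, eigvecs = np.linalg.eigh(a.T @ a)
    order = [i for i in np.argsort(eigvals)[::-1] if eigvals[i] > 1e-10 * eigvals.max()]
    order = order[:num_components]  # step 8: e.g. M - c components
    u = a @ eigvecs[:, order]
    u /= np.linalg.norm(u, axis=0)  # unit-length eigenfaces, one per column
    # Step 9: coordinates of every training face in the reduced eigenface space (k x M).
    weights = u.T @ a
    return {"psi": psi, "u": u, "weights": weights, "labels": list(labels)}


def recognize(model: Dict[str, object], image: np.ndarray,
              threshold: Optional[float] = None) -> Tuple[Optional[str], float]:
    """Steps 10-13: project the query and return the nearest training label and distance."""
    psi, u, weights = model["psi"], model["u"], model["weights"]
    omega = u.T @ (np.asarray(image, dtype=float).reshape(-1, 1) - psi)  # k x 1
    distances = np.linalg.norm(weights - omega, axis=0)  # Euclidean distance to each face
    best = int(np.argmin(distances))
    if threshold is not None and distances[best] > threshold:
        return None, float(distances[best])  # "image unrecognizable"
    return model["labels"][best], float(distances[best])
```

Decomposing the $M \times M$ matrix keeps the cost tied to the number of training images rather than the number of pixels, which is why this shortcut is usually preferred when $M \ll N^2$.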

In this research, an individual is identified for biometric monitoring using the Local Binary Patterns Histogram (LBPH) algorithm. The monitoring system identifies a person from front-facing and side-facing images and also works well with low-quality pictures. The system follows a sequence of steps, from collecting the imagery to loading the video camera. Once the video camera is running, face detection is initiated, the facial imagery is processed, and distinctive facial features are extracted and identified. The model uses these extracted facial features to make its recognition. The researchers implemented this work using multiple datasets of facial images. The Local Binary Patterns Histogram is considered one of the best-suited algorithms for face recognition.
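The core of LBPH can be illustrated in a few lines: each pixel is encoded by comparing its 8 neighbours with it, the codes are histogrammed per grid cell, and faces are compared by histogram distance. This is a pure-Python sketch under assumptions (the neighbour ordering, the "neighbour >= centre" convention, the 2 × 2 grid sized for tiny test images, and the chi-square distance are choices made here); in practice OpenCV's contrib module provides a ready-made recognizer via `cv2.face.LBPHFaceRecognizer_create`.

```python
from typing import Dict, List, Sequence, Tuple

Image = Sequence[Sequence[int]]

# 8 neighbours at radius 1, clockwise from the top-left pixel.
OFFSETS = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]


def lbp_code(img: Image, r: int, c: int) -> int:
    """8-bit LBP code: each neighbour >= centre contributes a 1 bit."""
    center = img[r][c]
    code = 0
    for bit, (dr, dc) in enumerate(OFFSETS):
        if img[r + dr][c + dc] >= center:
            code |= 1 << (7 - bit)
    return code


def lbp_histogram(img: Image, grid: Tuple[int, int] = (2, 2)) -> List[int]:
    """Concatenate the 256-bin LBP histograms of a grid of cells."""
    rows, cols = len(img), len(img[0])
    gy, gx = grid
    hist = [0] * (256 * gy * gx)
    for r in range(1, rows - 1):  # border pixels lack a full neighbourhood
        for c in range(1, cols - 1):
            cell = ((r - 1) * gy // (rows - 2)) * gx + (c - 1) * gx // (cols - 2)
            hist[cell * 256 + lbp_code(img, r, c)] += 1
    return hist


def chi_square(h1: Sequence[int], h2: Sequence[int]) -> float:
    """Chi-square distance between two histograms (smaller means more similar)."""
    return sum((a - b) ** 2 / (a + b) for a, b in zip(h1, h2) if a + b > 0)


def predict(img: Image, gallery: Dict[str, List[int]]) -> str:
    """Return the gallery label whose histogram is closest to the query image's."""
    query = lbp_histogram(img)  # gallery histograms must use the same grid
    return min(gallery, key=lambda label: chi_square(query, gallery[label]))
```

Because each code depends only on whether neighbours are brighter or darker than the centre, a uniform change in brightness leaves the codes unchanged, which is one reason LBPH copes reasonably with lighting differences.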

A CNN is primarily designed for multi-dimensional data processing, and its main advantage is automatic facial feature extraction, with the extracted features then forwarded to the classifier network. In this paper, we have tried to use the CNN algorithm for better human face recognition. The convolutional neural network was implemented to extract facial expressions and other features, followed by a fully connected layer and a softmax classifier. A database of 50 images of each person was tested for accuracy, and we found that this is one of the finest face recognition algorithms, although it has some accuracy issues in dim lighting.
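The softmax classifier mentioned above turns the raw class scores from the last fully connected layer into probabilities over the known people. The sketch below shows that final step only; the scores and names in the usage are hypothetical, and subtracting the maximum score before exponentiating is a standard numerical-stability trick that does not change the result.

```python
import math
from typing import List, Sequence, Tuple


def softmax(logits: Sequence[float]) -> List[float]:
    """Convert raw class scores into probabilities that sum to 1."""
    m = max(logits)  # subtracting the max avoids overflow in exp()
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def predict_identity(logits: Sequence[float], names: Sequence[str]) -> Tuple[str, float]:
    """Return the most probable person and its probability."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    return names[best], probs[best]
```

For example, `predict_identity([0.2, 3.0, 1.0], ["alice", "bob", "carol"])` picks "bob", since the largest score always gets the largest probability.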


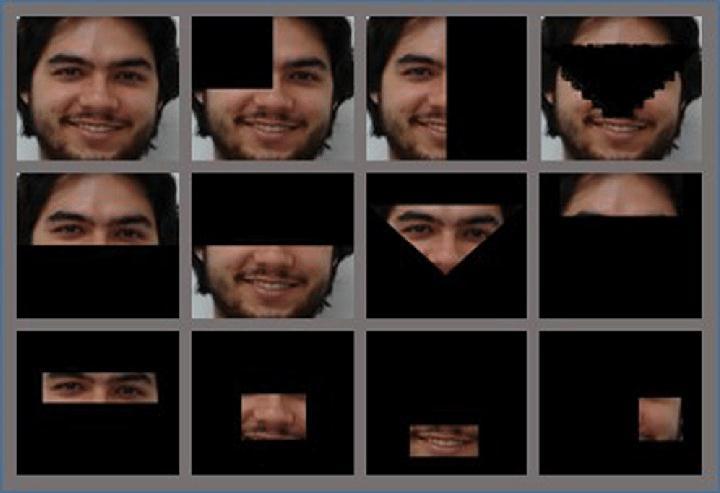

In this research, the face was divided into various parts using the FEI dataset. Twelve testing sets were generated, where each set corresponds to a part of the face. These parts were classified as forehead, eyes, mouth, nose, cheeks, etc. Images of the eyes and nose, the lower half of the face, the upper half of the face, the right and left halves of the face, three quarters of the face, and full facial images were generated.
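The paper does not state how the facial parts were cut out, so the following is only a sketch under assumptions: the input is an already aligned and cropped face stored as a 2-D list, the regions are fixed fractions of the image, and "three quarters" is interpreted here as the left 75% of the image width. Regions such as the eyes and nose would need facial landmark detection, which is not shown.

```python
from typing import Dict, List, Sequence

Image = Sequence[Sequence[int]]


def crop(img: Image, top: int, bottom: int, left: int, right: int) -> List[list]:
    """Return rows top..bottom-1 and columns left..right-1 of a 2-D image."""
    return [list(row[left:right]) for row in img[top:bottom]]


def face_parts(img: Image) -> Dict[str, List[list]]:
    """Split an aligned face image into coarse regions by simple geometry.

    'left'/'right' refer to the image, i.e. the subject's right/left side.
    'three_quarters' is interpreted here as the left 75% of the image width.
    """
    h, w = len(img), len(img[0])
    return {
        "top_half": crop(img, 0, h // 2, 0, w),
        "bottom_half": crop(img, h // 2, h, 0, w),
        "left_half": crop(img, 0, h, 0, w // 2),
        "right_half": crop(img, 0, h, w // 2, w),
        "three_quarters": crop(img, 0, h, 0, (3 * w) // 4),
        "full": crop(img, 0, h, 0, w),
    }
```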

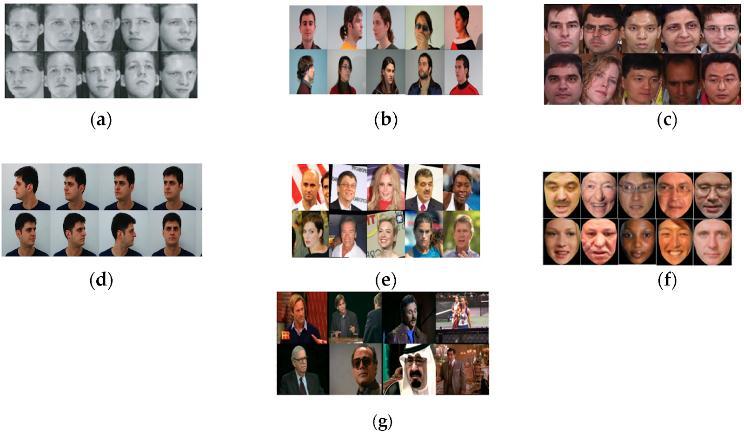

Sample images from all datasets: (a) ORL dataset; (b) GTAV face dataset; (c) Georgia Tech face dataset; (d) FEI dataset; (e) LFW dataset; (f) F_LFW dataset; (g) YTF dataset.

LBPH is used for face recognition and is known for its ability to recognize a person's face from the front as well as from the side. In addition to LBPH, a Haar cascade classifier was used to extract facial features such as the eyes, nose, smile, frontal face, and side view of the face. The entire process consists of three steps: collection of the image from a camera input stream, followed by face detection, and finally face recognition. This paper used the LR500 dataset, which was designed to test LBPH. For detection, the Haar classifier uses the AdaBoost algorithm.
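Haar cascades are fast because every Haar-like feature is a difference of rectangle sums, and an integral image lets any rectangle sum be computed with four lookups. The sketch below shows only that building block with a single two-rectangle feature; the AdaBoost feature selection and the cascade itself are not shown. In OpenCV, pre-trained cascades are instead loaded with `cv2.CascadeClassifier` and applied with its `detectMultiScale` method. The function names here are hypothetical.

```python
from typing import List, Sequence

Image = Sequence[Sequence[int]]


def integral_image(img: Image) -> List[List[int]]:
    """ii[r][c] = sum of img over rows < r and columns < c (zero-padded first row/column)."""
    h, w = len(img), len(img[0])
    ii = [[0] * (w + 1) for _ in range(h + 1)]
    for r in range(h):
        row_sum = 0
        for c in range(w):
            row_sum += img[r][c]
            ii[r + 1][c + 1] = ii[r][c + 1] + row_sum
    return ii


def rect_sum(ii: List[List[int]], top: int, left: int, height: int, width: int) -> int:
    """Sum of any rectangle with four lookups, regardless of its size."""
    bottom, right = top + height, left + width
    return ii[bottom][right] - ii[top][right] - ii[bottom][left] + ii[top][left]


def two_rect_feature(ii: List[List[int]], top: int, left: int, height: int, width: int) -> int:
    """Two-rectangle Haar-like feature: left half minus right half (vertical edge)."""
    half = width // 2
    return (rect_sum(ii, top, left, height, half)
            - rect_sum(ii, top, left + half, height, half))
```

A large response from `two_rect_feature` signals a bright-to-dark vertical edge; AdaBoost's role in Viola-Jones is to pick which of the many possible such features, and with what thresholds, best separate faces from non-faces.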

After feature extraction, the training process is completed and the training data is saved in YML format. To assess accuracy and reliability, the LBPH algorithm was tested with the dataset; the results were valid overall, but at some points they were inaccurate.

After generating the images and extracting features from them, the data was fed to the VGGF model and then to the CS-Wo and linear SVM-Wo classifiers to check the recognition rate for each facial part separately. The results are summarized as follows. The recognition rate is highest for three-quarter and full facial images, at 100%. For right-half and top-half facial images, the rate falls slightly with the SVM-Wo classifier, while the CS-Wo classifier still maintains 100%. For the bottom half of the face, the recognition rate drops to 50% with the SVM-Wo classifier and to 60% with the CS-Wo classifier.



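Parts of the face used for testing the recognition rates on the FEI dataset.

| Classifier | Part of the face | Rate of recognition (accuracy) |
|---|---|---|
| CS-Wo | Three quarters and full facial imagery | 98% |
| SVM-Wo | Three quarters and full facial imagery | 97.4% |
| CS-Wo | Right half and top half | 98% |
| SVM-Wo | Right half and top half | <98% |
| CS-Wo | Bottom half | 60% |
| SVM-Wo | Bottom half | 50% |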

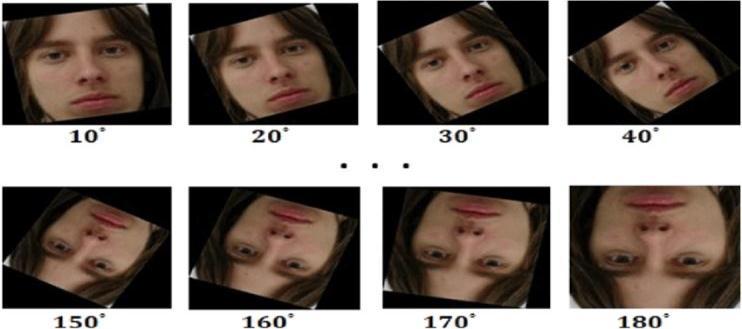

In this experiment, all the images were rotated in increments of eighteen degrees, from ten degrees to one hundred and eighty degrees. All the rotated images were given as input to the VGGF model to extract facial features and then passed to the two classifiers mentioned above (CS-Wo and SVM-Wo). The experiment was carried out both with and without rotated images.
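The paper does not say how the rotated images were produced. As a sketch under that assumption, the following rotates a grayscale image (a 2-D list) about its centre using inverse mapping with nearest-neighbour sampling and fills uncovered pixels with zero; libraries such as OpenCV offer the same operation with interpolation through `cv2.getRotationMatrix2D` and `cv2.warpAffine`. The function name is hypothetical.

```python
import math
from typing import List, Sequence

Image = Sequence[Sequence[int]]


def rotate_image(img: Image, angle_deg: float, fill: int = 0) -> List[List[int]]:
    """Rotate a grayscale image about its centre, keeping the original size.

    Uses inverse mapping with nearest-neighbour sampling; output pixels whose
    source falls outside the input are set to `fill`. Because the image y axis
    points down, positive angles appear as clockwise rotation on screen.
    """
    h, w = len(img), len(img[0])
    cy, cx = (h - 1) / 2.0, (w - 1) / 2.0
    theta = math.radians(angle_deg)
    cos_t, sin_t = math.cos(theta), math.sin(theta)
    out = [[fill] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            dx, dy = x - cx, y - cy
            # rotate the output coordinate back by -theta to find its source pixel
            src_x = cos_t * dx + sin_t * dy + cx
            src_y = -sin_t * dx + cos_t * dy + cy
            sx, sy = round(src_x), round(src_y)
            if 0 <= sx < w and 0 <= sy < h:
                out[y][x] = img[sy][sx]
    return out
```

Mapping each output pixel back to its source, rather than pushing input pixels forward, guarantees that every output pixel is assigned exactly once and leaves no holes.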

An accuracy of 100% was obtained with faces rotated by 10 and 20 degrees. At rotations of 30 and 40 degrees, the recognition rate fell to 98% and 80%, respectively, for both classifiers. The worst recognition rates were obtained at rotations of 50 degrees and above, falling to almost 0% for both classifiers. In this experiment, the CS-W classifier recorded the highest recognition accuracy in most cases. As the rotation increased, the recognition rate of the SVM-W classifier kept decreasing, while the CS-W classifier maintained its recognition rate even at higher rotations. This indicates that CS-W is a better image classifier than SVM-W.

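Illustration of face rotation (10° to 180°) on the FEI dataset.

| Classifier | Rotation level | Rate of recognition |
|---|---|---|
| CS-Wo | 10 to 20 degrees | 98% |
| SVM-Wo | 10 to 20 degrees | 98% |
| CS-Wo | 30 to 40 degrees | 98% to 80% |
| SVM-Wo | 30 to 40 degrees | 98% to 80% |
| CS-Wo | >50 degrees | ~0% |
| SVM-Wo | >50 degrees | ~0% |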

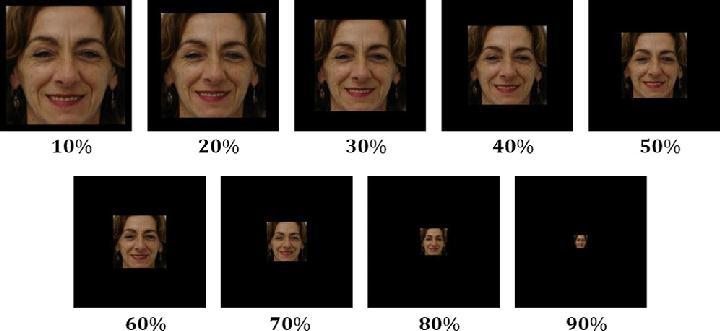

In this experiment, the facial images were zoomed out by 10% to 90% to find the effect of zooming on the face recognition rate. As in the previous experiments, the zoomed-out facial images were passed as input to the VGGF model to extract distinctive facial features, which were then passed to the classifiers mentioned above (CS and SVM).
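The paper does not define "zooming out by p%" precisely. The sketch below assumes it means shrinking the face content to (100 − p)% of its original size and centring it on a background canvas of the original dimensions, so the classifier input size stays fixed; nearest-neighbour sampling and zero padding are choices made here, and the function name is hypothetical.

```python
from typing import List, Sequence

Image = Sequence[Sequence[int]]


def zoom_out(img: Image, percent: float, fill: int = 0) -> List[List[int]]:
    """Shrink the image content by `percent` and centre it on a canvas of the original size."""
    if not 0 <= percent < 100:
        raise ValueError("percent must be in [0, 100)")
    h, w = len(img), len(img[0])
    scale = 1.0 - percent / 100.0
    new_h, new_w = max(1, round(h * scale)), max(1, round(w * scale))
    top, left = (h - new_h) // 2, (w - new_w) // 2
    out = [[fill] * w for _ in range(h)]  # background padding
    for y in range(new_h):
        for x in range(new_w):
            # nearest-neighbour sample from the original image
            sy = min(h - 1, y * h // new_h)
            sx = min(w - 1, x * w // new_w)
            out[top + y][left + x] = img[sy][sx]
    return out
```

Under this interpretation, a 90% zoom-out leaves the face occupying only a tenth of each dimension, which is consistent with recognition rates collapsing at high zoom levels.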

As a first step, the classifiers were trained without zoomed images and the recognition rate was evaluated. At 10% to 50% zoom, a recognition rate of 100% was obtained with the SVM-Wo classifier, whereas beyond 70% zoom the recognition rate dropped significantly, eventually reaching 0% as the zoom increased. In contrast, the CS-Wo classifier produced lower recognition rates than the SVM-Wo classifier between 10% and 50% zoom. When zoomed-out facial images were added to the training dataset, the SVM-W classifier showed an improvement in recognition rate at zoom levels of 40% and 50%, and a steady recognition rate was observed at 70%, 80%, and 90%. The CS-W classifier obtained a recognition rate of 100% between the 10% and 60% zoom levels.


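An example of zooming out (10% to 90%) of faces on the FEI dataset.

| Classifier | Zoom level | Rate of recognition |
|---|---|---|
| CS-Wo | 10% to 50% | <10% |
| SVM-Wo | 10% to 50% | 98% |
| CS-Wo | >70% | ~10% |
| SVM-Wo | >70% | <10% to ~0% |
| CS-Wo | >50% | ~0% |
| SVM-Wo | >50% | ~0% |
| CS-W | 10% to 60% | 98% |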

Here we use three general-purpose datasets, namely the ORL, FEI, and LFW datasets.

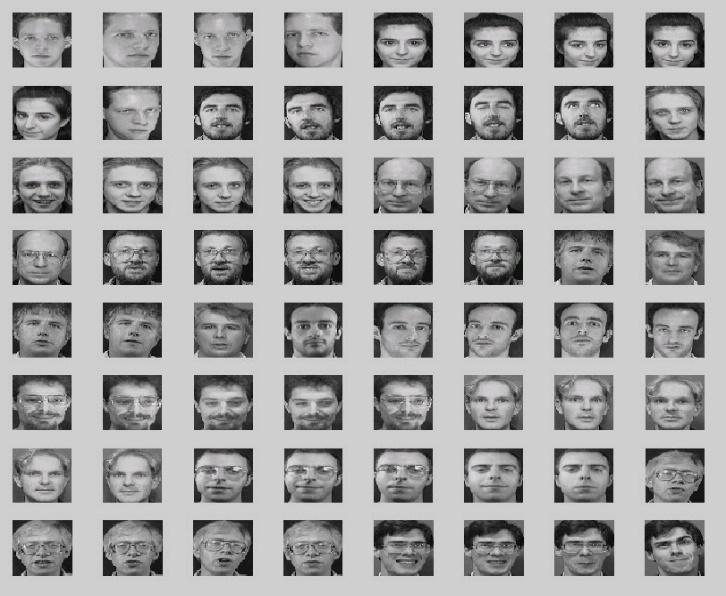

The ORL database of faces is a collection of 10 different images of each of 40 individuals, collected between 1992 and 1994 at the Olivetti Research Laboratory in Cambridge. The dataset contains images taken in bright to dark lighting conditions. For some subjects, the images were taken at different times, with varying facial expressions (open/closed eyes, smiling/not smiling) and facial details (glasses/no glasses). We converted these images into ORL.npz, NumPy's .npz format, which stores them as arrays. Some plugins from image.io were also used with the .npz format.
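Storing a face dataset in NumPy's .npz format, as described above for ORL, can be sketched with `np.savez_compressed` and `np.load`. The array keys `images` and `labels` and the helper names are hypothetical, and how the image.io plugins were used is not described in the paper, so it is not shown. The round-trip helper writes to an in-memory buffer so a conversion can be checked without touching the disk; passing a path such as "ORL.npz" instead of a buffer works the same way.

```python
import io
from typing import Sequence, Tuple

import numpy as np


def save_faces_npz(file, images: np.ndarray, labels: Sequence[str]) -> None:
    """Store a stack of grayscale face images and their labels in one .npz archive."""
    np.savez_compressed(file, images=np.asarray(images, dtype=np.uint8),
                        labels=np.asarray(labels))


def load_faces_npz(file) -> Tuple[np.ndarray, np.ndarray]:
    """Load the arrays written by save_faces_npz."""
    with np.load(file) as data:  # NpzFile supports the context-manager protocol
        return data["images"], data["labels"]


def npz_round_trip(images: np.ndarray, labels: Sequence[str]) -> Tuple[np.ndarray, np.ndarray]:
    """Write to an in-memory buffer and read back, e.g. to sanity-check a conversion."""
    buffer = io.BytesIO()
    save_faces_npz(buffer, images, labels)
    buffer.seek(0)
    return load_faces_npz(buffer)
```

Labels are stored as a plain Unicode string array, so loading does not require `allow_pickle=True`.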

The FEI face database is a Brazilian face database containing the faces of 200 students, with equal numbers of males and females. It was prepared by FEI University. There are 14 images of each person, bringing the total to 2800 images. All images are coloured, with a homogeneous white background. The subjects are between 19 and 40 years old, and the images vary in facial expression as well as posture. We used 50% of the dataset for training and the other 50% for testing. We also used our own database of 10 final-year students, with 100 images of each individual, for a total of 1000 images. These images were taken under different lighting exposures and with different facial expressions, such as smiling and serious.

Sample face data from the ORL dataset.

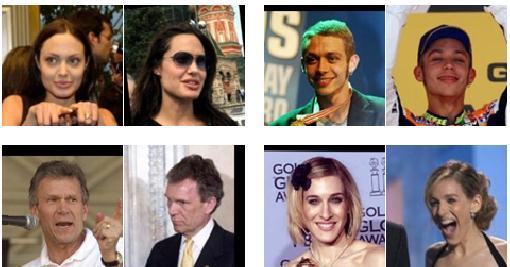

Labeled Faces in the Wild (LFW) is a large dataset of face pictures designed for testing and studying the capability of face recognition algorithms in uncontrolled scenarios. It contains a total of 13,233 images of 5,749 people collected from the internet; all of these images were detected and centered by the Viola-Jones face detector, and the faces have large variations in expression, pose, illumination, and resolution. Most of the subjects, around 4,070 people, have just a single image. The original database contained four different sets of LFW images as well as three different types of "aligned" images. The images also have significant background clutter, so we had to pre-process them to extract the exact face region from each original image.
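The pre-processing step of extracting the face region can be sketched as cropping the bounding box returned by a face detector, enlarged by a small margin and clamped to the image borders. This assumes boxes in the `(x, y, width, height)` form that OpenCV's `CascadeClassifier.detectMultiScale` returns; the 10% default margin and the function name are hypothetical, since the paper does not give its pre-processing parameters.

```python
from typing import List, Sequence, Tuple

Image = Sequence[Sequence[int]]
Box = Tuple[int, int, int, int]  # (x, y, width, height) of a detected face


def crop_face(img: Image, box: Box, margin: float = 0.1) -> List[list]:
    """Crop a detected face box, enlarged by `margin` on each side and clamped to the image."""
    x, y, w, h = box
    img_h, img_w = len(img), len(img[0])
    dx, dy = int(w * margin), int(h * margin)
    left, top = max(0, x - dx), max(0, y - dy)
    right, bottom = min(img_w, x + w + dx), min(img_h, y + h + dy)
    return [list(row[left:right]) for row in img[top:bottom]]
```

Clamping matters for faces near the frame edge, where the enlarged box would otherwise extend past the image.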

| Class | Precision | Recall | F1-score |
|---|---|---|---|
| Without mask | 1.00 | 0.99 | 0.99 |
| With mask | 0.99 | 1.00 | 0.99 |
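The per-class figures above follow the standard definitions: precision = TP / (TP + FP), recall = TP / (TP + FN), and F1 is their harmonic mean, with each class in turn treated as the positive class. The sketch below computes them from label lists; the labels used in any example are made up for illustration and are not the paper's data.

```python
from typing import Sequence, Tuple


def precision_recall_f1(y_true: Sequence[str], y_pred: Sequence[str],
                        positive: str) -> Tuple[float, float, float]:
    """Per-class precision, recall and F1, treating `positive` as the positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


def accuracy(y_true: Sequence[str], y_pred: Sequence[str]) -> float:
    """Fraction of predictions that match the true label."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
```

For instance, if 4 masked and 4 unmasked faces are classified and one masked face is predicted as unmasked, "with mask" gets precision 1.0 and recall 0.75, while "without mask" gets precision 0.8 and recall 1.0.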

These experiments were carried out on an Intel Core i5 (8th generation) laptop with 8 GB of RAM and 2 GB of integrated graphics. We used Google Colaboratory to test our face detection models. The datasets, classified as with mask and without mask, were taken from Kaggle, and part of the dataset was prepared by us from students at our college. This dataset was used to train the face detection model, and the model was tested live using a laptop video camera. Our algorithm pre-processes the images and extracts distinctive facial features to identify faces accurately.

The GUI of this desktop application was built using PyQt and the Tkinter library, and our ML model was built using TensorFlow. The average accuracy of our ML model was more than 90%. Accuracy and precision were measured for the positive predicted values. We combined models and classifiers with facial blending techniques to extract distinctive facial features even in low-lighting conditions and from low-resolution images. The precision, recall, and F1-scores of the tested model are given in the table above. Finally, after multiple rounds of testing, our model was able to distinguish masked from unmasked faces.

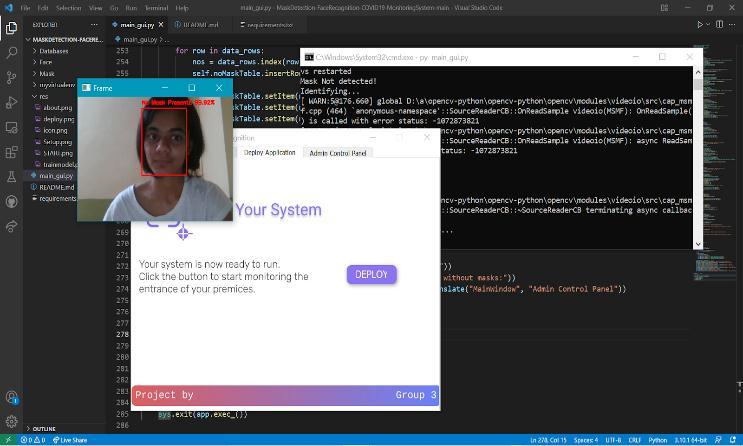

Picture 1. Person With Mask Detected

Description: When the system encounters a person, it tries to identify them. Since the person in the demo is wearing a mask, the face mask model identifies that the person is wearing a mask and does not add an entry for them to the defaulters' database.

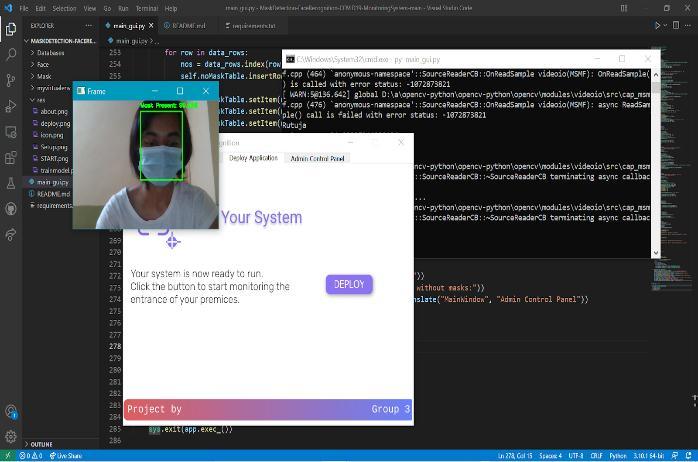

Picture 2. Person Without Mask Detected

Description: Similarly, if a person not wearing a mask is detected, the face recognition algorithm is triggered to record their entry in the defaulters' table for penalties.

International Research Journal of Engineering and Technology (IRJET) e-ISSN:2395-0056

Volume: 09 Issue: 10 | Oct 2022 www.irjet.net p-ISSN:2395-0072

[2] Face MaskRecognition using TensorFlow a Deep Learning Framework | Bhagyashree Kulkarni1, Dr. Suma.V2 PG Scholar1, Professor2 Department of Information Science Dayananda Sagar College of Engineering, Bangalore, India | ISSN 2321 3361 © 2021 IJESC|Volume11IssueNo.05

[3] CNN-BASED MASK DETECTION SYSTEM USING OPENCVANDMOBILENETV2|HarrietChrista.G,Anisha.K ,JessicaJ,KMartin|20213rdInternationalConferenceon Signal Processing and Communication (ICPSC) | 13 – 14 May2021|Coimbatore

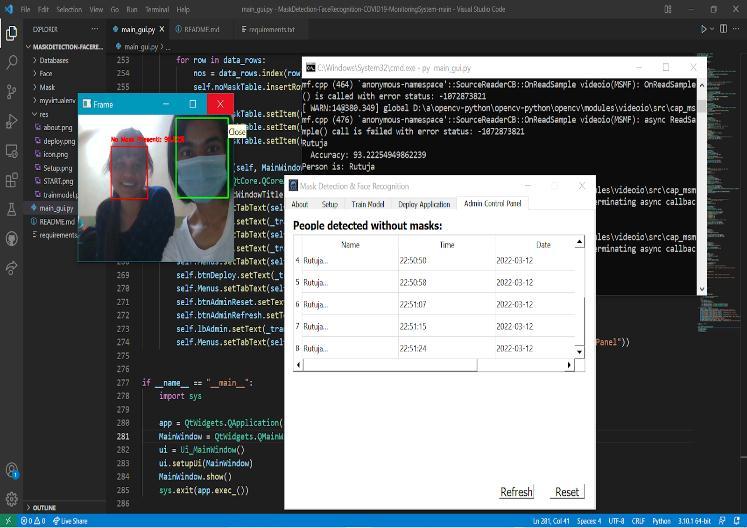

Picture 3. Name of person without mask

Description: Using GUI, the admin can find the list of peopledetectedwithoutamaskwhichcanbefurtherused toverifythelegitimacyofthesystem.

This project is focused on providing a better solution for regulatingtheuseofamaskbythepeopleusingfacemask detection and imposing a fine on the particular person who will violate the rule by their face recognition. This systemwillautomatethehectictaskofcheckingthemask manuallythusreducingtheeffortsandincreasingthetime efficiency and accuracy. The system can work very efficiently using the power of Machine Learning techniques and algorithms like TensorFlow, Keras, CNN, OpenCV,etc

TheComputerVisionlibrarywasfoundto bea pioneer in the field of Image and Video Processing with the help of this system can be made very useful and robust in features. This embedded vision-based application can be used in any working environment such as public place, station, corporate environment, streets, shopping malls, and examination centers, where accuracy and precision are highly desired to serve the purpose. It can be used in smartcityinnovation,anditwouldboostthedevelopment process in many developing countries. Our framework presentsachancetobemorereadyforthenextcrisisorto evaluate the effects of the huge scope of social change in respectingsanitaryprotectionrules.

[1] FaceMask Detection System, Joby K. J.1, Priyanga K K2 | International Journal of Innovative Research in Science,EngineeringandTechnology(IJIRSET)|||Volume 10, Issue 6, June 2021|| DOI:10.15680/IJIRSET.2021.1006071

[4] Face Recognition using Transferred Deep Learning for Feature Extraction | Amornpan Phornchaicharoen, Praisan Padungweang | The 4th International Conference on Digital Arts, Media and Technologyand2ndECTINorthernSectionConferenceon Electrical,Electronics,ComputerandTelecommunications Engineering

[5] Deep Convolutional Neural Network-Based Approaches for Face Recognition | Soad Almabdy 1, * and Lamiaa Elrefaei 1,2 | Appl. Sci. 2019, 9(20), 4397; https://doi.org/10.3390/app9204397

[6] Deepfacerecognitionusingimperfectfacialdata| Ali Elmahmudi, Hassan Ugail ∗ | Future Generation Computer Systems Volume 99, October 2019, Pages 213225

[7] Real-Time Detection and Recognition of Human Faces | Agnihotram Venkata Sripriya, Mungi Geethika, Vaddi Radhesyam| Proceedings of the International ConferenceonIntelligentComputingand Control Systems (ICICCS 2020) IEEE Xplore Part Number: CFP20K74-ART; ISBN:978-1-7281-4876-2

[8] Image processing effects on the deep face recognition system | Jinhua Zeng1, *, Xiulian Qiu2, and Shaopei Shi1 | Mathematical Biosciences and Engineering Volume18,Issue2,1187–1200|13January2021