International Research Journal of Engineering and Technology (IRJET) e-ISSN:2395-0056

Volume: 09 Issue: 10 | Oct 2022 www.irjet.net p-ISSN:2395-0072


1,2 B.Tech, Dept. of Electronics & Communication Engineering, ****

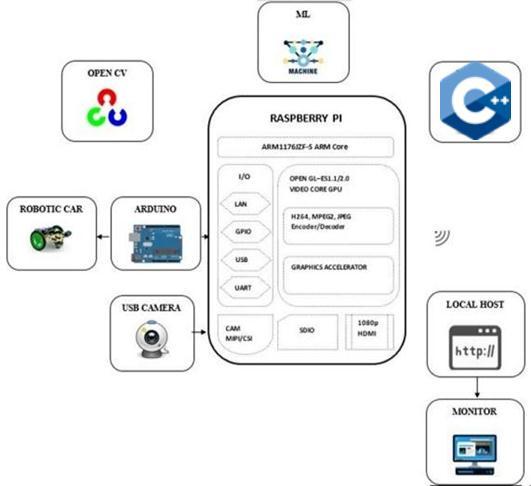

Abstract:- This paper presents a miniature self-driving car built using the Internet of Things. A Raspberry Pi and an Arduino UNO serve as the main processors, and an 8 MP high-resolution Pi camera provides the input images. The Raspberry Pi evaluates the results using neural network and machine learning models trained to detect traffic lanes, traffic lights, and similar features, and the car behaves accordingly. In addition, the car overtakes obstacles while giving a proper LED signal.

Keywords:- Self Driving Car, Internet of Things, Machine Learning, Image Processing, OpenCV, Raspberry Pi.

An autonomously driven vehicle (ADV) is commonly called a self-driving car (SDC). These cars do not need a driver; they sense what is happening around them and act accordingly. Many multinational companies, such as Alphabet (Waymo) and Uber, rely on three kinds of hardware: cameras, lidars, and other sensors. Using cameras and lidars in an SDC requires a lot of manpower, because a person has to instruct the SDC about objects, obstacles, and signs. This process consumes a great deal of capital and funding, which we cannot spare.

So, what if there are no sensors and lidars?

In this project, we developed a process in which the SDC is trained with a Convolutional Neural Network (CNN), a class of artificial neural networks used to analyze visual imagery. There is therefore no need for sensors or lidars; cameras alone are sufficient.

This project is based on the Internet of Things (IoT), which is used to access data and control the system over the internet.

Using 4 DC gear motors and 4 non-slippery rubber tiers the assembling and disassembling will be easier. The

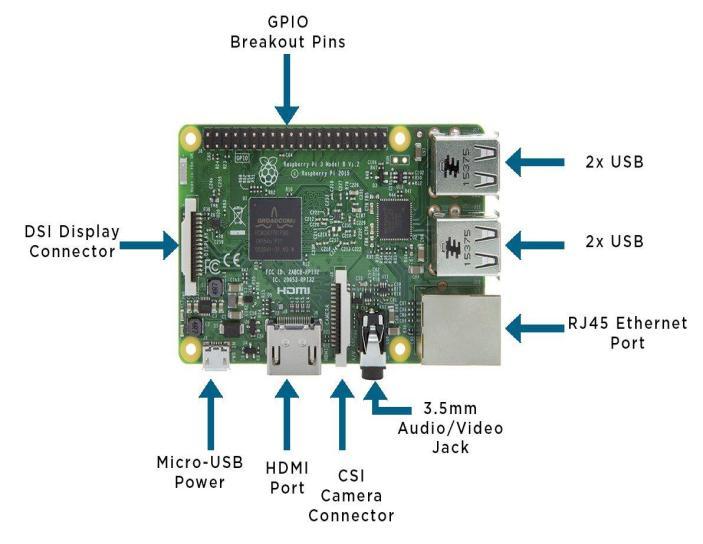

unit’s main process is assembling RASPBERRY PI B3+ whichhandlesimageprocessing.Raspberrypicamerais hookedupwithaCSCcabletotheCSCportofRaspberry piB3+.

Fig -1: Raspberry Pi B3+

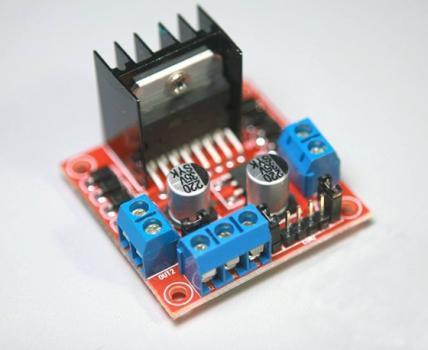

The L298N motor driver IC has many applications in the embedded field, especially in robotics. Most microcontrollers operate at very low voltage and current, while motors require a higher voltage and current that a microcontroller cannot supply directly. We use the L298N motor driver IC for this purpose.

Fig -2: L298N motor driver
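As a rough illustration of how a controller drives one L298N channel, the sketch below maps a drive command to logic levels on the enable pin and the two direction inputs. The function name `l298n_inputs` and the command names are hypothetical, and which rotation counts as "forward" depends on how the motor is wired; the paper does not give its pin assignments.

```python
def l298n_inputs(command):
    """Map a drive command to (ENA, IN1, IN2) logic levels for one L298N channel.

    The command names are illustrative; "forward" vs "reverse" depends on wiring.
    """
    table = {
        "forward": (1, 1, 0),  # enable high, inputs differ: motor turns one way
        "reverse": (1, 0, 1),  # enable high, inputs swapped: motor turns the other way
        "brake":   (1, 0, 0),  # enable high, equal inputs: fast motor stop
        "coast":   (0, 0, 0),  # enable low: motor free-runs to a stop
    }
    return table[command]


for name in ("forward", "reverse", "brake", "coast"):
    print(name, l298n_inputs(name))
```

On real hardware these levels would be written to GPIO pins, and the enable pin is often driven with PWM to control speed.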


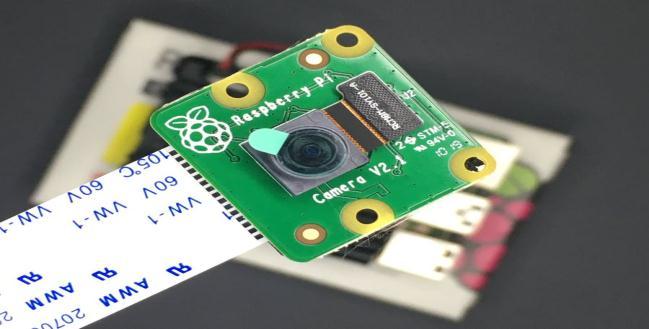

All Raspberry Pi cameras can take high-resolution photos and full HD 1080p video, and all are fully programmable. There are versions for visible light and for infrared radiation. There is no infrared version of the HQ camera, but its IR filter can be removed if required.

To set up the Raspberry Pi, we flashed Raspbian Lite (300 MB) onto the micro SD card, which was also used to store our images. We then connected the Raspberry Pi to the PC over the network.

OpenCV is a free, easy-to-use image processing library that can be used from C, C++, and Python. We wrote and compiled our code in C++. Once the car was assembled, an image view was opened for ROI creation, perspective transformation, thresholding operations, and calibration. We then installed Raspbian on the Raspberry Pi and the picable library to access the GPIOs.

The 74164 IC is a serial-input, 8-bit, edge-triggered shift register with an output from each of its eight stages. Serial data is entered on the DSA pin or the DSB pin, which are logically ANDed. Either input can be used as an active-HIGH enable for data entered on the other pin. The serial data on the DSA and DSB pins must be stable before and after the rising edge of the clock (CP) to meet setup and hold time requirements.

The BC547 is an NPN transistor used here as a switch. When its base-emitter junction is forward biased, current can flow from the collector to the emitter. When the junction is reverse biased, the transistor acts as an open switch and no current passes.

LED dynamic turn indicators are located at the rear of the chassis and indicate the vehicle's direction with lights that move across the car in the direction of intended travel. LEDs are already the first choice in mid-range cars today, especially for design-driven front light functions such as Daytime Running Lights (DRL), and more and more mid-range cars offer LED headlights as standard or at least as an option.
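The sweeping light pattern of a dynamic turn indicator can be sketched with a small behavioural model of the shift register. This assumes the eight 74164 outputs drive the indicator LEDs (through the BC547 switches); the paper does not give the actual wiring, and the class and function names below are hypothetical.

```python
class ShiftRegister74164:
    """Behavioural model of an 8-bit serial-in, parallel-out shift register."""

    def __init__(self):
        self.outputs = [0] * 8  # Q0..Q7

    def clock(self, dsa, dsb):
        """Rising clock edge: shift one stage and load (DSA AND DSB) into Q0."""
        self.outputs = [dsa & dsb] + self.outputs[:-1]
        return list(self.outputs)

    def reset(self):
        """Master reset: clear all outputs."""
        self.outputs = [0] * 8


def sweep_frames(register):
    """Clock in ones until every LED is lit, producing a sweeping pattern."""
    register.reset()
    return [register.clock(1, 1) for _ in range(8)]


frames = sweep_frames(ShiftRegister74164())
for frame in frames:
    # '#' marks a lit LED, '.' an unlit one.
    print("".join("#" if bit else "." for bit in frame))
```

Holding either data input LOW loads a 0 instead, which is how one pin can act as an enable for the other.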

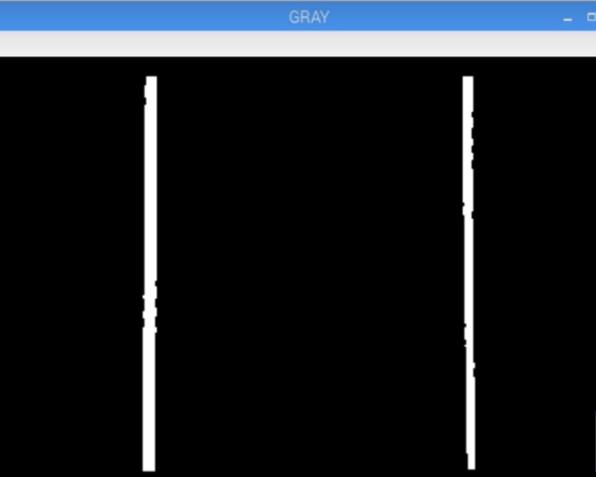

Gaussian blur is a pre-processing step used to reduce image noise. The proposed system uses it to remove redundant detected boundaries while preserving most of the distinguishable image boundaries.
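To make the smoothing step concrete, here is a minimal pure-Python sketch of a 3x3 Gaussian blur on a tiny grayscale image. It is an illustration only, not the project's code (the project uses OpenCV from C++); the kernel size, the border handling, and the function name `gaussian_blur_3x3` are assumptions.

```python
def gaussian_blur_3x3(image):
    """Blur a 2D grayscale image (list of rows) with a 3x3 Gaussian kernel."""
    kernel = [[1, 2, 1],
              [2, 4, 2],
              [1, 2, 1]]  # weights sum to 16
    height, width = len(image), len(image[0])
    blurred = [[0.0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            total = 0
            for dy in (-1, 0, 1):
                for dx in (-1, 0, 1):
                    # Clamp coordinates so border pixels reuse the nearest edge value.
                    yy = min(max(y + dy, 0), height - 1)
                    xx = min(max(x + dx, 0), width - 1)
                    total += kernel[dy + 1][dx + 1] * image[yy][xx]
            blurred[y][x] = total / 16
    return blurred


# A flat image with one noisy bright pixel in the middle.
noisy = [[10, 10, 10, 10, 10],
         [10, 10, 10, 10, 10],
         [10, 10, 90, 10, 10],
         [10, 10, 10, 10, 10],
         [10, 10, 10, 10, 10]]
smoothed = gaussian_blur_3x3(noisy)
print(smoothed[2][2])  # the noise spike is reduced from 90 to 30.0
```

The isolated spike is spread over its neighbours, which is why blurring before edge detection reduces the number of spurious edges.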

Canny edge detection is a technique that extracts useful structural information from different vision objects and reduces the amount of data to process. It calculates the gradient of our blurred image in each direction and tracks the edges with large changes in intensity. It has been widely used in many computer vision systems, because Canny found that the requirements for applying edge detection in different vision systems are very similar.
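The gradient step described above can be sketched with Sobel kernels and a single threshold. This is a simplified illustration, not a full Canny detector: Canny additionally applies non-maximum suppression and hysteresis thresholding, which are omitted here. The function name `sobel_edges` and the threshold value are assumptions.

```python
import math


def sobel_edges(image, threshold):
    """Return a binary edge map: 1 where the gradient magnitude >= threshold."""
    gx_kernel = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]   # horizontal intensity change
    gy_kernel = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]   # vertical intensity change
    height, width = len(image), len(image[0])
    edges = [[0] * width for _ in range(height)]
    # Border pixels are skipped and stay 0.
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            gx = gy = 0
            for dy in (-1, 0, 1):
                for dx in (-1, 0, 1):
                    pixel = image[y + dy][x + dx]
                    gx += gx_kernel[dy + 1][dx + 1] * pixel
                    gy += gy_kernel[dy + 1][dx + 1] * pixel
            if math.hypot(gx, gy) >= threshold:
                edges[y][x] = 1
    return edges


# Dark road surface (0) on the left, bright lane paint (255) on the right.
frame = [[0, 0, 0, 255, 255, 255] for _ in range(5)]
edge_map = sobel_edges(frame, threshold=200)
print(edge_map[2])  # [0, 0, 1, 1, 0, 0]
```

Only the pixels next to the dark-to-bright transition are marked, so the edge map carries far less data than the original frame.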


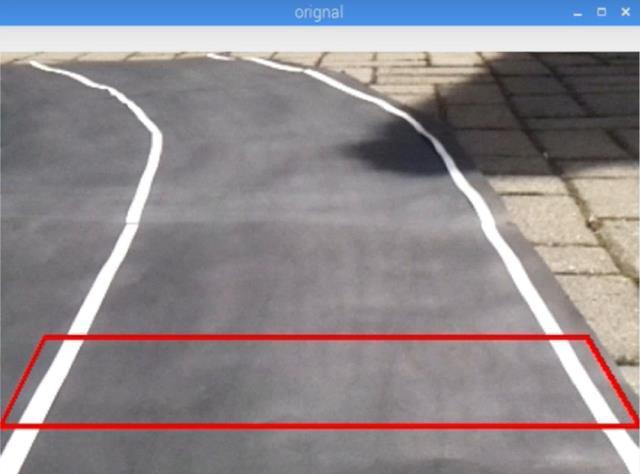

A region of interest (ROI) is a subset of the data identified for a specific purpose. After Canny edge detection, many of the boundaries found are not lane traces, so the ROI restricts processing to the relevant part of the image. The concept of ROI is commonly used in many applications.

In medical imaging, for example, the endocardial border may be defined on an image, possibly during different phases of the cardiac cycle, to assess cardiac function.

In computer vision and optical character recognition, the ROI defines the boundaries of the object under consideration. In many applications, symbolic labels are added to the ROI to describe its contents compactly.
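As a small illustration of how an ROI discards irrelevant edges, the sketch below keeps only the edge pixels inside a rectangle and zeroes everything else. A rectangular ROI is an assumption made for brevity (lane detection often uses a trapezoid), and the function name `apply_roi` is hypothetical.

```python
def apply_roi(edge_map, top, bottom, left, right):
    """Keep edge pixels inside rows [top, bottom) and columns [left, right)."""
    return [
        [value if top <= y < bottom and left <= x < right else 0
         for x, value in enumerate(row)]
        for y, row in enumerate(edge_map)
    ]


# Edges in the upper half (for example, the horizon) are not part of the lane.
edges = [[1, 1, 1, 1],
         [0, 1, 0, 1],
         [1, 0, 1, 0],
         [1, 1, 1, 1]]
road_only = apply_roi(edges, top=2, bottom=4, left=0, right=4)
for row in road_only:
    print(row)
```

Only the bottom two rows survive, so later steps see just the part of the frame where the road is expected.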

The Cascade Trainer GUI is a program used to train, test, and improve cascade classifier models. It provides a graphical interface for setting parameters and makes it easier to use the OpenCV tools for training and testing classifiers.

After launching the Cascade Trainer GUI, the initial screen appears; it is used for classifier training. Typically, training a classifier requires thousands of positive and negative image samples, but in some cases the same result can be achieved with fewer samples.

Attach the robot body to the chassis and the wheels of the automated car, solder the motors and fix them to the chassis, mount the Raspberry Pi, Arduino UNO, and motor controller, and wire the circuit according to the wiring diagram.

Using an Ethernet cable, connect the Raspberry Pi to the computer and turn on the Pi's Wi-Fi so that you can connect to and access the Pi over Wi-Fi with the VNC viewer installed on the Pi's operating system.

The Raspberry Pi instructs the Arduino UNO, which commands the L298N ICs to keep powering the wheels and then to stop the prototype's movement depending on the object's approach. The calculated displacement is also displayed in the output window of the program.

Digital image processing provides a clear image for information extraction. Image processing in the SDC is done using OpenCV. This process includes various subprocesses such as image compression, object detection, and recognition. The captured image is enhanced, restored, and processed using both pseudo color and full color.

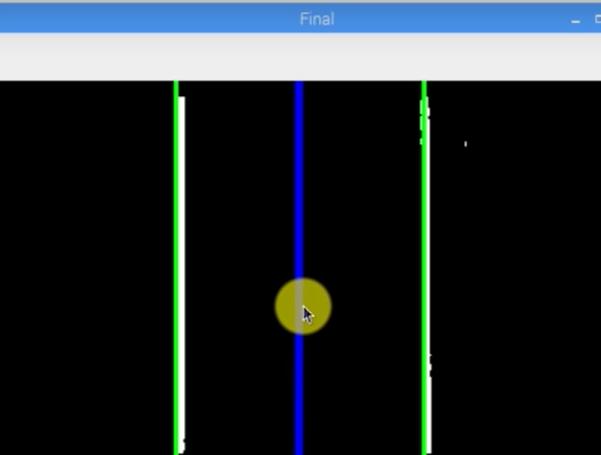

A lane detection system is used to assist the driver. It detects lane lines, which helps locate road markings, and it increases driver safety by detecting traffic lanes at night. In short, the lane detection system acts as an assistant that aims to improve driving.

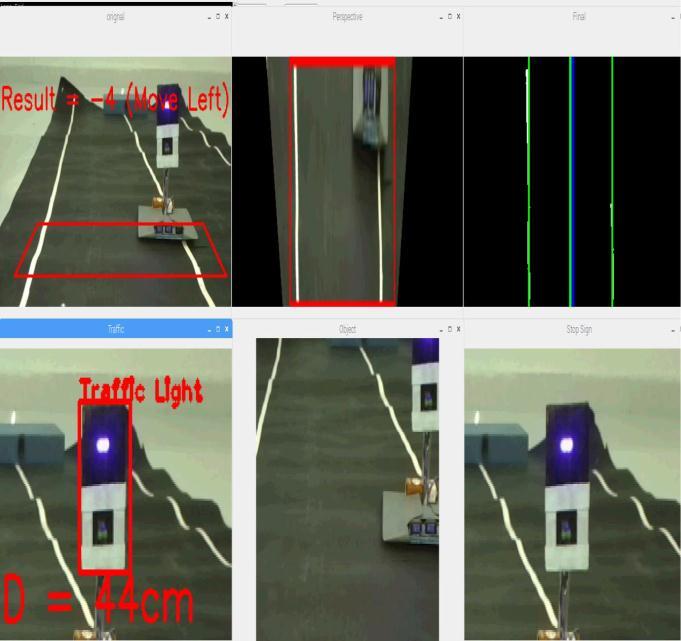

Fig -10: Lane Detection (Computer View)
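One common way to turn detected lane lines into a steering cue is to compare the lane center with the frame center. The sketch below does this for a single row of a thresholded image; it is an assumed approach for illustration, since the paper does not describe how its offset is computed, and the function name `lane_center_offset` is hypothetical.

```python
def lane_center_offset(binary_row):
    """Return (lane_center, offset) for one row of a thresholded image.

    offset > 0 means the lane center lies right of the frame center,
    offset < 0 means it lies left. Returns None if a lane line is
    missing on either side of the frame center.
    """
    frame_center = len(binary_row) // 2
    left_hits = [x for x in range(frame_center) if binary_row[x]]
    right_hits = [x for x in range(frame_center, len(binary_row)) if binary_row[x]]
    if not left_hits or not right_hits:
        return None
    left_line = max(left_hits)    # innermost lane pixel on the left
    right_line = min(right_hits)  # innermost lane pixel on the right
    lane_center = (left_line + right_line) // 2
    return lane_center, lane_center - frame_center


# 16-pixel row with lane lines at columns 4 and 14.
row = [0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0, 0, 0, 0, 1, 0]
result = lane_center_offset(row)
print(result)  # (9, 1): the lane center is one pixel right of the frame center
```

A controller could steer in proportion to the offset, and treat a `None` result as "lane lost".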

Machines learn in different ways with different amounts of "supervision": supervised, unsupervised, and semi-supervised. Supervised learning is the most commonly used form of ML and also the simplest. Unsupervised learning does not require labeled data and has many practical uses. This project uses a convolutional neural network, a class of artificial neural networks used to analyze visual images. Computer vision is used to spot an object in front of the prototype and make decisions accordingly.

Fig -11: ML Example

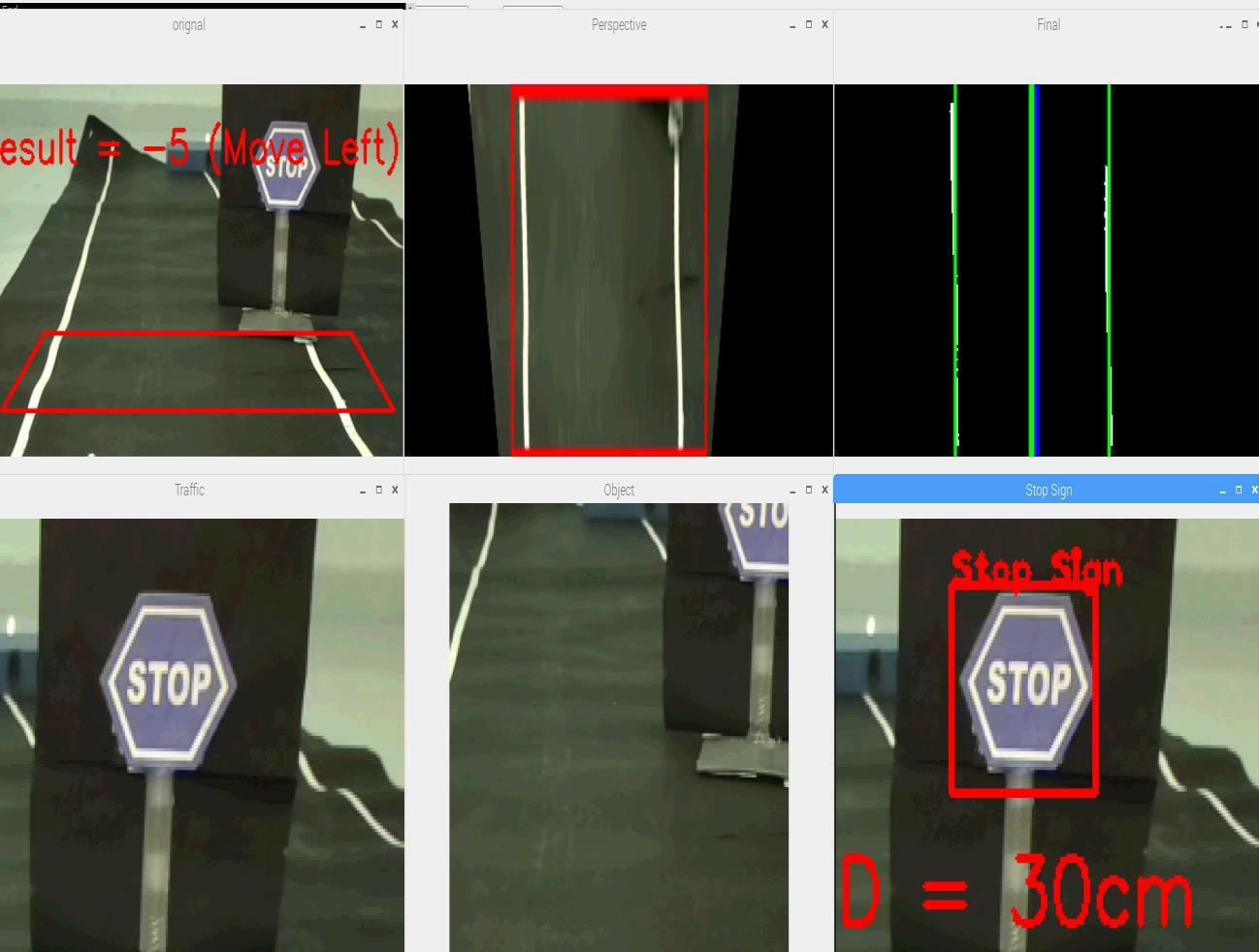

When we start the engine, all the components start working and the camera starts taking pictures. Lane detection and obstacle detection are successful, and traffic signals are detected and acted upon accordingly. They are also mostly flown high by air deviations, more precisely air and car motion disturbances.

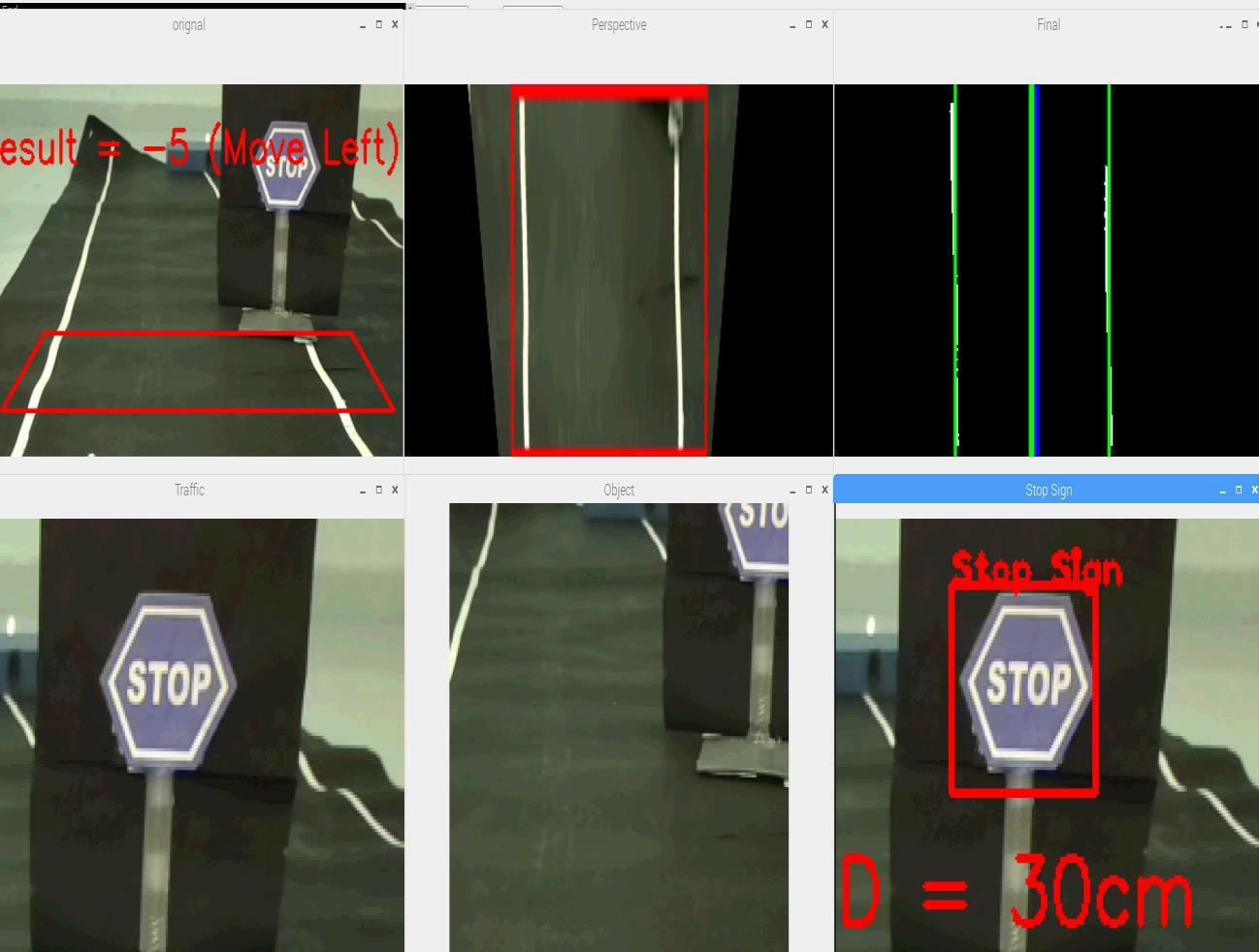

If the self-driving car detects a STOP sign in its path, it stops at a distance of 10 cm (as programmed) before the STOP sign.

Human View:

Fig -12: Stop Sign (Human View)


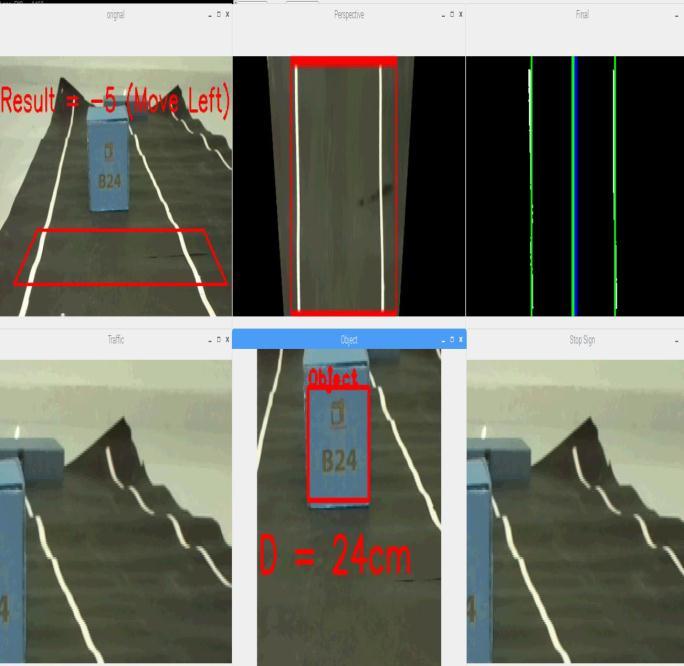

Computer View(Runtime Status):


Fig -13: Stop Sign (Computer View)

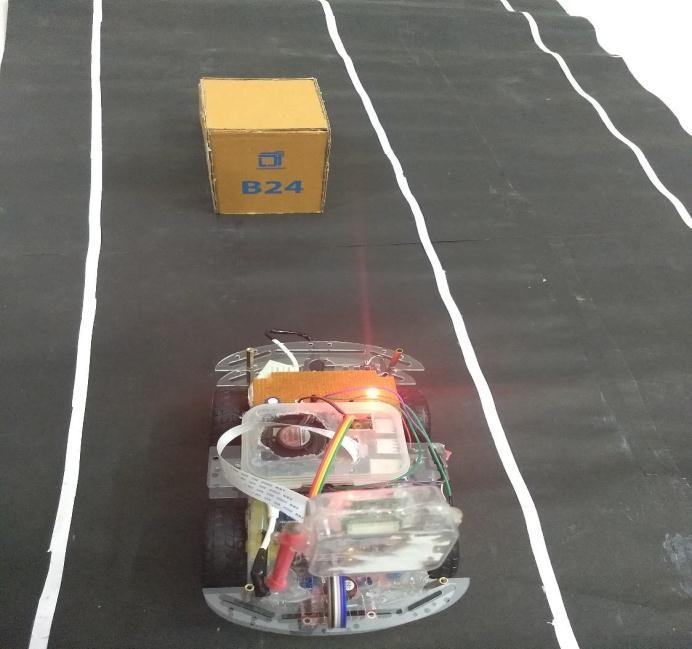

If there is an obstacle in the path of the self-driving car, the car overtakes the obstacle at a specified distance and returns to the same lane.

Obstacle detection in self-driving cars can be achieved using machine learning.

Human View:

Fig -15: Obstacle Detection (Computer View)

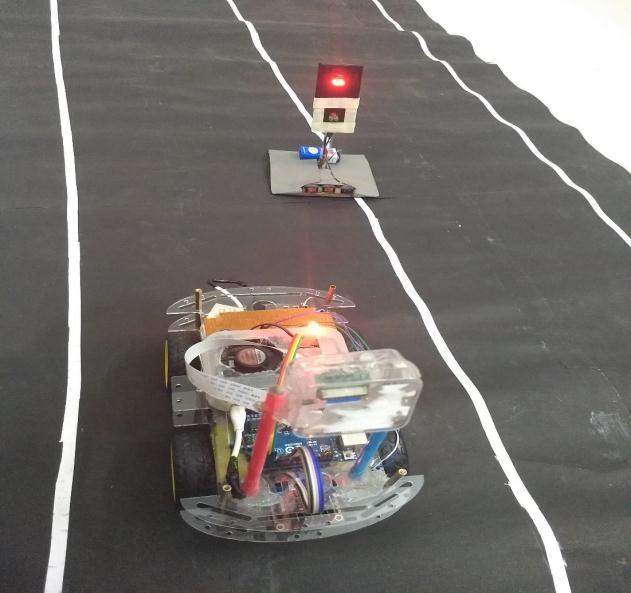

When the self-driving car detects a traffic light in its path, it checks the color of the light and acts accordingly. If a red light is detected, the car stops until the green light appears. If the light is green, the car continues to move forward without stopping.

Human View:

Fig -14: Obstacle Detection (Human View)

Fig -16: Traffic Light Detection (Human View)
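The stop sign, obstacle, and traffic light behaviours described in this section can be summarised as a small decision function. This is a sketch under stated assumptions: the function name `choose_action`, its parameters, the action names, and the priority order (stopping takes precedence over overtaking) are hypothetical; only the 10 cm stopping distance comes from the paper.

```python
STOP_DISTANCE_CM = 10  # stopping distance before a STOP sign, as stated in the paper


def choose_action(stop_sign_distance_cm=None, traffic_light=None, obstacle_ahead=False):
    """Pick one action from the current detections.

    stop_sign_distance_cm: estimated distance to a detected STOP sign, or None.
    traffic_light: "red", "green", or None if no light is detected.
    obstacle_ahead: True if an obstacle is detected in the lane.
    """
    if traffic_light == "red":
        return "stop"  # wait until the light turns green
    if stop_sign_distance_cm is not None and stop_sign_distance_cm <= STOP_DISTANCE_CM:
        return "stop"
    if obstacle_ahead:
        return "overtake"  # change lane, pass the obstacle, return to the lane
    return "forward"


print(choose_action(traffic_light="green"))     # forward
print(choose_action(traffic_light="red"))       # stop
print(choose_action(stop_sign_distance_cm=10))  # stop
print(choose_action(obstacle_ahead=True))       # overtake
```

Keeping the decision in one pure function makes it easy to test the behaviour without the camera or motors attached.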



This self-driving car was trained and tested using a normal-resolution Raspberry Pi camera with a large number of samples and in a safe environment. To cope with typical environmental changes and unpredictable conditions, the self-driving car must be trained thoroughly so that it performs without false decisions. The operation of our self-driving car is outlined in this paper.


Kailas Sai Teja

B.Tech, Dept. of Electronics & Communication Engineering

Kanaparthi Sai Pranaya Chary

B.Tech, Dept. of Electronics & Communication Engineering