TEXT TO IMAGE GENERATION USING GAN

Mrs. L. Indira1, M. Sunil2, M. Vamshidhar3, S. Ravi Teja4, R. V. Praneeth5

1 Assistant Professor, Department of Computer Science and Engineering

2,3,4,5 Undergraduate Student, Department of Computer Science and Engineering

Vallurupalli Nageswara Rao Vignana Jyothi Institute of Engineering and Technology, Hyderabad, Telangana, India

Abstract - Synthesizing high-quality images from text descriptions is a challenging problem in computer vision with many practical applications. To address it, we propose Stacked Generative Adversarial Networks (StackGAN) to generate 256×256 photo-realistic images conditioned on text descriptions. The approach splits the problem into two stages: Stage-I sketches a low-resolution image with the basic shapes and colours of the objects described in the text, while Stage-II refines the Stage-I results to produce a high-resolution image with photo-realistic details. Conditioning Augmentation is used to improve the diversity of the generated images and to stabilize GAN training. Extensive experiments demonstrate that the proposed method outperforms state-of-the-art methods and is effective at generating realistic images from descriptions. In summary, StackGAN provides a multi-stage approach, together with Conditioning Augmentation, that improves the synthesized images and produces high-quality images from text.

Key Words: StackGAN, Resolution, Conditioning Augmentation, Image generation

1. INTRODUCTION

Generating realistic images from text descriptions is an important and difficult task, with applications in many fields such as photo editing and computer-aided design. Recently, Generative Adversarial Networks (GANs) have shown promise in generating realistic images. Conditional GANs designed to generate images from text descriptions have been shown to produce images that are related to the text.

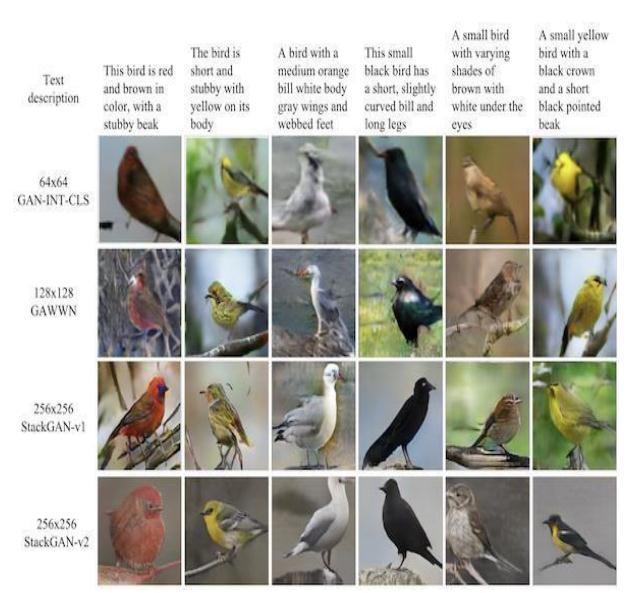

However, training GANs to generate high-resolution realistic images from text descriptions remains challenging. Simply adding more layers to current GAN models to raise the output resolution often makes training unstable and ineffective. The problem arises because the distribution of natural images and the distribution implied by the model may not overlap well in high-dimensional pixel space. This problem becomes more severe as the image resolution increases. Previous work was limited to generating plausible 64×64 images from descriptions, which lack realistic object parts and details such as a bird's beak and eyes. Moreover, additional annotations are required to generate higher-resolution images such as 128×128.

To solve these problems, we present Stacked Generative Adversarial Networks (StackGAN), which decompose the problem of text-to-photo-realistic image synthesis into two more manageable sub-problems. We first generate a low-resolution image using a Stage-I GAN. Next, we stack a Stage-II GAN on top of the Stage-I GAN to generate a realistic high-resolution image (for example, 256×256) conditioned on the Stage-I results and the text description. By conditioning on the Stage-I results and the text again, the Stage-II GAN learns to capture information that the Stage-I GAN missed and adds more detail to the image.

This approach improves the generation of high-resolution images by making the image distribution easier to model.

We also propose a new Conditioning Augmentation technique to handle the discontinuities in the text conditioning space caused by the limited number of training text-image pairs. This technique smooths the latent conditioning manifold by allowing small random perturbations. It improves the diversity of the synthesized images and stabilizes GAN training. Our contributions can be summarized as follows:

(1) We propose a stacked GAN architecture that improves the quality of high-resolution (256×256) image synthesis by decomposing the problem into more manageable sub-problems.

(2) We introduce the Conditioning Augmentation technique, which leads to more diverse outputs and more stable GAN training.

(3) Through extensive experiments, we demonstrate the effectiveness of the overall design and the impact of individual components. This information could guide future GAN designs.
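The Conditioning Augmentation idea described above (small random perturbations around the text conditioning) can be illustrated with a minimal sketch. It assumes the conditioning vector is drawn from a diagonal Gaussian whose mean and log-variance would be predicted from the text embedding, with a KL penalty toward a standard normal; the values of `mu` and `logvar` below are hypothetical and are passed in directly rather than learned.

```python
import math
import random

def conditioning_augmentation(mu, logvar, rng):
    """Sample c ~ N(mu, diag(exp(logvar))) and return it with a KL penalty.

    Assumption: mu and logvar would normally be predicted from the text
    embedding by a small learned layer; here they are given directly.
    """
    # Reparameterized sample: mean plus a small random perturbation.
    c = [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0)
         for m, lv in zip(mu, logvar)]
    # KL(N(mu, sigma^2) || N(0, I)) for a diagonal Gaussian.
    kl = 0.5 * sum(m * m + math.exp(lv) - lv - 1.0
                   for m, lv in zip(mu, logvar))
    return c, kl

rng = random.Random(0)
mu = [0.3, -0.1, 0.8, 0.0]          # hypothetical mean for one caption
logvar = [-2.0, -2.0, -2.0, -2.0]   # hypothetical log-variance (sigma ~ 0.37)

# The same caption yields a different conditioning vector on every draw.
c1, kl = conditioning_augmentation(mu, logvar, rng)
c2, _ = conditioning_augmentation(mu, logvar, rng)
```

Because each draw differs slightly, a single caption yields many nearby conditioning vectors during training, which is one way to obtain the smoothing effect described in the text.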

2. RELATED WORK

To address the difficulty of training GANs, researchers have proposed many ways to stabilize the training process and improve image quality. Some methods focus on energy-based GANs, while others condition generation on variables such as attributes or class labels. There are also methods conditioned on images, for tasks such as photo editing, domain transfer, and super-resolution. However, super-resolution techniques are limited in their ability to add important details or correct defects in low-resolution images.

Recently, attempts have been made to generate images from text descriptions. These include methods such as AlignDRAW, conditional PixelCNN, and approximate Langevin sampling. While these methods have been shown to be effective, they often involve iterative processes or have limited functionality.

Conditional GANs have also been used to generate images from text descriptions. For example, Reed et al. successfully generated plausible 64×64 bird and flower images using a conditional GAN formulation.

In their follow-up work, they extended the method to generate 128×128 images using additional annotations.

There are also methods that use a series of GANs to generate images rather than a single GAN. Wang et al. proposed S2-GAN, which separates indoor scene generation into structure generation and style generation. In contrast, the second stage of StackGAN aims to complete object details and correct defects in the Stage-I results according to the text description.

In summary, text-to-image synthesis builds on advances in generative modeling, including VAEs, autoregressive models, and GANs. Various methods have been proposed to stabilize GAN training and to condition image generation on annotations. While previous methods have shown good results, StackGAN stands out by tackling the challenge of generating high-resolution images with realistic details. Its two-stage architecture and Conditioning Augmentation technique help synthesize diverse and visually appealing images.

3. METHODOLOGY

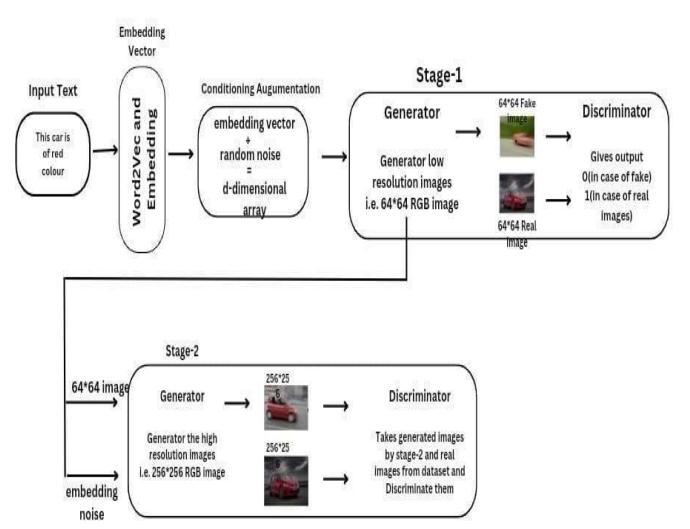

Our proposed StackGAN approach addresses the challenge of generating high-quality images from text descriptions. To achieve this goal, we propose a two-stage approach that breaks the problem down into more manageable sub-problems and allows realistic images to be generated from the descriptions. In the first stage of StackGAN, we use a generative adversarial network (GAN) to generate a low-resolution image from a given description. This first stage, called Stage-I, focuses on capturing the shape and color of the objects described in the text. By creating low-resolution images, we lay the foundation for further refinement at the next stage. The low-resolution results of the Stage-I GAN serve as input for the next stage.
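The data flow of the two-stage design can be sketched as follows. This is only an illustration of how the stages are chained: both generator functions are hypothetical placeholders rather than trained networks, the images are grayscale nested lists, and the 64×64 Stage-I resolution is an assumption (the text only fixes the 256×256 final output).

```python
import random

LOW, HIGH = 64, 256  # assumed stage resolutions; only 256x256 is from the text

def stage1_generate(cond, noise):
    """Placeholder Stage-I generator: a coarse LOW x LOW grayscale layout."""
    bias = (sum(cond) + sum(noise)) / (len(cond) + len(noise))
    return [[bias + 0.01 * (x + y) for x in range(LOW)] for y in range(LOW)]

def stage2_generate(low_res, cond):
    """Placeholder Stage-II generator: upsample, then add text-driven detail."""
    scale = HIGH // LOW
    detail = 0.05 * sum(cond) / len(cond)
    return [[low_res[y // scale][x // scale] + detail * ((x + y) % 2)
             for x in range(HIGH)] for y in range(HIGH)]

rng = random.Random(0)
cond = [rng.gauss(0, 1) for _ in range(8)]    # stand-in for the text conditioning
noise = [rng.gauss(0, 1) for _ in range(16)]  # stand-in for the latent noise

low = stage1_generate(cond, noise)   # Stage-I: rough shape and colour
high = stage2_generate(low, cond)    # Stage-II: uses Stage-I output and the text
```

The point of the sketch is that Stage-II receives both the Stage-I image and the text conditioning, so it can add information that the first stage did not capture.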

We conduct many experiments on test data and compare the results with the latest methods. These experiments are used to validate the effectiveness of StackGAN in generating realistic images from text descriptions. We consider both qualitative and quantitative measures in our analysis.

For qualitative evaluation, we analyze the visual quality of the generated image and compare it with the ground-truth image. We evaluate factors such as image sharpness, fidelity to the given description, and the presence of well-formed content and object parts.
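The text does not name the quantitative metric used. One common choice for GAN evaluation is the Inception Score, and the sketch below shows how such a score could be computed, assuming class probabilities for each generated image are already available from some pretrained classifier. The probability lists are made-up toy inputs.

```python
import math

def inception_score(probs):
    """exp of the mean KL(p(y|x) || p(y)) over a set of generated images.

    probs: one list of class probabilities per image (assumed to come
    from a pretrained classifier, which is not part of this sketch).
    """
    n, k = len(probs), len(probs[0])
    # Marginal class distribution over all generated images.
    marginal = [sum(p[j] for p in probs) / n for j in range(k)]
    total_kl = 0.0
    for p in probs:
        total_kl += sum(pj * math.log(pj / marginal[j])
                        for j, pj in enumerate(p) if pj > 0.0)
    return math.exp(total_kl / n)

# Toy inputs: confident and varied predictions versus uninformative ones.
confident_diverse = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
uninformative = [[1 / 3, 1 / 3, 1 / 3]] * 3

score_high = inception_score(confident_diverse)
score_low = inception_score(uninformative)
```

Higher scores indicate that individual images are classified confidently while the set as a whole covers many classes, which loosely matches the sharpness and diversity criteria described above.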

4. RESULTS

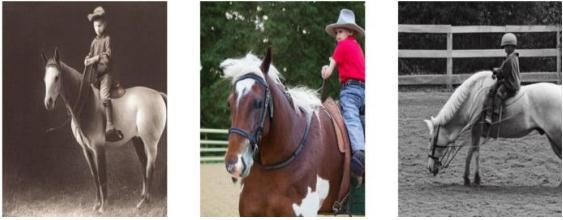

This project's major objective was to generate an image from a given text. The images generated during the training phase are as follows:

The output images when the text input is provided are shown below:

INPUT TO MODEL: Boy riding a horse

OUTPUT OF THE MODEL:

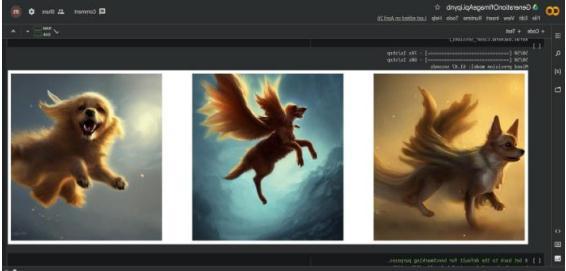

INPUT TO MODEL: A dog with wings flying in air.

OUTPUT OF THE MODEL:

Another final output image generated using the GAN:

5. CONCLUSIONS AND FUTURE SCOPE

In this work, we introduce StackGAN, a method for synthesizing photo-realistic images from text descriptions using stacked GANs and Conditioning Augmentation. Our method tackles the text-to-image synthesis problem with a sketch-refinement process. The first stage, the Stage-I GAN, captures the basic colors and shapes of objects based on the text description. The next stage, the Stage-II GAN, improves the Stage-I results by correcting defects and adding details, resulting in higher resolution and better image quality.

Various qualitative tests are conducted to evaluate the effectiveness of our approach. The results demonstrate the effectiveness of StackGAN in generating higher-resolution images (e.g. 256×256) with accurate and varied content. Our method outperforms existing text-to-image generation models in terms of output quality and relevance to the given description. The success of StackGAN opens up new possibilities for many practical applications such as image processing and computer-aided design. By accurately translating text descriptions into high-quality images, our method helps advance computer vision and expands the capabilities of image synthesis.
