Facial recognition to detect mood and recommend songs

Syed Waheedulla, Prof. Harashleen Kour, Mulinti Meghanath Reddy, Sriramoju Nikhil Sai, Piyush Majumdar

Department of Computer Science and Engineering, Chandigarh University, Gharuwan, 140301, Punjab, India

***

Abstract This research paper presents the development of a web application with a facial recognition system that uses computer vision algorithms and machine learning approaches to determine a user’s emotions in real time. The system interprets facial features such as the eyes, mouth, and forehead to detect emotions, including happiness, sadness, neutral, and rock. Based on the detected emotion and the selected language and singer, the system recommends songs that best fit the user’s mood and preferences. To train the deep learning model, the FER-2013 dataset of labeled facial images is used. The system operates on real-time video input, providing personalized recommendations to the user based on their mood. By providing recommendations based on the user’s emotions and preferences, the proposed system has the potential to improve the user’s music listening experience, mood, and wellbeing. To evaluate the system’s performance, we conducted experiments using a dataset of labeled facial images. The results showed that the system detects emotions with an average accuracy of 81%. We also conducted a user study to evaluate the system’s effectiveness in providing personalized recommendations. The results showed that the system was successful in providing recommendations that matched the user’s mood and preferences.

Keywords Facial Recognition Technology, Emotion Recognition, Music Recommendation System, Vision, Machine Learning, Convolutional Neural Network, Mood Detection, Natural Language Processing, Deep Learning

I. INTRODUCTION

Facial recognition technology has gained immense popularity in recent years and is widely used in industries including marketing, healthcare, and security. However, its potential in the music industry remains largely untapped. The ability to identify human emotions through facial expression analysis has opened up new possibilities for providing a more personalized music experience. The aim of this research paper is to explore the use of facial recognition technology to accurately identify emotions and make song recommendations in accordance with the observed mood. This technology can potentially revolutionize the way we interact with machines and assist us in achieving emotional well-being [1].

Music has the power to influence our feelings and emotions, but with a vast music library available, it can be challenging to find the right music that matches our current emotional state. This is where facial recognition technology can play a crucial role. By analyzing facial expressions in real time, the proposed system can detect the user’s mood, employing machine learning algorithms to recognize the facial characteristics associated with emotions such as happiness, sadness, anger, surprise, and neutrality. By merging facial recognition technology and machine learning algorithms, the suggested system can form an efficient music recommendation system that recognises the user’s mood and selects music that matches it. This personalised music experience has the potential to change the way people listen to music by making it more immersive and engaging.

The success of this experiment may encourage the development of comparable systems in other sectors, thereby broadening the capabilities of facial recognition technology. The purpose of this research paper is to provide a detailed review of the notion of applying facial recognition technology in the music industry, as well as insights into its possible influence on the music industry and beyond. Overall, the goal of this research article is to help design a more efficient and cost-effective facial recognition system that can recognise facial emotions and select songs based on the user’s mood.

II. LITERATURE REVIEW

Visnu Dharsini et al. [2] discuss the potential of facial recognition technology in various fields and its ability to recognize a person’s emotions. The paper highlights the unique connection between music and emotions and proposes an efficient music recommendation system that uses facial recognition techniques to determine a user’s emotion. It claims that the implemented algorithm would be more proficient than existing systems and would save the time and labor invested in performing the process manually. The proposed system aims to recognize facial emotions and recommend songs while being cost- and time-efficient.

K.C. Kullayappa Naik et al. [3] discuss the creation of music player systems that can recognise a user’s facial expression. The face is crucial when anticipating a person’s emotions and mood. The paper suggests the Convolutional Neural Network (CNN) approach, which is useful for both music recommendation and emotion recognition. To create an emotion-based music recommendation system, the proposed method focuses on understanding human emotions using computer vision and machine learning approaches.

Samuvel, D. J. et al. [4] explore the potential of face recognition technologies in various industries, such as security systems and digital video processing. They suggest that music, as an art form with a strong emotional connection, has the ability to enhance one’s mood. The focus of their work is to create an efficient music recommendation system that uses facial recognition to determine the user’s sentiment. The proposed algorithm is expected to be more efficient than existing systems, and the system aims to recommend songs by identifying facial expressions. The suggested system is designed to be both time- and cost-efficient.

Athavle, Madhuri et al. [5] proposed a new approach for automatic music playing using facial emotion detection, which differs from existing approaches that involve manual sorting or wearable computing devices. The proposed system uses a Convolutional Neural Network for emotion detection and Tkinter and Pygame for music recommendations. The system aims to reduce computational time and overall cost while increasing accuracy. Testing was done on the FER-2013 dataset, where feature extraction was performed on input face images to detect emotions such as happy, angry, sad, surprise, and neutral. The system automatically generates a music playlist based on the user’s present emotion, leading to better performance in terms of computational time.

Mahadik, A. et al. [6] proposed a system that uses computer vision and machine learning to detect the user’s mood from their facial expressions through a live camera feed. The MobileNet model with Keras is used for this purpose; it produces a small trained model, which makes Android-ML integration easier. The system then suggests songs that match the detected mood, providing an additional feature to traditional music player apps. Music is considered a great connector that unites people across various markets, ages, backgrounds, languages, and preferences. It is also used as a means of mood regulation and can improve mental health. The incorporation of mood detection in the proposed system aims to increase customer satisfaction.

Joshi, S. et al. [7] discuss the current state of music recommendation systems, particularly the challenge of recommending songs based on emotions. The authors suggest that Natural Language Processing and Deep Learning technologies can help machines interpret emotions through text and facial expressions. Various deep learning models were compared for detecting emotions, and the best model was integrated into the music recommendation application. The application takes input in the form of text or facial expressions and recommends songs and playlists based on the detected emotion. The inclusion of a CNN model for detecting emotions through facial expressions enhances the application’s accuracy.

Chidambaram, G., et al. [8] discuss the use of facial recognition technology to extract distinguishable features of a person’s face and detect their mood. This information is then used to generate a personalized playlist or a default playlist based on the mood detected, removing the need for manual song grouping. The proposed system aims to develop an emotion-based music player, and the article provides a brief description of how the system works, playlist generation, and emotion classification.

Madipally Sai Krishna Sashank [9] highlights the prevalence of stress in today’s fast-paced world and how music can serve as a tool for stress relief. However, if the music played is not in line with the user’s mood, it may have a counterproductive effect and add to their stress levels. The paper identifies the absence of music apps that suggest songs based on the user’s mood or emotion. To address this, the author proposes a music player application that uses facial recognition technology to detect three emotions: anger, happiness, and sadness. Users can either upload a selfie or an older photo of themselves, or describe their emotions in text format, to receive personalized song recommendations.

Supriya, L. P., et al. [10] describe an emotion-based music player, which is a novel approach to affective computing. The project uses facial recognition to identify the user’s emotions and then plays music accordingly. This removes the difficulty of manually searching for songs and instead generates a playlist based on the user’s emotional state. The proposed framework collects facial emotions and auditory data to generate a low-cost playlist. A Support Vector Machine (SVM) is used to recognise emotions, and the webcam captures the user’s image to extract face features.

III. PROPOSED SYSTEM

The aim of this project is to accurately detect a user’s emotions in real time. The system will use computer vision algorithms and machine learning techniques to analyze and interpret facial features such as the shape of the eyes, mouth, and forehead to detect different emotions like happiness, sadness, anger, surprise, neutral and rock. Based on the detected emotion and the selected language and singer, the system will recommend the song type that best matches that emotion. To accomplish this, we will train the facial recognition algorithm using a large dataset of facial expressions and employ machine learning techniques to accurately identify and interpret emotions [11]. This dataset will be used to train a deep learning model to recognize emotions in facial images.

Once the model has been trained, the facial recognition system will be implemented to detect emotions in real-time video input, using the trained model to determine the user’s emotions from their facial expressions. Finally, the system will recommend songs from the selected category based on the user’s preferences and mood. The system will take into account the user’s listening history and preferences to provide personalized recommendations.
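As a minimal sketch of the last step of emotion detection, the snippet below turns a model’s raw class scores for one video frame into an emotion label. The label order, the function names, and the example scores are assumptions made for illustration; the paper names the six classes but does not specify the model’s output layout.

```python
import math

# Assumed label order; the paper lists these six classes but not their indices.
EMOTIONS = ["happiness", "sadness", "anger", "surprise", "neutral", "rock"]


def softmax(scores):
    """Convert raw class scores into probabilities that sum to 1."""
    peak = max(scores)  # subtracting the max keeps exp() numerically stable
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def predict_emotion(scores):
    """Return (label, probability) for the most likely emotion."""
    probs = softmax(scores)
    best = max(range(len(probs)), key=probs.__getitem__)
    return EMOTIONS[best], probs[best]


# Hypothetical scores for a single frame.
label, confidence = predict_emotion([0.1, 2.5, 0.3, 0.0, 1.0, -1.0])
print(label, round(confidence, 3))
```

In a real-time setting this function would be called once per captured frame, with the scores coming from the trained deep learning model.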

The proposed work mainly focuses on the following:

• Gathering a large dataset of facial images labeled with emotions (happiness, sadness, neutral, etc.).

• Training a deep learning model to recognize emotions in facial images using the dataset.

• Implementing the facial recognition system to detect emotions in real-time video or image inputs.

• Matching the detected emotion with a corresponding category of songs in the database.

• Recommending songs from the selected category based on the user’s preferences and the mood detected.
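The last two steps, matching the detected emotion to a song category and recommending from it, can be sketched as a simple filter over a song catalogue. The `Song` fields, the catalogue entries, and the rule that songs by the chosen singer are listed first are all assumptions for illustration; the paper does not describe its database schema or ranking rule.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Song:
    title: str
    singer: str
    language: str
    mood: str  # emotion category the song is filed under


# Hypothetical catalogue entries, for illustration only.
CATALOGUE = [
    Song("Track A", "Singer X", "Hindi", "happiness"),
    Song("Track B", "Singer X", "Hindi", "sadness"),
    Song("Track C", "Singer Y", "English", "happiness"),
    Song("Track D", "Singer X", "English", "happiness"),
]


def recommend(emotion, language, singer, catalogue=CATALOGUE, limit=10):
    """Return songs in the detected mood and chosen language,
    listing songs by the chosen singer first."""
    candidates = [s for s in catalogue
                  if s.mood == emotion and s.language == language]
    # Stable sort: False sorts before True, so singer matches move to the front.
    candidates.sort(key=lambda s: s.singer != singer)
    return candidates[:limit]


for song in recommend("happiness", "English", "Singer X"):
    print(song.title, "-", song.singer)
```

Falling back to other singers in the same language, rather than returning nothing, is one possible design choice when the chosen singer has few songs in the detected mood.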

IV. OBJECTIVE

The primary objective of this project is to develop a facial recognition system that can accurately detect a user’s mood based on their facial expressions and physiological signals. To achieve this objective, the project also involves creating a database of songs that vary in mood, genre, and artist. This database will be used to recommend songs to the user based on their detected mood. Additionally, the project aims to build an easy-to-use user interface that can retrieve the user’s mood data and present the recommended songs to the user. The final objective of the project is to test and evaluate the accuracy and effectiveness of the system in detecting mood and recommending appropriate songs to different users in real-world scenarios.

The development of a facial recognition system that can accurately detect a user’s mood has the potential to revolutionize the way we interact with technology. By analyzing facial expressions and physiological signals, the system can detect a user’s emotional state and recommend songs that match their mood. This can be particularly helpful for individuals who struggle to express their emotions or those who have difficulty choosing the right music to match their mood. The development of an easy-to-use user interface is also a crucial part of the project. The interface will allow users to input their mood data and receive recommendations in real time. The system will also provide users with the option to save their favorite songs and create personalized playlists based on their mood. The user interface will be designed to be intuitive and easy to navigate, even for individuals who are not tech-savvy. By achieving these objectives, the project hopes to provide users with a seamless music listening experience that adapts to their mood in real time.

V. METHODOLOGY

This research involves several steps, including the development of the facial recognition algorithm, the creation of the song recommendation system, and the implementation of the website. Firstly, the facial recognition algorithm will be developed using machine learning algorithms that can analyze the facial characteristics associated with emotions such as happiness, sadness, rock and neutrality. The algorithm will be trained on a large dataset of facial expressions to ensure accurate emotion detection. It involves:

• Data Collection: Compile a dataset of facial images of participants, annotated with various emotions and shot with a camera in a controlled environment with consistent lighting conditions.

• Data Preprocessing: The pre-processing of face photographs is a key stage in the facial expression identification system [12]. To ensure good quality, preprocess the facial photos by removing noise and unnecessary information that could impair the system’s accuracy.

• Feature Extraction: Using computer vision algorithms and machine learning approaches, extract relevant features from the preprocessed face photos in order to effectively recognise distinct facial expressions and emotions.

Secondly, the song recommendation system will be created, which will use the user’s singer and language preferences to recommend appropriate songs. The system will also take into account the user’s mood, as detected by the facial recognition algorithm, to recommend songs that match the user’s emotional state.

Finally, the website will be developed to implement the facial mood detection and song recommendation system. The website will have a user interface that allows users to enter their singer name and language preference, and then capture their facial mood using the camera. The facial mood detection algorithm will then analyze the user’s facial expressions to determine their emotional state, and the song recommendation system will recommend appropriate songs based on the user’s preference and mood.

To validate the accuracy of the facial recognition and song recommendation system, a user study will be conducted. The study will involve a group of participants who will use the website and provide feedback on the accuracy of the facial mood detection and the relevance of the recommended songs. The feedback will be used to refine the algorithm and improve the accuracy of the system.
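The data preprocessing step described above is not specified in detail, so the following is only a minimal sketch of two common operations: converting a colour image to grayscale (FER-2013 images are 48×48 grayscale) and min-max normalisation of pixel intensities. The function names and the nested-list image representation are assumptions chosen to keep the example dependency-free; a practical implementation would more likely use an image library.

```python
def to_grayscale(rgb_image):
    """Convert rows of (R, G, B) pixels (0-255) to luminance values
    using the ITU-R BT.601 weights."""
    return [[0.299 * r + 0.587 * g + 0.114 * b for (r, g, b) in row]
            for row in rgb_image]


def normalize(gray_image):
    """Min-max scale pixel values to [0, 1], which reduces the effect of
    global brightness and contrast differences between photos."""
    flat = [p for row in gray_image for p in row]
    lo, hi = min(flat), max(flat)
    if hi == lo:  # flat image: avoid dividing by zero
        return [[0.0 for _ in row] for row in gray_image]
    return [[(p - lo) / (hi - lo) for p in row] for row in gray_image]


# Tiny 2x2 example image (hypothetical pixel values).
image = [[(0, 0, 0), (255, 255, 255)],
         [(255, 0, 0), (0, 0, 255)]]
print(normalize(to_grayscale(image)))
```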

VI. EXPERIMENTAL WORK

To demonstrate the effectiveness of the proposed facial recognition-based music recommendation system, we conducted a study with a group of participants. The study aimed to evaluate the accuracy of the system in detecting the mood of the participants and recommending appropriate songs based on their facial expressions.

A. Participants:

Ten participants aged between 18 and 30 years were recruited for the study. They were selected based on their interest in music and willingness to participate.

B. Data Collection:

A dataset of facial images of the participants was collected, labelled with six emotions: happiness, sadness, anger, surprise, neutral, and rock. The participants were asked to perform a set of actions to elicit the different emotions, such as watching a sad video or listening to an upbeat song. The facial images were captured using a camera.

C. Experimental Design:

The study was designed as a within-subjects experiment, where each participant was exposed to all six emotions in random order. The participants were seated in a controlled environment with consistent lighting conditions, and their facial expressions were recorded using the camera.
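A within-subjects schedule of this kind can be generated programmatically. The sketch below gives each participant all six emotions in an independently shuffled order; the participant identifiers, the function name, and the fixed seed are assumptions for illustration and are not taken from the study.

```python
import random

EMOTIONS = ["happiness", "sadness", "anger", "surprise", "neutral", "rock"]


def presentation_orders(participant_ids, seed=0):
    """Give every participant all six emotions in an independent random order."""
    rng = random.Random(seed)  # a fixed seed makes the schedule reproducible
    return {pid: rng.sample(EMOTIONS, k=len(EMOTIONS))
            for pid in participant_ids}


# Ten participants, numbered 1 to 10 for the purpose of this example.
for pid, order in presentation_orders(range(1, 11)).items():
    print(pid, order)
```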

D. Data Analysis:

The facial images were processed and analyzed using computer vision algorithms and machine learning techniques. The deep learning model was trained on the dataset to recognize the different emotions based on facial features. The model was tested on the facial images of the participants to evaluate its accuracy in detecting their mood. The recommended songs were selected from a database of songs categorized according to their mood and the preferred language and singer.

VIII. ACKNOWLEDGMENT

We are highly indebted to Miss Harashleen Kour for her guidance and constant supervision, for providing necessary information regarding the project, and for her constant support in completing the project.

IX. CONCLUSION AND FUTURE WORK

The project’s purpose is to boost the user’s mood by identifying the user’s emotional state and playing music that corresponds to their mood. Throughout history, facial expression recognition has been employed as a highly effective method of understanding and interpreting human emotions [14]. The use of facial recognition technology to identify a user’s mood and suggest appropriate songs is a promising area of research in the music industry. The technology has the potential to transform the music listening experience by providing users with more personalized and immersive experiences. The system we have proposed has considerable potential for development and implementation. Future research may involve integrating the system with wearable devices, expanding the range of emotions that can be detected, or accommodating multiple languages. Integrating the system for movie recommendation could be another viable development path [15]. The technology could also be applied in fields such as mental health therapy and marketing. With ongoing study and development, this proposed technology has the potential to enhance both human wellbeing and music listening experiences in a number of ways.

References

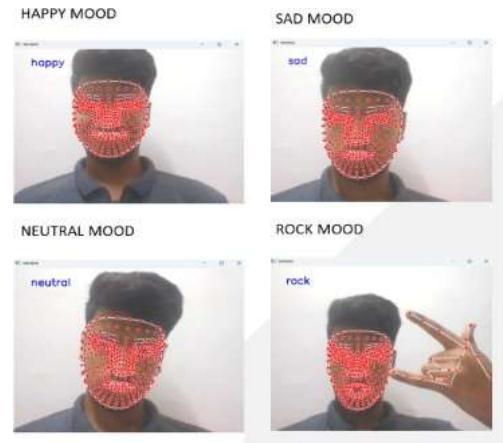

Fig. 3. Detected emotions.

VII. RESULTS

It has been demonstrated that personality traits and emotions are highly correlated with musical preferences. The metre, timbre, rhythm, and pitch of music are processed in the areas of the brain that deal with emotions and mood [13]. We achieved an F1 score of 82.35%, a total classification accuracy of 81.50%, and a precision of 87.50%. The music classification module also performed well. The study’s findings demonstrated that the suggested face recognition-based music recommendation system was effective in determining the participants’ moods. The participants expressed a high level of satisfaction with the music recommendations, and the algorithm suggested appropriate songs based on the emotions it observed.
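For reference, the reported metrics are related as follows. The sketch computes precision, recall, F1 score, and accuracy from confusion-matrix counts for a single class (one-vs-rest). The counts are illustrative only and are not the study’s data; the paper does not state how the metrics were averaged across the six emotion classes.

```python
def classification_metrics(tp, fp, fn, tn):
    """Compute precision, recall, F1 and accuracy from confusion counts."""
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    # F1 is the harmonic mean of precision and recall.
    if precision + recall:
        f1 = 2 * precision * recall / (precision + recall)
    else:
        f1 = 0.0
    total = tp + fp + fn + tn
    accuracy = (tp + tn) / total if total else 0.0
    return {"precision": precision, "recall": recall,
            "f1": f1, "accuracy": accuracy}


# Illustrative counts only (not taken from the experiments).
metrics = classification_metrics(tp=40, fp=10, fn=10, tn=40)
for name, value in metrics.items():
    print(f"{name}: {value:.2%}")
```

Because F1 combines precision and recall, it can fall below precision whenever recall is the lower of the two, which is consistent with a reported F1 score lower than the reported precision.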

[1] Sana, S. K., Sruthi, G., Suresh, D., Rajesh, G., & Reddy, G. S., “Facial emotion recognition based music system using convolutional neural networks”, 2022, Materials Today: Proceedings, 62, 4699-4706.

[2] Visnu Dharsini, S., Balaji, B., & Kirubha Hari, K. S., “Music recommendation system based on facial emotion recognition”, 2020, Journal of Computational and Theoretical Nanoscience, 17(4), 1662-1665.

[3] K.C. Kullayappa Naik, Ch. Hima Bindu, M. Hari Babu, J. Sriram Pavan, “Emotion Based Music Recommendation System using CNN”, 2020, International Journal of Applied Engineering Research, 5(2), 2666-2795.

[4] Samuvel, D. J., Perumal, B., & Elangovan, M., “Music recommendation system based on facial emotion recognition”, 2020, 3C Tecnología, 261-271.

[5] Athavle, Madhuri, “Music Recommendation Based on Face Emotion Recognition”, 2021, Journal of Informatics Electrical and Electronics Engineering (JIEEE), 2, 1-11.

[6] Mahadik, A., Milgir, S., Patel, J., Jagan, V. B., & Kavathekar, V., “Mood based music recommendation system”, 2021, International Journal of Engineering Research & Technology (IJERT), 10.

[7] Joshi, S., Jain, T., & Nair, N., “Emotion based music recommendation system using LSTM-CNN architecture”, 2021, In 2021 12th International Conference on Computing Communication and Networking Technologies (ICCCNT) (pp. 01-06). IEEE.

[8] Chidambaram, G., Ram, A. D., Kiran, G., Karthic, P. S., & Kaiyum, A., “Music recommendation system using emotion recognition”, 2021, International Research Journal of Engineering and Technology (IRJET), 8(07), 2395-0056.

[9] Madipally Sai Krishna Sashank, “Mood-based Music Recommendation System Using Facial Expression Recognition And Text Sentiment Analysis”, 2022, Journal of Theoretical and Applied Information Technology, 100(19), 1992-8645.

[10] Supriya, L. P., and Rashmita Khilar, “Affective music player for multiple emotion recognition using facial expressions with SVM”, 2021, Fifth International Conference on I-SMAC (IoT in Social, Mobile, Analytics and Cloud) (I-SMAC). IEEE, 2021.

[11] Shalini, S. K., Jaichandran, R., Leelavathy, S., Raviraghul, R., Ranjitha, J., & Saravanakumar, N., “Facial Emotion Based Music Recommendation System using computer vision and machine learning techniques”, 2021, Turkish Journal of Computer and Mathematics Education, 12(2), 912-917.

[12] Yu, Z., Zhao, M., Wu, Y., Liu, P., & Chen, H., “Research on automatic music recommendation algorithm based on facial micro-expression recognition”, 2020, In 2020 39th Chinese Control Conference (CCC) (pp. 7257-7263). IEEE.

[13] Gilda, S., Zafar, H., Soni, C., & Waghurdekar, K., “Smart music player integrating facial emotion recognition and music mood recommendation”, 2017, International Conference on Wireless Communications, Signal Processing and Networking (WiSPNET) (pp. 154-158). IEEE.

[14] Florence, S. M., & Uma, M., “Emotional detection and music recommendation system based on user facial expression”, 2020, In IOP Conference Series: Materials Science and Engineering (Vol. 912, No. 6, p. 062007). IOP Publishing.

[15] Chauhan, S., Mangrola, R., & Viji, D., “Analysis of Intelligent movie recommender system from facial expression”, 2021, 5th International Conference on Computing Methodologies and Communication (ICCMC) (pp. 1454-1461).