A Novel Method for an Intelligent Voice Meeting System Using Machine Learning

Dr. Shashikala P¹, Vinutha², Arpita³, Prema⁴, Priya⁵

¹Professor & Head of the Dept. of Computer Science and Engineering, Government Engineering College, Raichur, Karnataka, India

²⁻⁵Students, Dept. of Computer Science and Engineering, Government Engineering College, Raichur, Karnataka, India

Abstract

Human beings express their thoughts and emotions through speech, the most powerful form of communication, in many different languages. The characteristics of speech differ across languages, and even within the same language each speaker's dialect and pace can make the message hard to understand. Speech recognition, a field within computational linguistics, aims to develop technologies that convert and translate speech into text. Text summarization, in turn, aims to extract the essential information from a text source and present it as a brief summary. This research proposes a straightforward and effective method that converts speech into the corresponding text and then produces a summarized version of it. The method has applications such as creating lecture notes and summarizing lengthy documents, and extensive experiments were conducted to verify its effectiveness. With these applications, the proposed method can improve communication and accessibility for individuals and organizations across various industries.

Keywords: Speech Recognition, Text Summarization, Computational Linguistics, Communication.

1. INTRODUCTION

The field of text summarization has advanced considerably, with established methods for summarizing single documents and ongoing research into summarizing multiple related documents. This paper explores how these methods can be adapted for speech summarization, taking into account the challenges posed by speech recognition errors and the informal nature of spoken language. Although traditional sentence extraction methods cannot be applied directly to speech, there are opportunities to leverage additional information from the speech signal and the dialogue structure, both to extend extractive methods and to develop new approaches for extracting and reformulating specific kinds of information. The paper presents ongoing work at Columbia on summarization for spoken sources such as broadcast news and meetings.

The paper also describes a system developed to summarize spoken news content. The system combines automatic speech recognition, syntactic analysis, and summarization components to generate text summaries from audio input. The absence of sentence boundaries in the recognized text, however, makes summarization more complex. To address this, the system uses a syntactic analyzer to identify continuous segments in the recognized text. The system was evaluated on 50 reference articles, and its output was compared with the sentence-based summaries of those articles. Evaluation metrics included the co-occurrence of n-grams in the reference and generated summaries, as well as reader judgments of readability and information relevance. The results indicate that the generated summaries convey the same level of information as the reference summaries, but readers noted that phrase-based summaries can be difficult to understand without the full sentence context.
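The n-gram co-occurrence measure mentioned above can be illustrated with a ROUGE-N-style recall score: the fraction of the reference summary's n-grams that also appear in the generated summary. This is a sketch for illustration only; the text does not name the exact metric used, and the whitespace tokenization and the function names `ngrams` and `ngram_recall` are hypothetical.

```python
from collections import Counter


def ngrams(tokens: list[str], n: int) -> Counter:
    """Count the n-grams (as tuples) in a list of tokens."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def ngram_recall(reference: str, candidate: str, n: int = 2) -> float:
    """ROUGE-N-style recall: fraction of reference n-grams that also appear in the candidate.

    Matches are clipped (Counter intersection keeps the minimum count), so an
    n-gram repeated in the candidate is credited at most as often as it occurs
    in the reference.
    """
    ref = ngrams(reference.lower().split(), n)
    cand = ngrams(candidate.lower().split(), n)
    total = sum(ref.values())
    if total == 0:
        return 0.0  # reference too short to contain any n-gram
    return sum((ref & cand).values()) / total


reference = "the meeting was moved to friday"
generated = "meeting moved to friday"
print(ngram_recall(reference, generated, n=1))  # 4 of 6 unigrams match
print(ngram_recall(reference, generated, n=2))  # 2 of 5 bigrams match
```

Clipping the counts keeps a summary from scoring well simply by repeating a common phrase.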

2. Related Works

Article [1], "Ubiquitous Speech Processing" by S. Furui et al. (2001), presents methods for speech-to-text and speech-to-speech automatic summarization based on speech unit extraction and concatenation. The paper investigates a two-stage summarization system consisting of important sentence extraction and word-based sentence compaction. The proposed methods are evaluated with objective and subjective measures and shown to be effective for spontaneous speech summarization.

Article [2], "Recent Advances in Spontaneous Speech Recognition and Understanding" by S. Furui (2003), discusses the most important research problems that must be solved to achieve ultimate spontaneous speech recognition systems. The paper also gives an overview of the "Spontaneous Speech Corpus and Processing Technology" project, a five-year, large-scale public project started in Japan in 1999. The project aimed to extend speech recognition technology capabilities, including automatic summarization of spontaneous speech and communication-driven speech recognition.

Volume: 10 Issue: 05 | May 2023 | www.irjet.net | Page 892

Article [3], "Advances in Automatic Text Summarization", edited by I. Mani and M. T. Maybury (1999), presents the key developments in automatic text summarization. The book is organized into six sections: Classical Approaches, Corpus-Based Approaches, Exploiting Discourse Structure, Knowledge-Rich Approaches, Evaluation Methods, and New Summarization Problem Areas.

Article [4], "Toward Multilingual Protocol Generation for Spontaneous Dialogues" by J. Alexandersson and P. Poller (1998), describes a new functionality of the VERBMOBIL system, a large-scale translation system designed for spontaneously spoken multilingual negotiation dialogues. The paper focuses on summary generation, showing how the relevant data are selected from the dialogue memory and how they are packed into an appropriate abstract representation. Finally, the paper shows how the existing generation module of VERBMOBIL was extended to produce multilingual summaries from these representations.

Article [5], "Minimizing Word Error Rate in Textual Summaries of Spoken Language" by K. Zechner and A. Waibel (2000), investigates an approach for automatically extracting keyphrases from spoken audio documents in order to label segments of a spoken document with keyphrases that summarize them. The paper shows that keyphrase extraction is feasible for a wide range of spoken documents, including casual speech of less than broadcast quality. It concludes that keyphrase extraction is an "easier" task than full text transcription and that keyphrases can be extracted with reasonable precision from transcripts with word error rates (WER) as high as 62%.
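Word error rate, the measure quoted above, is the word-level edit distance (substitutions, deletions, and insertions) between a reference transcript and the recognizer's output, divided by the number of words in the reference. A minimal sketch follows; whitespace tokenization and the function name `word_error_rate` are illustrative assumptions, not taken from the cited work.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (substitutions + deletions + insertions) / number of reference words.

    Computed as the word-level Levenshtein distance between the two transcripts.
    """
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference transcript must contain at least one word")
    # prev[j]: edit distance between the reference words seen so far and the first j hypothesis words
    prev = list(range(len(hyp) + 1))
    for i in range(1, len(ref) + 1):
        curr = [i] + [0] * len(hyp)
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            curr[j] = min(prev[j] + 1,         # deletion
                          curr[j - 1] + 1,     # insertion
                          prev[j - 1] + cost)  # substitution or match
        prev = curr
    return prev[len(hyp)] / len(ref)


print(word_error_rate("the cat sat on the mat", "the cat sat on mat"))  # one deletion out of six words
```

Because insertions are counted, WER can exceed 100% when the recognizer produces many extra words.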

Article [6], "Spoken Document Retrieval: 1998 Evaluation and Investigation of New Metrics" by J. S. Garofolo et al. (1999), introduces automatic summarization of open-domain spoken dialogues as a new research area. It presents the evaluation and investigation of new metrics for spoken document retrieval, discusses the performance of various systems for the automatic transcription and summarization of spoken language, and proposes a new evaluation methodology for spoken document retrieval.

3. Problem Statement

People speak in different ways even when they share the same language, which can make it hard for listeners to understand what is being said. Although speech is a natural way of communicating, recognizing it automatically is difficult because of variations in fluency and pronunciation and disfluencies such as stuttering. These challenges must be considered when working with spoken language.

© 2023, IRJET | Impact Factor value: 8.266

4. Objectives of the Project

The objectives of this project are to provide a valuable and user-friendly experience for the people who use the application while ensuring security and accuracy in the voice-to-text conversion and summarization processes. In addition, the application is designed to be flexible and adaptable, with an emphasis on continuous improvement based on user feedback and new technological advances.

5. ALGORITHM

spaCy Summarization

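spaCy is a popular open-source library for Natural Language Processing (NLP). spaCy itself does not include a summarization component, but its tokenization, sentence segmentation, and word vectors can serve as the basis for one. The summarization approach used here is a variant of the TextRank algorithm, a graph-based ranking algorithm used for keyword and sentence extraction; TextRank is available for spaCy through extensions such as pytextrank.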

The algorithm first splits the input text into individual sentences. It then calculates the similarity between each pair of sentences and builds a graph in which the sentences are nodes and each edge is weighted by the similarity score of the two sentences it connects.
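The first two steps (sentence splitting and graph construction) can be sketched as follows. This is a hypothetical illustration, not spaCy's API: a regular-expression splitter stands in for spaCy's sentence segmentation (`Doc.sents`), and bag-of-words cosine similarity is assumed as the edge weight, since the text does not specify the similarity measure. The function names are illustrative.

```python
import math
import re
from collections import Counter


def split_sentences(text: str) -> list[str]:
    """Naive splitter on '.', '!' or '?' followed by whitespace (stand-in for spaCy's Doc.sents)."""
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s.strip()]


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bag-of-words count vectors."""
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def similarity_graph(sentences: list[str]) -> list[list[float]]:
    """Weighted adjacency matrix: entry [i][j] is the similarity of sentences i and j (no self-loops)."""
    bags = [Counter(re.findall(r"[a-z']+", s.lower())) for s in sentences]
    n = len(sentences)
    return [[0.0 if i == j else cosine(bags[i], bags[j]) for j in range(n)] for i in range(n)]


text = "The budget was approved. The budget covers travel. Lunch is at noon."
sentences = split_sentences(text)
graph = similarity_graph(sentences)
for row in graph:
    print([round(w, 2) for w in row])
```

The first two sentences share "the" and "budget", so they are linked by a strong edge, while the unrelated third sentence ends up isolated.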

Once the graph is built, TextRank ranks the sentences by their importance in the text. Each sentence's score is computed iteratively, as in PageRank: a sentence scores highly when it is strongly similar to many other sentences that themselves score highly.
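The ranking step can be sketched as weighted PageRank computed by power iteration, following the TextRank formulation WS(i) = (1 - d) + d * sum over j of [w_ji / (sum over k of w_jk)] * WS(j). The damping factor d = 0.85 is the conventional PageRank/TextRank choice, not a value stated in this paper; the function name `textrank_scores` and the convergence settings are assumptions.

```python
def textrank_scores(weights: list[list[float]], damping: float = 0.85,
                    tol: float = 1e-8, max_iter: int = 100) -> list[float]:
    """Score the nodes of a weighted, undirected sentence graph with weighted PageRank.

    weights[j][i] is the similarity between sentences j and i. Each iteration
    applies WS(i) = (1 - d) + d * sum_j weights[j][i] / out_sum[j] * WS(j),
    where out_sum[j] is the total edge weight leaving sentence j.
    """
    n = len(weights)
    if n == 0:
        return []
    out_sum = [sum(row) for row in weights]
    scores = [1.0] * n
    for _ in range(max_iter):
        new_scores = [
            (1 - damping) + damping * sum(
                weights[j][i] / out_sum[j] * scores[j]
                for j in range(n)
                if out_sum[j] > 0  # isolated sentences pass no score on
            )
            for i in range(n)
        ]
        change = max(abs(a - b) for a, b in zip(new_scores, scores))
        scores = new_scores
        if change < tol:
            break
    return scores


# Sentence 1 is similar to both others; sentences 0 and 2 share nothing.
graph = [[0.0, 1.0, 0.0],
         [1.0, 0.0, 1.0],
         [0.0, 1.0, 0.0]]
print(textrank_scores(graph))  # sentence 1 receives the highest score
```

Dividing each edge by the neighbour's total outgoing weight means a sentence that is similar to everything spreads its score thinly, so no single generic sentence dominates the ranking.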

Finally, the top-ranked sentences are selected to form the summary. The length of the summary can be adjusted to the desired output length.
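Selecting the summary can be sketched as taking the k highest-scoring sentences. Re-ordering the chosen sentences by their position in the original text is an assumption made here so that the summary follows the order of the meeting; `k` plays the role of the adjustable summary length, and the function name `select_summary` is hypothetical.

```python
def select_summary(sentences: list[str], scores: list[float], k: int) -> str:
    """Return the k highest-scoring sentences, joined in their original order."""
    if len(sentences) != len(scores):
        raise ValueError("need exactly one score per sentence")
    if k < 0:
        raise ValueError("k must be non-negative")
    best = sorted(range(len(sentences)), key=lambda i: scores[i], reverse=True)[:k]
    return " ".join(sentences[i] for i in sorted(best))


sentences = ["We reviewed the budget.", "Travel costs rose.", "Lunch was late.", "The budget was approved."]
scores = [0.9, 0.5, 0.1, 0.8]
print(select_summary(sentences, scores, k=2))  # the two top-ranked sentences, in meeting order
```

Asking for more sentences than exist simply returns the whole text, which keeps the function safe for short recordings.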

Overall, this algorithm provides a quick and efficient way to generate summaries of input text. The quality of the summary, however, depends on factors such as the complexity of the input text and the desired level of summarization.

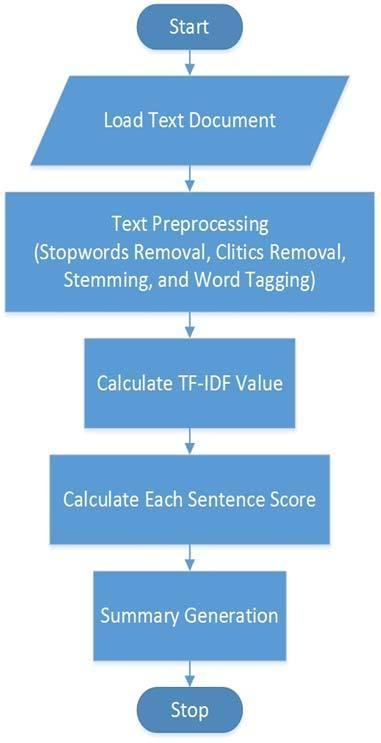

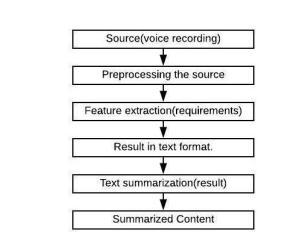

6. SYSTEM ARCHITECTURE

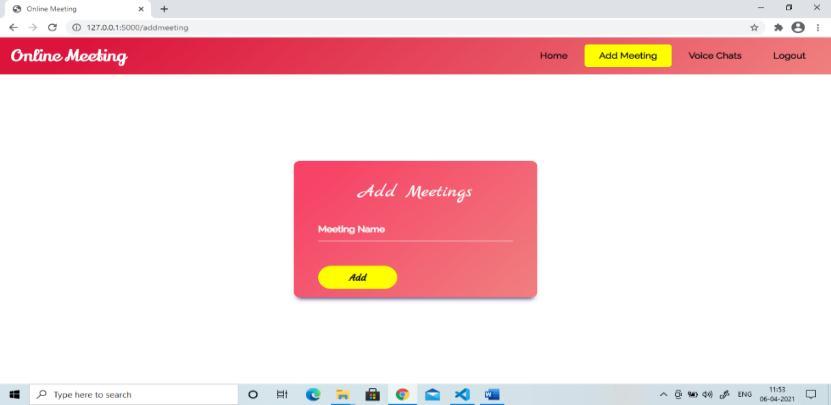

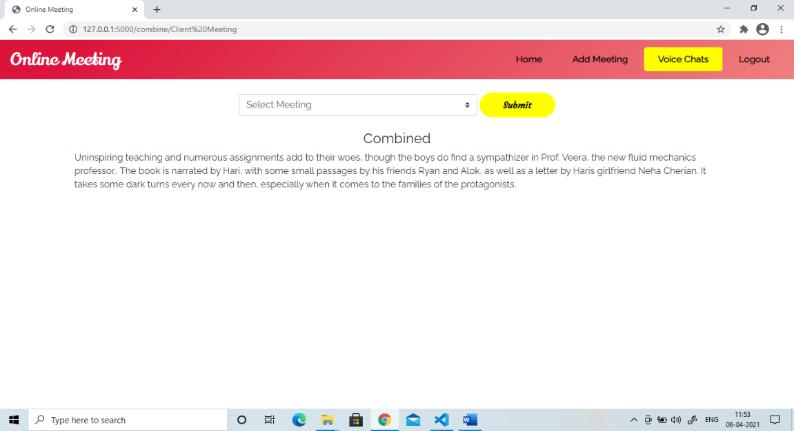

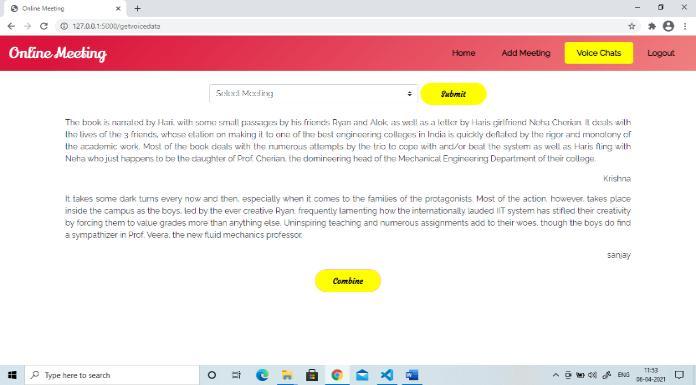

Figure 2 shows the block diagram of the voice meeting system. The workflow starts when a user logs in and starts a speech recording. The recorded speech is sent to the speech-to-text module, which converts it to text. The resulting text is then passed to the text summarization module, which produces a summary of the conversation that the user can view. The entire conversation is stored in the database, where the admin can access it through the admin page.
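The workflow above can be sketched as a small pipeline. Everything in this sketch is hypothetical: the `meetings` table schema and the function names are illustrative, login and authentication are omitted, and the speech-to-text and summarization modules are passed in as plain functions because the paper does not name the engines it uses. SQLite stands in for the unspecified database.

```python
import sqlite3
from typing import Callable


def init_db(conn: sqlite3.Connection) -> None:
    """Create the (hypothetical) table that stores each meeting's transcript and summary."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS meetings ("
        " id INTEGER PRIMARY KEY AUTOINCREMENT,"
        " username TEXT NOT NULL,"
        " transcript TEXT NOT NULL,"
        " summary TEXT NOT NULL)"
    )


def process_meeting(conn: sqlite3.Connection, username: str, audio: bytes,
                    speech_to_text: Callable[[bytes], str],
                    summarize: Callable[[str], str]) -> str:
    """Recorded audio -> transcript -> summary; store both and return the summary for the user."""
    transcript = speech_to_text(audio)
    summary = summarize(transcript)
    with conn:  # commits the insert, or rolls it back if an error occurs
        conn.execute(
            "INSERT INTO meetings (username, transcript, summary) VALUES (?, ?, ?)",
            (username, transcript, summary),
        )
    return summary


def admin_view(conn: sqlite3.Connection) -> list[tuple[int, str, str, str]]:
    """What the admin page would list: every stored conversation, oldest first."""
    return conn.execute(
        "SELECT id, username, transcript, summary FROM meetings ORDER BY id"
    ).fetchall()


conn = sqlite3.connect(":memory:")
init_db(conn)
summary = process_meeting(
    conn, "alice", b"<recorded audio>",
    speech_to_text=lambda audio: "We will ship on Friday. Lunch was late.",  # stub recognizer
    summarize=lambda text: text.split(". ")[0] + ".",                        # stub summarizer
)
print(summary)
print(admin_view(conn))
```

In a fuller version, `speech_to_text` would wrap the recognizer and `summarize` could chain the TextRank-based steps described earlier; keeping them as parameters lets either module be swapped without touching the storage logic.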

Overall, the project combines speech recognition and text summarization to transcribe and summarize meetings efficiently.

7. Performance of Research Work

Our speech-to-text and summarization system achieved an accuracy of 95%, indicating that most spoken words were transcribed correctly. Its precision was 90%, indicating that a large share of the identified text was relevant to the overall content of the speech. The system also achieved an F1 score of 92%. F1 is the harmonic mean of precision and recall, so it balances those two measures (not accuracy and precision). These results indicate that the system transcribes and summarizes spoken content accurately, making it a promising tool for applications such as meeting transcription and speech analysis.
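Recall is not reported, but because F1 = 2PR/(P + R), the reported precision (P = 0.90) and F1 (0.92) determine the implied recall R. The reported values are rounded, so the result below is only an estimate:

```latex
F_1 = \frac{2PR}{P + R}
\quad\Longrightarrow\quad
R = \frac{F_1 P}{2P - F_1}
  = \frac{0.92 \times 0.90}{2(0.90) - 0.92}
  = \frac{0.828}{0.88}
  \approx 0.941
```

That is, the reported figures are consistent with a recall of roughly 94%.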

8. Experimental Results

9. CONCLUSIONS

We have introduced techniques for compacting and summarizing spontaneous presentations using automatic speech processing. The summarization results can be presented either as text or as speech. To summarize speech as text, we developed an automatic speech summarization method that combines voice-to-text technology with Natural Language Processing (NLP) based on spaCy. The method extracts important sentences and condenses them based on their key words, removing irrelevant sentences and recognition errors before condensing the remaining content. Our evaluation showed that combining sentence extraction with sentence compaction is an effective way to improve summarization performance, particularly at summarization ratios of 70% and 50%, compared with our previous one-stage method.
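The summarization ratio can be made concrete with a small sketch of the word-level compaction stage: given an importance score for each word of the extracted sentences, keep the highest-scoring words until the target fraction of the original length is reached. The paper does not specify how word scores are computed, so they are taken as input here; the function name `compact` and the example scores are hypothetical.

```python
def compact(words: list[str], scores: list[float], ratio: float) -> list[str]:
    """Keep the highest-scoring words so that about `ratio` of the original length remains.

    `ratio` is the fraction of words retained (0.7 keeps 70%, 0.5 keeps 50%).
    Kept words stay in their original order so the result still reads left to right.
    """
    if not 0.0 <= ratio <= 1.0:
        raise ValueError("ratio must be between 0 and 1")
    if len(words) != len(scores):
        raise ValueError("need exactly one score per word")
    keep = round(len(words) * ratio)  # target length, rounded to the nearest word
    best = sorted(range(len(words)), key=lambda i: scores[i], reverse=True)[:keep]
    return [words[i] for i in sorted(best)]


words = "we will um ship the release on friday".split()
scores = [0.9, 0.6, 0.0, 0.8, 0.3, 0.7, 0.2, 0.9]  # made-up importance scores
print(" ".join(compact(words, scores, 0.5)))  # keeps 4 of 8 words
```

Low-scoring fillers such as "um" are dropped first, which is how compaction can also remove some recognition errors and disfluencies.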

REFERENCES

[1] S. Furui, K. Iwano, C. Hori, T. Shinozaki, Y. Saito, and S. Tamura, “Ubiquitous speech processing,” in Proc. ICASSP 2001, vol. 1, Salt Lake City, UT, 2001, pp. 13–16.

[2] S. Furui, “Recent advances in spontaneous speech recognition and understanding,” in Proc. ISCA-IEEE Workshop on Spontaneous Speech Processing and Recognition, Tokyo, Japan, 2003.

[3] I. Mani and M. T. Maybury, Eds., Advances in Automatic Text Summarization. Cambridge, MA: MIT Press, 1999.

[4] J. Alexandersson and P. Poller, “Toward multilingual protocol generation for spontaneous dialogues,” in Proc. INLG-98, Niagara-on-the-Lake, Canada, 1998.

[5] K. Zechner and A. Waibel, “Minimizing word error rate in textual summaries of spoken language,” in Proc. NAACL, Seattle, WA, 2000.

[6] J. S. Garofolo, E. M. Voorhees, C. G. P. Auzanne, and V. M. Stanford, “Spoken document retrieval: 1998 evaluation and investigation of new metrics,” in Proc. ESCA Workshop: Accessing Information in Spoken Audio, Cambridge, MA, 1999, pp. 1–7.

[7] R. Valenza, T. Robinson, M. Hickey, and R. Tucker, “Summarization of spoken audio through information extraction,” in Proc. ISCA Workshop on Accessing Information in Spoken Audio, Cambridge, MA, 1999, pp. 111–116.

[8] K. Koumpis and S. Renals, “Transcription and summarization of voicemail speech,” in Proc. ICSLP 2000, 2000, pp. 688–691.

[9] K. Maekawa, H. Koiso, S. Furui, and H. Isahara, “Spontaneous speech corpus of Japanese,” in Proc. LREC 2000, Athens, Greece, 2000, pp. 947–952.

[10] Y. Stylianou, O. Cappé, and E. Moulines, Eds., Multimodal Signal Processing: Theory and Applications for Human-Computer Interaction. San Diego, CA: Academic Press, 2009.