Intelligent Transportation System Based On Machine Learning For Vehicle Perception

Harshilsinh Rana¹, Dr. Dipesh Makwana²

¹Student, Dept. of I.C. Engineering, L.D. College of Engineering, Gujarat, India

²Associate Professor, Dept. of I.C. Engineering, L.D. College of Engineering, Gujarat, India

Abstract - The term "intelligent transportation systems," or ITS for short, refers to a group of services and applications that include, among others, driverless vehicles, traveler information systems, and the management of public transit systems. Future smart cities and urban planning are predicted to rely heavily on ITS, which will improve transportation and transit efficiency, road and traffic safety, and energy efficiency, and will help reduce environmental pollution. However, ITS poses a number of difficulties because of its scalability, its wide range of quality-of-service requirements, and the enormous amount of data it will produce. In this study, we investigate how machine learning (ML), a field that has recently attracted a lot of attention, can make ITS possible. We offer a comprehensive analysis of the current state of the art from multiple perspectives, grouped into ML-driven ITS supporting tasks, namely perception, prediction, and management; we review how ML technology has been applied to a variety of ITS applications and services, such as cooperative driving and road hazard warning; and we identify future directions for how ITS might more fully utilize and benefit from ML technology.

Key Words: Intelligent transportation system, Perception tasks, Machine Learning, Road safety, Privacy and security

1. INTRODUCTION

The use of information, communication, and sensing technologies in transportation and transit systems is commonly referred to as "intelligent transportation systems," or ITS for short [1]. ITS is anticipated to be a key element of future smart cities, and it will likely comprise a range of services and applications, including autonomous vehicles, traveler information systems, and the management of public transit systems, to mention a few [2]. ITS services are expected to contribute substantially to higher energy efficiency, reduced environmental pollution, improved road and traffic safety, and greater transportation and transit efficiency. Remarkable developments in sensing, computation, and wireless communication technology have made ITS applications possible; however, because of their scalability and range of quality-of-service requirements, in addition to the enormous amounts of data they will produce, these applications will present a number of obstacles.

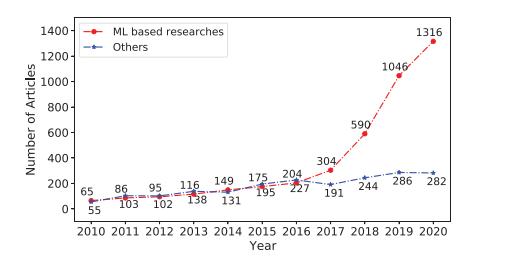

In parallel, cloud and edge computing, along with other technologies, have helped machine learning (ML) techniques gain a lot of popularity. ML has been incorporated into a wide range of applications that, like ITS services, have diverse requirements. ML technologies such as deep learning and reinforcement learning have been particularly helpful for discovering patterns and underlying structures in massive datasets, for prediction and precise decision making [3-5], and for vehicular cybersecurity [6]. According to statistics on scientific publications, the number of research initiatives leveraging ML to enable and optimize ITS tasks has clearly increased over the past 10 years (see Chart 1).

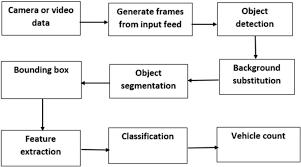

Chart 1 shows the number of publications on ITS that include machine learning approaches. The proposed system comprises several functions, namely vehicle detection, identification, classification, and speed detection, and a different algorithm is used for each of them. This work aims to present a precise and useful method for counting moving vehicles that can be applied in complex traffic situations. To find a moving vehicle and obtain a foreground image, techniques such as adaptive background subtraction and morphological operations are used.

Chart -1: Number of publications on ITS, including ML-based approaches, from 2010 to 2020

The following paragraphs give some background on computer vision and OpenCV. Computer vision is a branch of artificial intelligence (AI) whose goal is to enable machines to analyze and comprehend visual data from their environment, that is, to see and understand the visual world. Object identification and recognition, image and video analysis, autonomous vehicles, and medical image analysis are just a few of its many applications.

OpenCV (Open Source Computer Vision Library) is an open-source library for real-time computer vision applications. It was initially built by Intel and is now maintained by a multinational group of developers. OpenCV offers a set of tools and functions for a variety of computer vision tasks, including image and video processing, object detection and recognition, feature detection and matching, and machine learning. It supports Python, C++, Java, and other programming languages.

OpenCV is widely used in both research and industry for numerous computer vision applications, such as facial recognition, gesture recognition, autonomous driving, and robotics. It offers a wide range of features, including image and video processing, feature extraction, object recognition and tracking, and machine learning. It also has a sizable community of users and developers who contribute to the library and provide help and resources so that others can use it efficiently.

2. RELATED WORK

This section briefly reviews the existing literature on vehicle detection, counting, classification, identification, and speed detection using OpenCV, and compares the techniques and approaches used by various researchers.

Vehicle detection is an essential problem in many applications, including autonomous driving, parking management, and traffic monitoring. Three common approaches to vehicle detection with OpenCV are Haar cascades, HOG + SVM, and YOLO. A cascade classifier with Haar-like features is a fast way to detect automobiles, but it may not be effective in difficult scenes or for small vehicles. HOG + SVM uses Histogram of Oriented Gradients (HOG) features and a Support Vector Machine (SVM) to recognize cars and performs well under a variety of illumination conditions, but its computational cost can be high. YOLO is a deep learning method in which a neural network identifies automobiles in a single pass. It can recognize small vehicles and performs well in real-time applications, but it may struggle with occluded or dimly lit vehicles. The best choice depends on the requirements and limitations of the application, such as the desired speed, accuracy, and complexity.

Vehicle counting is another crucial task in many contexts, including traffic control and congestion management. The two primary methods for vehicle counting with OpenCV are background subtraction and optical flow. Background subtraction is a simple and fast method that uses a background model to find and count moving cars, but it may not be effective for overlapping vehicles or complex scenes. Optical flow estimates the motion of the vehicles, which can then be counted, and is useful for real-time applications, though it may not be effective for stationary or slowly moving automobiles. The particular requirements and constraints of the application, such as the desired accuracy, speed, and robustness, must be taken into consideration when choosing the best strategy.

Vehicle classification is the practice of assigning vehicles to categories such as cars, trucks, and buses. With OpenCV, vehicles can be classified using a variety of features, such as color, shape, and texture. Color features offer a quick and easy way to categorize automobiles, but they may not be precise under varying lighting conditions. Shape features classify vehicles by their geometric shape; they are more accurate than color features but can be computationally expensive. Texture features classify cars by their texture patterns, which works well for different vehicle types but may not function well in complicated scenes. The best method for vehicle classification depends on the requirements of the particular application.

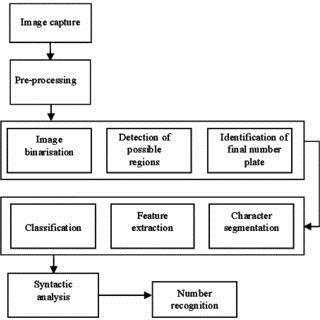

Vehicle identification and speed detection are further crucial tasks, notably for traffic management and law enforcement. Vehicles are usually identified with OpenCV in two steps: license plate detection and license plate recognition. License plate detection locates plates using image processing methods such as edge detection and morphological operations; it works well in varied lighting but may not be effective for damaged or concealed plates. License plate recognition then uses optical character recognition (OCR) to identify the characters on the plate; it performs well on clearly readable plates but may be imprecise for deformed or blurry characters. Two major approaches to measuring vehicle speed are optical flow and GPS tracking. Optical flow, available in OpenCV, evaluates the motion of vehicles in real time to determine their speed, but it may not be precise for stationary or slowly moving vehicles. GPS tracking, in contrast, relies on GPS technology rather than video to track vehicles and determine their speed; it is more precise than optical flow but may not function properly in locations with weak GPS signals. The best method for vehicle identification and speed detection depends on the particular application's needs and restrictions, such as the desired accuracy, speed, and availability of hardware and sensors.

3. METHODOLOGY

This section briefly describes vehicle detection, classification, identification, and speed detection, starting with vehicle detection.

3.1 Vehicle Detection

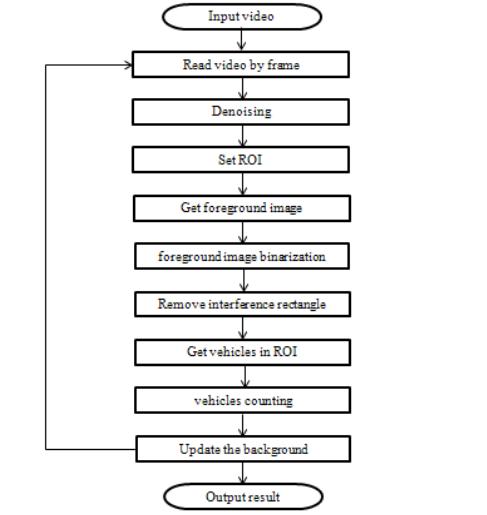

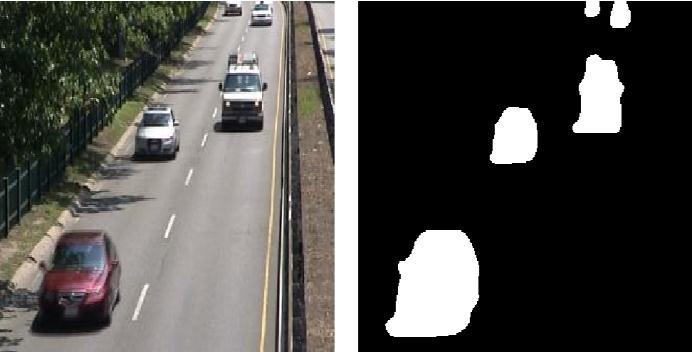

Background subtraction is first used to identify pixels that may belong to a moving vehicle in the foreground. The current video frame is converted from a color (RGB) image to a grayscale image, and a background image of the street that contains no cars is used as the reference [7]. The grey intensity of the background image is then subtracted from that of the current frame at every pixel (x, y), and the absolute result is stored at the same position in a new image, known as the difference image.
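The per-pixel subtraction can be sketched in plain Python as follows. This is an illustration only: grayscale frames are assumed to be lists of rows of 0-255 integers, the grey conversion uses the ITU-R BT.601 weights (the ones OpenCV documents for its RGB-to-gray conversion), and the binarization threshold `THRESHOLD = 30` is a hypothetical value not given in the paper. With OpenCV the same steps are usually written with `cv2.cvtColor`, `cv2.absdiff` and `cv2.threshold`.

```python
# Hypothetical threshold separating "changed" pixels from sensor noise.
THRESHOLD = 30


def to_gray(pixel_rgb):
    """Convert one (R, G, B) pixel to grayscale with BT.601 weights."""
    r, g, b = pixel_rgb
    return round(0.299 * r + 0.587 * g + 0.114 * b)


def difference_image(background, frame):
    """Absolute per-pixel difference |frame(x, y) - background(x, y)|."""
    return [[abs(f - b) for f, b in zip(frow, brow)]
            for frow, brow in zip(frame, background)]


def foreground_mask(diff, threshold=THRESHOLD):
    """Mark pixels whose difference exceeds the threshold as foreground (1)."""
    return [[1 if d > threshold else 0 for d in row] for row in diff]


if __name__ == "__main__":
    background = [[100, 100, 100],
                  [100, 100, 100]]
    frame = [[100, 180, 100],
             [95, 20, 100]]
    diff = difference_image(background, frame)
    print(diff)                   # [[0, 80, 0], [5, 80, 0]]
    print(foreground_mask(diff))  # [[0, 1, 0], [0, 1, 0]]
```

Taking the absolute value means a vehicle darker than the road (the 20 above) is detected just as well as a brighter one (the 180).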

To obtain the foreground mask, background subtraction, which takes the difference between the current frame and the background frame, is used for detection. This makes it easier to distinguish moving objects in videos. To reduce noise and fill gaps in the objects, the foreground mask is then processed with morphological operations, including dilation and closing. The contours recovered from the processed mask are next examined to see whether they match a vehicle.

If a contour resembles a vehicle (i.e., it meets certain established criteria such as a minimum width and height), a bounding box is drawn around it in the video frame, and the center of the bounding box is added to the list of detected cars.

A region of interest (ROI) is selected for further processing, and zones are isolated within the ROI; each zone is treated differently in the steps that follow. In addition, a second zone, known as the virtual detection zone, is established for vehicle counting [8]. The virtual detection zone lies within the zones that make up the ROI. The ROI is determined implicitly by the upper and lower bounds on the y-coordinate where vehicles are expected to appear: the variables uplimit and downlimit specify the top and bottom limits of the ROI, respectively. Contours found outside the ROI are filtered out using these values, and only contours whose centroid falls within the ROI's bounds are considered prospective vehicles and sent to the vehicle tracking module.
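The two filters described above, a minimum bounding-box size followed by a centroid test against the ROI limits, can be sketched as below. Bounding boxes are assumed to be `(x, y, w, h)` tuples such as those returned by `cv2.boundingRect`; the constants `MIN_WIDTH`, `MIN_HEIGHT`, `UPLIMIT` and `DOWNLIMIT` mirror the paper's criteria and its uplimit/downlimit variables, but their values are hypothetical because the paper does not state them.

```python
# Hypothetical detection criteria in pixels; the paper does not give values.
MIN_WIDTH = 40
MIN_HEIGHT = 40
UPLIMIT = 200     # top y-limit of the ROI
DOWNLIMIT = 400   # bottom y-limit of the ROI


def centroid(box):
    """Integer centre (cx, cy) of an (x, y, w, h) bounding box."""
    x, y, w, h = box
    return x + w // 2, y + h // 2


def is_vehicle(box, min_w=MIN_WIDTH, min_h=MIN_HEIGHT):
    """A contour resembles a vehicle if its box meets the minimum size."""
    _, _, w, h = box
    return w >= min_w and h >= min_h


def vehicles_in_roi(boxes, up=UPLIMIT, down=DOWNLIMIT):
    """Keep vehicle-sized boxes whose centroid lies between the ROI limits."""
    detected = []
    for box in boxes:
        if not is_vehicle(box):
            continue                    # too small: most likely noise
        cx, cy = centroid(box)
        if up <= cy <= down:            # centroid inside the ROI bounds
            detected.append((box, (cx, cy)))
    return detected


if __name__ == "__main__":
    boxes = [(10, 250, 80, 60),   # vehicle-sized, inside the ROI
             (10, 250, 15, 10),   # too small
             (10, 50, 80, 60)]    # vehicle-sized, above the ROI
    print(vehicles_in_roi(boxes))  # [((10, 250, 80, 60), (50, 280))]
```

Testing the centroid rather than the whole box means a vehicle is handed to the tracker as soon as its centre enters the ROI, even if part of the box still lies outside.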

Fig -2: Detection of Vehicles

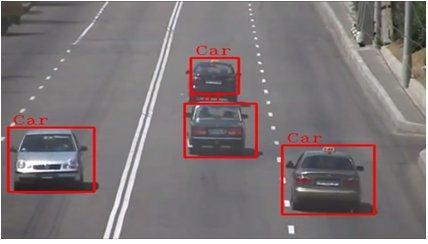

3.2 Vehicle Classification

Vehicle classification and counting using OpenCV is the computer vision task of detecting, classifying, and counting the cars moving through a specific area of interest in a video stream. To separate moving objects from the background, the algorithm first applies a background subtraction technique. The resulting binary image is then subjected to morphological operations to eliminate noise and fill gaps.

The contours of the binary image are then retrieved, and each contour is examined by its area to see whether it matches a vehicle. If a contour is recognized as belonging to a vehicle, its centroid is extracted and tracked over time to establish its course. The system can also categorize vehicles by size and shape, for instance separating cars from lorries. Finally, the number of vehicles passing through the area of interest is determined by whether they cross an invisible (virtual) line on the screen while travelling in a specific direction.
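Classification by size can be as simple as thresholding the contour area. The sketch below is a hypothetical illustration rather than the paper's classifier: the class names and the `MIN_VEHICLE_AREA` / `CAR_MAX_AREA` pixel thresholds are assumptions that would have to be calibrated for a given camera, and with OpenCV the area would typically come from `cv2.contourArea`.

```python
from collections import Counter

# Hypothetical area thresholds in pixels; they depend on camera height and zoom.
MIN_VEHICLE_AREA = 1500   # smaller blobs are treated as noise
CAR_MAX_AREA = 6000       # larger blobs are treated as lorries


def classify_by_area(area):
    """Map a contour area to a coarse vehicle class, or None for noise."""
    if area < MIN_VEHICLE_AREA:
        return None
    if area <= CAR_MAX_AREA:
        return "car"
    return "lorry"


def count_classes(areas):
    """Count vehicles per class, ignoring blobs classified as noise."""
    return Counter(c for c in map(classify_by_area, areas) if c is not None)


if __name__ == "__main__":
    print(count_classes([800, 3200, 9000, 4000]))
```

Area alone cannot separate classes that overlap in size (a van close to the camera may look as large as a distant lorry), which is why shape features are also mentioned above.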

Fig -3: Proposed model of Vehicle Classification

3.3 Vehicle Identification

This module uses OpenCV to find license plates in a video stream. A trained classifier object is created from an XML file, a minimum area for license plates is set, and a color is specified for drawing rectangles around detected plates. The code then sets the desired width and height of the video frame.

For each frame, the detector returns a list of bounding boxes showing where each detected license plate is located. The code checks whether each rectangle's area exceeds the previously defined minimum area. If it does, the code draws a rectangle around the number plate and adds the label "Vehicle number Plate".
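The area check can be sketched as a small filter over the detector's output. The sketch assumes the detections are `(x, y, w, h)` boxes, as returned by OpenCV's `CascadeClassifier.detectMultiScale`; the `MIN_AREA` value is hypothetical, and the drawing calls (`cv2.rectangle`, `cv2.putText`) are only mentioned in a comment so that the example runs without OpenCV.

```python
MIN_AREA = 500                  # hypothetical minimum plate area in pixels
LABEL = "Vehicle number Plate"  # label text used in the paper


def plates_to_draw(detections, min_area=MIN_AREA):
    """Return (box, label) pairs for detections large enough to be plates."""
    accepted = []
    for (x, y, w, h) in detections:
        if w * h > min_area:    # discard tiny, likely false, detections
            # With OpenCV one would draw here, e.g. cv2.rectangle(frame, ...)
            # followed by cv2.putText(frame, LABEL, ...).
            accepted.append(((x, y, w, h), LABEL))
    return accepted


if __name__ == "__main__":
    print(plates_to_draw([(40, 60, 120, 30), (5, 5, 10, 8)]))
    # [((40, 60, 120, 30), 'Vehicle number Plate')]
```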

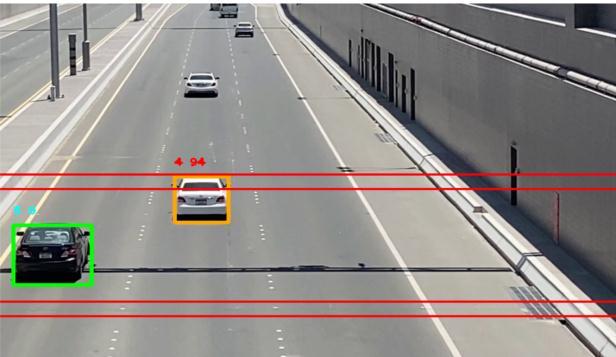

3.4 Vehicle Speed Detection

This module implements object tracking on video footage using the OpenCV library. The code detects moving objects in a given region of interest (ROI) using background subtraction and morphological operations.

The detected objects are then tracked with a Euclidean distance tracker. The speed of each tracked object is calculated and compared with a speed limit; if the object is moving faster than the limit, it is marked as overspeeding and its rectangle is drawn in red. Objects are tracked across frames and keep their IDs, so that individual objects can be followed. The code also draws lines on the ROI to separate the lanes of the road. In summary, it uses the MOG2 algorithm for object detection and the Euclidean distance technique for object tracking, and draws bounding boxes around the detected and tracked objects. It also counts how many vehicles enter and exit the detection zone, which is helpful for monitoring and analyzing traffic.

The speed of each tracked vehicle is determined by using the distance formula to compute how far its centroid moves between two successive frames. The interval between frames is fixed and known from the video's frame rate, so the vehicle's speed is obtained by dividing the distance travelled by the elapsed time.
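The speed computation reduces to distance over time, as in the sketch below. The frame rate `fps` sets the interval between frames (1/fps seconds). The `metres_per_pixel` scale that converts image distance to road distance is a hypothetical calibration factor: the paper does not say how pixel distances are mapped to real-world units.

```python
import math


def speed_kmh(prev_centroid, curr_centroid, fps, metres_per_pixel,
              frames_elapsed=1):
    """Estimate speed in km/h from a centroid's displacement between frames."""
    dx = curr_centroid[0] - prev_centroid[0]
    dy = curr_centroid[1] - prev_centroid[1]
    pixels = math.hypot(dx, dy)          # Euclidean distance in pixels
    metres = pixels * metres_per_pixel   # hypothetical calibration
    seconds = frames_elapsed / fps       # fixed interval between frames
    return metres / seconds * 3.6        # m/s -> km/h


if __name__ == "__main__":
    # 10 px in one frame at 25 fps with 0.05 m/px: 0.5 m in 0.04 s,
    # i.e. 12.5 m/s or about 45 km/h.
    print(speed_kmh((100, 200), (106, 208), fps=25, metres_per_pixel=0.05))
```

A single metres-per-pixel factor assumes a roughly top-down view; with a perspective camera the scale varies across the image and a homography would normally be used instead.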

The cars are then divided into two groups, speeding or not speeding, based on the estimated speed. The classification threshold is the maximum speed limit in the area of interest. A vehicle whose speed exceeds the threshold is considered to be speeding and is marked with a red bounding box; otherwise, it is marked with a green bounding box.
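The two-way split amounts to a single comparison. In the sketch below, `SPEED_LIMIT` takes the fixed limit of 30 mentioned in Section 5, with its unit assumed to match the computed speed; the colours are (B, G, R) tuples because OpenCV drawing functions expect BGR order. The function name is hypothetical.

```python
SPEED_LIMIT = 30        # limit from Section 5; unit assumed to match speed
RED = (0, 0, 255)       # BGR colour for speeding vehicles
GREEN = (0, 255, 0)     # BGR colour for vehicles within the limit


def speed_status(speed, limit=SPEED_LIMIT):
    """Return (is_speeding, box_colour) for an estimated speed."""
    speeding = speed > limit              # strictly above the threshold
    return speeding, (RED if speeding else GREEN)


if __name__ == "__main__":
    for v in (25.0, 30.0, 42.5):
        print(v, speed_status(v))
```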

4. DETAILED ALGORITHMS

The vehicle detection, counting, identification, classification, and speed detection algorithms involve several computer vision techniques and image processing operations. Here is a breakdown of the algorithms used:

Fig -4: Vehicle Number Plate Identification model

In the license plate module, each frame of the video is read in a while loop and converted to grayscale. The trained classifier's detection function is then applied to the grayscale frame to find license plates; it uses a sliding-window technique to find objects of various sizes in an image.

4.1 Background Subtraction

In computer vision and image processing, background subtraction is used to distinguish foreground objects from the background in an image or video. The fundamental idea is to obtain the foreground by subtracting a model of the background from the current frame. The mathematical ideas and procedures involved are explained below. A standard technique for building a background model is to average the frames over a predetermined period of time. Let B(x, y) be the background model at pixel (x, y):

$$B(x,y) = \frac{1}{N}\sum_{i=1}^{N} F(x,y,i)$$

where N is the total number of frames used to build the background model and F(x, y, i) is the value of the pixel at position (x, y) in frame i.
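The averaging formula translates directly into code. The sketch below assumes each frame is a grayscale list of rows of numbers, indexed as `frame[y][x]`, and computes B(x, y) as the per-pixel mean of the N frames. In practice OpenCV users often maintain a running average instead (for example with `cv2.accumulateWeighted`), which this sketch does not show.

```python
def mean_background(frames):
    """B(x, y) = (1/N) * sum_{i=1..N} F(x, y, i) for N equally sized frames."""
    n = len(frames)
    if n == 0:
        raise ValueError("need at least one frame")
    rows, cols = len(frames[0]), len(frames[0][0])
    background = [[0.0] * cols for _ in range(rows)]
    for frame in frames:                  # accumulate F(x, y, i) over i
        for y in range(rows):
            for x in range(cols):
                background[y][x] += frame[y][x]
    return [[value / n for value in row] for row in background]


if __name__ == "__main__":
    frames = [[[10, 20]], [[30, 20]], [[20, 50]]]   # N = 3 frames of 1x2 px
    print(mean_background(frames))                 # [[20.0, 30.0]]
```

A plain mean is only a good model if vehicles occupy each pixel in a small fraction of the N frames; otherwise they leave "ghosts" in B, one reason adaptive models such as MOG are preferred.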

If a background image is already available, such as an image of a building or an empty road, background subtraction can be simple: the background image is subtracted and the foreground objects remain. This is not always the case, however. The original information about the scene may not be available, or the background may be dynamic.

Shadows make background subtraction more challenging still: because they move along with people and vehicles, conventional background subtraction mistakes them for foreground objects.

For scenarios like these, a number of algorithms have been developed, and some of them are implemented in OpenCV. BackgroundSubtractorMOG [13] models the image background with a mixture of Gaussian distributions, using three to five Gaussians per pixel. BackgroundSubtractorGMG [12], another background subtraction method implemented in OpenCV, combines statistical background image estimation with per-pixel Bayesian segmentation.

4.2 Morphological Operations:

Morphological operations such as dilation and closing are commonly used to process binary images obtained from background subtraction or other image processing methods. Their mathematical formulas are given below, along with an explanation.

Dilation is a morphological operation that adds pixels to the edges of objects in an image. The basic idea is to scan the image with a structuring element (a small binary matrix) and set each pixel value to 1 if any of the structuring element's pixels overlap a pixel belonging to an object. The size and shape of the structuring element determine the extent of the dilation. For a binary image M and structuring element B:

$$M_{\text{dilated}}(x,y) = \bigvee_{(i,j)\in B} M(x+i,\, y+j)$$

Closing is a morphological operation that fills small gaps within an object by combining dilation and erosion: the image is first dilated with a structuring element, and the result is then eroded with the same structuring element. The small holes are removed while the object's overall shape is maintained.

Mathematically, the closing of a binary image M with a structuring element B is defined as:

$$M_{\text{closed}} = (M \oplus B) \ominus B$$

where $M \oplus B$ denotes the dilation of M by the structuring element B (that is, $M_{\text{dilated}}$ above) and $\ominus B$ denotes erosion of the result by the same structuring element B.

The closing operation is especially helpful when preprocessing binary images for further analysis such as connected component labelling or shape analysis, because the binary mask produced by thresholding may contain noise and gaps that need to be eliminated.

4.3 Contour Detections:

Contours are the boundaries of a shape and are used to identify and recognize shapes. Canny edge detection performed on a binary image can improve the accuracy of the contour-finding procedure. OpenCV provides the cv2.findContours() method to find contours.

Contour detection typically involves locating parts of the image that contain edges or intensity changes and linking those areas into continuous curves or contours that outline the objects in the image. Several algorithms can be used for this, including the Laplacian of Gaussian (LoG) filter, the Sobel filter, and the Canny edge detector.

Once identified, the contours can be processed further, for example to extract parameters such as area, perimeter, and orientation, or to identify specific objects by their shape or texture. Contour detection is a fundamental computer vision method that underlies many sophisticated algorithms used in robotics, autonomous cars, and medical imaging.
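To make the chain of Sections 4.2 and 4.3 concrete, the sketch below applies the dilation and closing formulas to a small binary mask and then extracts the resulting blobs with their area and bounding box. It is a simplified stand-in, not OpenCV's implementation: a 3×3 square structuring element is assumed, pixels outside the image are simply ignored, and 8-connected component labelling replaces the border-following algorithm that `cv2.findContours` uses.

```python
from collections import deque

# 3x3 square structuring element B, as (row, col) offsets around the origin.
B = [(i, j) for i in (-1, 0, 1) for j in (-1, 0, 1)]


def _morph(mask, combine):
    """Apply combine() to the in-image neighbours selected by B."""
    h, w = len(mask), len(mask[0])
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            vals = [mask[y + i][x + j] for i, j in B
                    if 0 <= y + i < h and 0 <= x + j < w]
            out[y][x] = 1 if combine(vals) else 0
    return out


def dilate(mask):
    """M_dilated(x, y) = OR over (i, j) in B of M(x + i, y + j)."""
    return _morph(mask, any)


def erode(mask):
    """Erosion: a pixel stays 1 only if every neighbour under B is 1."""
    return _morph(mask, all)


def close(mask):
    """Closing: dilation followed by erosion with the same B."""
    return erode(dilate(mask))


def blobs(mask):
    """Return (area, (x, y, w, h)) for each 8-connected region of 1s."""
    h, w = len(mask), len(mask[0])
    seen = [[False] * w for _ in range(h)]
    found = []
    for sy in range(h):
        for sx in range(w):
            if mask[sy][sx] != 1 or seen[sy][sx]:
                continue
            queue, pixels = deque([(sy, sx)]), []
            seen[sy][sx] = True
            while queue:                       # breadth-first flood fill
                y, x = queue.popleft()
                pixels.append((y, x))
                for i, j in B:
                    ny, nx = y + i, x + j
                    if (0 <= ny < h and 0 <= nx < w
                            and mask[ny][nx] == 1 and not seen[ny][nx]):
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            ys = [p[0] for p in pixels]
            xs = [p[1] for p in pixels]
            box = (min(xs), min(ys),
                   max(xs) - min(xs) + 1, max(ys) - min(ys) + 1)
            found.append((len(pixels), box))
    return found


if __name__ == "__main__":
    mask = [[0] * 9 for _ in range(5)]
    for x in (2, 3, 5, 6):          # a bar broken by a one-pixel gap at x = 4
        mask[2][x] = 1
    print(blobs(mask))              # [(2, (2, 2, 2, 1)), (2, (5, 2, 2, 1))]
    print(blobs(close(mask)))       # [(5, (2, 2, 5, 1))]
```

The example shows why closing precedes contour extraction: without it, one vehicle split by a one-pixel gap would be reported as two small blobs.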

4.4 Euclidean Distance Algorithm

The Euclidean distance algorithm is a mathematical technique used in computer vision to calculate the separation between two points in two-dimensional space. It is commonly used to measure the similarity between two images or to match features between them.

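For two points $(x_1, y_1)$ and $(x_2, y_2)$, the distance is

$$d = \sqrt{(x_2 - x_1)^2 + (y_2 - y_1)^2}$$

For example, the distance between the points (a, b) and (-a, -b) is

$$d = \sqrt{(-a-a)^2 + (-b-b)^2} = \sqrt{4a^2 + 4b^2} = 2\sqrt{a^2 + b^2}$$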

When an object is being tracked, the Euclidean distance algorithm is applied to the centroids of the bounding boxes in successive frames. If the distance between two centroids is less than a predetermined threshold, the two bounding boxes are taken to represent the same object. By comparing the positions of the bounding boxes between frames, the algorithm can determine the object's speed and direction of motion.
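A minimal centroid tracker in this spirit is sketched below. The class and method names (`CentroidTracker`, `update`) and the 25-pixel matching threshold are hypothetical, not the paper's code. Each new box is matched greedily to the nearest previously seen centroid that lies within the threshold and has not already been matched in the current frame; otherwise it receives a new ID. Objects that are not seen in a frame are forgotten, and no optimal one-to-one assignment is attempted.

```python
import math


class CentroidTracker:
    """Greedy nearest-centroid tracker using Euclidean distance."""

    def __init__(self, max_distance=25.0):
        self.max_distance = max_distance   # hypothetical threshold in pixels
        self.centroids = {}                # object id -> last centroid
        self.next_id = 0

    def update(self, boxes):
        """Take (x, y, w, h) boxes; return (x, y, w, h, object_id) tuples."""
        tracked, new_centroids = [], {}
        for x, y, w, h in boxes:
            cx, cy = x + w // 2, y + h // 2
            best_id, best_dist = None, self.max_distance
            for obj_id, (px, py) in self.centroids.items():
                if obj_id in new_centroids:
                    continue                   # already matched this frame
                dist = math.hypot(cx - px, cy - py)
                if dist < best_dist:           # closer than threshold/best
                    best_id, best_dist = obj_id, dist
            if best_id is None:                # no close match: new object
                best_id = self.next_id
                self.next_id += 1
            new_centroids[best_id] = (cx, cy)
            tracked.append((x, y, w, h, best_id))
        self.centroids = new_centroids         # drop objects not seen now
        return tracked


if __name__ == "__main__":
    tracker = CentroidTracker()
    print(tracker.update([(100, 100, 40, 20)]))
    print(tracker.update([(110, 104, 40, 20), (300, 50, 40, 20)]))
```

In the second frame the first box moves about 10.8 px and keeps ID 0, while the distant box gets the new ID 1. The stored centroids are also what a speed estimate would be computed from.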

5. SIMULATION RESULTS

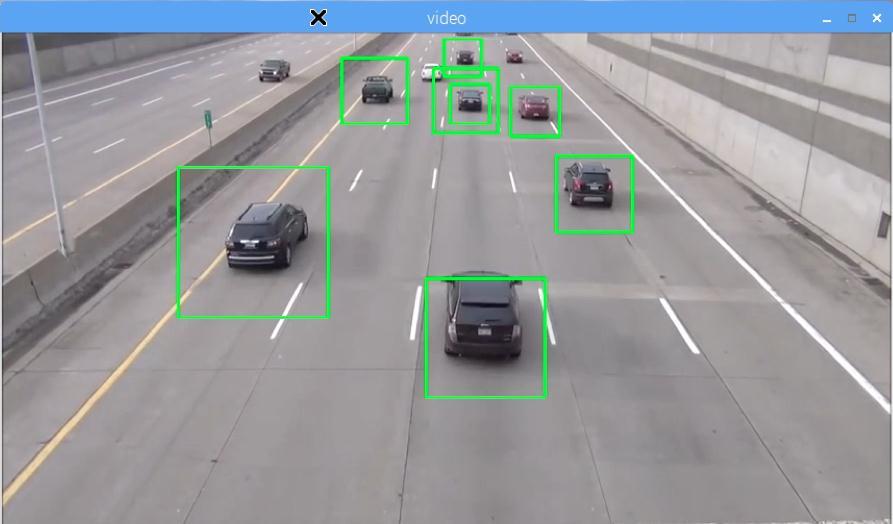

The proposed system is implemented in Python using the OpenCV bindings. Moving objects are first segmented with a background subtraction technique, after which binary thresholding and morphological operations reduce noise and improve the object shapes.

The outlines of the segmented objects are then extracted, and centroid tracking is used to follow them across frames. The code assigns an individual ID to each object and records its position and age.

The code then creates two virtual lines on the screen and counts the number of vehicles that cross them. Each crossing is recorded as upward or downward according to the line crossed and the object's direction of movement.
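One way to implement such counting is to compare each object's previous and current centroid y-coordinate with the line positions. The sketch below is an assumption about how the counting could work, not the paper's code. The line positions `LINE_UP` and `LINE_DOWN` and the class name are hypothetical. Image y grows downward: an object counts as moving up when its centroid crosses the top line from below, and as moving down when it crosses the bottom line from above. Each object ID is counted at most once.

```python
LINE_UP = 250     # hypothetical y of the top counting line (pixels)
LINE_DOWN = 350   # hypothetical y of the bottom counting line (pixels)


class LineCounter:
    """Count upward and downward crossings of two virtual lines."""

    def __init__(self, line_up=LINE_UP, line_down=LINE_DOWN):
        self.line_up, self.line_down = line_up, line_down
        self.last_y = {}      # object id -> previous centroid y
        self.counted = set()  # ids that have already been counted
        self.up = 0
        self.down = 0

    def update(self, object_id, cy):
        """Record an object's centroid y and count it if it crossed a line."""
        prev = self.last_y.get(object_id)
        self.last_y[object_id] = cy
        if prev is None or object_id in self.counted:
            return
        if prev >= self.line_up > cy:        # crossed top line moving up
            self.up += 1
            self.counted.add(object_id)
        elif prev <= self.line_down < cy:    # crossed bottom line moving down
            self.down += 1
            self.counted.add(object_id)


if __name__ == "__main__":
    counter = LineCounter()
    tracks = {1: [300, 340, 360], 2: [300, 260, 240], 3: [200, 260]}
    for oid, ys in tracks.items():
        for y in ys:
            counter.update(oid, y)
    print(counter.up, counter.down)
```

Object 1 crosses the bottom line moving down and object 2 crosses the top line moving up; object 3 moves down past the top line, which this scheme deliberately does not count.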

The main loop reads frames from the video and uses background subtraction and morphological operations to recover the contours of moving objects. It then iterates over the contours to determine whether they satisfy the necessary conditions (such as area) to be classified as a vehicle. For each detected vehicle, it determines whether it is a new vehicle or an existing one and updates the position of existing vehicles. It also counts the vehicles that cross the detection lines and updates their direction of movement.

The code creates an instance of the EuclideanDistanceTracker class, which keeps track of objects between frames. To find objects in the video, it then creates a background subtractor object with the createBackgroundSubtractorMOG2 method.

Fig -6: Vehicle Classification Results

To concentrate on the area of interest, that is, the road on which the vehicles are travelling, the code extracts a region of interest (ROI) from the frame. The ROI is then masked using two distinct techniques. In the first, the object detector is applied to the ROI and the resulting mask is thresholded to reduce noise. The second, more involved technique applies morphological opening and closing to the foreground mask before eroding it. The algorithm then tracks the detected objects between successive frames with the EuclideanDistanceTracker object. Each object is given an ID, and its position and speed are updated from its prior position. A fixed speed limit of 30 applies to every object; if an object exceeds it, the code flags it as speeding by highlighting its ID in red.
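The first masking technique, cropping the ROI and thresholding the detector's mask, can be sketched in plain Python as below. The ROI bounds are hypothetical. The threshold of 254 is an assumption motivated by documented OpenCV behaviour: with shadow detection enabled, the subtractor returned by `createBackgroundSubtractorMOG2` marks foreground pixels with 255 and shadow pixels with a grey value (127 by default), so keeping only values above 254 discards shadows as well as weak noise. With OpenCV the thresholding step is typically `cv2.threshold(mask, 254, 255, cv2.THRESH_BINARY)`.

```python
# Hypothetical ROI bounds in pixels: rows y1:y2 and columns x1:x2.
ROI = (1, 3, 0, 4)


def crop(image, roi=ROI):
    """Return the region of interest, like image[y1:y2, x1:x2] in NumPy."""
    y1, y2, x1, x2 = roi
    return [row[x1:x2] for row in image[y1:y2]]


def binarize(mask, thresh=254, max_value=255):
    """Keep only pixels above thresh (drops 127-valued MOG2 shadow pixels)."""
    return [[max_value if v > thresh else 0 for v in row] for row in mask]


if __name__ == "__main__":
    mog2_mask = [[0, 0, 0, 0],
                 [0, 255, 127, 0],
                 [0, 255, 255, 0],
                 [0, 0, 0, 0]]
    print(binarize(crop(mog2_mask)))   # [[0, 255, 0, 0], [0, 255, 255, 0]]
```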

Other methods can also be used for vehicle speed detection. One such method is optical flow, which estimates the motion of objects in a sequence of images. Optical flow can be used to calculate the speed of vehicles by analyzing the change in a vehicle's position between consecutive frames. However, it can be sensitive to lighting changes and object occlusion, and it can suffer from inaccuracies in object detection.

Radar and LIDAR offer another approach to vehicle speed detection. These sensors use radio waves or laser pulses to measure the distance and speed of objects. They are highly accurate and can be used in various weather and lighting conditions. However, the sensors can be expensive, and they require specialized equipment and installation.

In comparison, the Euclidean distance algorithm is a simple and efficient method for vehicle speed detection. It uses object tracking to estimate a vehicle's speed from the change in its position between consecutive frames. It does not require specialized equipment and can be implemented with standard cameras. It can also be less sensitive to lighting changes and object occlusion than optical flow. However, its accuracy depends on the accuracy of the object detection algorithm and on the choice of distance metric used in the tracking algorithm. Overall, the Euclidean distance algorithm provides a cost-effective and practical solution for vehicle speed detection in various applications.

Fig -7: Results of vehicle speed detection

6. CONCLUSION

In this paper, we have implemented the proposed system in Python using OpenCV. Computer vision techniques are used to detect vehicles and to count the vehicles passing along a particular street, using highway videos as input. The vehicles are recognized and counted when they pass into the virtual detection zone. In particular, we have discussed the implementation of an object tracking method based on Euclidean distance. To improve tracking accuracy, we have also covered a variety of object detection and background subtraction approaches, and shown how these strategies can be used in practice to track moving objects in a traffic video. In conclusion, implementing effective and precise tracking systems requires a solid understanding of computer vision concepts; object tracking is a complex field with many methodologies and algorithms.

7. REFERENCES

[1] Wang F-Y. Parallel control and management for intelligent transportation systems: concepts, architectures, and applications. IEEE 2010;11(3):630-638.

[2] Campolo C, Molinaro A, Scopigno R. From today's VANETs to tomorrow's planning and the bets for the day after. 2015;2(3):158-171.

[3] Mao Q, Hu F, Hao Q. Deep learning for intelligent wireless networks: a comprehensive survey. IEEE 2018;20(4):2595-2621.

[4] Zhang C, Patras P, Haddadi H. Deep learning in mobile and wireless networking: a survey. IEEE 2019;21(3):2224-2287.

[5] Luong NC, Hoang DT, Gong S, et al. Applications of deep reinforcement learning in communications and networking: a survey. arXiv:1810.07862; 2018.

[6] Pethő Z, Török Á, Szalay Z. A survey of new orientations in the field of vehicular cybersecurity, applying artificial intelligence based methods. 2021;e4325.

[7] Xiang X, Zhai M, Lv N, El Saddik A. Vehicle counting based on vehicle detection and tracking from aerial videos. Sensors 2018;18(8):2560.

[8] Veni SS, Hiremath AS, Patil M, Shinde M, Teli A. Video-based detection, counting and classification of vehicles using OpenCV. Available at SSRN 3769139; 2021.

[9] Kandalkar PA, Dhok GP. Image processing based vehicle detection and tracking system. IARJSET International Advanced Research Journal in Science, Engineering and Technology 2017;4(11).

[10] Hadi RA, Sulong G, George LE. Vehicle detection and tracking techniques: a concise review. arXiv preprint arXiv:1410.5894; 2014.

[11] Kamkar S, Safabakhsh R. Vehicle detection, counting and classification in various conditions. IET Intelligent Transport Systems 2016;10(6):406-413.

[12] Godbehere AB, Matsukawa A, Goldberg K. Visual tracking of human visitors under variable-lighting conditions for a responsive audio art installation. American Control Conference (ACC), IEEE; 2012. p. 4305-4312.

[13] KaewTraKulPong P, Bowden R. An improved adaptive background mixture model for real-time tracking with shadow detection. In: Video-based surveillance systems. Springer; 2002. p. 135-144.