Emotion Based Music Player System

Salvina Desai, Vrushali Paul, Sayali Jadhav, Pranita Gode, Roshani Bhaskarwar
Department of Information Technology, Datta Meghe College of Engineering, Airoli, Navi Mumbai, Maharashtra, India

Abstract - This paper proposes the implementation of an intelligent agent that segregates songs and plays them according to the user's current mood. The music that best matches the user's emotion is recommended as a playlist. Facial emotion recognition is a form of image processing: it converts the movements of a person's face into digital data using various image processing techniques and recognises the emotion the face expresses. The user's music collection is initially clustered according to the emotion each song conveys, which is determined from both the lyrics and the melody of the song, and the system then suggests an appropriate playlist. Every time the user wishes to generate a mood-based playlist, the user takes a picture of themselves at that instant. This image is subjected to face detection and emotion recognition techniques, which recognise the emotion of the user.

Key Words: music classification, music recommendation, emotion recognition, intelligent music player, face recognition, feature extraction

1. INTRODUCTION

Emotion recognition is a feature of artificial intelligence that is becoming more applicable for automatically performing processes that are relatively exhausting to perform manually. The human face is an essential part of the human body, especially when it comes to extracting a person's emotional state and behaviour according to the situation [1]. Recognising a person's mood or state of mind from the emotions they show is an important part of making systematic decisions that are best suited to that person in a diverse range of applications.

In day-to-day life, each person faces many problems, and music is one of the best helpers for the stress, anxiety, tension, and worry that are encountered [2]. Music plays a vital role in building up and enhancing the life of every individual because it is an important medium of entertainment for music lovers and listeners. With ever-increasing advancement in technology and multimedia, several music players have been developed with functions such as fast forward and reverse, variable playback speed (speeding up or slowing down the original speed of the audio), streaming playback, volume modulation, and genre classification. Although these functions satisfy the basic requirements of the user, the user still has to manually scroll through the playlist and choose songs based on their current mood and behaviour.

Imagine a world where humans interact with computers. You are sitting in front of your personal computer, which can listen and talk. It can gather information about you and interact with you through special techniques such as facial recognition and speech recognition. It can even understand your emotions at the touch of a mouse. It verifies your identity, senses your presence, and starts interacting with you. Listening to music should be made equally effortless; that is, the player should be able to play songs in accordance with the person's mood. People tend to reveal their emotions mainly through facial expressions. Capturing and recognising a person's emotions and displaying suitable songs for their mood can help calm the user's mind and has a satisfying effect on the user.

This project aims to capture the emotions expressed by a user's facial expressions; the music player is structured to capture a person's emotions through a webcam interface available on a computer. The software captures the user's image and then, using image segmentation and image processing techniques, extracts features from the person's face and tries to reveal the emotion that the person is trying to express. The aim of the project is to lighten the user's mood by playing songs that match the user's requirements, identified by capturing the user's emotion through the image. Since ancient times, facial expression recognition has been the best form of expression analysis known to mankind.

It will also help in the entertainment field by providing recommendations to an individual based on their current mood. We study this from the perspective of providing a person with customised music recommendations based on their state of mind as detected from their facial expressions [3]. Most music connoisseurs have extensive music collections that are often sorted only by parameters such as artist, album, genre, and number of times played. This leaves users with the arduous task of making mood-based playlists, i.e. finding music based on the emotion conveyed by the songs, which is much more essential to the listening experience than it often appears to be. This task only increases in complexity with larger music collections and consumes a lot of time; automating the process would save users the time and effort spent selecting songs manually, while improving their overall experience and allowing better enjoyment of the music. The proposed system recognises the emotion on the user's face and plays songs according to that emotion.

2. RELATED WORK

R. Ramanathan et al. proposed an emotion-based music player system whose first part is emotion recognition. The software captures the emotion of the person in the image taken by the webcam using various image processing and segmentation techniques, and extracts features from the person's face. An available dataset is used for training, and the trained model is then used for classification, clustering, and emotion recognition of faces. The system's next step entails categorising music and assigning a label to each song according to the emotion it conveys. Music and emotion have long been a subject of research. The first step in recognising a song's emotion is extracting the most suitable features from each song, starting with the generation of relevant datasets to obtain the most accurate results [1].

Charles Darwin was the first scientist to recognise that facial expression is one of the most powerful and immediate means for human beings to communicate their emotions, intentions, and opinions to each other [4]. Rosalind Picard (1997) describes why emotions are important to the computing community. There are two aspects to affective computing: giving the computer the ability to detect emotions and giving it the ability to express emotions. As Picard describes, not only are emotions crucial for rational decision-making, but emotion detection is also an important step towards an adaptive computer system. The goal of an adaptive, smart computer system has been driving our efforts to detect a person's emotional state. An important aim of incorporating emotion into computing is improving the productivity of the computer user.

In 2011, Ligang Zhang and Dian Tjondronegoro developed a facial emotion recognition (FER) system [5]. Using a dynamic 3D Gabor feature approach, they obtained the highest correct recognition rate (CRR) on the JAFFE database, and with the same approach their system was among the top performers on the Cohn-Kanade (CK) database. They demonstrated the effectiveness of the proposed approach through recognition performance, computational time, and comparison with state-of-the-art performance, and concluded that patch-based Gabor features perform better than point-based Gabor features in terms of extracting regional features, keeping position information, achieving better recognition performance, and requiring a smaller number of features.

According to the survey on expression recognition by R. A. Patil, Vineet Sahula, and A. S. Mandal of CEERI Pilani, the problem is divided into three subproblems: face detection, feature extraction, and facial expression classification. Most existing systems assume that the presence of a face in the scene is ensured, and therefore deal only with feature extraction and classification, assuming that the face has already been detected [6].

The algorithms we have selected for detecting emotions come from the papers "Image Edge Detection Algorithm Based on an Improved Canny Operator" (2012) [7] and "Rapid Object Detection Using a Boosted Cascade of Simple Features" by Viola and Jones [13]. The former proposes an improved Canny edge detection algorithm [7]. Because the traditional Canny algorithm has difficulty with images that contain salt-and-pepper noise, and cannot adapt the variance of its Gaussian filter, the paper presents a new Canny algorithm in which morphological open-close filtering is used instead of Gaussian filtering to preprocess the noisy image. The final edge image effectively reduces the influence of noise, keeps edge strength and more complete details, and gives a more satisfactory subjective result. On objective evaluation criteria (information entropy, average gradient, peak signal-to-noise ratio, correlation coefficient, and distortion degree), the improved operator also performs significantly better than the traditional Canny operator. The new algorithm is therefore an effective and practical method of edge detection. Automatic face recognition is about extracting meaningful features from an image, putting them into a useful representation, and performing some kind of classification on them. Face recognition based on the geometric features of a face is probably the most intuitive approach to face recognition [7].
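The open-close pre-filtering that replaces Gaussian smoothing in [7] can be sketched in a few lines. This is a minimal illustration only, assuming a 3x3 square structuring element and grayscale images stored as lists of rows; it covers the noise-removal stage, not the rest of the Canny pipeline, and does not reproduce the parameters of [7].

```python
def _filter3x3(img, pick):
    """Apply `pick` (min or max) over each pixel's 3x3 neighbourhood.

    Pixels on the border use only the neighbours that exist.
    """
    h, w = len(img), len(img[0])
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            window = [img[j][i]
                      for j in range(max(0, y - 1), min(h, y + 2))
                      for i in range(max(0, x - 1), min(w, x + 2))]
            out[y][x] = pick(window)
    return out


def erode(img):
    return _filter3x3(img, min)   # grey-level erosion, 3x3 square element


def dilate(img):
    return _filter3x3(img, max)   # grey-level dilation, 3x3 square element


def open_close(img):
    """Morphological opening followed by closing.

    Opening (erode, then dilate) removes bright specks smaller than the element
    (salt noise); closing (dilate, then erode) fills dark specks (pepper noise).
    """
    opened = dilate(erode(img))
    return erode(dilate(opened))


noisy = [[100] * 7 for _ in range(7)]
noisy[1][5] = 255           # salt
noisy[3][3] = 0             # pepper
clean = open_close(noisy)   # every pixel is back to 100
```

Unlike a Gaussian blur, the open-close filter removes isolated specks outright instead of smearing them into their neighbours, which is why [7] prefers it for salt-and-pepper noise.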

3. DESIGN & METHODOLOGY

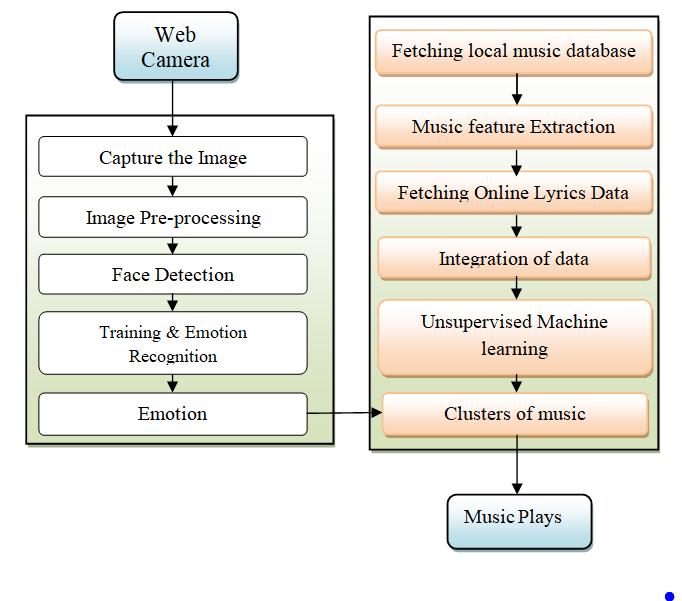

The user's image is captured using a camera or webcam. Once the image is captured, the frame from the webcam source is converted to a grayscale image to improve the performance of the classifier that is used to identify the face in the image. Once the conversion is complete, the image is passed to a classification algorithm that locates the face, and the face is extracted from the webcam frame using feature extraction techniques. From the extracted face, individual features are obtained and sent to a trained network to detect the emotion expressed by the user. The training images are used to train the classifier so that, when a completely new and unknown set of images is presented to it, it can extract the locations of facial landmarks using the knowledge gained from the training set and return the coordinates of the newly detected landmarks. The network is trained with the help of an extensive dataset and is then used to identify the emotion the user expresses.
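The first pre-processing step above, converting a colour frame to grayscale, amounts to a weighted sum of the colour channels. The sketch below is an illustration in plain Python, assuming the frame is a list of rows of (r, g, b) tuples; in OpenCV the same step is a single `cv2.cvtColor` call.

```python
def to_grayscale(frame):
    """Convert an RGB frame (rows of (r, g, b) tuples, values 0-255) to 8-bit grey levels.

    Uses the ITU-R BT.601 luma weights Y = 0.299 R + 0.587 G + 0.114 B, which is the
    weighting OpenCV documents for its RGB/BGR-to-gray conversion. Note that OpenCV
    itself stores frames in B, G, R order.
    """
    return [[min(255, round(0.299 * r + 0.587 * g + 0.114 * b)) for (r, g, b) in row]
            for row in frame]


frame = [[(255, 0, 0), (0, 255, 0), (0, 0, 255), (255, 255, 255)]]
print(to_grayscale(frame))  # [[76, 150, 29, 255]]
```

Green carries the largest weight because the eye is most sensitive to it, so a pure green pixel maps to a much lighter grey than a pure blue one.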

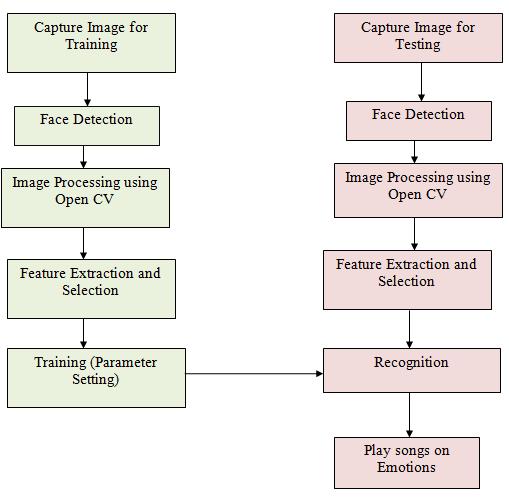

Once the face has been detected, the image can be used for face recognition. However, performing face recognition directly on an unprocessed photograph would probably give less than 10% accuracy. The system was designed to be a better, more efficient, and less space-consuming product that users could effectively apply, that could be tested, and that was simple to configure. Figure 1 shows the components that the project uses to perform the required tasks and the structure of the project model.

Fig -1: General block diagram of the system design

As shown in the above figure, it is extremely important to apply various image pre-processing techniques to standardise the images supplied to a face recognition system. Most face recognition algorithms are extremely sensitive to lighting conditions: if they were trained to recognise a person in a bright room, they probably won't recognise that person in a dark room. This problem is referred to as being "lighting dependent", and there are many other issues as well: the face should be in a very consistent position within the images (for example, with the eyes at the same pixel coordinates), with consistent size, rotation angle, hair and makeup, emotion (smiling, angry, etc.), and position of lights (to the left, above, etc.). This is why it is so important to apply good image pre-processing filters before face recognition. It also helps to remove the unused pixels around the face, for example with an elliptical mask that shows only the inner face region and not the hair and image background, since these change more than the face does. For simplicity, the face recognition system uses Eigenfaces on grayscale images [8]: colour images are converted to grayscale, and histogram equalisation is then applied as a very simple method of automatically standardising the brightness and contrast of the facial images. For better results, colour face recognition could be used (ideally with colour histogram fitting in HSV or another colour space instead of RGB), or more processing stages could be added, such as edge enhancement, contour detection, and motion detection. The images are also resized to a standard size, which might change the aspect ratio of the face.
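Two of the pre-processing filters mentioned above, histogram equalisation and an elliptical face mask, are sketched below. This is an illustrative version in plain Python, assuming 8-bit grayscale images stored as lists of rows and a mask shaped as the ellipse inscribed in the cropped face image; OpenCV offers `cv2.equalizeHist` for the first step.

```python
def equalize_histogram(gray):
    """Global histogram equalisation of an 8-bit grayscale image (list of rows)."""
    pixels = [p for row in gray for p in row]
    n = len(pixels)
    hist = [0] * 256
    for p in pixels:
        hist[p] += 1
    # cumulative distribution of grey levels
    cdf, running = [0] * 256, 0
    for level in range(256):
        running += hist[level]
        cdf[level] = running
    cdf_min = next(c for c in cdf if c > 0)
    if n == cdf_min:              # only one grey level: nothing to stretch
        return [row[:] for row in gray]
    # map each level so that the cumulative distribution becomes roughly linear
    lut = [max(0, round((cdf[level] - cdf_min) * 255 / (n - cdf_min))) for level in range(256)]
    return [[lut[p] for p in row] for row in gray]


def elliptical_mask(gray, fill=0):
    """Keep pixels inside the ellipse inscribed in the image; set the rest to `fill`."""
    h, w = len(gray), len(gray[0])
    cy, cx = (h - 1) / 2, (w - 1) / 2
    ry, rx = h / 2, w / 2
    return [[p if ((x - cx) / rx) ** 2 + ((y - cy) / ry) ** 2 <= 1 else fill
             for x, p in enumerate(row)]
            for y, row in enumerate(gray)]


face = [[50, 50], [100, 150]]
print(equalize_histogram(face))  # [[0, 0], [128, 255]]
```

After equalisation the darkest level present maps to 0 and the brightest to 255, so faces captured in dim and bright rooms end up with comparable contrast before recognition.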

Data Flow Diagram

A data flow diagram (DFD) is a graphical representation of the flow of data through an information system. A DFD can also be used to visualise the design of structured data processing. It is common practice for a designer to first draw a context-level DFD, which shows the interaction between the system and outside entities. This context-level DFD is then expanded to show more detail about the system being modelled.

A. Face Capturing

We used the Open Source Computer Vision Library (OpenCV), a library of programming functions aimed mainly at real-time computer vision. Its Python interface represents images as NumPy arrays, which makes it easy to combine with other libraries that use NumPy [9]. When the first process starts, the camera stream is accessed and about 10 photos are taken for further processing and emotion recognition. We use an algorithm to categorise the photos; for this, we need a large number of positive images that contain people's faces and negative images that contain no faces. These images are used to train the classifier, and the model is built from the classified photos.
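Grabbing the roughly 10 photos described above, and averaging them as described in Section F, can be sketched as follows. The sketch assumes only that the camera object follows the `(ok, frame) = read()` convention of OpenCV's `cv2.VideoCapture`, so it can be tried without a webcam; the `max_attempts` limit and the list-of-rows grayscale frame format are illustrative choices, not details from the paper.

```python
def capture_frames(camera, count=10, max_attempts=50):
    """Collect up to `count` frames from `camera`.

    `camera` is any object whose read() returns (ok, frame), the convention used by
    OpenCV's cv2.VideoCapture.read(). Failed reads are skipped, and the loop gives up
    after `max_attempts` reads so a disconnected camera cannot hang the program.
    """
    frames = []
    for _ in range(max_attempts):
        if len(frames) == count:
            break
        ok, frame = camera.read()
        if ok:
            frames.append(frame)
    return frames


def average_frames(frames):
    """Pixel-wise mean of equally sized grayscale frames (lists of rows), rounded to ints."""
    if not frames:
        raise ValueError("need at least one frame")
    n = len(frames)
    h, w = len(frames[0]), len(frames[0][0])
    return [[round(sum(f[y][x] for f in frames) / n) for x in range(w)] for y in range(h)]


# With OpenCV installed, a webcam could be used like this:
#   import cv2
#   cam = cv2.VideoCapture(0)
#   frames = capture_frames(cam)
#   cam.release()
```

Averaging reduces random sensor noise only when the face stays in roughly the same place across the captured frames; if the user moves, the frames would need to be aligned first.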

B. Face Detection

The principal component analysis (PCA) method is used to reduce the dimensionality of the face space. Linear discriminant analysis (LDA) is then used to obtain the image's discriminative features. We use this method specifically because it maximises the separation between classes during training. Faces are matched using the minimum Euclidean distance, which aids the picture recognition process and helps us categorise the user expressions that suggest emotions.
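The minimum-Euclidean-distance matching step can be illustrated once faces have already been projected into the PCA/LDA feature space. This sketch assumes matching against each emotion's class mean; the paper does not say whether it compares with class means or with every training projection (a 1-nearest-neighbour rule), and the 2-D vectors below are made up.

```python
import math


def class_means(features, labels):
    """Mean of the projected training vectors belonging to each emotion label."""
    sums, counts = {}, {}
    for vec, label in zip(features, labels):
        acc = sums.setdefault(label, [0.0] * len(vec))
        for i, v in enumerate(vec):
            acc[i] += v
        counts[label] = counts.get(label, 0) + 1
    return {label: [s / counts[label] for s in acc] for label, acc in sums.items()}


def nearest_class(vec, means):
    """Label whose class mean lies at the minimum Euclidean distance from `vec`."""
    return min(means, key=lambda label: math.dist(vec, means[label]))


train = [[0.0, 0.0], [0.0, 1.0], [5.0, 5.0], [6.0, 5.0]]   # made-up 2-D projections
labels = ["neutral", "neutral", "happy", "happy"]
means = class_means(train, labels)
print(nearest_class([1.0, 1.0], means))   # neutral
```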

B.1 Dataset

There is one image dataset, which we took from a Kaggle collection for our project; the inputs to the system at run time are real-time images. The dataset contains images of different facial expressions, and every emotion class is represented by at least one image. The dataset is divided into the moods specified by its labels, and the system classifies moods accordingly by recognising a person's facial expression, such as happy, sad, surprised, afraid, angry, and neutral.
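Dividing a labelled image collection into moods can be sketched as a simple grouping step. The CSV index with `path,label` columns used here is a hypothetical layout chosen for illustration, not the format of the Kaggle dataset; the emotion list follows the moods named above.

```python
import csv

EMOTIONS = ("happy", "sad", "surprised", "afraid", "angry", "neutral")


def split_by_emotion(lines):
    """Group image paths by emotion label.

    `lines` is any iterable of CSV text lines (e.g. an open file) with a header row
    `path,label`; this column layout is a hypothetical example, not the Kaggle format.
    Returns the groups and the list of emotions that have no images at all.
    """
    groups = {}
    for row in csv.DictReader(lines):
        label = row["label"].strip().lower()
        if label not in EMOTIONS:
            raise ValueError(f"unknown emotion label: {label!r}")
        groups.setdefault(label, []).append(row["path"])
    missing = [e for e in EMOTIONS if e not in groups]
    return groups, missing


index = ["path,label", "img1.png,Happy", "img2.png,sad", "img3.png,happy"]
groups, missing = split_by_emotion(index)
print(groups["happy"])   # ['img1.png', 'img3.png']
```

Reporting the missing emotions makes it easy to check the requirement that every class has at least one image before training starts.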

C. Facial Emotion Recognition

A Python script is used to fetch images containing faces along with their emotion descriptor values. The images are contrast-enhanced with contrast-limited adaptive histogram equalisation (CLAHE) and converted to grayscale to maintain uniformity and increase the effectiveness of the classifiers. A cascade classifier trained with face images is used for face detection: the image is split into fixed-size windows, and each window is passed to the cascade of classifiers; a window is accepted as a face only if it passes all the classifiers and is rejected otherwise. The detected faces are then used to train a Fisherface classifier, which maximises the variance between classes while minimising the variance within each class. Fisher's face recognition method proves efficient because it copes better with additional features such as spectacles and facial hair, and it is relatively invariant to lighting. A picture taken while the application is running is pre-processed and then assigned to one of the emotion classes by the Fisherface classifier. The model also lets the user customise it, initially or periodically, to reduce the within-class variance further, so that ideally the only remaining variance is that due to changes of emotion [10].
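The core idea behind the Fisherface classifier, a projection that separates class means while keeping each class compact, is easiest to see with Fisher's linear discriminant for two classes of 2-D points. This is a simplified sketch: a real Fisherface model first reduces the face images with PCA and handles several classes at once, and the toy points below are invented.

```python
def fisher_direction(class_a, class_b):
    """Fisher's linear discriminant for two classes of 2-D points.

    Returns w = S_W^-1 (m_b - m_a), where S_W is the pooled within-class scatter
    matrix: projecting onto w maximises the distance between the class means
    relative to the spread inside each class.
    """
    def mean(points):
        n = len(points)
        return (sum(p[0] for p in points) / n, sum(p[1] for p in points) / n)

    ma, mb = mean(class_a), mean(class_b)
    sxx = sxy = syy = 0.0
    for points, (mx, my) in ((class_a, ma), (class_b, mb)):
        for x, y in points:
            dx, dy = x - mx, y - my
            sxx += dx * dx
            sxy += dx * dy
            syy += dy * dy
    det = sxx * syy - sxy * sxy
    if det == 0:
        raise ValueError("within-class scatter is singular; reduce dimensions first (e.g. PCA)")
    diff_x, diff_y = mb[0] - ma[0], mb[1] - ma[1]
    # inverse of the symmetric 2x2 matrix [[sxx, sxy], [sxy, syy]] applied to the mean difference
    return ((syy * diff_x - sxy * diff_y) / det, (-sxy * diff_x + sxx * diff_y) / det)


def project(point, w):
    return point[0] * w[0] + point[1] * w[1]


a = [(0, 0), (1, 1), (0, 1), (1, 0)]
b = [(4, 0), (5, 1), (4, 1), (5, 0)]
w = fisher_direction(a, b)   # (2.0, 0.0): only the x axis separates the classes
```

The singular-scatter check is the reason Fisherfaces apply PCA first: with more pixels than training images, the within-class scatter matrix cannot be inverted.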

D. Feature Extraction

The features considered while detecting emotion can be static, dynamic, point-based geometric, or region-based appearance features. To obtain real-time performance and reduce time complexity, only the eyes and mouth are considered for expression recognition; the combination of these two features is adequate to convey emotions accurately. Finally, a point detection algorithm is used to identify and segregate feature points on the face [2].

● Eye Extraction: The eyes display strong vertical edges (horizontal transitions) due to the iris and the white of the eye. To find the Y coordinate of the eyes, a Sobel mask is used to obtain the vertical edges, and the horizontal projection of the resulting edge image is computed [14].

● Eyebrow Extraction: Two rectangular regions in the edge image that lie directly above each of the eye regions are selected as the eyebrow regions, and their edge images are obtained for further refinement. The Sobel method is used to obtain the edge image because it detects more edges than Roberts' method. These edge images are then dilated and their holes are filled, and the results are used to refine the eyebrow regions.

● Mouth Extraction: The top, bottom, left-corner, and right-corner points of the mouth are extracted, and the centroid of the mouth is calculated.
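The eye and mouth steps above can be illustrated with a Sobel horizontal-gradient mask, a row-wise projection, and a centroid. This is a sketch under simplifying assumptions: a grayscale eye region stored as a list of rows, the eye row taken as the row with the strongest total vertical-edge response, and mouth points given as (x, y) pairs; the synthetic stripe pattern only imitates iris/sclera transitions.

```python
SOBEL_X = ((-1, 0, 1),
           (-2, 0, 2),
           (-1, 0, 1))   # horizontal gradient: responds to vertical edges


def sobel_x_magnitude(gray):
    """|Gx| at every interior pixel of a grayscale image; border pixels are left at 0."""
    h, w = len(gray), len(gray[0])
    out = [[0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            gx = sum(SOBEL_X[j][i] * gray[y + j - 1][x + i - 1]
                     for j in range(3) for i in range(3))
            out[y][x] = abs(gx)
    return out


def eye_row(gray):
    """Row with the largest horizontal projection (row sum) of vertical-edge strength."""
    projection = [sum(row) for row in sobel_x_magnitude(gray)]
    return max(range(len(projection)), key=projection.__getitem__)


def centroid(points):
    """Centroid of the mouth's top, bottom, left-corner and right-corner points (x, y)."""
    xs, ys = zip(*points)
    return (sum(xs) / len(xs), sum(ys) / len(ys))


region = [[100] * 8 for _ in range(7)]
region[3] = [0, 0, 255, 255, 0, 0, 255, 255]   # stripes imitating iris/sclera transitions
print(eye_row(region))                                     # 3
print(centroid([(10, 20), (10, 30), (4, 25), (16, 25)]))   # (10.0, 25.0)
```

The rows directly above and below the eye also respond, because the 3x3 mask overlaps them, but with half the weight, so the projection still peaks at the eye row.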

E. Music Recommendation

The emotion detected by image processing is given as input to the clusters of music to select a specific cluster. To avoid interfacing with a music app or music module, which would require extra installation, the operating system's own support is used to play the music file. The playlist selected from the clusters is played by creating a forked subprocess that returns control to the Python script on completion of its execution, so that other songs can be played. This lets the program play music on any system, regardless of its music player.
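The two parts of this step, choosing a cluster for the detected emotion and handing a file to the operating system, are sketched below. The cluster dictionary, the `neutral` fallback, and the per-platform launcher commands (`start`, `open`, `xdg-open`) are illustrative assumptions, not the paper's code; the command is built separately so it can be inspected without playing anything.

```python
import subprocess
import sys


def choose_playlist(emotion, clusters, fallback="neutral"):
    """Return the song cluster for the detected emotion, or the fallback cluster."""
    return clusters.get(emotion) or clusters.get(fallback, [])


def player_command(path, os_name=sys.platform):
    """Command asking the operating system's default handler to open `path`."""
    if os_name.startswith("win"):
        return ["cmd", "/c", "start", "", path]   # "" is start's (empty) window title
    if os_name == "darwin":
        return ["open", path]
    return ["xdg-open", path]                     # most Linux desktops


def play(path):
    """Launch the handler in a child process.

    Popen returns immediately; calling .wait() on the result blocks until the launcher
    exits. Desktop launchers often exit as soon as the player has started, so waiting
    for a song to finish may need a command-line player instead.
    """
    return subprocess.Popen(player_command(path))


clusters = {"happy": ["songs/happy/a.mp3"], "neutral": ["songs/neutral/n.mp3"]}
playlist = choose_playlist("angry", clusters)   # no "angry" cluster here, so neutral is used
```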

F. Haar cascade classifiers

Multiple images are captured from a web camera, since more than one facial image may be needed to predict the emotion accurately. Blurred images can be a source of error (especially in low-light conditions), so the multiple images are averaged to reduce noise and the influence of any single poor frame. Histogram equalisation, an image processing technique that enhances contrast by spreading the image's intensities over the whole available range, is then applied. The image is cropped and converted to greyscale so that only the foreground remains, thereby reducing ambiguity. A Haar classifier, trained with pre-defined and varied face data that enables it to detect different faces accurately, is used for face detection [13]. The core basis of Haar classifier object detection is Haar-like features. Rather than using the intensity values of individual pixels, these features use the change in contrast between adjacent rectangular groups of pixels [11]; the contrast variances between the pixel groups are used to determine relatively light and dark areas. A Haar-like feature is formed by two or three adjacent groups with a relative contrast variance, and such features are used to detect objects in an image. Haar features can easily be scaled by increasing or decreasing the size of the pixel group being examined, which allows them to detect objects of various sizes [8]. Each Haar-like feature consists of two or three joined "black" and "white" rectangles.

The value of a Haar-like feature is the difference between the sums of the pixel grey-level values within the black and white rectangular regions:

$$F(x) = \sum_{\text{black rectangle}} (\text{pixel grey level}) \;-\; \sum_{\text{white rectangle}} (\text{pixel grey level})$$

Compared with raw pixel values, Haar-like features can reduce the in-class variability and increase the out-of-class variability, which makes classification easier [9].
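Viola and Jones [13] evaluate the feature value F(x) quickly with an integral image, in which any rectangle sum costs four lookups regardless of its size. The sketch below computes a horizontal two-rectangle feature this way; treating the left half as the "black" rectangle is an assumption made for illustration, as is the toy patch.

```python
def integral_image(gray):
    """ii[y][x] = sum of gray[0..y-1][0..x-1]; the extra zero row/column simplifies lookups."""
    h, w = len(gray), len(gray[0])
    ii = [[0] * (w + 1) for _ in range(h + 1)]
    for y in range(h):
        row_sum = 0
        for x in range(w):
            row_sum += gray[y][x]
            ii[y + 1][x + 1] = ii[y][x + 1] + row_sum
    return ii


def rect_sum(ii, x, y, w, h):
    """Sum of the w-by-h rectangle with top-left corner (x, y), using four lookups."""
    return ii[y + h][x + w] - ii[y][x + w] - ii[y + h][x] + ii[y][x]


def two_rect_feature(ii, x, y, w, h):
    """Horizontal two-rectangle Haar-like feature, F = sum(black) - sum(white).

    The left half is taken as the black rectangle (an assumption); `w` must be even.
    """
    half = w // 2
    return rect_sum(ii, x, y, half, h) - rect_sum(ii, x + half, y, half, h)


patch = [[10, 10, 200, 200],
         [10, 10, 200, 200]]
ii = integral_image(patch)
print(rect_sum(ii, 0, 0, 4, 2))          # 840
print(two_rect_feature(ii, 0, 0, 4, 2))  # 40 - 800 = -760
```

Because the cost of a rectangle sum does not depend on its size, scaling a feature up to detect larger faces does not make it slower to evaluate.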

4. IMPLEMENTATION & RESULTS

The following are screenshots of the implementation and results of our project.

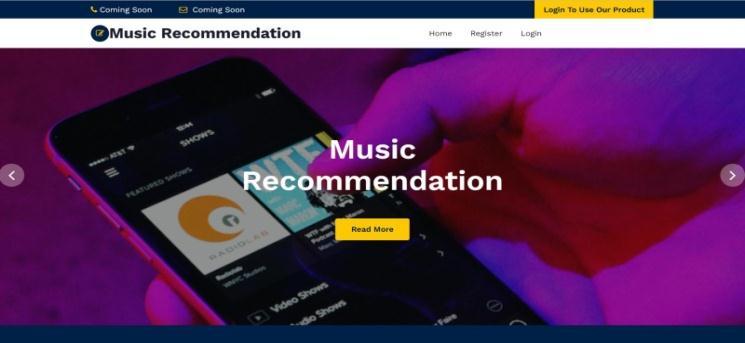

Home Page:

The home page is the index page of the music recommendation system. Registration and login options are available on the home page; by clicking on them, users can register and log in to their accounts, as shown in Figure 3.

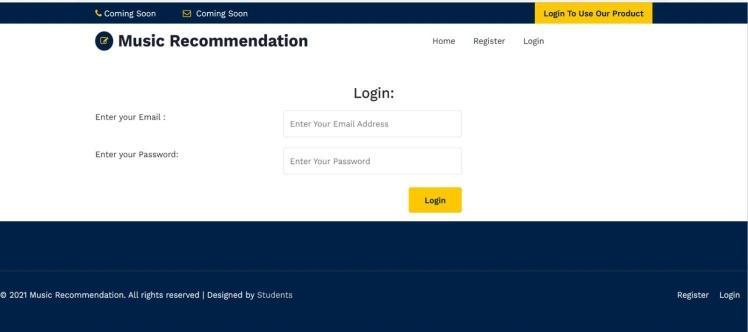

Login Page:

As shown in Figure 5, users can log in to their profile after entering the correct information, such as their email and password. The information is sent to the database to check for a match. If no match is found, the user remains on the same page; otherwise, they are directed to their profile page.

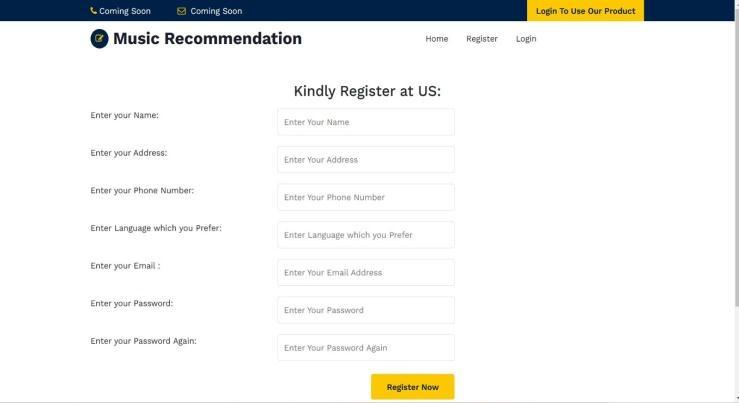

Registration Page:

This registration page is for users who wish to register. They can register successfully after filling out all the required information, as shown in Figure 4.
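The paper states only that login details are checked against a database. As one possible way to implement registration and login, the sketch below assumes an SQLite table and salted PBKDF2 password hashes; the table layout, iteration count, and lower-casing of e-mail addresses are illustrative choices, not the paper's implementation.

```python
import hashlib
import hmac
import os
import sqlite3


def _hash(password, salt):
    # PBKDF2-HMAC-SHA256; the iteration count is an illustrative choice
    return hashlib.pbkdf2_hmac("sha256", password.encode(), salt, 100_000)


def create_store():
    db = sqlite3.connect(":memory:")
    db.execute("CREATE TABLE users (email TEXT PRIMARY KEY, salt BLOB, pw_hash BLOB)")
    return db


def register(db, email, password):
    """Store a new user; returns False if the e-mail is already registered."""
    salt = os.urandom(16)
    try:
        with db:   # commits on success, rolls back on error
            db.execute("INSERT INTO users VALUES (?, ?, ?)",
                       (email.lower(), salt, _hash(password, salt)))
    except sqlite3.IntegrityError:
        return False
    return True


def login(db, email, password):
    """True only if the e-mail exists and the password matches its stored hash."""
    row = db.execute("SELECT salt, pw_hash FROM users WHERE email = ?",
                     (email.lower(),)).fetchone()
    if row is None:
        return False
    salt, stored = row
    return hmac.compare_digest(_hash(password, salt), stored)
```

Storing only a salted hash means the database never holds the plain password, and `hmac.compare_digest` avoids leaking timing information during the comparison.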

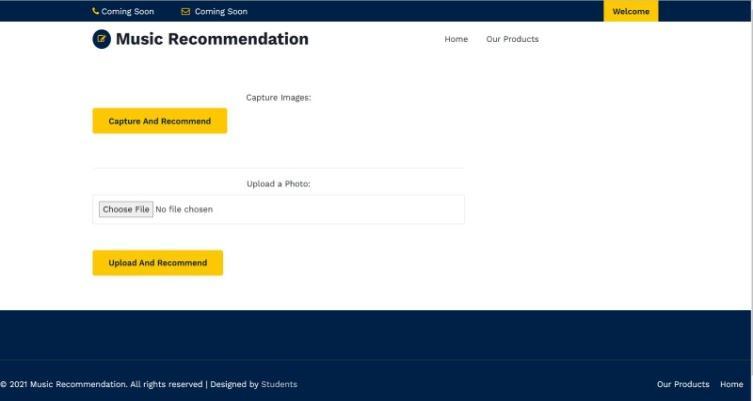

Upload/Capture Image:

When users log in, they are directed to a page where they can capture images or upload photos, as shown in Figure 6.

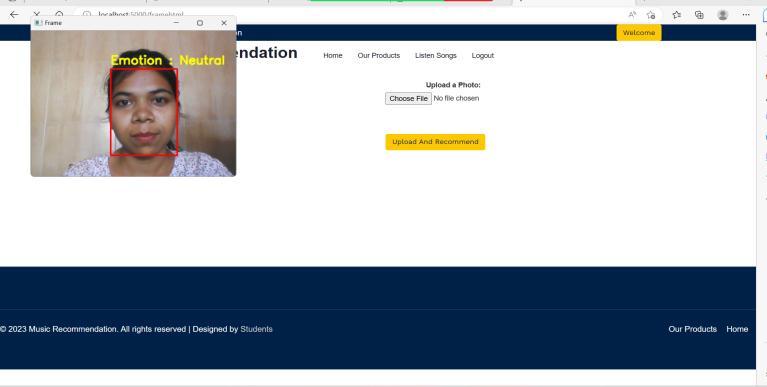

Emotion Recognition:

As shown in Figure 7, once the camera is open, it captures the image and identifies the emotion.

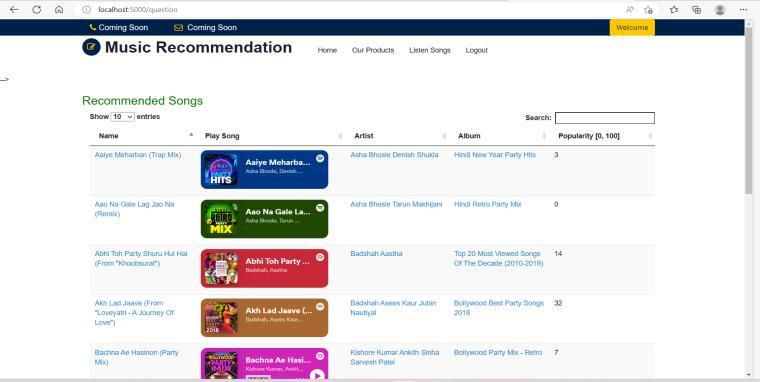

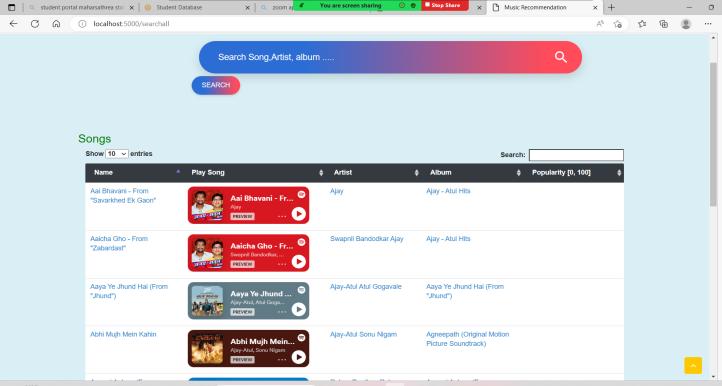

Search song:

Users can also search for an artist, song, or album of their choice, as shown in Figure 8.
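A search over artist, song title, and album can be as simple as a case-insensitive substring match over each song's metadata. The sketch assumes songs are stored as dictionaries with `title`, `artist`, and `album` keys, which is a hypothetical representation; the song data is invented.

```python
def search_songs(songs, query, fields=("title", "artist", "album")):
    """Case-insensitive substring search over the given metadata fields of each song dict."""
    q = query.strip().casefold()
    if not q:
        return []
    return [s for s in songs
            if any(q in str(s.get(f, "")).casefold() for f in fields)]


songs = [{"title": "Morning Light", "artist": "Artist A", "album": "First"},
         {"title": "Night Drive", "artist": "Artist B", "album": "Second"}]
print(search_songs(songs, "night"))   # only the "Night Drive" entry
```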

Music Recommendation Page:

Once an image is captured or a photo is uploaded, the emotion in it is detected and a particular playlist of songs is recommended to the user, as shown in Figure 9.

5. CONCLUSION

The aim of this paper is to explore the area of automatic facial expression recognition for the implementation of an emotion-based music player system. Beginning with the psychological motivation for facial behaviour analysis, this field of science has been studied extensively in terms of application and automation, and a wide variety of image processing techniques has been developed to meet the requirements of facial expression recognition systems. Apart from the theoretical background, this work provides a way to design and implement emotion-based music players. The proposed system will be able to process video of facial behaviour, recognise displayed actions in terms of basic emotions, and then play music based on the captured emotions. Major strengths of the system are full automation as well as user and environment independence.

6. FUTURE WORK

In the future, we would like to focus on improving the recognition rate of our system. We would also like to develop a mood-enhancing music player that starts with the user's current emotion (which may be sad) and then plays music of positive emotions, eventually giving the user a joyful feeling. The future scope of the system would be to design a mechanism to support music therapy, which would help treat patients suffering from disorders such as mental stress, anxiety, depression, and trauma. The proposed system also tends to avoid the unpredictable results produced in extremely low-light conditions and with very poor camera resolution; in the future, it could be extended to detect more facial features, gestures, and other emotional states (stress level, lie detection, etc.). The project could also be used for security purposes. Computers would be able to offer advice in response to the mood of their users, and the system could perform various tasks according to the mood of its user; an Android version could, for example, detect a sleepy mood while driving. Finally, we would like to improve the time efficiency of our system to make it more suitable for use in different applications.

REFERENCES

[1] R. Ramanathan, R. Kumaran, R. Ram Rohan, R. Gupta and V. Prabhu, "An Intelligent Music Player Based on Emotion Recognition," 2017 2nd International Conference on Computational Systems and Information Technology for Sustainable Solution.

[2] S. Deebika, K. A. Indira and Jesline, "A Machine Learning Based Music Player by Detecting Emotions," 2019 Fifth International Conference on Science Technology Engineering and Mathematics (ICONSTEM), Chennai, India, 2019.

[3] S. G. Kamble and A. H. Kulkarni, "Facial expression based music player," 2016 International Conference on Advances in Computing, Communications and Informatics (ICACCI), Jaipur, India, 2016.

[4] C. Darwin, The Expression of the Emotions in Man and Animals, 3rd edn (ed. P. Ekman). London: HarperCollins; New York: Oxford University Press, 1998.

[5] L. Zhang and D. Tjondronegoro, "Facial expression recognition using facial movement features," IEEE Transactions on Affective Computing, 2011.

[6] R. A. Patil, V. Sahula and A. S. Mandal, "Automatic recognition of facial expressions in image sequences: A review," 2010 5th International Conference on Industrial and Information Systems, Mangalore, India, 2010.

[7] X. Wang, X. Li and Y. Guan, "Image Edge Detection Algorithm Based on Improved Canny Operator," Computer Engineering, Vol. 36, No. 14, pp. 196-198, Jul. 2012.

[8] P. Menezes, J. C. Barreto and J. Dias, "Face tracking based on Haar-like features and Eigenfaces," 5th IFAC Symposium on Intelligent Autonomous Vehicles, Lisbon, Portugal, July 5-7, 2004.

[9] F. Adolf, "How-to build a cascade of boosted classifiers based on Haar-like features," http://robotik.inflomatik.info/other/opencv/OpenCV_ObjectDetection_HowTo.pdf, June 20, 2003.

[10] G. Bradski, "Computer vision face tracking for use in a perceptual user interface," Intel Technology Journal, 2nd Quarter, 1998.

[11] R. Lienhart and J. Maydt, "An extended set of Haar-like features for rapid object detection," IEEE ICIP 2002, Vol. 1, pp. 900-903, Sep. 2002.

[12] N. Muller, L. Magaia and B. M. Herbst, "Singular value decomposition, Eigenfaces, and 3D reconstructions," SIAM Review, Vol. 46, Issue 3, pp. 518-545, Dec. 2004.

[13] P. Viola and M. Jones, "Rapid object detection using a boosted cascade of simple features," IEEE Conference on Computer Vision and Pattern Recognition, 2001.

[14] G. Chaple and R. D. Daruwala, "Design of Sobel operator based image edge detection algorithm on FPGA," 2014 International Conference on Communication and Signal Processing, Melmaruvathur, India, 2014.

[15] The Facial Recognition Technology (FERET) Database, National Institute of Standards and Technology, 2003. http://www.itl.nist.gov/iad/humanid/feret

[16] Open Computer Vision Library Reference Manual, Intel Corporation, USA, 2001.