Gestures Based Sign Interpretation System using Hand Glove

V. Leela Krishna, P. Sekhar, P. Gowri Priya Mani, S. Bala Satya Phani Kumar

Abstract – In this article, we introduce a glove-based system for interpreting sign language. People who are unable to speak often communicate using sign language, which makes communication difficult with people who do not understand it. To address this problem, we propose a glove-based system that uses an Arduino Uno and flex sensors to translate hand gestures into text and speech. Our goal is to narrow the communication gap between people who use sign language and those who do not, making it easier for people with speech impairments to communicate with others.

The glove-based sign interpretation system is a promising way to enable communication between people who use sign language and those who do not. This paper presents a system that uses an Arduino Uno and flex sensors to interpret hand gestures and convert them into text and speech. The proposed system has the potential to substantially reduce the communication gap between speech-impaired individuals and people who do not understand sign language, and to help break down barriers to communication.

Key Words: Glove-based sign interpretation system, communication, sign language, Arduino Uno, flex sensors, hand gestures, text, speech, machine learning, speech impairment.

1. INTRODUCTION

A glove-based sign interpretation system is a device that helps people who are deaf or hard of hearing communicate with others who do not understand sign language. It consists of a glove fitted with sensors that track the movement of the wearer's hand and fingers, and it translates the resulting gestures into written or spoken language.

The system uses machine learning algorithms to recognize and interpret the sign language gestures made by the wearer. It can then convert these gestures into a variety of languages, including English, Spanish, French, and others. This technology could substantially change communication for deaf and hard-of-hearing people by enabling them to express themselves more easily to the people around them.

Glove-based sign interpretation systems are still in the early stages of development, but they hold considerable promise for the future. As the technology advances, we can expect systems that accurately interpret more complex sign language gestures and provide more sophisticated translations. Ultimately, these devices could break down the communication barriers between deaf and hard-of-hearing people and the rest of society, opening up new possibilities for personal and professional relationships.

The main objective of developing the Sign Language Interpreter was to facilitate communication between the deaf and hearing communities. This is achieved with a sensor-based hand glove connected to an Arduino microcontroller, which translates hand gestures into text and sound. With this smart glove, communication barriers between the two communities can be reduced.

Individuals with speech disabilities often have difficulty communicating with others. The purpose of this device is to improve their quality of life by converting their gestures into speech, giving a voice to those who are unable to speak. Speech is an important tool for conveying messages, and this project uses flex sensors attached to the glove to capture hand movements. The output from these sensors is sent to the Arduino, which uses the data to display text on an LCD screen and to produce speech output through an Android app.

The main focus of this project is to create a system that can recognize a predefined set of hand gestures, particularly the American Sign Language (ASL) alphabet, using a data glove as the input device. To achieve this, the system will use artificial intelligence tools such as artificial neural networks to mediate the interaction between the user and the computer, classifying and recognizing the hand gestures the user performs. The ASL alphabet comprises static and dynamic gestures: static gestures are made by holding a hand pose, while dynamic gestures involve both a hand pose and movement.
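The static/dynamic distinction above can be checked directly on glove data. The sketch below is an illustrative assumption, not the authors' method: it treats a short window of sensor frames as a held (static) pose when no finger's normalized bend varies by more than a tolerance. The five-channel frame, the 0.0 to 1.0 bend scale, and the tolerance value are hypothetical choices.

```cpp
#include <algorithm>
#include <array>
#include <cassert>
#include <cstddef>
#include <vector>

// Normalized bend per finger, thumb to little finger: 0.0 = straight,
// 1.0 = fully bent. Five channels and this scale are assumptions.
using Bends = std::array<double, 5>;

// Returns true when every finger's reading varies by at most `tolerance`
// over the window, i.e. the pose is being held (a candidate static gesture).
// A window that fails the test contains finger movement and is a candidate
// dynamic gesture.
bool isStaticWindow(const std::vector<Bends>& window, double tolerance) {
    assert(!window.empty());
    for (std::size_t finger = 0; finger < 5; ++finger) {
        double lo = window.front()[finger];
        double hi = lo;
        for (const Bends& frame : window) {
            lo = std::min(lo, frame[finger]);
            hi = std::max(hi, frame[finger]);
        }
        if (hi - lo > tolerance) return false;  // this finger moved
    }
    return true;
}
```

Letters such as J and Z are dynamic in ASL fingerspelling. Because Z is traced in the air with a mostly fixed finger pose, finger bend alone would miss it; in practice the accelerometer channels would need the same window test.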

There are several approaches to glove-based sign interpretation that can be used to convert hand gestures into text and speech. These approaches include:

Sensor-Based Approach: A sensor-based glove is used to recognize hand gestures. The glove contains sensors that detect the movements of the fingers and hand and send the data to a microcontroller, which processes the data and produces text or speech output.

Machine Learning Approach: Machine learning algorithms are used to recognize hand gestures. A large dataset of hand gestures is used to train a model, which is then used to recognize new hand gestures.

Computer Vision Approach: A camera captures the hand gestures, and the captured images are processed with computer vision algorithms to recognize them. This approach requires a high-resolution camera and complex algorithms to process the data.

Hybrid Approach: The hybrid approach combines the sensor-based, machine learning, and computer vision approaches to achieve high accuracy in recognizing hand gestures. Data from the sensors and the camera are combined and processed with machine learning algorithms to produce text or speech output.

Each of these approaches has advantages and disadvantages, and the choice depends on the specific requirements of the application.

1.1 Sensor-Based Approach

The sensor-based approach to glove-based sign interpretation places sensors on a glove to capture hand movements and gestures. Flex sensors are commonly used to measure the degree of flexion of each finger, while an accelerometer can be mounted on top of the glove to capture the orientation of the hand in three axes. These sensors send their readings to an Arduino microcontroller, which is programmed to interpret the signals and translate them into text and speech using artificial intelligence tools such as neural networks. The sensor-based approach offers a non-invasive and intuitive way for individuals with speech impairments to communicate with others. However, it requires careful calibration of the sensors and may not be suitable for individuals with severe motor impairments.
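When the hand is still, the accelerometer measures mostly gravity, so its three-axis reading can be turned into two tilt angles, pitch and roll. Rotation about the vertical axis (yaw) leaves the gravity vector unchanged, so it cannot be recovered from an accelerometer alone. A minimal sketch using the standard atan2 tilt formulas, assuming the mounting orientation described in the code comment:

```cpp
#include <cassert>
#include <cmath>

struct Tilt {
    double pitchDeg;  // rotation about the y axis (fingertips tilting up or down)
    double rollDeg;   // rotation about the x axis (the forearm axis)
};

// Estimates hand tilt from one 3-axis accelerometer reading taken while the
// hand is roughly still, so the sensor measures mostly gravity. Any unit
// works (g or m/s^2) because only ratios matter. Assumed mounting: x points
// toward the fingertips and z out of the back of the hand, so a flat, still
// hand reads about (0, 0, +1 g).
Tilt tiltFromAccel(double ax, double ay, double az) {
    assert(ax != 0.0 || ay != 0.0 || az != 0.0);  // all-zero means no usable reading
    constexpr double kRadToDeg = 180.0 / 3.14159265358979323846;
    Tilt t{};
    t.rollDeg = std::atan2(ay, az) * kRadToDeg;
    t.pitchDeg = std::atan2(-ax, std::sqrt(ay * ay + az * az)) * kRadToDeg;
    return t;
}
```

A flat hand gives pitch and roll of about 0°, and a hand rotated onto its side gives a roll of about ±90°.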

1.2 Machine Learning Approach

The machine learning approach is a popular method in glove-based sign interpretation systems. It uses algorithms and statistical models that let the system learn and improve its performance from the data collected from the user. The system is trained to recognize specific sign gestures by providing it with a large dataset of pre-recorded sign language samples.

The system then uses this dataset to learn the patterns and features that distinguish one gesture from another. The machine learning approach typically uses neural networks, decision trees, or support vector machines to classify and interpret the hand gestures.

One advantage of the machine learning approach is that the system's accuracy can improve over time as it learns from more data. However, this approach requires a large amount of training data and complex algorithms, which can be time-consuming and challenging to implement.
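As a minimal illustration of training on recorded samples and then classifying new readings, the sketch below uses a nearest-centroid classifier. This is a deliberately simpler stand-in for the neural networks, decision trees, and support vector machines named above, not the method used in this work. The feature vector (five normalized finger bends) and the training values are toy data.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <limits>
#include <map>
#include <string>
#include <utility>
#include <vector>

// Normalized bend per finger (0.0 = straight, 1.0 = fully bent); assumed scale.
using Bends = std::array<double, 5>;

struct Sample {
    Bends x;
    std::string label;  // gesture name, e.g. a letter of the alphabet
};

// Nearest-centroid classifier: each gesture is represented by the mean of its
// training samples, and a new reading gets the label of the closest mean.
class NearestCentroid {
public:
    void fit(const std::vector<Sample>& data) {
        assert(!data.empty());
        std::map<std::string, std::pair<Bends, int>> sums;  // label -> (sum, count)
        for (const Sample& s : data) {
            auto& [sum, count] = sums[s.label];  // zero-initialized on first use
            for (std::size_t i = 0; i < sum.size(); ++i) sum[i] += s.x[i];
            ++count;
        }
        centroids_.clear();
        for (const auto& [label, entry] : sums) {
            Bends mean = entry.first;
            for (double& v : mean) v /= entry.second;
            centroids_[label] = mean;
        }
    }

    // Returns the label whose centroid is closest in squared Euclidean distance.
    std::string predict(const Bends& x) const {
        assert(!centroids_.empty());  // fit() must be called first
        std::string best;
        double bestDist = std::numeric_limits<double>::infinity();
        for (const auto& [label, mean] : centroids_) {
            double d = 0.0;
            for (std::size_t i = 0; i < mean.size(); ++i) {
                const double diff = x[i] - mean[i];
                d += diff * diff;
            }
            if (d < bestDist) {
                bestDist = d;
                best = label;
            }
        }
        return best;
    }

private:
    std::map<std::string, Bends> centroids_;
};
```

Adding more recorded samples per gesture moves each centroid toward that gesture's typical pose, which is a simple form of the "improves with more data" behavior described above.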

1.3 Computer Vision Approach

The computer-based approach is a popular method in glove-based sign interpretation systems. It uses a data glove as an input device connected to a computer. The computer uses artificial intelligence techniques such as neural networks, fuzzy logic, and machine learning algorithms to recognize and interpret the hand gestures made by the user.

In the computer-based approach, sensors are embedded in the data glove. These sensors measure the degree of flexion of each finger and the orientation of the hand in three axes. The data collected by the sensors is then transmitted to the computer for processing and interpretation.

One advantage of the computer-based approach is its ability to recognize a wide range of hand gestures. It can be highly accurate and can recognize hand gestures even in noisy environments. It is also flexible, because it can be modified and updated to recognize new hand gestures.

One disadvantage of this approach, however, is its complexity: it requires a high level of technical expertise and specialized equipment. Furthermore, the processing time needed to interpret hand gestures can be significant, which may delay communication.
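The computer-based approach depends on the glove's sensor data reaching the computer intact. The text does not specify a transfer format. Assuming a hypothetical one in which each reading is sent as a comma-separated text line (five flex values followed by three accelerometer axes), the receiving side might validate and parse it like this:

```cpp
#include <array>
#include <cstddef>
#include <optional>
#include <sstream>
#include <stdexcept>
#include <string>

// Hypothetical frame layout: five flex readings, then the x, y, z
// accelerometer axes, sent as one comma-separated text line such as
// "512,600,610,480,300,0.02,-0.01,0.98" (line terminator already stripped).
struct SensorFrame {
    std::array<double, 5> flex;
    std::array<double, 3> accel;
};

// Parses one line; returns std::nullopt for malformed input instead of
// passing corrupted values on to the recognizer.
std::optional<SensorFrame> parseFrame(const std::string& line) {
    std::array<double, 8> values{};
    std::istringstream in(line);
    std::string field;
    std::size_t n = 0;
    while (std::getline(in, field, ',')) {
        if (n == values.size()) return std::nullopt;  // too many fields
        try {
            std::size_t used = 0;
            values[n] = std::stod(field, &used);
            if (used != field.size()) return std::nullopt;  // trailing garbage
        } catch (const std::logic_error&) {  // stod throws invalid_argument or out_of_range
            return std::nullopt;
        }
        ++n;
    }
    if (n != values.size()) return std::nullopt;  // too few fields
    SensorFrame frame{};
    for (std::size_t i = 0; i < 5; ++i) frame.flex[i] = values[i];
    for (std::size_t i = 0; i < 3; ++i) frame.accel[i] = values[5 + i];
    return frame;
}
```

Rejecting bad frames outright, rather than guessing missing values, keeps a dropped or corrupted transmission from being misread as a different gesture.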

1.4 Hybrid Approach

The hybrid approach combines the advantages of the rule-based and machine learning approaches. Rules are used to identify basic hand gestures, and machine learning algorithms are used to recognize more complex gestures. This makes it more accurate than a purely rule-based approach and able to recognize a wider range of gestures. Data is collected using sensors and cameras and then passed to machine learning algorithms that recognize the gestures. The hybrid approach provides more efficient and accurate interpretation of sign language, making communication easier between deaf and hearing individuals. However, it requires significant amounts of training data and computational resources to achieve high accuracy.
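The rule-then-learn split described above can be sketched on glove data alone (the camera input is left out here). Everything in the sketch is illustrative: the 0.5 bent/straight threshold, the ambiguity margin, and the labels in the rule table are assumptions, and the learned stage is a plain 1-nearest-neighbour lookup over recorded examples.

```cpp
#include <array>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <limits>
#include <map>
#include <string>
#include <vector>

// Normalized bend per finger (0.0 = straight, 1.0 = fully bent); assumed scale.
using Bends = std::array<double, 5>;

struct Example {
    Bends pose;
    std::string label;
};

// Rule stage: if every finger is clearly straight or clearly bent (at least
// `margin` away from 0.5), encode the pose as a 5-bit pattern (bit i set =
// finger i bent) and look it up in a hand-written rule table.
// Learned stage: if any finger is ambiguous or no rule matches, return the
// label of the nearest recorded example (1-nearest-neighbour).
std::string classifyHybrid(const Bends& p,
                           const std::map<unsigned, std::string>& rules,
                           const std::vector<Example>& examples,
                           double margin = 0.15) {
    unsigned pattern = 0;
    bool clearCut = true;
    for (std::size_t i = 0; i < p.size(); ++i) {
        if (std::fabs(p[i] - 0.5) < margin) clearCut = false;  // ambiguous finger
        if (p[i] > 0.5) pattern |= 1u << i;
    }
    if (clearCut) {
        const auto it = rules.find(pattern);
        if (it != rules.end()) return it->second;
    }
    assert(!examples.empty());
    const Example* best = nullptr;
    double bestDist = std::numeric_limits<double>::infinity();
    for (const Example& e : examples) {
        double d = 0.0;
        for (std::size_t i = 0; i < p.size(); ++i) {
            const double diff = p[i] - e.pose[i];
            d += diff * diff;
        }
        if (d < bestDist) {
            bestDist = d;
            best = &e;
        }
    }
    return best->label;
}
```

The rule table handles clear-cut poses cheaply and predictably, while the learned fallback covers poses the rules cannot describe.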

2. Hardware Requirements

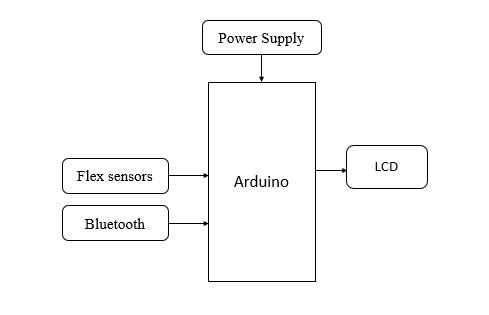

In this project, we have implemented glove-based sign interpretation using Arduino.

The components used in the glove-based sign interpretation system are:

Arduino Uno board: the main controller of the system, which receives input from the sensors and processes the data to generate the output.

Flex sensors: attached to the fingers of the glove, these sensors measure the degree of flexion of each finger.

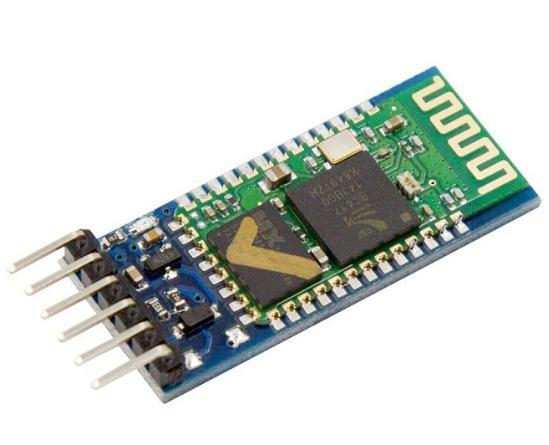

Bluetooth module: enables wireless communication between the glove and a computer or mobile device, allowing real-time sign language interpretation.

Breadboard: used to make connections between the different components.

Wires: used to connect the components together.

Power source: a battery or USB cable powers the Arduino board and the other components.

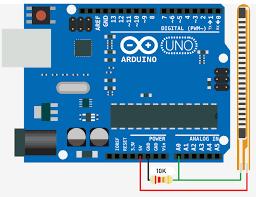

2.1 Arduino Uno Board

Arduino Uno is an open-source microcontroller board designed by Arduino.cc. It is based on the ATmega328P microcontroller and has 14 digital input/output pins, 6 analog inputs, a 16 MHz quartz crystal, a USB connection, a power jack, an ICSP header, and a reset button. The board is designed to be easy to use and appears in projects ranging from hobbyist to professional level.

The ATmega328P has 32 KB of flash memory, 2 KB of SRAM, and 1 KB of EEPROM. The digital pins can be used as inputs or outputs and are controlled with the digitalWrite() and digitalRead() functions in the Arduino IDE. The analog inputs are read with the analogRead() function, which on the Uno returns a 10-bit value from 0 to 1023.

The board can be powered through the USB connection or through an external power source, such as a battery or an AC-to-DC adapter. The power jack accepts a DC input (7-12 V is the recommended range), and the on-board voltage regulator converts this to 5 V DC, which powers the microcontroller and the other components on the board.

The board also has an ICSP header, which allows it to be programmed with an external programmer, and a reset button, which resets the microcontroller when pressed.

Overall, the Arduino Uno is a versatile, easy-to-use microcontroller board that is widely used in the maker and DIY community for many projects, including the glove-based sign interpretation system.

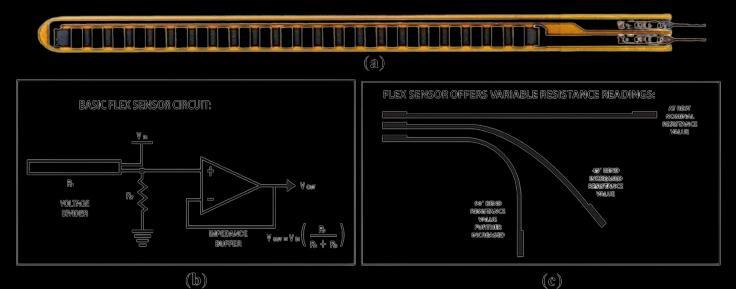

2.2 Flex Sensors

Flex sensors are bend sensors that work on the piezoresistive principle: their resistance changes when they are bent or flexed. They are made of a thin strip of flexible material, such as plastic or rubber, coated with a conductive material such as carbon or metal. When the sensor is flexed, the distance between the conductive particles in the coating changes, which changes the resistance.
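On an Arduino, this change in resistance is usually read through a voltage divider, because analogRead() measures voltage rather than resistance. The sketch below assumes the flex sensor sits between +5 V and the analog pin with a fixed resistor to ground; the 47 kΩ resistor and the calibration resistances are illustrative values, not measurements from this system. It inverts the divider equation to recover the sensor's resistance, then maps that onto a 0 to 1 bend scale using per-finger calibration.

```cpp
#include <algorithm>
#include <cassert>

// Assumed wiring: flex sensor from +5 V to the analog pin, fixed resistor
// kRFixed from the analog pin to ground, so the pin sees
//   Vout = Vcc * kRFixed / (Rflex + kRFixed).
constexpr double kVcc = 5.0;         // Uno default analog reference (volts)
constexpr double kAdcMax = 1023.0;   // analogRead() range on the Uno is 0..1023
constexpr double kRFixed = 47000.0;  // hypothetical divider resistor (ohms)

// Inverts the divider equation: raw ADC count -> flex sensor resistance (ohms).
double flexResistance(int adcCount) {
    assert(adcCount > 0);  // a count of 0 would imply infinite resistance (open circuit)
    const double vout = adcCount * kVcc / kAdcMax;
    return kRFixed * (kVcc / vout - 1.0);
}

// Per-finger calibration, recorded with the finger held straight and fully bent.
struct FingerCalibration {
    double rStraight;  // ohms
    double rBent;      // ohms
};

// Maps a resistance onto 0.0 (straight) .. 1.0 (fully bent), clamping
// readings that fall outside the calibrated range.
double bendFraction(double rFlex, const FingerCalibration& cal) {
    assert(cal.rBent != cal.rStraight);
    const double t = (rFlex - cal.rStraight) / (cal.rBent - cal.rStraight);
    return std::clamp(t, 0.0, 1.0);
}
```

The supply voltage cancels out, so the resistance depends only on the count: R = R_fixed × (1023 / count − 1). With an illustrative calibration of 25 kΩ straight and 100 kΩ bent, a count of 341 (about 94 kΩ) maps to a bend fraction of 0.92.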

Flex sensors are available in different shapes and sizes, depending on the application. They are commonly used in robotics, gaming, and medical devices such as prosthetics, and in wearable technology such as smart gloves to detect hand gestures and movements.

In the glove-based sign interpretation system using Arduino, flex sensors are attached to the fingers of the glove to detect finger bending. Flex sensors are shown in Fig-3.

Fig-3(a): Flex Sensor

Fig-3(b): Basic Flex Sensor

Fig-3(c): Flex sensor offers variable resistance readings

The output signal from the flex sensors is fed into the Arduino board, which processes the data and converts it into text and speech output. The flex sensors are calibrated to the range of motion of each finger to ensure accurate gesture detection.

2.3 Bluetooth Module

A glove-based sign interpretation system typically uses a Bluetooth module to communicate between the glove and the computer or other device it is connected to. The Bluetooth module enables wireless communication between the two devices, allowing greater flexibility and mobility.

The Bluetooth module typically operates in the 2.4 GHz frequency band and uses the Bluetooth protocol to establish a connection with the device it is paired with. Once paired, the two devices can exchange data, such as the hand gestures made by the user wearing the glove.

There are various types of Bluetooth modules on the market, and the module used in a glove-based sign interpretation system depends on factors such as the required communication range and speed, power consumption, and compatibility with the other devices in the system.

2.4 Breadboard

A breadboard is a device used for prototyping electronic circuits. It lets the user build and experiment with circuits without soldering. A breadboard is a reusable board with holes into which electronic components such as resistors, capacitors, transistors, and integrated circuits are inserted.

The holes hold the legs of the electronic components, which are gripped by metal spring clips beneath the holes; the holes in each short row share one clip, so components inserted in the same row are electrically connected. Once the components are inserted, they can be wired together with short lengths of wire inserted into the holes to create the necessary connections between components.

2.5 Wires

Wires are an important part of the glove-based sign interpretation system because they connect the various components together. Different types can be used, such as jumper wires, breadboard wires, and shielded wires.

Jumper wires are used to connect the pins of different components on a breadboard. They are pre-stripped and pre-formed, which makes them easy to use for various connections, and they are available in different lengths, colors, and gauges.

Breadboard wires are similar to jumper wires, but they are designed specifically for breadboards. They have a pin on one end that can be inserted into the breadboard and a socket on the other end that can be connected to a component. They are also available in different lengths and colors.

Shielded wires are used where the signal needs to be protected from interference. A shield surrounds the signal wire and reduces the interference it picks up. Shielded wires are commonly used in audio and video applications where signal quality is important.

Wires are also used to connect the Arduino board to the power source and to output devices such as the LCD display and speaker. The length and type of wire used depend on the specific requirements of the project.

2.6 Power Source

The power source in the glove-based sign interpretation system is typically a 9 V battery or a USB cable connected to a computer or wall adapter. This source powers the Arduino board and the other components. A reliable power source is important so that the system functions properly without power-related issues, and it should be chosen based on the requirements of the system and the components used.

3. Software Resources

The Arduino Integrated Development Environment (IDE) is an application for writing, compiling, and uploading code to Arduino microcontrollers. It is cross-platform and available for Windows, macOS, and Linux.

The Arduino IDE provides a user-friendly interface for programming Arduino boards. Programs, called sketches, are written in C and C++ using the simplified functions of the Arduino core library.

In addition to a text editor for writing code, the Arduino IDE includes a Serial Monitor for debugging and communicating with the Arduino board. It also supports libraries that make it easier to interface with external sensors and devices.

The Arduino IDE is open-source software and is free to download and use. It is regularly updated with new features and bug fixes, and the community provides a wealth of resources and support for those who are new to programming with Arduino.

4. Proposed Method

The proposed system uses flex sensors to capture the user's hand gestures, producing a stream of data that varies with the degree of bend. The flex sensors, which are made of carbon resistive elements, detect the hand posture: as a sensor bends, its resistance changes according to the bend radius. The flex sensors, accelerometer, and Bluetooth module are wired to the Arduino board, which processes the sensor data and sends voice commands to an Android app through the Bluetooth module. In this way, the system enables communication between speech-impaired and hearing people.
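On the receiving side, data from a serial Bluetooth module arrives as a byte stream that can be split at arbitrary points, so the app has to reassemble complete messages. The paper does not define a message format. The sketch below assumes a hypothetical one in which the Arduino sends each recognized word or letter as a text line, as Serial.println() would produce (it ends each line with a carriage return and a newline). The logic is shown in C++; an Android app would implement the same buffering in Java or Kotlin.

```cpp
#include <string>
#include <vector>

// Reassembles newline-terminated messages from a byte stream that may arrive
// in arbitrary chunks. One message per line is a hypothetical format choice.
class LineFramer {
public:
    // Feeds one received chunk; returns every message completed by it.
    std::vector<std::string> feed(const std::string& chunk) {
        std::vector<std::string> done;
        for (char c : chunk) {
            if (c == '\n') {
                // Drop the '\r' that Serial.println() sends before '\n'.
                if (!buffer_.empty() && buffer_.back() == '\r') buffer_.pop_back();
                done.push_back(buffer_);
                buffer_.clear();
            } else {
                buffer_ += c;
            }
        }
        return done;
    }

private:
    std::string buffer_;  // bytes of the message still in progress
};
```

Each completed message could then be passed to the app's text-to-speech engine; partial messages stay buffered until their terminator arrives.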

5. CONCLUSION

In conclusion, the glove-based sign interpretation system using Arduino is an innovative way to bridge the communication gap between hearing people and the speech-impaired community. The system uses flex sensors to capture hand gestures and translate them into text and sound. The Arduino microcontroller processes the sensor data and sends it through the Bluetooth module to an Android app, which generates the voice output. The breadboard, wires, and power source are essential components of the system. A hybrid approach that uses both rule-based and machine learning algorithms can enhance the accuracy of gesture recognition. This system has the potential to improve the quality of life of individuals with speech disabilities by giving them an efficient means of communication. Further improvements can make the system more user-friendly and accessible.
