Designing Autonomous Car using OpenCV and Machine Learning

R. Hemanth Kumar, T. Varun Sai Krishna, S. Durga Prasad, P. Varuna Sai

Abstract – Self-driving cars, also known as autonomous vehicles, use sensors to perceive the environment and control the car's actuators without human intervention. This project aims to design a self-driving car using two modules: lane detection and traffic signal detection. Lane detection is performed with image processing in OpenCV, while traffic signal detection uses machine learning. The prototype is built with a Raspberry Pi as the master device and an Arduino as the slave device, which together capture and process the data and control the car's movements. The Raspberry Pi camera captures every frame of the environment for lane and traffic signal detection. The car makes its steering decisions by referencing the lane, and the Arduino controls the orientation of the wheels and the speed of the motors. This project is inspired by the recent surge in the automated car industry, and it shows the potential of self-driving cars in the future.

Key Words: Autonomous car, Lane detection, Traffic signal detection, Raspberry Pi, Arduino, OpenCV, Machine learning.

1. INTRODUCTION

Road accidents claim 1.25 million lives annually worldwide, causing injuries that lead to disabilities in 20-50 million people. India alone accounts for 10% of global road accidents and has the highest number of road fatalities. Human error is responsible for 78% of accidents, and technology such as driverless cars can help address this issue. While human error can never be completely eliminated, technology has advanced significantly in recent years, from radar-based collision detection to more advanced driver-assistance systems. As a result, many accidents can be prevented, and the use of autonomous vehicles may help reduce road fatalities in the future.

Self-driving cars have been a subject of growing interest in recent years, as technology continues to advance and people seek safer and more efficient means of transportation. These autonomous vehicles are designed to drive without human intervention, relying on sensors, software, and algorithms to interact with their surroundings.

We present our project, which aims to design and develop a self-driving car prototype using OpenCV and machine learning. Our objective is to create a car that can detect lanes and traffic signals in real time using computer vision and machine learning techniques.

OpenCV is an open-source computer vision library that provides tools and algorithms for image processing, feature detection, and object recognition. We use OpenCV to detect lanes and traffic signals in the frames captured by a Raspberry Pi camera. Additionally, machine learning algorithms are used for traffic signal and stop sign detection.

Our project involves two primary modules: lane detection and traffic signal detection. Lane detection is performed with OpenCV, which processes the frames from the camera and detects the road's edges. The detected lane markers are then used to control the car's steering and speed through motors connected to an Arduino board.

Traffic signal detection is performed using machine learning algorithms. The training dataset consists of traffic signal images captured from different angles and in various lighting conditions. The model is trained to recognize the various traffic signal types and their corresponding meanings.

Our self-driving car prototype is built using a Raspberry Pi as the master device and an Arduino board as the slave device. The Raspberry Pi captures frames from the camera and processes them using the OpenCV and machine learning modules. The Arduino board is responsible for controlling the car's wheel orientation and speed.

We evaluated the performance of our self-driving car prototype by testing it under different lighting conditions to simulate real-world scenarios. The car accurately detected lanes and traffic signals and responded accordingly.

This paper presents a novel approach to designing a self-driving car prototype using OpenCV and machine learning. Our prototype demonstrates the potential of autonomous cars in the future of transportation. The combination of OpenCV and machine learning offers a powerful set of image processing and AI tools that can be used to develop more advanced self-driving car models in the future.

2. HARDWARE AND SOFTWARE USED

For this project, various hardware components are used, such as the Raspberry Pi, Pi camera, Arduino, and L298N H-bridge, along with software tools such as OpenCV, the Arduino IDE, and Cascade Trainer GUI. Here is a brief overview of each hardware and software component used in the project.

2.1 Raspberry Pi

The Raspberry Pi is a small yet powerful single-board computer available at low cost. Its processor speed ranges from 700 MHz on the original models to 1.2 GHz on the Pi 3, and its onboard memory ranges from 256 MB to 1 GB of RAM. The board supports up to 4 USB ports and an HDMI port. It also has several GPIO (General Purpose Input/Output) pins that support protocols such as I²C. The Raspberry Pi 3 supports both Wi-Fi and Bluetooth connectivity, making it highly compatible with other devices. It is also versatile, supporting programming languages such as Scratch and Python. Several operating systems are compatible with the Raspberry Pi hardware, such as Ubuntu MATE and Snappy Ubuntu. Raspbian is designed specifically for the Raspberry Pi's hardware and is a popular choice for projects involving the device [1][2].

2.2 Pi Camera

The Pi camera is a small, capable device that can capture high-quality images and videos. It measures 25 mm × 24 mm × 9 mm and connects to the Raspberry Pi's Camera Serial Interface through a flexible ribbon cable. With a 5-megapixel image sensor and a fixed-focus lens, it provides clear video, which makes it useful for time-lapse and slow-motion footage. The camera is also well suited to security applications because of its wide range of supported image modes; Camera Module v1 takes stills at a resolution of up to 2592 × 1944 and records 1080p30 video [3].

2.3 Arduino

The microcontroller board used in the project is based on the ATmega328P and offers 14 digital input/output pins, of which 6 can be used as PWM outputs, and 6 analog inputs. It also features a 16 MHz quartz crystal, a USB connection, a power jack, an ICSP header, and a reset button. The board has 32 KB of flash memory and 2 KB of SRAM, and weighs approximately 25 g. Additionally, the Arduino IDE has a user-friendly interface and uses a simplified C/C++-based language for coding [4].

2.4 L298N Motor Driver

The L298N motor driver connects the car's motors to the Arduino. Based on the signals it receives from the Arduino, it starts or stops the motors and sets their direction of rotation.

2.5 OpenCV

OpenCV (Open Source Computer Vision Library) is an open-source library widely used for real-time computer vision and machine learning applications. It offers an extensive range of algorithms for computer vision tasks such as object detection, image processing, and face recognition. OpenCV has been used extensively in autonomous vehicles for purposes such as pedestrian detection, lane detection, and object detection. Combined with its machine learning algorithms, OpenCV can be used to recognize and react to different objects and situations on the road, making it an essential tool in autonomous vehicle development [5].

2.6 Arduino IDE

The Arduino IDE is a programming environment used to write code for the Arduino board. Its Verify button compiles the code, and its Upload button transfers the compiled program to the board. Programs written in the Arduino IDE are called sketches and are saved with a .ino extension. The editor also provides features such as saving sketches, including libraries, and a serial monitor. Its built-in functions are easy to use, which makes coding straightforward, and numerous examples are provided for each interface, allowing users to learn more about the functions and hardware.

2.7 Cascade Trainer GUI

Cascade Trainer GUI is a user-friendly program with a graphical interface designed for training object detection models. It is built on the OpenCV library and allows users to create cascade classifiers for various tasks, including face detection, license plate recognition, and more. The GUI makes it easy to select positive and negative images, customize training parameters, and evaluate classifier accuracy. Researchers and developers who need custom object detection models for computer vision applications can benefit from Cascade Trainer GUI.

Cascade Trainer GUI uses the Haar cascade classifier, a machine-learning-based method, to identify objects in images or videos. The classifier is trained on positive images (containing the object) and negative images (without it) to detect objects based on their features.

3. PROPOSED METHODOLOGY

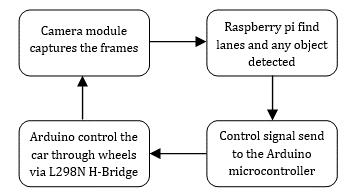

Fig. 5: Proposed self-driving model

The proposed model for the autonomous car relies on a Raspberry Pi connected to a Pi camera for capturing images. Each captured image is sent to a laptop on the same network for processing. The processing produces one of four outputs: left, right, forward, or stop. Once the output is determined, the corresponding signal is sent to the Arduino, which drives the motors to move the car in that direction. This model provides a simple and effective way to control the movement of the autonomous car using computer vision and machine learning techniques.
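The four-way decision described above can be sketched as follows. This is a minimal Python illustration, not the authors' code: the dead band `DEAD_BAND_PX`, the function name `decide`, and the rule that a detected stop sign or red light overrides lane following are assumptions made for the example. The offset is the lane center minus the frame center, as in Section 3.3.

```python
from enum import Enum


class Command(Enum):
    """The four outputs of the processing stage."""
    FORWARD = "forward"
    LEFT = "left"
    RIGHT = "right"
    STOP = "stop"


# Hypothetical tolerance in pixels; the paper does not state a threshold.
DEAD_BAND_PX = 10


def decide(offset_px: int, stop_sign: bool = False, red_light: bool = False,
           dead_band: int = DEAD_BAND_PX) -> Command:
    """Choose a command from the lane offset and the object detector flags.

    offset_px is lane_center - frame_center: a positive value means the lane
    center lies to the right of the car's reference point.
    """
    # Assumed priority: a detected stop sign or red light overrides steering.
    if stop_sign or red_light:
        return Command.STOP
    if offset_px > dead_band:
        return Command.RIGHT  # lane center is to the right, so steer right
    if offset_px < -dead_band:
        return Command.LEFT   # lane center is to the left, so steer left
    return Command.FORWARD    # within tolerance: keep going straight
```

For example, `decide(-25)` returns `Command.LEFT`. The dead band keeps the car from oscillating between left and right when it is already close to the lane center.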

3.1 Camera Setup

The raspicam_cv.h header file was used to access the Raspberry Pi camera and to set frame parameters such as height and width. Image quality was enhanced by adjusting the brightness, contrast, and saturation of the frame, and the number of frames per second was set as required for the project. These settings made real-time camera data available to the project.

3.2 Region of Interest

To keep the vehicle moving between the lane lines on the road, we extracted the lanes by creating a region of interest based on the converging lines in the camera view. To transform the converging lane lines into parallel lines, we used a bird's-eye view approach: a trapezium was defined over the lanes and transformed into a rectangular view [6]. This technique improved lane detection and allowed for more precise vehicle movement on the road.
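The trapezium-to-rectangle step is a perspective transform (homography). In OpenCV it would normally be done with `cv2.getPerspectiveTransform` followed by `cv2.warpPerspective`; the dependency-free sketch below solves the same eight-unknown linear system by hand to show what those calls compute. The corner coordinates `SRC` and `DST` and the 400 × 240 frame size are hypothetical, not the paper's values.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]
Matrix = List[List[float]]


def _solve(a: Matrix, b: List[float]) -> List[float]:
    """Solve a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [list(row) + [rhs] for row, rhs in zip(a, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        s = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - s) / m[r][r]
    return x


def perspective_matrix(src: Sequence[Point], dst: Sequence[Point]) -> Matrix:
    """3x3 homography that maps four src corners onto four dst corners."""
    a: Matrix = []
    b: List[float] = []
    for (x, y), (u, v) in zip(src, dst):
        # u = (h0 x + h1 y + h2) / (h6 x + h7 y + 1), and likewise for v.
        a.append([x, y, 1, 0, 0, 0, -x * u, -y * u])
        b.append(u)
        a.append([0, 0, 0, x, y, 1, -x * v, -y * v])
        b.append(v)
    h = _solve(a, b)
    return [h[0:3], h[3:6], [h[6], h[7], 1.0]]


def warp_point(m: Matrix, p: Point) -> Point:
    """Apply the homography to one point (divide by the projective w)."""
    x, y = p
    w = m[2][0] * x + m[2][1] * y + m[2][2]
    return ((m[0][0] * x + m[0][1] * y + m[0][2]) / w,
            (m[1][0] * x + m[1][1] * y + m[1][2]) / w)


# Hypothetical trapezium on a 400x240 frame (top-left, top-right,
# bottom-right, bottom-left) mapped onto a 200-pixel-wide rectangle.
SRC = [(120, 140), (280, 140), (380, 230), (20, 230)]
DST = [(100, 0), (300, 0), (300, 240), (100, 240)]
M = perspective_matrix(SRC, DST)
```

Because a homography maps straight lines to straight lines, every point on the trapezium's slanted left edge, such as its midpoint (70, 185), lands on the rectangle's vertical edge x = 100. This is why the converging lane lines become parallel in the bird's-eye view.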

3.3 Lane center

The captured frame was divided into two equal halves to determine the left and right lanes. The bottom third of the frame was grouped, and the maximum pixel values were selected; the indexes of the maximum pixel values in a row were treated as the left and right lane positions in their respective halves. We calculated the distance between the two lanes and found its midpoint, which was taken as the lane center. The car's reference point was set to the frame center and used to steer the car in the correct direction between the lanes. The offset of the lane center from this reference point was used to decide whether to steer the car left or right to keep it within the lane.
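One way to realize the lane-center step is sketched below, assuming "grouped" means summing each column of the bottom third into a single row (a column histogram) and taking the index of the maximum in each half. That interpretation and the function names are assumptions; the frame is a plain list of rows so the sketch runs without OpenCV or NumPy.

```python
from typing import Sequence, Tuple

Frame = Sequence[Sequence[int]]  # rows of grayscale/binary pixel values


def lane_positions(frame: Frame) -> Tuple[int, int]:
    """Return the column indexes (left_x, right_x) of the two lane lines."""
    height, width = len(frame), len(frame[0])
    bottom = frame[height - height // 3:]  # bottom third of the frame
    # Collapse the bottom third into one row: column-wise sum of intensities.
    column_sums = [sum(row[c] for row in bottom) for c in range(width)]
    mid = width // 2
    # Index of the maximum in each half = lane position in that half.
    left_x = max(range(mid), key=lambda c: column_sums[c])
    right_x = max(range(mid, width), key=lambda c: column_sums[c])
    return left_x, right_x


def lane_offset(frame: Frame) -> int:
    """lane_center - frame_center in pixels (positive: lane center is to the right)."""
    left_x, right_x = lane_positions(frame)
    lane_center = left_x + (right_x - left_x) // 2  # midpoint between the lanes
    frame_center = len(frame[0]) // 2               # car's reference point
    return lane_center - frame_center


# A hypothetical 12x20 bird's-eye frame with white lane lines at columns 4 and 15.
frame = [[255 if c in (4, 15) else 0 for c in range(20)] for _ in range(12)]
print(lane_positions(frame), lane_offset(frame))  # (4, 15) -1
```

Restricting the sum to the bottom third means bright pixels higher up in the frame, which lie farther ahead of the car, do not pull the detected lane position away from where the wheels currently are.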

3.4 Master and slave device communication

Commands are sent from the Raspberry Pi to the Arduino based on the distance between the lane center and the frame center, triggering actions such as turning left, turning right, or moving forward. The left, right, and forward functions were written in the Arduino IDE, and the speed of the motors was controlled using PWM signals. However, the Arduino's output was not powerful enough to drive the motors, so we used an L298N motor driver connected to an external power supply. The driver received the control signals from the Arduino, allowing the car to move forward or turn left or right as needed.
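The sketch below illustrates how the four commands could map onto the L298N's inputs. It assumes a differential drive with one L298N channel per side, which the paper does not state, and the duty values `CRUISE_DUTY` and `INNER_WHEEL_DUTY` are hypothetical. It is written in Python for consistency with the other examples; on the car, the same table would live in the Arduino sketch, with `analogWrite()` (0-255) on the enable pins ENA/ENB and `digitalWrite()` on IN1-IN4.

```python
from typing import Dict, NamedTuple


class MotorSignal(NamedTuple):
    """Inputs for one motor channel of an L298N driver."""
    in_a: int  # first direction input (IN1 or IN3): 1 = HIGH, 0 = LOW
    in_b: int  # second direction input (IN2 or IN4)
    duty: int  # PWM duty cycle on the enable pin, 0-255 as with analogWrite()


# Hypothetical duty cycles; the paper does not list the values it used.
CRUISE_DUTY = 200
INNER_WHEEL_DUTY = 80


def forward(duty: int) -> MotorSignal:
    # IN_a HIGH and IN_b LOW turns the motor in the forward direction.
    return MotorSignal(1, 0, duty)


# Enable at 0 (LOW): the L298N lets the motor coast to a stop.
STOPPED = MotorSignal(0, 0, 0)

COMMAND_TABLE: Dict[str, Dict[str, MotorSignal]] = {
    "forward": {"left": forward(CRUISE_DUTY), "right": forward(CRUISE_DUTY)},
    # Turning: slow the inner wheel so the car curves toward it.
    "left": {"left": forward(INNER_WHEEL_DUTY), "right": forward(CRUISE_DUTY)},
    "right": {"left": forward(CRUISE_DUTY), "right": forward(INNER_WHEEL_DUTY)},
    "stop": {"left": STOPPED, "right": STOPPED},
}


def drive(command: str) -> Dict[str, MotorSignal]:
    """Look up the left/right motor signals for one of the four commands."""
    return COMMAND_TABLE[command]
```

Slowing the inner wheel rather than reversing it gives gentle corrections, which suits small lane-keeping adjustments; a sharper turn would need a lower inner-wheel duty or a reversed inner motor.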

3.5 Object detection

To detect stop signs and traffic signals, machine learning techniques were applied using the Cascade Trainer GUI tool. This tool enabled the creation of custom object detection classifiers without the need for coding. It provides adjustable parameters such as the number of stages, the minimum hit rate, and the maximum false alarm rate.
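The three training parameters interact multiplicatively. Each stage is trained to keep at least the minimum hit rate of the positives and to pass at most the maximum false alarm rate of the negatives, and a window counts as a detection only if it passes every stage. OpenCV's cascade training documentation therefore estimates the whole-cascade rates as the per-stage rates raised to the number of stages. The values below are illustrative (they match the defaults of OpenCV's `opencv_traincascade` tool), not the settings used in this project.

```python
from typing import Tuple


def cascade_estimates(num_stages: int, min_hit_rate: float,
                      max_false_alarm_rate: float) -> Tuple[float, float]:
    """Estimate whole-cascade rates from the per-stage training targets."""
    overall_hit_rate = min_hit_rate ** num_stages
    overall_false_alarm_rate = max_false_alarm_rate ** num_stages
    return overall_hit_rate, overall_false_alarm_rate


hit, false_alarm = cascade_estimates(num_stages=20, min_hit_rate=0.995,
                                     max_false_alarm_rate=0.5)
print(f"hit rate ~ {hit:.3f}, false alarm rate ~ {false_alarm:.2e}")
```

With these values the estimates are roughly 0.905 and 9.5e-7: adding stages drives false alarms down quickly but also erodes the hit rate, which is why the minimum hit rate per stage is set close to 1.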

3.6 Haar cascade

Haar cascades detect patterns of pixel intensity in an image via Haar features, which are simple rectangular features that capture variations in image intensity. To train the Haar cascade classifier, positive images of the object and negative images of the background without the object were used. During training, many Haar features were generated, and the best ones were selected to differentiate between positive and negative examples. Upon completion, the cascade trainer produced an .xml file that could be loaded into OpenCV with its predefined cascade classifier functions to enable object detection.
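Haar features are cheap to evaluate because of the integral-image trick from the Viola and Jones detector, on which OpenCV's Haar cascades are based: after one pass over the image, the sum of any rectangle takes four lookups. The sketch below shows a generic two-rectangle (edge) feature; OpenCV's internal implementation differs in details such as feature weights and tilted features, and the function names here are illustrative.

```python
from typing import List, Sequence


def integral_image(img: Sequence[Sequence[int]]) -> List[List[int]]:
    """ii[y][x] = sum of img over rows < y and columns < x (zero-padded)."""
    h, w = len(img), len(img[0])
    ii = [[0] * (w + 1) for _ in range(h + 1)]
    for y in range(h):
        row_sum = 0
        for x in range(w):
            row_sum += img[y][x]
            ii[y + 1][x + 1] = ii[y][x + 1] + row_sum
    return ii


def rect_sum(ii: List[List[int]], x: int, y: int, w: int, h: int) -> int:
    """Sum of the w x h rectangle whose top-left corner is (x, y)."""
    return ii[y + h][x + w] - ii[y][x + w] - ii[y + h][x] + ii[y][x]


def two_rect_horizontal(ii: List[List[int]], x: int, y: int, w: int, h: int) -> int:
    """Edge-type Haar feature: left w x h block minus the adjacent right block."""
    return rect_sum(ii, x, y, w, h) - rect_sum(ii, x + w, y, w, h)


# A hypothetical 4x4 patch: bright left half, dark right half (a vertical edge).
edge = [[10, 10, 0, 0] for _ in range(4)]
print(two_rect_horizontal(integral_image(edge), 0, 0, 2, 4))  # 80
```

A uniform patch gives a feature value of 0, while a strong vertical edge gives a large value; training selects the features and thresholds whose values best separate the positive and negative images.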

3.7 Environment setup

The car was designed on a small scale, so the roads, stop signs, and traffic signals were custom-made. The road was designed in black with white lanes to achieve the maximum variation in pixel values when converted to grayscale. Moreover, the stop signs and traffic signals were made small and designed to fit the camera angle and height of the car to ensure accurate detection. These customized designs were critical to achieving high precision in object detection and provided the car with an optimal environment for testing and development.
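The benefit of a black road with white lanes shows up directly in the grayscale conversion. OpenCV's color-to-gray conversion weights the channels as 0.299 R + 0.587 G + 0.114 B, and a binary threshold then keeps only pixels brighter than a cut-off. The pixel values and the threshold of 128 below are hypothetical, and OpenCV's fixed-point arithmetic may differ from this floating-point version by one gray level.

```python
from typing import List, Sequence, Tuple

RGB = Tuple[int, int, int]


def to_gray(pixel: RGB) -> int:
    """Luma with the BT.601 weights used by OpenCV's color-to-gray conversion."""
    r, g, b = pixel
    return round(0.299 * r + 0.587 * g + 0.114 * b)


def binarize(row: Sequence[RGB], threshold: int = 128) -> List[int]:
    """Binary threshold in the style of cv2.THRESH_BINARY: > threshold -> 255."""
    return [255 if to_gray(p) > threshold else 0 for p in row]


# Hypothetical pixel values: black road, white lane paint, dark road stain.
road, lane, stain = (20, 20, 20), (235, 235, 235), (30, 25, 20)
print(to_gray(lane) - to_gray(road))   # 215: large gray-level gap
print(binarize([road, lane, stain]))   # [0, 255, 0]
```

Because the gap between road and lane gray levels is so large, almost any mid-range threshold separates them, which makes the lane mask robust to moderate changes in lighting.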

4. RESULTS

The lane detection module accurately detected the lanes on the road in various lighting conditions. It was able to detect straight and curved lanes and provide real-time feedback on the car's position relative to the lane.

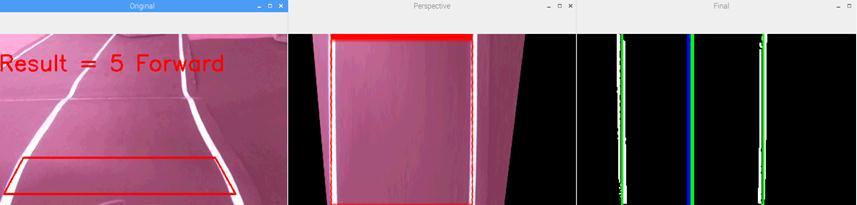

Fig. 6 illustrates the lane detection process with three output frames. The leftmost frame displays the image captured by the Raspberry Pi camera with the region of interest outlined. The center frame shows a perspective-transformed view of the road, resembling a bird's-eye view. The rightmost frame displays the detected lanes, with the lane center and frame center correctly marked. The frames demonstrate the ability to accurately identify the lanes and provide real-time feedback to control the car's movements.

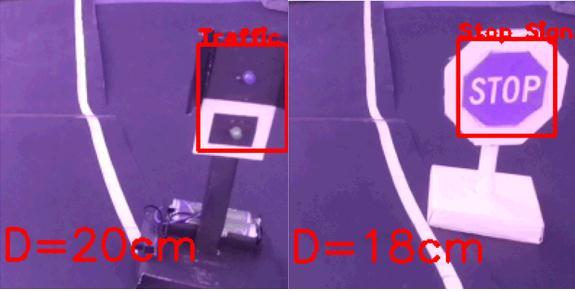

Fig. 7 shows the object detection capabilities of the autonomous car system, including the identification of stop signs and traffic signals. In the leftmost frame, a red rectangle marks the detection of a traffic signal in the captured image, while the rightmost frame displays a red rectangle when a stop sign is detected. Both frames also display the distance between the car and the detected object in centimeters, which supports navigation decisions. The stop sign detection produced accurate distance estimates. Additionally, the traffic signal detection was reliable and activated only when the red light was illuminated, ensuring that the car stops only when necessary for safety.
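The paper does not describe how the distance in centimeters was computed. One common approach for a single camera, shown here purely as an assumption, is the pinhole (similar-triangles) model: calibrate an effective focal length in pixels from one photo of the sign at a measured distance, then divide by the apparent width of each new detection. The width in pixels would come from the `w` of the rectangle returned by OpenCV's `CascadeClassifier.detectMultiScale`; the 5 cm sign width and 30 cm calibration distance are hypothetical.

```python
def focal_length_px(known_distance_cm: float, known_width_cm: float,
                    width_px: float) -> float:
    """Calibrate once: measure the sign's pixel width at a known distance."""
    return width_px * known_distance_cm / known_width_cm


def distance_cm(focal_px: float, known_width_cm: float, width_px: float) -> float:
    """Similar triangles: distance = real width * focal length / apparent width."""
    return known_width_cm * focal_px / width_px


# Hypothetical calibration: a 5 cm wide sign appears 100 px wide at 30 cm.
FOCAL_PX = focal_length_px(known_distance_cm=30, known_width_cm=5, width_px=100)
print(distance_cm(FOCAL_PX, 5, 50))  # 60.0: half the apparent width, twice the distance
```

The estimate is only as good as the detector's bounding box, so a box that is a few pixels too tight or too loose shifts the distance proportionally.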

The autonomous car designed using OpenCV and machine learning successfully navigated a predetermined path with high accuracy and safety. It could detect and respond to changes in the environment such as lane markings, traffic signals, and stop signs. The results showcase the potential of using these technologies for designing autonomous cars.

5. CONCLUSION

In this paper, a method to build a model of a self-driving car is presented. The hardware components, software, and machine learning configuration are described. With the help of image processing and machine learning, a working model was developed that performed as expected.

Aside from transportation, self-driving cars can also have significant benefits in the automation industry. They could be used for patrolling and capturing images, reducing accidents caused by careless drivers of goods carrier vehicles, and ensuring better logistic flow. Public transport buses would be better regulated due to minimal errors. Hence, due to its greater autonomy and efficiency, an autonomous car of this nature can be practical and highly beneficial for better regulation in the goods and passenger transport sectors.

Further research in this area could focus on enhancing the car's capabilities, such as adding modules for obstacle detection, pedestrian detection, and GPS mapping. The car's performance in different weather and lighting conditions could also be investigated. Ultimately, the potential impact of this technology is significant, and it is expected to transform the transportation industry in the coming years.

Overall, the use of machine learning and image processing can help self-driving cars detect and respond to different objects in the environment, which is critical for ensuring safe navigation. With the help of these technologies, successful models have been developed, implemented, and tested. Further research and development in this area hold immense potential for revolutionizing the transportation and automation industries.

REFERENCES

[1] Matt Richardson, Shawn Wallace, Getting Started with Raspberry Pi, 2nd Edition, Maker Media, Inc., USA, 2014. ISBN: 978-1-457-18612-7.

[2] https://www.hongkiat.com/blog/pi-operatingsystems/

[3] https://www.raspberrypi.org/documentation/hardware/camera/

[4] https://www.arduino.cc/en/Reference/Board?from=Guide.Board

[5] Gary Bradski, Adrian Kaehler, Learning OpenCV: Computer Vision with the OpenCV Library, 1st Edition, O'Reilly Media, Inc., September 2008. ISBN: 978-0-596-51613-0.

[6] H. Dahlkamp, A. Kaehler, D. Stavens, S. Thrun, and G. Bradski, Self-supervised monocular road detection in desert terrain, in G. Sukhatme, S. Schaal, W. Burgard, and D. Fox, editors, Proceedings of the Robotics: Science and Systems Conference, Philadelphia, PA, 2006.

[7] S. Swetha, Dr. P. Sivakumar, "SSLA Based Traffic Sign and Lane Detection for Autonomous cars", 2021 International Conference on Artificial Intelligence and Smart Systems (ICAIS), IEEE, April 2021.

[8] G. S. Pannu, M. D. Ansari, and P. Gupta, "Design and implementation of autonomous car using Raspberry Pi", International Journal of Computer Applications, vol. 113, no. 9, pp. 22–29, March 2015.

[9] Yue Wang, Eam Khwang Teoh & Dinggang Shen, Lane detection and tracking using B-Snake, Image and Vision Computing, vol. 22 (2004), pp. 269–280. Available at: www.elseviercomputerscience.com.

[10] Joel C. McCall & Mohan M. Trivedi, Video-Based Lane Estimation and Tracking for Driver Assistance: Survey, System, and Evaluation, IEEE Transactions on Intelligent Transportation Systems, vol. 7, no. 1, March 2006, pp. 20–37.

[11] Xiaodong Miao, Shunming Li & Huan Shen, On-Board lane detection system for intelligent vehicle based on monocular vision, International Journal on Smart Sensing and Intelligent Systems, vol. 5, no. 4, December 2012, pp. 957–972.

[12] Prototype of Autonomous Car Using Raspberry Pi, International Journal of Engineering Research in Electronics and Communication Engineering (IJERECE), Vol. 5, Issue 4, April 2018.

[13] Design and Implementation of Autonomous Car using Raspberry Pi, International Journal of Computer Applications (0975–8887), Volume 113, No. 9, March 2015.

[14] Pratibha I Golabhavi, B. P. Harish, Self-Driving Car Model using Raspberry Pi, International Journal of Engineering Research & Technology (IJERT), Volume 09, Issue 02 (February 2020).

[15] Self-Driving Car using Raspberry-Pi and Machine Learning, International Research Journal of Engineering and Technology (IRJET), Volume 06, Issue 03, March 2019.