MILITANT INTRUSION DETECTION USING MACHINE LEARNING

1,2,3,4Student, Department of Electronics and Communication Engineering, East West Institute of Technology, Bangalore, India

5H.O.D, Department of Electronics and Communication Engineering, East West Institute of Technology, Bangalore, India

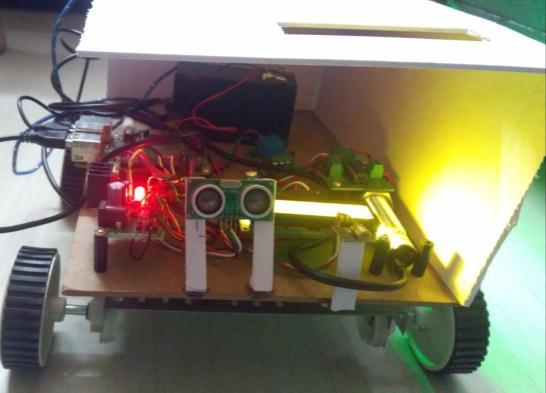

Abstract - This project is used for monitoring and live tracking. The prototype performs live surveillance, monitoring and detecting abnormal events using real-time image processing techniques. The system has three processing modules: the first performs object detection using the YOLO-V5 algorithm, the second performs monitoring, and the third carries out alarm operations.

Key Words: Live-tracking, Live-surveillance, Object detection, Ultrasonic sensor, YOLO-V5 algorithm.

1. INTRODUCTION

The Militant Intrusion Detection System is very important for the military. This system detects weapons, grenades, armored vehicles, land mines, and intruders. The real goal of this system is to increase the accuracy of detection of weapons and intruders. The system works based on the YOLO-V5 algorithm, a subtopic of machine learning. The detection robot consists of a Raspberry Pi that contains the detection program. Once the robot detects a weapon or intruder, it sends a message displayed on the LCD screen. Landmine detection is an additional function of the robot, which is performed with a metal sensor. By using this robot, many attacks can be detected in advance [1], [3], [15].

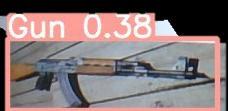

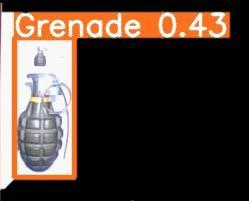

YOLO-V5 (You Only Look Once) is a neural network-based algorithm used to detect and classify objects such as weapons, fire, and water drops. It is popular because of its speed and accuracy. The algorithm creates a bounding box for each object and detects parts of the object. When it detects something in the input that matches a known object, that object is reported as detected [16].

A key technique used in YOLO models is non-maximum suppression (NMS). NMS is a post-processing step used to improve the accuracy and efficiency of object detection. Object detection typically creates multiple bounding boxes for a single object in an image. These bounding boxes may overlap or be in different positions, but they all represent the same object. NMS keeps the highest-scoring box and discards the other boxes that overlap it heavily.
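The suppression step described above can be sketched in plain Python. This is an illustrative sketch only, not the code used in the project: the function names `iou` and `non_max_suppression`, the `(x1, y1, x2, y2)` box layout, and the `iou_threshold` value of 0.5 are assumptions made for this example.

```python
from typing import List, Sequence, Tuple

# Assumed box layout for this sketch: (x1, y1, x2, y2) with x1 < x2, y1 < y2.
Box = Tuple[float, float, float, float]


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def non_max_suppression(
    boxes: Sequence[Box],
    scores: Sequence[float],
    iou_threshold: float = 0.5,  # hypothetical default, tune per dataset
) -> List[int]:
    """Return indices of the boxes to keep, highest score first."""
    # Visit candidates from the most to the least confident.
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    while order:
        best = order.pop(0)
        keep.append(best)
        # Drop every remaining box that overlaps the kept box too much.
        order = [i for i in order if iou(boxes[best], boxes[i]) <= iou_threshold]
    return keep


if __name__ == "__main__":
    boxes = [(10, 10, 50, 50), (12, 12, 52, 52), (100, 100, 140, 140)]
    scores = [0.9, 0.8, 0.7]
    print(non_max_suppression(boxes, scores))
```

In this example the first two boxes cover nearly the same region, so only the higher-scoring one survives, while the third box, which lies elsewhere in the image, is kept as a separate detection.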

1.1 Objectives

This prototype implements the detection of different war-related objects using the YOLO (You Only Look Once) algorithm, which is built on CNN (Convolutional Neural Network) layers.

It detects guns, grenades, and tankers.

Once the system detects the objects, the detection details are stored and alerts are sent to the admin side (control room).

1.2 Problem statement

Develop a military robot that can perform complex tasks in difficult and dangerous environments, such as battlefield surveillance and threat neutralization.

1.3 Motivation

Nowadays, the protection of borders and personnel areas has become very important. Video surveillance plays an important role in real time. Due to these requirements, cameras are installed at every corner, and the video surveillance system analyzes the scene and automatically detects abnormal activities and entrances.

1.4 Existing System

The existing system does not distinguish between normal and abnormal events, resulting in police arriving at crime scenes less and less frequently unless there is visual verification, either by manned patrols or by electronic images from surveillance cameras [12]. Irregularity or anomaly detection is the identification of irregular, unexpected, unpredictable, unusual events or elements that are not considered normally occurring events or regular elements in a pattern or element in a dataset and thus differ from existing patterns [6][10]. An anomaly is a pattern that deviates from a set of standard patterns [14].

2. LITERATURE SURVEY

Peng Zhao and Lingren Kong used the YOLO-V3 algorithm, which was slow and less accurate [5], [9].

Gyanendra K. Verma and Anamika then used the RCNN algorithm, which was faster than the YOLO-V3 algorithm but could not match many similar objects [7], [13], [14].

Ankit Kashyap then used SSD, a CNN-based algorithm, which collects the data and converts it into grayscale images. The converted images are then split into parts, and each part is analyzed separately [10], [12], [16].

Harsh Jain, Ayush Jain, and Ankit Kashyap used deep learning algorithms and developed a model that only detects objects approaching the camera [1].

Arif Warsi developed a model using only a metal sensor and an ultrasonic sensor to identify weapons such as rifles, but it was not able to identify both the weapons and the intruders [2], [19].

Table -1: Literature survey

1. YOLO-v3: A Lightweight Network Model for Improving the Performance of Military Targets Detection

2. A Handheld Gun Detection using Faster RCNN Deep Learning

3. Anomaly Detection in Videos for Video Surveillance Applications using Neural Networks

4. Weapon Detection using Artificial Intelligence and Deep Learning for Security Applications

2020 IEEE Peng Zhao, Lingren Kong

YOLO-v5 algorithm is used for extraction, which is faster than the GhostNet algorithm.

3. PROPOSED METHODOLOGY

The model consists of three phases:

1. Capturing

The image of the object is captured using a USB camera.

The captured video is then divided into frames for analysis [5].

2. Recognition

This phase first deals with object detection (guns, grenades, armoured cars, and intruders).

Object detection is performed based on the input images [1], [3], [4], [6].

3. Alerting

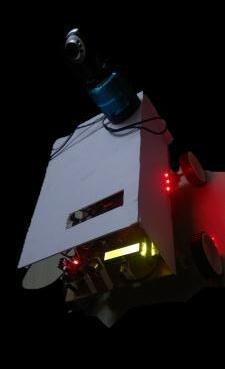

As soon as the objects (guns, grenades, armoured cars) are detected, the RGB light bar turns red [20].

When an intruder enters, the laser light turns ON once and a warning message is sent to the soldiers and displayed on the LCD screen [2].

2021 IEEE Gyanendra K. Verma, Anamika

2022 IEEE Mohana, Vidyashree Dabbagol

YOLO-v5 has a precision of 87.69%. It is more accurate than Faster RCNN.

But in our project, we can detect both weapons and humans, as we have used the YOLO-v5 algorithm.

Fig -1: System Architecture
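The alerting rules of the third phase can be expressed as a small decision function. The sketch below is a hedged illustration, not the project's firmware: the class names in `WEAPON_CLASSES`, the `AlertActions` structure, the `decide_alerts` function, and the LCD text are all hypothetical names chosen for this example, and the actual GPIO calls that drive the RGB bar, laser, and LCD are left out.

```python
from dataclasses import dataclass
from typing import Iterable, Optional

# Hypothetical class labels; a trained model may name its classes differently.
WEAPON_CLASSES = {"gun", "grenade", "armoured car"}
INTRUDER_CLASS = "intruder"


@dataclass
class AlertActions:
    rgb_colour: Optional[str]  # "red" when a weapon is seen, None to leave unchanged
    laser_on: bool             # pulse the laser once when an intruder is seen
    lcd_message: str           # text for the LCD screen, empty if nothing to show


def decide_alerts(labels: Iterable[str]) -> AlertActions:
    """Map the labels detected in one frame to the alert outputs."""
    found = set(labels)
    weapon_seen = bool(found & WEAPON_CLASSES)
    intruder_seen = INTRUDER_CLASS in found
    return AlertActions(
        rgb_colour="red" if weapon_seen else None,
        laser_on=intruder_seen,
        # The wording of the warning is an assumption for this sketch.
        lcd_message="INTRUDER DETECTED" if intruder_seen else "",
    )


if __name__ == "__main__":
    print(decide_alerts(["gun", "intruder"]))
```

Keeping the decision separate from the hardware calls makes the rules easy to check on a desktop machine before they are deployed to the Raspberry Pi.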

4. REQUIREMENTS

4.1 Hardware requirements

2022 IEEE Harsh Jain, Ayush Jain, Ankit Kashyap

To overcome low time flexibility, we have used the YOLO-v5 algorithm.

Camera

RGB LED strip

DC Motor

Motor Driver (H-Bridge)

LCD Display

Metal Sensor

Ultrasonic Sensor

Raspberry Pi

Speaker

Wi-Fi

4.2 Software requirements

Operating system: Windows 10

Software Tool: OpenCV

Coding Language: Python

Tool: Image processing toolbox.

5. IMPLEMENTATION

In this project, solutions are obtained using software and hardware components to achieve the results of Militant Intrusion Detection. Through the YOLO-V5 algorithm, intruders and objects like guns, grenades, and tankers are detected [2], [5], [7].

Making a real-time application using computer vision is found to be an efficient and creative task that demands processing accuracy from the system [1], [6], [3]. OpenCV is a freely available library used to build computer vision applications, and it can be used from programming languages like Python. It supports interfacing with many low-level and high-level peripherals, including cameras [17], [19].

When objects like grenades, guns, and tankers are detected, a message and the captured image are sent through the Telegram app and the RGB sensor strip turns red [4]. Using the YOLO-V5 algorithm, an intruder is detected and displayed on the LCD screen, and the laser light turns ON once. It is a machine learning approach where the cascade function is trained from a lot of images [7][8].

The images are then sent to OpenCV, which detects the objects in them.

6.

The proposed system includes three phases, which are object capturing, recognition, and alerting.

1. Capturing

The camera captures the objects that are in front of the robot.

2. Recognition

The dataset is extracted from the image it captured.

The data is analyzed by pre-processing and feature extraction.

The YOLO-V5 algorithm is applied, which creates the frame on the captured image.

Then it is sent to the alerting area [11].

3. Alerting

After detection, the robot announces the number of detected intruders or weapons through the loudspeaker.

If the environment is safe, the GREEN LED is lit; otherwise, the RED LED is lit.

Then it is displayed on the 7-segment display LCD.
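The counting and LED rule of the alerting phase can be sketched as follows. This is an illustration under stated assumptions rather than the deployed code: the label names, the `summarise_detections` function, and the wording of the announcement are hypothetical, and the speaker and LED drivers are not shown.

```python
from collections import Counter
from typing import Iterable, Tuple

# Hypothetical label names for this sketch.
WEAPON_LABELS = {"gun", "grenade", "tanker"}
INTRUDER_LABEL = "intruder"


def summarise_detections(labels: Iterable[str]) -> Tuple[str, str]:
    """Return (announcement text, LED colour) for one set of detections."""
    counts = Counter(labels)
    intruders = counts[INTRUDER_LABEL]
    weapons = sum(counts[label] for label in WEAPON_LABELS)
    # GREEN means nothing threatening was detected, RED otherwise.
    led = "GREEN" if intruders + weapons == 0 else "RED"
    announcement = f"{intruders} intruder(s) and {weapons} weapon(s) detected"
    return announcement, led


if __name__ == "__main__":
    print(summarise_detections(["gun", "gun", "intruder"]))
```

The returned text could be passed to a text-to-speech tool for the loudspeaker and to the display, while the colour selects which LED to light.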

[4] Gyanendra Kumar Verma et al., “Handheld Gun detection using Faster R-CNN Deep Learning”, IEEE Conference 2019.

Fig -6: Results of Militant Intrusion Detection displayed on the LCD screen

6.1 ADVANTAGES

It has fully automated operations, without any human intervention.

No one can manipulate the data sent by the device to the soldiers.

It is cost effective.

Unknown intruders are detected.

Alerts are raised before an attack takes place.

6.2 FUTURE SCOPE

The system can be further improved by adding an extra feature of human threat detection by identifying the militant from a group of images.

The robot can be equipped to identify concealed weapons and neutralize the threat.

The system can be extended to other domains such as mob management, to handle crowds effectively.

7. CONCLUSION

The proposed project successfully implements a Militant Intrusion Detection System by considering various degrees of threats in the form of automated weapons, grenades, tankers, and artillery systems in a seamless way. This project is a new hope on the battlefield, where human lives are at stake.

REFERENCES

[1] Harsha Jain et al., “Weapon and militant detection using artificial Intelligence and deep learning for security applications”, ICESC 2020.

[2] Arif Warsi et al., “Automatic handgun and knife detection algorithms”, IEEE Conference 2019.

[3] Neelam Dwivedi et al., “Weapon and militant classification using deep Convolutional neural networks”, IEEE Conference CICT 2020.

[5] Abhiraj Biswas et al., “Classification of Objects in Video Records using Neural Network Framework”, International Conference on Smart Systems and Inventive Technology, 2018.

[6] Pallavi Raj et al., “Simulation and Performance Analysis of Feature Extraction and Matching Algorithms for Image Processing Applications”, IEEE International Conference on Intelligent Sustainable Systems, 2019.

[7] Mohana et al., “Simulation of Object Detection Algorithms for Video Surveillance Applications”, International Conference on I-SMAC (IoT in Social, Mobile, Analytics, and Cloud), 2018.

[8] Glowacz et al., “Visual Detection of Knives in Security Applications using Active Appearance Model”, Multimedia Tools Applications, 2015.

[9] Mohana et al., “Performance Evaluation of Background Modelling Methods for Object Detection and Tracking”, International Conference on Inventive Systems and Control, 2020.

[10] Rojith Vajihalla et al., “Inventive system and control for performance evaluation of background modeling method of object detection and tracking”, International conference, 2020.

[11] H R Rohit et al., “A Review of Artificial Intelligence Methods for Data Science and Data Analytics: Applications and Research Challenges”, 2018 2nd International Conference on I-SMAC (IoT in Social, Mobile, Analytics and Cloud), 2018.

[12] Abhiraj Biswas et al., “Classification of Objects in Video Records using Neural Network Framework”, International Conference on Smart Systems and Inventive Technology, 2018.

[13] Pallavi Raj et al., “Simulation and Performance Analysis of Feature Extraction and Matching Algorithms for Image Processing Applications”, IEEE International Conference on Intelligent Sustainable Systems, 2019.

[14] Mohana et al., “Simulation of Object Detection Algorithms for Video Surveillance Applications”, International Conference on I-SMAC (IoT in Social, Mobile, Analytics, and Cloud), 2018.

[15] Yojan Chitkara et al., “Background Modelling techniques for foreground detection and Tracking using Gaussian Mixture model”, International Conference on Computing Methodologies and Communication, 20

[16] N. Jain et al., “Performance Analysis of Object Detection and Tracking Algorithms for Traffic Surveillance Applications using Neural Networks”, 2019 Third International Conference on I-SMAC (IoT in Social, Mobile, Analytics, and Cloud), 2019.

[17] Mohana et al., “Performance Evaluation of Background Modeling Methods for Object Detection and Tracking”, International Conference on Inventive Systems and Control, 2020.

[18] J. Wang et al., “Detecting static objects in busy scenes”, Technical Report TR99-1730, Department of Computer Science, Cornell University, 2014.

[19] V. P. Korakoppa et al., “An area-efficient FPGA implementation of moving object detection and face detection using adaptive threshold method”, International Conference on Recent Trends in Electronics, Information & Communication Technology, 2017.

[20] S. K. Mankani et al., “Real-time implementation of object detection and tracking on DSP for video surveillance applications”, International Conference on Recent Trends in Electronics, Information & Communication Technology, 2016.