Squid Game and Music Synchronization

Abstract - According to a recent InfoTrends study, mobile and camera device users were expected to take more than 1.5 trillion images in 2021, a sharp increase over the 2016 figure. This image data is used in a variety of real-time applications, including video surveillance, object identification, object detection, and classification. Advanced computer vision algorithms, an upgrade over traditional computer vision techniques, were created to manage these enormous volumes of data automatically.

One of the most crucial tasks is object detection, which can greatly enhance the functionality of a variety of computer vision-based applications, including object tracking, license plate detection, mask and social distance detection, etc. To create a comprehensive

Key Words: Detection, Computer vision, Image

1. INTRODUCTION

Saliency and scalable object detection are two recent examples of object detection methods based on computer vision systems. The conventional object detection procedure can be divided into region detection, feature extraction, and classification. These systems have a number of problems with variations in pose, changing lighting settings, and the increased complexity of localizing an object within the given image.

In computer vision, the world is three-dimensional, but the input available to humans and computers is two-dimensional. In addition, computer systems can only process binary data, and some useful programs work only in two dimensions. To take full advantage of computer vision, 3D information is needed, not just a collection of 2D views. With these limited capabilities, 3D information is not portrayed directly and is clearly not as good as what people use. Moreover, designing a robust feature extractor for all types of objects is considered a tedious task because of the many lighting variations. To perform visual recognition, a classification model and library are needed to further distinguish the categories of objects.

Applying these advanced computer vision techniques together with Python libraries such as NumPy and TensorFlow, which support advanced mathematical computation, and with the large ecosystem of Python packages, such as Tkinter for front-end design and development, can solve various real-world problems. Coupled with deep learning algorithms and accurate object detection using YOLO and its successive neural network iterations, they prove to be a boon in improving the accuracy of object detection and tracking and in applying these to the real world.

Games based on augmented reality and virtual reality are currently expensive because of their costly sensors and the high-end processing power they require, and small-scale manufacturers cannot conveniently develop them without sufficient research and development. This is where advanced computer vision comes into the picture: it has proven useful in applications such as yoga pose estimation and posture management for complex exercises, so success in this field offers a way to approach other areas of application.

1.1 Overview

This work is a game implementation motivated by a television series. It is based solely on the camera sensor and on advanced computer vision technologies: TensorFlow for artificial intelligence, NumPy for calculations, and Tkinter for front-end development. We intend to replicate the real-world game played in the scene as a gaming scenario using the cheapest resources possible, showing that immersive games can also be cheap and need no complex consoles.

1.2 Problem Statement

Current augmented reality and virtual reality games are too expensive because they need costly sensors to recognize movements. Training the posture and response time of players, athletes and dancers currently needs experienced human supervision. Motion detection using cameras is not much used in game development because of its accuracy issues, and also because it hinders the profit margin of console developers. Understanding community, and provide mental health guidance.

2. EXISTING SYSTEM

Many types of immersive games, many of them virtual reality and more recently augmented reality applications, have appeared in this basic format, but they are quite expensive because they need expensive sensors as well as customized hardware (consoles) and software.

Previous use cases of computer vision had a very complex process for object detection and tracking. With so many steps needed for detection and its application, they proved to be slower and more processing-intensive, and were unable to compete with their console counterparts.

3. PROPOSED SYSTEM

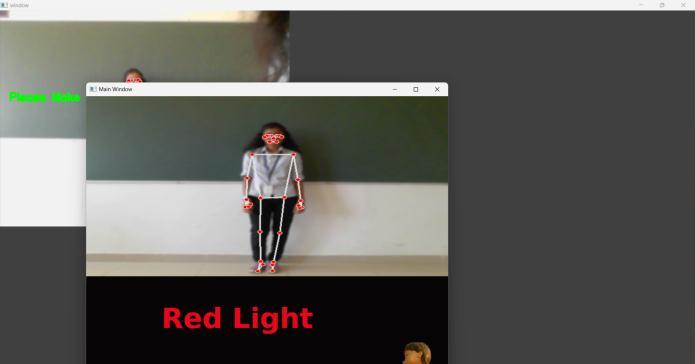

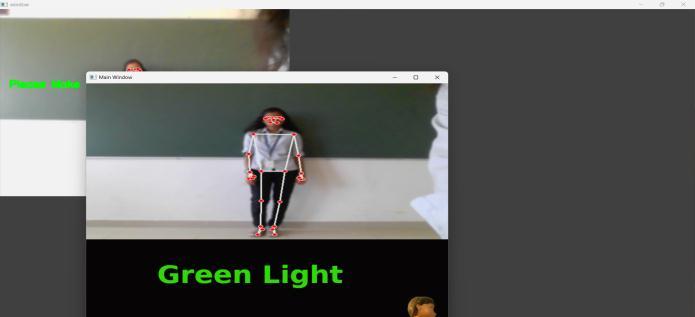

Recent advancements in computer vision and the popularity of Python as the go-to programming language for artificial intelligence and machine learning applications have led many developers to build custom libraries and packages, and improvements in TensorFlow have allowed faster discovery of insights from data. This, together with the lack of intelligence in current-generation gaming consoles, meant that we could try to develop immersive games with just basic sensors and cameras. Furthermore, AR has seen success and acceptance in use cases such as posture and yoga training, trying on glasses, and visualizing a piece of furniture in the living environment. Thus, as a first approach, we intend to replicate a real-world game (Squid Game: Red Light, Green Light) with just the camera backed by artificial intelligence.

4.

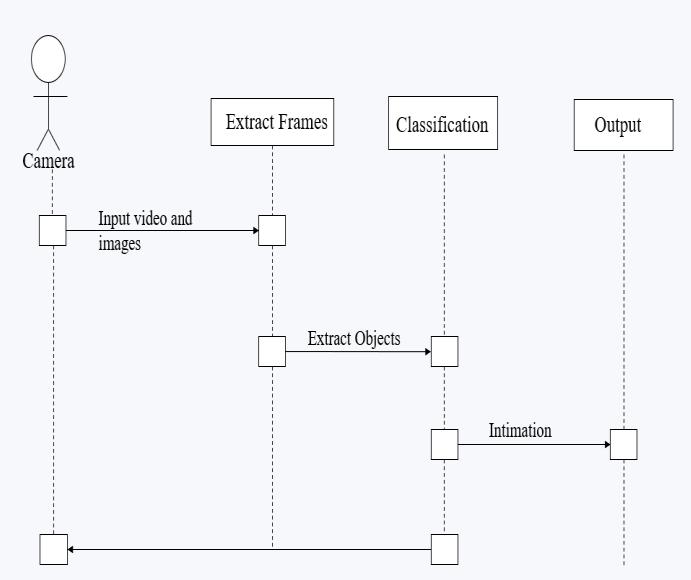

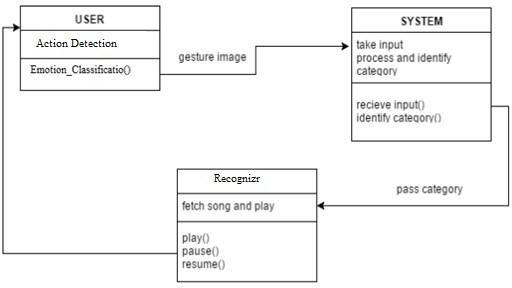

The proposed design for the application of computer vision, artificial intelligence, and machine learning algorithms and libraries.

5. MODULE DESCRIPTION

OpenCV: This research focuses on developing an immersive gaming application with the bare minimum hardware requirements. This is where advanced computer vision comes into the picture: it is used to understand the characteristics of the image frames obtained from a video or a live stream. OpenCV is an open-source library for computer vision that also contains machine learning functionality, and it plays a major role in this work.

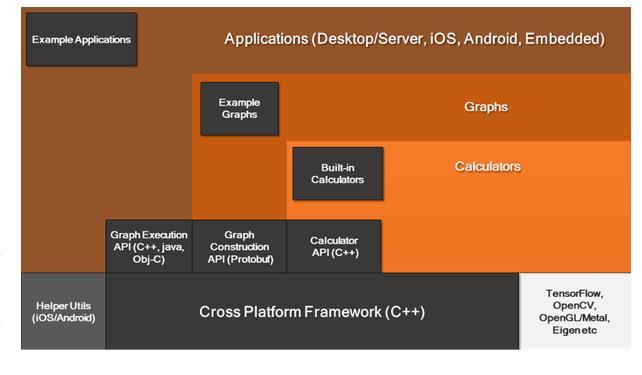

MediaPipe: MediaPipe is a framework built by Google, available as a Python package, for building machine learning pipelines that process time-series data such as video and audio. This cross-platform framework works on desktops, servers, Android and iOS, as well as on embedded devices like the Raspberry Pi. MediaPipe powers products and services that we use daily. Unlike power-hungry machine learning frameworks, MediaPipe requires minimal resources, and its public release opened up a whole new world of opportunity for researchers and developers. The MediaPipe toolkit comprises the Framework and the Solutions; the diagram shows how MediaPipe is organized with its features.

Tkinter: The tkinter package is the standard Python interface to the Tk graphical user interface toolkit. Both Tk and tkinter are available on many platforms and are cross-platform compatible. tkinter ships with most standard Python installations, so it normally needs no separate pip installation; it is imported and used as an object during implementation. In this project we use tkinter to present a view of how the gameplay will proceed, along with button operations, playback of the instructions, and linking of the threading modules.

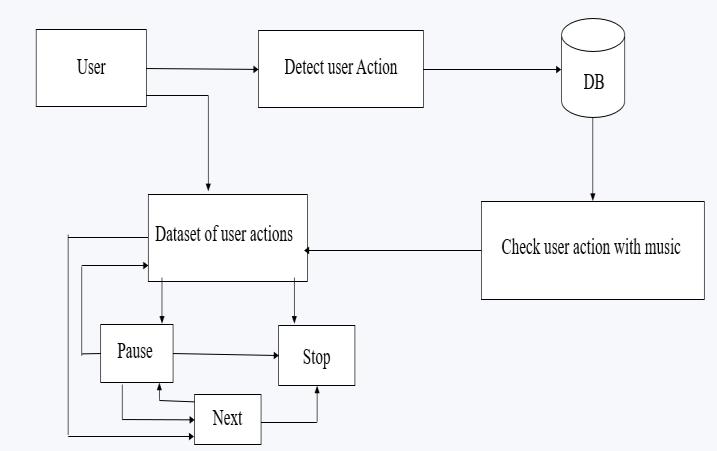

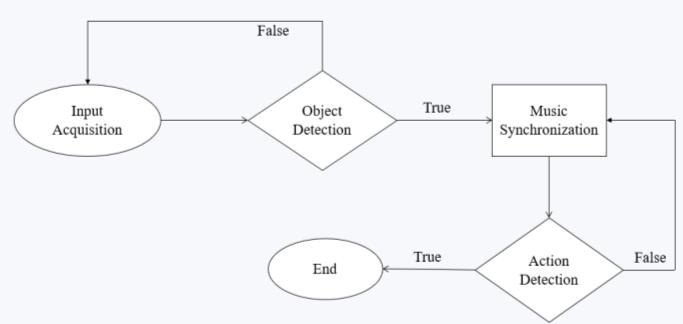

6. FLOWCHART

Multi-Threading: Multi-threading is a way of multitasking using threads. As modern processors can run multiple threads at a time, it is highly beneficial to run different tasks on different threads of a processor to reduce delay and improve performance.
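The idea can be illustrated with a minimal sketch, assuming that instruction playback and frame processing are the two tasks being overlapped. The functions `play_instructions` and `process_frames` are hypothetical stand-ins that only sleep; they are not the project's actual code, and the durations are arbitrary.

```python
import threading
import time


def play_instructions(duration_s, log):
    """Hypothetical stand-in for audio playback; a real game would play a file here."""
    log.append("audio started")
    time.sleep(duration_s)  # simulates the blocking playback call
    log.append("audio finished")


def process_frames(n_frames, frame_time_s, log):
    """Hypothetical stand-in for the camera loop; a real game would analyse frames here."""
    for i in range(n_frames):
        time.sleep(frame_time_s)  # simulates the work done for one frame
        log.append(f"frame {i}")


def run_concurrently(duration_s=0.2, n_frames=4, frame_time_s=0.05):
    """Run both tasks on separate threads and return (event log, elapsed seconds)."""
    log = []
    start = time.perf_counter()
    audio = threading.Thread(target=play_instructions, args=(duration_s, log))
    frames = threading.Thread(target=process_frames, args=(n_frames, frame_time_s, log))
    audio.start()
    frames.start()
    audio.join()   # wait for both workers before reporting
    frames.join()
    return log, time.perf_counter() - start


if __name__ == "__main__":
    events, elapsed = run_concurrently()
    print(events)
    print(f"elapsed: {elapsed:.2f} s")
```

Because the frame loop does not wait for the audio to finish, frames are logged while the playback is still in progress, which is the reduction in delay described above.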

Fig -6: Methodology Flowchart

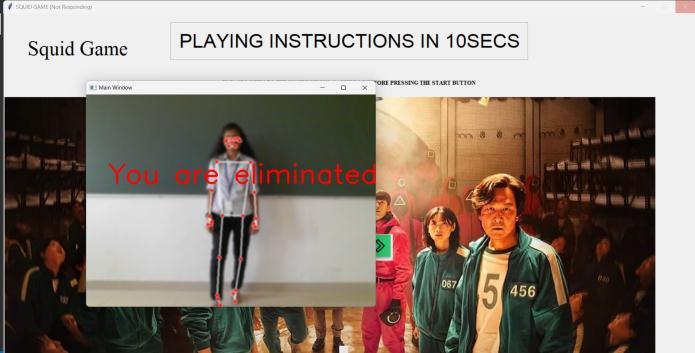

1. In level 0 the user interacts with the graphical interface developed with Tkinter.

2. A recording of the instructions of the game is played via the play-sound module.

3. The start button is connected to the back-end code via an os module (latency is reduced and performance is increased by implementing multithreading).

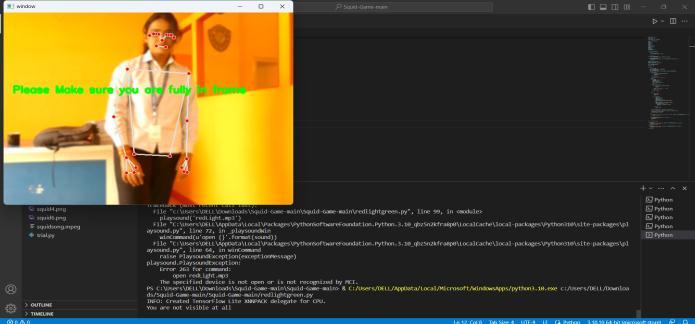

4. When the start button is pressed, OpenCV kicks into action and connects to the camera, displaying the video feed as well as analyzing the frames.

5. Human isolation, detection and segmentation are done by MediaPipe, an ML-based module developed by Google.

6. The action of the user is captured via the landmarks that the MediaPipe module draws as needed.

7. The landmarks are generated as a set of matrices or coordinate equations, which are then fed into the NumPy library and TensorFlow to gather insights from the data.

8. These insights are then analyzed, and a threshold is applied to identify whether the subject is completely visible or not.

9. When the subject (player) is completely visible, the gameplay begins along with music synchronization.
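Steps 7 to 9 can be sketched roughly as follows. This is an illustration under stated assumptions, not the project's code: each landmark is assumed to be an `(x, y, visibility)` tuple with normalised coordinates and a visibility score in [0, 1], the threshold of 0.5 and the three-frame requirement are hypothetical values, and plain Python lists are used instead of NumPy arrays to keep the example self-contained.

```python
from typing import List, Tuple

# Assumed landmark layout: x and y normalised to [0, 1], visibility a score in [0, 1].
Landmark = Tuple[float, float, float]

VISIBILITY_THRESHOLD = 0.5  # hypothetical value, not taken from the paper


def is_fully_visible(landmarks: List[Landmark],
                     threshold: float = VISIBILITY_THRESHOLD) -> bool:
    """Return True when every landmark lies inside the frame and passes the threshold."""
    if not landmarks:
        return False  # no person detected in this frame
    for x, y, visibility in landmarks:
        inside_frame = 0.0 <= x <= 1.0 and 0.0 <= y <= 1.0
        if not inside_frame or visibility < threshold:
            return False
    return True


def should_start_game(frames: List[List[Landmark]],
                      required_consecutive: int = 3) -> bool:
    """Start only after the player is fully visible for several consecutive frames."""
    streak = 0
    for landmarks in frames:
        streak = streak + 1 if is_fully_visible(landmarks) else 0
        if streak >= required_consecutive:
            return True  # a real game would start the gameplay and music here
    return False


if __name__ == "__main__":
    visible = [(0.5, 0.2, 0.9), (0.4, 0.8, 0.7)]
    cut_off = [(0.5, 0.2, 0.9), (0.4, 1.2, 0.7)]  # second landmark is below the frame
    print(should_start_game([visible, visible, visible]))
    print(should_start_game([visible, cut_off, visible]))
```

Requiring several consecutive visible frames is a design choice made for this sketch; it avoids starting the game on a single noisy detection.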

8. CONCLUSIONS

Compared with conventional immersive gaming technologies and the hardware and processing capabilities they need to function effectively, we have proposed bringing artificial intelligence and machine learning into immersive gaming. This was possible because of the earlier success of MediaPipe in exercise posture and yoga pose detection, which facilitated its application in the field of immersive gaming. With MediaPipe and an AI and ML implementation using TensorFlow, the system capabilities needed for an immersive gaming experience are considerably reduced.

ACKNOWLEDGEMENT

We would like to extend our deepest gratitude to our Project Guide, Prof. Suhasini, who guided us and provided us with his valuable knowledge and suggestions on this project and helped us improve our project beyond our limits. Secondly, we would like to thank our Project Coordinators, Dr. Ranjit K N and Dr. H K Chethan, who helped us finalize this project within the limited time frame and constantly supported us. We would also like to express our heartfelt thanks to our Head of Department, Dr. Ranjit K N, for providing us with a platform where we can work on developing projects and demonstrate the practical applications of our academic curriculum. We would like to express our gratitude to our Principal, Dr. Y T Krishne Gowda, who gave us a golden opportunity to do this wonderful project on the topic of ‘Squid Game and Music Synchronization’, which also helped us do a lot of research and learn how to implement it.

BIOGRAPHIES

Suhasini, Professor at Maharaja Institute of Technology Thandavapura, Mysore, Department of Computer Science and Engineering.

Bhavana VR, student at Maharaja Institute of Technology Thandavapura, Mysore. Pursuing a Bachelor of Engineering degree in Computer Science and Engineering.

Kruthik Gowda HR, student at Maharaja Institute of Technology Thandavapura, Mysore. Pursuing a Bachelor of Engineering degree in Computer Science and Engineering.

Ramya BL, student at Maharaja Institute of Technology Thandavapura, Mysore. Pursuing a Bachelor of Engineering degree in Computer Science and Engineering.

Shama R, student at Maharaja Institute of Technology Thandavapura, Mysore. Pursuing a Bachelor of Engineering degree in Computer Science and Engineering.