International Research Journal of Engineering and Technology (IRJET) e-ISSN:2395-0056

Volume: 10 Issue: 04 | Apr 2023 www.irjet.net p-ISSN:2395-0072

Ragavendra S1, Subhash S2, Nishanth B3, Chandan J4

1 Assistant Professor, Department of Computer Science and Engineering, Jnanavikas Institute of Technology, Karnataka, India

2 Undergraduate Student, Department of Computer Science and Engineering, Jnanavikas Institute of Technology, Karnataka, India

3 Undergraduate Student, Department of Computer Science and Engineering, Jnanavikas Institute of Technology, Karnataka, India

4 Undergraduate Student, Department of Computer Science and Engineering, Jnanavikas Institute of Technology, Karnataka, India

Abstract – Skin cancer is one of the deadliest forms of cancer, yet few people are aware of its symptoms or how to prevent it. The aim of this study is to identify and categorize different forms of skin cancer using machine learning and image processing techniques. For this purpose, we built an image pre-processing stage: we reduced the size of the dataset, and the images were resized to fit the input requirements of each model and had hair removed. An EfficientNet B0 model, a convolutional neural network initialized with pre-trained ImageNet weights and modified for this task, was trained on the ISIC skin lesion dataset.

Keywords- Disease Detection, Image Processing, YOLOR, EfficientNetB0, Quantification

INTRODUCTION

Skin cancer, which is on the rise globally, is the sixth most common cancer. Tissues are made up of cells, and the skin is made up of tissues. Cancer is caused by abnormal or unregulated cell growth in these tissues or in other nearby tissues. Numerous factors, such as UV radiation exposure, a weakened immune system, a family history of the disease, and others, may affect the development of cancer. These abnormal growth patterns can produce both benign and malignant tumours. Benign tumours, which are non-cancerous growths, are sometimes mistaken for minor moles. Malignant tumours, on the other hand, are cancers that may spread fatally.

They can also harm the body's other tissues. Basal cells, squamous cells, and melanocytes are the three types of cells that make up the skin's outer layer, and skin cancers develop from these cells.

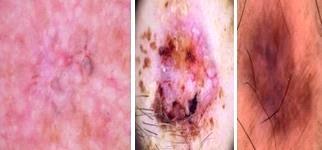

The three most serious forms of skin cancer are melanoma, basal cell carcinoma (BCC), and squamous cell carcinoma (SCC).

Other lesion types include vascular lesions, actinic keratosis (AK), benign keratosis, dermatofibroma, and melanocytic nevus. Melanoma is the most dangerous type of skin cancer, and it can recur even after treatment. The United States and Australia have the highest rates of skin cancer.

In "Automatic Detection of Melanoma with Yolo Deep Convolutional Neural Networks" [1], Yali Nie et al. describe YOLO approaches based on deep convolutional neural networks (DCNNs) for identifying melanoma. These approaches offer important advantages for melanoma detection in lightweight system applications: YOLO's mean average precision (mAP) can come close to 0.82 with just 200 training photographs.
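Mean average precision (mAP), the metric quoted for [1], is the mean over classes of a per-class average precision (AP). The sketch below computes AP for one class using all-point interpolation of the precision-recall curve. It assumes each detection has already been marked as a true or false positive by matching it to ground truth (commonly at an IoU threshold of 0.5); the function names and that matching step are illustrative assumptions, not the evaluation code used in [1].

```python
def average_precision(scores, is_true_positive, num_ground_truth):
    """All-point interpolated AP for a single class.

    scores: confidence of each detection
    is_true_positive: True if the detection matched an unmatched ground-truth box
    num_ground_truth: number of ground-truth objects of this class
    """
    # Rank detections from most to least confident
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    tp = fp = 0
    precisions, recalls = [], []
    for i in order:
        if is_true_positive[i]:
            tp += 1
        else:
            fp += 1
        precisions.append(tp / (tp + fp))
        recalls.append(tp / num_ground_truth)

    # Precision envelope: make precision non-increasing as recall grows
    for k in range(len(precisions) - 2, -1, -1):
        precisions[k] = max(precisions[k], precisions[k + 1])

    # Area under the envelope: precision times each recall increment
    ap, prev_recall = 0.0, 0.0
    for p, r in zip(precisions, recalls):
        ap += (r - prev_recall) * p
        prev_recall = r
    return ap


def mean_average_precision(per_class_ap):
    # mAP is the unweighted mean of the per-class AP values
    return sum(per_class_ap) / len(per_class_ap)
```

For example, three ranked detections (true, false, true) against two ground-truth lesions give AP = 0.5 × 1 + 0.5 × 2/3 = 5/6.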

Another paper, "Real Time-based Skin Cancer Detection System Using Convolutional Neural Network and YOLO" by Hasna Fadhilah Hasya et al. [2], presents a method for real-time skin cancer detection for patients who do not wish to wait for the results of hospital lab tests. The goal is a skin cancer detection algorithm that makes it easier and more efficient for clinicians to analyze skin cancer test findings.

In that system, a convolutional neural network (CNN) processes and aggregates skin cancer image data, and YOLO provides real-time detection. The reported YOLOv3 model achieves a 96% overall accuracy rate and a 99% real-time accuracy rate.

R. S. Shiyam Sundar and M. Vadivel [3] evaluate the performance of early melanoma detection using a skin lesion classification system built on the MVSM classifier. Melanoma is one of the most common and dangerous forms of skin cancer, and early detection is the key to reducing its effects. The proposed method classifies skin lesions into five categories, including actinic keratosis, squamous cell cancer, basal cell cancer, and seborrheic verruca.

In their publication "A tool for annotating dermoscopy datasets" [5], P. M. Ferreira and colleagues present a basic concept for an annotation tool that can improve dermoscopy datasets. Ground-truth images are created under dermatologist supervision using manual segmentation, which supports automated classification and segmentation procedures. The feature extraction phase is essential to any detection system. The main features of this program include image uploading and display, manual segmentation, multi-user ground-truth annotation, border reshaping, segmentation comparison, region labelling, and a posteriori boundary editing.

In "Improved Skin Disease Detection System Feature Selection Capabilities" [6], Vedanti Chintawar and Jignyasa Sanghavi examine various feature extraction techniques and recommend the technique most useful for skin cancer detection applications. The first and most crucial step is hair removal, followed by segmentation using Otsu's method. The proposed system extracts features including wholeness, luminosity, FAST corners, solidity, shape skewness, and border skewness.
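Otsu's method, used for segmentation in [6], chooses the grey level t that best separates two pixel classes by maximizing the between-class variance σ_b²(t) = (μ_T ω(t) − μ(t))² / (ω(t)(1 − ω(t))), where ω(t) is the probability of the darker class, μ(t) its cumulative mean, and μ_T the global mean. A minimal NumPy sketch, assuming an 8-bit grayscale input and that lesions are darker than the surrounding skin (both assumptions, not details taken from [6]):

```python
import numpy as np

def otsu_threshold(gray):
    """Return the Otsu threshold for an 8-bit grayscale image.

    Pixels <= t form one class and pixels > t the other.
    """
    hist = np.bincount(gray.ravel(), minlength=256).astype(float)
    prob = hist / hist.sum()
    levels = np.arange(256)

    omega = np.cumsum(prob)              # probability of the class <= t
    mu = np.cumsum(prob * levels)        # cumulative mean up to t
    mu_total = mu[-1]                    # global mean intensity

    # Between-class variance; undefined where one class is empty
    denom = omega * (1.0 - omega)
    with np.errstate(divide="ignore", invalid="ignore"):
        sigma_b = (mu_total * omega - mu) ** 2 / denom
    sigma_b[denom == 0] = 0.0
    return int(np.argmax(sigma_b))


def segment_lesion(gray):
    # Assumes the lesion is darker than skin, so keep pixels at or below t
    t = otsu_threshold(gray)
    return gray <= t
```

In a full pipeline this would run after hair removal, as [6] orders the steps.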

Hutokshi Sui et al., in "Texture feature extraction for classification of melanoma" [7], examine the texture of skin lesions using grayscale images rather than colour profiles. A gray-level co-occurrence matrix (GLCM) is used to extract features, and a support vector machine (SVM) classifier distinguishes between different types of skin cancer.
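A GLCM counts how often pairs of grey levels occur at a fixed pixel offset, and scalar texture features are then computed from it. The NumPy sketch below builds a normalized, symmetric GLCM and three common Haralick-style features. The quantization to 8 levels, the single horizontal offset, and the chosen feature subset are illustrative assumptions; [7] may use different settings. The resulting feature vectors would then be passed to an SVM.

```python
import numpy as np

def glcm(gray, levels=8, dx=1, dy=0):
    """Normalized, symmetric gray-level co-occurrence matrix.

    gray: 2-D uint8 image, quantized to `levels` grey levels first.
    (dx, dy): non-negative pixel offset; (1, 0) pairs each pixel with its right neighbour.
    """
    q = (gray.astype(np.int64) * levels) // 256      # 0..255 -> 0..levels-1
    h, w = q.shape
    ref = q[0:h - dy, 0:w - dx]                       # reference pixels
    nbr = q[dy:h, dx:w]                               # neighbours at the offset
    m = np.zeros((levels, levels), dtype=float)
    np.add.at(m, (ref.ravel(), nbr.ravel()), 1.0)     # count each pair
    m = m + m.T                                       # count both directions
    return m / m.sum()


def texture_features(p):
    """Haralick-style features from a normalized GLCM p."""
    i, j = np.indices(p.shape)
    return {
        "contrast": float(np.sum(p * (i - j) ** 2)),
        "homogeneity": float(np.sum(p / (1.0 + np.abs(i - j)))),
        "energy": float(np.sum(p ** 2)),
    }
```

A uniform patch yields contrast 0 and energy 1, while alternating dark and bright columns yield high contrast.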

METHODOLOGY

DATASET: In this study, we employed a custom dataset of 3000 images made up of Google photos and skin cancer samples.

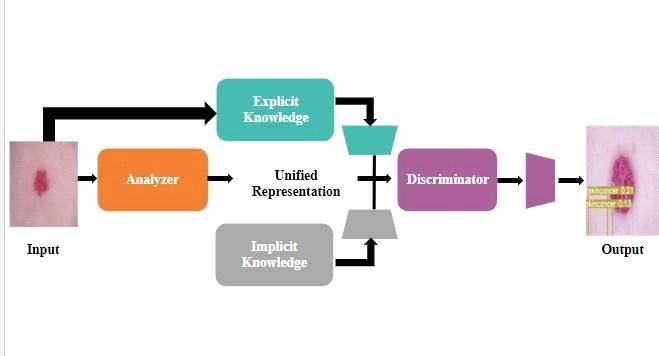

METHOD: YOLOR, short for "You Only Learn One Representation", is a contemporary object detection algorithm. Its authorship, design, and model architecture differ from those of earlier YOLO models.

YOLOR describes itself as a "unified network for concurrently encoding implicit and explicit information". The YOLOR paper, "You Only Learn One Representation: Unified Network for Multiple Tasks", emphasizes the value of leveraging implicit knowledge.
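In the YOLOR paper, implicit knowledge is modelled as learned vectors that do not depend on the input image and are combined with the explicit features the network computes from the image, for example by addition or multiplication. The NumPy sketch below shows only that forward computation around a prediction layer. The class names, the shapes, the near-identity initialization, and the use of a 1×1 convolution as the prediction layer are simplifying assumptions for illustration; this is not the official YOLOR code, and training is omitted.

```python
import numpy as np

class ImplicitAdd:
    """Hypothetical learned per-channel vector added to a feature map."""
    def __init__(self, channels, rng):
        # Initialized near 0 so the layer starts close to an identity mapping
        self.z = rng.normal(0.0, 0.02, size=(channels, 1, 1))

    def __call__(self, x):
        return x + self.z


class ImplicitMul:
    """Hypothetical learned per-channel vector that rescales a feature map."""
    def __init__(self, channels, rng):
        # Initialized near 1, again close to identity
        self.z = rng.normal(1.0, 0.02, size=(channels, 1, 1))

    def __call__(self, x):
        return x * self.z


def prediction_head(x, weight, ia, im):
    """Explicit features x (C, H, W) -> implicit add -> 1x1 conv -> implicit mul."""
    x = ia(x)
    # A 1x1 convolution is a per-pixel matrix product over channels
    y = np.einsum("oc,chw->ohw", weight, x)
    return im(y)
```

Usage: with `rng = np.random.default_rng(0)`, a (4, 5, 5) feature map and a (3, 4) weight matrix produce a (3, 5, 5) output; setting the implicit vectors to exactly 0 and 1 reduces the head to the plain convolution.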

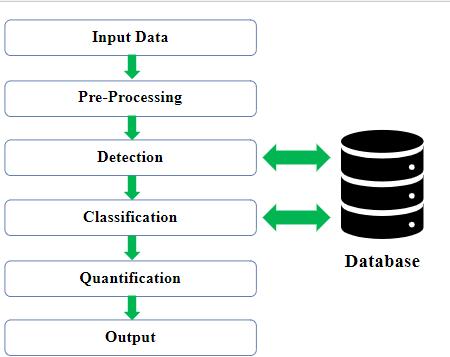

Figure 1 shows a functional diagram of the proposed method. Each block is explained in detail below.

• Input Image: The proposed method is based on the ISIC 2019 Challenge dataset, which consists of nine classes, each with eight images. Researchers at the University of Bristol created the proposed system using a dataset of high-resolution dermoscopic images.

• Pre-processing: Images obtained with the acquisition technique contain a number of discrepancies, which the main pre-processing stage aims to correct. In this step, image quality, clarity, and other attributes are improved by cropping or removing the background and other distracting components. The primary pre-processing procedures are grayscale conversion, noise reduction, and image enhancement. The images used in the proposed approach are first converted to grayscale; the image is then enhanced, and noise is removed using median and Gaussian filters. The goal of image enhancement is to improve an image by increasing its visibility. Skin lesion images often contain body hair, which makes accurate and precise classification challenging. The Dull Razor method is therefore used to remove unwanted hair from the images. The Dull Razor method typically performs the following tasks:

• A grayscale morphological closing operation locates the hair on the skin lesion.

• Each candidate hair pixel is checked to determine whether it belongs to a thin, long structure, and verified hair pixels are replaced using bilinear interpolation.

• The replaced hair pixels are then smoothed with an adaptive median filter.

• Image Augmentation: To support effective skin disease detection, skin images are transformed and simplified through augmentation. Augmenting the training and evaluation image sets helps streamline the model and reduces the likelihood of errors in application. The augmentation step converts each skin image to RGB and applies shifting, rotation, and cropping operations as well as colour transformations to enlarge the dataset. Additional images of both healthy and unhealthy skin are generated in this way to maintain the dataset's size and image quality.

• Feature Extraction: Image features must be extracted to provide a proper basis and suitable boundaries for classification. Image feature vectors can be extracted using a YOLOR [19]-based feature extractor. The extraction procedure assesses the structure, size, shape, colour, and other relevant attributes of the images in the database, which allows the different skin lesion types to be categorized correctly. The extraction step captures the characteristics of diverse wound types and skin disease colours.

• Skin-Based Classification: The main objective of this research is to train a convolutional neural network (CNN) to differentiate skin diseases using a visual database. Two deep learning approaches, based on pre-trained networks including EfficientNet B0, were employed to diagnose the various types of skin cancer. The CNN classification model, which is built on an image processing system, is trained and evaluated on skin image data to identify the skin cancer category.
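The hair-removal steps listed in the pre-processing stage can be sketched in NumPy. This is a simplified, DullRazor-style approximation rather than the original algorithm: it uses horizontal and vertical line structuring elements (the original uses three directions), skips the thin-and-long shape verification, replaces hair pixels with the morphologically closed image instead of bilinear interpolation, and smooths with a fixed 3×3 median instead of an adaptive median filter. The function names, window size, and threshold are illustrative assumptions.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def _filter_1d(img, size, axis, reduce):
    # Max or min filter with a linear window along one axis (edge padding)
    pad = size // 2
    pad_width = [(0, 0), (0, 0)]
    pad_width[axis] = (pad, pad)
    padded = np.pad(img, pad_width, mode="edge")
    windows = sliding_window_view(padded, size, axis=axis)
    return reduce(windows, axis=-1)


def _closing_1d(img, size, axis):
    # Grayscale closing = dilation (max) followed by erosion (min)
    dilated = _filter_1d(img, size, axis, np.max)
    return _filter_1d(dilated, size, axis, np.min)


def _median3x3(img):
    padded = np.pad(img, 1, mode="edge")
    return np.median(sliding_window_view(padded, (3, 3)), axis=(-2, -1))


def remove_hair(gray, size=11, threshold=20):
    """Simplified DullRazor-style hair removal on a 2-D uint8 image."""
    img = gray.astype(float)
    # 1. Generalized closing: max of closings with horizontal and vertical lines
    closed = np.maximum(_closing_1d(img, size, axis=1),
                        _closing_1d(img, size, axis=0))
    # 2. Thin dark structures are where closing raised the intensity a lot
    hair_mask = (closed - img) > threshold
    # 3. Replace hair pixels with the closed (hair-free) estimate
    repaired = np.where(hair_mask, closed, img)
    # 4. Smooth only the replaced pixels with a 3x3 median filter
    smoothed = np.where(hair_mask, _median3x3(repaired), repaired)
    return smoothed.astype(np.uint8), hair_mask
```

Because closing only fills dark structures narrower than the window, a lesion wider than `size` pixels passes through unchanged while a one-pixel hair is removed.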

Figure 3 shows images of early- and late-stage skin lesions, such as actinic keratosis, basal cell carcinoma, dermatofibroma, and others, classified using pre-trained EfficientNet B0 models.

In computer vision, object detection refers to the process of locating, classifying, and identifying one or more objects in a picture. Solving this difficult problem requires methods for object localization, object identification, and object categorization.

Extracting details about the objects and shapes in a photograph requires significant computation for object detection and recognition. In computer vision, identifying an object in an image or video is known as object detection. A variety of methods have been employed in particular applications to identify objects accurately and reliably; however, these solutions are still inefficient and error-prone. Deep neural networks and machine learning approaches handle these object detection problems better.

Object detection has drawn considerable interest in computer vision because of its many applications, including robotics, the medical sector, security tracking, and more. Early object detection methods relied on handcrafted feature extraction techniques such as the histogram of oriented gradients (HOG), speeded-up robust features (SURF), local binary pattern (LBP) texture histograms, and colour histograms. These methods describe an object's attributes with features computed directly from the captured image.
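Local binary patterns, one of the handcrafted descriptors mentioned above, encode each pixel by comparing it with its eight neighbours, and a histogram of the resulting codes describes local texture. A minimal NumPy sketch of the basic 3×3 variant; the function names are illustrative, and it omits the rotation-invariant and uniform-pattern mappings that many practical implementations add.

```python
import numpy as np

def local_binary_pattern(gray):
    """Basic 8-neighbour LBP code (0..255) for each interior pixel."""
    g = gray.astype(np.int32)
    center = g[1:-1, 1:-1]
    # Neighbour offsets in clockwise order starting at the top-left pixel
    offsets = [(-1, -1), (-1, 0), (-1, 1), (0, 1),
               (1, 1), (1, 0), (1, -1), (0, -1)]
    h, w = g.shape
    codes = np.zeros_like(center)
    for bit, (dy, dx) in enumerate(offsets):
        neighbour = g[1 + dy:h - 1 + dy, 1 + dx:w - 1 + dx]
        # Set this bit where the neighbour is at least as bright as the centre
        codes |= (neighbour >= center).astype(np.int32) << bit
    return codes


def lbp_histogram(gray):
    # Normalized 256-bin histogram of LBP codes, used as a texture descriptor
    hist = np.bincount(local_binary_pattern(gray).ravel(), minlength=256)
    return hist / hist.sum()
```

A flat region maps every pixel to code 255, while an isolated bright pixel maps to code 0.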

YOLO is a straightforward, practical, and successful unified object recognition paradigm for full images. It also performs well in applications that depend on fast, precise object recognition. To recognize objects in degraded images, such as noisy or occluded ones, the model is trained on degraded photographs.

Because of its connections to video analysis and image interpretation, object detection has recently attracted a lot of research attention. The conventional approach to object detection is based on handcrafted features and shallow trainable architectures. Combining a range of low-level image features with high-level information from object detectors and scene classifiers is a straightforward technique to enhance the performance of object detectors and scene classifiers.

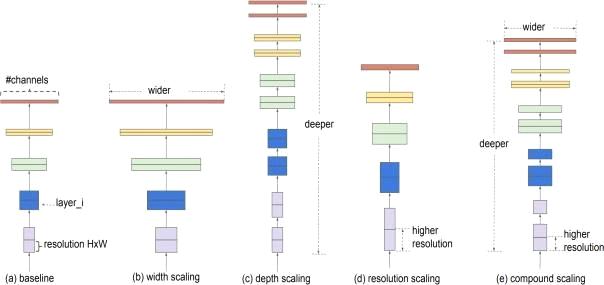

• EfficientNet B0 Architecture:

Among the models used were Xception, InceptionResNetV2, and EfficientNetB0. The selected models achieve high Top-1 accuracy on the ImageNet dataset. All of them used categorical cross-entropy as the loss function and the Adam optimizer.

The second approach used sigmoid-only prediction layers with InceptionResNetV2, Xception, and EfficientNetB3. During training, each model saved a checkpoint of itself at each epoch, recording its balanced accuracy. The average balanced accuracy of the chosen model checkpoints was used to gauge the effectiveness of the strategy. All models with sigmoid activation in their prediction layers were evaluated using the F1-score. Random cropping, rotation, and flipping were used to augment the training set.
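For reference, the loss and optimizer named above can be written out directly. The NumPy sketch below computes softmax probabilities, the categorical cross-entropy over a batch of one-hot labels, and a single Adam parameter update. The hyperparameter defaults are those proposed in the original Adam paper and are an assumption here, not necessarily the settings used in this study.

```python
import numpy as np

def softmax(logits):
    # Subtract the row max for numerical stability
    z = logits - logits.max(axis=1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)


def categorical_cross_entropy(one_hot, probs, eps=1e-12):
    # Batch mean of -sum_k y_k * log(p_k); eps guards against log(0)
    return float(-np.mean(np.sum(one_hot * np.log(probs + eps), axis=1)))


def adam_step(theta, grad, m, v, t, lr=1e-3, beta1=0.9, beta2=0.999, eps=1e-8):
    """One Adam update; t is the 1-based step count."""
    m = beta1 * m + (1 - beta1) * grad          # first-moment estimate
    v = beta2 * v + (1 - beta2) * grad ** 2     # second-moment estimate
    m_hat = m / (1 - beta1 ** t)                # bias correction
    v_hat = v / (1 - beta2 ** t)
    theta = theta - lr * m_hat / (np.sqrt(v_hat) + eps)
    return theta, m, v
```

With uniform predictions over four classes the loss is ln 4 ≈ 1.386, and the first Adam step moves each parameter by roughly `lr` in the direction opposite its gradient.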
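Random cropping, rotation, and flipping, the training-set augmentations named above, can be sketched as follows. The crop fraction, the restriction of rotations to multiples of 90 degrees, and the 50% flip probabilities are illustrative assumptions, not settings taken from this study. Cropped images would still need to be resized to each model's input size.

```python
import numpy as np

def augment(image, rng, crop_frac=0.9):
    """Randomly crop, rotate by a multiple of 90 degrees, and flip an image.

    image: H x W (x C) array; rng: numpy random Generator.
    """
    h, w = image.shape[:2]
    ch, cw = int(h * crop_frac), int(w * crop_frac)
    # Random crop position that keeps the crop inside the image
    top = rng.integers(0, h - ch + 1)
    left = rng.integers(0, w - cw + 1)
    out = image[top:top + ch, left:left + cw]
    # Rotate by 0, 90, 180 or 270 degrees in the image plane
    out = np.rot90(out, k=int(rng.integers(0, 4)), axes=(0, 1))
    if rng.random() < 0.5:
        out = np.fliplr(out)        # horizontal flip
    if rng.random() < 0.5:
        out = np.flipud(out)        # vertical flip
    return out
```

Usage: `augment(img, np.random.default_rng(0))` returns a new random variant on every call, so repeated calls over the training set enlarge it without collecting new images.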

Table 2 lists the various training parameters used to develop the different model classes.

Detection and Classification: The YOLOR model receives images of the affected area and uses them to determine whether or not the skin is cancerous. If the skin is malignant, the affected area is marked with a rectangular bounding box.

Quantification: When a skin cancer image is detected, the detection algorithm analyzes it and generates a heat map that shows the overall proportion of the affected skin region.
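The paper does not specify how the proportion is computed from the heat map. One plausible reading, sketched here purely as an assumption, thresholds a per-pixel score map and reports the fraction of pixels at or above the threshold, either over the whole image or within the detected bounding box. The threshold of 0.5, the box format, and the function names are hypothetical.

```python
import numpy as np

def affected_fraction(heatmap, threshold=0.5, roi=None):
    """Fraction of pixels whose heat-map score reaches `threshold`.

    heatmap: 2-D array of per-pixel scores in [0, 1].
    roi: optional boolean mask (e.g. the detected box) restricting the count.
    """
    affected = heatmap >= threshold
    if roi is None:
        return float(affected.mean())
    return float(affected[roi].sum() / roi.sum())


def box_to_mask(shape, box):
    # Hypothetical box format (x1, y1, x2, y2) in pixels, x2 and y2 exclusive
    x1, y1, x2, y2 = box
    mask = np.zeros(shape, dtype=bool)
    mask[y1:y2, x1:x2] = True
    return mask
```

For a 10×10 map whose top half scores 0.9, the whole-image fraction is 0.5, while the fraction inside a box covering only the top half is 1.0.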

YOLOR performs better than YOLOv4, Scaled YOLOv4, and earlier versions. Its object detection functionality includes feature alignment, multi-task learning, and prediction refinement. The model's output includes the bounding box, the confidence score, and the class to which the object belongs. As deep learning develops, more powerful methods for learning semantic, high-level, and deeper features are becoming available, addressing the shortcomings of conventional architectures. These models vary widely in network architecture, training procedures, optimization strategies, and other factors. This study examines deep learning-based object detection frameworks, which significantly increase a machine's accuracy in object recognition.
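Detectors in the YOLO family typically produce many overlapping candidate boxes, each with a confidence score and class, and a post-processing step such as non-maximum suppression (NMS) keeps the strongest box per object. A minimal greedy, per-class NMS sketch in plain Python; the tuple format and the IoU threshold of 0.5 are assumptions, and this is not the official YOLOR implementation.

```python
def iou(a, b):
    """Intersection over union of boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def non_max_suppression(detections, iou_threshold=0.5):
    """Greedy per-class NMS.

    detections: list of (box, confidence, class_id) tuples.
    Returns the kept detections, highest confidence first.
    """
    kept = []
    for det in sorted(detections, key=lambda d: d[1], reverse=True):
        box, _, cls = det
        # Keep a box unless it overlaps a stronger kept box of the same class
        if all(k[2] != cls or iou(box, k[0]) <= iou_threshold for k in kept):
            kept.append(det)
    return kept
```

Two boxes around the same lesion with IoU 0.81 collapse to the higher-confidence one, while a distant box or a box of a different class is kept.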

REFERENCES

[1] Y. Nie, P. Sommella, M. O'Nils, and C. Liguori, "Automatic Detection of Melanoma with Yolo Deep Convolutional Neural Networks," International Journal of Technical Research and Applications, November 2019.

[2] H. F. Hasya, H. H. Nuha, and M. Abdurohman, "Real Time-based Skin Cancer Detection System Using Convolutional Neural Network and YOLO," International Journal of Technical Research and Applications, September 2021.

[3] R. S. Shiyam Sundar and M. Vadivel, "Performance Analysis of Melanoma Early Detection Using Skin Lession Classification System," International Conference on Circuit, Power and Computing Technologies (ICCPCT), 2016.

[4] J. Ram Kumar, K. Gopalakrishnan, and S. Mohan Kumar, skin cancer diagnosis using two machine learning algorithms, the shearlet transform and a naïve Bayes classifier.

[5] Ferreira, Mendonça, Rozeira, and Rocha, annotation tool for dermoscopy image segmentation, in Proceedings of the First International Symposium on Visual Interfaces for Ground Truth Gathering in Computer Vision Applications, ACM, May 2012, p. 5.

[6] V. Chintawar and J. Sanghavi, "Improved Skin Disease Detection System Feature Selection Capabilities," International Journal of Innovative Technology and Exploring Engineering, Volume 8, Issue 8S3, June 2019.

[7] H. Sui, M. Samala, D. Gupta, and N. Kudu, "Texture feature extraction for classification of melanoma," International Research Journal of Engineering and Technology (IRJET), Volume 05, Issue 03, March 2018.

[8] P. Tschandl, C. Rosendahl, and H. Kittler, "The HAM10000 dataset, a large collection of multi-source dermatoscopic images of common pigmented skin lesions," Scientific Data, vol. 5, 2018.

[9] N. C. F. Codella, D. Gutman, M. E. Celebi, B. Helba, M. A. Marchetti, S. W. Dusza, A. Kalloo, K. Liopyris, N. Mishra, H. Kittler, and A. Halpern, "Skin Lesion Analysis Toward Melanoma Detection: A Challenge at the 2017 International Symposium on Biomedical Imaging (ISBI), Hosted by the International Skin Imaging Collaboration (ISIC)," arXiv:1710.05006, 2017.

[10] M. Combalia, N. C. F. Codella, V. Rotemberg, B. Helba, V. Vilaplana, O. Reiter, A. C. Halpern, S. Puig, and J. Malvehy, "BCN20000: Dermoscopic Lesions in the Wild," arXiv:1908.02288, 2019.

[11] A. K. Panigrahy, N. Arun Vignesh, and C. Usha Kumari, "Detection and feature extraction of sleep bruxism disease using discrete wavelet transform," Lecture Notes in Electrical Engineering, vol. 605.

[12] "Protection of medical picture watermarking," Journal of Advanced Research in Dynamic Control Systems, vol. 9, Special Issue 11, pp. 480-486, 2017.

[13] K. Padmavathi and K. S. R. Krishna, "Myocardial infarction detection using magnitude squared coherence and Support Vector Machine," in MedCom 2014: International Conference on Medical Imaging, m-Health, and Emerging Communication Systems, art. no. 7006037, pp. 382-385.