VOICE RECOGNITION BASED MEDI ASSISTANT

Abstract - The "VOICE RECOGNITION BASED MEDI ASSISTANT" promises to improve healthcare through a paradigm for hands-free and eye-free interaction. However, little is known about the problems encountered during the development of such a system, especially for health-related work with the elderly. To address this, we look at care delivery methods and QoL improvement for the elderly. Finally, we identify key design challenges and opportunities that can arise when integrating voice-based Intelligent Virtual Assistants (IVAs) into the lives of the elderly. Our findings will help practitioners conducting research and development of intelligent devices that utilize full-fledged IVAs to provide better care and support a better quality of life for the aging population.

Key Words: Intelligent Virtual Assistant (IVA), Medi assistant, Chatbot

1. INTRODUCTION

The aging of the population has been recognized as a global problem of the 21st century. Technological approaches to improving healthcare and life-routine management can improve quality of life (QoL). However, obtaining and utilising such breakthrough technologies is frequently difficult for ageing people, who are often left behind. While numerous systems such as Electronic Health Records (EHR) and Patient Portals (PPs) have been widely used to improve health data management and patient-provider communication, it is difficult for ageing populations with multiple comorbidities to adopt, learn, and interact with such tools, which can only be accessed through graphical user interfaces (GUIs) on desktops and mobile devices. The concept of this simple but efficient project emerged from the need to overcome this barrier.

Intelligent Virtual Assistants (IVAs) based on voice allow users to naturally engage with digital systems while remaining hands-free and eye-free. As a result, they are the next game changer for future healthcare, particularly among the elderly. With the rising acceptance of IVAs, researchers have examined how older persons use existing IVA features in smart speakers to enhance their daily routines, as well as potential impediments preventing their adoption.

While these findings mostly focused on older adults' experiences with existing IVA features on a single type of smart-home device, practical demands, challenges, and design strategies for incorporating these devices into older persons' daily lives remain unexplored. Furthermore, while the quality of healthcare delivery and QoL enhancement are controlled by both care providers and patients, previous research solely focused on the patient experience, leaving a huge gap between provider expectations and quality of care delivery.

1.1 Objective Of Research

The goal of research for a voice and machine learning-based medical assistant is to create a reliable system that can help medical professionals diagnose and treat patients, give patients personalized health advice, automate administrative tasks, enhance medical transcription and documentation, and improve the patient experience overall.

2. LITERATURE REVIEW

[1] Angel-Echo: A Personalized Health Care Application. Mengxuan Ma, Marjorie Skubic, Karen Ai, and Jordan Hubbard are the authors:

Technology is continually breaking new ground in healthcare by providing new avenues for medical personnel to care for their patients. Health data can now be collected remotely without limiting patients' independence, thanks to the development of wearable sensors and upgraded types of wireless communication such as Bluetooth low energy (BLE) connectivity. Interactive speech interfaces, such as the Amazon Echo, make it simple for those with fewer technological skills to access a range of data through voice requests, making communication with technology easier. Wearable sensors, such as the Angel Sensor, in conjunction with voice interactive devices, such as the Amazon Echo, have enabled the creation of applications that allow for simple user involvement. The authors propose a smart application that monitors health status by integrating the Angel sensor's data collection capabilities and the Amazon Echo's voice interface capabilities. They also give Amazon Echo voice recognition test results for various populations.

[2] Implementation of interactive healthcare advisor model using chatbot and visualization. Tae-Ho Hwang, JuHui Lee, Se-Min Hyun, and KangYoon Lee are the authors:

The influx of various information has major effects on human lives in the fourth industrial revolution era. The application of artificial intelligence data in the medical industry, in particular, has the potential to influence and affect society. The components required for establishing the interactive healthcare advisor model (IHAM) and chatbot-based IHAM are described in this paper. The biological information of the target users used in the study, such as body temperature, oxygen saturation (SpO2), pulse, electrocardiogram (ECG), and so on, was measured and analysed using biological sensors based on the oneM2M platform, as well as an interactive chatbot to analyse everyday biophysical conditions. Furthermore, the biological information accumulated in the chatbot and biological sensors is sent to users via the chatbot, and the chatbot also provides medical advice to boost the user's overall health.

[3] Chatbot for Healthcare System Using Artificial Intelligence. Lekha Athota, Vinod Kumar Shukla, Nitin Pandey, and Ajay Rana are the authors:

Health care is critical to a happy life. However, it is quite difficult to get a doctor's consultation for every health-related condition. The idea is to use Artificial Intelligence to build a medical chatbot that can diagnose diseases and provide basic information about them before contacting a doctor. This will help to minimise healthcare expenditures while also improving access to medical knowledge via a medical chatbot. Chatbots are computer programmes that engage with users through natural language. The chatbot saves the data in the database in order to recognise the sentence keywords, make a query decision, and respond to the question. N-gram, TF-IDF, and cosine similarity are used to calculate ranking and sentence similarity. Each sentence from the given input will be scored, and more similar sentences will be found for the query. The expert programme, a third party, tackles any question supplied to the bot that is not understood or is not in the database.

[4] IntelliDoctor – AI based Medical Assistant. Dr. Meera Gandhi, Vishal Kumar Singh, and Vivek Kumar are the authors:

IntelliDoctor is a personal medical assistant powered by Artificial Intelligence (AI). This interactive programme analyses symptoms to diagnose and anticipate medical disorders, and offers remedies and ideas depending on the user's inputs in an effort to deliver smart healthcare and make it more accessible. Furthermore, the software tracks users' health activities such as step counts, sleep tracking, heart rate sensing, and other information, and provides users with periodic health reports. It takes into account the numerous exercise activities tracked as well as other criteria such as their age, gender, location, previous medical data, and calorie intake to provide a more accurate analysis. It provides an accurate, comprehensive diagnosis and also functions as a pre-screening instrument for doctors.

[5] Overview of the Speech Recognition Technology. Jianliang Meng, Junwei Zhang, and Haoquan Zhao are the authors:

Speech recognition is a cross-disciplinary field that uses the voice as the research object. Speech recognition enables the machine to convert a speech signal into text or commands via an identification and understanding process, as well as to perform natural voice communication.

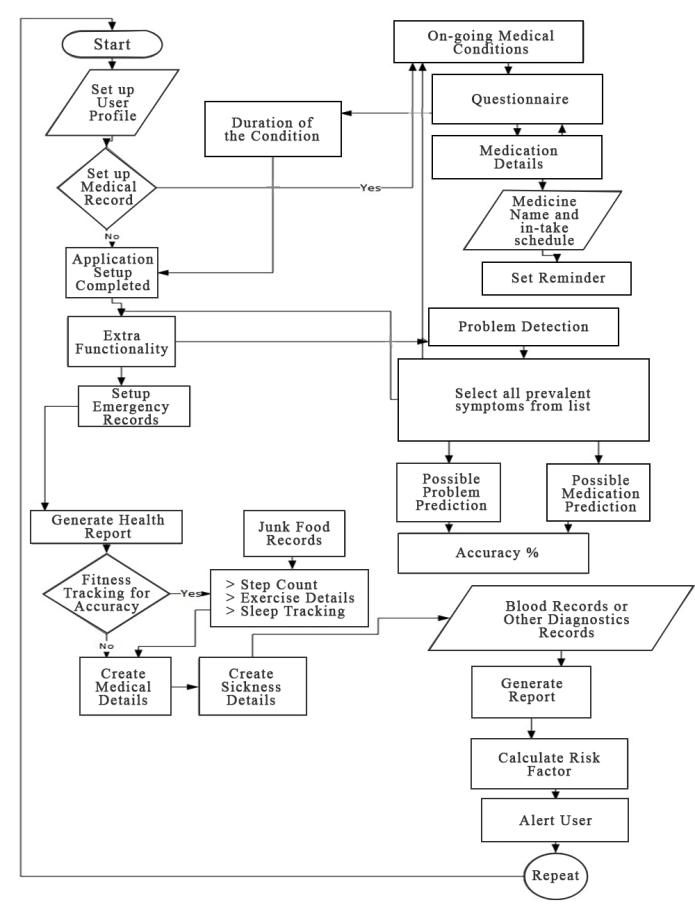

Fig -1: System Flowchart

3. PROPOSED WORK

Conversational virtual assistants, or voice assistants, automate user interactions. Chatbots are powered by artificial intelligence, which uses machine learning to comprehend natural language. The system's primary goal is to assist users with basic health information. When a person initially accesses the website, they must register before they can ask the bot questions. If the answer is not found in the database, the system uses an expert system to respond to the request. Domain experts are also required to register by providing certain details. The chatbot's data is saved in the database as pattern-template data. The database queries in this case are handled by a NoSQL database.

N-gram: The goal is to extend N-gram models through the use of variable-length sequences. A sequence may be a grouping of words, a word class, a grammatical feature, or any other succession of items that the modeller believes to carry important information about language structure. N-grams are employed in this system to extract the pertinent keywords from the database, compress the text, or decrease the amount of data in the document.
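As an illustration of the N-gram step, the following minimal sketch lists every contiguous run of n words in a tokenized sentence. The function name `ngrams` and the sample sentence are assumptions made for this example and are not taken from the implemented system.

```python
from typing import List, Tuple


def ngrams(tokens: List[str], n: int) -> List[Tuple[str, ...]]:
    """Return every contiguous run of n tokens, in sentence order."""
    if n < 1:
        raise ValueError("n must be at least 1")
    # A sentence with fewer than n tokens yields an empty list.
    return [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]


# Illustrative input only.
tokens = ["i", "have", "a", "mild", "fever"]
bigrams = ngrams(tokens, 2)
trigrams = ngrams(tokens, 3)
```

With n = 2 and n = 3 the same helper yields the bigrams and trigrams of the sentence.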

Sentence similarity: To determine how similar two sentences are, cosine similarity is utilised. The more weighted query terms a document shares with the query, the more similar the two are. Since word frequencies cannot be negative, the similarity calculated for two documents falls between 0 and 1.
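Cosine similarity divides the dot product of two term-weight vectors by the product of their lengths. The sketch below is one possible way to compute it over sparse term-to-weight mappings; the helper name and the two sample sentences are assumptions for illustration, and raw word counts stand in for the weights.

```python
import math
from collections import Counter
from typing import Mapping


def cosine_similarity(a: Mapping[str, float], b: Mapping[str, float]) -> float:
    """Cosine of the angle between two sparse term-weight vectors."""
    dot = sum(weight * b.get(term, 0.0) for term, weight in a.items())
    norm_a = math.sqrt(sum(w * w for w in a.values()))
    norm_b = math.sqrt(sum(w * w for w in b.values()))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0  # an empty vector is treated as similar to nothing
    return dot / (norm_a * norm_b)


# Illustrative sentences only; word counts serve as the term weights.
query = Counter("fever and headache".split())
stored = Counter("headache with fever".split())
score = cosine_similarity(query, stored)
```

Here the two sentences share two of their three words, so the score is 2/3; identical sentences score 1 and sentences with no common word score 0.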

Find the matching phrase: The user interface retrieves and displays the answers to the query that were discovered through the aforementioned process.

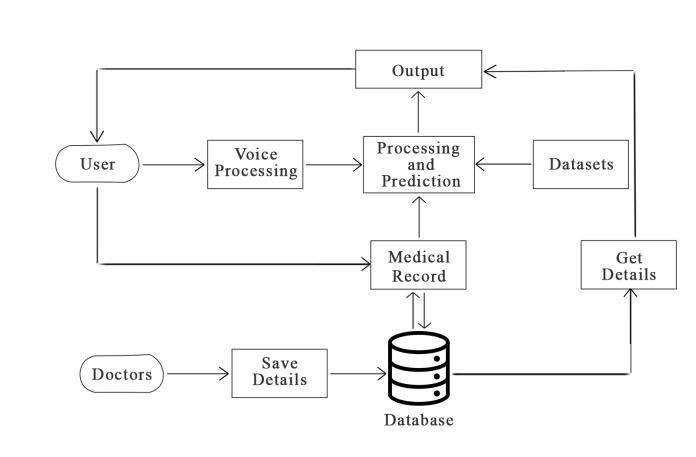

Fig-2 shows the system architecture. The client enters the inquiry into the UI as speech. The user interface receives the user's inquiry and then delivers it to the chatbot programme. The text pre-processing stages in the chatbot application include tokenization, in which the sentence is split into words, followed by stop-word removal and feature extraction based on N-gram, TF-IDF, and cosine similarity. The knowledge database stores the answers to questions so that they can be retrieved.

Tokenization: The word-by-word separation of sentences for easier processing. Whenever the tokenizer encounters one of a list of selected delimiter characters, it splits the text into words. Sentences are broken up into individual words, and all punctuation is removed. The resulting tokens are passed to the next step.

Stop words removal: Stop words are eliminated from sentences in order to extract significant keywords. It is mostly used to eliminate extraneous elements from sentences, such as words that occur far too frequently. Additionally, it is utilised to remove terms like an, a, and the that are unnecessary or have ambiguous meanings. This action is taken to lessen computational complexity or processing time.
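The two pre-processing steps above can be sketched as follows. The stop-word list here is a small illustrative set chosen for this example, not the list used by the system, and the function names are likewise assumptions.

```python
import re
from typing import List

# Illustrative stop-word list; a real deployment would use a fuller one.
STOP_WORDS = {"a", "an", "the", "is", "are", "of", "to", "and", "i", "have"}


def tokenize(sentence: str) -> List[str]:
    """Lower-case the sentence and keep runs of letters and digits,
    which splits on whitespace and drops punctuation in one pass."""
    return re.findall(r"[a-z0-9]+", sentence.lower())


def remove_stop_words(tokens: List[str]) -> List[str]:
    """Keep only the tokens that are not in the stop-word list."""
    return [token for token in tokens if token not in STOP_WORDS]


tokens = tokenize("I have a headache, and the fever is high!")
keywords = remove_stop_words(tokens)
```

For the sample sentence, only the keywords headache, fever, and high remain after both steps.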

N-gram TF-IDF-based feature extraction: Feature extraction is a dimensionality-reduction method that ranks features according to their importance in the document. This phase improves the efficiency and suitability of document processing. It is employed to extract the list of keywords and their frequency within the text.

TF-IDF: The weight of each term in the sentence is determined using Term Frequency and Inverse Document Frequency. Term frequency measures how frequently a word or phrase appears in a sentence.
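Several TF-IDF variants exist, and the text does not state which one is used. The sketch below assumes one common form: term frequency is the term's count divided by the sentence length, and inverse document frequency is the natural logarithm of the number of sentences divided by the number of sentences containing the term. The function name and the sample sentences are illustrative.

```python
import math
from collections import Counter
from typing import Dict, List


def tf_idf(documents: List[List[str]]) -> List[Dict[str, float]]:
    """Return one term-to-weight mapping per tokenized document."""
    n_docs = len(documents)
    # Number of documents in which each term occurs at least once.
    doc_freq = Counter(term for doc in documents for term in set(doc))
    weights = []
    for doc in documents:
        counts = Counter(doc)
        weights.append({
            term: (count / len(doc)) * math.log(n_docs / doc_freq[term])
            for term, count in counts.items()
        })
    return weights


# Illustrative tokenized sentences only.
docs = [["fever", "headache"], ["fever", "cough"], ["rash", "itching"]]
weights = tf_idf(docs)
```

In this example "headache" receives a higher weight than "fever" in the first sentence because "fever" also occurs in the second one. Under this variant, a term that occurs in every sentence receives weight 0.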

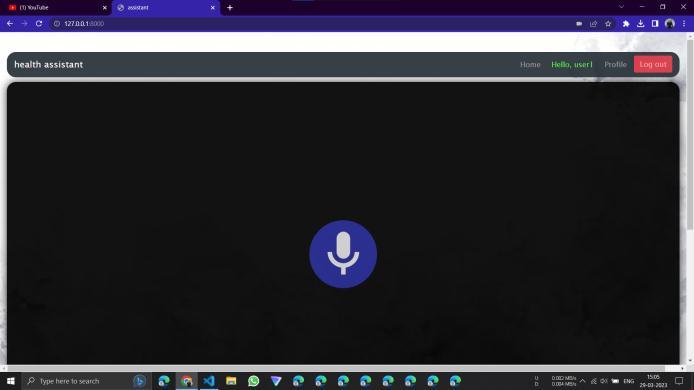

Results and Analysis: The application uses a question-and-answer protocol. It consists of a login page where new users must provide their information to register for the application, a page that displays similar answers to the user's query if one is already in the database, and a page where experts respond to questions directly from users. To speed up query execution, the application leverages bigrams and trigrams in addition to n-gram text compression. N-gram, TF-IDF, and cosine similarity are used to select the responses returned to the users.
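The question-and-answer flow described above could be sketched end to end as follows. This is a simplified illustration under several assumptions: the knowledge base is a small in-memory dictionary with placeholder answers rather than the database, raw counts of unigrams, bigrams, and trigrams stand in for TF-IDF weights, and the `THRESHOLD` below which a query is handed to an expert is a value chosen for the example.

```python
import math
import re
from collections import Counter
from typing import Dict, Optional, Tuple

# Hypothetical stored questions with placeholder answers.
KNOWLEDGE_BASE: Dict[str, str] = {
    "what helps a sore throat": "(stored answer about sore throat)",
    "how to reduce a mild fever": "(stored answer about mild fever)",
}
THRESHOLD = 0.3  # assumed cut-off, not a value reported in this paper


def features(text: str) -> Counter:
    """Count the unigrams, bigrams and trigrams of the lower-cased text."""
    words = re.findall(r"[a-z0-9]+", text.lower())
    grams: Counter = Counter()
    for n in (1, 2, 3):
        for i in range(len(words) - n + 1):
            grams[" ".join(words[i:i + n])] += 1
    return grams


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity of two n-gram count vectors."""
    dot = sum(count * b[gram] for gram, count in a.items())
    norm = (math.sqrt(sum(c * c for c in a.values()))
            * math.sqrt(sum(c * c for c in b.values())))
    return dot / norm if norm else 0.0


def answer(query: str) -> Tuple[Optional[str], float]:
    """Return (answer, score); answer is None when an expert should reply."""
    query_features = features(query)
    best_question, best_score = None, 0.0
    for question in KNOWLEDGE_BASE:
        score = cosine(query_features, features(question))
        if score > best_score:
            best_question, best_score = question, score
    if best_question is None or best_score < THRESHOLD:
        return None, best_score  # not in the database: forward to an expert
    return KNOWLEDGE_BASE[best_question], best_score
```

Calling `answer("How can I reduce a mild fever?")` returns the stored fever answer, while a query that shares no words with any stored question returns `None`, which corresponds to the case where a registered expert responds instead.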

Web technology in use: React is a UI development library based on JavaScript. It is maintained by Facebook and an open-source development community. React is a popular library in web development even though it isn't a language. The library made its debut in May 2013 and is currently one of the most frequently used frontend libraries for web development. The application will use Express.js for server-side development together with MongoDB as its primary database.

4. RESULTS

Systems that engage with patients, respond to their questions about medicine, and offer them medical advice are known as voice recognition-based medical assistants. These technologies are intended to be more effective than more conventional forms of communication, such as phone calls or emails, and they can give patients advice that is more individualised and precise. Machine learning-based disease prediction uses machine learning algorithms to analyse medical data and find patterns that can be used to forecast a patient's likelihood of contracting a specific disease. Large datasets of medical records, genetic information, and other pertinent data can be used to train these algorithms to increase their accuracy and dependability.

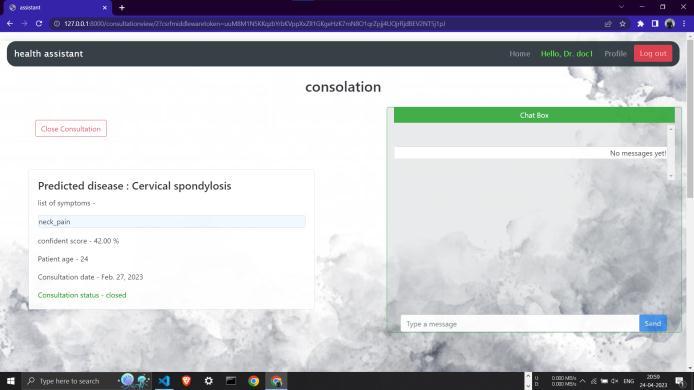

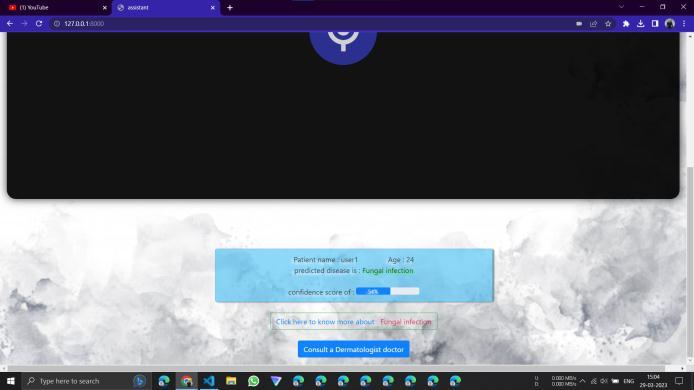

Fig: Results After Diagnosing the Symptoms from User

Fig-4 shows that the chatbot accepts user input and delivers it to the backend ML algorithm, which predicts the results. When the results are computed, the chatbot speaks the output and displays the results along with the required data.

Fig-5 shows the doctor and patient being able to talk about the disease, and the doctor having complete information about the predicted disease.

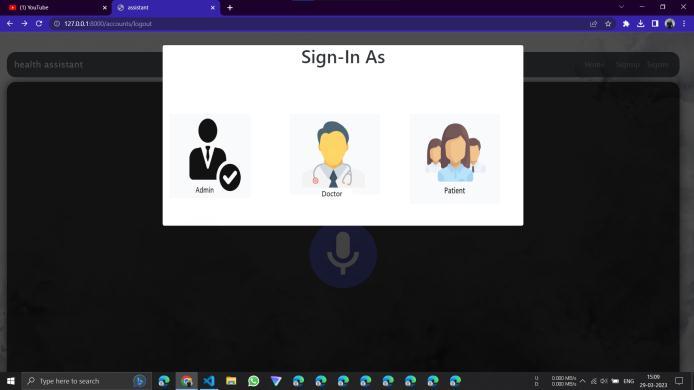

Fig-6 shows the menu through which a user, administrator, or doctor can log into the system; he or she will be logged in again automatically on the following visit. API requests made on behalf of the logged-in user are secured.
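The paper does not say how automatic re-login and secured API requests are implemented. One common approach is a signed token that the client stores and sends with each request. The following is a minimal sketch of that idea, written in Python for consistency with the other examples rather than in the Express.js stack used by the application; the secret key, the token layout, and all function names are assumptions.

```python
import hashlib
import hmac
import time
from typing import Optional

SECRET_KEY = b"replace-with-a-server-side-secret"  # hypothetical value


def _sign(payload: str) -> str:
    """HMAC-SHA256 signature of the payload, as a hex string."""
    return hmac.new(SECRET_KEY, payload.encode(), hashlib.sha256).hexdigest()


def issue_token(user_id: str, role: str, ttl_seconds: int,
                now: Optional[float] = None) -> str:
    """Create a token of the form user|role|expiry|signature."""
    current = time.time() if now is None else now
    payload = f"{user_id}|{role}|{int(current + ttl_seconds)}"
    return f"{payload}|{_sign(payload)}"


def verify_token(token: str, now: Optional[float] = None) -> Optional[dict]:
    """Return the user's identity if the token is authentic and unexpired."""
    try:
        user_id, role, expires, signature = token.split("|")
    except ValueError:
        return None  # wrong number of fields
    expected = _sign(f"{user_id}|{role}|{expires}")
    # Constant-time comparison avoids leaking how many characters matched.
    if not hmac.compare_digest(signature.encode(), expected.encode()):
        return None
    current = time.time() if now is None else now
    if int(expires) < current:
        return None  # token has expired, a fresh login is required
    return {"user_id": user_id, "role": role}
```

In this sketch, a returning visitor whose stored token still verifies is treated as logged in, and the role field lets the server distinguish users, administrators, and doctors.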

5. ADVANTAGES

There are several advantages to using medical assistants based on speech recognition and disease prediction using machine learning in health care. Some of these advantages include:

Improved accuracy and speed of diagnoses: Machine learning algorithms are able to analyze large amounts of medical data to identify trends and predict disease risk, leading to earlier and more accurate diagnoses.

Enhanced patient experience: Voice-activated medical assistants provide patients with instant access to medical advice and information, enabling them to manage their health more effectively.

Increased efficiency: Routine tasks can be automated using voice recognition-based medical assistants, saving medical practitioners time and allowing them to focus on more complex patient care.

Personalized medicine: Individual patient data can be analysed by machine learning algorithms to produce personalised treatment plans based on characteristics such as heredity and lifestyle, resulting in more successful therapies.

Reduced healthcare costs: Voice recognition-based medical assistants and disease prediction using machine learning can help cut healthcare expenses by enabling earlier disease detection and more effective treatments.

Improved healthcare outcomes: Voice recognition-based medical assistants and illness prediction using machine learning can enhance overall healthcare outcomes by offering patients more efficient, accurate, and personalised medical treatment.

Overall, voice recognition-based medical assistants and illness prediction based on machine learning have the potential to transform healthcare by offering more efficient, accurate, and personalised medical treatment while lowering healthcare costs and improving patient outcomes.

6. DISADVANTAGES

Along with the benefits, there are some possible drawbacks to employing voice recognition-based medical assistants and machine learning to forecast disease in healthcare. Here are some of the major drawbacks:

Privacy and security concerns: Patient data collection and storage may pose privacy and security concerns, especially if the data is not properly secured or falls into the wrong hands.

Algorithmic bias: ML algorithms can be biased if the training data is biased, potentially leading to inaccurate predictions or diagnoses and perpetuating healthcare disparities.

Limited access: Some patients may not have access to the technology required to employ voice recognition-based medical assistants, thus leaving them behind.

Technical difficulties: Technical issues, such as voice recognition mistakes or software faults, could result in inaccurate diagnoses or recommendations.

Legal and ethical issues: The employment of voice recognition-based medical assistants and machine learning-based disease prediction poses legal and ethical concerns, such as accountability and liability in the event of an inaccurate diagnosis or advice.

Dependency on technology: The increased reliance on technology may result in less human interaction and empathy, thus compromising patient satisfaction and trust.

Overall, while voice recognition-based medical assistants and illness prediction using machine learning have the potential to transform healthcare, it is critical to address these possible drawbacks to ensure that they are utilised responsibly and ethically.

7. CONCLUSIONS

In conclusion, by giving patients more effective, individualised, and precise medical advice and diagnoses, voice recognition-based medical assistants and disease prediction using machine learning have the potential to revolutionise healthcare. These technologies do, however, also give rise to privacy and data security issues, as well as the risk of algorithmic discrimination and bias. Strong privacy and security protocols, unbiased and trustworthy disease prediction algorithms, and the incorporation of ethical considerations into the development and use of these systems are all necessary to address these concerns and ensure the success of these technologies.

Overall, the integration of these technologies offers hope for the future of healthcare, but it is crucial to approach their development and deployment with care and prudence to guarantee that they are successful.

REFERENCES

[1] Ma, M., Skubic, M., Ai, K., & Hubbard, J. (2017, July). Angel-echo: a personalized health care application. In 2017 IEEE/ACM International Conference on Connected Health: Applications, Systems and Engineering Technologies (CHASE) (pp. 258-259). IEEE.

[2] Hwang, T. H., Lee, J., Hyun, S. M., & Lee, K. (2020, October). Implementation of interactive healthcare advisor model using chatbot and visualization. In 2020 International Conference on Information and Communication Technology Convergence (ICTC) (pp. 452-455). IEEE.

[3] Athota, L., Shukla, V. K., Pandey, N., & Rana, A. (2020, June). Chatbot for healthcare system using artificial intelligence. In 2020 8th International conference on reliability, infocom technologies and optimization (trends and future directions) (ICRITO) (pp. 619-622). IEEE.

[4] Gandhi, M., Singh, V. K., & Kumar, V. (2019, March). Intellidoctor-ai based medical assistant. In 2019 Fifth International Conference on Science Technology Engineering and Mathematics (ICONSTEM) (Vol. 1, pp. 162-168). IEEE.

[5] Meng, J., Zhang, J., & Zhao, H. (2012, August). Overview of the speech recognition technology. In 2012 fourth international conference on computational and information sciences (pp. 199-202). IEEE.
