Automated Media Player using Hand Gesture

Priyadarshini Kannan1, Sayak Bose2, V. Joseph Raymond3

1B.Tech, CSE-IT, SRM University, Kattankulathur, Tamil Nadu. 603203

2B.Tech CSE-IT, SRM University, Kattankulathur, Tamil Nadu. 603203

3Assistant Professor, Dept. of Networking and Communications, SRM University, Tamil Nadu, India

Abstract - An automated media player using hand gestures is a system that allows users to control media playback through the use of hand gestures, without the need for traditional input devices like a mouse or keyboard. The system typically relies on machine learning algorithms and computer vision techniques to interpret user hand gestures and respond accordingly.

The development of this technology has the potential to create a more intuitive and natural user interface for media playback, with potential applications in home entertainment systems, public spaces, and vehicles. Additionally, it can assist people with disabilities who may have difficulty using traditional input devices. This technology represents an exciting development in the field of human-computer interaction, and has the potential to revolutionize the way we interact with media.

Key Words: Interface, Human-computer interaction, Convolutional Neural Network (CNN), SqueezeNet, Real-time image classification, Detect, Pattern recognition, Pooling.

1. INTRODUCTION

An automated media player using hand gestures is a system that allows users to control media playback through the use of hand gestures. This technology is typically powered by machine learning algorithms and computer vision techniques, which allow the system to interpret user hand gestures and respond accordingly.

The idea behind an automated media player using hand gestures is to create a more intuitive and natural user interface for media playback. Instead of relying on traditional input devices like a mouse or keyboard, users can control playback with simple hand gestures.

This technology has numerous potential applications, including in home entertainment systems, public spaces like airports or museums, and in vehicles. It can also be used to assist people with disabilities who may have difficulty using traditional input devices.

Overall, an automated media player using hand gestures is an innovative and exciting development in the field of human-computer interaction, and has the potential to revolutionize the way we interact with media.

The system uses cameras or other sensors to capture the user's hand gestures and translate them into commands for media playback. Users can perform simple hand gestures like swiping, pointing, or grabbing to play, pause, rewind, or fast forward media content.

This technology has several potential applications, including in home entertainment systems, public spaces like airports or museums, and in vehicles. It can also assist people with disabilities who may have difficulty using traditional input devices.

The development of an automated media player using hand gestures has the potential to create a more intuitive and natural user interface for media playback, with the ability to control media playback without the need for traditional input devices like a mouse or keyboard. However, there are also potential challenges and limitations that need to be addressed, such as the need for accurate and robust gesture recognition algorithms and potential privacy concerns related to the collection of user data.

2. Motivation

The motivation behind developing an automated media player using hand gestures is to create a more natural and intuitive way for users to interact with media playback. Traditional input devices like a mouse or keyboard can be cumbersome and require a certain level of dexterity and physical ability, which can be a challenge for some users, especially those with disabilities.

An automated media player using hand gestures offers a more accessible and convenient way to control media playback, without the need for physical contact with shared input devices, potentially reducing the spread of germs or illnesses.

Furthermore, this technology has potential applications in public spaces, vehicles, and other situations where traditional input devices may not be practical, providing a more efficient and convenient way to control media playback.

Additionally, the development of an automated media player using hand gestures offers an opportunity to explore the potential of machine learning algorithms and computer vision techniques in the field of human-computer interaction, potentially leading to new advancements in this field.

Overall, the motivation behind developing an automated media player using hand gestures is to provide a more natural, accessible, and efficient way for users to interact with media playback, while also exploring the potential of new technologies in the field of human-computer interaction.

The innovative idea behind the automated media player using hand gestures is to create a more natural and intuitive way for users to interact with media playback, without the need for traditional input devices like a mouse or keyboard.

The system uses machine learning algorithms and computer vision techniques to interpret user hand gestures and respond accordingly, allowing users to perform simple gestures like swiping, pointing, or grabbing to control media playback.

This technology has several potential applications, including in home entertainment systems, public spaces, and vehicles. It can also assist people with disabilities who may have difficulty using traditional input devices.

The innovation behind this technology lies in its ability to create a more intuitive and natural user interface for media playback, potentially improving the user experience and accessibility for a wider range of users. It also has the potential to reduce the need for physical contact with shared input devices, potentially reducing the spread of germs or illnesses.

Furthermore, the development of an automated media player using hand gestures offers an opportunity to explore the potential of machine learning algorithms and computer vision techniques in the field of human-computer interaction, potentially leading to new advancements in this field.

The objectives of an automated media player using hand gestures are:

1. To create a more intuitive and natural user interface for media playback.

2. To enable users to control media playback without the need for traditional input devices like a mouse or keyboard.

3. To improve accessibility for people with disabilities who may have difficulty using traditional input devices.

4. To provide a more convenient and efficient way to control media playback, especially in public spaces, vehicles, or other situations where traditional input devices may not be practical.

5. To reduce the need for physical contact with shared input devices, potentially reducing the spread of germs or illnesses.

6. To explore the potential of machine learning algorithms and computer vision techniques in the field of human-computer interaction.

7. To address potential privacy concerns related to the collection of user data.

8. To improve the accuracy and robustness of gesture recognition algorithms to ensure reliable and consistent performance.

3. Methodology

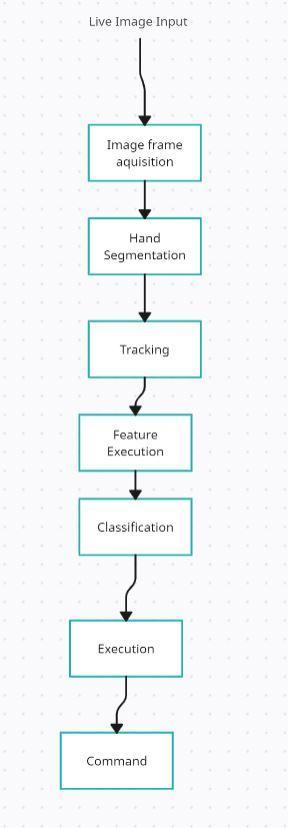

The overall methodology followed in the proposed technique has three stages.

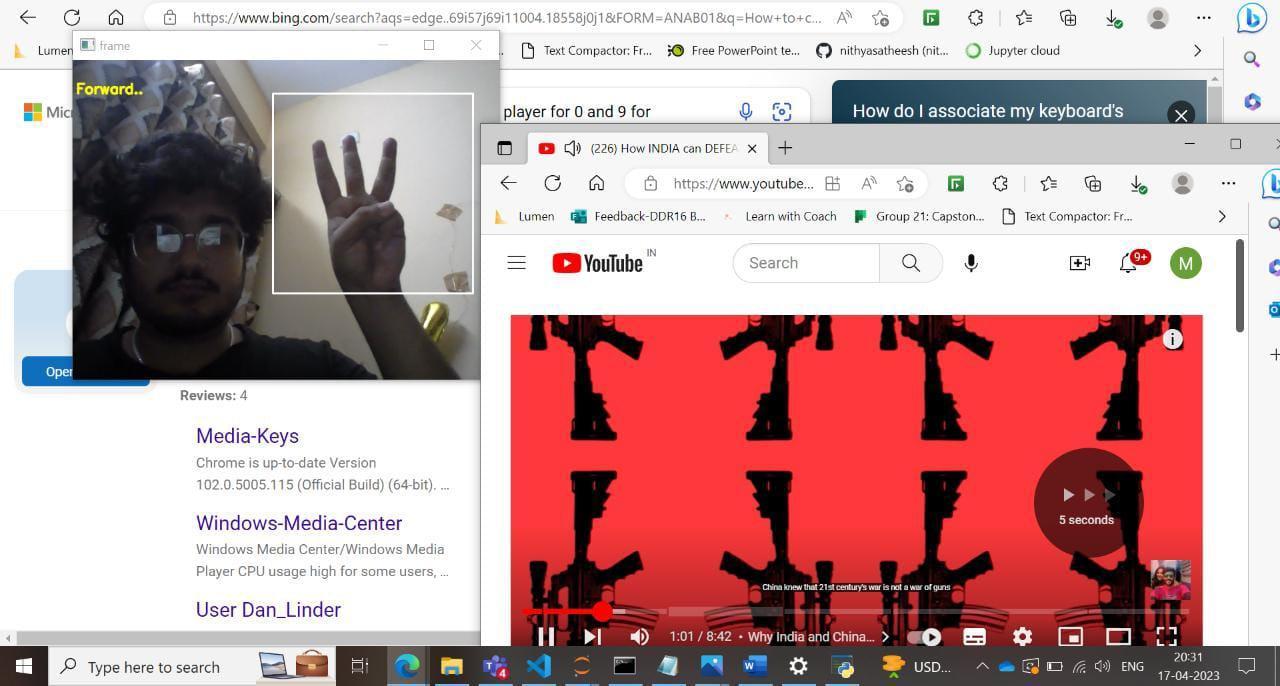

3.1 Gathering the images or dataset from the user

First, we use the ‘OpenCV’ library, which provides a wide range of image and video processing functions, including image filtering, edge detection, feature detection, image segmentation and object tracking. We then gather the images with the functions in the cv2 library. While gathering, we try to collect as many images as possible, since the more data there is, the better the model will be. For example, we create a folder ‘right’ inside the image-gathering folder and collect 1000 images in it that signify ‘right’. Once the images have been gathered, we use the ‘NumPy’ package to save the files.
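The collection step can be sketched roughly as follows. This is a minimal illustration rather than our exact script: the names `frame_path` and `gather_images`, the output folder `image_data`, and the camera index 0 are assumptions made for the example, and frames are written as JPEG files with `cv2.imwrite` for simplicity instead of being saved through NumPy.

```python
from pathlib import Path


def frame_path(root: str, label: str, index: int) -> Path:
    """Return the file path for the index-th image of a gesture label."""
    return Path(root) / label / f"{index:04d}.jpg"


def gather_images(label: str, num_samples: int = 1000,
                  root: str = "image_data", camera_index: int = 0) -> int:
    """Capture up to num_samples webcam frames into root/label.

    Returns the number of frames actually saved.
    """
    # Imported here so the path helper above works without OpenCV installed.
    import cv2

    Path(root, label).mkdir(parents=True, exist_ok=True)
    capture = cv2.VideoCapture(camera_index)
    saved = 0
    try:
        while saved < num_samples:
            ok, frame = capture.read()
            if not ok:  # camera unavailable or stream ended
                break
            cv2.imwrite(str(frame_path(root, label, saved)), frame)
            saved += 1
            cv2.imshow("Collecting " + label, frame)
            # Press q in the preview window to stop early.
            if cv2.waitKey(10) & 0xFF == ord("q"):
                break
    finally:
        capture.release()
        cv2.destroyAllWindows()
    return saved
```

Calling `gather_images("right")` would, under these assumptions, fill `image_data/right` with up to 1000 frames; repeating the call with a different label collects the next gesture class.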

3.2 Training the Model

First of all, we use the ‘SqueezeNet’ model, which is a convolutional neural network (CNN) architecture. We use gitnore to call the SqueezeNet module from GitHub and integrate it into our model. We then use the ‘Keras’ and ‘TensorFlow’ packages to train our model. They are used for feature extraction and classification. TensorFlow in this code provides operations such as pooling, convolution and activation functions. Keras here provides a high-level API for defining, compiling and training the model.
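To illustrate how convolution, pooling and activation layers combine in a SqueezeNet-style classifier, the sketch below builds a much reduced network from Keras layers. It is not the GitHub SqueezeNet module used in our system: the function names, the layer sizes, the 227-pixel input, the optimizer and the loss are all assumptions chosen for the example. The only part taken from the SqueezeNet design is the "fire module", a 1x1 squeeze convolution followed by parallel 1x1 and 3x3 expand convolutions whose outputs are concatenated.

```python
from typing import Dict, Iterable


def build_label_map(labels: Iterable[str]) -> Dict[str, int]:
    """Map gesture folder names to integer class ids in sorted order."""
    return {name: index for index, name in enumerate(sorted(set(labels)))}


def fire_module(x, squeeze_filters: int, expand_filters: int):
    """SqueezeNet fire module: squeeze with 1x1, expand with 1x1 and 3x3."""
    from tensorflow.keras import layers

    squeezed = layers.Conv2D(squeeze_filters, 1, activation="relu")(x)
    expand_1x1 = layers.Conv2D(expand_filters, 1, activation="relu")(squeezed)
    expand_3x3 = layers.Conv2D(expand_filters, 3, padding="same",
                               activation="relu")(squeezed)
    return layers.Concatenate()([expand_1x1, expand_3x3])


def build_model(num_classes: int, input_size: int = 227):
    """Build and compile a small SqueezeNet-style classifier (illustrative sizes)."""
    from tensorflow.keras import layers, models

    inputs = layers.Input(shape=(input_size, input_size, 3))
    x = layers.Conv2D(64, 3, strides=2, activation="relu")(inputs)
    x = layers.MaxPooling2D(pool_size=3, strides=2)(x)
    x = fire_module(x, 16, 64)
    x = fire_module(x, 16, 64)
    x = layers.MaxPooling2D(pool_size=3, strides=2)(x)
    x = fire_module(x, 32, 128)
    x = layers.Dropout(0.5)(x)
    # One output channel per gesture class, averaged over the spatial grid.
    x = layers.Conv2D(num_classes, 1, activation="relu")(x)
    x = layers.GlobalAveragePooling2D()(x)
    outputs = layers.Activation("softmax")(x)

    model = models.Model(inputs, outputs)
    # Integer class ids from build_label_map pair with the sparse loss.
    model.compile(optimizer="adam",
                  loss="sparse_categorical_crossentropy",
                  metrics=["accuracy"])
    return model
```

With images loaded into an array of shape `(n, 227, 227, 3)` and integer labels from `build_label_map`, training would then be a call such as `model.fit(images, labels, epochs=10)`, where the epoch count is again only a placeholder.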

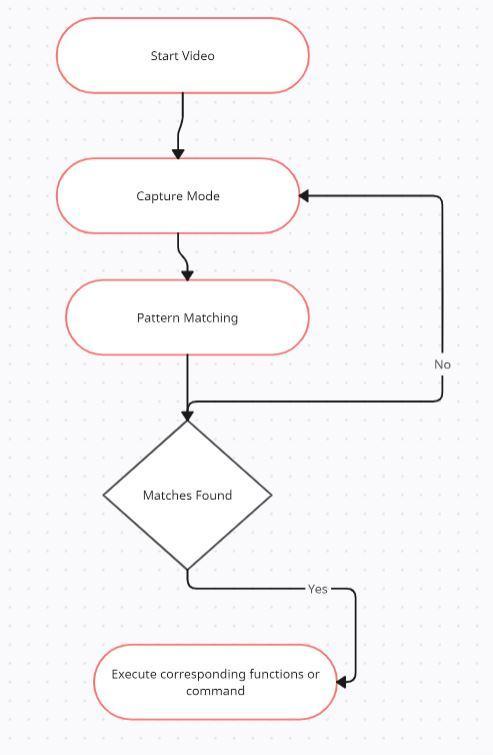

3.3 Using the trained model for predictions

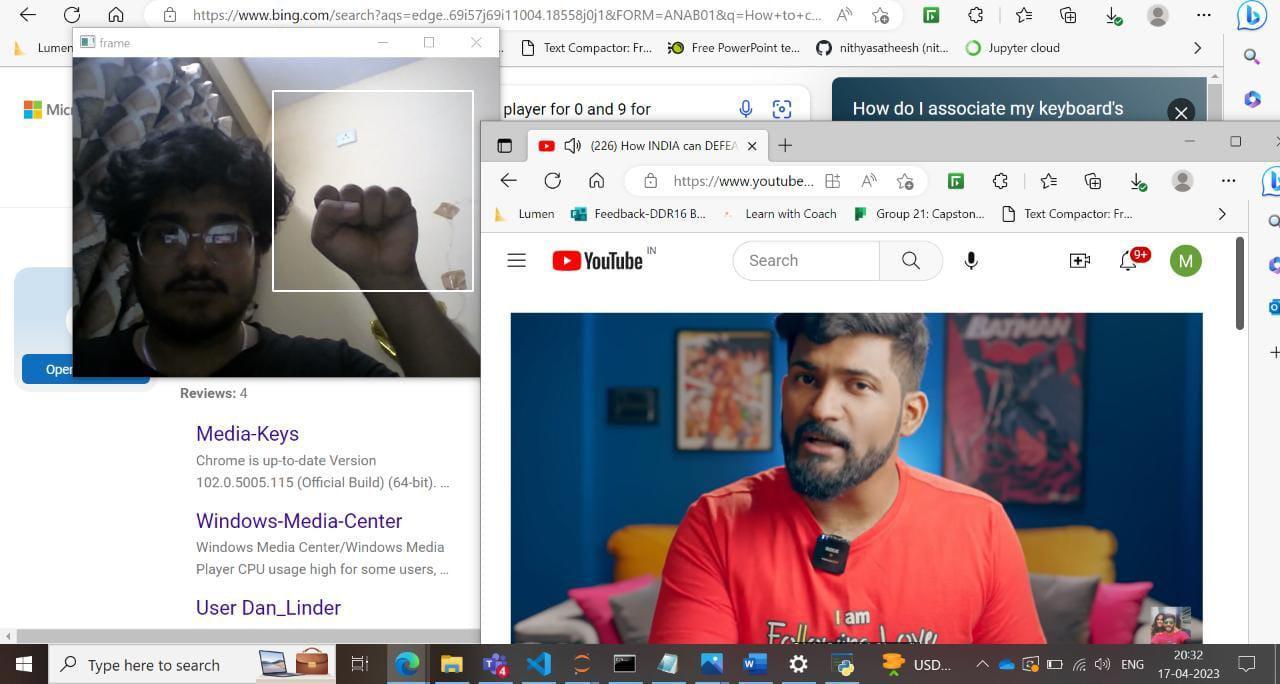

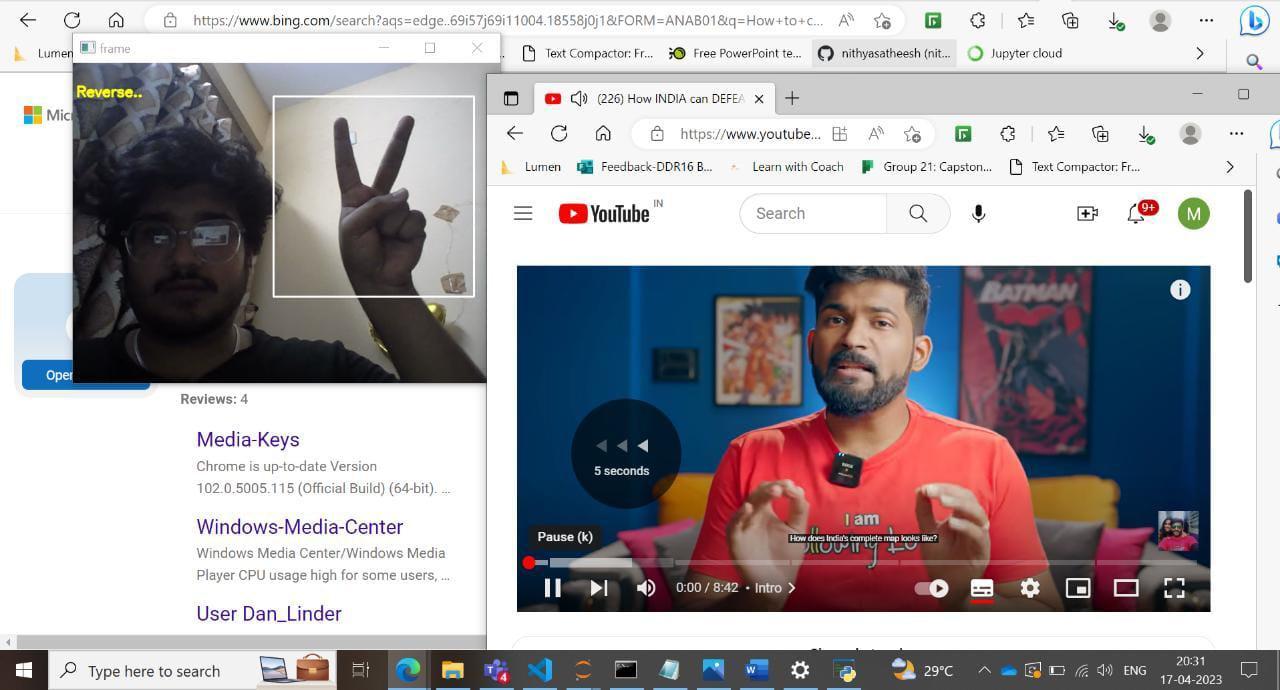

We use ‘pyautogui’ to associate each label with a particular media control function, and then use the trained model to predict the gesture, and thus the label, and trigger the media control function attached to it. Here we attach functions such as ‘nothing’, ‘rewind’ and ‘Forward’ to the data labels. The system then switches on the video feed, takes in live video input, and invokes the attached media functions to control the media player.
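A minimal sketch of this prediction loop is given below. The key bindings in `KEY_FOR_LABEL` are hypothetical (the left and right arrow keys seek in many media players, but the actual keys depend on the player), and the function names, the 227-pixel input size and the division by 255 are assumptions that would have to match whatever preprocessing was used during training.

```python
from typing import Callable, Dict, Optional, Sequence

# Hypothetical bindings: the labels come from the text, the keys are assumed.
KEY_FOR_LABEL: Dict[str, Optional[str]] = {
    "nothing": None,
    "rewind": "left",
    "forward": "right",
}


def act_on_label(label: str, press: Callable[[str], None]) -> Optional[str]:
    """Press the key bound to a predicted label and return it (None if unbound)."""
    key = KEY_FOR_LABEL.get(label)
    if key is not None:
        press(key)
    return key


def run_live(model, class_names: Sequence[str],
             input_size: int = 227, camera_index: int = 0) -> None:
    """Classify webcam frames and forward the mapped key presses to the player."""
    # Imported here so act_on_label can be used without these packages.
    import cv2
    import numpy as np
    import pyautogui

    capture = cv2.VideoCapture(camera_index)
    try:
        while True:
            ok, frame = capture.read()
            if not ok:
                break
            rgb = cv2.cvtColor(frame, cv2.COLOR_BGR2RGB)
            resized = cv2.resize(rgb, (input_size, input_size))
            batch = np.expand_dims(resized.astype("float32") / 255.0, axis=0)
            probabilities = model.predict(batch, verbose=0)[0]
            label = class_names[int(np.argmax(probabilities))]
            act_on_label(label, pyautogui.press)
            cv2.imshow("Gesture control", frame)
            # Press q in the preview window to stop.
            if cv2.waitKey(10) & 0xFF == ord("q"):
                break
    finally:
        capture.release()
        cv2.destroyAllWindows()
```

Passing the key-press function as an argument keeps the label-to-key mapping testable without a camera or a media player. As written, the loop sends a key press on every frame that shows a gesture, so a practical version would likely add a short delay between repeated commands.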

3.4 Accuracy testing

From the ‘tensorflow.keras.models’ package we import the ‘load_model’ function; we then use the ‘evaluate’ function to measure the accuracy, which comes to 89%.

The model’s evaluate function is used to measure the model’s accuracy on the test dataset. This function takes in the test data and labels and returns the test loss and accuracy as output. The verbose argument is set to 2 to print the evaluation process and results to the console.
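The evaluation step might look like the sketch below. The function names and the model path argument are placeholders, and the two-value return from `evaluate` assumes the model was compiled with accuracy as its only metric. The small `accuracy` helper simply spells out the quantity being reported: the fraction of test samples whose predicted label equals the true label.

```python
from typing import Sequence, Tuple


def accuracy(true_labels: Sequence[int], predicted_labels: Sequence[int]) -> float:
    """Fraction of samples whose predicted label matches the true label."""
    if len(true_labels) != len(predicted_labels):
        raise ValueError("label sequences must have the same length")
    if len(true_labels) == 0:
        raise ValueError("at least one sample is required")
    correct = sum(1 for t, p in zip(true_labels, predicted_labels) if t == p)
    return correct / len(true_labels)


def evaluate_saved_model(model_path: str, x_test, y_test) -> Tuple[float, float]:
    """Load a saved Keras model and return (test loss, test accuracy)."""
    # Imported here so the accuracy helper works without TensorFlow installed.
    from tensorflow.keras.models import load_model

    model = load_model(model_path)
    # verbose=2 prints a single summary line for the evaluation run.
    loss, test_accuracy = model.evaluate(x_test, y_test, verbose=2)
    return loss, test_accuracy
```

For instance, three correct predictions out of four test samples give `accuracy([0, 1, 2, 1], [0, 1, 1, 1]) == 0.75`.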

4. System Architecture

In this experiment we used 1000 images of data for 5 different data files. It was seen that the more images we provide, the more smoothly the process goes. The results were in favour of the model, which worked and controlled the media player smoothly. The accuracy came to nearly 90% and increases as we put more data into it.

6. Conclusions and Future work

In conclusion, an automated media player using hand gestures can provide an innovative and convenient way of interacting with multimedia content. This technology utilizes computer vision algorithms to detect and interpret hand gestures, allowing users to control media playback without physical contact with a device. It has the potential to enhance user experience by enabling hands-free operation and increasing accessibility for individuals with disabilities. However, the success of this technology depends on its accuracy and reliability, which can be affected by factors such as lighting, background noise, and user variability. Additionally, there may be concerns regarding privacy and security, as the use of cameras for gesture recognition can raise issues of data collection and surveillance. Overall, while automated media players using hand gestures hold promise for the future, careful consideration must be given to their design, implementation, and ethical implications.

There are several potential enhancements that could be made to an automated media player using hand gestures in the future, including:

1. Improved accuracy and reliability: The accuracy and reliability of hand gesture recognition can be improved by incorporating more advanced computer vision algorithms and machine learning models. This could involve training the system on a larger dataset of hand gestures and incorporating real-time feedback to improve its performance.

2. Gesture customization: Users may have different preferences for the types of hand gestures used to control media playback. Allowing users to customize or personalize the set of recognized gestures could enhance their overall experience with the system.

3. Multimodal interaction: Incorporating other forms of interaction, such as voice commands or physical buttons, could provide users with more flexibility in controlling media playback. This could be particularly useful in noisy or crowded environments where hand gestures may not be practical.

4. Integration with smart home devices: Automated media players using hand gestures could be integrated with other smart home devices, such as lights or thermostats, to provide a more seamless and integrated user experience.

5. Accessibility features: Incorporating accessibility features such as support for sign language gestures or haptic feedback could make the system more accessible and inclusive for individuals with disabilities.

6. Security and privacy features: As the use of cameras for gesture recognition raises concerns regarding privacy and security, future enhancements could include the incorporation of encryption or privacy-preserving techniques to ensure that user data is protected.

Overall, the future enhancements for an automated media player using hand gestures will depend on continued advancements in computer vision and machine learning, as well as a focus on user experience and privacy concerns.
