Object Detection and Tracking AI Robot

Jayati Bhardwaj1, Mitali2, Manu Verma3, Madhav4

1,2,3,4 Department of CSE, MIT, Moradabad, U.P., India

Abstract: The task of object detection is essential in the fields of robotics and computer vision. This paper's goal is to provide an overview of recent developments in object detection utilizing AI robots. The study explores several object detection techniques, including deep learning-based methods and their drawbacks. The study also gives a general overview of how object detection is used in practical contexts like robots and self-driving cars. The advantages of deploying AI robots for object detection over conventional computer vision techniques are the main topics of discussion.

I. INTRODUCTION

Artificial intelligence (AI) and its uses in robotics have attracted increasing attention in recent years [5]. Giving robots the ability to detect and recognize items in their environment is one of the key challenges in robotics. As the name suggests, object detection is the procedure of locating objects in an image or video frame and drawing bounding boxes around them [3]. Robots need this capability to carry out a variety of activities, including grasping, manipulating, and navigating [7], [9].

Traditionally, object detection has relied on hand-crafted features such as SIFT and HOG combined with machine learning methods such as SVM and Random Forest [14]. However, these techniques have shortcomings, particularly in terms of efficiency and accuracy.

In this paper, we present a comprehensive examination of the state of the art in object detection utilizing AI robots. We concentrate on recent advances in the area, discussing both conventional and deep learning-based approaches and highlighting their advantages and disadvantages [15]. We also go over numerous robotics uses for object detection, like grasping, manipulating, and navigation, and we give some insight into the difficulties and potential future directions of this field of study.

The goals of this paper are to offer an overview of the subject's current state and to encourage more research and growth in it.

II. LITERATURE REVIEW

Object identification algorithms for AI robots have been under development for many years. Early object detection techniques relied on hand-crafted features, such as the scale-invariant feature transform (SIFT), histograms of oriented gradients (HOG), and speeded-up robust features (SURF) [6]. These methods have been widely applied to AI robots for object detection; however, they have a number of shortcomings. For example, they are sensitive to the size and orientation of objects and have trouble capturing intricate shapes and textures.

The use of deep learning-based object detection techniques has increased recently [9]. These convolutional neural network (CNN)-based techniques have been shown to outperform more traditional feature-based methods in a variety of object identification tasks. The three most prevalent deep learning-based object detection techniques are region-based convolutional neural networks (R-CNN), You Only Look Once (YOLO), and single shot multi-box detectors (SSD). Because they are trained end to end, these techniques can learn object features automatically and carry out object detection at the same time.

Dealing with occlusions, where objects are partially or fully obscured from view, is one of the difficulties in object detection. Several approaches have been put forth to deal with this problem, such as attention-based approaches, where the AI robot focuses on the portions of the image that are the most pertinent, and occlusion-aware approaches, where the AI robot takes the occlusions into account when performing object detection.

X. Zhang, Y. Yang, et al. [1] described a technique for object detection and tracking in outdoor settings that makes use of a mobile robot with a camera and a LiDAR sensor. The suggested approach combines a Kalman filter for object tracking with a deep neural network for detection.
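To illustrate the tracking half of such a pipeline, the following is a minimal sketch of a constant-velocity Kalman filter applied to a single coordinate of a detected object (for example, the x position of a bounding-box centre). It is not the implementation from [1]; the class name `KalmanTrack1D`, the noise values `q` and `r`, and the initial covariance are assumptions chosen for illustration only.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class KalmanTrack1D:
    """Constant-velocity Kalman filter for one coordinate of a tracked object."""
    pos: float          # estimated position (e.g. box centre x, in pixels)
    vel: float = 0.0    # estimated velocity (pixels per frame)
    # 2x2 state covariance stored row-major: [p00, p01, p10, p11] (assumed start)
    p: List[float] = field(default_factory=lambda: [100.0, 0.0, 0.0, 100.0])
    q: float = 0.01     # process noise added per step (assumed value)
    r: float = 4.0      # measurement noise variance (assumed value)

    def predict(self, dt: float = 1.0) -> float:
        """Propagate the state one step with F = [[1, dt], [0, 1]]."""
        self.pos += self.vel * dt
        p00, p01, p10, p11 = self.p
        # P <- F P F^T + Q, with Q = diag(q, q)
        self.p = [
            p00 + dt * (p01 + p10) + dt * dt * p11 + self.q,
            p01 + dt * p11,
            p10 + dt * p11,
            p11 + self.q,
        ]
        return self.pos

    def update(self, z: float) -> float:
        """Correct the state with a measured position z (H = [1, 0])."""
        p00, p01, p10, p11 = self.p
        s = p00 + self.r              # innovation variance
        k0, k1 = p00 / s, p10 / s     # Kalman gain
        y = z - self.pos              # innovation
        self.pos += k0 * y
        self.vel += k1 * y
        # P <- (I - K H) P
        self.p = [(1 - k0) * p00, (1 - k0) * p01,
                  p10 - k1 * p00, p11 - k1 * p01]
        return self.pos


if __name__ == "__main__":
    track = KalmanTrack1D(pos=0.0)
    for frame in range(1, 20):
        track.predict()
        track.update(2.0 * frame)     # detector reports the object moving 2 px/frame
    print(round(track.vel, 2), round(track.predict(), 1))
```

In a full tracker, one such filter (or a multi-dimensional equivalent) would be kept per object, with `predict` called every frame and `update` called whenever the detector reports that object again.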

J. Redmon and S. Divvala [2] proposed YOLO (You Only Look Once), a real-time object recognition system that can identify and track numerous objects in a video stream from a moving vehicle. The suggested approach detects and tracks objects in a video stream concurrently using a single neural network.

S. Wang, R. Clark, and H. Wen [3] developed a real-time object identification and tracking system for autonomous driving applications. The suggested system combines the Kalman filter and the Hungarian algorithm for object tracking with a deep neural network for object detection. The system is appropriate for real-time applications because it is built to operate with low-latency input and output. Table 1 presents a comparative analysis of the different detection and tracking systems.
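The association step in this kind of tracker decides which new detection belongs to which existing track. The sketch below illustrates the idea under stated assumptions: it finds the minimum-total-distance assignment by brute force over permutations, which gives the same optimum the Hungarian algorithm would but is only practical for a handful of objects. The function name `associate` and the gating distance `max_dist` are hypothetical and are not taken from [3].

```python
from itertools import permutations
from math import hypot
from typing import List, Tuple

Point = Tuple[float, float]


def associate(tracks: List[Point], detections: List[Point],
              max_dist: float = 50.0) -> List[Tuple[int, int]]:
    """Return (track_index, detection_index) pairs minimising total distance.

    Brute-force stand-in for the Hungarian algorithm; pairs farther apart
    than max_dist (an assumed gate) are dropped after the assignment.
    """
    n_t, n_d = len(tracks), len(detections)
    if n_t == 0 or n_d == 0:
        return []

    def cost(i: int, j: int) -> float:
        # Euclidean distance between predicted track position and detection
        return hypot(tracks[i][0] - detections[j][0],
                     tracks[i][1] - detections[j][1])

    if n_t <= n_d:
        # Every track gets a distinct detection
        candidates = (list(zip(range(n_t), perm))
                      for perm in permutations(range(n_d), n_t))
    else:
        # Every detection gets a distinct track
        candidates = (list(zip(perm, range(n_d)))
                      for perm in permutations(range(n_t), n_d))

    best = min(candidates, key=lambda pairs: sum(cost(i, j) for i, j in pairs))
    return sorted((i, j) for i, j in best if cost(i, j) <= max_dist)


if __name__ == "__main__":
    predicted = [(10.0, 10.0), (100.0, 100.0)]            # track predictions
    detected = [(102.0, 98.0), (12.0, 9.0), (300.0, 300.0)]
    print(associate(predicted, detected))                  # [(0, 1), (1, 0)]
```

Detections left unmatched (index 2 in the usage example) would typically start new tracks, while tracks left unmatched for several frames would be dropped.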

III. PROPOSED WORK

A. Algorithm Used:

Fast R-CNN improves on the speed and precision of the original R-CNN method. Its main novelty is the use of a single deep neural network for both feature extraction and object detection, rather than several separate networks for these tasks. This design makes Fast R-CNN both more accurate and faster than R-CNN.

The Fast R-CNN algorithm first uses a selective search technique to choose a set of object proposals (i.e., areas in the image that could contain an object). A convolutional neural network (CNN) then extracts features from these proposals, and the features are fed into a set of fully connected layers that carry out the actual object classification and bounding box regression.
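The bounding box regression step can be made concrete with a small worked example. The sketch below applies the box parameterisation introduced with R-CNN [10], in which the network predicts offsets (tx, ty, tw, th) relative to a proposal rather than absolute coordinates. The function names `decode_box` and `to_corners` are illustrative, and the numbers in the usage example are made up.

```python
from math import exp
from typing import Tuple

Box = Tuple[float, float, float, float]


def decode_box(proposal: Box, deltas: Box) -> Box:
    """Refine a proposal (centre x, centre y, width, height) with regression offsets.

    The centre is shifted by a fraction of the proposal size, and the width
    and height are scaled in log space.
    """
    px, py, pw, ph = proposal
    tx, ty, tw, th = deltas
    x = px + pw * tx          # shift centre horizontally
    y = py + ph * ty          # shift centre vertically
    w = pw * exp(tw)          # scale width
    h = ph * exp(th)          # scale height
    return (x, y, w, h)


def to_corners(box: Box) -> Box:
    """Convert (centre x, centre y, width, height) to (x1, y1, x2, y2)."""
    x, y, w, h = box
    return (x - w / 2, y - h / 2, x + w / 2, y + h / 2)


if __name__ == "__main__":
    proposal = (50.0, 50.0, 20.0, 40.0)
    refined = decode_box(proposal, (0.5, -0.25, 0.0, 0.0))
    print(refined)              # (60.0, 40.0, 20.0, 40.0)
    print(to_corners(refined))  # (50.0, 20.0, 70.0, 60.0)
```

Predicting offsets relative to the proposal keeps the regression targets small and roughly scale-independent, which is one reason this parameterisation is widely reused.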

Overall, Fast R-CNN is a popular and successful object identification technique that has been employed in many fields, such as robotics, self-driving automobiles, and medical imaging. We used Fast R-CNN because it had the highest accuracy among the algorithms we compared. Table 2 presents a comparison of different object detection algorithms along with their accuracies.

Table 2: Comparison of the accuracies of algorithms used in object detection

B. Materials Used

Hardware Components

1. ESP32-CAM

2. Gear Motor

3. Wheels

4. Servo Motor

5. Portable Power Bank

6. Plastic Case

7. Arduino Nano

8. HC-05 Bluetooth Module

Software Used

1. Arduino IDE

2. ESP32 AI Camera

3. Arduino Automation

C. Working Model

The proposed method has been evaluated in three different types of scenarios for item detection and recognition in real-world settings. First, a semi-structured scene was considered in order to analyse methodically how effectiveness depends on several factors. The second experiment featured two real, unstructured settings, in which the object had to be found among a variety of commonplace things like books, clocks, calendars and pens. Lastly, a picture dataset was used to assess the system's performance on object instance recognition and in comparison with other cutting-edge methods.

An execution time performance analysis is offered as a conclusion. Two multi-jointed limbs and a humanoid torso equipped with a Microsoft TO40 pan-tilt-divergence stereo head were employed in the first two studies. Two Imaging Source DFK 31BF03-Z2 cameras mounted on the head take 1024x768-pixel colour images at 30 frames per second. High-resolution optical encoders give the motor positions, and the distance between the cameras is 270.

The object's position and orientation were altered on every frame. The number of observed orientations clearly varies depending on the object in question; for instance, the toy vehicle was observed in 12 different orientations (roughly every 30 degrees), whilst the red ball has only one orientation. The adopted strategy starts with capturing the image. This image serves as the input for two separate processes:

The first process segments the colour cue, and the second handles the two other observed cues (i.e. motion and shape). The goal of this separation was to increase efficiency. Stored data on the elements to be located is used to segment the image, which is represented in L1L2L3 coordinates. The other objects were also tested in similar ways. Figure 8 shows a few of the outcomes (final outcomes only).
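As an illustration of colour-cue segmentation, the sketch below assumes that "L1L2L3 coordinates" refers to the l1l2l3 colour model of normalised squared channel differences, which is largely insensitive to brightness changes. That reading is an assumption, as are the function names, the tolerance `tol`, and the handling of grey pixels; the source does not give these details.

```python
from typing import List, Tuple

RGB = Tuple[int, int, int]


def rgb_to_l1l2l3(r: float, g: float, b: float) -> Tuple[float, float, float]:
    """Map an RGB pixel to l1l2l3: normalised squared channel differences."""
    rg, rb, gb = (r - g) ** 2, (r - b) ** 2, (g - b) ** 2
    total = rg + rb + gb
    if total == 0:
        # Grey pixel: the model is undefined here, so return zeros (assumption)
        return (0.0, 0.0, 0.0)
    return (rg / total, rb / total, gb / total)


def segment(image: List[List[RGB]], reference: RGB,
            tol: float = 0.1) -> List[List[bool]]:
    """Mark pixels whose l1l2l3 values lie within tol of the stored reference colour."""
    ref = rgb_to_l1l2l3(*reference)
    mask = []
    for row in image:
        mask.append([
            all(abs(a - b) <= tol for a, b in zip(rgb_to_l1l2l3(*px), ref))
            for px in row
        ])
    return mask


if __name__ == "__main__":
    image = [[(200, 0, 0), (0, 0, 200)],
             [(100, 0, 0), (128, 128, 128)]]
    # Stored colour of the target (e.g. the red ball); bright and dark red both match
    print(segment(image, reference=(255, 0, 0)))
    # [[True, False], [True, False]]
```

The usage example shows why such a representation suits changing illumination: the bright and the dark red pixel map to the same coordinates, so both are assigned to the target.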

It should be emphasised that the results are from a single study because the data are not random. As can be seen, only one object is searched for at a time.

This result was reached after testing the system's functionality under a variety of circumstances that could lead to problems (such as shadows, flickering light sources, different light reflections, partially visible objects, etc.). As shown, even when items changed their orientation, location within the picture, or angle of view from the cameras, all of the objects were still accurately identified.

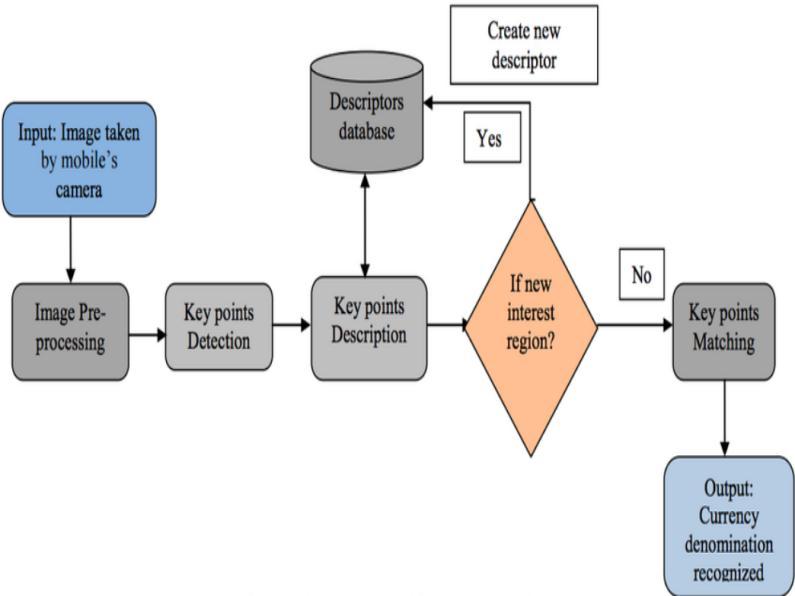

Fig. 1 Object Detection Process

Figure 1 shows the flow chart of object detection. First, an input image is taken and pre-processed to extract key points. If a new region is present, it is added to the database; otherwise, the output is generated by matching key points.
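The decision in this flow chart can be sketched in a few lines. The example below is a simplified illustration, not the system's actual code: key-point descriptors are represented as plain tuples of floats, and the names `count_matches` and `detect_or_learn` and the thresholds `max_dist` and `min_matches` are hypothetical.

```python
from math import dist
from typing import Dict, List, Optional, Tuple

Descriptor = Tuple[float, ...]


def count_matches(query: List[Descriptor], stored: List[Descriptor],
                  max_dist: float = 0.25) -> int:
    """Count query descriptors that have a stored descriptor within max_dist."""
    return sum(1 for q in query if any(dist(q, s) <= max_dist for s in stored))


def detect_or_learn(query: List[Descriptor],
                    database: Dict[str, List[Descriptor]],
                    new_label: str,
                    min_matches: int = 3) -> Optional[str]:
    """Return the best-matching known object, or store the region as new.

    If no known object reaches min_matches, the region is treated as new
    and added to the database under new_label; None is returned.
    """
    best_name, best_count = None, 0
    for name, stored in database.items():
        matches = count_matches(query, stored)
        if matches > best_count:
            best_name, best_count = name, matches
    if best_count >= min_matches:
        return best_name
    database[new_label] = list(query)   # new region goes to the database
    return None


if __name__ == "__main__":
    db = {"ball": [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0)]}
    print(detect_or_learn([(0.1, 0.0), (1.0, 0.1), (0.0, 0.9)], db, "object_1"))
    print(detect_or_learn([(5.0, 5.0), (6.0, 6.0), (7.0, 7.0)], db, "object_1"))
    print(sorted(db))
```

A real system would use descriptors from a feature extractor such as SIFT [13] and a more robust matching criterion, but the control flow is the same.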

EXPERIMENT 1

In this experiment, the machine was positioned in front of a table on which objects were placed. The table was initially empty; after a short while, a human began placing and removing various objects without directly interacting with the robot system. In this way, the motion cue helped to detect both the presence of a human in the robot's workspace and each new object instance on the table. The three visual cues are given identical weight when segmentation results are calculated in this experiment. Targets included various objects such as a red ball, a toy car, a bottle, and a money box.
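Equal weighting of the three cues can be illustrated as follows. This is a minimal sketch assuming each cue produces a per-pixel score between 0 and 1 and that the fused score is thresholded to obtain the segmentation; the function name `fuse_cues`, the score range, and the `threshold` value are assumptions made for the example.

```python
from typing import List, Sequence

CueMap = List[List[float]]


def fuse_cues(colour: CueMap, motion: CueMap, shape: CueMap,
              weights: Sequence[float] = (1 / 3, 1 / 3, 1 / 3),
              threshold: float = 0.5) -> List[List[bool]]:
    """Combine three per-pixel cue maps with a weighted sum and threshold the result.

    With the default weights every cue contributes equally, so a pixel is
    kept when at least two of three binary cues agree.
    """
    w_c, w_m, w_s = weights
    fused = []
    for c_row, m_row, s_row in zip(colour, motion, shape):
        fused.append([
            w_c * c + w_m * m + w_s * s >= threshold
            for c, m, s in zip(c_row, m_row, s_row)
        ])
    return fused


if __name__ == "__main__":
    colour = [[1.0, 0.0, 1.0]]
    motion = [[1.0, 0.0, 1.0]]
    shape = [[0.0, 1.0, 1.0]]
    print(fuse_cues(colour, motion, shape))   # [[True, False, True]]
```

Changing `weights` would let one cue dominate, for example favouring motion when a person is placing objects on the table.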

EXPERIMENT 2

The objects to be found and identified in this experiment were set up on a desk. Two unstructured environments were employed, each with a different set of commonplace items like textured books, pens, clocks, etc. Throughout the scenario under consideration, these objects were placed in various positions and/or orientations, which in some circumstances led to partial occlusion.


Notwithstanding the features of the environment and of the items themselves (including the toy automobile, whose colour was strikingly similar to that of its surroundings), all targets were accurately identified. In a case where two objects appeared in a single photograph, the car and the stapler were both recognised and correctly identified. This shows how the approach successfully focuses on the target object.

EXPERIMENT 3

In the final validation experiment, we use a public picture repository to compare the performance of our methodology against leading-edge techniques. Many public image repositories are available because object recognition is essential for many applications. These datasets give researchers the opportunity to assess their methods with a variety of objects and settings, and to see how well they perform in comparison to other cutting-edge methods. These repositories can, however, be categorised according to the objectives they are meant to serve.

The term "object recognition" may relate to a variety of application scenarios or be based on a particular set of input data, and there are various levels of semantics (for example, category recognition, instance recognition, pose recognition, etc.). The evaluation dataset must therefore match the demands of the particular technique. The dataset used here consists of thousands of RGB-D camera images of 300 common objects taken from different angles in household and business environments.

Objects are organised into a hierarchy of 51 categories, each of which comprises three to fourteen instances, and each object belongs to only one category. Ground truth images are also provided so that the segmentation procedure can be fully evaluated. As a result, this image dataset enables the evaluation of object recognition methods on two different levels:

• Category level. Category recognition is the process of categorising previously unseen objects based on previously seen objects from the same category. In other words, this recognition level equates to determining whether an object is an apple or a cup.

• Instance level. The question to be answered here is, for example, "Is this Ester's or Angel's coffee cup?" The goal of the recognition algorithm is to find the specific physical instance of an object that has already been presented.

Although the capacity to recognise objects at both levels is crucial for robotic tasks, only instance identification is considered in this work because no category abstraction was done.

IV. RESULTS & CONCLUSION

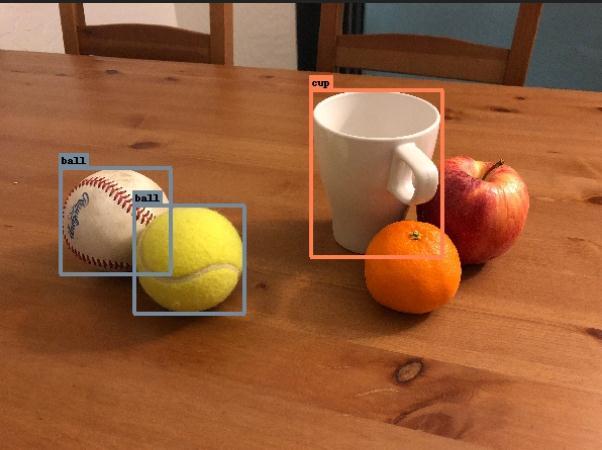

After implementing the methods proposed above, we found that Fast R-CNN had the highest accuracy, so we used this algorithm for object detection. For control, we use voice commands implemented with the help of the HC-05 Bluetooth module, and for manual control we use the ESP32-CAM together with the Arduino IDE. Figure 2 shows the final results of object detection by the proposed method.

Modules we implemented successfully:

1. Detection

2. Tracking

3. Movement detection

4. Lane tracking

5. Avoid Obstacles

6. Interaction with humans

With the help of previous research, we were able to implement all the modules with good accuracy. The combined accuracy of all the modules is nearly 76%.

International Research Journal of Engineering and Technology (IRJET), e-ISSN: 2395-0056, p-ISSN: 2395-0072, Volume: 10 Issue: 04 | Apr 2023, www.irjet.net

Table 1: Comparative analysis of different detection and tracking systems

| | X. Zhang, Y. Yang, and Y. Liu [1] | J. Redmon and S. Divvala [2] | S. Wang, R. Clark, and H. Wen [3] |
|---|---|---|---|
| Title | “Object detection and tracking using a Mobile Robot” | “Real Time Tracking while driving a Moving Vehicle” | “Real Time object detection and Tracking for Autonomous Driving Applications” |
| Method | Deep convolutional neural network and Kalman filter for detection and tracking | YOLO for real-time object detection and tracking | Deep neural network for object detection, and Kalman filter and Hungarian algorithm for object tracking |
| Dataset | Custom outdoor dataset with LiDAR and camera sensors | COCO dataset | KITTI dataset for object detection and tracking in autonomous driving applications |
| Result | Detection accuracy of 87.3% and tracking accuracy of 82.5% on the outdoor dataset | Processing speed of 45 frames per second | The system is appropriate for real-time applications because it is built to operate with low-latency input and output |

REFERENCES

[1] X. Zhang, Y. Yang, and Y. Liu, “Infrastructure-Based Object Detection and Tracking for Cooperative Driving Automation: A Survey,” 2022 IEEE Intelligent Vehicles Symposium (IV), IEEE.

[2] J. Redmon, S. Divvala, R. Girshick, and A. Farhadi, “You only look once: Unified, real-time object detection,” arXiv preprint arXiv:1506.02640, 2015.

[3] S. Wang, R. Clark, and H. Wen, “Real Time object detection and Tracking for Autonomous Driving Applications.”

[4] C. Chen, A. Seff, A. L. Kornhauser, and J. Xiao, “Deepdriving: Learning affordance for direct perception in autonomous driving,” in ICCV, 2015.

[5] X. Chen, H. Ma, J. Wan, B. Li, and T. Xia, “Multi-view 3d object detection network for autonomous driving,” in CVPR, 2017.

[6] Dundar, J. Jin, B. Martini, and E. Culurciello, “Embedded streaming deep neural networks accelerator with applications,” IEEE Trans. Neural Netw. & Learning Syst., vol. 28, no. 7, pp. 1572–1583, 2017.


[7] R. J. Cintra, S. Duffner, C. Garcia, and A. Leite, “Low-complexity approximate convolutional neural networks,” IEEE Trans. Neural Netw. & Learning Syst., vol. PP, no. 99, pp. 1–12, 2018.

[8] S. H. Khan, M. Hayat, M. Bennamoun, F. A. Sohel, and R. Togneri, “Cost-sensitive learning of deep feature representations from imbalanced data,” IEEE Trans. Neural Netw. & Learning Syst., vol. PP, no. 99, pp. 1–15, 2017.

[9] Stuhlsatz, J. Lippel, and T. Zielke, “Feature extraction with deep neural networks by a generalized discriminant analysis,” IEEE Trans. Neural Netw. & Learning Syst., vol. 23, no. 4, pp. 596–608, 2012.

[10] R. Girshick, J. Donahue, T. Darrell, and J. Malik, “Rich feature hierarchies for accurate object detection and semantic segmentation,” in CVPR, 2014.

[11] J. Redmon, S. Divvala, R. Girshick, and A. Farhadi, “You only look once: Unified, real-time object detection,” in CVPR, 2016.

[12] S. Ren, K. He, R. Girshick, and J. Sun, “Faster r-cnn: Towards real-time object detection with region proposal networks,” in NIPS, 2015, pp. 91–99.

[13] D. G. Lowe, “Distinctive image features from scale-invariant key-points,” Int. J. of Comput. Vision, vol. 60, no. 2, pp. 91–110, 2004.

[14] N. Dalal and B. Triggs, “Histograms of oriented gradients for human detection,” in CVPR, 2005.

[15] R. Lienhart and J. Maydt, “An extended set of haar-like features for rapid object detection,” in ICIP, 2002.

[16] C. Cortes and V. Vapnik, “Support vector machine,” Machine Learning, vol. 20, no. 3, pp. 273–297, 1995.

[17] Y. Freund and R. E. Schapire, “A decision-theoretic generalization of on-line learning and an application to boosting,” J. of Comput. & Sys. Sci., vol. 13, no. 5, pp. 663–671, 1997.

[18] P. F. Felzenszwalb, R. B. Girshick, D. McAllester, and D. Ramanan, “Object detection with discriminatively trained part-based models,” IEEE Trans. Pattern Anal. Mach. Intell., vol. 32, pp. 1627–1645, 2010.

[19] M. Everingham, L. Van Gool, C. K. Williams, J. Winn, and A. Zisserman, “The pascal visual object classes challenge 2007 (voc2007