STUDENT TEACHER INTERACTION ANALYSIS WITH EMOTION RECOGNITION FROM VIDEO AND AUDIO INPUT: RESEARCH

Rajat Dubey1, Vedant Juikar2, Roshani Surwade3, Prof. Rasika Shintre4

1,2,3B.E. Student, Computer Department, 4Project Guide, Smt. Indira Gandhi College of Engineering, Navi Mumbai, Maharashtra, India

Abstract - Emotions are significant because they are essential to the learning process. Emotions, behaviour, and thoughts are intimately connected, and together these three factors determine how we act and what choices we make. Selecting a database, identifying suitable speech-related features, and choosing an appropriate classification model are the three key hurdles in emotion recognition. To understand how emotional states relate to students' and teachers' comprehension, it is important to first define the facial physical behaviours that are associated with various emotional states. This study makes a first examination of the usefulness of facial expression and voice recognition between a teacher and students in a classroom.

Key words: Speech Emotion Recognition, Facial Emotion Recognition, JAVA, Node.js, Convolutional Neural Network, Recurrent Neural Network, MFCC, Support Vector Machine

1. INTRODUCTION

In artificial intelligence, it is common practice to capture real-time photos, videos, or audio of people in order to analyze their facial and verbal expressions closely. Because facial muscle movements are very slight, it is difficult for machines to recognize emotions, which leads to inconsistent results. The interaction between teachers and students is the most important component of any classroom setting. In these interactions, facial expressions and voice have a very strong impact.

Real-world classrooms allow for in-person interactions. To approximate this online, many schools run regularly scheduled chat rooms with audio and video conferencing. In these spaces, students can easily connect with one another and with the instructor, just as they would in a traditional classroom. Through this technique, the teacher will be able to identify the student's facial or spoken expressions of satisfaction as they occur during interactions with the student. The fundamental tenet is that teachers must be able to read students' state of mind and observe their facial expressions.

We examined whether the students' facial action units conveyed their emotions in relation to comprehension. The primary hypothesis of the first step of this study was that students frequently use nonverbal communication in the classroom, and that this nonverbal communication can be captured through emotion recognition from video and audio. This, in turn, enables lecturers to gauge the students' level of understanding.

The available models include Voice Emotion Recognition, which recognizes emotions through speech but cannot produce results from video input, and Face Emotion Recognition, which uses video to identify emotions but cannot do so with audio input. In neither case is an analysis report produced.

2. LITERATURE SURVEY

We provide an overview of some current research in the fields of speech emotion recognition (SER) and facial expression recognition (FER), as well as some FER systems used in classrooms as the foundation for teacher-student interaction, because the face plays a significant role in the expression and perception of emotions.

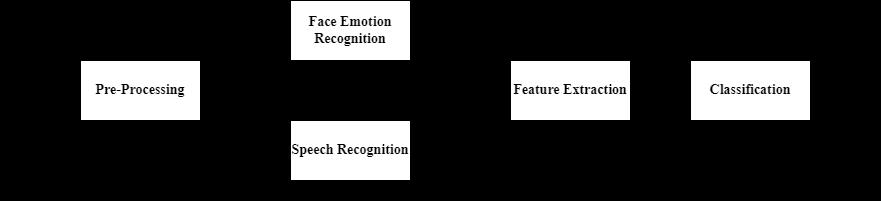

Fig. 1 Basic Emotion Recognition

The key sources of information for inferring a person's inner feelings are speech and facial expressions.

One system collected audio recordings expressing anger, happiness, and sadness together with their short-time energy (STE), pitch, and MFCC coefficients. Only these three basic emotions were identified. Using the feature vectors as input, a multi-class Support Vector Machine (SVM) generates a model for each emotion. Deep Belief Networks (DBNs) achieve an accuracy roughly 5% higher than Support Vector Machines (SVMs) and Artificial Neural Networks (ANNs). The results demonstrate how much better the features extracted using Deep Belief Networks are than the original features.
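As an illustration of one of the features named above, the following is a minimal sketch of short-time energy, assuming the common definition (the sum of squared samples within each frame). The function name, frame length, hop size, and toy signal are hypothetical and are not taken from the surveyed system.

```python
def short_time_energy(samples, frame_len, hop):
    """Return the energy (sum of squared samples) of each frame.

    frame_len and hop are given in samples; both are illustrative choices.
    """
    energies = []
    # Slide a window over the signal; incomplete trailing frames are skipped.
    for start in range(0, len(samples) - frame_len + 1, hop):
        frame = samples[start:start + frame_len]
        energies.append(sum(x * x for x in frame))
    return energies


# Toy signal: four silent samples followed by four loud samples.
signal = [0.0, 0.0, 0.0, 0.0, 1.0, -1.0, 1.0, -1.0]
ste = short_time_energy(signal, frame_len=4, hop=4)
```

In this toy signal the silent frame has zero energy and the loud frame has a larger value, which is why STE is often used to separate speech from silence before further features are computed.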

The audio feature of a speech signal is the sound itself. Feature extraction is the process of extracting a small amount of information from speech that can then be used to represent each speaker. Feature extraction and feature classification are the primary SER stages. For feature classification, both linear and nonlinear classifiers can be used. Support Vector Machines (SVMs) are a popular choice among linear classifiers. These kinds of classifiers are useful for SER since speech signals are considered to vary. Deep learning approaches offer more benefits for SER than conventional approaches: they can recognize complicated internal structure and do not require manual feature extraction or tuning.

The majority of current face recognition algorithms fall into one of two categories: geometric feature-based or image template-based. In feature-based approaches, local facial features are extracted and their geometric and appearance properties are used. Our primary point of focus during social interactions is the face, which is crucial for expressing identity and emotion. We can quickly recognise familiar faces even after a long separation because we learn to recognise hundreds of faces over the course of our lives. Computational models for face recognition are particularly interesting since they can advance both theoretical understanding and real-world use.

Another system allows computers to categorize audio voice files into distinct emotions including happy, sad, angry, and neutral. In this paper, we used Tencent Meeting as an example for model testing. The framework primarily comprises two parts: online course platforms and a deep learning model based on CNN.

3. METHODOLOGY

Recognizing speech and facial emotions relies heavily on facial communication and expression, which depend on physical and psychological circumstances.

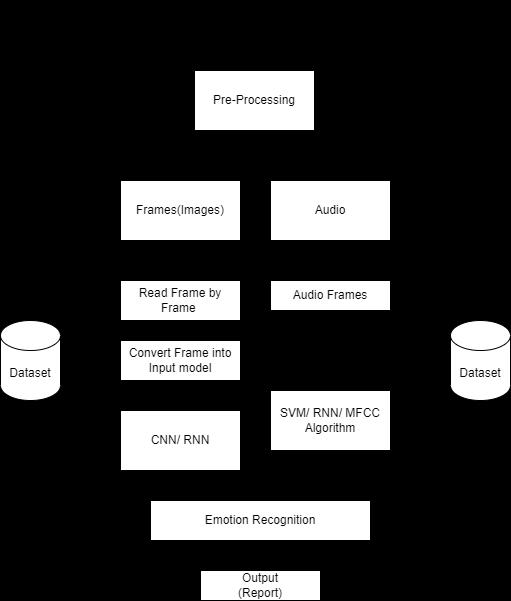

The emotion recognition system is trained using supervised learning, in which a dataset is used for training and testing. Face Detection, Image Pre-processing, Feature Extraction, and Classification are the steps in the basic process of a facial emotion identification system.

3.1 Face Emotion Recognition:

This step entails tracking the face through the unprocessed input images. It is implemented with OpenCV after a CNN has processed the training dataset. New models based on the CNN structure continue to appear and have produced better results for the identification of facial emotions.

3.1 Speech Recognition:

The SER model includes two different types of models: the discrete speech emotion model and the continuous speech emotion model. The first treats emotions as separate categories, so that a given utterance carries exactly one emotion, whereas the second places the emotion in an emotion space in which each emotion has its own strength. The system makes use of a range of emotions, including neutral, disgust, anger, fear, surprise, joy, happiness, and sadness.

3.2 Feature Extraction:

To identify emotions, we employed facial features. Finding and extracting appropriate features is one of the most important components of an emotion recognition system. These features were selected to represent the desired information. After pre-processing, facial features with high expression intensity, such as the corners of the mouth, the nose, forehead creases, and the eyebrows, are extracted from an image.

Different features based on speech and facial expression are combined. Several studies on feature combination have shown a significant improvement in classification accuracy compared to systems built on individual features.
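The paper does not state how the speech and facial features are combined. One simple possibility, shown here purely as an assumed sketch, is feature-level fusion by concatenating the two vectors into a single input for the classifier. The function name and the example values are hypothetical.

```python
def fuse_features(face_features, speech_features):
    """Feature-level fusion: concatenate the two feature vectors.

    This is an assumed scheme for illustration; the paper does not
    specify the combination method.
    """
    return list(face_features) + list(speech_features)


# Hypothetical feature values for one video frame and its audio segment.
face = [0.12, 0.80, 0.33]
speech = [1.5, -0.7]
fused = fuse_features(face, speech)
```

The fused vector has as many entries as both inputs together, so a single classifier can be trained on information from both modalities.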

3.3 Classification:

Because the data obtained from voice and facial feature extraction has very high dimensionality, a classification technique is employed to reduce the data dimensionality. A support vector machine (SVM) algorithm is used in this procedure to recognise the various patterns. The SVM is trained on the corresponding feature-based data set, and it produces high classification accuracy even when only a modest amount of training data is available.

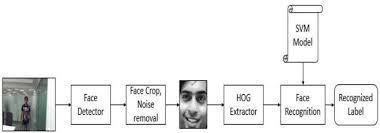

Multiple photographs of the same face taken with various facial expressions yield a variety of sample images. Once the face has been located, the image can be cropped and saved as a sample image for examination. Face Detection, Face Prediction, and Face Tracking are the three main steps in the process of identifying a face in a video sequence.

Face recognition also involves the tasks carried out by the Face Capture program. A HOG feature vector of the face must be extracted in order to recognize the captured face. This vector is then passed to the SVM model to compute a matching score against the data containing all of the labels. The SVM returns the label with the highest score, which indicates the confidence of the closest match within the trained face data. A program was also created to perform SVM-based recognition of spontaneous facial expressions in video, which was tentatively mentioned as an extension in the proposal.

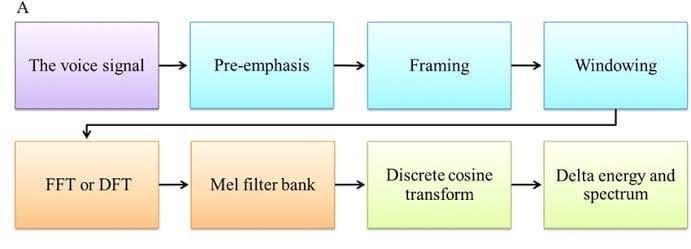

4.3 Mel-Frequency Cepstrum Coefficient (MFCC)

MFCCs are the preferred method of encoding the spectral properties of speech signals. They work well because they take into account how humans perceive frequencies, which makes them well suited to speech recognition.
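The perceptual weighting mentioned above comes from the mel scale. The sketch below uses the widely used conversion formula mel = 2595 log10(1 + f/700) and shows how filter centre frequencies become more widely spaced at high frequencies. The function names, the 0 to 8000 Hz range, and the choice of 10 filters are illustrative assumptions, and this is only the first step of an MFCC pipeline rather than a full implementation.

```python
import math


def hz_to_mel(f_hz):
    """Convert a frequency in Hz to the mel scale."""
    return 2595.0 * math.log10(1.0 + f_hz / 700.0)


def mel_to_hz(mel):
    """Inverse of hz_to_mel."""
    return 700.0 * (10.0 ** (mel / 2595.0) - 1.0)


def mel_center_frequencies(f_min, f_max, n_filters):
    """Centre frequencies (Hz) of filters spaced evenly on the mel scale."""
    lo, hi = hz_to_mel(f_min), hz_to_mel(f_max)
    step = (hi - lo) / (n_filters + 1)
    return [mel_to_hz(lo + step * (i + 1)) for i in range(n_filters)]


# Assumed analysis range and filter count, for illustration only.
centers = mel_center_frequencies(0.0, 8000.0, 10)
```

Because the filters are evenly spaced in mel rather than in Hz, low frequencies are sampled more densely than high ones, mirroring human frequency resolution.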

4. ALGORITHM FOR EMOTION RECOGNITION

4.1 Support Vector Machines (SVM)

Supervised data analysis and classification algorithms are effective at classifying students' facial expressions. Support vector machines are one such machine learning algorithm that can be used for classification.

Support Vector Machines (SVMs) with non-linear kernels are the most commonly used, and often the most effective, algorithms for voice emotion recognition. Using a kernel mapping function, an SVM with a non-linear kernel maps the input feature vectors into a higher-dimensional feature space. With a suitable non-linear kernel function, classifiers that are non-linear in the original space become linear in the feature space.
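The idea that a non-linear problem becomes linear in a higher-dimensional feature space can be sketched with the XOR pattern, which no straight line separates in two dimensions. The example below uses an explicit feature map rather than a kernel function, and the weights are chosen by hand rather than learned by an SVM; both are simplifying assumptions made for illustration.

```python
def feature_map(x):
    """Map a 2-D point to 3-D by appending the product of its coordinates."""
    x1, x2 = x
    return (x1, x2, x1 * x2)


def linear_decision(z, w, b):
    """Value of the linear decision function w . z + b."""
    return sum(wi * zi for wi, zi in zip(w, z)) + b


# XOR-style data: not linearly separable in the original 2-D space.
points = [(0, 0), (0, 1), (1, 0), (1, 1)]
labels = [-1, 1, 1, -1]

# Hand-picked (not trained) hyperplane in the 3-D feature space.
w, b = (1.0, 1.0, -2.0), -0.5

predictions = [
    1 if linear_decision(feature_map(p), w, b) > 0 else -1 for p in points
]
```

A kernel SVM achieves the same effect without computing the mapped vectors explicitly, since the kernel function supplies the inner products in the feature space.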

Speech recognition is a supervised learning task. The audio signal is the input to the speech recognition problem, and the text must be predicted from it. Since the audio signal contains a lot of noise, the raw signal cannot be fed directly into the model. It has been shown that feeding the base model with extracted features instead of the raw audio signal results in significantly higher performance.

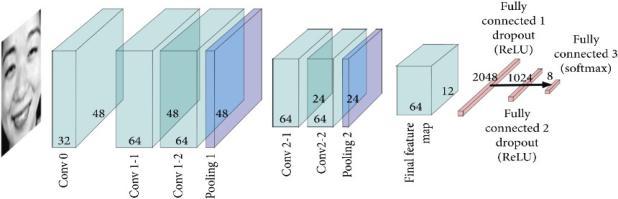

4.4 Convolutional Neural Networks (CNN)

The architecture of the applied deep learning model, which was preferred for FER over other comparable models, has also been established. The input layer is followed by a convolutional layer with 32 feature maps and then by two blocks, each containing two convolutional layers and one max-pooling layer with 64 feature maps. The kernel size of the first convolutional layer is set to 3 × 3 and that of the second to 5 × 5. The max-pooling layers each have a kernel of size 2 × 2 and stride 2, and as a result the input image is compressed to a quarter of its original size. Rectified Linear Units are used as the activation function in the two subsequent fully connected layers. A Dropout layer is added after each of the two fully connected layers to prevent over-fitting; in this paper, both dropout values are set to 0.5. The final output layer consists of 8 units, and softmax is used as the activation function to categorize the expressions as contempt, anger, disgust, fear, happiness, sadness, and neutral.
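Two parts of this architecture can be sketched in a few lines: the effect of the two 2 × 2, stride-2 max-pooling layers on the image side length, and the softmax applied to the 8 output units. The 48-pixel input size, the assumption that the convolutions are padded so they preserve spatial size, and the logit values are all hypothetical and are not stated in the paper.

```python
import math


def pool_output_size(size, kernel=2, stride=2):
    """Side length after one max-pooling layer without padding."""
    return (size - kernel) // stride + 1


def softmax(logits):
    """Numerically stable softmax over a list of raw scores."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


# Assumed 48 x 48 input; convolutions are assumed to keep the spatial size.
side = 48
after_block1 = pool_output_size(side)
after_block2 = pool_output_size(after_block1)

# Hypothetical raw scores from the 8-unit output layer.
logits = [0.1, 2.0, -1.0, 0.3, 0.0, 1.2, -0.5, 0.7]
probs = softmax(logits)
predicted = probs.index(max(probs))
```

Under these assumptions each pooling layer halves the side length, so two of them reduce it to a quarter, and the softmax output is a probability distribution whose largest entry gives the predicted class index.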

The Tiny Face Detector has been trained on a custom dataset of ~14K images labeled with bounding boxes. Furthermore, the model has been trained to predict bounding boxes that entirely cover the facial feature points, so it generally produces better results.

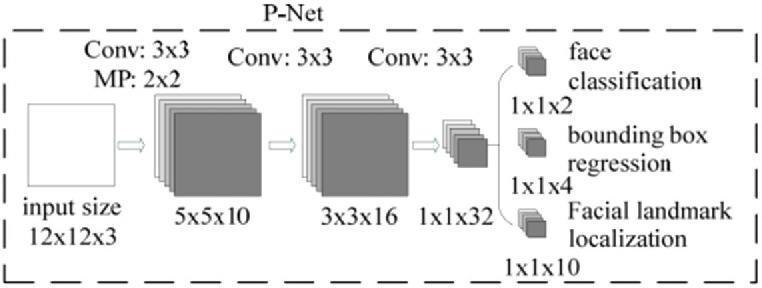

III. MTCNN

MTCNN should be able to detect a wide range of face bounding box sizes. MTCNN is a three-stage cascaded CNN that simultaneously returns five face landmark points along with the bounding boxes.

6 CNN model in emotion recognition

I. SSD MobileNet V1

SSD MobileNet V1 is used for face detection. The goal is to create a face recognition system, which entails developing a face detector to determine the location of a face in an image and a face identification model to identify whose face it is by comparing it to the existing database of faces. The SSD MobileNet V1 convolutional neural network architecture was developed to perform tasks such as object detection and image recognition on mobile devices with limited computational resources.

MTCNN is a deep cascaded multi-task framework that exploits the inherent correlation between face detection and alignment to boost the performance of both. In particular, the framework uses a cascaded architecture with three stages of carefully designed deep convolutional networks to predict face and landmark locations in a coarse-to-fine manner. It also uses an online hard sample mining strategy that further improves performance in practice. The method is reported to achieve superior accuracy over the state-of-the-art techniques of its time on the challenging FDDB and WIDER FACE benchmarks for face detection and on the AFLW benchmark for face alignment, while keeping real-time performance.

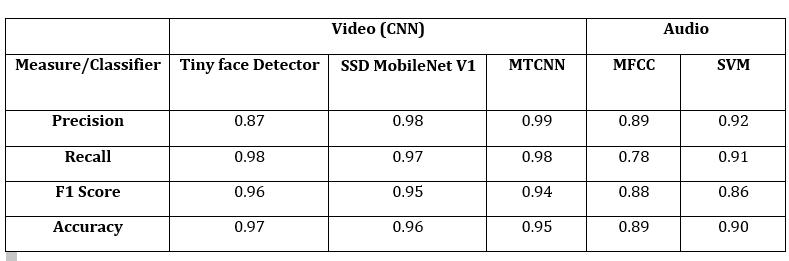

5. PERFORMANCE MATRIX

Convolutional neural network (CNN) analysis of audio and video often results in the creation of a performance matrix that includes an assessment of the CNN model's precision and efficiency in processing audio and video input. The following measures show the performance of the model.

a. Precision: Precision measures the ratio of true positive predictions, i.e., outcomes that were correctly predicted as positive, to the total number of positive predictions that the CNN model made.

b. Recall: Recall, also referred to as sensitivity or the true positive rate, is the ratio of true positive predictions to all of the actual positive outcomes in the data.

Fig. 7 CNN model in emotion recognition

This project implements an SSD (Single Shot Multibox Detector) based on MobileNetV1. The neural network computes the location of each face in an image and returns the bounding boxes together with the probability for each face. This face detector aims for high accuracy in detecting face bounding boxes rather than low inference time.

II. Tiny Face Detector

The Tiny Face Detector is a real-time face detector that is much faster, smaller, and less resource-consuming than the SSD MobileNet V1 face detector; in return, it performs slightly less well at detecting small faces.

c. F1-score: The F1-score is the harmonic mean of precision and recall, which offers a balanced assessment of both. It is a frequently used metric when recall and precision are of equal importance. The figure shows the F1-score of the different algorithms.

d. Accuracy: This gauges how accurately the CNN model has predicted the outcomes for the analysed audio and video data overall. It is determined as the proportion of correctly predicted results to the entire sample size.
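The four measures can be computed directly from the counts of true positives, false positives, false negatives, and true negatives. The sketch below follows the standard definitions; the function name and the example counts are hypothetical and are not results from this study.

```python
def classification_metrics(tp, fp, fn, tn):
    """Compute precision, recall, F1-score and accuracy from confusion counts."""
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    # F1 is the harmonic mean of precision and recall.
    if precision + recall:
        f1 = 2 * precision * recall / (precision + recall)
    else:
        f1 = 0.0
    total = tp + fp + fn + tn
    accuracy = (tp + tn) / total if total else 0.0
    return {
        "precision": precision,
        "recall": recall,
        "f1": f1,
        "accuracy": accuracy,
    }


# Made-up counts for a single emotion class, for illustration only.
metrics = classification_metrics(tp=40, fp=10, fn=20, tn=30)
```

For a multi-class emotion classifier, these values would typically be computed per class (treating that class as positive and all others as negative) and then averaged.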

Table. 1 Performance Matrix

6. IMPLEMENTATION

6.1 Input

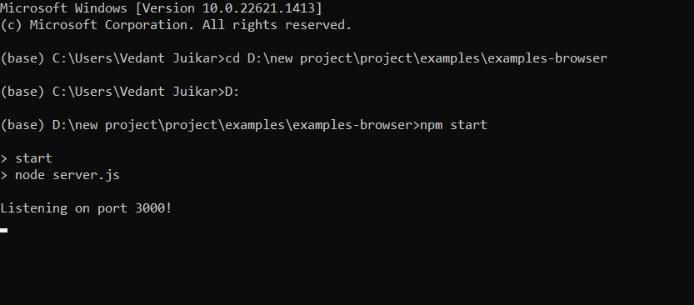

Launch the Anaconda software, navigate to the Node.js file in the command prompt, and launch the program file for video emotion identification. Once the program was running, it recognised the emotions from the audio and video input and produced a graph of those emotions for the teacher and the student.

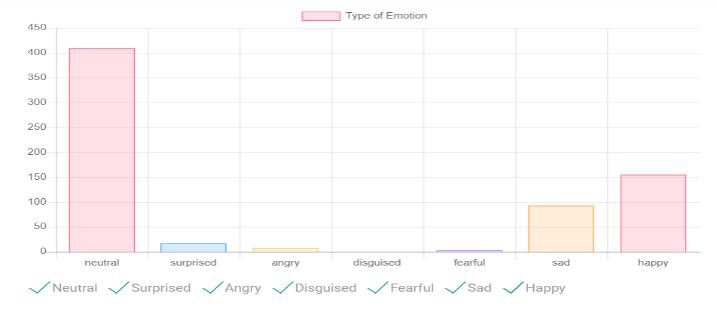

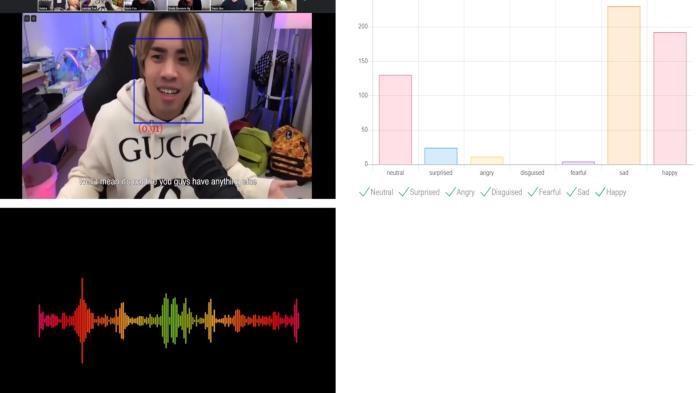

6.3 Emotion Graph

The graph is created after playing a video. It is based on the emotions recognized in the audio and video, and it displays the total count of each emotion detected in the audio and video.

6.2 Audio/Video Detection

Choose the teacher-student interaction video to analyse and play it. Two kinds of emotion are recognised simultaneously from the video input: facial emotion and spoken emotion. The UI created for emotion detection counts the facial expressions made by the students in the video, and while they engage with the teacher the emotions in their audio are counted as well.
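The counting step described above can be sketched as a simple tally of the emotion labels emitted by the face and speech recognizers. The function name, the label strings, and the sample sequences are hypothetical; the actual UI is not shown in the paper.

```python
from collections import Counter


def tally_emotions(face_labels, speech_labels):
    """Count how often each emotion was detected in each modality."""
    return {
        "face": Counter(face_labels),
        "speech": Counter(speech_labels),
    }


# Hypothetical per-frame and per-segment recognizer outputs.
face_labels = ["happy", "neutral", "happy", "sad"]
speech_labels = ["neutral", "neutral", "happy"]

report = tally_emotions(face_labels, speech_labels)
```

The resulting counts per emotion and per modality are the kind of totals that an emotion graph could then display.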

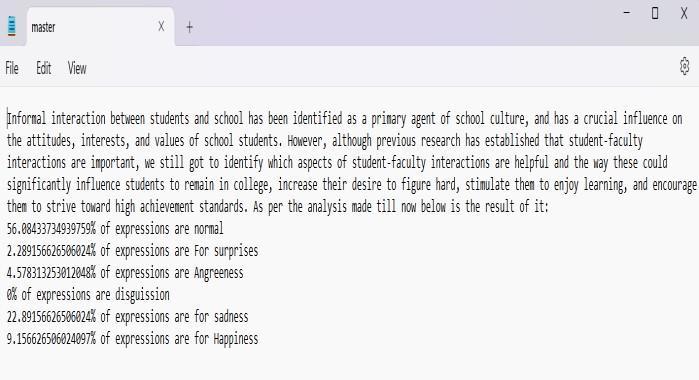

6.4 Audio/ Video Report

As a video is played, emotions are detected, and we create a graph to analyse those emotions in relation to the teacher-student interaction. The resulting graph shows many levels of emotion.

This proposal is mainly intended for recorded teacher-student interaction in a classroom, to identify the emotions of the teacher as well as the students through the audio and video input. It is a way of generating a report on the range of emotions of the teacher and students from the facial and audio frames. With this feature, the emotions of the students are identified. This helps the lecturer make a lecture more interactive, increase students' desire to work hard, stimulate them to enjoy learning, and encourage them to strive towards a high standard of achievement.

8 CONCLUSION

The analysis of audio and facial emotions holds great promise for enhancing our comprehension of human emotions and conduct. By incorporating data from both modalities, the precision and dependability of emotion recognition algorithms can be enhanced, and fresh applications of this technology are predicted to surface in domains like healthcare, education, and entertainment. Moreover, there is a need to ensure that the data used for training and testing emotion recognition algorithms is diverse and representative of the population. The future of audio and facial emotion analysis is exciting and holds immense potential to improve our understanding of human emotions and behavior. With further advancements in technology and research, we can expect to see new and innovative applications of this technology in a wide range of domains, making our interactions with technology and with each other more efficient and empathetic.

9. FUTURE SCOPE

The future scope of audio and facial emotion analysis is vast and promising. With the increasing development of machine learning and artificial intelligence, it is expected that the accuracy and reliability of emotion detection algorithms will continue to improve. In addition, new applications of this technology are likely to emerge in fields such as healthcare, education, and entertainment. For example, in healthcare, emotion detection could be used to monitor patients for signs of mental health issues, such as depression or anxiety. In education, it could be used to analyze student engagement and identify areas for improvement in teaching methods.


BIOGRAPHIES

Rajat Dubey is pursuing the Bachelor degree (B.E.) in Computer Engineering from Smt. Indira Gandhi College of Engineering, Navi Mumbai.

Vedant Juikar is pursuing the Bachelor degree (B.E.) in Computer Engineering from Smt. Indira Gandhi College of Engineering, Navi Mumbai.

Roshani Surwade is pursuing the Bachelor degree (B.E.) in Computer Engineering from Smt. Indira Gandhi College of Engineering, Navi Mumbai.

Prof. Rasika Shintre obtained the Bachelor degree (B.E. Computer) in the year 2011 from Ramrao Adik Institute of Technology (RAIT), Nerul, and the Master degree (M.E. Computer) from Bharti Vidyapeeth College of Engineering, Navi Mumbai. She is an Asst. Prof. at Smt. Indira Gandhi College of Engg. of Mumbai University and has about 11 years of experience. Her areas of interest include Data Mining and Information Retrieval.