MESSAGE CONVEYOR FOR LOCKED SYNDROME PATIENTS BY VIRTUAL KEYBOARD

Dr. R. V. Shalini, Dept. of Biomedical Engineering, Sri Shakthi Institute of Engineering and Technology, Tamilnadu, India

1 Abhiram K., Sri Shakthi Institute of Engineering and Technology

2 Abimannan A., Sri Shakthi Institute of Engineering and Technology

3 Anandhu Raji, Sri Shakthi Institute of Engineering and Technology

4 Nishad S., Sri Shakthi Institute of Engineering and Technology

Abstract: The message conveyor for locked-in syndrome patients is an assistive technology designed to help individuals with locked-in syndrome communicate. Locked-in syndrome is a rare disorder of the nervous system in which patients are paralyzed except for the muscles that control eye movement. They are conscious (aware) and can think and reason, but cannot move or speak, although they may be able to communicate with blinks and eye movements. The system consists of a camera that detects the user's face and eyes and tracks eye movements to control a virtual keyboard on the screen. It uses a Histogram of Oriented Gradients (HOG) descriptor to detect the user's face and a Shape Predictor 68 Face Landmark model to locate the user's eyes. Eye blinks are detected by drawing two lines, one horizontal and one vertical, across the eye and checking for the disappearance of the vertical line. Eye gaze is detected by isolating the eyeballs and applying a threshold to them; the system also counts the white part of the eye to determine the direction of gaze. The virtual keyboard is displayed on the screen and is controlled by eye movements and blinking: the user selects letters and symbols by blinking, and additional controls are provided to adjust settings such as the language and speed of the speech output. The output can be text, shown on a 16x2 LCD, or speech, pronounced through a speaker, as determined by the user's selections on the virtual keyboard. In summary, this eye gaze-controlled typing system enables individuals with motor impairments or disabilities to communicate using eye movements and blinking. It uses computer vision algorithms to detect the user's face, eyes, and gaze direction, and provides a simple, intuitive interface for controlling the virtual keyboard and adjusting the system settings.

Keywords: Assistive technology, Eye gaze tracking, Computer vision, Virtual keyboard, Eye blink detection, NodeMCU, LCD, Voice playback, Disabilities.

I. INTRODUCTION

The message conveyor for locked-in syndrome patients is an assistive technology that helps individuals with motor impairments or disabilities communicate using eye movements and blinking. The system uses computer vision algorithms to detect the user's face, eyes, and gaze direction, and provides a simple, intuitive interface for controlling a virtual keyboard and adjusting the system settings. It detects the user's face with a Histogram of Oriented Gradients (HOG) descriptor and tracks the user's eyes with a Shape Predictor 68 Face Landmark model. Eye blinks are detected by drawing two lines across the eye and checking for the disappearance of the vertical line. Eye gaze is detected by isolating the eyeballs and applying a threshold to them; the system also counts the white part of the eye to determine the direction of gaze. The virtual keyboard is displayed on the screen and is controlled by eye movements and blinking. The user selects letters and symbols by blinking, and additional controls adjust settings such as the language and speed of the speech output. The output can be text, displayed on a 16x2 LCD, or speech, pronounced through a speaker, as determined by the user's selections on the virtual keyboard. Overall, the eye gaze-controlled typing system gives individuals with motor impairments or disabilities a new way to communicate and express themselves.

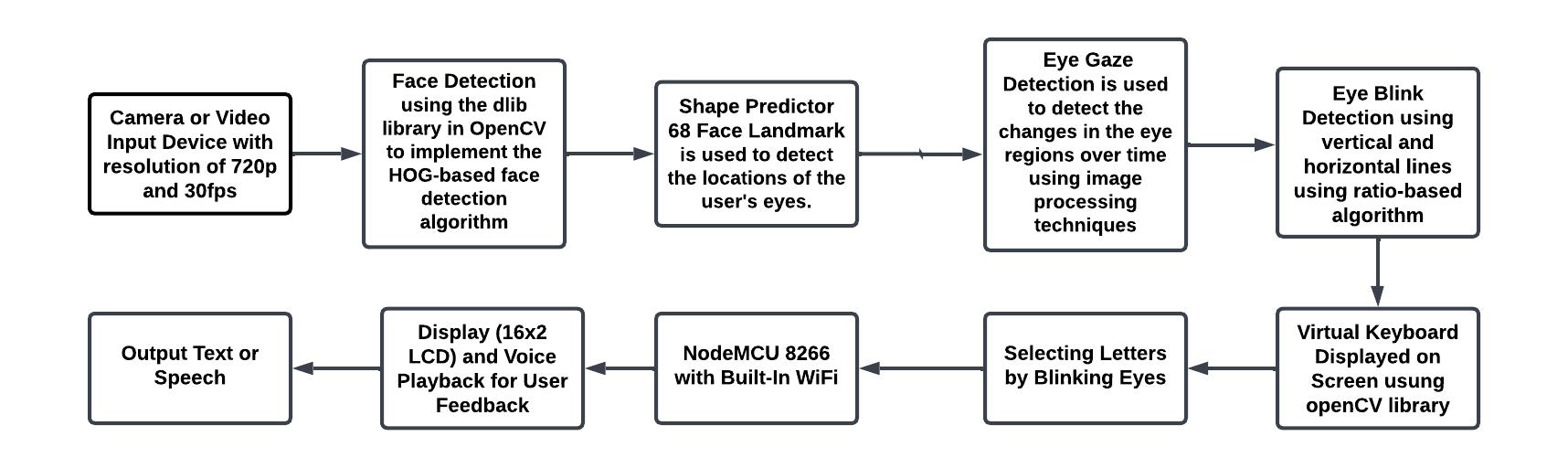

II. BLOCK DIAGRAM

III. PROPOSED METHODOLOGY

The camera (or other video input device) captures the user's face and eye movements. The face detection component uses the Histogram of Oriented Gradients (HOG) feature descriptor to locate the user's face in each frame. The shape predictor 68 face landmarks model then places 68 specific points on the face, outlining features such as the eyes, nose, lips, eyebrows, and jawline, and the eye detection component uses these landmarks to locate the user's eyes. The eye blink detection component tracks a vertical and a horizontal line across each eye to detect blinking. The eye gaze detection component tracks the user's eyeballs and applies a threshold to identify the white part of the eye, which allows the system to determine which part of the virtual keyboard the user is looking at. The virtual keyboard is displayed on the screen, and the user selects letters and other characters by blinking. The NodeMCU, with built-in Wi-Fi, receives the user's text selection and passes it to the display (16x2 LCD) and voice playback components for user feedback. In this way, the user can interact with the system and control its functions.

IV. MODULE DESCRIPTION

A. Camera or Video Input Device

The camera used in the eye gaze-controlled typing system can vary depending on the specific implementation and requirements of the system. In general, a camera with high resolution and a good frame rate is preferred for accurate tracking of the user's eye movements: a resolution of 720p or higher is suitable for eye tracking, and the frame rate should be at least 30 frames per second (fps) to ensure smooth and accurate tracking. The camera should also perform well in low light, as the system may be used under different lighting conditions. Both webcams and smartphone cameras can be used for eye tracking. Webcams are typically preferred for their ease of use and stability, while smartphone cameras may be more portable and flexible in placement. The camera must also be compatible with the software used for face detection, eye detection, and eye tracking, so it is worth checking the software documentation or the manufacturer for any recommended camera requirements.
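The 30 fps guideline can be checked with simple arithmetic. The sketch below is a minimal illustration, not part of the described system: it converts a frame rate into the number of frames captured during a blink. It assumes that a typical blink lasts roughly 100 to 400 ms, an approximate figure that is not measured in this work.

```python
def frame_period_ms(fps: int) -> float:
    """Time between consecutive frames, in milliseconds."""
    return 1000.0 / fps


def frames_during(duration_ms: int, fps: int) -> int:
    """Whole frames captured during an event of the given duration.
    Integer arithmetic avoids floating-point rounding at exact multiples."""
    return duration_ms * fps // 1000


# Assumed blink duration range in ms (approximate, not measured in this system).
BLINK_MS = (100, 400)

for fps in (15, 30, 60):
    low, high = (frames_during(ms, fps) for ms in BLINK_MS)
    print(f"{fps} fps: {frame_period_ms(fps):.1f} ms per frame, "
          f"a blink spans about {low} to {high} frames")
```

Under this assumption, at 15 fps the shortest blinks cover a single frame, which leaves the blink detector little margin, while at 30 fps even a short blink spans about 3 frames.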

Web Camera

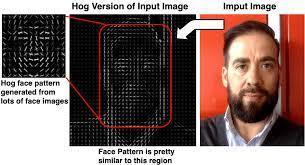

B. Face Detection (using HOG)

The face detection block in the eye gaze-controlled typing system uses the Histogram of Oriented Gradients (HOG) feature descriptor to detect faces in the captured image. HOG is a popular feature descriptor used in computer vision and image processing for object detection, including face detection. Face detection using HOG works as follows.

Image pre-processing: The input image is pre-processed to improve the performance of the face detection algorithm. This may include greyscale conversion, contrast normalization, and histogram equalization.

Sliding window approach: A detection window is slid across the image (typically over several scales, so that faces of different sizes are found). Each window is divided into small cells, grouped into overlapping blocks, and is represented as a feature vector using the HOG descriptor.

Gradient orientation calculation: The gradient magnitude and direction are computed for every pixel, and the descriptor counts the occurrences of each gradient orientation within a cell, weighting each vote by the gradient magnitude.

Histogram of oriented gradients: The orientation values are grouped into bins to form a histogram for each cell. The histograms are normalized over each block, and the HOG descriptor is the concatenation of these normalized histograms.

Support Vector Machine (SVM) classification: The HOG descriptor is used as input to a binary SVM classifier, which is trained to distinguish between face and non-face windows using a large number of positive (face) and negative (non-face) examples.

Non-maximum suppression: The output of the classifier is a set of bounding boxes around potential face regions. To reduce false positives and duplicate detections, non-maximum suppression eliminates overlapping bounding boxes and keeps only the most likely face region.

The face detection block implements this HOG-based algorithm with the face detector from the dlib library, used alongside OpenCV. This detector is reliable for frontal faces and fast enough to run in real time on an ordinary CPU, which has made it a popular choice for many computer vision applications.
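The descriptor and suppression steps above can be sketched in plain Python. This is a simplified illustration, not dlib's implementation: it bins gradients into nine unsigned orientation bins, as in the classic HOG formulation, but omits bin interpolation and block normalization, and the 0.5 overlap threshold for non-maximum suppression is an assumed value.

```python
import math


def cell_histogram(cell, n_bins=9):
    """Magnitude-weighted histogram of unsigned gradient orientations
    (0 to 180 degrees) for one cell, given as rows of grayscale values.
    Border pixels are skipped so that centred differences stay in bounds."""
    bin_width = 180.0 / n_bins
    hist = [0.0] * n_bins
    for y in range(1, len(cell) - 1):
        for x in range(1, len(cell[0]) - 1):
            gx = cell[y][x + 1] - cell[y][x - 1]  # horizontal [-1, 0, 1] filter
            gy = cell[y + 1][x] - cell[y - 1][x]  # vertical [-1, 0, 1] filter
            magnitude = math.hypot(gx, gy)
            angle = math.degrees(math.atan2(gy, gx)) % 180.0
            # Hard binning; "% n_bins" guards against an angle rounding up to 180.
            hist[int(angle // bin_width) % n_bins] += magnitude
    return hist


def iou(a, b):
    """Intersection over union of two boxes given as (left, top, right, bottom)."""
    def area(r):
        return (r[2] - r[0]) * (r[3] - r[1])

    iw = max(0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = area(a) + area(b) - inter
    return inter / union if union > 0 else 0.0


def non_max_suppression(boxes, scores, iou_threshold=0.5):
    """Greedy NMS: keep the highest-scoring box, discard boxes that overlap
    it by more than iou_threshold, and repeat. Returns the kept indices."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    while order:
        best = order.pop(0)
        keep.append(best)
        order = [i for i in order if iou(boxes[best], boxes[i]) <= iou_threshold]
    return keep
```

For a 4x4 cell whose left half is 0 and right half is 10, all four interior gradients point horizontally, so `cell_histogram` puts their total magnitude, 40.0, into bin 0. With boxes `(0, 0, 10, 10)`, `(1, 1, 11, 11)` and `(50, 50, 60, 60)` scored 0.9, 0.8 and 0.7, the first two overlap with an IoU of about 0.68, so `non_max_suppression` keeps indices `[0, 2]`.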

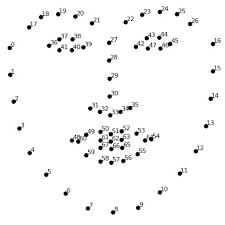

Fig. 4.2. Face Detection using HOG

C. Shape Predictor 68 Face Landmark

The shape predictor 68 face is a machine learning-based model that locates 68 specific landmarks, or key points, on the face. It is commonly used in computer vision applications such as face tracking, face recognition, and facial expression analysis. It works as follows. Training data: The model is trained on a large dataset of face images labeled with the locations of the 68 facial landmarks; training learns the relationship between the appearance of the face and the landmark locations. Feature extraction: The model describes the image around its current estimate of the landmarks. In dlib's implementation, which follows the ensemble-of-regression-trees method, these features are differences between pairs of pixel intensities rather than HOG features (HOG is used by the face detector that supplies the face region). Regression model: A cascade of regression trees takes these features as input and outputs updated landmark coordinates, refining the estimate of the 68 landmark positions at each stage.

Facial landmark detection: Once trained, the model detects the 68 facial landmarks in new images by applying the trained regression model to the detected face region. Landmark tracking: The landmark locations can be used to track the face as it moves, which is useful for applications such as facial expression analysis or eye gaze tracking. In the eye gaze-controlled typing system, the shape predictor 68 face is used to locate the user's eyes: specific landmarks, namely the eye corners and points along the upper and lower eyelids, outline each eye region (the 68-point model has no iris landmarks). Once the eye regions are located, the system applies additional image processing techniques to detect eye blinks and track the user's eye gaze to select keys on the virtual keyboard.

Fig. 4.3. Shape Predictor 68 Face
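The eye regions can be read directly off the 68-point layout. In the standard 68-point (iBUG 300-W) markup that dlib's `shape_predictor_68_face_landmarks` model follows, points 36 to 41 outline the eye that appears on the left of an unmirrored frame and points 42 to 47 outline the other eye (0-based indices). The sketch below assumes the landmarks have already been converted to a list of `(x, y)` tuples; the helper names and the margin parameter are illustrative.

```python
# 0-based index ranges of the two eyes in the 68-point landmark layout,
# named by where they appear in an unmirrored camera frame.
IMAGE_LEFT_EYE = range(36, 42)
IMAGE_RIGHT_EYE = range(42, 48)


def eye_points(landmarks, indices):
    """Pick the six (x, y) points outlining one eye.
    Order: corner, two upper-lid points, opposite corner, two lower-lid points."""
    return [landmarks[i] for i in indices]


def eye_box(points, margin=0):
    """Bounding box (left, top, right, bottom) around an eye, padded by
    `margin` pixels so the crop also includes some surrounding sclera and lid."""
    xs = [x for x, _ in points]
    ys = [y for _, y in points]
    return (min(xs) - margin, min(ys) - margin, max(xs) + margin, max(ys) + margin)
```

With dlib, the landmark list can be built from the predictor's result as `[(shape.part(i).x, shape.part(i).y) for i in range(68)]`, where `shape = predictor(gray, face_rect)`; the returned box can then be used to crop the eye region for the blink and gaze steps.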

D. Eye Gaze Detection

To detect eye gaze, the eye gaze-controlled typing system uses image processing techniques to analyze the video feed from the camera and monitor changes in the eye regions over time. The basic principle behind eye gaze detection is that the position of the iris within the eye indicates the direction of the person's gaze. The eye gaze detection algorithm first isolates the eye regions using the shape predictor 68 face model. It then applies a threshold to the grayscale eye region to convert it into a binary image, which is used to detect the iris and estimate the position of the pupil center. Once the pupil center is estimated, the system uses algorithms such as the Hough transform and circular pattern matching to estimate the position and direction of the gaze. This estimate is typically obtained by comparing the position of the pupil center with the positions of the eye corners and inferring the gaze direction from their relationship. By monitoring gaze direction, the system allows users to select keys on the virtual keyboard simply by moving their eyes.
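A minimal sketch of the threshold-and-compare approach follows. It assumes a grayscale eye crop given as rows of pixel values, with the eye-corner x coordinates expressed in the same crop coordinates. As a simpler stand-in for the Hough transform or circular pattern matching mentioned above, it takes the centroid of the dark (below-threshold) pixels as the pupil center. The threshold level and the 0.35/0.65 ratio cut-offs are hypothetical values that would need tuning for a given camera and lighting.

```python
def dark_mask(gray, level):
    """Binary mask: 1 where a pixel is darker than `level` (iris/pupil), else 0."""
    return [[1 if v < level else 0 for v in row] for row in gray]


def pupil_centre(mask):
    """Centroid (x, y) of the dark pixels, or None if the mask is empty
    (for example while the eye is closed)."""
    count = sum_x = sum_y = 0
    for y, row in enumerate(mask):
        for x, v in enumerate(row):
            if v:
                count += 1
                sum_x += x
                sum_y += y
    if count == 0:
        return None
    return (sum_x / count, sum_y / count)


def gaze_direction(pupil_x, corner_a_x, corner_b_x, low=0.35, high=0.65):
    """Classify horizontal gaze from where the pupil sits between the corners.
    Returns (label, ratio); 'left' and 'right' refer to image coordinates."""
    left_x, right_x = sorted((corner_a_x, corner_b_x))
    ratio = (pupil_x - left_x) / (right_x - left_x)
    if ratio < low:
        return "left", ratio
    if ratio > high:
        return "right", ratio
    return "center", ratio
```

For a 3x10 crop whose columns 1 and 2 are dark, the pupil center is `(1.5, 1.0)`; with corners at x = 0 and x = 9 the ratio is about 0.17, which this sketch classifies as "left".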

E. Eye Blink Detection using vertical and horizontal lines

To detect eye blinks, the eye gaze-controlled typing system uses image processing techniques to analyze the video feed from the camera and monitor changes in the eye regions over time. The basic principle behind eye blink detection is that the eyelids close when a person blinks, temporarily occluding the eye region. This change can be detected with image processing techniques such as edge detection, thresholding, and blob analysis. The eye blink detection algorithm first isolates the eye regions using the shape predictor 68 face model. It then applies a threshold to the grayscale image to convert it into a binary image, which is used to detect changes in the eye regions over time. To detect blinks, the system draws two lines over each eye region, one vertical and one horizontal. When the eye is open, both lines are visible. When the eye blinks, the eyelids meet and the vertical line temporarily disappears. The system tracks this disappearance of the vertical line to detect eye blinks.
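One common way to turn the two-line idea into a measurement, assumed here rather than taken from the authors' code, is to draw the horizontal line between the two eye corners and the vertical line between the midpoints of the upper and lower eyelids (using the six eye landmarks of the 68-point model), then compare their lengths. As the lid closes, the vertical line shrinks towards zero, so the ratio of horizontal to vertical length grows sharply. The threshold of 5.0 below is a hypothetical starting value to be tuned per user and camera.

```python
import math


def midpoint(p, q):
    """Midpoint of two (x, y) points."""
    return ((p[0] + q[0]) / 2, (p[1] + q[1]) / 2)


def blink_ratio(eye):
    """Horizontal line length divided by vertical line length for one eye.

    `eye` holds six (x, y) landmarks in 68-point order: corners at 0 and 3,
    upper lid at 1 and 2, lower lid at 5 (below 1) and 4 (below 2)."""
    horizontal = math.dist(eye[0], eye[3])
    vertical = math.dist(midpoint(eye[1], eye[2]), midpoint(eye[5], eye[4]))
    # A fully collapsed vertical line means the eye is closed.
    return horizontal / vertical if vertical > 0 else math.inf


def eye_closed(eye, threshold=5.0):
    """True when the vertical line has (nearly) disappeared."""
    return blink_ratio(eye) > threshold
```

For an open eye whose corners are 10 pixels apart and whose lids are 6 pixels apart, the ratio is about 1.7; when the lids close to 1 pixel apart, the ratio rises to 10 and `eye_closed` returns `True`.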

F. Virtual Keyboard Displayed on Screen

The virtual keyboard in the eye gaze-controlled typing system is a software user interface that lets users input text using eye movements. It is displayed on the screen and consists of a grid of keys, similar to a standard physical keyboard; the keys can include letters, numbers, and symbols, depending on the language and preferences of the user. The keyboard is designed to be operated with eye movements, specifically eye blinks and gaze direction, and each key is selected by a combination of the two. To select a key, the user first gazes at it. Once the desired key is in focus, the user blinks to activate the selection. The system then registers the selected key and appends the character to a text buffer. The virtual keyboard output is received by the NodeMCU, a microcontroller board with built-in Wi-Fi. The NodeMCU drives a 16x2 LCD, which shows the received text, and a voice playback module, which pronounces the text through a speaker if a recording of it is stored. By combining the virtual keyboard with these hardware components, the system provides a complete solution for text input and output using only eye movements.
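The selection loop described above can be sketched as a small state machine, consistent with the cursor-based selection reported in the results. The layout, the two-half split, the `frames_per_key` timing, and the `_` (space) and `<` (backspace) keys are hypothetical choices for illustration: gaze direction picks which half of the keyboard is active, a highlight cursor steps through that half's keys at a fixed rate (the typing speed), and a blink types the highlighted key into the buffer.

```python
class VirtualKeyboard:
    """Hypothetical two-half eye-typing keyboard.

    Gaze ('left' or 'right') chooses the active half, a highlight cursor moves
    to the next key every `frames_per_key` frames, and a blink types the
    highlighted key. '_' types a space and '<' deletes the last character."""

    HALVES = {
        "left": "QWERTASDFGZXCV",
        "right": "YUIOPHJKLBNM_<",
    }

    def __init__(self, frames_per_key=10):
        self.frames_per_key = frames_per_key  # larger value = slower cursor
        self.half = "left"
        self.frame = 0
        self.buffer = []

    @property
    def current_key(self):
        keys = self.HALVES[self.half]
        return keys[(self.frame // self.frames_per_key) % len(keys)]

    def update(self, gaze=None, blinked=False):
        """Process one video frame and return the text typed so far."""
        if gaze in self.HALVES and gaze != self.half:
            self.half, self.frame = gaze, 0  # restart the cursor in the new half
        if blinked:
            key = self.current_key
            if key == "<":
                if self.buffer:
                    self.buffer.pop()
            else:
                self.buffer.append(" " if key == "_" else key)
            self.frame = 0  # start the cursor again from the first key
        else:
            self.frame += 1
        return "".join(self.buffer)
```

The `frames_per_key` parameter plays the role of a speed setting: users who need more time to react can be given a slower cursor.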

G. Selecting Letters by Blinking Eyes

In the eye gaze-controlled typing system, each letter on the virtual keyboard is selected by a combination of eye gaze and eye blink. The user gazes at the letter they want to select; once the desired letter is in focus, the user blinks to select it. The system detects the blink, registers the selection, and adds the selected letter to a buffer that holds the text entered so far. The process is repeated for each letter the user wants to select. To recognize blinks and distinguish them from other eye movements, the computer vision algorithm detects when the upper and lower eyelids come together and then separate again. By setting a threshold on the duration of this closure, the system can distinguish a blink from other eye movements, such as saccades or fixations. The combination of eye gaze and eye blink allows the user to select letters on the virtual keyboard accurately. This method of text input takes some practice, but it can be an effective way for individuals with motor disabilities to communicate using only their eyes.
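The duration threshold can be sketched as a per-frame counter that fires when the eye reopens after staying closed long enough. It assumes an upstream per-frame open/closed decision (for example from the two-line test in Section E); the class name and the default of 3 frames (about 100 ms at 30 fps) are illustrative assumptions.

```python
class BlinkDetector:
    """Registers one deliberate blink each time the eye reopens after being
    closed for at least `min_closed_frames` consecutive frames. Shorter
    closures (detector noise, very brief flickers) are ignored."""

    def __init__(self, min_closed_frames=3):
        self.min_closed_frames = min_closed_frames
        self.closed_run = 0  # consecutive frames the eye has been closed

    def update(self, eye_closed):
        """Feed one frame's open/closed state; return True when a blink completes."""
        if eye_closed:
            self.closed_run += 1
            return False
        run, self.closed_run = self.closed_run, 0
        return run >= self.min_closed_frames
```

With the default setting, the frame sequence open, closed, closed, open registers nothing, while open, closed, closed, closed, open registers a single blink on the reopening frame.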

H. NodeMCU with Built-In Wi-Fi

NodeMCU is an open-source development board designed for the Internet of Things (IoT). It is based on the ESP8266 microcontroller and includes built-in Wi-Fi, making it easy to connect to the internet and communicate with other devices. The NodeMCU board is similar to other development boards such as the Arduino, but it is designed specifically for IoT applications. It can be programmed in the Lua scripting language through the NodeMCU firmware, which is easy to learn and use, or in C++ through the Arduino IDE. Several features make NodeMCU a popular choice for IoT projects. Wi-Fi connectivity: built-in Wi-Fi allows it to connect to the internet and communicate with other devices. GPIO pins: several general-purpose input/output (GPIO) pins can be used to connect sensors, actuators, and other devices. Analog input: a single analog input pin (A0) can read values from sensors that output analog signals. USB interface: the board can be connected to a computer via USB, which allows it to be programmed and powered. Low cost: NodeMCU is a relatively inexpensive development board, making it accessible to hobbyists and students. In the eye gaze-controlled typing system, NodeMCU receives the text selected by the user on the virtual keyboard. This text is then displayed on a 16x2 LCD and pronounced using a speaker connected to the NodeMCU board. Because the computer running the virtual keyboard sends the text to NodeMCU over Wi-Fi, no cable is needed between the computer and the display unit.
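On the computer side, passing the typed text to the NodeMCU over Wi-Fi can be as simple as an HTTP request. The sketch below uses only Python's standard library and assumes that the NodeMCU firmware runs a small web server; the `/message` path, the `text` query parameter, and the host address are hypothetical placeholders that must match whatever handler the board actually exposes (192.168.4.1 is the ESP8266's default address when it acts as its own access point).

```python
from urllib.parse import urlencode
from urllib.request import Request, urlopen


def build_message_request(host, text):
    """Build a GET request that carries the typed text as a URL-encoded
    query parameter. The '/message' path and 'text' name are hypothetical."""
    query = urlencode({"text": text})
    return Request(f"http://{host}/message?{query}", method="GET")


def send_message(host, text, timeout=2.0):
    """Send the text to the board and return the HTTP status code.
    Raises urllib.error.URLError if the board cannot be reached."""
    with urlopen(build_message_request(host, text), timeout=timeout) as response:
        return response.status
```

For example, `build_message_request("192.168.4.1", "I NEED WATER").full_url` is `http://192.168.4.1/message?text=I+NEED+WATER`; `send_message` performs the actual request once the computer and the board are on the same network.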

J. Output Text or Speech

Fig.4.4.NodeMCUwithBuilt-InWIFI

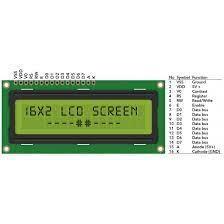

I. Display (16x2 LCD) and Voice Playback for User Feedback

In the eye gaze-controlled typing system, a 16x2 LCD displayandaspeakerareusedtoprovidevisualandaudio feedback to the user. The 16x2 LCD display is a type of alphanumericdisplaythatcanshowupto32charactersat atimeintwolinesof16characterseach.Itisacommonly useddisplayinembeddedsystemsandiseasytointerface with using digital input/output pins. In the eye gazecontrolled typing system, the LCD display is used to show the text selected by the user on the virtual keyboard. This allows the user to confirm that the correct text has been selected before it is pronounced by the speaker. The speaker is used to provide audio feedback to the user in the form of spoken words. In the eye gaze-controlled typing system, the speaker is connected to the NodeMCU board and is used to pronounce the text selected by the useronthevirtualkeyboard.Thespeakercanbeanytype of audio playback devices, such as a simple piezoelectric buzzer or a more advanced speaker with built-in amplification. Both the display and the speaker are connected to the NodeMCU board using digital input/output pins. The NodeMCU board communicates withthesecomponentsusingsoftwarelibrariesthatallow for easy interfacing with the display and speaker. This allows the user to receive both visual and audio feedback fromthesystem,makingitmoreuser-friendly.

Theoutputoftheeyegaze-controlledtypingsystemcanbe in the form of text or speech, depending on the user's preference. If the user selects text as the output, the system will display the selected text on the 16x2 LCD display. The user can then read the text and use it as needed.Iftheuserselectsspeechastheoutput,thesystem will pronounce the selected text using the speaker. The speakercanbeprogrammedtoproducespeechinavariety of languages and accents, allowing for greater flexibility and accessibility for users from different backgrounds. In eithercase,theoutputtextorspeechisdeterminedbythe user's selections on the virtual keyboard, which is controlled by eye movements and blinking. This allows users with motor impairments or disabilities to interact with the system and produce text or speech output in a waythatiscomfortableandnaturalforthem.
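The display and playback rules can be sketched as two helpers. This is an illustration in Python rather than code for the board itself, and the stored phrases and track numbers are hypothetical. `lcd_lines` fits text to the 16x2 screen by showing only the most recent 32 characters, and `playback_track` returns the recording to play when the typed text matches a stored phrase, mirroring the rule that the speaker pronounces the text only if it is stored.

```python
LCD_COLS, LCD_ROWS = 16, 2

# Hypothetical phrase-to-track table for a voice playback module.
STORED_PHRASES = {
    "I NEED WATER": 1,
    "CALL THE NURSE": 2,
    "I AM IN PAIN": 3,
}


def lcd_lines(text, cols=LCD_COLS, rows=LCD_ROWS):
    """Split text into `rows` strings of exactly `cols` characters.
    When the text exceeds the 32-character capacity, only the last
    32 characters are shown, so the newest input stays visible."""
    capacity = cols * rows
    visible = text[-capacity:].ljust(capacity)
    return [visible[r * cols:(r + 1) * cols] for r in range(rows)]


def playback_track(text):
    """Return the stored track number for the text, or None if there is no
    recording. Case and repeated spaces are normalized before lookup."""
    return STORED_PHRASES.get(" ".join(text.upper().split()))
```

For example, a 26-letter alphabet string fills the first row with `ABCDEFGHIJKLMNOP` and the second with `QRSTUVWXYZ` followed by six spaces, and typing "i need water" would select track 1.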

V. RESULT AND DISCUSSION

After implementing the complete setup, we ran the message conveyor several times to obtain results. The device is designed to help people who are physically disabled and unable to speak to convey their messages to others. The system detects an eye blink, and the letter on which the cursor is placed at the moment of the blink is shown on the screen; repeating this builds up a sentence. The system is designed at very low cost so that it is affordable to everyone, and it is also accessible to small children. The proposed system provides a robust technique for physically disabled people to write, although it is not a quick way of writing for able-bodied users. It opens a new dimension in the lives of disabled people who have no physical abilities other than eye movement.

VI. CONCLUSION

The message conveyor for locked-in syndrome patients is a significant advancement in assistive technology, providing an intuitive and efficient means of communication for individuals with motor impairments or disabilities. The system uses computer vision algorithms to detect the user's face, eyes, and gaze direction and provides a simple, easy-to-use interface for controlling the virtual keyboard. It is designed to be customizable, with settings that can be adjusted to suit the user's needs and preferences. The output can be text, displayed on an LCD, or speech, pronounced through a speaker. The eye gaze-controlled typing system has the potential to greatly improve the quality of life of individuals with motor impairments or disabilities, enabling them to communicate more effectively and express themselves more freely. It represents a significant step forward in assistive technology and could become an essential tool for individuals with disabilities.
