Obstacle Detection for Visually Impaired Using Computer Vision

Tushar, Vedika Avhad

1,2,3,4 B.E. Student, Dept of Information Technology, VPPCOE&VA, Mumbai, Maharashtra, India

5 Assistant Professor, Dept of Information Technology, VPPCOE&VA, Mumbai, Maharashtra, India

***

Abstract - Blindness refers to the loss of visual perception, which can cause mobility and self-reliance issues for people who are visually impaired or blind. “Visioner” is a tool for blind individuals that uses computer-vision-based obstacle detection to improve their quality of life. This intelligent aid is equipped with a LiDAR distance sensor and a camera, and is designed to help visually impaired and blind people navigate both indoor and outdoor environments. Visioner is a cost-effective, efficient, and reliable device that is lightweight, fast-responding, and energy-efficient. The device's algorithm and computer vision technology detect objects in the surrounding environment and relay this information to the user through voice messages. To enhance usability, support for multiple languages will be included, allowing users to select the language according to their preference.

Key Words: LiDAR Sensor, Arduino Uno, ESP32 CAM, YOLO, Smart Blind Stick.

1. INTRODUCTION

In 2014, the World Health Organization (WHO) released a report stating that approximately 285 million individuals were visually impaired. Of this total, 39 million people were classified as blind, while 246 million were categorized as having low vision. Modern technologies have led to the development of Electronic Travel Aids (ETAs), which are designed to enhance mobility for visually impaired individuals. These devices are equipped with sensors that can detect potential hazards and alert the user with sounds or vibrations, providing advance warning and enabling users to avoid obstacles and navigate their surroundings with greater confidence. The availability of ETAs has significantly improved safety and independence for visually impaired individuals, enhancing their quality of life and enabling greater participation in society.

The Visioner smart stick is a new technology designed to aid visually impaired individuals in navigating their surroundings. It is equipped with a LiDAR sensor, an ESP32 camera module, and a buzzer to help identify objects and obstacles in the environment. The purpose of the smart stick is to provide a more reliable and efficient way for the visually impaired to move around and navigate their surroundings. Visioner is a cost-effective, efficient, and user-friendly ETA that uses Internet of Things (IoT) technology.

2. LITERATURE REVIEW

In this section, previous and existing systems are summarized. Although many technologies for visually challenged people have emerged in the last few years, these inventions still have many limitations and restrictions.

Kim S. Y. & Cho K. [1] proposed that design guidelines for a smart cane with obstacle detection and indication can be generated by analyzing users' needs and requirements. The study found that a smart cane offers visually impaired people more advantages than a traditional white cane, as it is more effective in avoiding obstacles.

Z. Saquib, V. Murari and S. N. Bhargav [2] proposed BlinDar: An Invisible Eye for the Blind People, a highly efficient and cost-effective navigation aid for the blind that uses ultrasonic sensors, MQ2 gas sensors, an ESP8266 Wi-Fi module, a GPS module, a wristband key button, a buzzer, a vibrator module, a speech synthesizer, and an RF Tx/Rx module. However, one limitation of the device is its long scanning time, which results in a longer waiting time for the user.

R. O'Keeffe et al. [3] proposed an obstacle detection system for the visually impaired and blind that uses a long-range LiDAR sensor mounted on a white cane. The system operates both indoors and outdoors and has a range of up to 5 m; the paper reports the characterization results of the Gen1 long-range LiDAR sensor. This sensor is integrated with short-range LiDAR, UWB radar, and ultrasonic range sensors, and each range sensor must meet specific requirements, including a small power budget, size, and weight, while maintaining the necessary detection range under various environmental conditions.

N. Krishnan [4] proposed a low-cost proximity sensing system with embedded LiDAR for visually impaired individuals. While the system is precise at medium to long distances, it requires compensation for the loss of linearity at short distances. The circuit was designed around the available component resources, and the DRV2605L haptic driver was placed in PWM interface mode to control vibration strength. The system detects obstacles efficiently, but conveying the information to the user remains a challenge.

M. Maragatharajan, G. Jegadeeshwaran, R. Askash, K. Aniruth, A. Sarath [5] introduced the Third Eye for the Blind project, a wearable band designed to help visually impaired individuals navigate their environment. The device uses ultrasonic waves to detect nearby obstacles and alerts the user through either vibrations or buzzing sounds. The obstacle detector is based on the Arduino platform and is portable, cost-effective, and user-friendly. By enabling the detection of obstacles in all directions, the device empowers the visually impaired to move independently and with greater confidence, reducing their reliance on external assistance.

3. PROPOSED SYSTEM

3.1 PROBLEM STATEMENT

The visually impaired face significant challenges in mobility and safety, particularly in unfamiliar environments. Existing assistive technologies such as canes and guide dogs have limitations in detecting obstacles and providing real-time information about the environment. Without access to visual cues, visually impaired people find it difficult to navigate and avoid obstacles, which leads to accidents and injuries. Therefore, there is a need for an assistive device that can provide accurate, real-time information about the environment to enhance mobility and safety for the visually impaired. The Visioner smart stick aims to address this problem by using advanced sensors and machine learning algorithms to detect and identify obstacles and provide speech output to the user in multiple languages, thereby improving their independence and quality of life.

3.2 PROPOSED METHODOLOGY

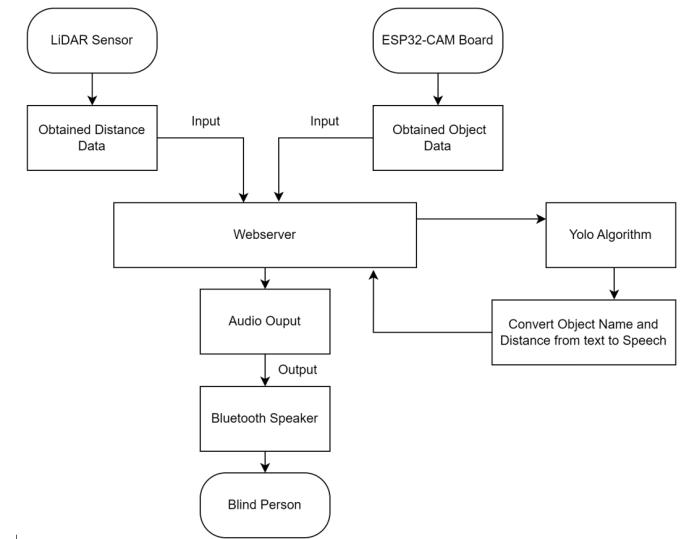

This project is an IoT-based solution that uses an Arduino Uno, a LiDAR sensor, an ESP32 CAM board, and the YOLO algorithm for obstacle detection. The LiDAR sensor identifies obstacles and records their precise distance, which is then transmitted to the ESP32 CAM board. The ESP32 CAM board captures an image of the obstacle and transmits both the image and the distance value to a server. Machine learning algorithms such as YOLO are employed for real-time object classification and identification. Speech output is provided to the user in their preferred language, using either the pyttsx3 library or the Web Speech API. Seamless data transfer from the sensors to the web server and ultimately to the user's device allows visually impaired users to navigate their surroundings with ease.

3.3 System Architecture

The system architecture consists of a blind stick that houses an Arduino Uno board connected to a battery. A TF-Luna Micro LiDAR sensor is connected to the Arduino's pins 2 and 3 (RX and TX, respectively); its main purpose is to capture the distance to obstacles. The Arduino board calculates the distance in cm and sends this value to an ESP32 CAM board connected to it at pins 0 and 1 (RX and TX, respectively). The ESP32 CAM board also captures an image of the obstacle and sends both the LiDAR distance value in cm and the image to a web server.
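The paper does not reproduce the firmware, so the following Python sketch only illustrates the serial step under stated assumptions: the TF-Luna is left in its default 9-byte UART output mode (header 0x59 0x59, distance low/high byte, signal-strength low/high byte, two temperature bytes, and a checksum equal to the low 8 bits of the sum of the first eight bytes, as described in Benewake's TF-Luna documentation). The function names `parse_tfluna_frame` and `find_distances` are hypothetical; on the Arduino the same logic would be written in C++ against the serial port.

```python
from typing import List, Optional, Tuple

HEADER = 0x59   # both frame-header bytes are 0x59 (assumed default TF-Luna format)
FRAME_LEN = 9   # bytes per frame in the default output mode


def parse_tfluna_frame(frame: bytes) -> Optional[Tuple[int, int]]:
    """Decode one frame into (distance_cm, signal_strength), or None if invalid."""
    if len(frame) != FRAME_LEN or frame[0] != HEADER or frame[1] != HEADER:
        return None
    # Checksum: low 8 bits of the sum of bytes 0..7.
    if sum(frame[:8]) & 0xFF != frame[8]:
        return None
    distance_cm = frame[2] | (frame[3] << 8)   # Dist_L, Dist_H (little-endian)
    strength = frame[4] | (frame[5] << 8)      # Amp_L, Amp_H
    # Bytes 6-7 carry the chip temperature; not needed for obstacle detection.
    return distance_cm, strength


def find_distances(stream: bytes) -> List[int]:
    """Scan a raw serial byte stream and return every valid distance in cm."""
    distances: List[int] = []
    i = 0
    while i + FRAME_LEN <= len(stream):
        parsed = parse_tfluna_frame(stream[i:i + FRAME_LEN])
        if parsed is None:
            i += 1  # not aligned on a frame: resynchronize one byte later
        else:
            distances.append(parsed[0])
            i += FRAME_LEN
    return distances
```

Resynchronizing one byte at a time lets the reader recover when it starts listening in the middle of a frame; the decoded distance in cm is the value that would then be forwarded to the ESP32 CAM board.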

On the web server, the image received from the ESP32 CAM board is analyzed using the YOLOv4 algorithm. The objects in the image are classified, and the total number of obstacles detected, along with their names and the distance of the nearest obstacle, is sent to the user device as speech output. This output is customizable in terms of language, volume, voice gender, and pitch, and can be configured using the web server's speech aid functionality. In addition, when the distance of the nearest obstacle is less than 30 cm, a buzzer sound is included in the speech output, warning the visually impaired user of an impending collision.
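The server-side step of turning detections into a spoken summary and deciding on the buzzer can be sketched as follows. This is an illustrative assumption, not the authors' code: the function name `build_announcement` and the exact sentence wording are hypothetical, and because the LiDAR measures a single distance along its beam, the sketch treats that one value as the nearest-obstacle distance rather than assigning a distance to each detected object. Only the 30 cm threshold comes from the system description.

```python
from collections import Counter
from typing import List, Tuple

# 30 cm collision-warning threshold, as stated in the system description.
WARNING_DISTANCE_CM = 30


def build_announcement(labels: List[str], nearest_cm: int) -> Tuple[str, bool]:
    """Compose the spoken summary and decide whether to add the buzzer sound.

    labels: class names returned by the detector for one image.
    nearest_cm: LiDAR distance of the nearest obstacle, in cm.
    """
    counts = Counter(labels)
    # Group repeated classes, e.g. ["person", "person"] -> "2 person".
    parts = [f"{n} {name}" for name, n in sorted(counts.items())]
    objects = ", ".join(parts) if parts else "none"
    text = (
        f"Total number of detected objects in the area: {len(labels)}. "
        f"Objects: {objects}. "
        f"Nearest obstacle at {nearest_cm} centimeters."
    )
    sound_buzzer = nearest_cm < WARNING_DISTANCE_CM
    return text, sound_buzzer
```

With pyttsx3, the returned text could be spoken via `engine = pyttsx3.init()`, `engine.say(text)` and `engine.runAndWait()`, with volume, rate and voice (and hence voice gender) set through `engine.setProperty`.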

Overall, the smart blind stick project provides a comprehensive solution to assist visually impaired individuals with navigation, and the system architecture ensures reliable and efficient operation of the device.

3.4 YOLO Algorithm

Object detection is a complex computer vision task that involves both localizing one or more objects within an image and classifying each object present in the image. The task is challenging because a detection is only correct if the object is both localized accurately and assigned the correct class.

YOLOv4 is an advanced real-time object detection algorithm that, at its release in 2020, achieved a state-of-the-art balance of speed and accuracy on the MS COCO benchmark. It builds on the previous versions of the YOLO (You Only Look Once) algorithm [7], which were already recognized for their speed and accuracy.

The YOLOv4 algorithm uses a deep convolutional neural network to detect and classify objects in images in real time. It is a single-stage object detection model that detects objects in one pass through the network, making it faster than many other object detection algorithms, such as two-stage detectors.

In this project, YOLOv4 analyzes the images captured by the ESP32 CAM board. The algorithm detects the objects present in each image and classifies them into different categories. This information is then used to provide the user with speech output that includes the total number of objects detected, their names, and the distance of the nearest object.

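The YOLOv4 algorithm works by dividing the input image into a grid and predicting bounding boxes and class probabilities for the objects within each grid cell (YOLOv4 does this at three scales, using anchor boxes). The algorithm then assigns a confidence score to each prediction and filters out low-confidence detections. Finally, non-maximum suppression is applied to remove redundant, overlapping detections of the same object.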
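The confidence filtering and non-maximum suppression steps described above can be sketched in Python as follows. This is a simplified, class-agnostic greedy NMS, assuming boxes in (x1, y1, x2, y2) pixel coordinates; the thresholds (0.5 confidence, 0.45 IoU) and the helper names `iou` and `filter_and_nms` are illustrative assumptions, not values or code from the paper. Production YOLOv4 pipelines (for example Darknet, or OpenCV's `cv2.dnn.NMSBoxes`) typically apply suppression per class.

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2), with x2 > x1 and y2 > y1


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def filter_and_nms(boxes: Sequence[Box], scores: Sequence[float],
                   conf_thresh: float = 0.5, iou_thresh: float = 0.45) -> List[int]:
    """Return indices of the boxes kept after confidence filtering and greedy NMS."""
    # 1. Drop low-confidence predictions.
    candidates = [i for i, s in enumerate(scores) if s >= conf_thresh]
    # 2. Visit the remaining boxes from most to least confident.
    candidates.sort(key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    while candidates:
        best = candidates.pop(0)
        keep.append(best)
        # 3. Suppress boxes that overlap the kept box too much (likely duplicates).
        candidates = [i for i in candidates if iou(boxes[best], boxes[i]) < iou_thresh]
    return keep
```

Greedy suppression keeps the highest-scoring box in each cluster of overlapping predictions, which is why a single obstacle is announced once rather than several times.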

The YOLOv4 algorithm is trained on large datasets, enabling it to detect objects accurately in a wide range of real-world scenarios, and it can be fine-tuned for specific use cases, making it adaptable to different applications. In this project, it classifies objects in real-time images captured by the ESP32 CAM board, giving visually impaired individuals a comprehensive understanding of their surroundings and helping them navigate safely.

4. RESULTS AND DISCUSSION

The sensors and modules depicted in Figure 1 were first tested individually, and their respective outputs were observed. First, the TF-Luna Micro LiDAR was tested. The device was programmed in the Arduino IDE environment, and the code was uploaded to the Arduino Uno microcontroller board. The LiDAR sensor emits a light beam onto the object, and the time taken to receive the reflected light is used to calculate the distance in centimeters.
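The distance calculation follows d = c·Δt / 2, since the light covers the sensor-to-obstacle path twice. The TF-Luna performs this computation internally and reports centimeters directly; the Python sketch below (with hypothetical helper names `tof_distance_cm` and `round_trip_s`) only illustrates the arithmetic, and shows why the timing is demanding: an obstacle at the 30 cm warning distance returns the light after only about 2 ns.

```python
# Speed of light in vacuum (m/s); the ~0.03% slowdown in air is ignored here.
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def tof_distance_cm(round_trip_s: float) -> float:
    """One-way distance in cm from the round-trip time of the light pulse.

    The light travels to the obstacle and back, so the one-way distance is
    half of (speed x time); the result is converted from meters to cm.
    """
    return SPEED_OF_LIGHT_M_PER_S * round_trip_s / 2 * 100


def round_trip_s(distance_cm: float) -> float:
    """Inverse: round-trip time in seconds for an obstacle at distance_cm."""
    return 2 * (distance_cm / 100) / SPEED_OF_LIGHT_M_PER_S
```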

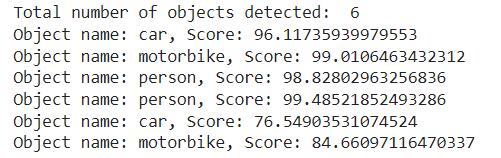

Subsequently, the ESP32 microcontroller, which has an OV2640 camera module attached to it, was tested. Its purpose is to capture an image and send it to the server whereobjectdetectionisapplied,asshowninFigure2.The figure displays the total number of objects detected, their names,andtheiraccuracy.

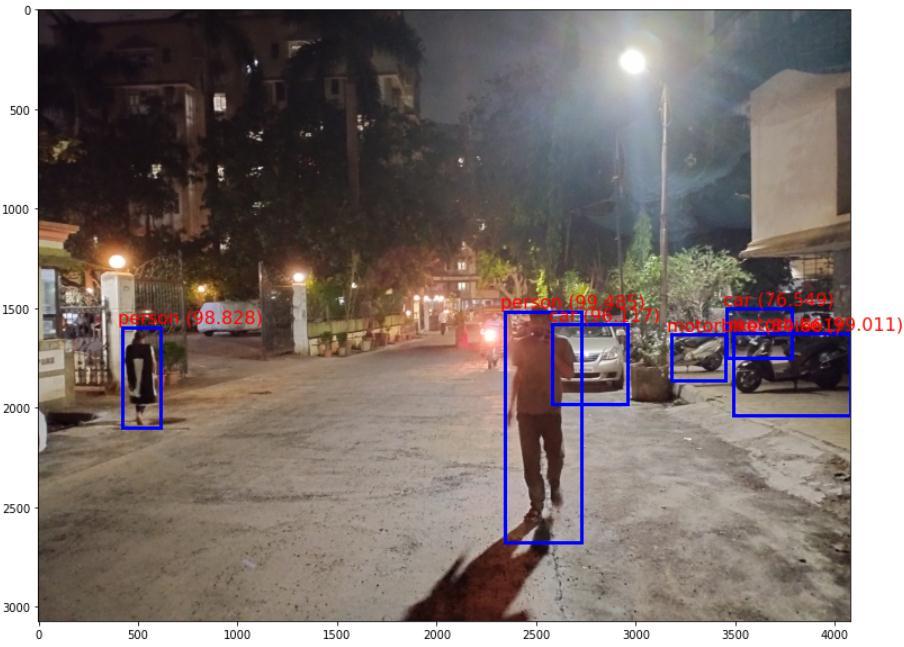

Fig.3. ScreenshotofYoloAlgorithmOutput2

Following the individual testing of the sensors, the integrationprocesswascarriedout,wherebyallcomponents wereconnectedtotheblindstick.Thebatterywasusedas theprimarypowersource,andaswitchwasincorporatedto providethefunctionalityofturningthestickonandoff.

5. CONCLUSION AND FUTURE SCOPE

5.1 CONCLUSION

Inconclusion,thedevelopmentoftechnologiessuchasIoT hascreatednewpossibilitiesforimprovingthequalityoflife forvisuallyimpairedindividuals.Thisprojectisanexample of how IoT technology can be harnessed to provide innovative solutions that aid the visually impaired in navigatingtheirsurroundingswithgreatereaseandsafety.

Fig.2. ScreenshotofYoloAlgorithmOutput1

The bounding box in the image for detected objects is presentedinFigure3.TheoutputoftheYoloAlgorithmis part of the backend process, and the user receives audio outputfromtheserverwherethe"Totalnumberofdetected Objectsinthearea,""Objects,"andtheirdistanceincmare announcedthroughthespeechsynthesislibrary.

The smart blind stick developed in this project uses a LiDAR sensor, computer vision algorithms, and speech output to detect and classify obstacles in the environment and provide real-time feedback to the user. The use of the YOLOv4 algorithm for object detection and classification makes the system accurate and reliable, and the ability to customize the speech output to suit individual preferences further enhances its usability. In summary, the smart blind stick developed in this project is an IoT-based solution that leverages LiDAR sensing, computer vision, and speech output to give visually impaired individuals a comprehensive understanding of their surroundings and help them navigate safely. The salient features of this project include accurate object detection and classification, real-time feedback, customizable speech output, cost-effectiveness, and ease of replication.

5.2 FUTURE SCOPE

This project has a promising future scope, offering opportunities for further research and development. Possible directions include improving the accuracy and precision of the sensors used for distance measurement and object detection, incorporating additional machine learning algorithms, and integrating the device with other technologies such as GPS or haptic feedback systems. The device's accessibility could be enhanced by exploring alternative components or optimizing the manufacturing process. This project lays the groundwork for future innovation in assistive technologies for individuals with visual impairments, with the potential to greatly improve their quality of life and independence.

ACKNOWLEDGEMENT

We wish to express our sincere gratitude to Prof. Vedika Avhad, Assistant Professor, Department of Information Technology, for her guidance and encouragement in carrying out this project.

We also thank the faculty of our department for their help and support during the completion of our project. We sincerely thank the Principal of Vasantdada Patil Pratishthan's College of Engineering for providing us the opportunity to carry out this project.

REFERENCES

[1] S. Y. Kim and K. Cho, "Usability and design guidelines of smart canes for users with visual impairments," International Journal of Design, vol. 7, no. 1, pp. 99-110, 2013.

[2] Z. Saquib, V. Murari and S. N. Bhargav, "BlinDar: An invisible eye for the blind people making life easy for the blind with Internet of Things (IoT)," 2017 2nd IEEE International Conference on Recent Trends in Electronics, Information & Communication Technology (RTEICT), Bangalore, India, 2017, pp. 71-75, doi: 10.1109/RTEICT.2017.8256560.

[3] R. O'Keeffe et al., "Long Range LiDAR Characterisation for Obstacle Detection for use by the Visually Impaired and Blind," 2018 IEEE 68th Electronic Components and Technology Conference (ECTC), San Diego, CA, USA, 2018, pp. 533-538, doi: 10.1109/ECTC.2018.00084.

[4] N. Krishnan, "A LiDAR based proximity sensing system for the visually impaired spectrum," 2019 IEEE 62nd International Midwest Symposium on Circuits and Systems (MWSCAS), Dallas, TX, USA, 2019, pp. 1211-1214, doi: 10.1109/MWSCAS.2019.8884887.

[5] M. Maragatharajan, G. Jegadeeshwaran, R. Askash, K. Aniruth, A. Sarath, "Obstacle Detector for Blind Peoples," International Journal of Engineering and Advanced Technology (IJEAT), pp. 61-64, Dec. 2019.

[6] Y.-C. Huang and C.-H. Tsai, "Speech-Based Interface for Visually Impaired Users," 2018 IEEE 20th International Conference on High Performance Computing and Communications; IEEE 16th International Conference on Smart City; IEEE 4th International Conference on Data Science and Systems (HPCC/SmartCity/DSS), Exeter, UK, 2018, pp. 1223-1228, doi: 10.1109/HPCC/SmartCity/DSS.2018.00206.

[7] J. Redmon, S. Divvala, R. Girshick and A. Farhadi, "You Only Look Once: Unified, Real-Time Object Detection," 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 2016, pp. 779-788, doi: 10.1109/CVPR.2016.91.