Deep Learning based Multi-class Brain Tumor Classification

1Student, Department of Information Technology, Pillai College of Engineering, New Panvel, Maharashtra, India

2Student, Department of Information Technology, Pillai College of Engineering, New Panvel, Maharashtra, India

3Student, Department of Information Technology, Pillai College of Engineering, New Panvel, Maharashtra, India

4Student, Department of Information Technology, Pillai College of Engineering, New Panvel, Maharashtra, India

5Professor, Department of Computer Engineering, Pillai College of Engineering, New Panvel, Maharashtra, India

Abstract - A brain tumor is a kind of cancer that can affect people, sometimes fatally or with significant impairment of quality of life. Using deep learning techniques, researchers can identify tumors and treat them more efficiently. Brain MRI images can be analyzed in a variety of ways to find malignancies, and deep learning techniques have significantly outperformed the other approaches. Within the proposed framework, several models are compared for tumor detection from brain MRI scans. The Convolutional Neural Network (CNN) architectures compared are a custom CNN, DenseNet169, MobileNet, VGG-16, and ResNet152. All models are trained with the same hyper-parameters on the same dataset of MR images, using the same preprocessing procedures. The goal is to develop an architecture that compares various models for classifying brain tumor MRI images. Machine learning and deep learning algorithms can directly scan an image and determine the presence and type of tumor, so comparing the brain tumor detection performance of various methods is useful. The dataset used for brain tumor detection consists of approximately 5000 brain MR images.

Key Words: Classification, Neural Networks, Brain Tumor Classification, Deep Learning, Artificial Intelligence

1. INTRODUCTION

A relatively large number of people are diagnosed with secondary brain tumors. Although the exact number is unknown, this type of brain tumor is on the rise. With highly effective clinical imaging tools, early detection can speed up the process of controlling and eliminating tumors in their early stages. A tumor may leave a patient immobile because it can place pressure on the part of the brain that regulates body movement [6][14][15]. The aim of this study is to improve the detection accuracy of brain tumors in MRI images using image processing and machine learning algorithms, and to develop a framework for rapidly diagnosing brain tumors from MRI images [19]. Amin et al. [3] proposed a three-step method for distinguishing between cancerous and non-cancerous brain tissue in MRI; the steps are image processing, feature extraction, and image classification.

This framework is useful not only for medical staff but also for other employees of the organization, because teams may need to divide the images into various categories. In such circumstances, it can be used to distinguish between the images and keep patient records secure. Because medical images are sensitive and must be handled with extreme care, this could be a crucial tool for any hospital employee. The objective is to identify and classify different types of tumors and, most importantly, to save the time of doctors and patients, enable a suitable remedy at an early stage, and supply useful insights to doctors [20]. In healthcare, examining brain MRI images is how tumors are identified at an early stage. The framework will help automatically detect images containing tumors, search for correlations between neighboring slices, and automatically detect the symmetry axes of the image [13]. Furthermore, this framework can be used to create a full-fledged application to detect any type of cancerous polyp.

2. BACKGROUND

2.1 Convolutional Neural Network

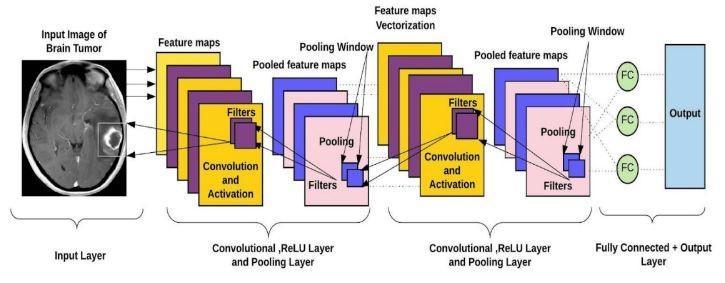

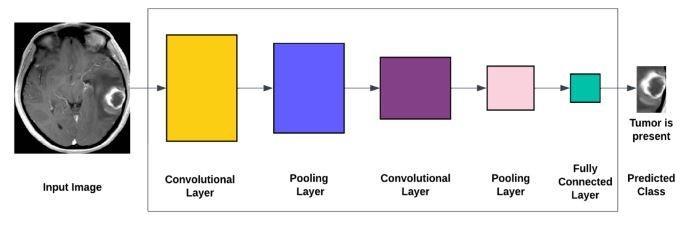

Convolutional Neural Networks (ConvNets/CNNs) are a deep learning technique that takes an input image, assigns importance (learnable weights and biases) to various aspects and objects in it, and learns to distinguish between them. ConvNets require much less preprocessing than other classification techniques: whereas primitive techniques use hand-engineered filters, ConvNets learn these filters and characteristics from the data. A novel convolutional neural network (CNN) has been proposed to classify multigrade brain tumors [2]. Individual neurons respond to stimuli only in a restricted region of the visual field, known as the receptive field.

The layer that receives the model's inputs is called the input layer; the number of neurons in it equals the total number of features in the data (the number of pixels, in the case of images). The hidden layers receive their input from the input layer [1][7]. Depending on the model and the volume of data, there may be many hidden layers, as shown in Fig 1. The number of neurons in each hidden layer varies, but it usually exceeds the number of features. The output of each layer is computed by multiplying the output of the previous layer by learnable weights, adding a learnable bias, and then applying an activation function, which makes the network nonlinear [21]. The output of the last hidden layer is then passed to the output layer, where a logistic function such as sigmoid or softmax converts it into a probability score for each class. Applications of CNNs include facial recognition, climate analysis, and the collection of historical and environmental data [10].
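The layer-by-layer computation described above (weights times inputs, plus bias, through an activation, then softmax at the output) can be sketched in plain Python. This is a minimal illustration, not the authors' network: the layer sizes (3 inputs, 4 hidden neurons, 4 output classes), the weight values, and the use of ReLU as the hidden activation are all assumptions made for the example.

```python
import math

def relu(z):
    """Rectified linear unit: passes positive values, zeroes out negative ones."""
    return max(0.0, z)

def dense(inputs, weights, biases, activation):
    """One fully connected layer: activation(W . x + b), one output per weight row."""
    return [activation(sum(w * x for w, x in zip(row, inputs)) + b)
            for row, b in zip(weights, biases)]

def softmax(logits):
    """Convert output-layer scores into class probabilities that sum to 1."""
    m = max(logits)  # subtracting the max keeps exp() from overflowing
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical input: 3 features (e.g. 3 pixel intensities already scaled to [0, 1]).
x = [0.5, 0.1, 0.9]

# Hidden layer: 4 neurons, so 4 weight rows of length 3 plus 4 biases (made-up values).
W1 = [[0.2, -0.4, 0.7], [0.5, 0.3, -0.1], [-0.6, 0.8, 0.2], [0.1, 0.1, 0.1]]
b1 = [0.0, 0.1, -0.2, 0.05]
hidden = dense(x, W1, b1, relu)

# Output layer: 4 classes; raw scores first, then softmax turns them into probabilities.
W2 = [[0.3, -0.2, 0.5, 0.1], [-0.4, 0.6, 0.2, 0.0],
      [0.1, 0.1, -0.3, 0.7], [0.2, 0.4, 0.1, -0.5]]
b2 = [0.0, 0.0, 0.0, 0.0]
scores = dense(hidden, W2, b2, lambda z: z)  # identity activation before softmax
probs = softmax(scores)
print([round(p, 3) for p in probs])
print("predicted class index:", probs.index(max(probs)))
```

For a two-class output (tumor / no tumor), a single sigmoid unit would take the place of the four-way softmax.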

2.2 Transfer Learning

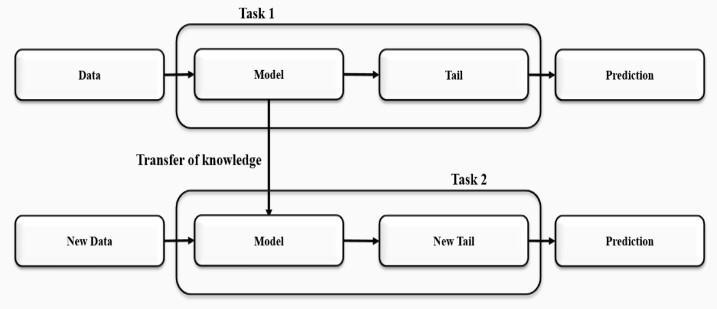

Transfer learning is a term used in machine learning to describe the use of a previously trained model for another task. In transfer learning, machines use information gathered from previous work to improve predictions about new tasks [16]. For instance, if a user trains a classifier to determine whether an image contains food, the learned knowledge can be reused to recognize drinks. Transfer learning applies the expertise of trained machine learning models to different but closely related problems. In this work, for example, a model pre-trained on another image task can be reused to determine whether a tumor is present in an MRI image.

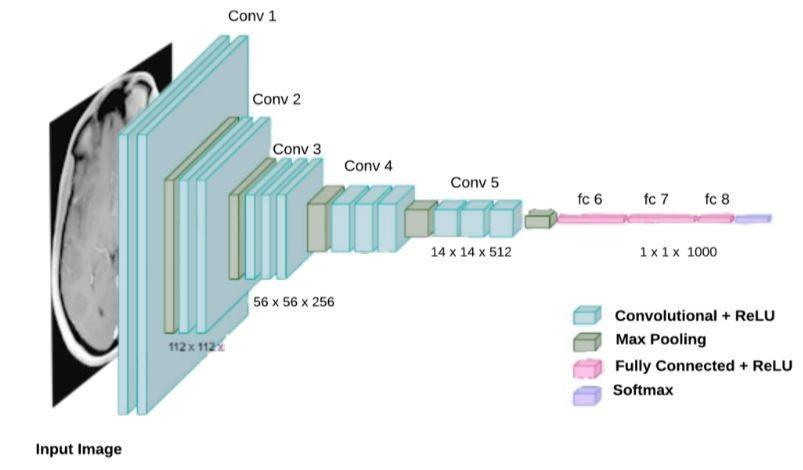

Fig - 2: Transfer Learning

Fig 2 shows how transfer learning uses what a machine or user has learned in one task to better understand the concepts of another task. The weights learned by the network that performed "task B" are transferred to the network that performs "task A". Because training vision models from scratch requires a lot of computing power, transfer learning is commonly used in computer vision.

2.3 VGG-16

The key difference between these networks and the deeper networks of 2014 is that some of the 2014 designs are more elaborate and perform even better. The 16-layer VGG-16 architecture consists of stacks of convolutional layers, pooling layers, and fully connected layers. The idea behind VGG is a much deeper network with much smaller filters, and VGGNet models have 16 to 19 layers. One crucial aspect of these models is that they consistently use 3x3 convolutional filters, the smallest filter size that still captures the neighborhood of a pixel (left/right, up/down, and center). Throughout the network, they keep this basic structure of 3x3 convolutions with periodic pooling. Because small filters have fewer parameters, VGG stacks more of them instead of using larger filters: a stack of three 3x3 convolutional layers has the same effective receptive field as a single 7x7 convolutional layer. VGGNet therefore consists of convolutional layers, a pooling layer, a few more convolutional layers, another pooling layer, and so on. As Fig 3 shows, VGG-16 has 16 weight layers (13 convolutional and 3 fully connected) and VGG-19 has 19 (16 convolutional and 3 fully connected); the two share essentially the same architecture, with VGG-19 adding some extra convolutional layers.
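The receptive-field argument for stacking 3x3 filters can be checked with a little arithmetic. The sketch below assumes stride-1, undilated convolutions and a hypothetical constant width of 256 channels; bias terms are ignored. It is a back-of-the-envelope check, not part of the authors' code.

```python
def stacked_receptive_field(num_layers, kernel=3):
    """Effective receptive field of num_layers stacked stride-1, undilated convolutions."""
    # Each additional k x k layer widens the field by k - 1 pixels.
    return 1 + num_layers * (kernel - 1)

def conv_weights(kernel, in_ch, out_ch):
    """Weights in one k x k convolution layer (bias terms ignored)."""
    return kernel * kernel * in_ch * out_ch

channels = 256  # hypothetical width, kept constant through the stack

three_3x3 = 3 * conv_weights(3, channels, channels)
one_7x7 = conv_weights(7, channels, channels)

print(stacked_receptive_field(3, kernel=3))  # 7
print(three_3x3, one_7x7)                    # 1769472 3211264
print(round(three_3x3 / one_7x7, 2))         # 0.55
```

Three stacked 3x3 layers see the same 7x7 region as one 7x7 layer while using 27 C^2 instead of 49 C^2 weights, and they add two extra nonlinearities along the way.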

2.4 MobileNet

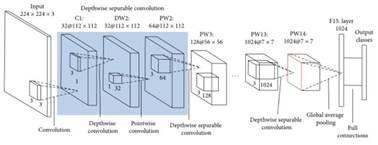

The MobileNet model, as its name suggests, is TensorFlow's first mobile computer vision model and is intended for use in mobile applications.

As Fig 4 shows, MobileNet uses depthwise separable convolutions. Compared with conventional convolutions of the same depth, they need significantly fewer parameters, which results in a lightweight deep neural network. A depthwise separable convolution is built from two operations:

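1. Depthwise convolution: a single filter is applied to each input channel separately.

2. Pointwise convolution: a 1x1 convolution combines the outputs of the depthwise step across channels.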

This factorized convolution makes MobileNet an excellent starting point for training very small and extremely fast classifiers. MobileNet is an open-source CNN architecture released by Google.
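The parameter saving from splitting a convolution into a depthwise step and a pointwise step can be computed directly. The layer sizes below (3x3 kernel, 32 input channels, 64 output channels) are arbitrary examples, and biases are ignored. The resulting ratio equals the 1/c_out + 1/k^2 reduction factor that the MobileNet paper derives for computational cost.

```python
def standard_conv_params(k, c_in, c_out):
    """Weights in a regular k x k convolution (bias ignored)."""
    return k * k * c_in * c_out

def depthwise_separable_params(k, c_in, c_out):
    """Weights in a depthwise separable convolution (bias ignored)."""
    depthwise = k * k * c_in   # step 1: one k x k filter per input channel
    pointwise = c_in * c_out   # step 2: 1 x 1 convolution mixing c_in channels into c_out
    return depthwise + pointwise

k, c_in, c_out = 3, 32, 64  # example layer sizes chosen for illustration

std = standard_conv_params(k, c_in, c_out)
sep = depthwise_separable_params(k, c_in, c_out)

print(std, sep)                        # 18432 2336
print(round(sep / std, 4))             # 0.1267
print(round(1 / c_out + 1 / k**2, 4))  # 0.1267, the closed-form reduction factor
```

With a 3x3 kernel the saving is dominated by the 1/k^2 term, so a separable layer needs roughly an eighth to a ninth of the weights of the standard layer it replaces.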

2.6 DenseNET-169

As demonstrated in the Fig 6, a forward pass in a conventionalconvolutionalneuralnetworkinvolvespassing aninputimagethroughthenetwork toobtaina predicted labelfortheoutput.

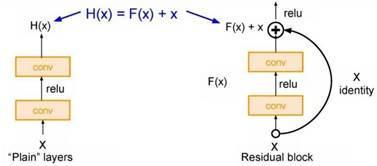

The residual block serves as ResNet’s primary base component.Thecomplexityofprocessingincreasesasdelve furtherintothenetworkwithseverallevels.Stackofthese layers on top of one another is done, with each layer attempting to uncover some underlying mapping of the desired function. However, instead of using these blocks, usertrytoadjusttheremainingmapping.

Because the input to these blocks is simply the incoming input,classesareuseddirectlytoadjusttheremainderofthe function H(X) - X rather than the predicted function H(X). Basically,theinputisjusttakenand passedthroughasan identity at the end of this block, where it takes the skip connectiononthisrighthere.Iftherewerenoweightlayers between,theinputwouldsimplybetheidentity.Iffurther weightlayersarenotemployed

Exceptforthefirstconvolutionallayer,whichusestheinput image,allsubsequentconvolutionallayersbuildtheoutput feature map that is passed to the next convolution layers usingtheoutputofthepreviouslayer.TheLlayershaveL direct connections, one from each layer to the next. Each layer in the DenseNet architecture is connected to every otherlayer,thusthetermdenselyconnectedconvolutional network. For L classes, there are L(L+1)/2 direct connections.Eachlayerusesthefeaturemapfromalllayers beforeitasinput,anditsownfeaturemapisusedasinput for each layer after it. DenseNet layers take input as a concatenationoffeaturesMapfrompreviouslevel.

3 RELATED WORKS

Techniquesbasedondeeplearninghaverecentlybeenused to classify and detect brain tumours using MRI and other imagingmethods.

[4]AcustomCNNalgorithmwascreated,whichimproved the model by training with additional MRI images to distinguish between tumor and non-tumor images. This paper only proposes to determine if an image contains a tumorandintroducesamobileapplicationasamedicaltool.

tolearnsomedeltafromresidualX,theresultwouldbethe sameastheoutput.Inaword,whilemovingfurtherintothe network,learningH(X)becomesincreasinglydifficultdueto thehighnumberoflayers.Asaresult,F(x)directinputofx astheoutcomeinthiscaseandemployedskipconnection.So F(x)isreferredtoasaresidualasshowninFig5.Allofthese blocksareverycloselystackedinResNet.Anotherbenefitof thisextremelydeeparchitectureisthatitallowsforup to 150levelsofthis,whichgetsperiodicallystack.Additionally, stridetwoisusedtodownsamplespatiallyanddoublethe number of filters. Only layer 1000 was ultimately fully connectedtotheoutputclasses.

A computer-based method for differentiating brain tumor regions in MRI images has been proposed [5]. The algorithm uses neural network techniques to carry out the phases of image preprocessing, image segmentation, feature extraction, and image classification.

[12] proposes an intelligent mechanism to detect brain tumors in MRI images using clustering algorithms such as Fuzzy C-Means together with intelligent optimization tools. A CAD system was used, and the results showed that particle swarm optimization (PSO) improved both the classification accuracy and the average error rate, reaching an accuracy of 92.8 percent.

[11] proposed an automated system for real-time brain tumor detection that uses two distinct deep learning-based methods for the detection and classification of brain tumors.

Sasikala and Kumaravel [18] presented a genetic algorithm that selects wavelet features to reduce the feature dimension. The method is built on finding the best feature vector to feed into a chosen classifier, such as an ANN. The findings showed that the genetic algorithm achieved an accuracy of 98 percent while selecting only four features out of a total of 29.

Sajjad et al. [17] proposed a CNN method with data augmentation for the classification of brain tumors. The method classifies brain tumors using MRI images of segmented brain tumors. For classification, they used a pre-trained VGG-19 CNN architecture and achieved accuracies of 87.39 percent and 90.66 percent for the data before and after augmentation, respectively.

A sophisticated method for classifying and categorizing brain tumors from magnetic resonance (MR) images has been proposed [9]. The essential parameters for evaluating image quality are the operation of two image restoration filters, the use of an adaptive mean filter on the MR images, and the image enhancement and clipping steps required for tumor recognition. The technique focuses primarily on tumor detection, specifically on detecting abnormal mass accumulation, which significantly influences the pixel-wise intensity distribution of the image.

4. METHODOLOGY

4.1 About Dataset

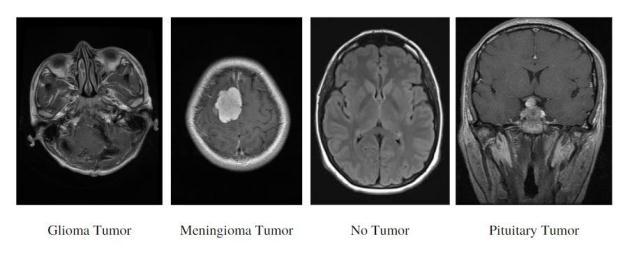

The dataset contains a total of about 5000 MR images, divided into three tumor types (glioma, meningioma, and pituitary) and one class of healthy brains labeled "No tumor".

Sample MR images of all classes are shown in Fig 7.

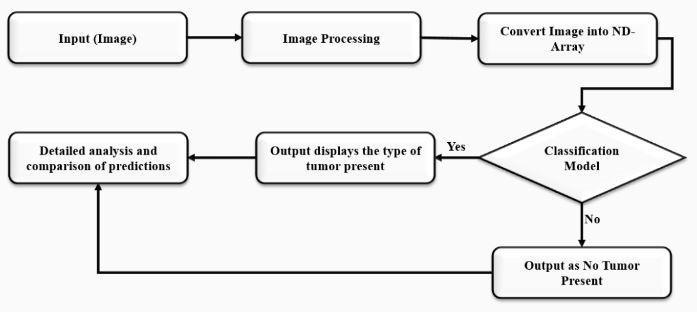

Each image is first scaled as required. After scaling, it is reshaped into the dimensions expected by the algorithms it will pass through, and then transformed into an array for further modeling. Once all preprocessing is complete, the image is run through five different models, each of which produces a prediction. Because the models are trained to predict among the four existing classes, the tumor type present in the image is predicted from the probabilities produced by the softmax activation function: the tumor type with the maximum probability is given as the output. Because five strong and robust models are used to test the same input, the distinctive feature of this design is that it gives greater confidence in the presence of a tumor and its type. As a result, a detailed comparison can be made between all five model outputs, based on each model's accuracy as well as its predicted output, using a web-based interface.
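The decision rule described above (take the class with the highest softmax probability for each model, then compare the five models) can be sketched as follows. The class order and the probability vectors are invented for illustration; in the real system they would come from the softmax outputs of the five trained models.

```python
# Hypothetical class order; the real order depends on how the labels were encoded.
CLASSES = ["glioma", "meningioma", "no tumor", "pituitary"]

def predict(probs):
    """Return (class name, probability) for the highest softmax probability."""
    best = max(range(len(probs)), key=lambda i: probs[i])
    return CLASSES[best], probs[best]

# Made-up softmax outputs of the five models for one MRI image (illustration only).
model_probs = {
    "Custom CNN":   [0.05, 0.03, 0.02, 0.90],
    "MobileNet":    [0.04, 0.02, 0.01, 0.93],
    "VGG-16":       [0.06, 0.04, 0.02, 0.88],
    "ResNet-152":   [0.30, 0.05, 0.05, 0.60],
    "DenseNet-169": [0.10, 0.08, 0.02, 0.80],
}

predictions = {name: predict(p) for name, p in model_probs.items()}
for name, (label, p) in predictions.items():
    print(f"{name:<13} {label:<10} {p:.2f}")

# Agreement check across models, one simple way to express the comparison.
labels = {label for label, _ in predictions.values()}
print("all models agree" if len(labels) == 1 else f"models disagree: {sorted(labels)}")
```

Reporting the winning probability next to each label makes it visible when one model is much less certain than the others, as ResNet-152 is in this made-up example.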

4.2 Flow of the Project

The proposed system is designed to address the disadvantages and limitations of the existing architecture. Fig 8 explains the proposed system in more detail: first, the system accesses the image, then prepares it. To make sure the algorithm can predict accurately from it, the image is resized during preprocessing.

Input Image

The first and foremost step is to collect the brain MRI images.

This data can be collected from websites such as Kaggle and the UCI repository. A large number of brain MRI images is required to train and test the model and to check the accuracy and precision of the proposed model.

Image Processing

After the data is collected, it is processed. This is a very important step: if the images are very large, the model takes a very long time to train, so input images are resized to lower dimensions. Sometimes the number of images is very small, which is a problem because deep learning models are data-hungry, so data augmentation is performed to increase the number of images. Brain MRI images are grayscale, so pixel values range from 0 to 255; they are normalized to the range 0 to 1, which makes training the model much faster. The entire preprocessing of all the images can be done at once with the Keras ImageDataGenerator class: given custom parameters, it automatically converts a set of images into the desired processed form and splits the data into batches that can be passed directly to the convolutional neural network or transfer learning model. Once image processing is done, the images move to the next stage, conversion into N-D arrays, after which the classification model predicts whether a tumor is present.
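The normalization, augmentation, and batching steps described above can be sketched in plain Python on toy data. This is not the authors' pipeline: the 2x3 images are made up, and horizontal flipping is an assumed augmentation, since the text does not say which augmentations were used. In Keras, ImageDataGenerator covers the same ground through options such as `rescale=1./255` and `horizontal_flip=True`.

```python
import random

def normalize(image):
    """Scale 8-bit grayscale pixel values from [0, 255] to [0.0, 1.0]."""
    return [[px / 255.0 for px in row] for row in image]

def horizontal_flip(image):
    """Mirror an image left to right (an assumed, simple augmentation)."""
    return [list(reversed(row)) for row in image]

def batches(items, batch_size):
    """Yield consecutive batches; the last batch may be smaller."""
    for start in range(0, len(items), batch_size):
        yield items[start:start + batch_size]

# Two made-up 2 x 3 grayscale "MRI slices".
images = [[[0, 128, 255], [64, 32, 16]],
          [[10, 20, 30], [40, 50, 60]]]

augmented = images + [horizontal_flip(img) for img in images]  # 2 -> 4 images
random.seed(0)             # fixed seed so the shuffle is reproducible
random.shuffle(augmented)  # mix originals and flipped copies before batching
processed = [normalize(img) for img in augmented]

for i, batch in enumerate(batches(processed, batch_size=3)):
    print(f"batch {i}: {len(batch)} images")
```

Augmentation is applied before normalization here only for readability; since a flip does not change pixel values, the order does not affect the result.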

Convert Image into N-D array

Images are made up of pixels. Brain MRI images are grayscale, so each image has a single channel, with pixel values ranging from 0 to 255, and is represented as a 2D matrix. Models such as an ANN cannot take a 2D matrix as input, so the images must be converted into an N-D array of the shape the model expects. This step is optional if ImageDataGenerator is used, because ImageDataGenerator automatically converts the set of images into a format suitable for a CNN and stores it in a variable for further processing.
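The conversion described above can be illustrated on a tiny made-up image. The sketch shows two target shapes: a flat vector, as a fully connected ANN would take, and a height x width x 1 array with an explicit channel axis, the channels-last layout that Keras convolution layers use by default. The helper names are hypothetical.

```python
def flatten(image):
    """Turn an H x W matrix into a 1-D vector of length H * W (input for a dense ANN)."""
    return [px for row in image for px in row]

def add_channel_axis(image):
    """Turn an H x W grayscale matrix into H x W x 1 (channels-last layout)."""
    return [[[px] for px in row] for row in image]

def shape(nested):
    """Shape of a regular, non-empty nested list, e.g. (2, 3, 1)."""
    dims = []
    while isinstance(nested, list):
        dims.append(len(nested))
        nested = nested[0]
    return tuple(dims)

image = [[0, 128, 255], [64, 32, 16]]  # made-up 2 x 3 grayscale image
vector = flatten(image)
tensor = add_channel_axis(image)
print(shape(image), shape(vector), shape(tensor))  # (2, 3) (6,) (2, 3, 1)
```

With NumPy arrays the same conversions are `image.reshape(-1)` and `image[..., np.newaxis]`; stacking N such images then gives an array of shape (N, H, W, 1).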

Classification model

After converting the images into N-D arrays, the next step is to train the model. Since this is a classification problem and the data is in the form of images, CNN and transfer learning methodologies can be used to train the model. The proposed system uses five different approaches: a custom CNN with a 6-layer architecture, and MobileNet, VGG-16, ResNet-152, and DenseNet-169 transfer learning models. These were found to be the best in terms of robustness and accuracy. Once a model is trained and analyzed, the same trained model can be used on any new, unseen image to predict the type of tumor present in it. For new unseen images, the same preprocessing is applied, the images are passed through the trained models, and the final prediction states whether the MRI contains a pituitary tumor, a glioma tumor, a meningioma tumor, or no tumor. Currently only these four classes are available; in the future, more classes can be integrated with the existing data to extend the scope of the project.

Detailed analysis and comparison of prediction

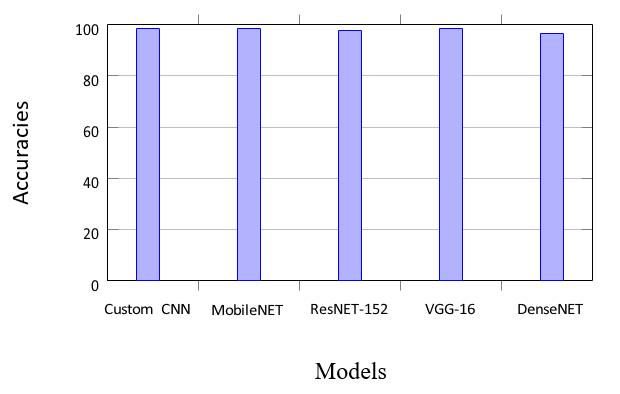

Since five robust models are used, once an image has passed through all five models their outputs can be viewed, and a detailed comparison of each model's output is made with graphs and the associated accuracies, as shown in Fig 9.

5. RESULT AND ANALYSIS

The obtained results are presented in Fig 9. The custom 6-layer CNN achieves an accuracy of 98.32 percent on the unseen images with some preprocessing. The MobileNet transfer learning architecture with preprocessing achieves 98.63 percent. The ResNet-152 and VGG-16 architectures achieve 97.71 percent and 98.62 percent respectively on the testing data. The DenseNet-169 transfer learning architecture achieves 96.56 percent on the unseen brain tumor MRI images.
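The five test accuracies reported above can be ranked programmatically. The accuracy values are copied from this section. The `accuracy` helper and its four-label example are hypothetical and only illustrate how such a percentage is computed: correct predictions divided by the number of test images.

```python
def accuracy(y_true, y_pred):
    """Percentage of predictions that match the true labels."""
    correct = sum(t == p for t, p in zip(y_true, y_pred))
    return 100.0 * correct / len(y_true)

# Tiny made-up example: 3 of 4 predictions are right.
print(accuracy(["glioma", "pituitary", "no tumor", "meningioma"],
               ["glioma", "pituitary", "no tumor", "glioma"]))  # 75.0

# Test accuracies (percent) as reported in this section.
reported = {
    "Custom CNN (6 layers)": 98.32,
    "MobileNet": 98.63,
    "ResNet-152": 97.71,
    "VGG-16": 98.62,
    "DenseNet-169": 96.56,
}
ranking = sorted(reported.items(), key=lambda item: item[1], reverse=True)
for rank, (name, acc) in enumerate(ranking, start=1):
    print(f"{rank}. {name}: {acc:.2f}%")

print(f"gap between the top two models: {ranking[0][1] - ranking[1][1]:.2f} points")
print(f"spread between best and worst: {ranking[0][1] - ranking[-1][1]:.2f} points")
```

The ranking shows that MobileNet and VGG-16 differ by only 0.01 percentage points, while the full spread across all five models is about 2 points.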

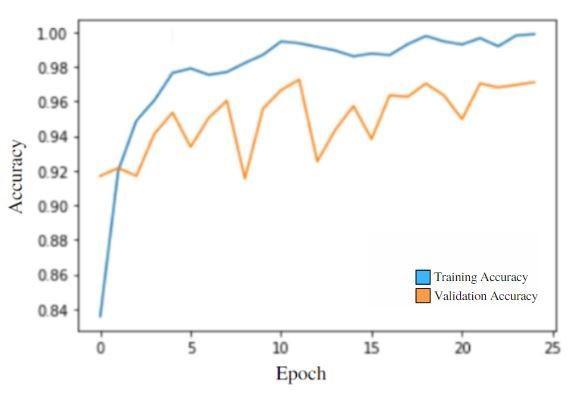

5.1 Custom CNN

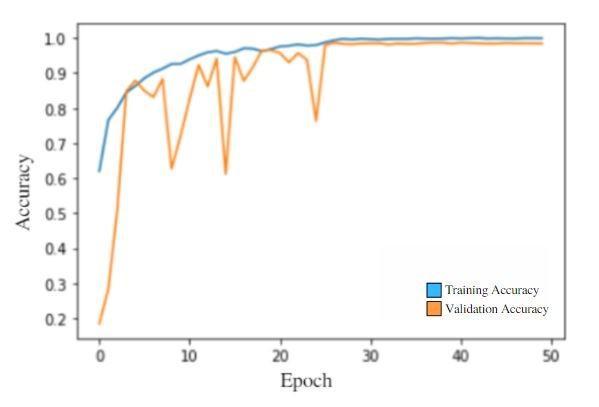

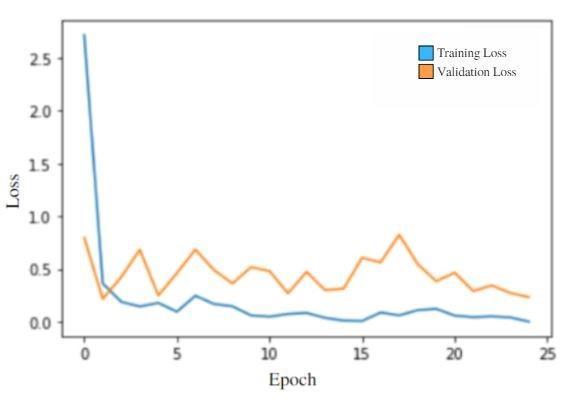

The 6-layer CNN architecture has given good accuracy on unseen images [8].

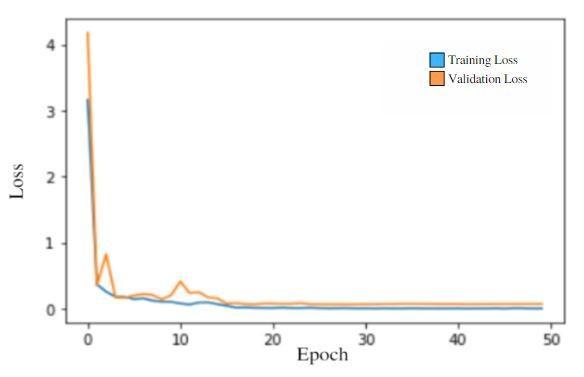

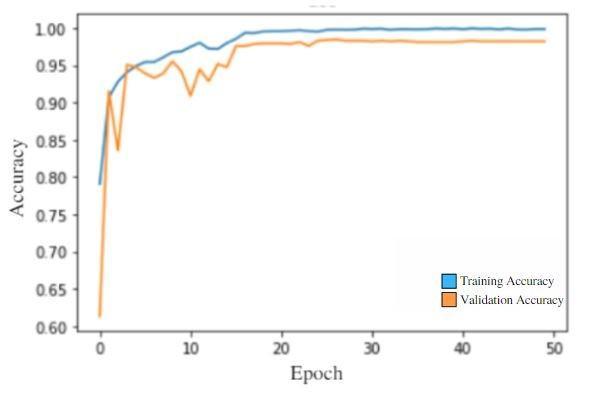

Accuracy: 98.32 percent, as shown in Table 1. The accuracy and loss curves are compared in Fig 10 and Fig 11 respectively.

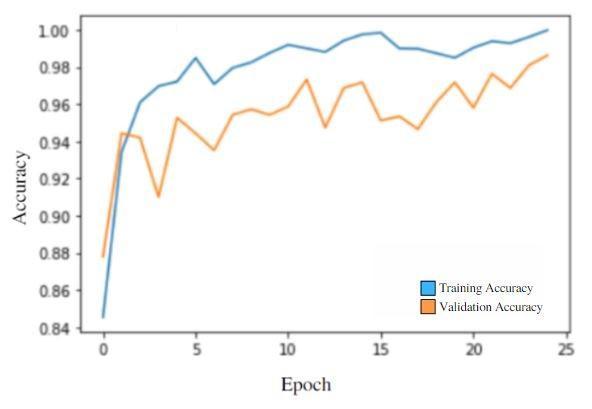

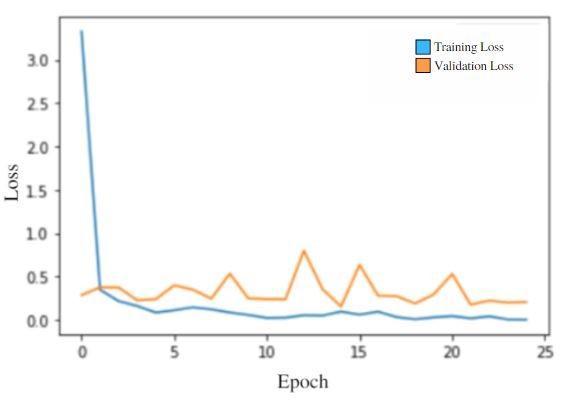

The pre-trained MobileNet architecture has given great accuracy on unseen images.

Accuracy: 98.63 percent, as shown in Table 2.

The accuracy and loss curves are compared in Fig 12 and Fig 13 respectively.

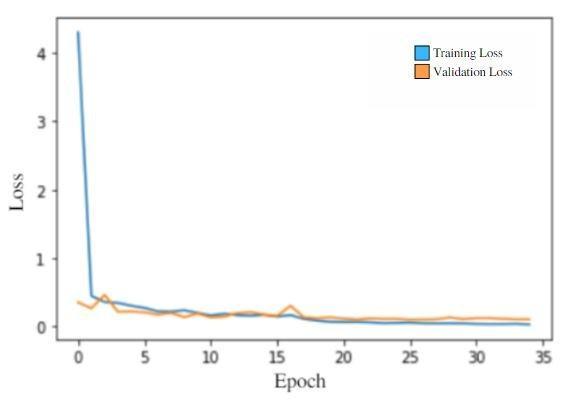

Pre-trained architecture of MobileNET has given great accuracyonunseenimages.

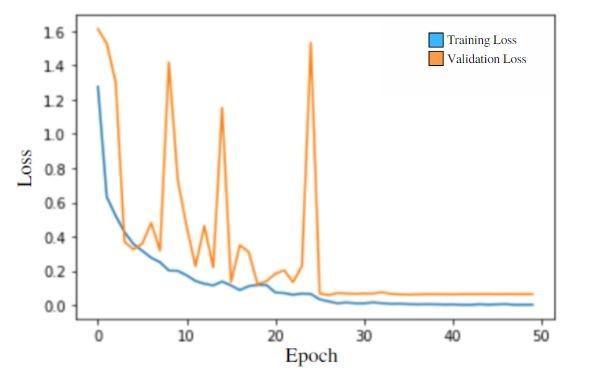

97.71 percentage as represented in Table 3. AccuracyandLossgraphcomparisonofResNET-152canbe seeninFig14and15respectively.

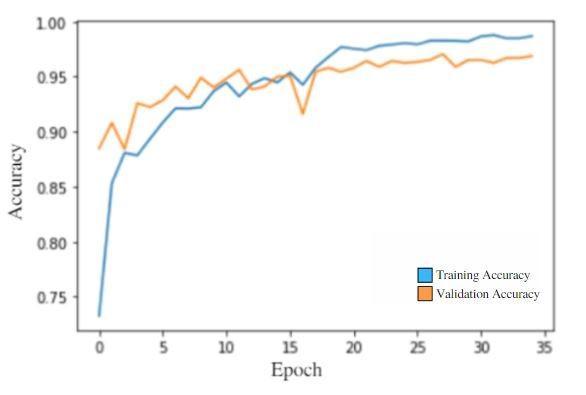

The pre-trained VGG-16 architecture has given great accuracy on unseen images.

Accuracy: 98.62 percent, as shown in Table 4. The accuracy and loss curves are compared in Fig 16 and Fig 17 respectively.

The pre-trained DenseNet-169 architecture has given good accuracy on unseen images.

Accuracy: 96.56 percent, as shown in Table 5. The accuracy and loss curves are compared in Fig 18 and Fig 19 respectively.

6. CONCLUSION

In this report, a distinctive brain tumor detection architecture has been developed that is beneficial for the characterization of four MRI modalities, implying that each modality has distinctive qualities that help the network distinguish between classes. A CNN model (the most popular deep learning architecture) can achieve performance close to that of human observers by processing only the portion of the brain image that is close to the tumor tissue. An easy-to-use but effective cascade CNN model has also been suggested to extract local and global characteristics in two separate ways, using extraction patches of various sizes. After the tumor is extracted using our method, patches whose centers lie in the predicted tumor region are chosen and fed into the network. Because a considerable number of useless pixels are eliminated from the image during the preprocessing stage, calculation time is reduced and the capacity for quick predictions in clinical image classification is increased. This report presents a comparative study of the various techniques mentioned above.

REFERENCES

[1] Zeynettin Akkus, Alfiia Galimzianova, Assaf Hoogi, Daniel L Rubin, and Bradley J Erickson. Deep learning for brain MRI segmentation: state of the art and future directions. Journal of Digital Imaging, 30:449–459, 2017.

[2] Saad Albawi, Tareq Abed Mohammed, and Saad Al-Zawi. Understanding of a convolutional neural network. In 2017 International Conference on Engineering and Technology (ICET), pages 1–6. IEEE, 2017.

[3] Javeria Amin, Muhammad Sharif, Mussarat Yasmin, and Steven Lawrence Fernandes. A distinctive approach in brain tumor detection and classification using MRI. Pattern Recognition Letters, 139:118–127, 2020.

[4] Sagaya Aurelia et al. A machine learning entrenched brain tumor recognition framework. In 2022 International Conference on Electronics and Renewable Systems (ICEARS), pages 1372–1376. IEEE, 2022.

[5] Ehab F Badran, Esraa Galal Mahmoud, and Nadder Hamdy. An algorithm for detecting brain tumors in MRI images. In The 2010 International Conference on Computer Engineering & Systems, pages 368–373. IEEE, 2010.

[6] Yoshua Bengio, Aaron Courville, and Pascal Vincent. Representation learning: A review and new perspectives. IEEE Transactions on Pattern Analysis and Machine Intelligence, 35(8):1798–1828, 2013.

[7] Jose Bernal, Kaisar Kushibar, Daniel S Asfaw, Sergi Valverde, Arnau Oliver, Robert Martí, and Xavier Lladó. Deep convolutional neural networks for brain image analysis on magnetic resonance imaging: a review. Artificial Intelligence in Medicine, 95:64–81, 2019.

[8] Daniel Berrar. Cross-validation, 2019.

[9] Rupsa Bhattacharjee and Monisha Chakraborty. Brain tumor detection from MR images: Image processing, slicing and PCA based reconstruction. In 2012 Third International Conference on Emerging Applications of Information Technology, pages 97–101. IEEE, 2012.

[10] Yi Chang, Luxin Yan, Meiya Chen, Houzhang Fang, and Sheng Zhong. Two-stage convolutional neural network for medical noise removal via image decomposition. IEEE Transactions on Instrumentation and Measurement, 69(6):2707–2721, 2019.

[11] Nadim Mahmud Dipu, Sifatul Alam Shohan, and KMA Salam. Deep learning based brain tumor detection and classification. In 2021 International Conference on Intelligent Technologies (CONIT), pages 1–6. IEEE, 2021.

[12] N Nandha Gopal and M Karnan. Diagnose brain tumor through MRI using image processing clustering algorithms such as fuzzy C means along with intelligent optimization techniques. In 2010 IEEE International Conference on Computational Intelligence and Computing Research, pages 1–4. IEEE, 2010.

[13] Yurong Guan, Muhammad Aamir, Ziaur Rahman, Ammara Ali, Waheed Ahmed Abro, Zaheer Ahmed Dayo, Muhammad Shoaib Bhutta, and Zhihua Hu. A framework for efficient brain tumor classification using MRI images. 2021.

[14] Paul Kleihues and Leslie H Sobin. World Health Organization classification of tumors. Cancer, 88(12):2887–2887, 2000.

[15] Sarah H Landis, Taylor Murray, Sherry Bolden, and Phyllis A Wingo. Cancer statistics, 1999. CA: A Cancer Journal for Clinicians, 49(1):8–31, 1999.

[16] Priyanka Modiya and Safvan Vahora. Brain tumor detection using transfer learning with dimensionality reduction method. International Journal of Intelligent Systems and Applications in Engineering, 10(2):201–206, 2022.

[17] Muhammad Sajjad, Salman Khan, Khan Muhammad, Wanqing Wu, Amin Ullah, and Sung Wook Baik. Multi-grade brain tumor classification using deep CNN with extensive data augmentation. Journal of Computational Science, 30:174–182, 2019.

[18] M Sasikala and N Kumaravel. A wavelet-based optimal texture feature set for classification of brain tumours. Journal of Medical Engineering & Technology, 32(3):198–205, 2008.

[19] Javier E Villanueva-Meyer, Marc C Mabray, and Soonmee Cha. Current clinical brain tumor imaging. Neurosurgery, 81(3):397–415, 2017.

[20] Sarmad Fouad Yaseen, Ahmed S Al-Araji, and Amjad J Humaidi. Brain tumor segmentation and classification: A one-decade review. International Journal of Nonlinear Analysis and Applications, 13(2):1879–1891, 2022.

[21] Qianru Zhang, Meng Zhang, Tinghuan Chen, Zhifei Sun, Yuzhe Ma, and Bei Yu. Recent advances in convolutional neural network acceleration. Neurocomputing, 323:37–51, 2019.