Deep Learning Based Music Recommendation System

Malige Gangappa1, Avuluri Nikhitha2, Bondugula Reshma3, Gundabathina Manasa4, Pooja Kumari Singh5

Abstract

Many users find it hard to create a playlist from a long list of songs. As a result, users tend to play the next song in shuffle mode or rely on recommendations. Music therapy for wellbeing support is practiced in a wide variety of clinical settings. Hence, an effective personalized music recommendation approach has become a critical factor. By combining artificial intelligence technology with generalized music therapy strategies, a recommendation system can help people choose music that supports a better quality of life and maintains their mental and physical condition. This research therefore examines a contemporary framework and presents current music recommendation models. We used two well-known algorithms because they perform well in recommender systems: content-based modelling (CBM) and collaborative filtering (CF). Two user-centered approaches, the context-based model and the emotion-based model, have been receiving more attention because users often have a poor experience when looking for music that matches their current emotion.

Keywords Music recommendation; collaborative

I. INTRODUCTION

Music has a strong effect on human beings and is widely used for relaxation, mood regulation, distraction from stress and disease, and to support mental and physical activity. Music has become a critical part of our lives, and people regularly listen to it, considering it a significant part of their daily routine. A good music recommendation system should be able to identify preferences automatically and generate playlists based on those tastes. Because recommender systems help users find products they might not have discovered on their own, they are also a useful way to extend search engines. Basically, the user should be recommended products that suit his or her preferences. There are many ways to collect information about users, such as observing how they interact, encouraging them to engage in specific activities, or having them complete forms that ask for personal information. Whenever someone feels low, tense, or excited, they tend to listen to music.

Academic stress among college students and work stress in demanding fields such as IT, with pressures such as deadlines, tend to cause mood swings, and a person's taste in music changes with these mood swings. This project presents the design of a personalized music recommendation system driven by listeners' activity contexts such as pulse rate, heart rate, sleep time, steps walked, energy burned, etc. [1].

The major focus of our project is to develop a music recommendation system that recommends music to users based on their mood, which is inferred from health parameters such as heart rate, pulse rate, and number of steps walked. This project can help users overcome the cold-start problem as well as the difficulty of selecting a playlist that fits their current mood. Because our project uses the user's current health parameters as its main data, the system can track the user's mood swings and recommend the music best suited to help them relax and return to work with enthusiasm and energy.

This paper discusses four key components: audio processing, human emotion analysis, emotion-to-music generation, and music recommendation [2]. However, no existing music recommendation system recommends music based on the user's current state as reflected in health parameters such as pulse rate, number of hours slept, and number of steps walked [1]. To address this, we develop a deep learning based music recommendation system that relies on human health factors as its primary metric [1].

II. RELATED WORKS

This section presents the results of our investigation into current music recommendation systems and the algorithms used for music recommendation.

A. Music Recommendation Systems in Current Use

Music recommendation systems have undergone a great revolution in the present generation, thanks to the development and success of internet streaming services, which put practically all the music in the world at the user's fingertips. While current music recommendation systems help users find interesting music in a vast catalogue, research on music recommendation systems still faces significant obstacles. Such research is especially important when it comes to developing, implementing, and assessing recommendation strategies that incorporate data beyond straightforward user-item interactions or content-based descriptors and go in depth into the evolution of listener needs, preferences, and intentions. Despite the success of music information retrieval (MIR) approaches over the past ten years, music recommendation system development is still in its infancy. Traditional music recommendation systems recommend playlists based on the user's preference list, whereas recent developments include generating playlists that keep the user's heartbeat within the normal range. If the user's heart rate rises above or falls below the normal range, the system builds a user-preferred music playlist using a Markov decision process that brings the heart rate back to the normal range in the least amount of time, which also stabilizes the user's mood [11]. Another recommendation system, T-RECSYS, employs a deep learning model, similar to those used by Spotify, Pandora, and iTunes, to make music suggestions by learning the user's musical preferences. This model receives input from both content-based and collaborative filtering.

B. Most Used Algorithms

As technology has advanced, many algorithms have been developed for recommendation systems. So far, the methods most often used for music recommendation are collaborative filtering models and content-based models, which differ in the type of data they use. The former makes predictions from users' feedback on items, that is, from past user-item interactions filtered through the system. The latter requires characteristic features of the items and needs to know the content associated with both users and items. Some systems also use the k-nearest neighbor (k-NN) algorithm, which is often used in recommendation systems because of its ability to handle large amounts of data and to predict with good accuracy.

The k-NN algorithm works by finding the k nearest neighbors of a given item; these neighbors then vote on the rating of the item. According to our investigation, these are the most used algorithms for music recommendation systems.
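The voting step described above can be illustrated with a short sketch. It assumes, for illustration only, that every song is described by a numeric feature vector, that "nearest" means highest cosine similarity, and that each neighbour casts one vote for the rating the user gave it; the song names, features, and ratings are hypothetical and are not data from this study.

```python
from __future__ import annotations

import math
from collections import Counter


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two equal-length feature vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def knn_vote_rating(
    target: str,
    features: dict[str, list[float]],
    user_ratings: dict[str, int],
    k: int = 3,
) -> int | None:
    """Predict a user's rating of `target` by majority vote of the k most similar items the user rated."""
    # Only items the user has already rated (other than the target) can vote.
    rated = [item for item in user_ratings if item != target]
    # Rank the rated items by similarity to the target, most similar first, and keep k of them.
    neighbours = sorted(
        rated,
        key=lambda item: cosine_similarity(features[target], features[item]),
        reverse=True,
    )[:k]
    if not neighbours:
        return None
    # Each neighbour casts one vote for its rating; on a tie, most_common returns the
    # rating encountered first, i.e. the one held by the more similar neighbour.
    votes = Counter(user_ratings[item] for item in neighbours)
    return votes.most_common(1)[0][0]


# Hypothetical two-dimensional song features and one user's 1-5 star ratings.
features = {
    "song_a": [0.9, 0.1],
    "song_b": [0.8, 0.2],
    "song_c": [0.1, 0.9],
    "song_d": [0.85, 0.15],
    "song_e": [0.1, 0.95],
}
ratings = {"song_a": 5, "song_b": 5, "song_c": 1}
print(knn_vote_rating("song_d", features, ratings, k=3))  # 5
```

A majority vote suits discrete star ratings; with continuous ratings, a similarity-weighted average of the neighbours' ratings is a common alternative.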

III. DEEP LEARNING BASED MUSIC RECOMMENDATION SYSTEM

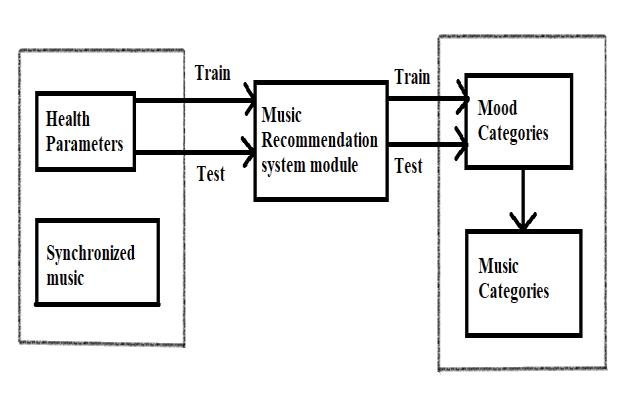

The proposed system is shown in the figure above. The system is designed to extract health parameters such as pulse rate, number of steps walked, and number of hours slept, and is then trained and tested with these parameters. In the resulting music recommendation module, the current state of the user is detected and categorised as sad, happy, angry, or neutral [4]. The music to be recommended is also categorised so that it matches the user's current situation. For example, if the user's mood is detected as sad, the system recommends songs that can make the user feel happy and motivated.

This paper discusses four key components: audio feature processing, human emotion analysis, emotion-to-music generation, and music recommendation [2].

3.1 Audio Feature Processing

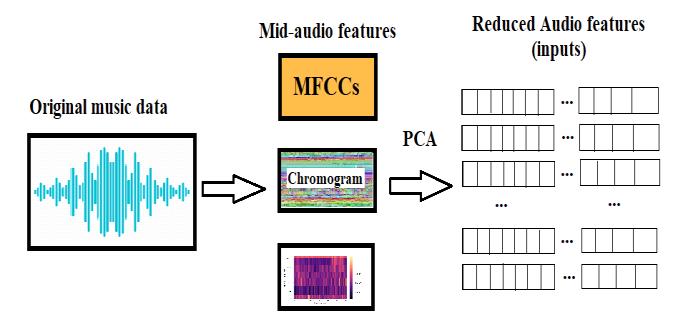

There has been a great deal of research on audio feature analysis, whose most critical phase is audio feature extraction [2]. The current study considers spectral features such as MFCCs, spectral centroid, and chromagram, which reflect the loudness, timbre, and pitch of music [1]. Rhythmic features were also extracted. Each snippet was first sampled at 22050 Hz, an effective setting for retrieving the spectral features. A short-time Fourier transform was then used to obtain the power spectrogram [1]: we computed discrete Fourier transforms over Hann windows and, for each sample, created a spectrogram of size 1025 × 1077. Numerous spectral features were computed from this preprocessed spectrogram. For MFCCs, for instance, we created a Mel-scale spectrogram by filtering the spectrogram with a Mel filter bank [1], and then computed the MFCCs by keeping the lower-order cepstral coefficients [1]. The tonal centroid features were obtained by projecting the chromagram, computed using Ellis' method, onto a 6-dimensional basis. The librosa toolkit was also used to compute additional features, including spectral contrast, spectral centroid, zero-crossing rate, and spectral rolloff [3]. After the spectral and rhythmic features were concatenated, principal component analysis (PCA) was used to reduce their dimensionality while retaining 99% of the variance. Finally, the reduced features were used as the audio inputs.
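The pipeline above (Hann-windowed STFT power spectrogram, per-frame spectral features, then PCA keeping 99% of the variance) can be sketched with NumPy alone. This is a minimal illustration, not the authors' code. The FFT size of 2048 is an assumption chosen because it yields 1 + 2048 / 2 = 1025 frequency bins, matching the 1025-row spectrogram; the hop length of 512 is also an assumption, since the text does not state it. MFCCs, chroma, tonal centroid, and spectral contrast are omitted for brevity; librosa provides them as `librosa.feature.mfcc`, `librosa.feature.chroma_stft`, `librosa.feature.tonnetz`, and `librosa.feature.spectral_contrast`.

```python
import numpy as np

SR = 22050    # sampling rate stated in the text
N_FFT = 2048  # assumption: 1 + 2048 // 2 = 1025 frequency bins, matching the 1025-row spectrogram
HOP = 512     # assumption: the hop length is not stated in the text


def power_spectrogram(y: np.ndarray, n_fft: int = N_FFT, hop: int = HOP) -> np.ndarray:
    """STFT power spectrogram over Hann windows, shape (1 + n_fft // 2, n_frames)."""
    # Centre each frame on its sample by reflect-padding half a window at both ends.
    y = np.pad(y, n_fft // 2, mode="reflect")
    window = np.hanning(n_fft + 1)[:-1]  # periodic Hann window
    n_frames = 1 + (len(y) - n_fft) // hop
    frames = np.stack([y[i * hop : i * hop + n_fft] * window for i in range(n_frames)], axis=1)
    return np.abs(np.fft.rfft(frames, axis=0)) ** 2


def spectral_centroid(mag: np.ndarray, sr: int = SR, n_fft: int = N_FFT) -> np.ndarray:
    """Magnitude-weighted mean frequency (Hz) of each frame."""
    freqs = np.fft.rfftfreq(n_fft, d=1.0 / sr)
    return (freqs[:, None] * mag).sum(axis=0) / (mag.sum(axis=0) + 1e-12)


def spectral_rolloff(mag: np.ndarray, sr: int = SR, n_fft: int = N_FFT, pct: float = 0.85) -> np.ndarray:
    """Frequency (Hz) below which `pct` of each frame's spectral magnitude lies."""
    freqs = np.fft.rfftfreq(n_fft, d=1.0 / sr)
    cumulative = np.cumsum(mag, axis=0)
    # argmax over a boolean array returns the first bin that reaches the threshold.
    return freqs[np.argmax(cumulative >= pct * cumulative[-1], axis=0)]


def clip_features(y: np.ndarray, sr: int = SR) -> np.ndarray:
    """Summarise one clip as [centroid mean, centroid std, rolloff mean, rolloff std, zero-crossing rate]."""
    mag = np.sqrt(power_spectrogram(y))
    centroid = spectral_centroid(mag, sr)
    rolloff = spectral_rolloff(mag, sr)
    # Fraction of adjacent sample pairs whose signs differ.
    zcr = np.mean(np.signbit(y[1:]) != np.signbit(y[:-1]))
    return np.array([centroid.mean(), centroid.std(), rolloff.mean(), rolloff.std(), zcr])


def pca_reduce(X: np.ndarray, variance: float = 0.99) -> np.ndarray:
    """Project the rows of X onto the fewest principal components explaining `variance` of the total."""
    Xc = X - X.mean(axis=0)
    _, s, vt = np.linalg.svd(Xc, full_matrices=False)
    explained = np.cumsum(s**2) / np.sum(s**2)
    n = min(int(np.searchsorted(explained, variance)) + 1, len(s))
    return Xc @ vt[:n].T
```

In use, `clip_features` would be applied to every snippet, the per-clip vectors (together with rhythmic features) stacked into one matrix, and `pca_reduce` applied to that matrix.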

3.2 Human Emotion Analysis

Humans today frequently experience significant levels of stress as a result of difficult working conditions, financial concerns, and issues with their families or personal lives [7]. Listening to music is one way of reducing stress. Our initiative is particularly beneficial to those for whom music best expresses their current state of stress, worry, or other emotion: based on the user's health parameters, the app returns songs that match the same emotional state. The algorithm was able to distinguish between happy, sad, angry, and neutral emotions with ease [8]. Wearable technology that supports emotion recognition and tracking can be quite useful for monitoring psychological health and facilitating human-computer interaction.

According to this study, there are four categories of music-related emotions: happy, sad, angry, and neutral. An SVM-based method is used to separate these emotions in songs. The following heart-rate ranges are inferred from the research. The heart rate of a neutral feeling ranges from 60 to 80 beats per minute (bpm), while that of a happy mood ranges from 70 to 140 bpm, depending on the type of happiness [4]. The heart rate associated with sadness ranges from 80 to 100 bpm, whereas that associated with anger ranges from 110 to 135 bpm. Real-time pulse evaluation is used to distinguish between the four emotional states (happy, sad, neutral, and angry), since differences in the signal correspond directly to the different states.

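| No. | Emotion | Heart Rate (Lowest) | Heart Rate (Highest) |
|---|---|---|---|
| 1 | Angry | 83 per minute | 135 per minute |
| 2 | Happy | 72 per minute | 140+ per minute |
| 3 | Neutral | 56 per minute | 72 per minute |
| 4 | Sad | 62 per minute | 100 per minute |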

TABLE 2: AVERAGE HEART RATE BY EMOTION

| Emotion | Average Heart Rate |
|---|---|
| Neutral | 64 per minute |
| Sad | 82 per minute |
| Happy | 106 per minute |
| Angry | 109 per minute |
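The two tables suggest two simple ways to map a single heart-rate reading to an emotion, sketched below under stated assumptions. `candidate_emotions` lists every emotion whose range in the first table contains the reading, treating "140+" as 140; `nearest_average_emotion` is a nearest-centroid rule over the averages in Table 2. Both function names are hypothetical, and this is not the SVM-based method mentioned above. The sketch mainly shows that heart rate alone is ambiguous: the ranges overlap, and the happy and angry averages differ by only 3 bpm, which is one reason to combine it with the other health parameters.

```python
from __future__ import annotations

# First table: (lowest, highest) heart rate in bpm; "140+" for happy is treated as 140 here.
BPM_RANGE = {"angry": (83, 135), "happy": (72, 140), "neutral": (56, 72), "sad": (62, 100)}

# Table 2: average heart rate in bpm.
AVERAGE_BPM = {"neutral": 64, "sad": 82, "happy": 106, "angry": 109}


def candidate_emotions(bpm: float) -> list[str]:
    """Every emotion whose range contains the reading; overlapping ranges often give several."""
    return [emotion for emotion, (low, high) in BPM_RANGE.items() if low <= bpm <= high]


def nearest_average_emotion(bpm: float) -> str:
    """Nearest-centroid rule: the emotion whose Table 2 average is closest to the reading."""
    return min(AVERAGE_BPM, key=lambda emotion: abs(AVERAGE_BPM[emotion] - bpm))


print(candidate_emotions(90))       # ['angry', 'happy', 'sad']
print(nearest_average_emotion(90))  # sad
```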

3.3 Emotion-to-Music Generation

There are no existing large-scale music datasets with human emotion annotations, so it is not obvious how to generate music conditioned on emotion labels [3]. In this paper, we propose a system that detects the current mood of the user, that is, an emotion, and music is generated on the basis of that emotion [5]. Specifically, we first train the system with the user's health parameters, which include pulse rate, number of steps walked, number of hours slept, etc., and build an automatic emotion recognition model that analyzes the emotion and labels it as sad, happy, angry, or neutral [9]. The songs associated with each emotion are then grouped into clusters, so that each cluster of songs corresponds to exactly one emotion. In this manner, the emotion is analyzed and the music associated with that emotion is generated.
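The clustering step can be sketched as k-means with one cluster per emotion. This is an illustration under assumptions not stated in the paper: each song is described by two hypothetical features (valence and energy in [0, 1]), and k-means is seeded with one hand-chosen prototype point per emotion so that each resulting cluster keeps its emotion label. The prototypes, feature names, and song data are all hypothetical.

```python
from __future__ import annotations

import numpy as np


def cluster_songs(
    song_features: dict[str, tuple[float, float]],
    prototypes: dict[str, tuple[float, float]],
    n_iter: int = 100,
) -> dict[str, list[str]]:
    """k-means seeded with one prototype per emotion; returns emotion -> songs in that cluster."""
    names = list(song_features)
    emotions = list(prototypes)
    X = np.array([song_features[name] for name in names], dtype=float)
    centroids = np.array([prototypes[e] for e in emotions], dtype=float)
    for _ in range(n_iter):
        # Assignment step: each song joins its nearest centroid (Euclidean distance).
        dists = np.linalg.norm(X[:, None, :] - centroids[None, :, :], axis=2)
        labels = dists.argmin(axis=1)
        # Update step: move each centroid to the mean of its songs (keep it if the cluster is empty).
        new_centroids = np.array([
            X[labels == j].mean(axis=0) if np.any(labels == j) else centroids[j]
            for j in range(len(emotions))
        ])
        if np.allclose(new_centroids, centroids):
            break
        centroids = new_centroids
    return {e: [name for name, lab in zip(names, labels) if lab == j] for j, e in enumerate(emotions)}


# Hypothetical (valence, energy) prototypes and songs.
prototypes = {"happy": (0.8, 0.8), "angry": (0.2, 0.9), "sad": (0.2, 0.2), "neutral": (0.6, 0.4)}
songs = {
    "s1": (0.9, 0.85), "s2": (0.75, 0.7), "s3": (0.15, 0.95), "s4": (0.25, 0.8),
    "s5": (0.1, 0.15), "s6": (0.3, 0.25), "s7": (0.55, 0.45), "s8": (0.6, 0.35),
}
print(cluster_songs(songs, prototypes))
```

Seeding with labelled prototypes is a design choice: plain k-means with random initialisation would produce unlabelled clusters that still need to be matched to emotions afterwards.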

3.4 Music Recommendation

Music recommendation is one of the crucial components of this survey, as the whole topic relates to it. Two of the most popular ML algorithms, collaborative filtering (CF) and content-based filtering, are used to recommend music to users [6]. Once the system has analyzed the user's current mood, it searches for songs related to that mood [3]. This process falls under emotion-to-music generation, discussed above. Once this step is done, the music recommendation step recommends music relevant to the user's current mood.
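The final step, going from a detected mood to a short list of songs, might look like the sketch below. It assumes the emotion clusters already exist (emotion mapped to a list of songs) and that each song has a per-user preference score, for example one predicted by collaborative filtering; both inputs and the function name are hypothetical. The optional `target_for_mood` mapping reflects the two behaviours described in the paper: returning songs of the same mood (section 3.2), or steering the user toward another mood, such as from sad to happy (the example in section III).

```python
from __future__ import annotations


def recommend_for_mood(
    mood: str,
    clusters: dict[str, list[str]],
    preference: dict[str, float],
    n: int = 3,
    target_for_mood: dict[str, str] | None = None,
) -> list[str]:
    """Return up to n songs from the cluster chosen for `mood`, highest user preference first."""
    # By default, recommend from the cluster of the detected mood itself; a mapping such as
    # {"sad": "happy"} instead recommends from another mood's cluster.
    target = (target_for_mood or {}).get(mood, mood)
    songs = clusters.get(target, [])
    # Unscored songs count as 0.0; sorted() is stable, so ties keep their cluster order.
    return sorted(songs, key=lambda song: preference.get(song, 0.0), reverse=True)[:n]


clusters = {"happy": ["h1", "h2", "h3"], "sad": ["s1", "s2"]}
preference = {"h1": 0.2, "h2": 0.9, "h3": 0.5, "s1": 0.7}
print(recommend_for_mood("sad", clusters, preference, target_for_mood={"sad": "happy"}))
```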

IV. TECHNOLOGIES

4.1 Collaborative Filtering

In general, recommender systems employ collaborative filtering: we find similarities in user preferences and recommend what similar users like [10]. The collaborative filtering approach does not use the features of a particular item for recommendation; instead, users are grouped into clusters of people who share similar traits, and each user receives recommendations according to the preferences of their cluster. The system is expected to extract health parameters such as pulse rate, number of hours slept, and number of steps walked from the user and, using collaborative filtering, to classify the user's emotion as sad, angry, happy, or neutral [10]. Clusters of music are formed, each based on one emotion; for example, one cluster contains music related to the happy emotion, and so on. Whenever music is to be recommended, the user's emotion is detected and classified based on their current state, and a song from the cluster for that emotion is recommended to the user, which can help improve their mood [10]. Here, we do not use any one particular feature to recommend a song; instead, the emotion is classified and songs from the corresponding emotion cluster are recommended.
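For reference, the textbook form of "find similar users and recommend what they like" is user-based collaborative filtering, sketched below. This illustrates the general technique from the paragraph's opening sentences, not the emotion-classification use described later in it. User similarity is cosine similarity over co-rated songs, and an unrated song's score is the similarity-weighted average of the k most similar users' ratings. The users, songs, and ratings are hypothetical.

```python
from __future__ import annotations

import math


def cosine(u: dict[str, float], v: dict[str, float]) -> float:
    """Cosine similarity of two users' ratings over the songs both have rated."""
    common = u.keys() & v.keys()
    dot = sum(u[s] * v[s] for s in common)
    norm_u = math.sqrt(sum(u[s] ** 2 for s in common))
    norm_v = math.sqrt(sum(v[s] ** 2 for s in common))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0


def recommend_cf(user: str, ratings: dict[str, dict[str, float]], k: int = 2, n: int = 3) -> list[str]:
    """Score the user's unrated songs by the similarity-weighted ratings of the k most similar users."""
    sims = {other: cosine(ratings[user], ratings[other]) for other in ratings if other != user}
    neighbours = sorted(sims, key=sims.get, reverse=True)[:k]
    weighted_sum: dict[str, float] = {}
    weight_total: dict[str, float] = {}
    for other in neighbours:
        if sims[other] <= 0:
            continue  # ignore neighbours with no positive overlap
        for song, rating in ratings[other].items():
            if song in ratings[user]:
                continue  # only recommend songs the user has not rated yet
            weighted_sum[song] = weighted_sum.get(song, 0.0) + sims[other] * rating
            weight_total[song] = weight_total.get(song, 0.0) + sims[other]
    predicted = {song: weighted_sum[song] / weight_total[song] for song in weighted_sum}
    return sorted(predicted, key=predicted.get, reverse=True)[:n]


ratings = {
    "alice": {"s1": 5, "s2": 4, "s3": 1},
    "bob": {"s1": 5, "s2": 5, "s3": 1, "s4": 5},
    "carol": {"s1": 1, "s2": 1, "s3": 5, "s5": 5},
    "dave": {"s1": 4, "s2": 4, "s4": 4, "s5": 1},
}
print(recommend_cf("alice", ratings))  # ['s4', 's5']
```

Bob and Dave rate the shared songs much like Alice, so their high rating of `s4` ranks it first; Carol's opposite tastes keep her out of the two nearest neighbours.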

4.2 Content-Based Filtering

Along with collaborative filtering, content-based filtering is also a popular technique for recommender systems. In this approach, the system uses the user's input features and tries to recommend items related to those features. With content-based filtering, products are categorised using specific keywords, the customer's preferences are ascertained, those keywords are searched for in the database, and the relevant products are recommended [2]. The system is expected to take health parameters such as pulse rate, number of hours slept, and number of steps walked, and to recommend music to the user based on these parameters [4]. The input features for this system are the user's health parameters, which act as keywords: the emotion is analyzed from those parameters, music related to that emotion is searched for through emotion-to-music generation, and the music related to that emotion is recommended. Users are categorised based on their mood, a cluster of songs is formed for each emotion, and music is recommended from the cluster that matches the user's current mood [4].
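The keyword-matching form of content-based filtering described above can be sketched as follows. It assumes, hypothetically, that each song carries a set of keyword tags and that the user profile is a set of keywords (for instance the detected emotion plus preferred styles); songs are ranked by Jaccard overlap with the profile. The tags, song names, and scoring choice are illustrative assumptions, not the paper's implementation.

```python
from __future__ import annotations


def jaccard(a: set[str], b: set[str]) -> float:
    """Size of the intersection over size of the union (0.0 for two empty sets)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def recommend_content(profile: set[str], catalogue: dict[str, set[str]], n: int = 3) -> list[str]:
    """Rank songs by keyword overlap with the user profile, keeping only songs that share a keyword."""
    scored = [(jaccard(profile, tags), song) for song, tags in catalogue.items()]
    ranked = sorted((item for item in scored if item[0] > 0), key=lambda item: item[0], reverse=True)
    return [song for _, song in ranked[:n]]


# Hypothetical catalogue of songs tagged with keywords.
catalogue = {
    "song_a": {"sad", "slow", "piano"},
    "song_b": {"happy", "upbeat", "pop"},
    "song_c": {"sad", "acoustic"},
    "song_d": {"happy", "dance"},
}
print(recommend_content({"sad", "slow"}, catalogue))  # ['song_a', 'song_c']
```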

V. CONCLUSIONS

This paper reviews existing music recommender research from different perspectives, including recommendation based on user ratings, hashtags, and users with similar contexts, which imply similar interests in music. The review shows that existing recommendation systems have drawbacks such as the cold start problem, where a new user has difficulty finding songs because there is no past listening history. Current music recommendation systems recommend songs based on the user's past listening history, song ratings, the most frequently liked songs, the most listened-to songs, and so on. However, there is no music recommendation system that suggests songs to users depending on their mood swings. The idea is therefore to create a music recommendation system through which the user receives songs based on their mood swings. Health parameters such as pulse rate, number of hours slept, number of hours worked, and number of steps walked are extracted, the user's mood is analysed, collaborative filtering is used to generate music from the emotion, and finally music related to the user's current mood is recommended.

REFERENCES

[1] Song, Y., Dixon, S. and Pearce, M. (2012). A Survey of Music Recommendation Systems and Future Perspectives.

[2] Gong, W. and Yu, Q. (2021). A Deep Music Recommendation Method Based on Human Motion Analysis. IEEE Access, 9, pp.26290–26300. doi:10.1109/access.2021.3057486.

[3] Xu, L., Zheng, Y., Xu, D. and Xu, L. (2021). Predicting the Preference for Sad Music: The Role of Gender, Personality, and Audio Features. IEEE Access, 9, pp.92952–92963. doi:10.1109/access.2021.3090940.

[4] Chen, Z.-S., Jang, J.-S.R. and Lee, C.-H. (2011). A Kernel Framework for Content-Based Artist Recommendation System in Music. IEEE Transactions on Multimedia, 13(6), pp.1371–1380. doi:10.1109/tmm.2011.2166380.

[5] Su, J.-H., Yeh, H.-H., Yu, P.S. and Tseng, V.S. (2010). Music Recommendation Using Content and Context Information Mining. IEEE Intelligent Systems, 25(1), pp.16–26. doi:10.1109/MIS.2010.23.

[6] Nanopoulos, A., Rafailidis, D., Symeonidis, P. and Manolopoulos, Y. (2010). MusicBox: Personalised Music Recommendation Based on Cubic Analysis of Social Tags. IEEE Transactions on Audio, Speech, and Language Processing, 18(2), pp.407–412. doi:10.1109/tasl.2009.2033973.

[7] Zangerle, E., Chen, C.-M., Tsai, M.-F. and Yang, Y.-H. (2018). Leveraging Affective Hashtags for Ranking Music Recommendations. IEEE Transactions on Affective Computing, pp.1–1. doi:10.1109/taffc.2018.2846596.

[8] Xu, L., Zheng, Y., Xu, D. and Xu, L. (2021). Predicting the Preference for Sad Music: The Role of Gender, Personality, and Audio Features. IEEE Access, 9, pp.92952–92963. doi:10.1109/access.2021.3090940.

[9] Bao, C. and Sun, Q. (2022). Generating Music with Emotions. IEEE Transactions on Multimedia, pp.1–1. doi:10.1109/tmm.2022.3163543.

[10] Riyan, A. and Ramayanti, D. (2019). A Model-based Music Recommender System using Collaborative Filtering Technique. International Journal of Computer Applications, 181(36), pp.1–4. doi:10.5120/ijca2019918320.

[11] Song, Y., Dixon, S. and Pearce, M. (2012). A Survey of Music Recommendation Systems and Future Perspectives. ResearchGate, June 2012.

[12] Angulo, C. Á. (2010). Music Recommender System. Politecnico di Milano.