AUTONOMOUS SELF-DRIVING CARS

Sidharth


Abstract: Self-driving cars are moving from fiction to reality, but are we ready for them? This study analyzes the current state of self-driving cars, addresses gaps that need to be filled, and identifies problems that need to be solved before self-driving cars become a reality on the road. Many technological advancements are made each year, paving the way for the integration of artificial intelligence into automobiles. In this paper, we provide a comprehensive study of the implementation of self-driving cars based on deep learning. For convenience and safety, the car is simulated with the simulator provided by Udacity. Data for training the model is collected in the simulator and imported into the project to train the model. Finally, we implemented and compared various existing deep learning models and present the results. Since the biggest challenge for self-driving cars is autonomous lateral control of the vehicle, the main goal of this paper is to clone driving behavior using multilayer neural networks and deep learning techniques, and to improve the performance of self-driving cars. The work focuses on realizing self-driving under simulated conditions. Within the simulator, the system mimics the images obtained from the cameras installed in the car (the driver's vision) and the driver's reaction, that is, the steering angle of the car. A neural network is trained with deep learning on images taken by the cameras in manual mode. This provides the conditions for driving the car in autonomous mode using the trained multilayer neural network. The driver-imitation algorithm created and characterized in this paper is a deep learning technique centered around the NVIDIA CNN model.

Index Terms: Self-Driving Cars, Artificial Intelligence, Deep Learning Models.

I. Introduction

The rise of artificial intelligence (AI) has made fully automated self-driving cars possible. In the near future, all the technologies needed for passengers to ride in self-driving cars will be available. The vehicle takes its passengers from location A to location B fully automatically, without passenger intervention. Technology is only one piece of the puzzle that must be addressed before self-driving cars can be put on the road. This paper analyzes current (federal and state) policy regarding autonomous vehicles and identifies gaps that need to be addressed by governments and legislators. We also review the self-driving car safety features advertised by vendors, examine self-driving car security from a cyberattack perspective, and analyze consumers' psychological acceptance of self-driving cars. This paper focuses on the obstacles that have prevented the realization of self-driving cars in this decade. Self-driving cars are the dream of many. For some, their advent could mean the dawn of ultimate driving safety; for others, newly discovered mobility. For example, think of an elderly relative who should no longer be behind the wheel. A self-driving car can maintain their mobility and autonomy without endangering anyone. Or imagine someone who does not realize they have had too much to drink: they could now travel safely, and no one would have to worry about their safety or that of others affected by their poor judgment. Or imagine a disabled person who cannot drive a car today, and the possibilities that will open up for them when self-driving cars become a reality. There are endless examples of how self-driving cars can help people and why they are needed, at least according to self-driving car proponents. Those who oppose the idea of machines making life-and-death decisions are increasingly concerned as the auto industry moves closer to self-driving cars.

International Research Journal of Engineering and Technology (IRJET) e-ISSN:2395-0056

Volume: 10 Issue: 01 | Jan 2023 www.irjet.net p-ISSN:2395-0072

Conventional lane-recognition algorithms are tuned for bright, sunny conditions and may not perform well in dark or poorly lit conditions. This framework instead uses a convolutional neural network to produce steering commands directly from road images. Essentially, the model is trained to copy human driving behavior. The end-to-end model is less sensitive to changing lighting conditions, because no hand-written rules are required. In the remainder of this paper, we describe in detail how the self-driving car is controlled: we develop a model of car motion and test the control design in the simulator.

II. Literature Survey (Background study)

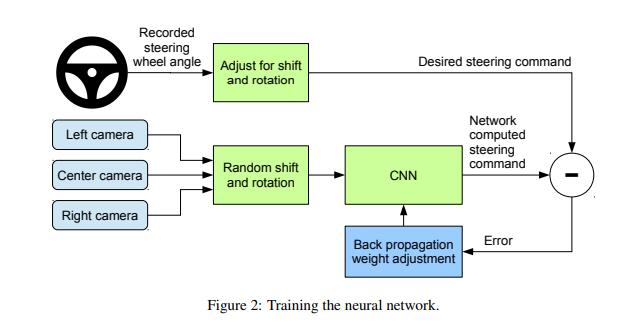

Convolutional neural networks (CNNs) have opened new perspectives in pattern recognition. Before CNNs were widely adopted, most pattern recognition projects relied on an initial stage of hand-crafted feature extraction followed by a classifier. With CNNs, the features are instead learned automatically from training examples. This approach is particularly suited to image recognition, because the convolution operation captures the 2D nature of an image. CNNs have recently become very useful in image processing and have applications in many fields, for example computer vision, face recognition, scene labeling, and action recognition. The CNN is a special artificial neural network architecture proposed by Yann LeCun in the late 1980s for effective image recognition [4]. A CNN consists of layers of different types: convolution layers, subsampling (pooling) layers, and fully connected layers that form a multilayer perceptron. CNNs are an important part of many neural network architectures, which are used to generate new images, segment images, and so on. CNN training can be parallelized on graphics processing units (GPUs), which makes learning fast. The DARPA Autonomous Vehicle (DAVE) demonstrated the potential of end-to-end learning and was used to justify starting the DARPA Learning Applied to Ground Robots (LAGR) program. However, DAVE was not reliable enough to fully replace the more modular approach to off-road driving: its average distance between collisions was about 20 m. NVIDIA subsequently started a new effort that builds on DAVE to create a robust system that learns the entire task of lane and road following without manual decomposition into marking detection, semantic abstraction, path planning, and control. The aim of that project is to avoid the need to recognize specific, human-designated features.
Transfer learning from common neural network architectures has led to research describing a similar approach to steering-angle prediction, in which a CNN extracts features from video frames. Compared with the authors of that work, we aim to create a network with fewer parameters and to train it on artificially modeled (simulated) data. This allows solutions for self-driving cars to be created rapidly and at low computational cost, without explicitly recognizing features such as lane markings, crash barriers, or other vehicles.

III. Methodology

a. DATASET AND SIMULATOR

For the experiment, we used the Unity-based CarND Udacity Simulator. This simulator allows the car's movement to be simulated in manual and autonomous mode, and the software is flexible enough to allow map customization. We recorded about an hour of driving in manual mode in various styles. We tried not to collide with objects or go off the track, but the simulator also allows modeling driving styles that could only be practised on special test sites in the real world. Recording and processing the drives produced a log containing various simulation parameters; the main ones are the images from the simulated left, center, and right cameras and the corresponding steering angles. In total, about 54,000 images were obtained. Driving a vehicle in a simulator is certainly not the same as actually driving a vehicle, but there are many similarities. Given the current state of game design, images captured in the reproduced situation (roads, markings, scenery) are a good approximation of the images that could be captured in reality. The simulator also provides safety and convenience: data can be collected easily and efficiently, and even if the model fails, there is no danger to life. This makes the simulator a good platform for exploring and improving different model architectures. The model can later be deployed in a real car with a real camera, but it must first work properly in the simulator.
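The recorded log can be loaded with a few lines of Python. The following is a minimal sketch that assumes the common CarND layout of the simulator's `driving_log.csv` (columns: center, left, and right image paths, then steering, throttle, brake, speed, with an optional header row); this column order and the names `LogEntry` and `load_driving_log` are assumptions for illustration, not the paper's code.

```python
import csv
import io
from dataclasses import dataclass
from typing import Iterable, List


@dataclass
class LogEntry:
    """One recorded frame: three camera image paths plus the control values."""
    center: str
    left: str
    right: str
    steering: float
    throttle: float
    brake: float
    speed: float


def load_driving_log(lines: Iterable[str]) -> List[LogEntry]:
    """Parse rows in the ASSUMED order center,left,right,steering,throttle,brake,speed."""
    entries = []
    for row in csv.reader(lines):
        if not row or row[0].strip() == "center":
            continue  # skip blank lines and an optional header row
        center, left, right = (p.strip() for p in row[:3])
        steering, throttle, brake, speed = (float(v) for v in row[3:7])
        entries.append(LogEntry(center, left, right, steering, throttle, brake, speed))
    return entries


if __name__ == "__main__":
    # In practice: with open("driving_log.csv", newline="") as f: log = load_driving_log(f)
    sample = io.StringIO(
        "IMG/center_1.jpg, IMG/left_1.jpg, IMG/right_1.jpg, 0.0, 0.5, 0, 22.1\n"
        "IMG/center_2.jpg, IMG/left_2.jpg, IMG/right_2.jpg, -0.15, 0.6, 0, 24.3\n"
    )
    log = load_driving_log(sample)
    print(len(log), log[1].steering)
```

Keeping the three camera paths together with the steering angle in one record makes the later balancing and splitting steps operate on whole frames rather than on loose columns.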


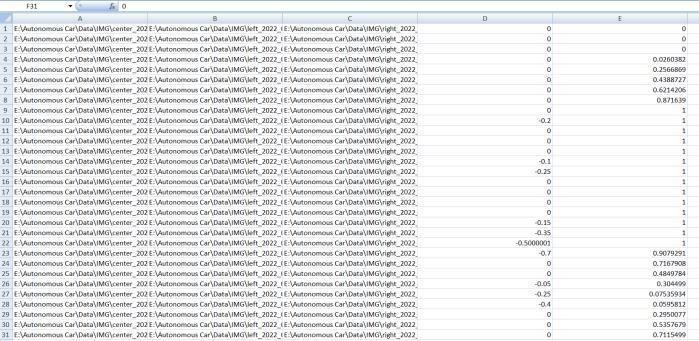

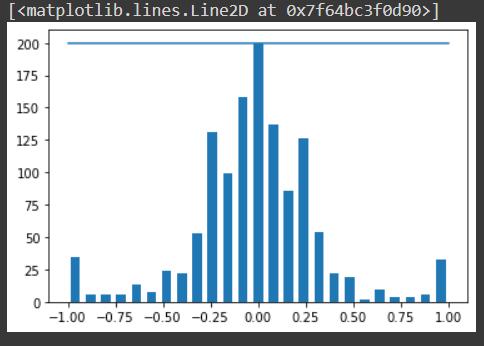

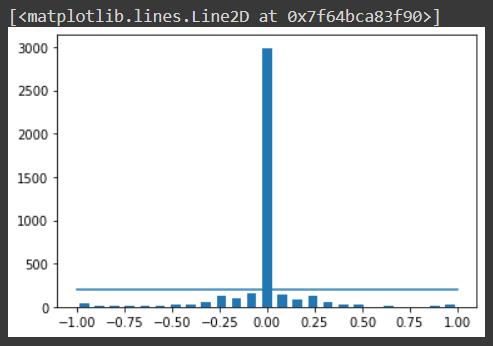

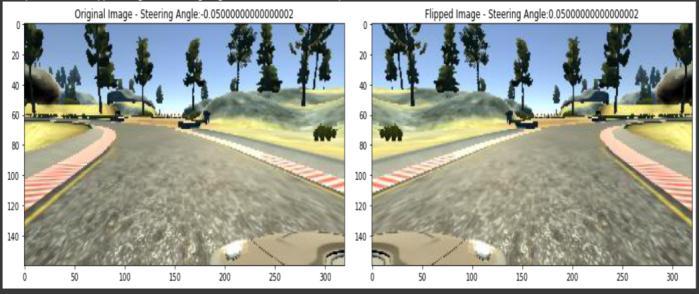

The original training dataset was biased toward either left or right turns, because the ego-vehicle traversed the track in only one direction. In addition, all collected datasets were heavily biased toward zero steering, because the steering angle was reset to zero each time the control button was released.

To reduce these imbalances, we balanced the dataset by randomly removing samples with a steering angle of exactly zero whenever their count exceeded a threshold of 400.
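A minimal NumPy sketch of this balancing step follows. It assumes the threshold of 400 means "keep at most 400 zero-angle samples"; the function name `cap_zero_steering` and the fixed random seed are illustrative assumptions.

```python
import numpy as np


def cap_zero_steering(steering: np.ndarray, max_zero: int = 400, seed: int = 0) -> np.ndarray:
    """Return sorted indices to keep: every non-zero sample plus at most `max_zero`
    randomly chosen samples whose steering angle is exactly zero."""
    rng = np.random.default_rng(seed)
    zero_idx = np.flatnonzero(steering == 0.0)
    nonzero_idx = np.flatnonzero(steering != 0.0)
    if len(zero_idx) > max_zero:
        # Randomly drop the surplus zero-angle samples.
        zero_idx = rng.choice(zero_idx, size=max_zero, replace=False)
    return np.sort(np.concatenate([nonzero_idx, zero_idx]))


if __name__ == "__main__":
    angles = np.array([0.0] * 1000 + [0.1] * 50 + [-0.2] * 30)
    keep = cap_zero_steering(angles)
    print(len(keep), int(np.sum(angles[keep] == 0.0)))
```

Returning indices rather than a filtered array lets the same selection be applied to the image paths and the steering labels at once.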

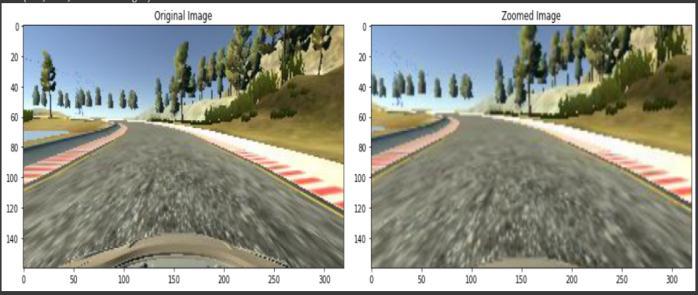

Image preprocessing and augmentation are necessary to improve convergence, minimize overfitting, and speed up the training process. The image processing pipeline was implemented in Python using computer vision libraries. In this work, four augmentation techniques were applied to the dataset during the training phase: zoom, pan, brightness, and flip.

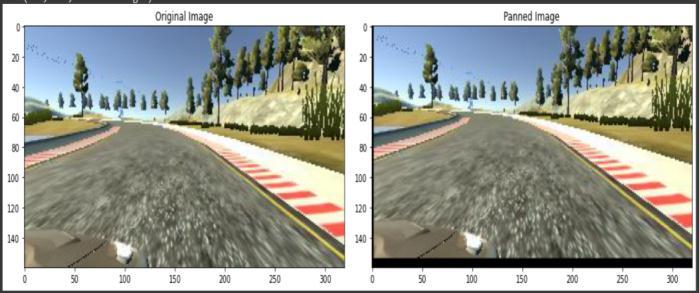

Fig(3.5) Data-augmented images
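The four augmentations can be sketched in plain NumPy on HxWx3 uint8 images. This is an illustrative sketch, not the paper's pipeline (which used computer vision libraries); the helper names, the nearest-neighbour zoom, the zero-filled pan, and the parameter ranges (zoom 1.0 to 1.3, pan up to 10% of the image size, brightness factor 0.2 to 1.2) are all assumptions. Flipping must also negate the steering angle, otherwise the label would point the wrong way.

```python
import numpy as np

rng = np.random.default_rng(0)


def zoom(img: np.ndarray, factor: float) -> np.ndarray:
    """Zoom in by `factor` (>= 1): crop the center, scale back up by nearest-neighbour sampling."""
    h, w = img.shape[:2]
    ch, cw = int(h / factor), int(w / factor)
    top, left = (h - ch) // 2, (w - cw) // 2
    crop = img[top:top + ch, left:left + cw]
    rows = np.arange(h) * ch // h
    cols = np.arange(w) * cw // w
    return crop[rows][:, cols]


def pan(img: np.ndarray, dx: int, dy: int) -> np.ndarray:
    """Shift the image by (dx, dy) pixels, filling the uncovered border with zeros."""
    h, w = img.shape[:2]
    out = np.zeros_like(img)
    src = img[max(0, -dy):h - max(0, dy), max(0, -dx):w - max(0, dx)]
    out[max(0, dy):max(0, dy) + src.shape[0], max(0, dx):max(0, dx) + src.shape[1]] = src
    return out


def brightness(img: np.ndarray, factor: float) -> np.ndarray:
    """Scale pixel intensities by `factor` and clip to the valid uint8 range."""
    return np.clip(img.astype(np.float32) * factor, 0, 255).astype(np.uint8)


def flip(img: np.ndarray, steering: float):
    """Mirror horizontally; the steering angle changes sign to stay consistent."""
    return img[:, ::-1], -steering


def random_augment(img: np.ndarray, steering: float, p: float = 0.5):
    """Apply each augmentation independently with probability p (ranges are assumptions)."""
    if rng.random() < p:
        img = zoom(img, rng.uniform(1.0, 1.3))
    if rng.random() < p:
        h, w = img.shape[:2]
        img = pan(img, int(rng.uniform(-0.1, 0.1) * w), int(rng.uniform(-0.1, 0.1) * h))
    if rng.random() < p:
        img = brightness(img, rng.uniform(0.2, 1.2))
    if rng.random() < p:
        img, steering = flip(img, steering)
    return img, steering
```

Because augmentation is random and applied only during training, each epoch sees slightly different versions of the same frames, which is what helps against overfitting.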

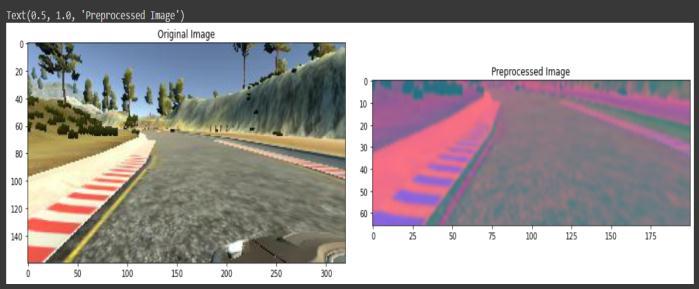

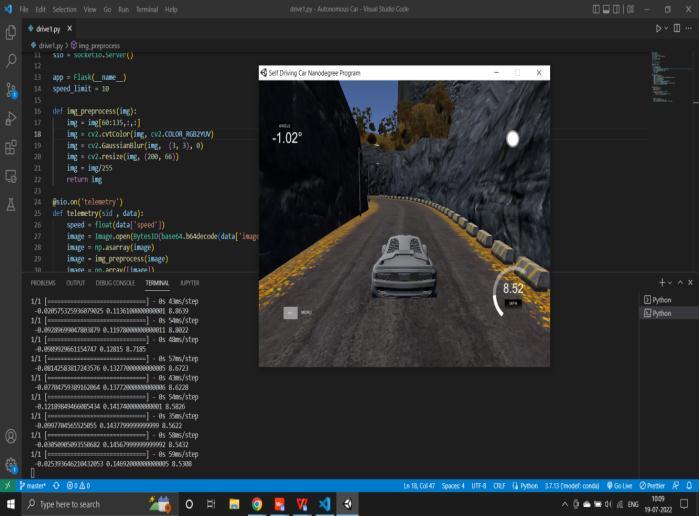

Preprocessing the data makes training and deployment faster and more efficient. This work presents a two-stage preprocessing function that performs resize and normalize operations (with mean centering) on the input image. Cropping is one of the important steps: many areas of the image are not useful for the task, so we remove them. Features such as the sky and mountains in the picture and the hood of the ego vehicle have nothing to do with determining the car's steering angle, so we trimmed them with a simple NumPy array slice. Each frame is 160x320 pixels; rows 135 to 160 contain the car hood, and rows 0 to 60 contain the sky and landscape. We therefore removed those parts and kept only rows 60 to 135, which lets the model focus on the essential features. The RGB image is then converted to YUV using the CV2 library; the advantage of this conversion is that YUV images require less bandwidth than RGB. After the conversion, the image is smoothed using the GaussianBlur method provided by the CV2 library.

Fig(3.6) Preprocessed image

The image is cropped to the region of interest. The image above shows the original image, and the image next to it shows the output after the preprocessing phase has been performed.
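The cropping, colour conversion, smoothing, and resizing steps can be sketched as follows. The paper uses OpenCV for the conversion and blur; to keep this sketch self-contained, it substitutes NumPy stand-ins: a BT.601 RGB-to-YUV matrix in place of `cv2.cvtColor`, a separable 3x3 binomial (Gaussian-like) kernel in place of `cv2.GaussianBlur`, and nearest-neighbour sampling in place of `cv2.resize`. The 66x200 output size (the NVIDIA model's input resolution), the scaling to [0, 1], and the function names are assumptions.

```python
import numpy as np

# BT.601 RGB -> YUV matrix; a NumPy stand-in for cv2.cvtColor(img, cv2.COLOR_RGB2YUV).
RGB_TO_YUV = np.array([
    [0.299, 0.587, 0.114],
    [-0.14713, -0.28886, 0.436],
    [0.615, -0.51499, -0.10001],
])


def blur3x3(img: np.ndarray) -> np.ndarray:
    """Separable 3x3 binomial blur with reflected borders (a stand-in for cv2.GaussianBlur)."""
    k = np.array([0.25, 0.5, 0.25])
    p = np.pad(img, ((1, 1), (1, 1), (0, 0)), mode="reflect")
    horiz = k[0] * p[:, :-2] + k[1] * p[:, 1:-1] + k[2] * p[:, 2:]       # blur along width
    return k[0] * horiz[:-2] + k[1] * horiz[1:-1] + k[2] * horiz[2:]     # blur along height


def resize_nearest(img: np.ndarray, out_h: int, out_w: int) -> np.ndarray:
    """Nearest-neighbour resize (cv2.resize would default to bilinear interpolation)."""
    h, w = img.shape[:2]
    rows = np.arange(out_h) * h // out_h
    cols = np.arange(out_w) * w // out_w
    return img[rows][:, cols]


def preprocess(img: np.ndarray) -> np.ndarray:
    """Crop rows 60-134, scale to [0, 1], convert to YUV, blur, and resize to 66x200."""
    img = img[60:135, :, :]                  # drop sky/scenery (top) and car hood (bottom)
    img = img.astype(np.float32) / 255.0     # scale pixel values to [0, 1]
    img = img @ RGB_TO_YUV.T                 # per-pixel RGB -> YUV
    img = blur3x3(img)                       # light smoothing
    return resize_nearest(img, 66, 200)      # assumed NVIDIA input size


if __name__ == "__main__":
    frame = np.random.default_rng(0).integers(0, 255, size=(160, 320, 3), dtype=np.uint8)
    print(preprocess(frame).shape)
```

The same function must be applied to camera frames at driving time, otherwise the model would see inputs in a different crop and colour space than it was trained on.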

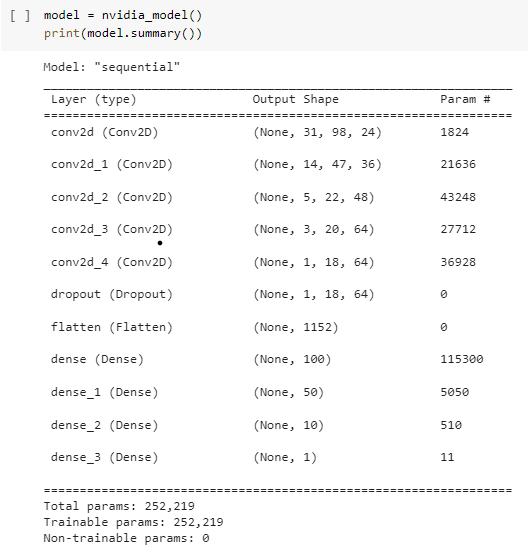

d. NEURAL NETWORK ARCHITECTURE

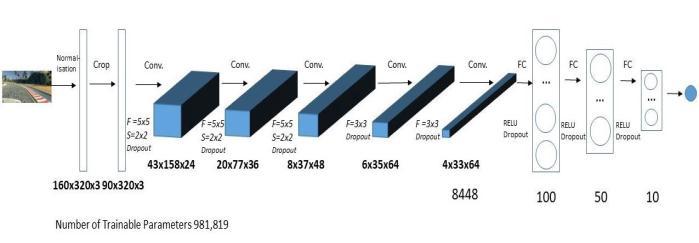

The entire network is implemented and trained using Keras with TensorFlow as the backend. The neural network architecture we used is based on a paper published by NVIDIA and is designed to map raw pixels directly to control commands. The network consists of 9 layers: a normalization layer, 5 convolution layers, and 3 fully connected layers. The image is normalized by the normalization layer. According to the NVIDIA paper, performing normalization inside the network allows the normalization scheme to be adapted together with the network architecture and to be accelerated by GPU processing.

Fig(3.7) Neural Network Architecture

Five convolutional layers are used to extract features from the images. The first three are strided convolutions with a 2x2 stride and 5x5 kernels; the last two are non-strided convolutions with 3x3 kernels. The convolutional layers are followed by three fully connected layers that produce the output control value. The final model architecture is shown in the image below.
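The text does not list filter counts or layer widths. Assuming the sizes from NVIDIA's published model (24, 36, 48, 64, 64 filters; 100, 50, 10 fully connected units plus a single steering output; a 66x200x3 YUV input) and unpadded ("valid") convolutions, which is the Keras `Conv2D` default, the following plain-Python sketch works out the feature-map shapes and the trainable parameter count layer by layer.

```python
from typing import List, Tuple

# ASSUMED layer sizes, taken from NVIDIA's model; this paper does not list them.
INPUT = (66, 200, 3)  # height, width, channels (YUV)
CONV = [  # (filters, kernel size, stride)
    (24, 5, 2), (36, 5, 2), (48, 5, 2),  # strided 5x5 convolutions
    (64, 3, 1), (64, 3, 1),              # non-strided 3x3 convolutions
]
DENSE = [100, 50, 10, 1]  # three fully connected layers + single steering output


def conv_out(size: int, kernel: int, stride: int) -> int:
    """Output length of an unpadded ('valid') convolution along one axis."""
    return (size - kernel) // stride + 1


def summarize() -> Tuple[List[Tuple[int, int, int]], int]:
    """Return the conv feature-map shapes and the total number of weights and biases."""
    h, w, c = INPUT
    shapes, params = [], 0
    for filters, k, s in CONV:
        params += k * k * c * filters + filters  # kernel weights + one bias per filter
        h, w, c = conv_out(h, k, s), conv_out(w, k, s), filters
        shapes.append((h, w, c))
    units = h * w * c  # flattened feature vector fed to the dense layers
    for n in DENSE:
        params += units * n + n
        units = n
    return shapes, params


shapes, total = summarize()
print(shapes)  # [(31, 98, 24), (14, 47, 36), (5, 22, 48), (3, 20, 64), (1, 18, 64)]
print(total)   # 252219
```

Under these assumptions the last convolution leaves a 1x18x64 map (1,152 features), and almost half of the parameters sit in the first fully connected layer.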

The Adam optimizer was used to update the network weights.
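In practice the paper relies on the Adam optimizer built into Keras. To show what a single update does, here is a NumPy sketch of the standard Adam rule (Kingma and Ba) with its usual default hyperparameters; the `Adam` class and the toy quadratic objective are illustrative, not the paper's training code.

```python
import numpy as np


class Adam:
    """Minimal Adam optimizer: bias-corrected first and second moment estimates."""

    def __init__(self, lr: float = 1e-3, beta1: float = 0.9, beta2: float = 0.999, eps: float = 1e-8):
        self.lr, self.beta1, self.beta2, self.eps = lr, beta1, beta2, eps
        self.m = None
        self.v = None
        self.t = 0

    def step(self, w: np.ndarray, grad: np.ndarray) -> np.ndarray:
        """Return the updated weights for one gradient step."""
        if self.m is None:
            self.m, self.v = np.zeros_like(w), np.zeros_like(w)
        self.t += 1
        self.m = self.beta1 * self.m + (1 - self.beta1) * grad        # running mean of gradients
        self.v = self.beta2 * self.v + (1 - self.beta2) * grad ** 2   # running mean of squared gradients
        m_hat = self.m / (1 - self.beta1 ** self.t)                   # bias correction for early steps
        v_hat = self.v / (1 - self.beta2 ** self.t)
        return w - self.lr * m_hat / (np.sqrt(v_hat) + self.eps)


if __name__ == "__main__":
    # Toy example: minimize (w - 3)^2, whose gradient is 2 * (w - 3).
    opt = Adam(lr=0.1)
    w = np.array([0.0])
    for _ in range(1000):
        w = opt.step(w, 2 * (w - 3.0))
    print(w)
```

Dividing by the root of the second-moment estimate gives each weight its own effective step size, which is one reason Adam is a common default for training CNNs like this one.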

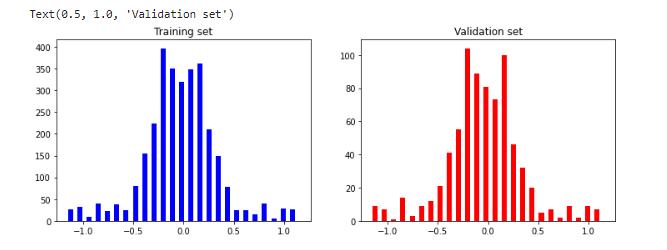

e. TRAINING AND VALIDATION

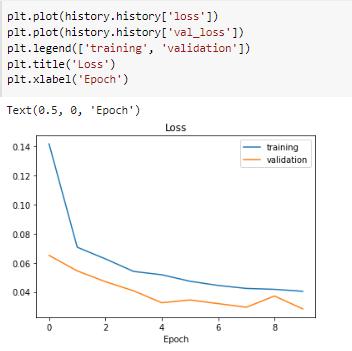

The collected dataset was randomly split into training and validation subsets with a 4:1 ratio (i.e. 80% training data and 20% validation data). The random state of the split was chosen so that the steering-angle distributions of the training and validation subsets deviated from each other as little as possible.
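The text does not say how the deviation between the two subsets was measured. The sketch below assumes it is the sum of the absolute differences between the training and validation steering means and standard deviations, and simply tries a range of seeds; `split_indices`, `choose_split`, and the 50-seed search are illustrative choices.

```python
import numpy as np


def split_indices(n: int, val_fraction: float, seed: int):
    """Shuffle 0..n-1 with `seed` and split into (train, validation) index arrays."""
    perm = np.random.default_rng(seed).permutation(n)
    n_val = int(round(n * val_fraction))
    return perm[n_val:], perm[:n_val]


def choose_split(steering: np.ndarray, val_fraction: float = 0.2, seeds=range(50)):
    """Pick the seed whose train/validation steering distributions differ least.

    ASSUMPTION: 'difference' = |mean gap| + |standard deviation gap|.
    """
    best = None
    for seed in seeds:
        tr, va = split_indices(len(steering), val_fraction, seed)
        gap = (abs(steering[tr].mean() - steering[va].mean())
               + abs(steering[tr].std() - steering[va].std()))
        if best is None or gap < best[0]:
            best = (gap, seed, tr, va)
    return best[1], best[2], best[3]


if __name__ == "__main__":
    angles = np.random.default_rng(1).normal(0.0, 0.2, 1000)
    seed, train_idx, val_idx = choose_split(angles)
    print(seed, len(train_idx), len(val_idx))
```

With scikit-learn, the same 4:1 split per seed can be obtained from `train_test_split(..., test_size=0.2, random_state=seed)`.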

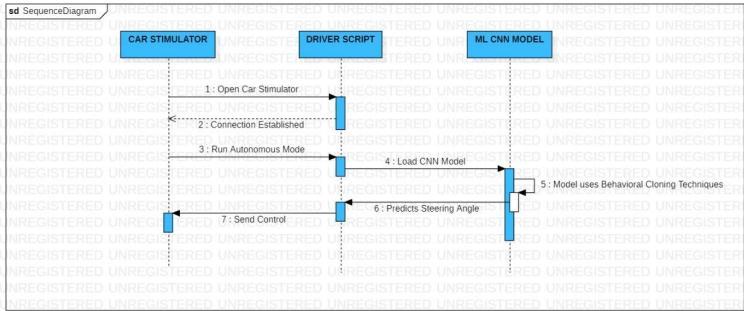

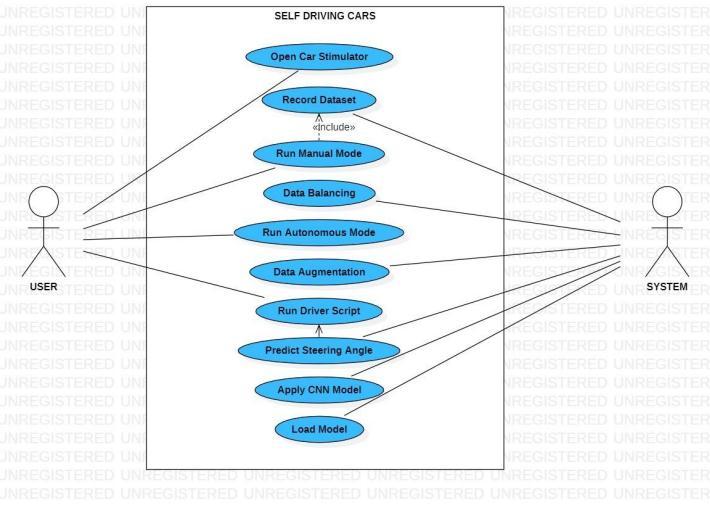

A UML diagram is the final result of the whole discussion. A complete UML diagram, containing all the elements and their relationships, represents the system. The visual impact of UML diagrams is the most important part of the overall process; all other elements are used to complete it. UML is a standardized, general-purpose modeling language in the field of object-oriented software engineering. The standard was created and is managed by the Object Management Group. The goal of UML is to become a common language for modeling object-oriented computer software. In its current form, UML consists of two main components: a metamodel and a notation. Some form of method or process may also be added to, or associated with, UML in the future.

IV. Results

Simply put, the job of behavioral cloning is to collect data and use it to train a model that mimics the recorded behavior. Here, behavioral cloning is applied to a simulated driving scenario: the driving behavior is learned by a CNN that uses the ELU (exponential linear unit) activation function. The model is trained with a mean squared error (MSE) loss, which is also used to evaluate it on the validation data. As expected, the model performed better on the training data, but the training and validation losses were very close, which suggests that the model generalizes well. After applying the above method, the car drives the route on its own; the speedometer can be seen in the right corner. The trained model can successfully drive the car on a variety of unknown tracks and consistently completes laps without failure. Using a large training dataset covering different scenarios improves the model's ability to stay in autonomous mode.
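The two standard building blocks named above, the ELU activation and the MSE loss, have short closed forms. The NumPy sketch below shows their definitions; `alpha = 1.0` is the usual default and an assumption here, since the text does not state it.

```python
import numpy as np


def elu(x: np.ndarray, alpha: float = 1.0) -> np.ndarray:
    """Exponential Linear Unit: x for x > 0, alpha * (exp(x) - 1) otherwise."""
    # np.minimum keeps expm1 from overflowing on large positive inputs, which are not used anyway.
    return np.where(x > 0, x, alpha * np.expm1(np.minimum(x, 0)))


def mse(y_true, y_pred) -> float:
    """Mean squared error between true and predicted steering angles."""
    diff = np.asarray(y_true, dtype=float) - np.asarray(y_pred, dtype=float)
    return float(np.mean(diff ** 2))


if __name__ == "__main__":
    print(elu(np.array([-1.0, 0.0, 2.0])))
    print(mse([0.1, -0.2], [0.0, 0.0]))
```

Unlike ReLU, ELU is smooth and non-zero for negative inputs, so units do not go permanently silent; MSE is the natural loss here because the steering angle is a single continuous regression target.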

Fig(4.1) Graph for training and validation

V. Conclusion



Real-world automotive automation is a very large area involving many sensors. Our idea is to reduce these many sensors to two: one for measuring distance, and a single monocular camera for detecting objects such as stop signs, traffic lights, and other obstacles. This approach can be expanded further. The prototype developed on a model RC car combines these features, whereas other prototypes focus on only one of them. This article describes how to generate data using the simulator. Data generation in the simulator has proven to be very efficient: large datasets for experimentation are easy to create, which avoids collecting data in the real world, a process that is very expensive in money and human resources. As an example, we were able to generate 54,000 images in one hour without consuming additional resources. A neural network can be trained on synthetic images to predict steering angles for self-driving car motion, because a CNN can extract features from camera images and find the dependencies needed for prediction. This article presents a comparison of convolutional neural network architectures and a network with a small set of parameters that can solve these problems. The resulting network had 3 convolution layers and a total of 26,000 parameters. Overfitting could be avoided, but the results showed high variability because the set of modeled data was small. In particular, we reduced the number of parameters and the memory used by the neural network by an order of magnitude compared to other popular architectures, and the results show that such networks perform well and are easy to modify.

VI. References

[1] Udacity, "Open Source Autonomous Vehicles," 2017.

[2] Y. Sun, X. Wang, and X. Tang, "Sparsifying neural network connections for face recognition," in Proc. IEEE Conference on Computer Vision and Pattern Recognition, 2016, pp. 4856-4864.

[3] P. Wang, W. Li, Z. Gao, and C. Tang, "Depth pooling based large-scale 3D action recognition with convolutional neural networks," arXiv:1804.01194, 2018.

[4] Y. LeCun, B. Boser, J. S. Denker, D. Henderson, R. E. Howard, W. Hubbard, and L. D. Jackel, "Backpropagation applied to handwritten zip code recognition," Neural Computation, 1(4):541-551, Winter 1989.

[5] H. Huang and P. S. Yu, "An introduction to image synthesis with generative adversarial nets," arXiv:1803.04469, 2018.

[6] A. Canziani, A. Paszke, and E. Culurciello, "An analysis of deep neural network models for practical applications," arXiv:1605.07678 [cs], Apr. 2017.

[7] C. Szegedy, S. Ioffe, V. Vanhoucke, and A. A. Alemi, "Inception-v4, Inception-ResNet and the impact of residual connections on learning," in Proc. AAAI Conference on Artificial Intelligence, 2017, pp. 4278-4284.